neuron-ai

The PHP Agentic Framework to build production-ready AI driven applications. Connect components (LLMs, vector DBs, memory) to agents that can interact with your data. With its modular architecture it's best suited for building RAG, multi-agent workflows, or business process automations.

Stars: 1097

Neuron AI is a PHP framework that provides an Agent class for creating fully functional agents to perform tasks like analyzing text for SEO optimization. The framework manages advanced mechanisms such as memory, tools, and function calls. Users can extend the Agent class to create custom agents and interact with them to get responses based on the underlying LLM. Neuron AI aims to simplify the development of AI-powered applications by offering a structured framework with documentation and guidelines for contributions under the MIT license.

README:

[!WARNING] 🚨 IMPORTANT: Repository Migration Notice

Effective October 1st, 2025, the official Neuron repository will be migrating from the Inspector GitHub organization to a dedicated Neuron organization.

For detailed migration instructions and configuration updates, please visit our Repository Migration Guide.

Before moving on, support the community giving a GitHub star ⭐️. Thank you!

Neuron is a PHP framework for creating and orchestrating AI Agents. It allows you to integrate AI entities in your existing PHP applications with a powerful and flexible architecture. We provide tools for the entire agentic application development lifecycle, from LLM interfaces, to data loading, to multi-agent orchestration, to monitoring and debugging. In addition, we provide tutorials and other educational content to help you get started using AI Agents in your projects.

- PHP: ^8.1

Go to the official documentation

Check out the technical guides and tutorials archive to learn how to start creating your AI Agents with Neuron https://docs.neuron-ai.dev/overview/fast-learning-by-video.

- Install

- Create an Agent

- Talk to the Agent

- Monitoring

- Supported LLM Providers

- Tools & Toolkits

- MCP Connector

- Structured Output

- RAG

- Workflow

- Official Documentation

Install the latest version of the package:

composer require inspector-apm/neuron-ai

Neuron provides you with the Agent class you can extend to inherit the main features of the framework and create fully functional agents. This class automatically manages some advanced mechanisms for you, such as memory, tools and function calls, up to the RAG systems. You can go deeper into these aspects in the documentation.

Let's create an Agent with the command below:

php vendor/bin/neuron make:agent DataAnalystAgent

<?php

namespace App\Neuron;

use NeuronAI\Agent;

use NeuronAI\SystemPrompt;

use NeuronAI\Providers\AIProviderInterface;

use NeuronAI\Providers\Anthropic\Anthropic;

class DataAnalystAgent extends Agent

{

protected function provider(): AIProviderInterface

{

return new Anthropic(

key: 'ANTHROPIC_API_KEY',

model: 'ANTHROPIC_MODEL',

);

}

protected function instructions(): string

{

return (string) new SystemPrompt(

background: [

"You are a data analyst expert in creating reports from SQL databases."

]

);

}

}The SystemPrompt class is designed to take your base instructions and build a consistent prompt for the underlying model

reducing the effort for prompt engineering.

Send a prompt to the agent to get a response from the underlying LLM:

$agent = DataAnalystAgent::make();

$response = $agent->chat(

new UserMessage("Hi, I'm Valerio. Who are you?")

);

echo $response->getContent();

// I'm a data analyst. How can I help you today?

$response = $agent->chat(

new UserMessage("Do you remember my name?")

);

echo $response->getContent();

// Your name is Valerio, as you said in your introduction.As you can see in the example above, the Agent automatically has memory of the ongoing conversation. Learn more about memory in the documentation.

Integrating AI Agents into your application you’re not working only with functions and deterministic code, you program your agent also influencing probability distributions. Same input ≠ output. That means reproducibility, versioning, and debugging become real problems.

Many of the Agents you build with Neuron will contain multiple steps with multiple invocations of LLM calls, tool usage, access to external memories, etc. As these applications get more and more complex, it becomes crucial to be able to inspect what exactly your agent is doing and why.

Why is the model taking certain decisions? What data is the model reacting to? Prompting is not programming in the common sense. No static types, small changes break output, long prompts cost latency, and no two models behave exactly the same with the same prompt.

The best way to take your AI application under control is with Inspector. After you sign up,

make sure to set the INSPECTOR_INGESTION_KEY variable in the application environment file to start monitoring:

INSPECTOR_INGESTION_KEY=fwe45gtxxxxxxxxxxxxxxxxxxxxxxxxxxxxAfter configuring the environment variable, you will see the agent execution timeline in your Inspector dashboard.

Learn more about Monitoring in the documentation.

With Neuron, you can switch between LLM providers with just one line of code, without any impact on your agent implementation. Supported providers:

- Anthropic

- OpenAI (also as an embeddings provider)

- OpenAI on Azure

- Ollama (also as an embeddings provider)

- OpenAILike

- Gemini (also as an embeddings provider)

- Mistral

- HuggingFace

- Deepseek

- Grok

- AWS Bedrock Runtime

Make your agent able to perform concrete tasks, like reading from a database, by adding tools or toolkits (collections of tools).

<?php

namespace App\Neuron;

use NeuronAI\Agent;

use NeuronAI\Providers\AIProviderInterface;

use NeuronAI\Providers\Anthropic\Anthropic;

use NeuronAI\SystemPrompt;

use NeuronAI\Tools\ToolProperty;

use NeuronAI\Tools\Tool;

use NeuronAI\Tools\Toolkits\MySQL\MySQLToolkit;

class DataAnalystAgent extends Agent

{

protected function provider(): AIProviderInterface

{

return new Anthropic(

key: 'ANTHROPIC_API_KEY',

model: 'ANTHROPIC_MODEL',

);

}

protected function instructions(): string

{

return (string) new SystemPrompt(

background: [

"You are a data analyst expert in creating reports from SQL databases."

]

);

}

protected function tools(): array

{

return [

MySQLToolkit::make(

\DB::connection()->getPdo()

),

];

}

}Ask the agent something about your database:

$response = DataAnalystAgent::make()->chat(

new UserMessage("How many orders we received today?")

);

echo $response->getContent();Learn more about Tools in the documentation.

Instead of implementing tools manually, you can connect tools exposed by an MCP server with the McpConnector component:

<?php

namespace App\Neuron;

use NeuronAI\Agent;

use NeuronAI\MCP\McpConnector;

use NeuronAI\Providers\AIProviderInterface;

use NeuronAI\Providers\Anthropic\Anthropic;

use NeuronAI\Tools\ToolProperty;

use NeuronAI\Tools\Tool;

class DataAnalystAgent extends Agent

{

protected function provider(): AIProviderInterface

{

...

}

protected function instructions(): string

{

...

}

protected function tools(): array

{

return [

// Connect to an MCP server

...McpConnector::make([

'command' => 'npx',

'args' => ['-y', '@modelcontextprotocol/server-everything'],

])->tools(),

];

}

}Learn more about MCP connector in the documentation.

There are scenarios where you need Agents to understand natural language, but output in a structured format, like business processes automation, data extraction, etc. to use the output with some other downstream system.

use App\Neuron\MyAgent;

use NeuronAI\Chat\Messages\UserMessage;

use NeuronAI\StructuredOutput\SchemaProperty;

/*

* Define the output structure as a PHP class.

*/

class Person

{

#[SchemaProperty(description: 'The user name')]

public string $name;

#[SchemaProperty(description: 'What the user love to eat')]

public string $preference;

}

// Talk to the agent requiring the structured output

$person = MyAgent::make()->structured(

new UserMessage("I'm John and I like pizza!"),

Person::class

);

echo $person->name ' like '.$person->preference;

// John like pizzaLearn more about Structured Output on the documentation.

To create a RAG you need to attach some additional components other than the AI provider, such as a vector store,

and an embeddings provider.

Let's create a RAG with the command below:

php vendor/bin/neuron make:rag MyChatBot

Here is an example of a RAG implementation:

<?php

namespace App\Neuron;

use NeuronAI\Providers\AIProviderInterface;

use NeuronAI\Providers\Anthropic\Anthropic;

use NeuronAI\RAG\Embeddings\EmbeddingsProviderInterface;

use NeuronAI\RAG\Embeddings\VoyageEmbeddingProvider;

use NeuronAI\RAG\RAG;

use NeuronAI\RAG\VectorStore\PineconeVectorStore;

use NeuronAI\RAG\VectorStore\VectorStoreInterface;

class MyChatBot extends RAG

{

protected function provider(): AIProviderInterface

{

return new Anthropic(

key: 'ANTHROPIC_API_KEY',

model: 'ANTHROPIC_MODEL',

);

}

protected function embeddings(): EmbeddingsProviderInterface

{

return new VoyageEmbeddingProvider(

key: 'VOYAGE_API_KEY',

model: 'VOYAGE_MODEL'

);

}

protected function vectorStore(): VectorStoreInterface

{

return new PineconeVectorStore(

key: 'PINECONE_API_KEY',

indexUrl: 'PINECONE_INDEX_URL'

);

}

}Learn more about RAG in the documentation.

Think of a Workflow as a smart flowchart for your AI applications. The idea behind Workflow is to allow developers to use all the Neuron components like AI providers, embeddings, data loaders, chat history, vector store, etc, as standalone components to create totally customized agentic entities.

Agent and RAG classes represent a ready to use implementation of the most common patterns when it comes to retrieval use cases, or tool calls, structured output, etc. Workflow allows you to program your agentic system completely from scratch. Agent and RAG can be used inside a Workflow to complete tasks as any other component if you need their built-in capabilities.

Neuron Workflow supports a robust human-in-the-loop pattern, enabling human intervention at any point in an automated process. This is especially useful in large language model (LLM)-driven applications where model output may require validation, correction, or additional context to complete the task.

Learn more about Workflow on the documentation.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for neuron-ai

Similar Open Source Tools

neuron-ai

Neuron AI is a PHP framework that provides an Agent class for creating fully functional agents to perform tasks like analyzing text for SEO optimization. The framework manages advanced mechanisms such as memory, tools, and function calls. Users can extend the Agent class to create custom agents and interact with them to get responses based on the underlying LLM. Neuron AI aims to simplify the development of AI-powered applications by offering a structured framework with documentation and guidelines for contributions under the MIT license.

neuron-ai

Neuron is a PHP framework for creating and orchestrating AI Agents, providing tools for the entire agentic application development lifecycle. It allows integration of AI entities in existing PHP applications with a powerful and flexible architecture. Neuron offers tutorials and educational content to help users get started using AI Agents in their projects. The framework supports various LLM providers, tools, and toolkits, enabling users to create fully functional agents for tasks like data analysis, chatbots, and structured output. Neuron also facilitates monitoring and debugging of AI applications, ensuring control over agent behavior and decision-making processes.

axar

AXAR AI is a lightweight framework designed for building production-ready agentic applications using TypeScript. It aims to simplify the process of creating robust, production-grade LLM-powered apps by focusing on familiar coding practices without unnecessary abstractions or steep learning curves. The framework provides structured, typed inputs and outputs, familiar and intuitive patterns like dependency injection and decorators, explicit control over agent behavior, real-time logging and monitoring tools, minimalistic design with little overhead, model agnostic compatibility with various AI models, and streamed outputs for fast and accurate results. AXAR AI is ideal for developers working on real-world AI applications who want a tool that gets out of the way and allows them to focus on shipping reliable software.

agentscript

AgentScript is an open-source framework for building AI agents that think in code. It prompts a language model to generate JavaScript code, which is then executed in a dedicated runtime with resumability, state persistence, and interactivity. The framework allows for abstract task execution without needing to know all the data beforehand, making it flexible and efficient. AgentScript supports tools, deterministic functions, and LLM-enabled functions, enabling dynamic data processing and decision-making. It also provides state management and human-in-the-loop capabilities, allowing for pausing, serialization, and resumption of execution.

Trace

Trace is a new AutoDiff-like tool for training AI systems end-to-end with general feedback. It generalizes the back-propagation algorithm by capturing and propagating an AI system's execution trace. Implemented as a PyTorch-like Python library, users can write Python code directly and use Trace primitives to optimize certain parts, similar to training neural networks.

langchain

LangChain is a framework for developing Elixir applications powered by language models. It enables applications to connect language models to other data sources and interact with the environment. The library provides components for working with language models and off-the-shelf chains for specific tasks. It aims to assist in building applications that combine large language models with other sources of computation or knowledge. LangChain is written in Elixir and is not aimed for parity with the JavaScript and Python versions due to differences in programming paradigms and design choices. The library is designed to make it easy to integrate language models into applications and expose features, data, and functionality to the models.

mosec

Mosec is a high-performance and flexible model serving framework for building ML model-enabled backend and microservices. It bridges the gap between any machine learning models you just trained and the efficient online service API. * **Highly performant** : web layer and task coordination built with Rust 🦀, which offers blazing speed in addition to efficient CPU utilization powered by async I/O * **Ease of use** : user interface purely in Python 🐍, by which users can serve their models in an ML framework-agnostic manner using the same code as they do for offline testing * **Dynamic batching** : aggregate requests from different users for batched inference and distribute results back * **Pipelined stages** : spawn multiple processes for pipelined stages to handle CPU/GPU/IO mixed workloads * **Cloud friendly** : designed to run in the cloud, with the model warmup, graceful shutdown, and Prometheus monitoring metrics, easily managed by Kubernetes or any container orchestration systems * **Do one thing well** : focus on the online serving part, users can pay attention to the model optimization and business logic

codellm-devkit

Codellm-devkit (CLDK) is a Python library that serves as a multilingual program analysis framework bridging traditional static analysis tools and Large Language Models (LLMs) specialized for code (CodeLLMs). It simplifies the process of analyzing codebases across multiple programming languages, enabling the extraction of meaningful insights and facilitating LLM-based code analysis. The library provides a unified interface for integrating outputs from various analysis tools and preparing them for effective use by CodeLLMs. Codellm-devkit aims to enable the development and experimentation of robust analysis pipelines that combine traditional program analysis tools and CodeLLMs, reducing friction in multi-language code analysis and ensuring compatibility across different tools and LLM platforms. It is designed to seamlessly integrate with popular analysis tools like WALA, Tree-sitter, LLVM, and CodeQL, acting as a crucial intermediary layer for efficient communication between these tools and CodeLLMs. The project is continuously evolving to include new tools and frameworks, maintaining its versatility for code analysis and LLM integration.

artkit

ARTKIT is a Python framework developed by BCG X for automating prompt-based testing and evaluation of Gen AI applications. It allows users to develop automated end-to-end testing and evaluation pipelines for Gen AI systems, supporting multi-turn conversations and various testing scenarios like Q&A accuracy, brand values, equitability, safety, and security. The framework provides a simple API, asynchronous processing, caching, model agnostic support, end-to-end pipelines, multi-turn conversations, robust data flows, and visualizations. ARTKIT is designed for customization by data scientists and engineers to enhance human-in-the-loop testing and evaluation, emphasizing the importance of tailored testing for each Gen AI use case.

airflow-ai-sdk

This repository contains an SDK for working with LLMs from Apache Airflow, based on Pydantic AI. It allows users to call LLMs and orchestrate agent calls directly within their Airflow pipelines using decorator-based tasks. The SDK leverages the familiar Airflow `@task` syntax with extensions like `@task.llm`, `@task.llm_branch`, and `@task.agent`. Users can define tasks that call language models, orchestrate multi-step AI reasoning, change the control flow of a DAG based on LLM output, and support various models in the Pydantic AI library. The SDK is designed to integrate LLM workflows into Airflow pipelines, from simple LLM calls to complex agentic workflows.

rosa

ROSA is an AI Agent designed to interact with ROS-based robotics systems using natural language queries. It can generate system reports, read and parse ROS log files, adapt to new robots, and run various ROS commands using natural language. The tool is versatile for robotics research and development, providing an easy way to interact with robots and the ROS environment.

ag2

Ag2 is a lightweight and efficient tool for generating automated reports from data sources. It simplifies the process of creating reports by allowing users to define templates and automate the data extraction and formatting. With Ag2, users can easily generate reports in various formats such as PDF, Excel, and CSV, saving time and effort in manual report generation tasks.

Tiger

Tiger is a community-driven project developing a reusable and integrated tool ecosystem for LLM Agent Revolution. It utilizes Upsonic for isolated tool storage, profiling, and automatic document generation. With Tiger, you can create a customized environment for your agents or leverage the robust and publicly maintained Tiger curated by the community itself.

deepeval

DeepEval is a simple-to-use, open-source LLM evaluation framework specialized for unit testing LLM outputs. It incorporates various metrics such as G-Eval, hallucination, answer relevancy, RAGAS, etc., and runs locally on your machine for evaluation. It provides a wide range of ready-to-use evaluation metrics, allows for creating custom metrics, integrates with any CI/CD environment, and enables benchmarking LLMs on popular benchmarks. DeepEval is designed for evaluating RAG and fine-tuning applications, helping users optimize hyperparameters, prevent prompt drifting, and transition from OpenAI to hosting their own Llama2 with confidence.

Tools4AI

Tools4AI is a Java-based Agentic Framework for building AI agents to integrate with enterprise Java applications. It enables the conversion of natural language prompts into actionable behaviors, streamlining user interactions with complex systems. By leveraging AI capabilities, it enhances productivity and innovation across diverse applications. The framework allows for seamless integration of AI with various systems, such as customer service applications, to interpret user requests, trigger actions, and streamline workflows. Prompt prediction anticipates user actions based on input prompts, enhancing user experience by proactively suggesting relevant actions or services based on context.

BambooAI

BambooAI is a lightweight library utilizing Large Language Models (LLMs) to provide natural language interaction capabilities, much like a research and data analysis assistant enabling conversation with your data. You can either provide your own data sets, or allow the library to locate and fetch data for you. It supports Internet searches and external API interactions.

For similar tasks

Open-DocLLM

Open-DocLLM is an open-source project that addresses data extraction and processing challenges using OCR and LLM technologies. It consists of two main layers: OCR for reading document content and LLM for extracting specific content in a structured manner. The project offers a larger context window size compared to JP Morgan's DocLLM and integrates tools like Tesseract OCR and Mistral for efficient data analysis. Users can run the models on-premises using LLM studio or Ollama, and the project includes a FastAPI app for testing purposes.

Awesome-AI

Awesome AI is a repository that collects and shares resources in the fields of large language models (LLM), AI-assisted programming, AI drawing, and more. It explores the application and development of generative artificial intelligence. The repository provides information on various AI tools, models, and platforms, along with tutorials and web products related to AI technologies.

Qmedia

QMedia is an open-source multimedia AI content search engine designed specifically for content creators. It provides rich information extraction methods for text, image, and short video content. The tool integrates unstructured text, image, and short video information to build a multimodal RAG content Q&A system. Users can efficiently search for image/text and short video materials, analyze content, provide content sources, and generate customized search results based on user interests and needs. QMedia supports local deployment for offline content search and Q&A for private data. The tool offers features like content cards display, multimodal content RAG search, and pure local multimodal models deployment. Users can deploy different types of models locally, manage language models, feature embedding models, image models, and video models. QMedia aims to spark new ideas for content creation and share AI content creation concepts in an open-source manner.

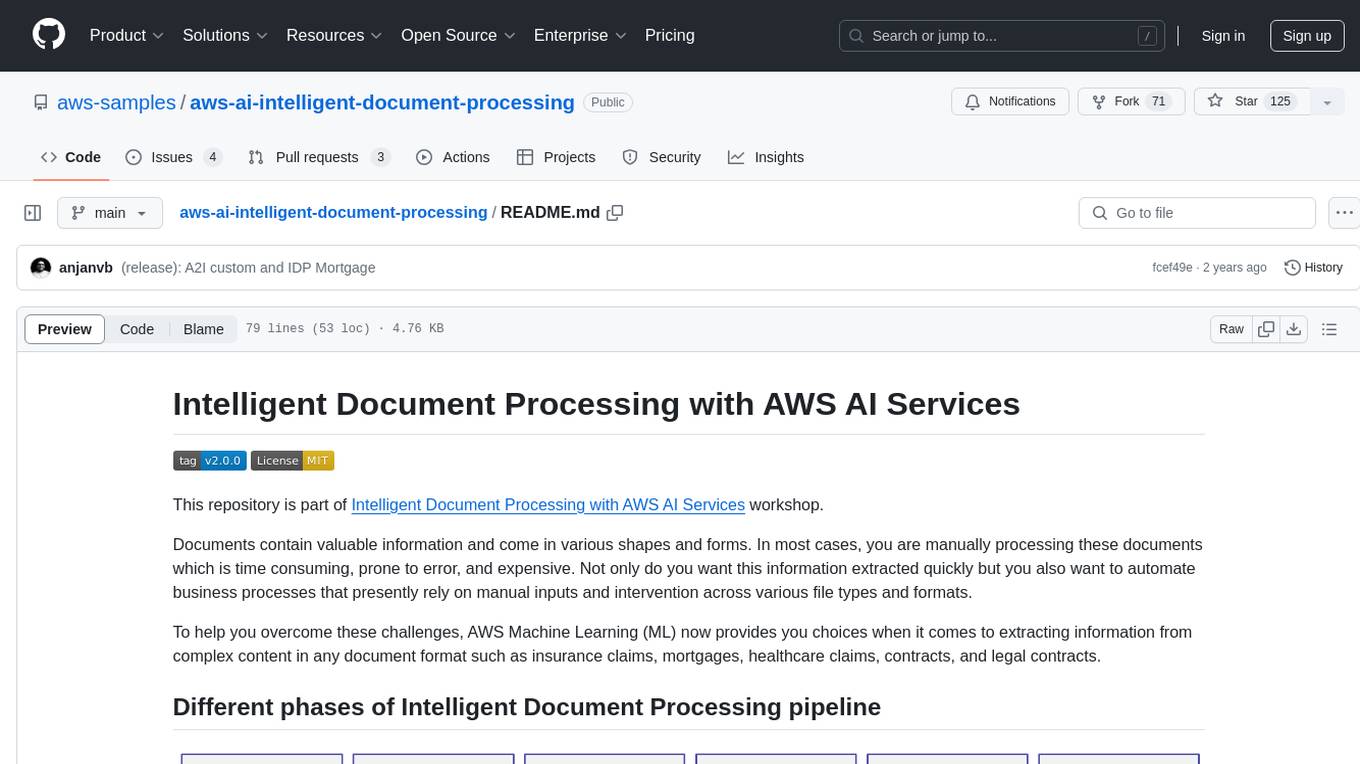

aws-ai-intelligent-document-processing

This repository is part of Intelligent Document Processing with AWS AI Services workshop. It aims to automate the extraction of information from complex content in various document formats such as insurance claims, mortgages, healthcare claims, contracts, and legal contracts using AWS Machine Learning services like Amazon Textract and Amazon Comprehend. The repository provides hands-on labs to familiarize users with these AI services and build solutions to automate business processes that rely on manual inputs and intervention across different file types and formats.

Scrapegraph-LabLabAI-Hackathon

ScrapeGraphAI is a web scraping Python library that utilizes LangChain, LLM, and direct graph logic to create scraping pipelines. Users can specify the information they want to extract, and the library will handle the extraction process. The tool is designed to simplify web scraping tasks by providing a streamlined and efficient approach to data extraction.

parsera

Parsera is a lightweight Python library designed for scraping websites using LLMs. It offers simplicity and efficiency by minimizing token usage, enhancing speed, and reducing costs. Users can easily set up and run the tool to extract specific elements from web pages, generating JSON output with relevant data. Additionally, Parsera supports integration with various chat models, such as Azure, expanding its functionality and customization options for web scraping tasks.

Scrapegraph-demo

ScrapeGraphAI is a web scraping Python library that utilizes LangChain, LLM, and direct graph logic to create scraping pipelines. Users can specify the information they want to extract, and the library will handle the extraction process. This repository contains an official demo/trial for the ScrapeGraphAI library, showcasing its capabilities in web scraping tasks. The tool is designed to simplify the process of extracting data from websites by providing a user-friendly interface and powerful scraping functionalities.

you2txt

You2Txt is a tool developed for the Vercel + Nvidia 2-hour hackathon that converts any YouTube video into a transcribed .txt file. The project won first place in the hackathon and is hosted at you2txt.com. Due to rate limiting issues with YouTube requests, it is recommended to run the tool locally. The project was created using Next.js, Tailwind, v0, and Claude, and can be built and accessed locally for development purposes.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.