KaibanJS

KaibanJS is a JavaScript-native framework for building and managing multi-agent systems with a Kanban-inspired approach.

Stars: 1029

KaibanJS is a JavaScript-native framework for building multi-agent AI systems. It enables users to create specialized AI agents with distinct roles and goals, manage tasks, and coordinate teams efficiently. The framework supports role-based agent design, tool integration, multiple LLMs support, robust state management, observability and monitoring features, and a real-time agentic Kanban board for visualizing AI workflows. KaibanJS aims to empower JavaScript developers with a user-friendly AI framework tailored for the JavaScript ecosystem, bridging the gap in the AI race for non-Python developers.

README:

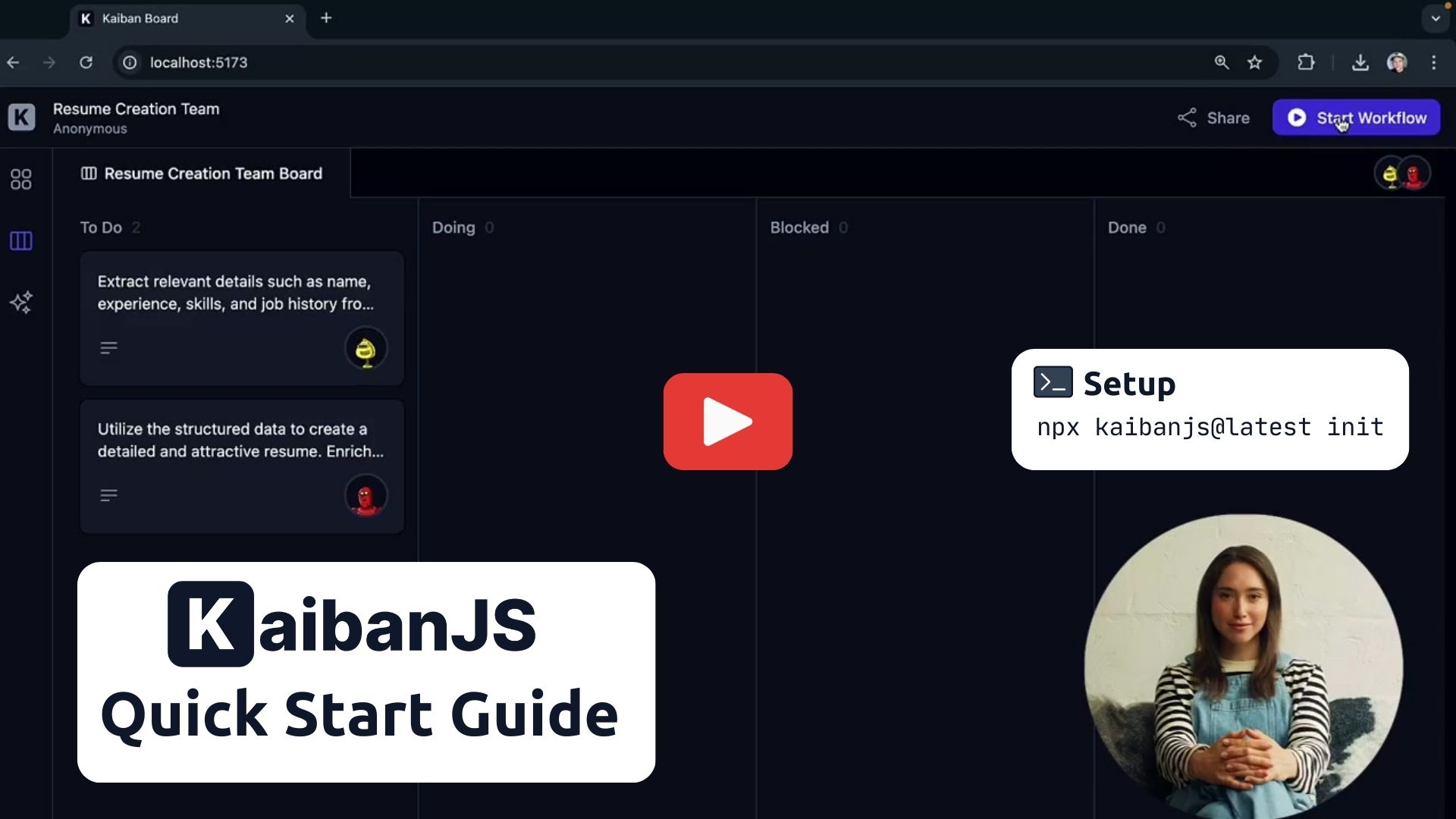

KaibanJS is inspired by the tried-and-true Kanban methodology, which is well-known for helping teams organize and manage their work. We’ve adapted these concepts to meet the unique challenges of AI agent management.

If you've used tools like Trello, Jira, or ClickUp, you'll be familiar with how Kanban helps manage tasks. Now, KaibanJS uses that same system to help you manage AI agents and their tasks in real time.

With KaibanJS, you can:

- 🔨 Create, visualize, and manage AI agents, tasks, tools, and teams

- 🎯 Orchestrate AI workflows seamlessly

- 📊 Visualize workflows in real-time

- 🔍 Track progress as tasks move through different stages

- 🤝 Collaborate more effectively on AI projects

Explore the Kaiban Board — it's like Trello or Asana, but for AI Agents and humans.

Get started with KaibanJS in under a minute:

1. Run the KaibanJS initializer in your project directory:

```bash
npx kaibanjs@latest init
```

2. Add your AI service API key to the .env file:

```
VITE_OPENAI_API_KEY=your-api-key-here
```

3. Restart your Kaiban Board:

```bash
npm run kaiban
```

- Click "Start Workflow" to run the default example.

- Watch agents complete tasks in real-time on the Task Board.

- View the final output in the Results Overview.

KaibanJS isn't limited to the Kaiban Board. You can integrate it directly into your projects, create custom UIs, or run agents without a UI. Explore our tutorials for React and Node.js integration to unleash the full potential of KaibanJS in various development contexts.

If you prefer to set up KaibanJS manually, follow these steps:

1. Install KaibanJS via npm:

```bash
npm install kaibanjs
```

2. Import KaibanJS in your JavaScript file:

```javascript
// Using ES6 import syntax for NextJS, React, etc.
import { Agent, Task, Team } from 'kaibanjs';
```

```javascript
// Using CommonJS syntax for NodeJS
const { Agent, Task, Team } = require('kaibanjs');
```

3. Basic Usage Example

```javascript
// Define an agent
const researchAgent = new Agent({
  name: 'Researcher',
  role: 'Information Gatherer',
  goal: 'Find relevant information on a given topic',
});

// Create a task
const researchTask = new Task({
  description: 'Research recent AI developments',
  agent: researchAgent,
});

// Set up a team
const team = new Team({
  name: 'AI Research Team',
  agents: [researchAgent],
  tasks: [researchTask],
  env: { OPENAI_API_KEY: 'your-api-key-here' },
});

// Start the workflow
team
  .start()
  .then((output) => {
    console.log('Workflow completed:', output.result);
  })
  .catch((error) => {
    console.error('Workflow error:', error);
  });
```

Agents

Agents are autonomous entities designed to perform specific roles and achieve goals based on the tasks assigned to them. They are like super-powered LLMs that can execute tasks in a loop until they arrive at the final answer.

Tasks

Tasks define the specific actions each agent must take and their expected outputs, and mark critical outputs as deliverables when they are the final products.

Team

The Team coordinates the agents and their tasks. It starts with an initial input and manages the flow of information between tasks.
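The flow of information between tasks can be pictured with a small plain-JavaScript model. This is only a sketch: `runTeam`, the `run` functions, and the `previousResult` field are hypothetical names made up for illustration and are not part of the KaibanJS API.

```javascript
// Hypothetical model of a team: each task names an agent and a function
// standing in for the LLM-driven work that agent would do.
const tasks = [
  {
    description: 'Research recent AI developments',
    agent: 'Researcher',
    run: async (input) => `notes on ${input.topic}`,
  },
  {
    description: 'Summarize the research',
    agent: 'Writer',
    // Receives the previous task's result alongside the initial inputs.
    run: async (input) => `summary of (${input.previousResult})`,
  },
];

// Run the tasks in order, feeding each result into the next task.
async function runTeam(taskList, inputs) {
  let previousResult = null;
  const log = [];
  for (const task of taskList) {
    const result = await task.run({ ...inputs, previousResult });
    log.push({ agent: task.agent, description: task.description, result });
    previousResult = result;
  }
  return { result: previousResult, log };
}

runTeam(tasks, { topic: 'multi-agent systems' }).then((output) => {
  console.log('Workflow completed:', output.result);
});
```

The model is strictly sequential and keeps only the latest result; a real team would also track status, errors, and per-task deliverables.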

Watch this video to learn more about the concepts: KaibanJS Concepts

The Kaiban Board

Kanban boards are excellent tools for showcasing team workflows in real time, providing a clear and interactive snapshot of each member's progress.

We’ve adapted this concept for AI agents.

Now, you can visualize the workflow of your AI agents as team members, with tasks moving from "To Do" to "Done" right before your eyes. This visual representation simplifies understanding and managing complex AI operations, making it accessible to anyone, anywhere.
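As a rough illustration of the board idea, the sketch below groups tasks into columns by status and moves one task across the board. The helper names `groupByStatus` and `moveTask` are hypothetical, and the status strings are an assumption based on the status names that appear in the observability example; this is not how the Kaiban Board itself is implemented.

```javascript
// Assumed column names, one per task status.
const COLUMNS = ['TODO', 'DOING', 'BLOCKED', 'REVISE', 'DONE'];

// Build a board object: one array of task descriptions per column.
function groupByStatus(tasks) {
  const board = Object.fromEntries(COLUMNS.map((column) => [column, []]));
  for (const task of tasks) {
    if (!COLUMNS.includes(task.status)) {
      throw new Error(`Unknown status: ${task.status}`);
    }
    board[task.status].push(task.description);
  }
  return board;
}

// Return a new task list with one task moved to a different column.
function moveTask(tasks, id, status) {
  if (!COLUMNS.includes(status)) {
    throw new Error(`Unknown status: ${status}`);
  }
  return tasks.map((task) => (task.id === id ? { ...task, status } : task));
}

let boardTasks = [
  { id: 1, description: 'Research recent AI developments', status: 'TODO' },
  { id: 2, description: 'Write summary', status: 'TODO' },
];

boardTasks = moveTask(boardTasks, 1, 'DOING');
boardTasks = moveTask(boardTasks, 1, 'DONE');
console.log(groupByStatus(boardTasks));
```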

Role-Based Agent Design

Harness the power of specialization by configuring AI agents to excel in distinct, critical functions within your projects. This approach enhances the effectiveness and efficiency of each task, moving beyond the limitations of generic AI.

In this example, our software development team is powered by three specialized AI agents: Dave, Ella, and Quinn. Each agent is expertly tailored to its specific role, ensuring efficient task handling and synergy that accelerates the development cycle.

```javascript
import { Agent } from 'kaibanjs';

const daveLoper = new Agent({
  name: 'Dave Loper',
  role: 'Developer',
  goal: 'Write and review code',
  background: 'Experienced in JavaScript, React, and Node.js',
});

const ella = new Agent({
  name: 'Ella',
  role: 'Product Manager',
  goal: 'Define product vision and manage roadmap',
  background: 'Skilled in market analysis and product strategy',
});

const quinn = new Agent({
  name: 'Quinn',
  role: 'QA Specialist',
  goal: 'Ensure quality and consistency',
  background: 'Expert in testing, automation, and bug tracking',
});
```

Tool Integration

Just as professionals use specific tools to excel in their tasks, enable your AI agents to utilize tools like search engines, calculators, and more to perform specialized tasks with greater precision and efficiency.

In this example, one of the AI agents, Peter Atlas, leverages the Tavily Search Results tool to enhance his ability to select the best cities for travel. This tool allows Peter to analyze travel data considering weather, prices, and seasonality, ensuring the most suitable recommendations.

```javascript
import { Agent, Tool } from 'kaibanjs';

const tavilySearchResults = new Tool({
  name: 'Tavily Search Results',
  maxResults: 1,
  apiKey: 'ENV_TAVILY_API_KEY',
});

const peterAtlas = new Agent({
  name: 'Peter Atlas',
  role: 'City Selector',
  goal: 'Choose the best city based on comprehensive travel data',
  background: 'Experienced in geographical data analysis and travel trends',
  tools: [tavilySearchResults],
});
```

KaibanJS supports all LangchainJS-compatible tools, offering a versatile approach to tool integration. For further details, visit the documentation.

Multiple LLMs Support

Optimize your AI solutions by integrating a range of specialized AI models, each tailored to excel in distinct aspects of your projects.

In this example, the agents—Emma, Lucas, and Mia—use diverse AI models to handle specific stages of feature specification development. This targeted use of AI models not only maximizes efficiency but also ensures that each task is aligned with the most cost-effective and appropriate AI resources.

```javascript
import { Agent } from 'kaibanjs';

const emma = new Agent({
  name: 'Emma',
  role: 'Initial Drafting',
  goal: 'Outline core functionalities',
  llmConfig: {
    provider: 'google',
    model: 'gemini-1.5-pro',
  },
});

const lucas = new Agent({
  name: 'Lucas',
  role: 'Technical Specification',
  goal: 'Draft detailed technical specifications',
  llmConfig: {
    provider: 'anthropic',
    model: 'claude-3-5-sonnet-20240620',
  },
});

const mia = new Agent({
  name: 'Mia',
  role: 'Final Review',
  goal: 'Ensure accuracy and completeness of the final document',
  llmConfig: {
    provider: 'openai',
    model: 'gpt-4o',
  },
});
```

For further details on integrating diverse AI models with KaibanJS, please visit the documentation.
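When agents use different providers, each provider needs its own API key. The sketch below collects only the keys a given set of agent configurations requires and fails early if one is missing. The `buildEnv` helper and the key names in `KEY_NAME_BY_PROVIDER` are assumptions for illustration (only `OPENAI_API_KEY` appears in the examples above), so check the documentation for the names KaibanJS actually expects.

```javascript
// Assumed mapping from provider name to the variable holding its API key.
const KEY_NAME_BY_PROVIDER = {
  openai: 'OPENAI_API_KEY',
  anthropic: 'ANTHROPIC_API_KEY',
  google: 'GOOGLE_API_KEY',
};

// Build an env object containing only the keys the given agents need.
// `source` is any key/value object, for example process.env in Node.js.
function buildEnv(agentConfigs, source) {
  const env = {};
  for (const { name, llmConfig } of agentConfigs) {
    const keyName = KEY_NAME_BY_PROVIDER[llmConfig.provider];
    if (!keyName) {
      throw new Error(`No key mapping for provider "${llmConfig.provider}" (agent ${name})`);
    }
    const value = source[keyName];
    if (!value) {
      throw new Error(`Missing ${keyName} (needed by agent ${name})`);
    }
    env[keyName] = value;
  }
  return env;
}

// Plain objects mirroring the agent definitions above.
const agentConfigs = [
  { name: 'Emma', llmConfig: { provider: 'google', model: 'gemini-1.5-pro' } },
  { name: 'Mia', llmConfig: { provider: 'openai', model: 'gpt-4o' } },
];

// A stand-in for process.env so the example runs anywhere.
const fakeSource = {
  GOOGLE_API_KEY: 'google-key-here',
  OPENAI_API_KEY: 'openai-key-here',
  UNRELATED: 'ignored',
};

console.log(Object.keys(buildEnv(agentConfigs, fakeSource)));
```

The resulting object has the same shape as the `env` option passed to the team in the basic usage example.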

Robust State Management

KaibanJS employs a Redux-inspired architecture, enabling a unified approach to manage the states of AI agents, tasks, and overall flow within your applications. This method ensures consistent state management across complex agent interactions, providing enhanced clarity and control.

Here's a simplified example demonstrating how to integrate KaibanJS with state management in a React application:

```jsx
import myAgentsTeam from './agenticTeam';

const KaibanJSComponent = () => {
  const useTeamStore = myAgentsTeam.useStore();

  const { agents, workflowResult } = useTeamStore((state) => ({
    agents: state.agents,
    workflowResult: state.workflowResult,
  }));

  return (
    <div>
      <button onClick={myAgentsTeam.start}>Start Team Workflow</button>
      <p>Workflow Result: {workflowResult}</p>
      <div>
        <h2>🕵️♂️ Agents</h2>
        {agents.map((agent) => (
          <p key={agent.id}>
            {agent.name} - {agent.role} - Status: ({agent.status})
          </p>
        ))}
      </div>
    </div>
  );
};

export default KaibanJSComponent;
```

For a deeper dive into state management with KaibanJS, visit the documentation.
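To make the "single store plus selectors" idea concrete, here is a minimal store written from scratch. It is a sketch of the general pattern only: `createStore` and its methods are hypothetical and do not reproduce KaibanJS internals. The `subscribe(selector, callback)` shape mirrors how the store is subscribed to in the observability example.

```javascript
// A tiny store: one state object, updates via setState, and
// selector-based subscriptions that fire only when the selected slice changes.
function createStore(initialState) {
  let state = initialState;
  const listeners = new Set();

  return {
    getState: () => state,

    // Merge a partial update into the state and notify listeners.
    setState(partial) {
      const previous = state;
      state = { ...state, ...partial };
      listeners.forEach((listener) => listener(state, previous));
    },

    // Call `callback(next, previous)` when `selector(state)` changes.
    // Returns a function that removes the subscription.
    subscribe(selector, callback) {
      const listener = (nextState, previousState) => {
        const next = selector(nextState);
        const previous = selector(previousState);
        if (!Object.is(next, previous)) callback(next, previous);
      };
      listeners.add(listener);
      return () => listeners.delete(listener);
    },
  };
}

const store = createStore({ agents: [], workflowResult: null });
const seen = [];

const unsubscribe = store.subscribe(
  (state) => state.workflowResult,
  (next, previous) => seen.push({ previous, next })
);

store.setState({ agents: [{ name: 'Ella' }] }); // workflowResult unchanged: no callback
store.setState({ workflowResult: 'done' });     // callback fires once
unsubscribe();
store.setState({ workflowResult: 'ignored' });  // no longer subscribed

console.log(seen);
```

Comparing slices with `Object.is` means a subscriber is notified only when its slice is replaced, which is why updates create new objects instead of mutating the existing state.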

Integrate with Your Preferred JavaScript Frameworks

Easily add AI capabilities to your NextJS, React, Vue, Angular, and Node.js projects.

KaibanJS is designed for seamless integration across a diverse range of JavaScript environments. Whether you’re enhancing user interfaces in React, Vue, or Angular, building scalable applications with NextJS, or implementing server-side solutions in Node.js, the framework integrates smoothly into your existing workflow.

```jsx
import React from 'react';
import myAgentsTeam from './agenticTeam';

const TaskStatusComponent = () => {
  const useTeamStore = myAgentsTeam.useStore();

  const { tasks } = useTeamStore((state) => ({
    tasks: state.tasks.map((task) => ({
      id: task.id,
      description: task.description,
      status: task.status,
    })),
  }));

  return (
    <div>
      <h1>Task Statuses</h1>
      <ul>
        {tasks.map((task) => (
          <li key={task.id}>
            {task.description}: Status - {task.status}
          </li>
        ))}
      </ul>
    </div>
  );
};

export default TaskStatusComponent;
```

For a deeper dive, visit the documentation.

Observability and Monitoring

Built into KaibanJS, the observability features enable you to track every state change with detailed stats and logs, ensuring full transparency and control. This functionality provides real-time insights into token usage, operational costs, and state changes, enhancing system reliability and enabling informed decision-making through comprehensive data visibility.

The following code snippet demonstrates how the state management approach is utilized to monitor and react to changes in workflow logs, providing granular control and deep insights into the operational dynamics of your AI agents:

```javascript
const useStore = myAgentsTeam.useStore();

useStore.subscribe(
  (state) => state.workflowLogs,
  (newLogs, previousLogs) => {
    if (newLogs.length > previousLogs.length) {
      const { task, agent, metadata } = newLogs[newLogs.length - 1];
      if (newLogs[newLogs.length - 1].logType === 'TaskStatusUpdate') {
        switch (task.status) {
          case TASK_STATUS_enum.DONE:
            console.log('Task Completed', {
              taskDescription: task.description,
              agentName: agent.name,
              agentModel: agent.llmConfig.model,
              duration: metadata.duration,
              llmUsageStats: metadata.llmUsageStats,
              costDetails: metadata.costDetails,
            });
            break;
          case TASK_STATUS_enum.DOING:
          case TASK_STATUS_enum.BLOCKED:
          case TASK_STATUS_enum.REVISE:
          case TASK_STATUS_enum.TODO:
            console.log('Task Status Update', {
              taskDescription: task.description,
              taskStatus: task.status,
              agentName: agent.name,
            });
            break;
          default:
            console.warn('Encountered an unexpected task status:', task.status);
            break;
        }
      }
    }
  }
);
```

For more details on how to utilize observability features in KaibanJS, please visit the documentation.
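Once logs like these are available, totals for token usage and cost can be derived with a simple reduction. The sketch below runs on hand-written sample logs. The `summarizeLogs` helper is hypothetical, and the inner field names (`inputTokens`, `outputTokens`, `totalCost`) and the `'DONE'` status string are assumptions about the log shape, so adjust them to what your logs actually contain.

```javascript
// Sample logs shaped like the entries read in the subscription above.
// The fields inside llmUsageStats and costDetails are assumed for illustration.
const sampleLogs = [
  {
    logType: 'TaskStatusUpdate',
    task: { description: 'Research', status: 'DOING' },
    agent: { name: 'Researcher' },
    metadata: {},
  },
  {
    logType: 'TaskStatusUpdate',
    task: { description: 'Research', status: 'DONE' },
    agent: { name: 'Researcher' },
    metadata: {
      duration: 12.5,
      llmUsageStats: { inputTokens: 1200, outputTokens: 300 },
      costDetails: { totalCost: 0.01 },
    },
  },
  {
    logType: 'TaskStatusUpdate',
    task: { description: 'Summarize', status: 'DONE' },
    agent: { name: 'Writer' },
    metadata: {
      duration: 7,
      llmUsageStats: { inputTokens: 800, outputTokens: 200 },
      costDetails: { totalCost: 0.02 },
    },
  },
];

// Add up usage and cost across all completed-task log entries.
function summarizeLogs(logs) {
  const totals = { tasksCompleted: 0, inputTokens: 0, outputTokens: 0, totalCost: 0 };
  for (const log of logs) {
    if (log.logType !== 'TaskStatusUpdate' || log.task.status !== 'DONE') continue;
    const usage = log.metadata.llmUsageStats ?? {};
    const cost = log.metadata.costDetails ?? {};
    totals.tasksCompleted += 1;
    totals.inputTokens += usage.inputTokens ?? 0;
    totals.outputTokens += usage.outputTokens ?? 0;
    totals.totalCost += cost.totalCost ?? 0;
  }
  return totals;
}

console.log(summarizeLogs(sampleLogs));
```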

- Official Documentation

- LLM-friendly Documentation - Optimized for AI tools and coding assistants

- Join Our Discord

KaibanJS aims to be compatible with major front-end frameworks like React, Vue, Angular, and NextJS, making it a versatile choice for developers. The JavaScript ecosystem is a "bit complex...". If you have any problems, please tell us and we'll help you fix them.

There are about 20 million JavaScript developers worldwide, yet most AI frameworks are originally written in Python. Others are mere adaptations for JavaScript.

This puts all of us JavaScript developers at a disadvantage in the AI race. But not anymore...

KaibanJS changes the game by aiming to offer a robust, easy-to-use AI multi-agent framework designed specifically for the JavaScript ecosystem.

```javascript
const writtenBy = `Another JS Dev Who Doesn't Want to Learn Python to do meaningful AI Stuff.`;

console.log(writtenBy);
```

Join the Discord community to connect with other developers and get support. Follow us on Twitter for the latest updates.

We welcome contributions from the community. Please read the contributing guidelines before submitting pull requests.

KaibanJS is MIT licensed.

Similar Open Source Tools

axar

AXAR AI is a lightweight framework designed for building production-ready agentic applications using TypeScript. It aims to simplify the process of creating robust, production-grade LLM-powered apps by focusing on familiar coding practices without unnecessary abstractions or steep learning curves. The framework provides structured, typed inputs and outputs, familiar and intuitive patterns like dependency injection and decorators, explicit control over agent behavior, real-time logging and monitoring tools, minimalistic design with little overhead, model agnostic compatibility with various AI models, and streamed outputs for fast and accurate results. AXAR AI is ideal for developers working on real-world AI applications who want a tool that gets out of the way and allows them to focus on shipping reliable software.

flow-prompt

Flow Prompt is a dynamic library for managing and optimizing prompts for large language models. It facilitates budget-aware operations, dynamic data integration, and efficient load distribution. Features include CI/CD testing, dynamic prompt development, multi-model support, real-time insights, and prompt testing and evolution.

vinagent

Vinagent is a lightweight and flexible library designed for building smart agent assistants across various industries. It provides a simple yet powerful foundation for creating AI-powered customer service bots, data analysis assistants, or domain-specific automation agents. With its modular tool system, users can easily extend their agent's capabilities by integrating a wide range of tools that are self-contained, well-documented, and can be registered dynamically. Vinagent allows users to scale and adapt their agents to new tasks or environments effortlessly.

Trace

Trace is a new AutoDiff-like tool for training AI systems end-to-end with general feedback. It generalizes the back-propagation algorithm by capturing and propagating an AI system's execution trace. Implemented as a PyTorch-like Python library, users can write Python code directly and use Trace primitives to optimize certain parts, similar to training neural networks.

instructor-js

Instructor is a TypeScript library for structured extraction, powered by LLMs and designed for simplicity, transparency, and control, with a user-centric design. Whether you're a seasoned developer or just starting out, you'll find Instructor's approach intuitive and steerable.

experts

Experts.js is a tool that simplifies the creation and deployment of OpenAI's Assistants, allowing users to link them together as Tools to create a Panel of Experts system with expanded memory and attention to detail. It leverages the new Assistants API from OpenAI, which offers advanced features such as referencing attached files & images as knowledge sources, supporting instructions up to 256,000 characters, integrating with 128 tools, and utilizing the Vector Store API for efficient file search. Experts.js introduces Assistants as Tools, enabling the creation of Multi AI Agent Systems where each Tool is an LLM-backed Assistant that can take on specialized roles or fulfill complex tasks.

ActionWeaver

ActionWeaver is an AI application framework designed for simplicity, relying on OpenAI and Pydantic. It supports both OpenAI API and Azure OpenAI service. The framework allows for function calling as a core feature, extensibility to integrate any Python code, function orchestration for building complex call hierarchies, and telemetry and observability integration. Users can easily install ActionWeaver using pip and leverage its capabilities to create, invoke, and orchestrate actions with the language model. The framework also provides structured extraction using Pydantic models and allows for exception handling customization. Contributions to the project are welcome, and users are encouraged to cite ActionWeaver if found useful.

swarmgo

SwarmGo is a Go package designed to create AI agents capable of interacting, coordinating, and executing tasks. It focuses on lightweight agent coordination and execution, offering powerful primitives like Agents and handoffs. SwarmGo enables building scalable solutions with rich dynamics between tools and networks of agents, all while keeping the learning curve low. It supports features like memory management, streaming support, concurrent agent execution, LLM interface, and structured workflows for organizing and coordinating multiple agents.

web-llm

WebLLM is a modular and customizable JavaScript package that brings language model chats directly to web browsers with hardware acceleration. Everything runs inside the browser with no server support and is accelerated with WebGPU. WebLLM is fully compatible with the OpenAI API. That is, you can use the same OpenAI API on any open-source models locally, with functionalities including JSON mode, function calling, streaming, etc. This opens up many opportunities to build AI assistants for everyone and to preserve privacy while enjoying GPU acceleration.

embodied-agents

Embodied Agents is a toolkit for integrating large multi-modal models into existing robot stacks with just a few lines of code. It provides consistency, reliability, scalability, and is configurable to any observation and action space. The toolkit is designed to reduce complexities involved in setting up inference endpoints, converting between different model formats, and collecting/storing datasets. It aims to facilitate data collection and sharing among roboticists by providing Python-first abstractions that are modular, extensible, and applicable to a wide range of tasks. The toolkit supports asynchronous and remote thread-safe agent execution for maximal responsiveness and scalability, and is compatible with various APIs like HuggingFace Spaces, Datasets, Gymnasium Spaces, Ollama, and OpenAI. It also offers automatic dataset recording and optional uploads to the HuggingFace hub.

superpipe

Superpipe is a lightweight framework designed for building, evaluating, and optimizing data transformation and data extraction pipelines using LLMs. It allows users to easily combine their favorite LLM libraries with Superpipe's building blocks to create pipelines tailored to their unique data and use cases. The tool facilitates rapid prototyping, evaluation, and optimization of end-to-end pipelines for tasks such as classification and evaluation of job departments based on work history. Superpipe also provides functionalities for evaluating pipeline performance, optimizing parameters for cost, accuracy, and speed, and conducting grid searches to experiment with different models and prompts.

openagi

OpenAGI is a framework designed to make the development of autonomous human-like agents accessible to all. It aims to pave the way towards open agents and eventually AGI for everyone. The initiative strongly believes in the transformative power of AI and offers developers a platform to create autonomous human-like agents. OpenAGI features a flexible agent architecture, streamlined integration and configuration processes, and automated/manual agent configuration generation. It can be used in education for personalized learning experiences, in finance and banking for fraud detection and personalized banking advice, and in healthcare for patient monitoring and disease diagnosis.

VMind

VMind is an open-source solution for intelligent visualization, providing an intelligent chart component based on LLM by VisActor. It allows users to create chart narrative works with natural language interaction, edit charts through dialogue, and export narratives as videos or GIFs. The tool is easy to use, scalable, supports various chart types, and offers one-click export functionality. Users can customize chart styles, specify themes, and aggregate data using LLM models. VMind aims to enhance efficiency in creating data visualization works through dialogue-based editing and natural language interaction.

lionagi

LionAGI is a robust framework for orchestrating multi-step AI operations with precise control. It allows users to bring together multiple models, advanced reasoning, tool integrations, and custom validations in a single coherent pipeline. The framework is structured, expandable, controlled, and transparent, offering features like real-time logging, message introspection, and tool usage tracking. LionAGI supports advanced multi-step reasoning with ReAct, integrates with Anthropic's Model Context Protocol, and provides observability and debugging tools. Users can seamlessly orchestrate multiple models, integrate with Claude Code CLI SDK, and leverage a fan-out fan-in pattern for orchestration. The framework also offers optional dependencies for additional functionalities like reader tools, local inference support, rich output formatting, database support, and graph visualization.

CEO-Agentic-AI-Framework

CEO-Agentic-AI-Framework is an ultra-lightweight Agentic AI framework based on the ReAct paradigm. It supports mainstream LLMs and is stronger than Swarm. The framework allows users to build their own agents, assign tasks, and interact with them through a set of predefined abilities. Users can customize agent personalities, grant and deprive abilities, and assign queries for specific tasks. CEO also supports multi-agent collaboration scenarios, where different agents with distinct capabilities can work together to achieve complex tasks. The framework provides a quick start guide, examples, and detailed documentation for seamless integration into research projects.

For similar tasks

lib_resume_builder_AIHawk

`lib_resume_builder_AIHawk` is a Python library that simplifies the creation of personalized, professional resumes by integrating with GPT models. It allows users to generate tailored resumes based on job descriptions with various styles, offering a flexible approach to resume building with minimal effort.

generative-ai-dart

The Google Generative AI SDK for Dart enables developers to utilize cutting-edge Large Language Models (LLMs) for creating language applications. It provides access to the Gemini API for generating content using state-of-the-art models. Developers can integrate the SDK into their Dart or Flutter applications to leverage powerful AI capabilities. It is recommended to use the SDK for server-side API calls to ensure the security of API keys and protect against potential key exposure in mobile or web apps.

SemanticKernel.Assistants

This repository contains an assistant proposal for the Semantic Kernel, allowing the usage of assistants without relying on OpenAI Assistant APIs. It runs locally planners and plugins for the assistants, providing scenarios like Assistant with Semantic Kernel plugins, Multi-Assistant conversation, and AutoGen conversation. The Semantic Kernel is a lightweight SDK enabling integration of AI Large Language Models with conventional programming languages, offering functions like semantic functions, native functions, and embeddings-based memory. Users can bring their own model for the assistants and host them locally. The repository includes installation instructions, usage examples, and information on creating new conversation threads with the assistant.

ezlocalai

ezlocalai is an artificial intelligence server that simplifies running multimodal AI models locally. It handles model downloading and server configuration based on hardware specs. It offers OpenAI Style endpoints for integration, voice cloning, text-to-speech, voice-to-text, and offline image generation. Users can modify environment variables for customization. Supports NVIDIA GPU and CPU setups. Provides demo UI and workflow visualization for easy usage.

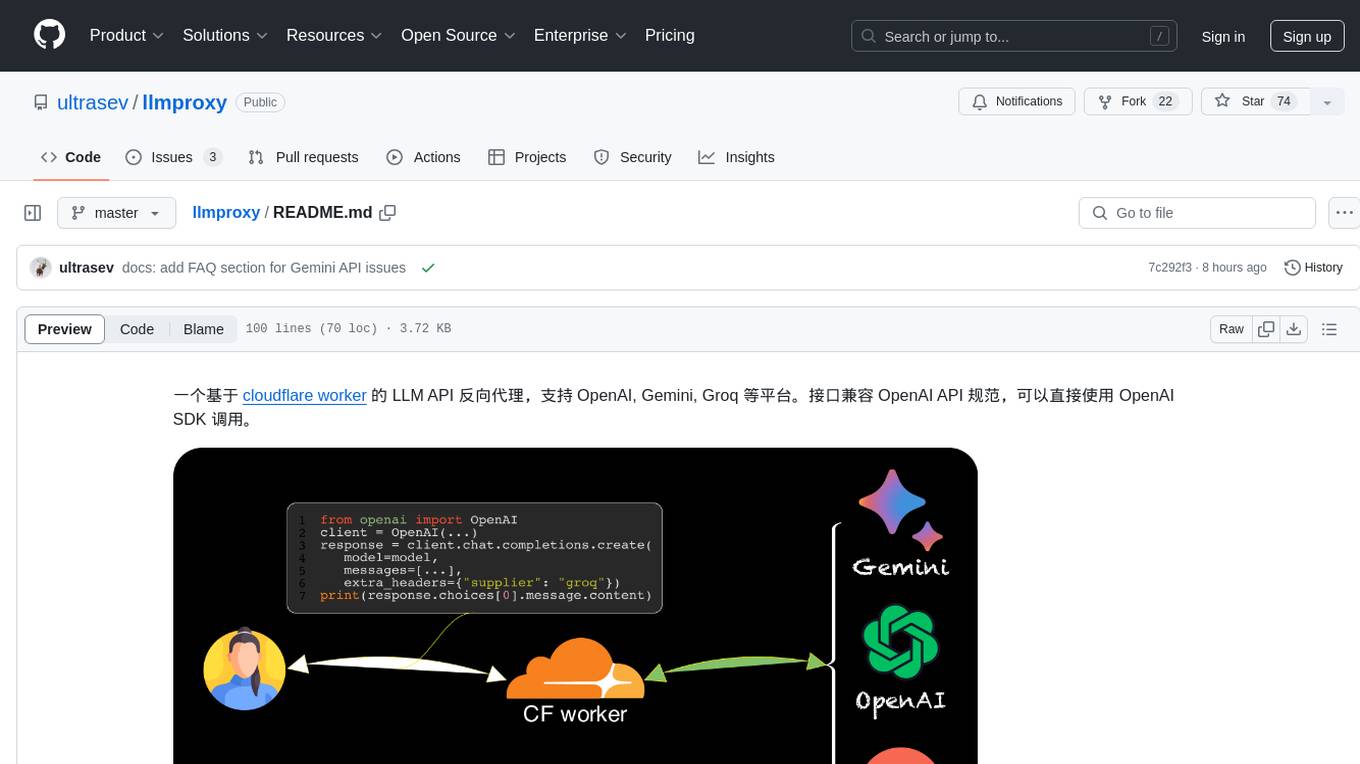

llmproxy

llmproxy is a reverse proxy for LLM API based on Cloudflare Worker, supporting platforms like OpenAI, Gemini, and Groq. The interface is compatible with the OpenAI API specification and can be directly accessed using the OpenAI SDK. It provides a convenient way to interact with various AI platforms through a unified API endpoint, enabling seamless integration and usage in different applications.

gemini-api-quickstart

This repository contains a simple Python Flask App utilizing the Google AI Gemini API to explore multi-modal capabilities. It provides a basic UI and Flask backend for easy integration and testing. The app allows users to interact with the AI model through chat messages, making it a great starting point for developers interested in AI-powered applications.

FFAIVideo

FFAIVideo is a lightweight Node.js project that uses popular LLMs to intelligently generate short videos. It supports multiple LLM providers such as OpenAI, Moonshot, Azure, g4f, Google Gemini, etc. Users can input text to automatically synthesize video content with subtitles, background music, and customizable settings. The project integrates Microsoft Edge's online text-to-speech service for voice options and uses the Pexels website for video resources. Installation of FFmpeg is required. Inspired by MoneyPrinterTurbo, MoneyPrinter, and MsEdgeTTS, FFAIVideo is designed for front-end developers, with minimal dependencies and simple usage.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM:

- Set LLM usage limits for users on different pricing tiers

- Track LLM usage on a per user and per organization basis

- Block or redact requests containing PIIs

- Improve LLM reliability with failovers, retries and caching

- Distribute API keys with rate limits and cost limits for internal development/production use cases

- Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.