openagi

Paving the way for open agents and AGI for all.

Stars: 291

OpenAGI is a framework designed to make the development of autonomous human-like agents accessible to all. It aims to pave the way towards open agents and eventually AGI for everyone. The initiative strongly believes in the transformative power of AI and offers developers a platform to create autonomous human-like agents. OpenAGI features a flexible agent architecture, streamlined integration and configuration processes, and automated/manual agent configuration generation. It can be used in education for personalized learning experiences, in finance and banking for fraud detection and personalized banking advice, and in healthcare for patient monitoring and disease diagnosis.

README:

OpenAGI aims to make human-like agents accessible to everyone, thereby paving the way towards open agents and, eventually, AGI for everyone. We strongly believe in the transformative power of AI and are confident that this initiative will significantly contribute to solving many real-life problems. Currently, OpenAGI is designed to offer developers a framework for creating autonomous human-like agents.

👉 Join our Discord community!- Setup a virtual environment.

# For Mac and Linux users

python3 -m venv venv

source venv/bin/activate

# For Windows users

python -m venv venv

venv/scripts/activate- Install the openagi

pip install openagior

git clone https://github.com/aiplanethub/openagi.git

pip install -e .

Workers are used to create a Multi-Agent architecture.

Follow this example to create a Trip Planner Agent that helps you plan the itinerary to SF.

from openagi.agent import Admin

from openagi.planner.task_decomposer import TaskPlanner

from openagi.actions.tools.ddg_search import DuckDuckGoSearch

from openagi.llms.openai import OpenAIModel

from openagi.worker import Worker

plan = TaskPlanner(human_intervene=False)

action = DuckDuckGoSearch

import os

os.environ['OPENAI_API_KEY'] = "sk-xxxx"

config = OpenAIModel.load_from_env_config()

llm = OpenAIModel(config=config)

trip_plan = Worker(

role="Trip Planner",

instructions="""

User loves calm places, suggest the best itinerary accordingly.

""",

actions=[action],

max_iterations=10)

admin = Admin(

llm=llm,

actions=[action],

planner=plan,

)

admin.assign_workers([trip_plan])

res = admin.run(

query="Give me total 3 Days Trip to San francisco Bay area",

description="You are a knowledgeable local guide with extensive information about the city, it's attractions and customs",

)

print(res)Lets build a Sports Agent now that can run autonomously without any Workers.

from openagi.planner.task_decomposer import TaskPlanner

from openagi.actions.tools.tavilyqasearch import TavilyWebSearchQA

from openagi.agent import Admin

from openagi.llms.gemini import GeminiModel

import os

os.environ['TAVILY_API_KEY'] = "<replace with Tavily key>"

os.environ['GOOGLE_API_KEY'] = "<replace with Gemini key>"

os.environ['Gemini_MODEL'] = "gemini-1.5-flash"

os.environ['Gemini_TEMP'] = "0.1"

gemini_config = GeminiModel.load_from_env_config()

llm = GeminiModel(config=gemini_config)

# define the planner

plan = TaskPlanner(autonomous=True,human_intervene=True)

admin = Admin(

actions = [TavilyWebSearchQA],

planner = plan,

llm = llm,

)

res = admin.run(

query="I need cricket updates from India vs Sri lanka 2024 ODI match in Sri Lanka",

description=f"give me the results of India vs Sri Lanka ODI and respective Man of the Match",

)

print(res)With LTM, OpenAGI agents can now:

- Recall past interactions to provide continuity in conversations.

- Learn and adapt based on user inputs over time.

- Deliver contextually relevant responses by referencing previous conversations.

- Improve their accuracy and efficiency with each successive interaction.

import os

from openagi.agent import Admin

from openagi.llms.openai import OpenAIModel

from openagi.memory import Memory

from openagi.planner.task_decomposer import TaskPlanner

from openagi.worker import Worker

from openagi.actions.tools.ddg_search import DuckDuckGoSearch

memory = Memory(long_term=True)

os.environ['OPENAI_API_KEY'] = "-"

config = OpenAIModel.load_from_env_config()

llm = OpenAIModel(config=config)

web_searcher = Worker(

role="Web Researcher",

instructions="""

You are tasked with conducting web searches using DuckDuckGo.

Find the most relevant and accurate information based on the user's query.

""",

actions=[DuckDuckGoSearch],

)

admin = Admin(

actions=[DuckDuckGoSearch],

planner=TaskPlanner(human_intervene=False),

memory=memory,

llm=llm,

)

admin.assign_workers([web_searcher])

query = input("Enter your search query: ")

description = f"Find accurate and relevant information for the query: {query}"

res = admin.run(query=query,description=description)

print(res)For more queries find documentation for OpenAGI at openagi.aiplanet.com

- Education: In education, agents can provide personalized learning experiences. They adapt and tailor learning content based on student's progress, performance and interests. It can extend to automating various other administrative tasks and assist teachers in improving their productivity.

- Finance and Banking: Financial services can use agents for fraud detection, risk assessment, personalized banking advice, automating trading, and customer service. They help in analyzing large volumes of transactions to identify suspicious activities and offer tailored investment advice.

- Healthcare: Agents can be deployed to monitor patients, provide personalized health recommendations, manage patient data, and automate administrative tasks. They can also assist in diagnosing diseases based on symptoms and medical history.

For any queries/suggestions/support connect us at [email protected]

OpenAGI thrives in the rapidly evolving landscape of open-source projects. We wholeheartedly welcome contributions in various capacities, be it through innovative features, enhanced infrastructure, or refined documentation.

For a comprehensive guide on the contribution process, please click here.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for openagi

Similar Open Source Tools

openagi

OpenAGI is a framework designed to make the development of autonomous human-like agents accessible to all. It aims to pave the way towards open agents and eventually AGI for everyone. The initiative strongly believes in the transformative power of AI and offers developers a platform to create autonomous human-like agents. OpenAGI features a flexible agent architecture, streamlined integration and configuration processes, and automated/manual agent configuration generation. It can be used in education for personalized learning experiences, in finance and banking for fraud detection and personalized banking advice, and in healthcare for patient monitoring and disease diagnosis.

ag2

Ag2 is a lightweight and efficient tool for generating automated reports from data sources. It simplifies the process of creating reports by allowing users to define templates and automate the data extraction and formatting. With Ag2, users can easily generate reports in various formats such as PDF, Excel, and CSV, saving time and effort in manual report generation tasks.

swarms

Swarms provides simple, reliable, and agile tools to create your own Swarm tailored to your specific needs. Currently, Swarms is being used in production by RBC, John Deere, and many AI startups.

DemoGPT

DemoGPT is an all-in-one agent library that provides tools, prompts, frameworks, and LLM models for streamlined agent development. It leverages GPT-3.5-turbo to generate LangChain code, creating interactive Streamlit applications. The tool is designed for creating intelligent, interactive, and inclusive solutions in LLM-based application development. It offers model flexibility, iterative development, and a commitment to user engagement. Future enhancements include integrating Gorilla for autonomous API usage and adding a publicly available database for refining the generation process.

superpipe

Superpipe is a lightweight framework designed for building, evaluating, and optimizing data transformation and data extraction pipelines using LLMs. It allows users to easily combine their favorite LLM libraries with Superpipe's building blocks to create pipelines tailored to their unique data and use cases. The tool facilitates rapid prototyping, evaluation, and optimization of end-to-end pipelines for tasks such as classification and evaluation of job departments based on work history. Superpipe also provides functionalities for evaluating pipeline performance, optimizing parameters for cost, accuracy, and speed, and conducting grid searches to experiment with different models and prompts.

zshot

Zshot is a highly customizable framework for performing Zero and Few shot named entity and relationships recognition. It can be used for mentions extraction, wikification, zero and few shot named entity recognition, zero and few shot named relationship recognition, and visualization of zero-shot NER and RE extraction. The framework consists of two main components: the mentions extractor and the linker. There are multiple mentions extractors and linkers available, each serving a specific purpose. Zshot also includes a relations extractor and a knowledge extractor for extracting relations among entities and performing entity classification. The tool requires Python 3.6+ and dependencies like spacy, torch, transformers, evaluate, and datasets for evaluation over datasets like OntoNotes. Optional dependencies include flair and blink for additional functionalities. Zshot provides examples, tutorials, and evaluation methods to assess the performance of the components.

crewAI

crewAI is a cutting-edge framework for orchestrating role-playing, autonomous AI agents. By fostering collaborative intelligence, CrewAI empowers agents to work together seamlessly, tackling complex tasks. It provides a flexible and structured approach to AI collaboration, enabling users to define agents with specific roles, goals, and tools, and assign them tasks within a customizable process. crewAI supports integration with various LLMs, including OpenAI, and offers features such as autonomous task delegation, flexible task management, and output parsing. It is open-source and welcomes contributions, with a focus on improving the library based on usage data collected through anonymous telemetry.

Open-Prompt-Injection

OpenPromptInjection is an open-source toolkit for attacks and defenses in LLM-integrated applications, enabling easy implementation, evaluation, and extension of attacks, defenses, and LLMs. It supports various attack and defense strategies, including prompt injection, paraphrasing, retokenization, data prompt isolation, instructional prevention, sandwich prevention, perplexity-based detection, LLM-based detection, response-based detection, and know-answer detection. Users can create models, tasks, and apps to evaluate different scenarios. The toolkit currently supports PaLM2 and provides a demo for querying models with prompts. Users can also evaluate ASV for different scenarios by injecting tasks and querying models with attacked data prompts.

agency

Agency is a Go library designed for developers to explore Large Language Models (LLMs) and generative AI in a clean, effective, and Go-idiomatic way. It allows users to easily create custom operations, compose operations into processes, and interact with OpenAI API bindings for various tasks such as text completion, image generation, and speech-to-text conversion. The ultimate goal of Agency is to empower users to build autonomous AI systems, from chat interfaces to complex data analysis, with a focus on simplicity, flexibility, and efficiency.

xFinder

xFinder is a model specifically designed for key answer extraction from large language models (LLMs). It addresses the challenges of unreliable evaluation methods by optimizing the key answer extraction module. The model achieves high accuracy and robustness compared to existing frameworks, enhancing the reliability of LLM evaluation. It includes a specialized dataset, the Key Answer Finder (KAF) dataset, for effective training and evaluation. xFinder is suitable for researchers and developers working with LLMs to improve answer extraction accuracy.

LLMLingua

LLMLingua is a tool that utilizes a compact, well-trained language model to identify and remove non-essential tokens in prompts. This approach enables efficient inference with large language models, achieving up to 20x compression with minimal performance loss. The tool includes LLMLingua, LongLLMLingua, and LLMLingua-2, each offering different levels of prompt compression and performance improvements for tasks involving large language models.

hackingBuddyGPT

hackingBuddyGPT is a framework for testing LLM-based agents for security testing. It aims to create common ground truth by creating common security testbeds and benchmarks, evaluating multiple LLMs and techniques against those, and publishing prototypes and findings as open-source/open-access reports. The initial focus is on evaluating the efficiency of LLMs for Linux privilege escalation attacks, but the framework is being expanded to evaluate the use of LLMs for web penetration-testing and web API testing. hackingBuddyGPT is released as open-source to level the playing field for blue teams against APTs that have access to more sophisticated resources.

raga-llm-hub

Raga LLM Hub is a comprehensive evaluation toolkit for Language and Learning Models (LLMs) with over 100 meticulously designed metrics. It allows developers and organizations to evaluate and compare LLMs effectively, establishing guardrails for LLMs and Retrieval Augmented Generation (RAG) applications. The platform assesses aspects like Relevance & Understanding, Content Quality, Hallucination, Safety & Bias, Context Relevance, Guardrails, and Vulnerability scanning, along with Metric-Based Tests for quantitative analysis. It helps teams identify and fix issues throughout the LLM lifecycle, revolutionizing reliability and trustworthiness.

argilla

Argilla is a collaboration platform for AI engineers and domain experts that require high-quality outputs, full data ownership, and overall efficiency. It helps users improve AI output quality through data quality, take control of their data and models, and improve efficiency by quickly iterating on the right data and models. Argilla is an open-source community-driven project that provides tools for achieving and maintaining high-quality data standards, with a focus on NLP and LLMs. It is used by AI teams from companies like the Red Cross, Loris.ai, and Prolific to improve the quality and efficiency of AI projects.

llmware

LLMWare is a framework for quickly developing LLM-based applications including Retrieval Augmented Generation (RAG) and Multi-Step Orchestration of Agent Workflows. This project provides a comprehensive set of tools that anyone can use - from a beginner to the most sophisticated AI developer - to rapidly build industrial-grade, knowledge-based enterprise LLM applications. Our specific focus is on making it easy to integrate open source small specialized models and connecting enterprise knowledge safely and securely.

finnts

The Microsoft Finance Time Series Forecasting Framework, aka finnts or Finn, is an automated forecasting framework for producing financial forecasts. It includes an AI agent for accurate forecasting, automated feature engineering and model selection, access to 25+ models, Azure integration for parallel processing, and support for various forecast intervals and external regressors.

For similar tasks

openagi

OpenAGI is a framework designed to make the development of autonomous human-like agents accessible to all. It aims to pave the way towards open agents and eventually AGI for everyone. The initiative strongly believes in the transformative power of AI and offers developers a platform to create autonomous human-like agents. OpenAGI features a flexible agent architecture, streamlined integration and configuration processes, and automated/manual agent configuration generation. It can be used in education for personalized learning experiences, in finance and banking for fraud detection and personalized banking advice, and in healthcare for patient monitoring and disease diagnosis.

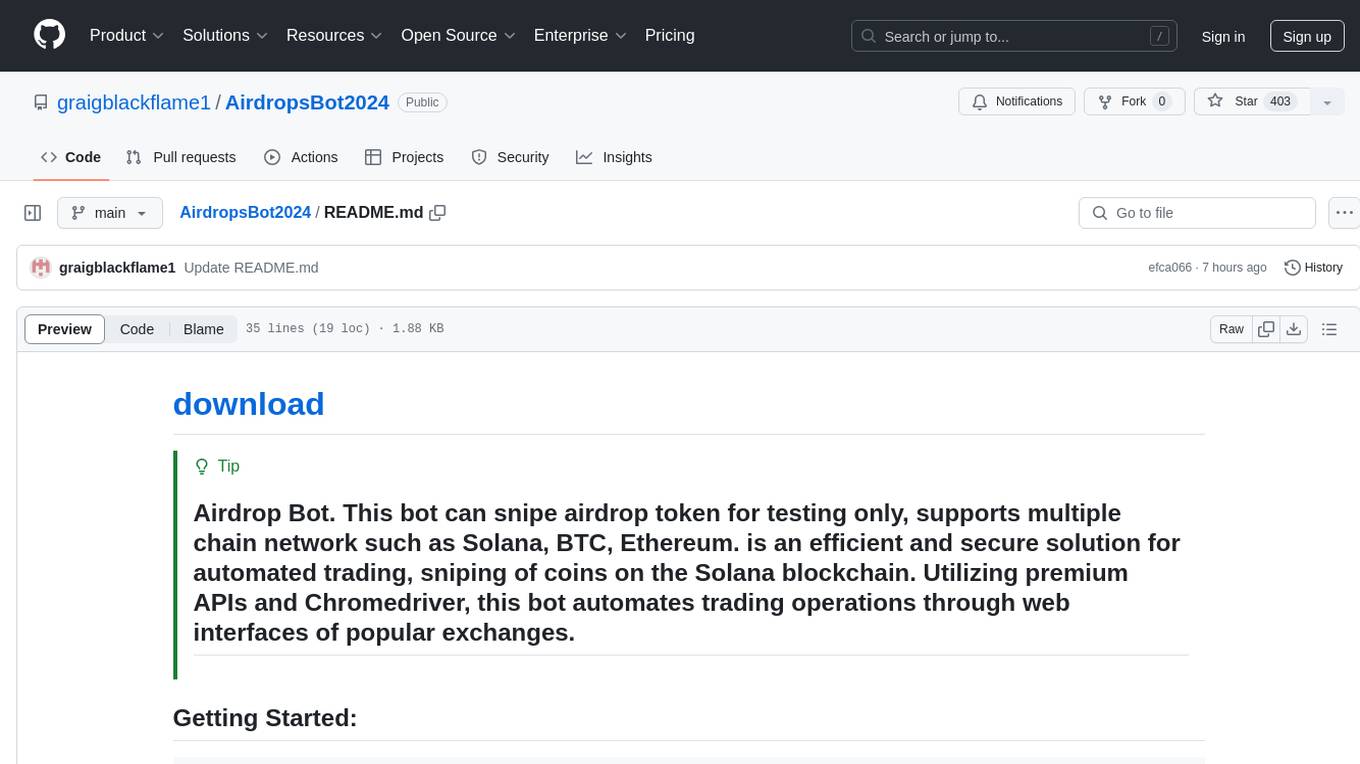

AirdropsBot2024

AirdropsBot2024 is an efficient and secure solution for automated trading and sniping of coins on the Solana blockchain. It supports multiple chain networks such as Solana, BTC, and Ethereum. The bot utilizes premium APIs and Chromedriver to automate trading operations through web interfaces of popular exchanges. It offers high-speed data analysis, in-depth market analysis, support for major exchanges, complete security and control, data visualization, advanced notification options, flexibility and adaptability in trading strategies, and profile management.

AirdropsBot2024

AirdropsBot2024 is an efficient and secure solution for automated trading and sniping of coins on the Solana blockchain. It supports multiple chain networks such as Solana, BTC, and Ethereum. The bot utilizes premium APIs and Chromedriver to automate trading operations through web interfaces of popular exchanges. It offers high-speed data analysis, in-depth market analysis, support for major exchanges, complete security and control, data visualization, advanced notification options, flexibility and adaptability in trading strategies, and profile management for saving and loading different trading strategies.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features: * Self-contained, with no need for a DBMS or cloud service. * OpenAPI interface, easy to integrate with existing infrastructure (e.g Cloud IDE). * Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.