forevervm

Securely run AI-generated code in stateful sandboxes that run forever.

Stars: 168

foreverVM is a tool that provides an API for running arbitrary, stateful Python code securely. It revolves around the concepts of machines and instructions, where machines represent stateful Python processes and instructions are Python statements and expressions that can be executed on these machines. Users can interact with machines, run instructions, and receive results. The tool ensures that machines are managed efficiently by automatically swapping them from memory to disk when idle and back when needed, allowing for running REPLs 'forever'. Users can easily get started with foreverVM using the CLI and an API token, and can leverage the SDK for more advanced functionalities.

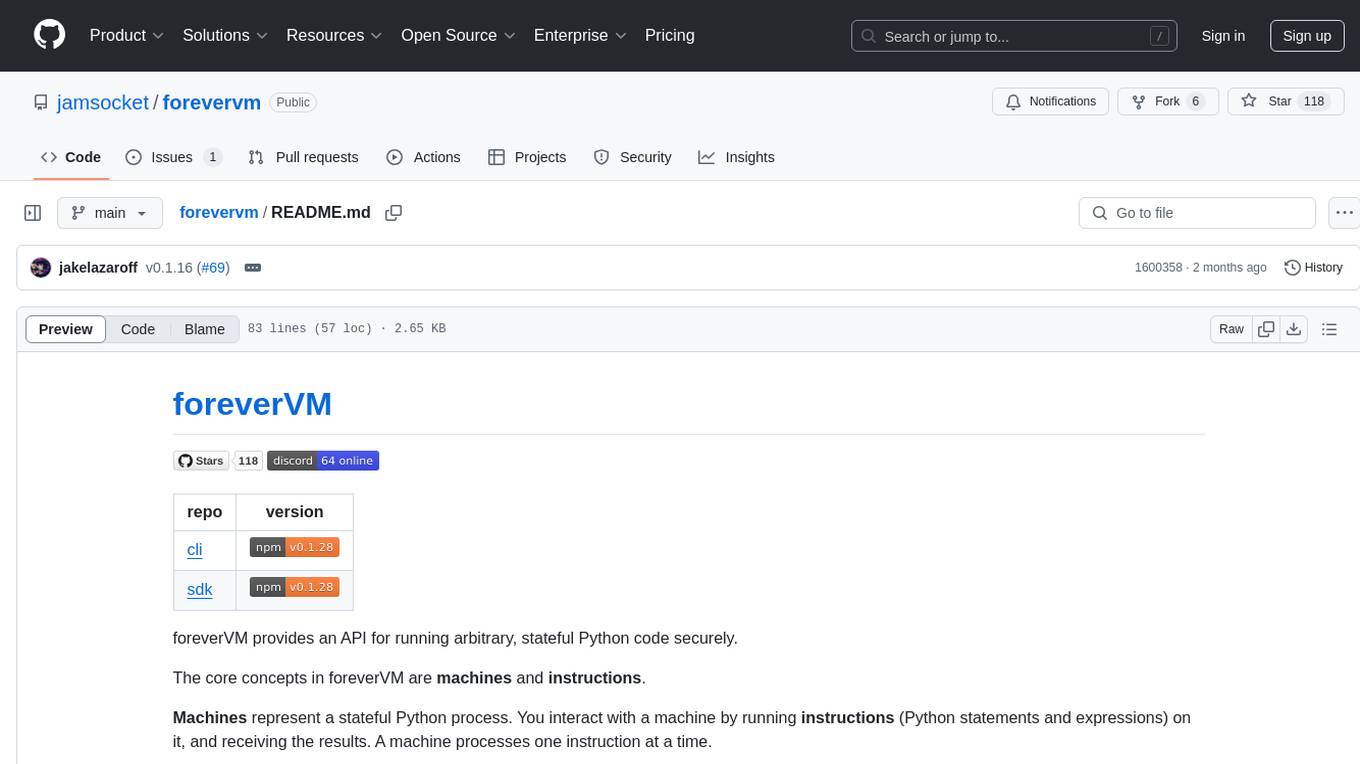

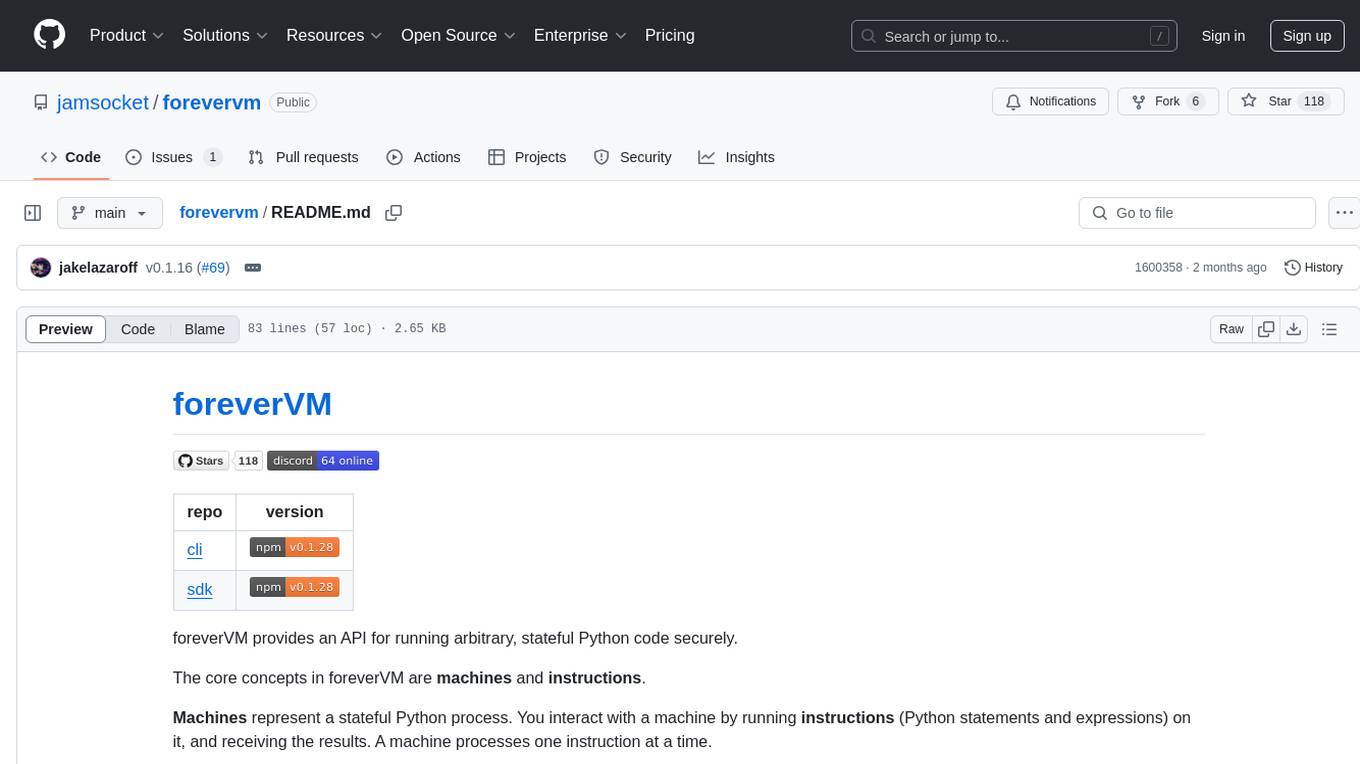

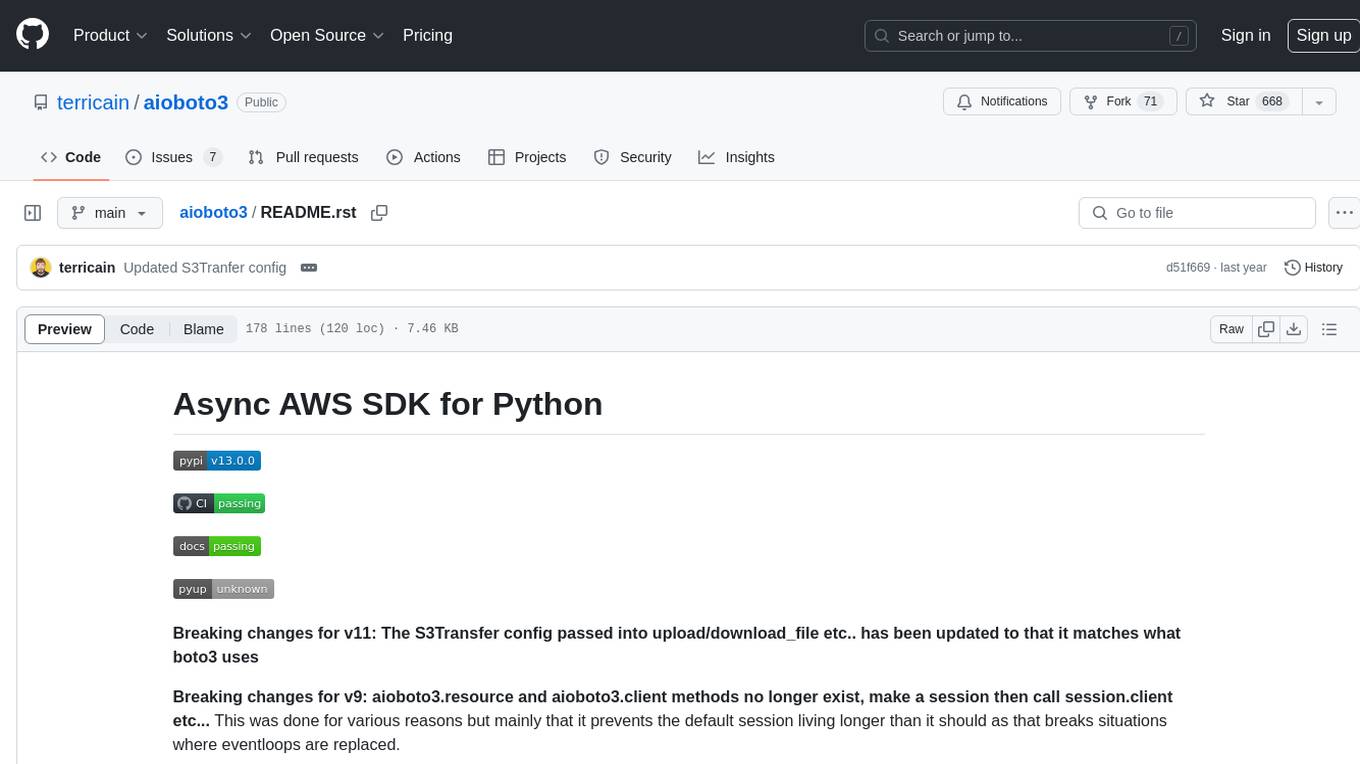

README:

| repo | version |

|---|---|

| cli |  |

| sdk |  |

foreverVM provides an API for running arbitrary, stateful Python code securely.

The core concepts in foreverVM are machines and instructions.

Machines represent a stateful Python process. You interact with a machine by running instructions (Python statements and expressions) on it, and receiving the results. A machine processes one instruction at a time.

You will need an API token (if you need one, reach out to [email protected]).

The easiest way to try out foreverVM is using the CLI. First, you will need to log in:

npx forevervm loginOnce logged in, you can open a REPL interface with a new machine:

npx forevervm replWhen foreverVM starts your machine, it gives it an ID that you can later use to reconnect to it. You can reconnect to a machine like this:

npx forevervm repl [machine_name]You can list your machines (in reverse order of creation) like this:

npx forevervm machine listYou don't need to terminate machines -- foreverVM will automatically swap them from memory to disk when they are idle, and then automatically swap them back when needed. This is what allows foreverVM to run repls “forever”.

import { ForeverVM } from '@forevervm/sdk'

const token = process.env.FOREVERVM_TOKEN

if (!token) {

throw new Error('FOREVERVM_TOKEN is not set')

}

// Initialize foreverVM

const fvm = new ForeverVM({ token })

// Connect to a new machine.

const repl = fvm.repl()

// Execute some code

let execResult = repl.exec('4 + 4')

// Get the result

console.log('result:', await execResult.result)

// We can also print stdout and stderr

execResult = repl.exec('for i in range(10):\n print(i)')

for await (const output of execResult.output) {

console.log(output.stream, output.data)

}

process.exit(0)You can create machines with tags and filter machines by tags:

import { ForeverVM } from '@forevervm/sdk'

const fvm = new ForeverVM({ token: process.env.FOREVERVM_TOKEN })

// Create a machine with tags

const machineResponse = await fvm.createMachine({

tags: {

env: 'production',

owner: 'user123',

project: 'demo'

}

})

// List machines filtered by tags

const productionMachines = await fvm.listMachines({

tags: { env: 'production' }

})You can create machines with memory limits by specifying the memory size in megabytes:

// Create a machine with 512MB memory limit

const machineResponse = await fvm.createMachine({

memory_mb: 512,

})For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for forevervm

Similar Open Source Tools

forevervm

foreverVM is a tool that provides an API for running arbitrary, stateful Python code securely. It revolves around the concepts of machines and instructions, where machines represent stateful Python processes and instructions are Python statements and expressions that can be executed on these machines. Users can interact with machines, run instructions, and receive results. The tool ensures that machines are managed efficiently by automatically swapping them from memory to disk when idle and back when needed, allowing for running REPLs 'forever'. Users can easily get started with foreverVM using the CLI and an API token, and can leverage the SDK for more advanced functionalities.

APIMyLlama

APIMyLlama is a server application that provides an interface to interact with the Ollama API, a powerful AI tool to run LLMs. It allows users to easily distribute API keys to create amazing things. The tool offers commands to generate, list, remove, add, change, activate, deactivate, and manage API keys, as well as functionalities to work with webhooks, set rate limits, and get detailed information about API keys. Users can install APIMyLlama packages with NPM, PIP, Jitpack Repo+Gradle or Maven, or from the Crates Repository. The tool supports Node.JS, Python, Java, and Rust for generating responses from the API. Additionally, it provides built-in health checking commands for monitoring API health status.

langserve

LangServe helps developers deploy `LangChain` runnables and chains as a REST API. This library is integrated with FastAPI and uses pydantic for data validation. In addition, it provides a client that can be used to call into runnables deployed on a server. A JavaScript client is available in LangChain.js.

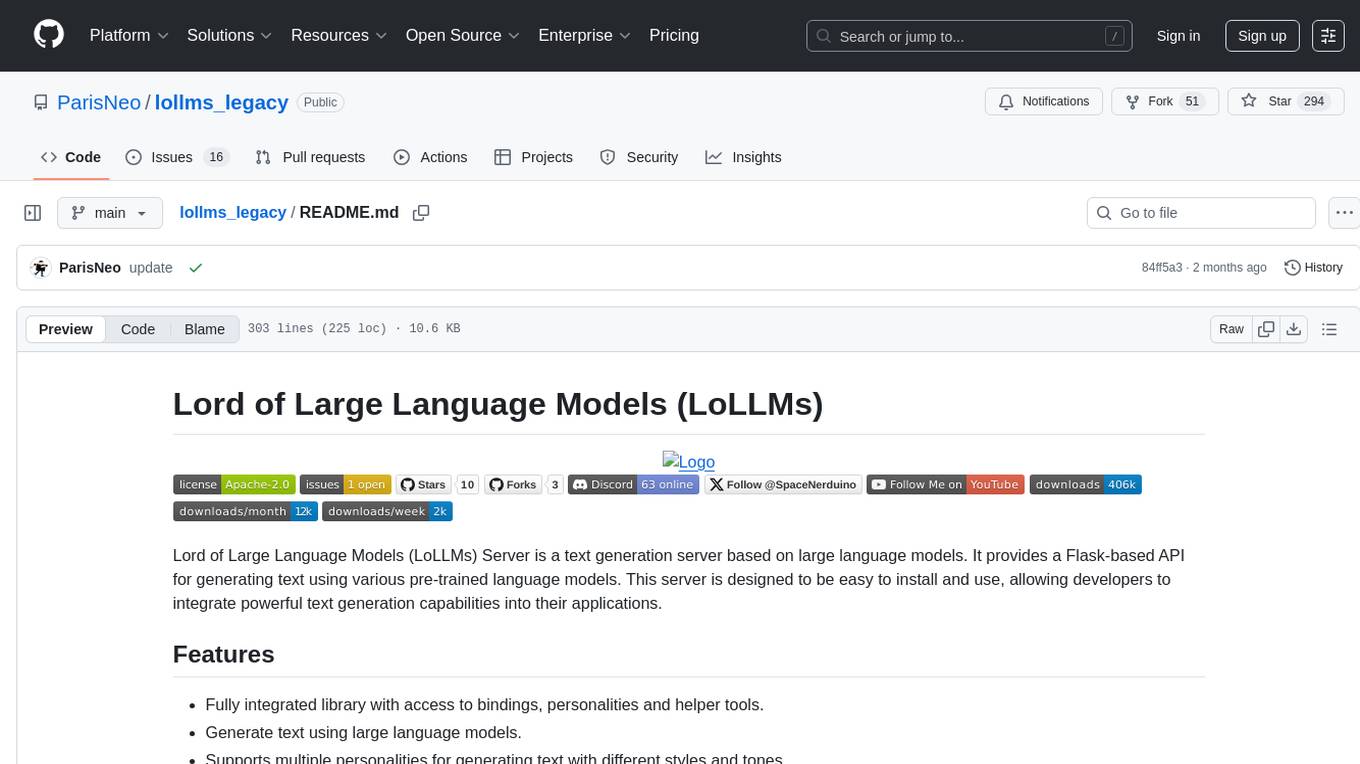

lollms_legacy

Lord of Large Language Models (LoLLMs) Server is a text generation server based on large language models. It provides a Flask-based API for generating text using various pre-trained language models. This server is designed to be easy to install and use, allowing developers to integrate powerful text generation capabilities into their applications. The tool supports multiple personalities for generating text with different styles and tones, real-time text generation with WebSocket-based communication, RESTful API for listing personalities and adding new personalities, easy integration with various applications and frameworks, sending files to personalities, running on multiple nodes to provide a generation service to many outputs at once, and keeping data local even in the remote version.

lollms

LoLLMs Server is a text generation server based on large language models. It provides a Flask-based API for generating text using various pre-trained language models. This server is designed to be easy to install and use, allowing developers to integrate powerful text generation capabilities into their applications.

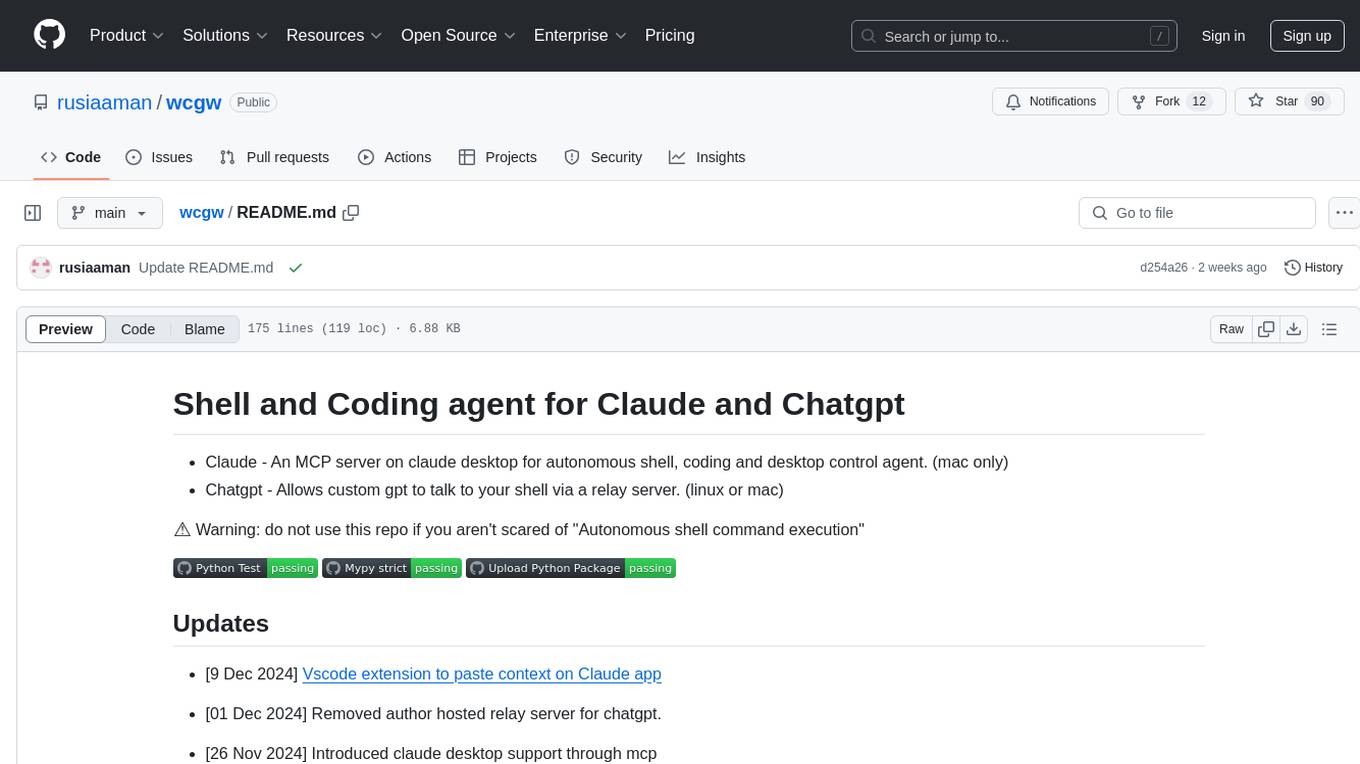

wcgw

wcgw is a shell and coding agent designed for Claude and Chatgpt. It provides full shell access with no restrictions, desktop control on Claude for screen capture and control, interactive command handling, large file editing, and REPL support. Users can use wcgw to create, execute, and iterate on tasks, such as solving problems with Python, finding code instances, setting up projects, creating web apps, editing large files, and running server commands. Additionally, wcgw supports computer use on Docker containers for desktop control. The tool can be extended with a VS Code extension for pasting context on Claude app and integrates with Chatgpt for custom GPT interactions.

aioboto3

aioboto3 is an async AWS SDK for Python that allows users to use near enough all of the boto3 client commands in an async manner just by prefixing the command with `await`. It combines the great work of boto3 and aiobotocore, enabling users to use higher level APIs provided by boto3 in an asynchronous manner. The package provides support for various AWS services such as DynamoDB, S3, Kinesis, SSM Parameter Store, and Athena. It also offers features like client-side encryption using KMS-Managed master keys and supports asyncifying `get_presigned_url`. The library closely mimics the usage of boto3 and is mainly developed to be used in async microservices.

web-llm

WebLLM is a modular and customizable javascript package that directly brings language model chats directly onto web browsers with hardware acceleration. Everything runs inside the browser with no server support and is accelerated with WebGPU. WebLLM is fully compatible with OpenAI API. That is, you can use the same OpenAI API on any open source models locally, with functionalities including json-mode, function-calling, streaming, etc. We can bring a lot of fun opportunities to build AI assistants for everyone and enable privacy while enjoying GPU acceleration.

playword

PlayWord is a tool designed to supercharge web test automation experience with AI. It provides core features such as enabling browser operations and validations using natural language inputs, as well as monitoring interface to record and dry-run test steps. PlayWord supports multiple AI services including Anthropic, Google, and OpenAI, allowing users to select the appropriate provider based on their requirements. The tool also offers features like assertion handling, frame handling, custom variables, test recordings, and an Observer module to track user interactions on web pages. With PlayWord, users can interact with web pages using natural language commands, reducing the need to worry about element locators and providing AI-powered adaptation to UI changes.

suno-api

Suno AI API is an open-source project that allows developers to integrate the music generation capabilities of Suno.ai into their own applications. The API provides a simple and convenient way to generate music, lyrics, and other audio content using Suno.ai's powerful AI models. With Suno AI API, developers can easily add music generation functionality to their apps, websites, and other projects.

blinkid-android

The BlinkID Android SDK is a comprehensive solution for implementing secure document scanning and extraction. It offers powerful capabilities for extracting data from a wide range of identification documents. The SDK provides features for integrating document scanning into Android apps, including camera requirements, SDK resource pre-bundling, customizing the UX, changing default strings and localization, troubleshooting integration difficulties, and using the SDK through various methods. It also offers options for completely custom UX with low-level API integration. The SDK size is optimized for different processor architectures, and API documentation is available for reference. For any questions or support, users can contact the Microblink team at help.microblink.com.

minja

Minja is a minimalistic C++ Jinja templating engine designed specifically for integration with C++ LLM projects, such as llama.cpp or gemma.cpp. It is not a general-purpose tool but focuses on providing a limited set of filters, tests, and language features tailored for chat templates. The library is header-only, requires C++17, and depends only on nlohmann::json. Minja aims to keep the codebase small, easy to understand, and offers decent performance compared to Python. Users should be cautious when using Minja due to potential security risks, and it is not intended for producing HTML or JavaScript output.

aiomisc

aiomisc is a Python library that provides a collection of utility functions and classes for working with asynchronous I/O in a more intuitive and efficient way. It offers features like worker pools, connection pools, circuit breaker pattern, and retry mechanisms to make asyncio code more robust and easier to maintain. The library simplifies the architecture of software using asynchronous I/O, making it easier for developers to write reliable and scalable asynchronous code.

consult-llm-mcp

Consult LLM MCP is an MCP server that enables users to consult powerful AI models like GPT-5.2, Gemini 3.0 Pro, and DeepSeek Reasoner for complex problem-solving. It supports multi-turn conversations, direct queries with optional file context, git changes inclusion for code review, comprehensive logging with cost estimation, and various CLI modes for Gemini and Codex. The tool is designed to simplify the process of querying AI models for assistance in resolving coding issues and improving code quality.

agent-mimir

Agent Mimir is a command line and Discord chat client 'agent' manager for LLM's like Chat-GPT that provides the models with access to tooling and a framework with which accomplish multi-step tasks. It is easy to configure your own agent with a custom personality or profession as well as enabling access to all tools that are compatible with LangchainJS. Agent Mimir is based on LangchainJS, every tool or LLM that works on Langchain should also work with Mimir. The tasking system is based on Auto-GPT and BabyAGI where the agent needs to come up with a plan, iterate over its steps and review as it completes the task.

neocodeium

NeoCodeium is a free AI completion plugin powered by Codeium, designed for Neovim users. It aims to provide a smoother experience by eliminating flickering suggestions and allowing for repeatable completions using the `.` key. The plugin offers performance improvements through cache techniques, displays suggestion count labels, and supports Lua scripting. Users can customize keymaps, manage suggestions, and interact with the AI chat feature. NeoCodeium enhances code completion in Neovim, making it a valuable tool for developers seeking efficient coding assistance.

For similar tasks

EasyInstruct

EasyInstruct is a Python package proposed as an easy-to-use instruction processing framework for Large Language Models (LLMs) like GPT-4, LLaMA, ChatGLM in your research experiments. EasyInstruct modularizes instruction generation, selection, and prompting, while also considering their combination and interaction.

forevervm

foreverVM is a tool that provides an API for running arbitrary, stateful Python code securely. It revolves around the concepts of machines and instructions, where machines represent stateful Python processes and instructions are Python statements and expressions that can be executed on these machines. Users can interact with machines, run instructions, and receive results. The tool ensures that machines are managed efficiently by automatically swapping them from memory to disk when idle and back when needed, allowing for running REPLs 'forever'. Users can easily get started with foreverVM using the CLI and an API token, and can leverage the SDK for more advanced functionalities.

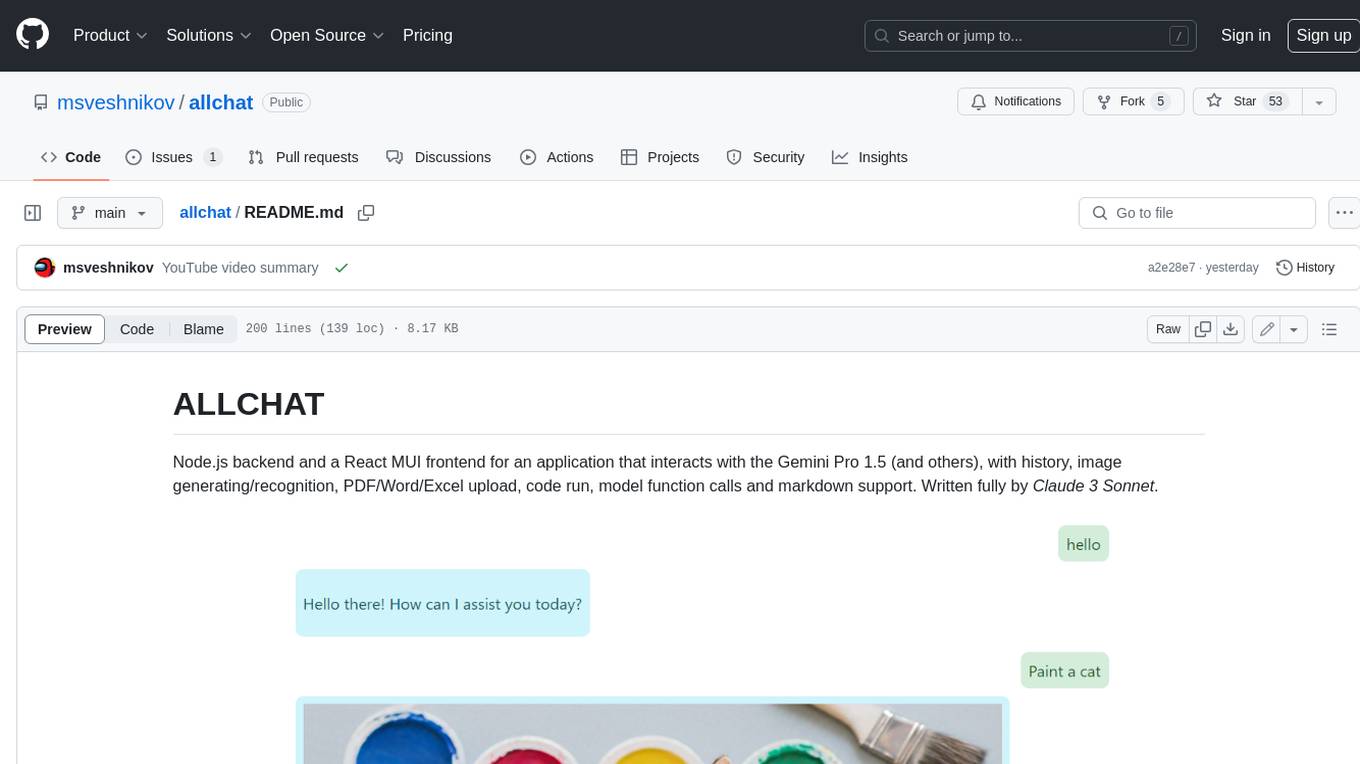

allchat

ALLCHAT is a Node.js backend and React MUI frontend for an application that interacts with the Gemini Pro 1.5 (and others), with history, image generating/recognition, PDF/Word/Excel upload, code run, model function calls and markdown support. It is a comprehensive tool that allows users to connect models to the world with Web Tools, run locally, deploy using Docker, configure Nginx, and monitor the application using a dockerized monitoring solution (Loki+Grafana).

AgentPilot

Agent Pilot is an open source desktop app for creating, managing, and chatting with AI agents. It features multi-agent, branching chats with various providers through LiteLLM. Users can combine models from different providers, configure interactions, and run code using the built-in Open Interpreter. The tool allows users to create agents, manage chats, work with multi-agent workflows, branching workflows, context blocks, tools, and plugins. It also supports a code interpreter, scheduler, voice integration, and integration with various AI providers. Contributions to the project are welcome, and users can report known issues for improvement.

gptme

GPTMe is a tool that allows users to interact with an LLM assistant directly in their terminal in a chat-style interface. The tool provides features for the assistant to run shell commands, execute code, read/write files, and more, making it suitable for various development and terminal-based tasks. It serves as a local alternative to ChatGPT's 'Code Interpreter,' offering flexibility and privacy when using a local model. GPTMe supports code execution, file manipulation, context passing, self-correction, and works with various AI models like GPT-4. It also includes a GitHub Bot for requesting changes and operates entirely in GitHub Actions. In progress features include handling long contexts intelligently, a web UI and API for conversations, web and desktop vision, and a tree-based conversation structure.

Code-Interpreter-Api

Code Interpreter API is a project that combines a scheduling center with a sandbox environment, dedicated to creating the world's best code interpreter. It aims to provide a secure, reliable API interface for remotely running code and obtaining execution results, accelerating the development of various AI agents, and being a boon to many AI enthusiasts. The project innovatively combines Docker container technology to achieve secure isolation and execution of Python code. Additionally, the project supports storing generated image data in a PostgreSQL database and accessing it through API endpoints, providing rich data processing and storage capabilities.

xlings

Xlings is a developer tool for programming learning, development, and course building. It provides features such as software installation, one-click environment setup, project dependency management, and cross-platform language package management. Additionally, it offers real-time compilation and running, AI code suggestions, tutorial project creation, automatic code checking for practice, and demo examples collection.

Code-Atlas

Code Atlas is a lightweight interpreter developed in C++ that supports the execution of multi-language code snippets and partial Markdown rendering. It consumes significantly lower resources compared to similar tools, making it suitable for resource-limited devices. It leverages llama.cpp for local large-model inference and supports cloud-based large-model APIs. The tool provides features for code execution, Markdown rendering, local AI inference, and resource efficiency.

For similar jobs

google.aip.dev

API Improvement Proposals (AIPs) are design documents that provide high-level, concise documentation for API development at Google. The goal of AIPs is to serve as the source of truth for API-related documentation and to facilitate discussion and consensus among API teams. AIPs are similar to Python's enhancement proposals (PEPs) and are organized into different areas within Google to accommodate historical differences in customs, styles, and guidance.

kong

Kong, or Kong API Gateway, is a cloud-native, platform-agnostic, scalable API Gateway distinguished for its high performance and extensibility via plugins. It also provides advanced AI capabilities with multi-LLM support. By providing functionality for proxying, routing, load balancing, health checking, authentication (and more), Kong serves as the central layer for orchestrating microservices or conventional API traffic with ease. Kong runs natively on Kubernetes thanks to its official Kubernetes Ingress Controller.

speakeasy

Speakeasy is a tool that helps developers create production-quality SDKs, Terraform providers, documentation, and more from OpenAPI specifications. It supports a wide range of languages, including Go, Python, TypeScript, Java, and C#, and provides features such as automatic maintenance, type safety, and fault tolerance. Speakeasy also integrates with popular package managers like npm, PyPI, Maven, and Terraform Registry for easy distribution.

apicat

ApiCat is an API documentation management tool that is fully compatible with the OpenAPI specification. With ApiCat, you can freely and efficiently manage your APIs. It integrates the capabilities of LLM, which not only helps you automatically generate API documentation and data models but also creates corresponding test cases based on the API content. Using ApiCat, you can quickly accomplish anything outside of coding, allowing you to focus your energy on the code itself.

aiohttp-pydantic

Aiohttp pydantic is an aiohttp view to easily parse and validate requests. You define using function annotations what your methods for handling HTTP verbs expect, and Aiohttp pydantic parses the HTTP request for you, validates the data, and injects the parameters you want. It provides features like query string, request body, URL path, and HTTP headers validation, as well as Open API Specification generation.

ain

Ain is a terminal HTTP API client designed for scripting input and processing output via pipes. It allows flexible organization of APIs using files and folders, supports shell-scripts and executables for common tasks, handles url-encoding, and enables sharing the resulting curl, wget, or httpie command-line. Users can put things that change in environment variables or .env-files, and pipe the API output for further processing. Ain targets users who work with many APIs using a simple file format and uses curl, wget, or httpie to make the actual calls.

OllamaKit

OllamaKit is a Swift library designed to simplify interactions with the Ollama API. It handles network communication and data processing, offering an efficient interface for Swift applications to communicate with the Ollama API. The library is optimized for use within Ollamac, a macOS app for interacting with Ollama models.

ollama4j

Ollama4j is a Java library that serves as a wrapper or binding for the Ollama server. It facilitates communication with the Ollama server and provides models for deployment. The tool requires Java 11 or higher and can be installed locally or via Docker. Users can integrate Ollama4j into Maven projects by adding the specified dependency. The tool offers API specifications and supports various development tasks such as building, running unit tests, and integration tests. Releases are automated through GitHub Actions CI workflow. Areas of improvement include adhering to Java naming conventions, updating deprecated code, implementing logging, using lombok, and enhancing request body creation. Contributions to the project are encouraged, whether reporting bugs, suggesting enhancements, or contributing code.