npcsh

The AI toolkit for the AI developer


`npcsh` is a Python-based command-line tool designed to integrate Large Language Models (LLMs) and Agents into one's daily workflow by making them available and easily configurable through the command-line shell. It leverages LLMs to understand natural language commands and questions, execute tasks, answer queries, and provide relevant information from local files and the web. Users can also build their own tools and call them like macros from the shell. `npcsh` allows users to take advantage of agents (i.e. NPCs) through a managed system, tailoring NPCs to specific tasks and workflows. The tool is extensible with Python, providing useful functions for interacting with LLMs, including explicit coverage for popular providers like ollama, anthropic, openai, gemini, deepseek, and openai-like providers. Users can set up a Flask server to expose their NPC team for use as a backend service, run SQL models defined in their project, execute assembly lines, and verify the integrity of their NPC team's interrelations. Users can execute bash commands directly, use their favorite command-line tools (such as Vim, Emacs, ipython, sqlite3, and git), pipe the output of these commands to LLMs, or pass LLM results to bash commands.


- `npcsh` is a Python-based AI agent framework designed to integrate Large Language Models (LLMs) and agents into one's daily workflow by making them available and easily configurable through a command-line shell as well as an extensible Python library.
- `npcsh` stores your executed commands, conversations, generated images, captured screenshots, and more in a central database.
- The NPC shell understands natural language commands and provides a suite of built-in tools (macros) for tasks like voice control, image generation, and web searching, while allowing users to create custom NPCs (AI agents) with specific personalities and capabilities for complex workflows.
- `npcsh` is extensible through its Python library implementation and offers a simple command-line interface through the `npc` CLI.
- `npcsh` integrates with local and enterprise LLM providers like Ollama, LMStudio, OpenAI, Anthropic, Gemini, and Deepseek, making it a versatile tool for both simple commands and sophisticated AI-driven tasks.

Read the docs at npcsh.readthedocs.io

npcsh can be used in a graphical user interface through the NPC Studio.

See the open source code for NPC Studio here. Download the executables (soon) at our website.

Interested in staying in the loop and hearing the latest and greatest about npcsh? Be sure to sign up for the npcsh newsletter!

Users can take advantage of npcsh through its custom shell or through a command-line interface (CLI) tool. Below is a cheat sheet that shows how to use npcsh commands in both the shell and the CLI. For the commands in the npcsh column to work, you must first start the shell by typing `npcsh`.

| Task | npc CLI | npcsh |
|---|---|---|
| Ask a generic question | npc 'prompt' | 'prompt' |
| Compile an NPC | npc compile /path/to/npc.npc | /compile /path/to/npc.npc |
| Computer use | npc plonk -n 'npc_name' -sp 'task for plonk to carry out' | /plonk -n 'npc_name' -sp 'task for plonk to carry out' |
| Conjure an NPC team from context and templates | npc init -t 'template1, template2' -ctx 'context' | /conjure -t 'template1, template2' -ctx 'context' |
| Enter a chat with an NPC (NPC needs to be compiled first) | npc chat -n npc_name | /spool npc=<npc_name> |
| Generate image | npc vixynt 'prompt' | /vixynt prompt |
| Get a sample LLM response | npc sample 'prompt' | /sample prompt for llm |
| Search for a term in the npcsh_db only in conversations with a specific npc | npc rag -n 'npc_name' -f 'filename' -q 'query' | /rag -n 'npc_name' -f 'filename' -q 'query' |
| Search the web | npc search -q "cal golden bears football schedule" -sp perplexity | /search -p perplexity 'cal bears football schedule' |
| Serve an NPC team | npc serve --port 5337 --cors='http://localhost:5137/' | /serve --port 5337 --cors='http://localhost:5137/' |
| Screenshot analysis | npc ots | /ots |
| Voice chat | npc whisper -n 'npc_name' | /whisper |
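Inside the shell, the right-hand column mixes plain prompts with `/`-prefixed macros. The sketch below is a rough, hypothetical illustration of how such input could be split; it is not npcsh's actual parser, and `parse_shell_input` is a name made up for this example. `shlex` handles the quoted arguments, and anything without a leading `/` is passed through as a prompt.

```python
import shlex
from typing import List, Optional, Tuple


def parse_shell_input(line: str) -> Tuple[Optional[str], List[str]]:
    """Split one line of shell input into (macro_name, args).

    Hypothetical helper: lines starting with '/' are treated as macro calls;
    anything else comes back as (None, [line]) so it can be sent to an LLM.
    """
    stripped = line.strip()
    if not stripped.startswith("/"):
        return None, [stripped]
    tokens = shlex.split(stripped[1:])  # respects single and double quotes
    if not tokens:
        return None, []
    return tokens[0], tokens[1:]


print(parse_shell_input("/search -p perplexity 'cal bears football schedule'"))
# ('search', ['-p', 'perplexity', 'cal bears football schedule'])
print(parse_shell_input("What is the capital of France?"))
# (None, ['What is the capital of France?'])
```

Using `shlex` rather than `str.split` keeps multi-word quoted arguments such as search queries together as a single token.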

Integrate npcsh into your Python projects for additional flexibility. Below are a few examples of how to use the library programmatically.

```python
from npcsh.llm_funcs import get_llm_response, get_stream

# ollama's llama3.2
response = get_llm_response(
    "What is the capital of France? Respond with a json object containing 'capital' as the key and the capital as the value.",
    model='llama3.2',
    provider='ollama',
    format='json',
)

# openai's gpt-4o-mini
response = get_llm_response(
    "What is the capital of France? Respond with a json object containing 'capital' as the key and the capital as the value.",
    model='gpt-4o-mini',
    provider='openai',
    format='json',
)

# anthropic's claude 3.5 haiku (latest)
response = get_llm_response(
    "What is the capital of France? Respond with a json object containing 'capital' as the key and the capital as the value.",
    model='claude-3-5-haiku-latest',
    provider='anthropic',
    format='json',
)

# alternatively, if you have NPCSH_CHAT_MODEL / NPCSH_CHAT_PROVIDER set in your ~/.npcshrc, it will use those values
response = get_llm_response(
    "What is the capital of France? Respond with a json object containing 'capital' as the key and the capital as the value.",
    format='json',
)

# stream responses
response = get_stream(
    "What is the capital of France? Respond with a json object containing 'capital' as the key and the capital as the value."
)

# First, let's demonstrate the capabilities of npcsh's check_llm_command
from npcsh.llm_funcs import check_llm_command

command = 'can you write a description of the idea of semantic degeneracy?'
response = check_llm_command(command, model='gpt-4o-mini', provider='openai')

# Now, to make the most of check_llm_command, let's add an NPC with a generic code execution tool
from npcsh.npc_compiler import NPC, Tool

code_execution_tool = Tool(
    {
        "tool_name": "execute_python",
        "description": """Executes a code block in python.
Final output from script MUST be stored in a variable called `output`.
""",
        "inputs": ["script"],
        "steps": [
            {
                "engine": "python",
                "code": """{{ script }}""",
            }
        ],
    }
)

command = """can you write a description of the idea of semantic degeneracy and save it to a file?
After, can you take that and make various versions of it from the points of
view of different sub-disciplines of natural language processing?
Finally produce a synthesis of the resultant various versions and save it.
"""

npc = NPC(
    name="NLP_Master",
    primary_directive="Provide astute analyses on topics related to NLP. Carry out relevant tasks for users to aid them in their NLP-based analyses",
    model="gpt-4o-mini",
    provider="openai",
    tools=[code_execution_tool],
)

response = check_llm_command(
    command, model="gpt-4o-mini", provider="openai", npc=npc, stream=False
)

# or by attaching an NPC Team
from npcsh.npc_compiler import NPC

response = check_llm_command(command, model='gpt-4o-mini', provider='openai')
```

This example shows how to create and initialize an NPC and use it to answer a question.

```python
import os
import sqlite3

from npcsh.npc_compiler import NPC

# Set up the database connection (expand '~' so sqlite3 receives a real path)
db_path = os.path.expanduser('~/npcsh_history.db')
conn = sqlite3.connect(db_path)

# Create the NPC
npc = NPC(
    name='Simon Bolivar',
    db_conn=conn,
    primary_directive='Liberate South America from the Spanish Royalists.',
    model='gpt-4o-mini',
    provider='openai',
)

response = npc.get_llm_response("What is the most important territory to retain in the Andes mountains?")
print(response['response'])
```

Sample output:

```
The most important territory to retain in the Andes mountains for the cause of liberation in South America would be the region of Quito in present-day Ecuador. This area is strategically significant due to its location and access to key trade routes. It also acts as a vital link between the northern and southern parts of the continent, influencing both military movements and the morale of the independence struggle. Retaining control over Quito would bolster efforts to unite various factions in the fight against Spanish colonial rule across the Andean states.
```

This example shows how to use an NPC to perform data analysis on a DataFrame using LLM commands.

```python
import os
import sqlite3

import pandas as pd
from npcsh.npc_compiler import NPC

# Set up the database connection
db_path = '~/npcsh_history.db'
conn = sqlite3.connect(os.path.expanduser(db_path))

# Make a table to put into npcsh_history.db, or change this example to use an existing table in a database you have
data = {
    'customer_feedback': ['The product is great!', 'The service was terrible.', 'I love the new feature.'],
    'customer_id': [1, 2, 3],
    'customer_rating': [5, 1, 3],
    'timestamp': ['2022-01-01', '2022-01-02', '2022-01-03'],
}
df = pd.DataFrame(data)
df.to_sql('customer_feedback', conn, if_exists='replace', index=False)

npc = NPC(
    name='Felix',
    db_conn=conn,
    primary_directive='Analyze customer feedback for sentiment.',
    model='gpt-4o-mini',
    provider='openai',
)

response = npc.analyze_db_data('Provide a detailed report on the data contained in the `customer_feedback` table?')
```
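The tool examples in this README share one contract: a `python` step's template (e.g. `{{ script }}`) is filled in with the tool's inputs, and the tool description asks that the script leave its result in a variable named `output`. The sketch below is only an assumption about how such a step could be executed, using a small regex stand-in for Jinja plus `exec`; `render` and `run_python_step` are hypothetical names, not npcsh's `Tool.execute`.

```python
import re
from typing import Any, Dict


def render(template: str, values: Dict[str, Any]) -> str:
    """Hypothetical stand-in for Jinja: replace each {{ name }} with str(values[name])."""
    return re.sub(r"\{\{\s*(\w+)\s*\}\}", lambda m: str(values[m.group(1)]), template)


def run_python_step(code_template: str, inputs: Dict[str, Any]) -> Any:
    """Render a python step, exec it, and return whatever it stored in `output`."""
    namespace: Dict[str, Any] = {}
    # exec runs arbitrary (possibly model-written) code; sandbox it in real use
    exec(render(code_template, inputs), namespace)
    return namespace.get("output")


step = {"engine": "python", "code": "{{ script }}"}
script = "ratings = [5, 1, 3]\noutput = sum(ratings) / len(ratings)"
print(run_python_step(step["code"], {"script": script}))  # 3.0
```

Reading a single agreed-upon variable keeps the contract between the LLM-written script and the caller simple; a step that never assigns `output` simply yields `None`.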

You can define a tool and execute it from within your Python script. Here we'll create a tool that will take in a PDF file, extract the text, and then answer a user request about the text.

```python
import os
import sqlite3

from jinja2 import Environment, FileSystemLoader
from npcsh.npc_compiler import NPC, Tool

# Create a Jinja environment for rendering the tool's templated steps
jinja_env = Environment(loader=FileSystemLoader('.'))

tool_data = {
    "tool_name": "pdf_analyzer",
    "inputs": ["request", "file"],
    "steps": [  # steps run in order: extract the text, then ask the LLM about it
        {
            "engine": "python",
            "code": """
# defined before `try` so the except branch can always write to it
shared_context = {}
try:
    import fitz  # PyMuPDF

    shared_context['inputs'] = '{{request}}'
    pdf_path = '{{file}}'

    # Open the PDF
    doc = fitz.open(pdf_path)
    text = ""
    # Extract text from each page
    for page_num in range(len(doc)):
        page = doc[page_num]
        text += page.get_text()
    # Close the document
    doc.close()

    print(f"Extracted text length: {len(text)}")
    if len(text) > 100:
        print(f"First 100 characters: {text[:100]}...")
    shared_context['extracted_text'] = text
    print("Text extraction completed successfully")
except Exception as e:
    error_msg = f"Error processing PDF: {str(e)}"
    print(error_msg)
    shared_context['extracted_text'] = f"Error: {error_msg}"
""",
        },
        {
            "engine": "natural",
            "code": """
{% if shared_context and shared_context.extracted_text %}
{% if shared_context.extracted_text.startswith('Error:') %}
{{ shared_context.extracted_text }}
{% else %}
Here is the text extracted from the PDF:

{{ shared_context.extracted_text }}

Please provide a response to user request: {{ request }} using the information extracted above.
{% endif %}
{% else %}
Error: No text was extracted from the PDF.
{% endif %}
""",
        },
    ],
}

# Instantiate the tool
tool = Tool(tool_data)

# Create an NPC instance
npc = NPC(
    name='starlana',
    primary_directive='Analyze text from Astrophysics papers with a keen attention to theoretical machinations and mechanisms.',
    model='llama3.2',
    provider='ollama',
    db_conn=sqlite3.connect(os.path.expanduser('~/npcsh_database.db')),
)

# Define the input values for the tool
input_values = {
    "request": "what is the point of the yuan and narayanan work?",
    "file": os.path.abspath("test_data/yuan2004.pdf"),
}
print(f"Attempting to read file: {input_values['file']}")
print(f"File exists: {os.path.exists(input_values['file'])}")

# Execute the tool
output = tool.execute(
    input_values,
    npc.tools_dict,
    jinja_env,
    'Sample Command',
    model=npc.model,
    provider=npc.provider,
    npc=npc,
)
print('Tool Output:', output)
```

NPCs can also be grouped into an `NPCTeam` with a coordinating NPC (the foreman) that orchestrates a multi-step analysis:

```python
import os

import numpy as np
import pandas as pd
from npcsh.npc_compiler import NPC, NPCTeam, Tool


# Create test data and save it to CSV
def create_test_data(filepath="sales_data.csv"):
    sales_data = pd.DataFrame(
        {
            "date": pd.date_range(start="2024-01-01", periods=90),
            "revenue": np.random.normal(10000, 2000, 90),
            # float so the 1.2x scaling below does not write floats into an int column
            "customer_count": np.random.poisson(100, 90).astype(float),
            "avg_ticket": np.random.normal(100, 20, 90),
            "region": np.random.choice(["North", "South", "East", "West"], 90),
            "channel": np.random.choice(["Online", "Store", "Mobile"], 90),
        }
    )

    # Add patterns to make the data more realistic
    sales_data["revenue"] *= 1 + 0.3 * np.sin(np.pi * np.arange(90) / 30)  # Seasonal pattern
    sales_data.loc[sales_data["channel"] == "Mobile", "revenue"] *= 1.1  # Mobile growth
    sales_data.loc[sales_data["channel"] == "Online", "customer_count"] *= 1.2  # Online customer growth

    sales_data.to_csv(filepath, index=False)
    return filepath, sales_data


code_execution_tool = Tool(
    {
        "tool_name": "execute_code",
        "description": """Executes a Python code block with access to pandas,
numpy, and matplotlib.
Results should be stored in the 'results' dict to be returned.
The only input should be a single code block with \\n characters included.
The code block must use only the libraries or methods contained within the
pandas, numpy, and matplotlib libraries or using builtin methods.
Do not include any json formatting or markdown formatting.
When generating your script, the final output must be encoded in a variable
named "output". e.g.
output = some_analysis_function(inputs, derived_data_from_inputs)
Adapt accordingly based on the scope of the analysis
""",
        "inputs": ["script"],
        "steps": [
            {
                "engine": "python",
                "code": """{{script}}""",
            }
        ],
    }
)

# Analytics team definition
analytics_team = [
    {
        "name": "analyst",
        "primary_directive": "You analyze sales performance data, focusing on revenue trends, customer behavior metrics, and market indicators. Your expertise is in extracting actionable insights from complex datasets.",
        "model": "gpt-4o-mini",
        "provider": "openai",
        "tools": [code_execution_tool],  # Only the code execution tool
    },
    {
        "name": "researcher",
        "primary_directive": "You specialize in causal analysis and experimental design. Given data insights, you determine what factors drive observed patterns and design tests to validate hypotheses.",
        "model": "gpt-4o-mini",
        "provider": "openai",
        "tools": [code_execution_tool],  # Only the code execution tool
    },
    {
        "name": "engineer",
        "primary_directive": "You implement data pipelines and optimize data processing. When given analysis requirements, you create efficient workflows to automate insights generation.",
        "model": "gpt-4o-mini",
        "provider": "openai",
        "tools": [code_execution_tool],  # Only the code execution tool
    },
]


def create_analytics_team():
    # Initialize NPCs with just the code execution tool
    npcs = []
    for npc_data in analytics_team:
        npc = NPC(
            name=npc_data["name"],
            primary_directive=npc_data["primary_directive"],
            model=npc_data["model"],
            provider=npc_data["provider"],
            tools=[code_execution_tool],  # Only code execution tool
        )
        npcs.append(npc)

    # Create the coordinator with just the code execution tool
    coordinator = NPC(
        name="coordinator",
        primary_directive="You coordinate the analytics team, ensuring each specialist contributes their expertise effectively. You synthesize insights and manage the workflow.",
        model="gpt-4o-mini",
        provider="openai",
        tools=[code_execution_tool],  # Only code execution tool
    )

    # Create the team
    team = NPCTeam(npcs=npcs, foreman=coordinator)
    return team


def main():
    # Create and save test data
    data_path, sales_data = create_test_data()

    # Initialize the team
    team = create_analytics_team()

    # Run the analysis; the prompt asks the team to work through code execution
    results = team.orchestrate(
        f"""
        Analyze the sales data at {data_path} to:
        1. Identify key performance drivers
        2. Determine if mobile channel growth is significant
        3. Recommend tests to validate growth hypotheses

        Here is a header for the data file at {data_path}:
        {sales_data.head()}

        When working with dates, ensure that date columns are converted from raw strings. e.g. use the pd.to_datetime function.
        When working with potentially messy data, handle null values by using nan versions of numpy functions or
        by filtering them with a mask.
        Use Python code execution to perform the analysis - load the data and perform statistical analysis directly.
        """
    )
    print(results)

    # Cleanup
    os.remove(data_path)


if __name__ == "__main__":
    main()
```

npcsh is available on PyPI and can be installed using pip. Before installing, make sure you have the necessary dependencies installed on your system. Below are the instructions for installing such dependencies on Linux, Mac, and Windows. If you find any other dependencies that are needed, please let us know so we can update the installation instructions to be more accommodating.

### Linux install

```bash
# for audio primarily
sudo apt-get install espeak
sudo apt-get install portaudio19-dev python3-pyaudio
sudo apt-get install alsa-base alsa-utils
sudo apt-get install libcairo2-dev
sudo apt-get install libgirepository1.0-dev
sudo apt-get install ffmpeg

# for triggers
sudo apt install inotify-tools

# And if you don't have ollama installed, use this:
curl -fsSL https://ollama.com/install.sh | sh

ollama pull llama3.2
ollama pull llava:7b
ollama pull nomic-embed-text

pip install npcsh
# if you want to install with the API libraries
pip install npcsh[lite]
# if you want the full local package set up (ollama, diffusers, transformers, cuda etc.)
pip install npcsh[local]
# if you want to use tts/stt
pip install npcsh[whisper]
# if you want everything:
pip install npcsh[all]
```

### Mac install

```bash
# mainly for audio
brew install portaudio
brew install ffmpeg
brew install pygobject3

# for triggers
brew install ...

brew install ollama
brew services start ollama
ollama pull llama3.2
ollama pull llava:7b
ollama pull nomic-embed-text

pip install npcsh
# if you want to install with the API libraries
pip install npcsh[lite]
# if you want the full local package set up (ollama, diffusers, transformers, cuda etc.)
pip install npcsh[local]
# if you want to use tts/stt
pip install npcsh[whisper]
# if you want everything:
pip install npcsh[all]
```

### Windows install

Download and install the ollama exe and ffmpeg. Then, in PowerShell, run:

```powershell
ollama pull llama3.2
ollama pull llava:7b
ollama pull nomic-embed-text

pip install npcsh
# if you want to install with the API libraries
pip install npcsh[lite]
# if you want the full local package set up (ollama, diffusers, transformers, cuda etc.)
pip install npcsh[local]
# if you want to use tts/stt
pip install npcsh[whisper]
# if you want everything:
pip install npcsh[all]
```

As of now, npcsh appears to work well with some of the core functionalities like /ots and /whisper.

Other packages that may be needed:

- python3-dev (fixes hnswlib issues with chroma db)
- xhost + (pyautogui)
- python-tkinter (pyautogui)

After it has been pip installed, npcsh can be used as a command-line tool. Start it by typing:

```bash
npcsh
```

When initialized, npcsh will generate a `.npcshrc` file in your home directory that stores your npcsh settings.

Here is an example of what the .npcshrc file might look like after this has been run.

```bash
# NPCSH Configuration File
export NPCSH_INITIALIZED=1
export NPCSH_CHAT_PROVIDER='ollama'
export NPCSH_CHAT_MODEL='llama3.2'
export NPCSH_DB_PATH='~/npcsh_history.db'
```

npcsh also comes with a set of tools and NPCs that are used in processing. It will generate a folder at `~/.npcsh/` that contains the tools and NPCs used in the shell; these are used in the absence of project-specific ones. Additionally, npcsh records interactions and compiled information about NPCs in a local SQLite database at the path specified in the `.npcshrc` file, which defaults to `~/npcsh_history.db` if not specified. When the data mode is used to load or analyze data in CSVs or PDFs, these data are stored in the same database for future reference.
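The `.npcshrc` settings are plain shell `export` lines, and the database path is stored with a literal `~` (the single quotes stop the shell from expanding it). A minimal sketch, assuming you want to read these settings from your own Python code; `parse_npcshrc` and `resolve_db_path` are hypothetical helpers, not part of npcsh.

```python
import os
import shlex
from typing import Dict


def parse_npcshrc(text: str) -> Dict[str, str]:
    """Hypothetical helper: collect KEY=value pairs from `export KEY='value'` lines."""
    settings: Dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line.startswith("export "):
            continue  # skips comments and blank lines
        # shlex removes the surrounding quotes the way a POSIX shell would
        for token in shlex.split(line[len("export "):]):
            key, sep, value = token.partition("=")
            if sep:
                settings[key] = value
    return settings


def resolve_db_path(settings: Dict[str, str]) -> str:
    """Expand '~' so sqlite3.connect() receives a real filesystem path."""
    return os.path.expanduser(settings.get("NPCSH_DB_PATH", "~/npcsh_history.db"))


sample = """# NPCSH Configuration File
export NPCSH_INITIALIZED=1
export NPCSH_CHAT_PROVIDER='ollama'
export NPCSH_CHAT_MODEL='llama3.2'
export NPCSH_DB_PATH='~/npcsh_history.db'
"""
settings = parse_npcshrc(sample)
print(settings["NPCSH_CHAT_MODEL"])  # llama3.2
print(resolve_db_path(settings))  # an absolute path ending in npcsh_history.db
```

Passing the unexpanded `'~/npcsh_history.db'` straight to `sqlite3.connect` would look for a directory literally named `~`, which is why the expansion step matters.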

The installer will automatically add this file to your shell config, but if it fails to do so you can add the following to your `.bashrc` or `.zshrc`:

```bash
# Source NPCSH configuration
if [ -f ~/.npcshrc ]; then
    . ~/.npcshrc
fi
```

We support inference via openai, anthropic, ollama, gemini, deepseek, and openai-like APIs. The default provider must be one of `['openai', 'anthropic', 'ollama', 'gemini', 'deepseek', 'openai-like']`, and the model must be one available from that provider.
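A minimal sketch of checking a configured default provider against the list above. It assumes the value comes from `NPCSH_CHAT_PROVIDER` and, as an assumption borrowed from the sample `.npcshrc`, falls back to `'ollama'` when unset; `default_provider` is a hypothetical helper, not an npcsh function.

```python
from typing import Mapping

# Provider names as listed in the paragraph above.
SUPPORTED_PROVIDERS = ("openai", "anthropic", "ollama", "gemini", "deepseek", "openai-like")


def default_provider(env: Mapping[str, str]) -> str:
    """Hypothetical helper: return NPCSH_CHAT_PROVIDER, rejecting unsupported values."""
    # Assumed fallback of 'ollama', mirroring the sample .npcshrc
    provider = env.get("NPCSH_CHAT_PROVIDER", "ollama").strip().lower()
    if provider not in SUPPORTED_PROVIDERS:
        raise ValueError(
            f"Unsupported provider {provider!r}; expected one of {SUPPORTED_PROVIDERS}"
        )
    return provider


print(default_provider({"NPCSH_CHAT_PROVIDER": "openai"}))  # openai
```

Failing early with a clear message is usually friendlier than letting an unknown provider name surface later as an obscure request error.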

To use tools that require API keys, create a `.env` file in the folder where you are working or place the relevant API keys as environment variables in your `~/.npcshrc`. If you already have these API keys set in `~/.bashrc`, `~/.zshrc`, or similar files, you need not add them to `~/.npcshrc` or to a `.env` file. Here is an example of what a `.env` file might look like:

```bash
export OPENAI_API_KEY="your_openai_key"
export ANTHROPIC_API_KEY="your_anthropic_key"
export DEEPSEEK_API_KEY='your_deepseek_key'
export GEMINI_API_KEY='your_gemini_key'
export PERPLEXITY_API_KEY='your_perplexity_key'
```

Individual NPCs can also be set to use different models and providers by setting the `model` and `provider` keys in their `.npc` files.
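The contents of a `.npc` file are not shown here, so the sketch below represents an NPC's parsed settings as a plain dict (an assumption about their shape) and falls back to the global `NPCSH_CHAT_MODEL` / `NPCSH_CHAT_PROVIDER` values when the NPC does not set its own. `effective_model` is a hypothetical helper, not part of npcsh.

```python
from typing import Any, Mapping, Tuple


def effective_model(npc_config: Mapping[str, Any], env: Mapping[str, str]) -> Tuple[str, str]:
    """Hypothetical helper: prefer the NPC's model/provider keys, else the global settings."""
    model = npc_config.get("model") or env.get("NPCSH_CHAT_MODEL")
    provider = npc_config.get("provider") or env.get("NPCSH_CHAT_PROVIDER")
    if not model or not provider:
        raise ValueError("No model/provider set on the NPC or in the environment")
    return model, provider


env = {"NPCSH_CHAT_MODEL": "llama3.2", "NPCSH_CHAT_PROVIDER": "ollama"}
print(effective_model({"name": "Felix"}, env))  # ('llama3.2', 'ollama')
print(effective_model({"name": "analyst", "model": "gpt-4o-mini", "provider": "openai"}, env))
# ('gpt-4o-mini', 'openai')
```

Falling back key by key is a simplification: an NPC that sets only `model` inherits the global provider, so it is safest to set both keys together.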

Once initialized and set up, you will find the following in your ~/.npcsh directory:

```
~/.npcsh/
├── npc_team/            # Global NPCs
│   ├── tools/           # Global tools
│   └── assembly_lines/  # Workflow pipelines
```

For cases where you wish to set up a project-specific set of NPCs, tools, and assembly lines, add an `npc_team` directory to your project and npcsh should pick up on its presence, like so:

```
./npc_team/            # Project-specific NPCs
├── tools/             # Project tools
│   └── example.tool
├── assembly_lines/    # Project workflows
│   └── example.pipe
├── models/            # Project SQL models
│   └── example.model
├── example1.npc       # Example NPC
├── example2.npc       # Example NPC
├── example1.ctx       # Example context file
└── example2.ctx       # Example context file
```
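The exact lookup rules npcsh uses are not spelled out here. As an assumption based on the description above, the sketch prefers a project's `./npc_team` and otherwise falls back to the global `~/.npcsh/npc_team`, then lists the `.npc` files it finds. `find_npc_team` and `list_npcs` are hypothetical helpers.

```python
import tempfile
from pathlib import Path
from typing import List, Optional


def find_npc_team(project_dir: Path, home: Optional[Path] = None) -> Path:
    """Hypothetical helper: return ./npc_team if the project has one, else ~/.npcsh/npc_team."""
    local = project_dir / "npc_team"
    if local.is_dir():
        return local
    home = home if home is not None else Path.home()
    return home / ".npcsh" / "npc_team"


def list_npcs(team_dir: Path) -> List[str]:
    """Names (without extension) of the .npc files directly inside a team directory."""
    return sorted(p.stem for p in team_dir.glob("*.npc"))


# Usage: build a throwaway project with one NPC and look it up
with tempfile.TemporaryDirectory() as tmp:
    project = Path(tmp) / "project"
    (project / "npc_team" / "tools").mkdir(parents=True)
    (project / "npc_team" / "example1.npc").write_text("name: example1\n")
    team = find_npc_team(project, home=Path(tmp))
    print(team.name, list_npcs(team))  # npc_team ['example1']
```

Only the top level of the team directory is scanned, so files under `tools/`, `assembly_lines/`, or `models/` are not mistaken for NPCs.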

- In v0.3.4, the structure for tools was adjusted. If you have made custom tools, please refer to the structure within `npc_compiler` to ensure that they are in the correct format. Otherwise, remove the old tools:

  ```bash
  rm ~/.npcsh/npc_team/tools/*.tool
  ```

  and then run:

  ```bash
  npcsh
  ```

  The updated tools will then be copied over into the correct location.
- Version 0.3.5 included a complete overhaul and refactoring of the `llm_funcs` module, to shorten it and to make it easier to add new models and providers.
- In version 0.3.5, the database schema for messages was changed to add npcs, models, providers, and associated attachments to data. If you have used npcsh before this version, you will need to run this migration script to update your database schema: `migrate_conversation_history_v0.3.5.py`
- Additionally, `NPCSH_MODEL` and `NPCSH_PROVIDER` have been renamed to `NPCSH_CHAT_MODEL` and `NPCSH_CHAT_PROVIDER` to provide a more consistent naming scheme, now that we have also introduced `NPCSH_VISION_MODEL`, `NPCSH_VISION_PROVIDER`, `NPCSH_EMBEDDING_MODEL`, `NPCSH_EMBEDDING_PROVIDER`, `NPCSH_REASONING_MODEL`, `NPCSH_REASONING_PROVIDER`, `NPCSH_IMAGE_GEN_MODEL`, and `NPCSH_IMAGE_GEN_PROVIDER`.
- In addition, we have added `NPCSH_API_URL` to better accommodate openai-like APIs that require a specific URL to be set, as well as `NPCSH_STREAM_OUTPUT` to indicate whether or not to use streaming in responses. It is set to 0 (false) by default, as it has only been tested and verified for a small subset of the models and providers we support (openai, anthropic, and ollama). If you try it and run into issues, please post them here so we can correct them as soon as possible!
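If an older `.npcshrc` still uses the pre-0.3.5 names, a small migration like the hypothetical `migrate_settings` below could map them onto the new ones; the sketch also reads `NPCSH_STREAM_OUTPUT` with its default of 0. This is an illustration under those assumptions, not code that ships with npcsh.

```python
from typing import Dict, Mapping

# Pre-0.3.5 names mapped to their replacements, per the notes above.
RENAMED = {"NPCSH_MODEL": "NPCSH_CHAT_MODEL", "NPCSH_PROVIDER": "NPCSH_CHAT_PROVIDER"}


def migrate_settings(env: Mapping[str, str]) -> Dict[str, str]:
    """Hypothetical helper: move old names to new ones unless the new name is already set."""
    settings = dict(env)
    for old, new in RENAMED.items():
        if old in settings and new not in settings:
            settings[new] = settings.pop(old)
    return settings


def stream_enabled(settings: Mapping[str, str]) -> bool:
    """NPCSH_STREAM_OUTPUT defaults to 0 (off); only '1' turns streaming on."""
    return settings.get("NPCSH_STREAM_OUTPUT", "0").strip() == "1"


old_rc = {"NPCSH_MODEL": "llama3.2", "NPCSH_PROVIDER": "ollama"}
new_rc = migrate_settings(old_rc)
print(new_rc)  # {'NPCSH_CHAT_MODEL': 'llama3.2', 'NPCSH_CHAT_PROVIDER': 'ollama'}
print(stream_enabled(new_rc))  # False
```

When both the old and the new name are present, the new value wins and the old key is left untouched.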

Contributions are welcome! Please submit issues and pull requests on the GitHub repository.

If you appreciate the work here, consider supporting NPC Worldwide. If you'd like to explore how to use npcsh to help your business, please reach out to [email protected] .

Coming soon! NPC Studio will be a desktop application for managing chats and agents on your own machine. Be sure to sign up for the npcsh newsletter to hear updates!

This project is licensed under the MIT License.


Alternative AI tools for npcsh

Similar Open Source Tools


vinagent

Vinagent is a lightweight and flexible library designed for building smart agent assistants across various industries. It provides a simple yet powerful foundation for creating AI-powered customer service bots, data analysis assistants, or domain-specific automation agents. With its modular tool system, users can easily extend their agent's capabilities by integrating a wide range of tools that are self-contained, well-documented, and can be registered dynamically. Vinagent allows users to scale and adapt their agents to new tasks or environments effortlessly.

awadb

AwaDB is an AI native database designed for embedding vectors. It simplifies database usage by eliminating the need for schema definition and manual indexing. The system ensures real-time search capabilities with millisecond-level latency. Built on 5 years of production experience with Vearch, AwaDB incorporates best practices from the community to offer stability and efficiency. Users can easily add and search for embedded sentences using the provided client libraries or RESTful API.

simpleAI

SimpleAI is a self-hosted alternative to the not-so-open AI API, focused on replicating main endpoints for LLM such as text completion, chat, edits, and embeddings. It allows quick experimentation with different models, creating benchmarks, and handling specific use cases without relying on external services. Users can integrate and declare models through gRPC, query endpoints using Swagger UI or API, and resolve common issues like CORS with FastAPI middleware. The project is open for contributions and welcomes PRs, issues, documentation, and more.

Toolio

Toolio is an OpenAI-like HTTP server API implementation that supports structured LLM response generation, making it conform to a JSON schema. It is useful for reliable tool calling and agentic workflows based on schema-driven output. Toolio is based on the MLX framework for Apple Silicon, specifically M1/M2/M3/M4 Macs. It allows users to host MLX-format LLMs for structured output queries and provides a command line client for easier usage of tools. The tool also supports multiple tool calls and the creation of custom tools for specific tasks.

langserve

LangServe helps developers deploy `LangChain` runnables and chains as a REST API. This library is integrated with FastAPI and uses pydantic for data validation. In addition, it provides a client that can be used to call into runnables deployed on a server. A JavaScript client is available in LangChain.js.

phidata

Phidata is a framework for building AI Assistants with memory, knowledge, and tools. It enables LLMs to have long-term conversations by storing chat history in a database, provides them with business context by storing information in a vector database, and enables them to take actions like pulling data from an API, sending emails, or querying a database. Memory and knowledge make LLMs smarter, while tools make them autonomous.

langchain-extract

LangChain Extract is a simple web server that allows you to extract information from text and files using LLMs. It is built using FastAPI, LangChain, and Postgresql. The backend closely follows the extraction use-case documentation and provides a reference implementation of an app that helps to do extraction over data using LLMs. This repository is meant to be a starting point for building your own extraction application which may have slightly different requirements or use cases.

perplexity-ai

Perplexity is a module that utilizes emailnator to generate new accounts, providing users with 5 pro queries per account creation. It enables the creation of new Gmail accounts with emailnator, ensuring unlimited pro queries. The tool requires specific Python libraries for installation and offers both a web interface and an API for different usage scenarios. Users can interact with the tool to perform various tasks such as account creation, query searches, and utilizing different modes for research purposes. Perplexity also supports asynchronous operations and provides guidance on obtaining cookies for account usage and account generation from emailnator.

ash_ai

Ash AI is a tool that provides a Model Context Protocol (MCP) server for exposing tool definitions to an MCP client. It allows for the installation of dev and production MCP servers, and supports features like OAuth2 flow with AshAuthentication, tool data access, tool execution callbacks, prompt-backed actions, and vectorization strategies. Users can also generate a chat feature for their Ash & Phoenix application using `ash_oban` and `ash_postgres`, and specify LLM API keys for OpenAI. The tool is designed to help developers experiment with tools and actions, monitor tool execution, and expose actions as tool calls.

empower-functions

Empower Functions is a family of large language models (LLMs) that provide GPT-4 level capabilities for real-world 'tool using' use cases. These models offer compatibility support to be used as drop-in replacements, enabling interactions with external APIs by recognizing when a function needs to be called and generating JSON containing necessary arguments based on user inputs. This capability is crucial for building conversational agents and applications that convert natural language into API calls, facilitating tasks such as weather inquiries, data extraction, and interactions with knowledge bases. The models can handle multi-turn conversations, choose between tools or standard dialogue, ask for clarification on missing parameters, integrate responses with tool outputs in a streaming fashion, and efficiently execute multiple functions either in parallel or sequentially with dependencies.

agent-mimir

Agent Mimir is a command line and Discord chat client 'agent' manager for LLMs like ChatGPT that provides the models with access to tooling and a framework with which to accomplish multi-step tasks. It is easy to configure your own agent with a custom personality or profession as well as enabling access to all tools that are compatible with LangchainJS. Agent Mimir is based on LangchainJS; every tool or LLM that works on Langchain should also work with Mimir. The tasking system is based on Auto-GPT and BabyAGI, where the agent needs to come up with a plan, iterate over its steps, and review as it completes the task.

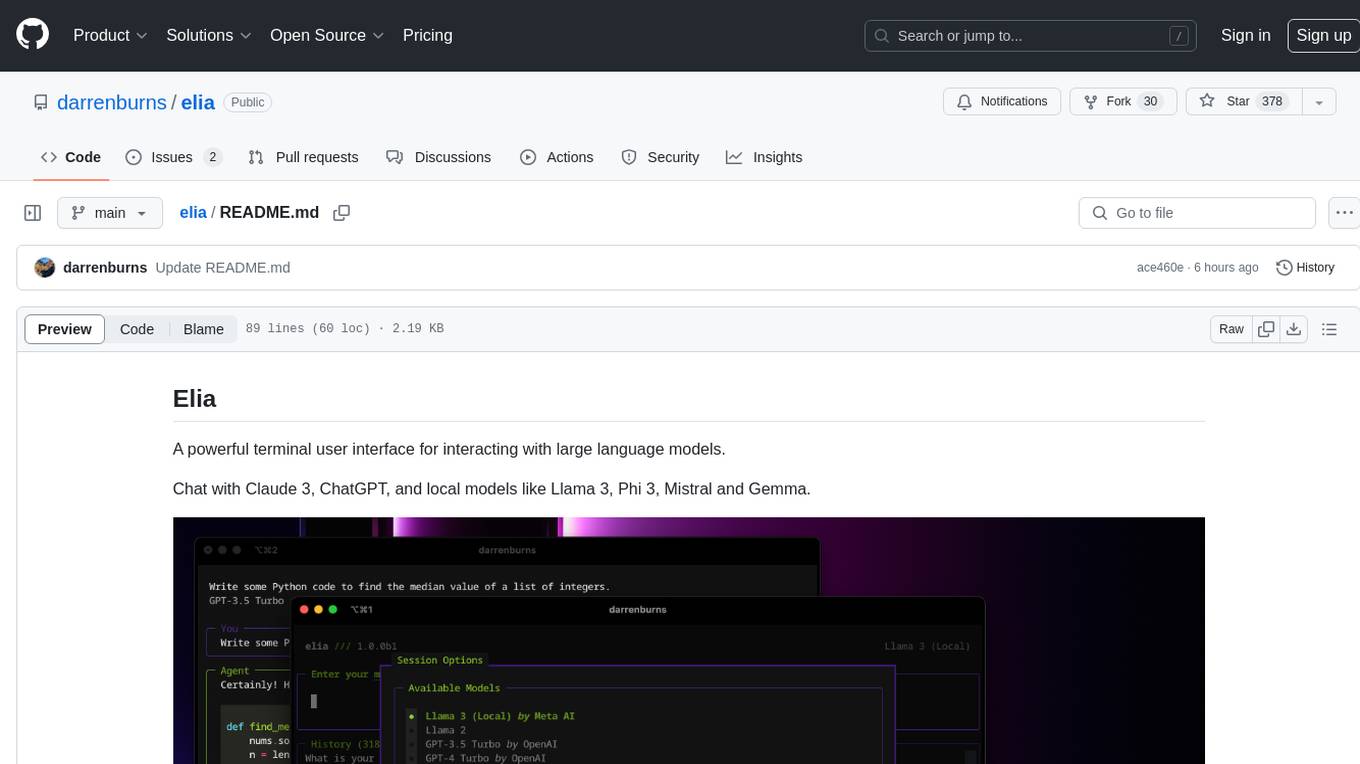

elia

Elia is a powerful terminal user interface designed for interacting with large language models. It allows users to chat with models like Claude 3, ChatGPT, Llama 3, Phi 3, Mistral, and Gemma. Conversations are stored locally in a SQLite database, ensuring privacy. Users can run local models through 'ollama' without data leaving their machine. Elia offers easy installation with pipx and supports various environment variables for different models. It provides a quick start to launch chats and manage local models. Configuration options are available to customize default models, system prompts, and add new models. Users can import conversations from ChatGPT and wipe the database when needed. Elia aims to enhance user experience in interacting with language models through a user-friendly interface.

experts

Experts.js is a tool that simplifies the creation and deployment of OpenAI's Assistants, allowing users to link them together as Tools to create a Panel of Experts system with expanded memory and attention to detail. It leverages the new Assistants API from OpenAI, which offers advanced features such as referencing attached files & images as knowledge sources, supporting instructions up to 256,000 characters, integrating with 128 tools, and utilizing the Vector Store API for efficient file search. Experts.js introduces Assistants as Tools, enabling the creation of Multi AI Agent Systems where each Tool is an LLM-backed Assistant that can take on specialized roles or fulfill complex tasks.

CoPilot

TigerGraph CoPilot is an AI assistant that combines graph databases and generative AI to enhance productivity across various business functions. It includes three core component services: InquiryAI for natural language assistance, SupportAI for knowledge Q&A, and QueryAI for GSQL code generation. Users can interact with CoPilot through a chat interface on TigerGraph Cloud and through APIs. During the beta, CoPilot relies on external LLM services, with support for TigerGraph's own LLM planned for future releases. It aims to improve the contextual relevance and accuracy of answers to natural-language questions by building knowledge graphs and using RAG. CoPilot is extensible and can be configured with different LLM providers, graph schemas, and LangChain tools.

ActionWeaver

ActionWeaver is an AI application framework designed for simplicity, relying on OpenAI and Pydantic. It supports both the OpenAI API and the Azure OpenAI service. The framework offers function calling as a core feature, extensibility to integrate any Python code, function orchestration for building complex call hierarchies, and telemetry and observability integration. Users can install ActionWeaver using pip and leverage its capabilities to create, invoke, and orchestrate actions with the language model. The framework also provides structured extraction using Pydantic models and allows for customized exception handling. Contributions to the project are welcome, and users are encouraged to cite ActionWeaver if they find it useful.

For similar tasks

AivisSpeech-Engine

AivisSpeech-Engine is a powerful open-source tool for speech recognition and synthesis. It provides state-of-the-art algorithms for converting speech to text and text to speech. The tool is designed to be user-friendly and customizable, allowing developers to easily integrate speech capabilities into their applications. With AivisSpeech-Engine, users can transcribe audio recordings, create voice-controlled interfaces, and generate natural-sounding speech output. Whether you are building a virtual assistant, developing a speech-to-text application, or experimenting with voice technology, AivisSpeech-Engine offers a comprehensive solution for all your speech processing needs.

extractor

Extractor is an AI-powered data extraction library for Laravel that leverages OpenAI's capabilities to effortlessly extract structured data from various sources, including images, PDFs, and emails. It features a convenient wrapper around OpenAI Chat and Completion endpoints, supports multiple input formats, includes a flexible Field Extractor for arbitrary data extraction, and integrates with Textract for OCR functionality. Extractor utilizes JSON Mode from the latest GPT-3.5 and GPT-4 models, providing accurate and efficient data extraction.

terraform-genai-doc-summarization

This solution showcases how to summarize a large corpus of documents using Generative AI. It provides an end-to-end demonstration of document summarization, going all the way from raw documents to detecting the text they contain and summarizing them on demand, using Vertex AI LLM APIs, Cloud Vision Optical Character Recognition (OCR), and BigQuery.

chatwise-releases

ChatWise is an offline tool that supports various AI models such as OpenAI, Anthropic, Google AI, Groq, and Ollama. It is multi-modal, allowing text-to-speech powered by OpenAI and ElevenLabs. The tool supports text files, PDFs, audio, and images across different models. ChatWise is currently available for macOS (Apple Silicon & Intel) with Windows support coming soon.

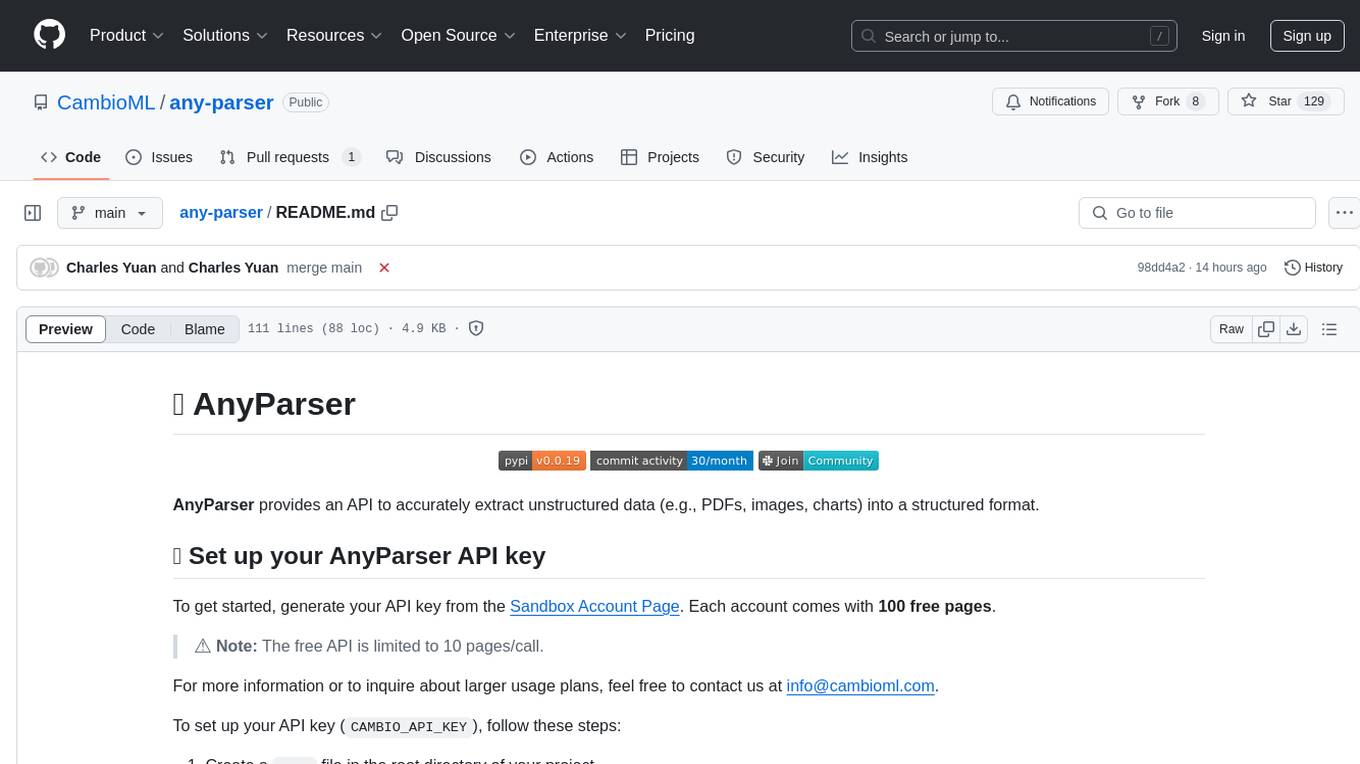

any-parser

AnyParser provides an API to accurately extract unstructured data (e.g., PDFs, images, charts) into a structured format. Users can set up their API key, run synchronous and asynchronous extractions, and perform batch extraction. The tool is useful for extracting text, numbers, and symbols from various sources like PDFs and images. It offers flexibility in processing data and provides immediate results for synchronous extraction while allowing users to fetch results later for asynchronous and batch extraction. AnyParser is designed to simplify data extraction tasks and enhance data processing efficiency.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models, covering everything from images to sound.

kaito

Kaito is an operator that automates AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, removes the need to tune deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Kaito greatly simplifies the workflow of onboarding large AI inference models in Kubernetes.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks so that operators can focus on more complicated and time-consuming work, and it can identify security harms such as misuse (e.g., malware generation, jailbreaking) and privacy harms (e.g., identity theft). The goal is to give researchers a baseline of how well their model and entire inference pipeline perform against different harm categories, and to let them compare that baseline against future iterations of their model. This gives them empirical data on how well their model is doing today and lets them detect any performance degradation introduced by future changes.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features:

* Self-contained, with no need for a DBMS or cloud service.
* OpenAPI interface, easy to integrate with existing infrastructure (e.g., Cloud IDE).
* Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.