simplemind

Python API client for AI providers that intends to replace LangChain and LangGraph for most common use cases.

Stars: 427

Simplemind is an AI library designed to simplify the experience with AI APIs in Python. It provides easy-to-use AI tools with a human-centered design and minimal configuration. Users can tap into powerful AI capabilities through simple interfaces, without needing to be experts. The library supports various APIs from different providers/models and offers features like text completion, streaming text, structured data handling, conversational AI, tool calling, and logging. Simplemind aims to make AI models accessible to all by abstracting away complexity and prioritizing readability and usability.

README:

Keep it simple, keep it human.

Simplemind is an AI library designed to simplify your experience with AI APIs in Python. Inspired by a "for humans" philosophy, it abstracts away complexity, giving developers an intuitive and human-friendly way to interact with powerful AI capabilities.

With Simplemind, tapping into AI is as easy as a friendly conversation.

- Easy-to-use AI tools: Simplemind provides simple interfaces to most popular AI services.

- Human-centered design: The library prioritizes readability and usability—no need to be an expert to start experimenting.

- Minimal configuration: Get started quickly, without worrying about configuration headaches.

The APIs remain identical between all supported providers / models:

| Provider | llm_provider | Default llm_model |
|---|---|---|
| Anthropic's Claude | "anthropic" | "claude-3-5-sonnet-20241022" |
| Amazon's Bedrock | "amazon" | "anthropic.claude-3-5-sonnet-20241022-v2:0" |
| Google's Gemini | "gemini" | "models/gemini-1.5-pro" |
| Groq's Groq | "groq" | "llama3-8b-8192" |
| Ollama | "ollama" | "llama3.2" |
| OpenAI's GPT | "openai" | "gpt-4o-mini" |
| xAI's Grok | "xai" | "grok-beta" |

To select a specific provider or model, pass the llm_provider and llm_model parameters when calling generate_text, generate_data, or create_conversation.
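As a rough illustration of how the two parameters relate, the sketch below copies the defaults from the provider table into a dictionary and builds the keyword arguments for a call. The `DEFAULT_MODELS` dictionary and the `call_kwargs` helper are hypothetical names introduced here; they are not part of Simplemind.

```python
# Defaults copied from the provider table; the helper itself is
# hypothetical and not part of Simplemind.
DEFAULT_MODELS = {
    "anthropic": "claude-3-5-sonnet-20241022",
    "amazon": "anthropic.claude-3-5-sonnet-20241022-v2:0",
    "gemini": "models/gemini-1.5-pro",
    "groq": "llama3-8b-8192",
    "ollama": "llama3.2",
    "openai": "gpt-4o-mini",
    "xai": "grok-beta",
}


def call_kwargs(llm_provider, llm_model=None):
    """Build llm_provider / llm_model keyword arguments for a call."""
    if llm_provider not in DEFAULT_MODELS:
        raise ValueError(f"unknown provider: {llm_provider!r}")
    return {
        "llm_provider": llm_provider,
        # Fall back to the table's default when no model is given.
        "llm_model": llm_model or DEFAULT_MODELS[llm_provider],
    }
```

With Simplemind installed, the result could be passed along as `sm.generate_text("Hello!", **call_kwargs("groq"))`.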

If you want to see Simplemind support additional providers or models, please send a pull request!

Simplemind takes care of the complex API calls so you can focus on what matters—building, experimenting, and creating.

    $ pip install 'simplemind[full]'

First, provide your API keys by setting them as environment variables:

    $ export OPENAI_API_KEY="sk-..."

This pattern allows you to keep your API keys private and out of your codebase. Other supported environment variables: ANTHROPIC_API_KEY, XAI_API_KEY, GROQ_API_KEY, and GEMINI_API_KEY.
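Before making a call, it can help to check which keys are actually visible to the Python process. The following is a minimal sketch using only the standard library; the `API_KEY_VARS` mapping and the `configured_providers` helper are hypothetical names, and only the environment variable names come from the README.

```python
import os

# Environment variable names as listed in the README; the mapping to
# provider strings is an assumption made for this sketch.
API_KEY_VARS = {
    "openai": "OPENAI_API_KEY",
    "anthropic": "ANTHROPIC_API_KEY",
    "xai": "XAI_API_KEY",
    "groq": "GROQ_API_KEY",
    "gemini": "GEMINI_API_KEY",
}


def configured_providers(environ=None):
    """Return the providers whose API key variable is set and non-empty."""
    env = os.environ if environ is None else environ
    return sorted(name for name, var in API_KEY_VARS.items() if env.get(var))
```

Calling `configured_providers()` with no arguments inspects the real environment; passing a plain dictionary makes the helper easy to test.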

Next, import Simplemind and start using it:

    import simplemind as sm

Here are some examples of how to use Simplemind.

Please note: Most of the calls seen here optionally accept llm_provider and llm_model parameters, which you provide as strings.

Generate a response from an AI model based on a given prompt:

    >>> sm.generate_text(prompt="What is the meaning of life?")
    "The meaning of life is a profound philosophical question that has been explored by cultures, religions, and philosophers for centuries. Different people and belief systems offer varying interpretations:\n\n1. **Religious Perspectives:** Many religions propose that the meaning of life is to fulfill a divine purpose, serve God, or reach an afterlife. For example, Christianity often emphasizes love, faith, and service to God and others as central to life’s meaning.\n\n2. **Philosophical Views:** Philosophers offer diverse answers. Existentialists like Jean-Paul Sartre argue that life has no inherent meaning, and it is up to individuals to create their own purpose. Others, like Aristotle, suggest that achieving eudaimonia (flourishing or happiness) through virtuous living is the key to a meaningful life.\n\n3. **Scientific and Secular Approaches:** Some people find meaning through understanding the natural world, contributing to human knowledge, or through personal accomplishments and happiness. They may view life's meaning as a product of connection, legacy, or the pursuit of knowledge and creativity.\n\n4. **Personal Perspective:** For many, the meaning of life is deeply personal, involving their relationships, passions, and goals. These individuals define life's purpose through experiences, connections, and the impact they have on others and the world.\n\nUltimately, the meaning of life is a subjective question, with each person finding their own answers based on their beliefs, experiences, and reflections."

To stream the response as it is generated, pass stream=True and iterate over the chunks:

    >>> for chunk in sm.generate_text("Write a poem about the moon", stream=True):
    ...     print(chunk, end="", flush=True)

You can use Pydantic models to structure the response from the LLM, if the LLM supports it.

    from pydantic import BaseModel

    class Poem(BaseModel):
        title: str
        content: str

    >>> sm.generate_data("Write a poem about love", response_model=Poem)
    title='Eternal Embrace' content='In the quiet hours of the night,\nWhen stars whisper secrets bright,\nTwo hearts beat in a gentle rhyme,\nDancing through the sands of time.\n\nWith every glance, a spark ignites,\nA flame that warms the coldest nights,\nIn laughter shared and whispers sweet,\nLove paints the world, a masterpiece.\n\nThrough stormy skies and sunlit days,\nIn myriad forms, it finds its ways,\nA tender touch, a knowing sigh,\nIn love’s embrace, we learn to fly.\n\nAs seasons change and moments fade,\nIn the tapestry of dreams we’ve laid,\nLove’s threads endure, forever bind,\nA timeless bond, two souls aligned.\n\nSo here’s to love, both bright and true,\nA gift we give, anew, anew,\nIn every heartbeat, every prayer,\nA story written in the air.'

Response models can also be nested:

    class InstructionStep(BaseModel):
        step_number: int
        instruction: str

    class RecipeIngredient(BaseModel):
        name: str
        quantity: float
        unit: str

    class Recipe(BaseModel):
        name: str
        ingredients: list[RecipeIngredient]
        instructions: list[InstructionStep]

    recipe = sm.generate_data(
        "Write a recipe for chocolate chip cookies",
        response_model=Recipe,
    )

Special thanks to @jxnl for building Instructor, which makes this possible!

Simplemind also allows for easy conversational flows:

    >>> conv = sm.create_conversation()

    >>> # Add a message to the conversation
    >>> conv.add_message("user", "Hi there, how are you?")

    >>> conv.send()
    <Message role=assistant text="Hello! I'm just a computer program, so I don't have feelings, but I'm here and ready to help you. How can I assist you today?">

To continue the conversation, you can call conv.send() again, which returns the next message in the conversation:

    >>> conv.add_message("user", "What is the meaning of life?")

    >>> conv.send()
    <Message role=assistant text="The meaning of life is a profound philosophical question that has been explored by cultures, religions, and philosophers for centuries. Different people and belief systems offer varying interpretations:\n\n1. **Religious Perspectives:** Many religions propose that the meaning of life is to fulfill a divine purpose, serve God, or reach an afterlife. For example, Christianity often emphasizes love, faith, and service to God and others as central to life’s meaning.\n\n2. **Philosophical Views:** Philosophers offer diverse answers. Existentialists like Jean-Paul Sartre argue that life has no inherent meaning, and it is up to individuals to create their own purpose. Others, like Aristotle, suggest that achieving eudaimonia (flourishing or happiness) through virtuous living is the key to a meaningful life.\n\n3. **Scientific and Secular Approaches:** Some people find meaning through understanding the natural world, contributing to human knowledge, or through personal accomplishments and happiness. They may view life’s meaning as a product of connection, legacy, or the pursuit of knowledge and creativity.\n\n4. **Personal Perspective:** For many, the meaning of life is deeply personal, involving their relationships, passions, and goals. These individuals define life’s purpose through experiences, connections, and the impact they have on others and the world.\n\nUltimately, the meaning of life is a subjective question, with each person finding their own answers based on their beliefs, experiences, and reflections.">

You can use the Session class to set default parameters for all calls:

    # Create a session with defaults
    gpt_4o_mini = sm.Session(llm_provider="openai", llm_model="gpt-4o-mini")

    # Now all calls use these defaults
    response = gpt_4o_mini.generate_text("Hello!")
    conversation = gpt_4o_mini.create_conversation()

This maintains the simplicity of the original API while reducing repetition.
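One way to picture session defaults is as a dictionary of keyword arguments that is merged with whatever each call supplies. The sketch below illustrates that idea only; `DefaultsSketch` and its `resolve` method are hypothetical and say nothing about how `sm.Session` is actually implemented.

```python
class DefaultsSketch:
    """Hypothetical stand-in showing how session defaults could be merged."""

    def __init__(self, **defaults):
        self.defaults = defaults

    def resolve(self, **overrides):
        # Later keys win, so per-call arguments replace session defaults
        # without mutating the stored defaults.
        return {**self.defaults, **overrides}


session = DefaultsSketch(llm_provider="openai", llm_model="gpt-4o-mini")
plain = session.resolve()                      # uses both defaults
overridden = session.resolve(llm_model="gpt-4")  # replaces only the model
```

Because the merge builds a new dictionary each time, an override on one call does not leak into later calls.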

The session object also supports overriding defaults on a per-call basis:

    response = gpt_4o_mini.generate_text("Complex task here", llm_model="gpt-4")

Harnessing the power of Python, you can easily create your own plugins to add additional functionality to your conversations:

    class SimpleMemoryPlugin(sm.BasePlugin):
        def __init__(self):
            self.memories = [
                "the earth has fictionally been destroyed.",
                "the moon is made of cheese.",
            ]

        def yield_memories(self):
            return (m for m in self.memories)

        def pre_send_hook(self, conversation: sm.Conversation):
            # Inject every stored memory as a system message before sending
            for m in self.yield_memories():
                conversation.add_message(role="system", text=m)

    conversation = sm.create_conversation()
    conversation.add_plugin(SimpleMemoryPlugin())

    conversation.add_message(
        role="user",
        text="Please write a poem about the moon",
    )

    >>> conversation.send()

In the vast expanse where stars do play,

There orbits a cheese wheel, far away.

It's not of stone or silver hue,

But cheddar's glow, a sight anew.

In cosmic silence, it does roam,

A lonely traveler, away from home.

No longer does it reflect the sun,

But now it's known for fun begun.

Once Earth's companion, now alone,

A cheese moon orbits, in the dark it's thrown.

Its surface, not of craters wide,

But gouda, swiss, and camembert's pride.

Astronauts of yore, they sought its face,

To find the moon was not a place,

But a haven of dairy delight,

Glowing softly through the night.

In this world, where cheese takes flight,

The moon brings laughter, a whimsical sight.

No longer just a silent sphere,

But a beacon of joy, far and near.

So here's to the moon, in cheese attire,

A playful twist in the cosmic choir.

A reminder that in tales and fun,

The universe is never done.

Simple, yet effective.
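To make the hook mechanism easier to follow, here is a minimal sketch of how a `pre_send_hook` could be invoked before a message is sent. `SketchConversation` and `MemoryPlugin` are hypothetical stand-ins written for this illustration: they never call an LLM and are not Simplemind's classes. Only the method names `add_message`, `add_plugin`, `send`, and `pre_send_hook` mirror the example above.

```python
class SketchConversation:
    """Hypothetical stand-in for a conversation; it never calls an LLM."""

    def __init__(self):
        self.messages = []
        self.plugins = []

    def add_message(self, role, text):
        self.messages.append({"role": role, "text": text})

    def add_plugin(self, plugin):
        self.plugins.append(plugin)

    def send(self):
        # Give every plugin a chance to modify the conversation first.
        for plugin in self.plugins:
            plugin.pre_send_hook(self)
        # A real client would call the provider here; the sketch simply
        # returns the messages that would be sent.
        return list(self.messages)


class MemoryPlugin:
    """Adds each stored memory as a system message before sending."""

    def __init__(self, memories):
        self.memories = memories

    def pre_send_hook(self, conversation):
        for memory in self.memories:
            conversation.add_message(role="system", text=memory)


conv = SketchConversation()
conv.add_plugin(MemoryPlugin(["the moon is made of cheese."]))
conv.add_message(role="user", text="Please write a poem about the moon")
outgoing = conv.send()
```

In this sketch the system message ends up after the user message simply because the hook appends to the list; how the real library orders messages is not something the README states.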

Tools (also known as functions) let you call any Python function from your AI conversations. Here's an example:

    from typing import Annotated, Literal

    from pydantic import Field

    def get_weather(
        location: Annotated[
            str, Field(description="The city and state, e.g. San Francisco, CA")
        ],
        unit: Annotated[
            Literal["celsius", "fahrenheit"],
            Field(
                description="The unit of temperature, either 'celsius' or 'fahrenheit'"
            ),
        ] = "celsius",
    ):
        """
        Get the current weather in a given location
        """
        return f"42 {unit}"

    # Add your function as a tool
    conversation = sm.create_conversation()
    conversation.add_message("user", "What's the weather in San Francisco?")
    response = conversation.send(tools=[get_weather])

Note how we're using Python's Annotated feature combined with Field to provide additional context to our function parameters. This helps the AI understand the intention and constraints of each parameter, making tool calls more accurate and reliable.

You can also omit Annotated and pass Field as the parameter default:

    def get_weather(
        location: str = Field(description="The city and state, e.g. San Francisco, CA"),
        unit: Literal["celsius", "fahrenheit"] = Field(
            default="celsius",
            description="The unit of temperature, either 'celsius' or 'fahrenheit'",
        ),
    ):
        """
        Get the current weather in a given location
        """
        return f"42 {unit}"

Functions can be defined with type hints and Pydantic models for validation. The LLM will intelligently choose when to call the functions and incorporate the results into its responses.
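To see why type hints and docstrings matter for tool calling, the sketch below derives a rough schema from a function's signature using only the standard library. The `describe_tool` helper and the `JSON_TYPES` mapping are hypothetical and much simpler than whatever Simplemind does internally; the sketch assumes `Literal` values are strings and ignores `Field` metadata.

```python
import inspect
from typing import Literal, get_args, get_origin, get_type_hints

# Mapping from Python types to JSON Schema type names (sketch only).
JSON_TYPES = {str: "string", int: "integer", float: "number", bool: "boolean"}


def describe_tool(func):
    """Build a rough JSON-Schema-like description of a function."""
    hints = get_type_hints(func)
    properties, required = {}, []
    for name, param in inspect.signature(func).parameters.items():
        hint = hints.get(name, str)
        if get_origin(hint) is Literal:
            # Literal["a", "b"] becomes an enum of allowed strings.
            properties[name] = {"type": "string", "enum": list(get_args(hint))}
        else:
            properties[name] = {"type": JSON_TYPES.get(hint, "string")}
        # Parameters without a default value are treated as required.
        if param.default is inspect.Parameter.empty:
            required.append(name)
    return {
        "name": func.__name__,
        "description": inspect.getdoc(func) or "",
        "input_schema": {
            "type": "object",
            "properties": properties,
            "required": required,
        },
    }


def get_weather(location: str, unit: Literal["celsius", "fahrenheit"] = "celsius"):
    """Get the current weather in a given location"""
    return f"42 {unit}"


schema = describe_tool(get_weather)
```

Here `schema` lists `location` as the only required parameter and restricts `unit` to the two literal values, which is the kind of information a model needs to produce a valid call.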

Simplemind provides a decorator to automatically transform Python functions into tools with AI-generated metadata. Simply use the @simplemind.tool decorator to have the LLM analyze your function and generate appropriate descriptions and schema:

    import math

    import simplemind

    @simplemind.tool(llm_provider="anthropic")
    def haversine(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
        r = 6371
        phi1 = math.radians(lat1)
        phi2 = math.radians(lat2)
        delta_phi = math.radians(lat2 - lat1)
        delta_lambda = math.radians(lon2 - lon1)
        a = (
            math.sin(delta_phi / 2) ** 2
            + math.cos(phi1) * math.cos(phi2) * math.sin(delta_lambda / 2) ** 2
        )
        c = 2 * math.atan2(math.sqrt(a), math.sqrt(1 - a))
        d = r * c
        return d

Notice how we have not added any docstrings or Field for the function.

The decorator will use the specified LLM provider to generate the tool schema, including descriptions and parameter details:

    {
        "name": "haversine",
        "description": "Calculates the great-circle distance between two points on Earth given their latitude and longitude coordinates",
        "input_schema": {
            "type": "object",
            "properties": {
                "lat1": {
                    "type": "number",
                    "description": "Latitude of the first point in decimal degrees",
                },
                "lon1": {
                    "type": "number",
                    "description": "Longitude of the first point in decimal degrees",
                },
                "lat2": {
                    "type": "number",
                    "description": "Latitude of the second point in decimal degrees",
                },
                "lon2": {
                    "type": "number",
                    "description": "Longitude of the second point in decimal degrees",
                }
            },
            "required": ["lat1", "lon1", "lat2", "lon2"],
        },
    }

The decorated function can then be used like any other tool with the conversation API.

    conversation = sm.create_conversation()
    conversation.add_message("user", "How far is London from my location")
    response = conversation.send(tools=[get_location, get_coords, haversine])  # Multiple tools can be passed

See examples/distance_calculator.py for more.
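As a quick sanity check of the distance formula itself, independent of the decorator and of any LLM, the haversine function can be run directly. The version below is the same calculation with a docstring added; the coordinates for London and Paris are approximate values chosen for illustration.

```python
import math


def haversine(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in kilometres between two points in degrees."""
    r = 6371  # mean Earth radius in km
    phi1 = math.radians(lat1)
    phi2 = math.radians(lat2)
    delta_phi = math.radians(lat2 - lat1)
    delta_lambda = math.radians(lon2 - lon1)
    a = (
        math.sin(delta_phi / 2) ** 2
        + math.cos(phi1) * math.cos(phi2) * math.sin(delta_lambda / 2) ** 2
    )
    return 2 * r * math.atan2(math.sqrt(a), math.sqrt(1 - a))


# Approximate coordinates, chosen for illustration.
london = (51.5074, -0.1278)
paris = (48.8566, 2.3522)
distance_km = haversine(*london, *paris)
print(f"London to Paris: {distance_km:.0f} km")
```

The result should land in the neighborhood of 340 to 350 km, which matches the commonly quoted straight-line distance between the two cities.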

Simplemind uses Logfire for logging. To enable logging, call sm.enable_logfire().

Please see the examples directory for executable examples.

We welcome contributions of all kinds. Feel free to open issues for bug reports or feature requests, and submit pull requests to make Simplemind even better.

To get started:

- Fork the repository.

- Create a new branch.

- Make your changes.

- Submit a pull request.

Simplemind is licensed under the Apache 2.0 License.

Simplemind is inspired by the philosophy of "code for humans" and aims to make working with AI models accessible to all. Special thanks to the open-source community for their contributions and inspiration.


Alternative AI tools for simplemind

Similar Open Source Tools


Toolio

Toolio is an OpenAI-like HTTP server API implementation that supports structured LLM response generation, making it conform to a JSON schema. It is useful for reliable tool calling and agentic workflows based on schema-driven output. Toolio is based on the MLX framework for Apple Silicon, specifically M1/M2/M3/M4 Macs. It allows users to host MLX-format LLMs for structured output queries and provides a command line client for easier usage of tools. The tool also supports multiple tool calls and the creation of custom tools for specific tasks.

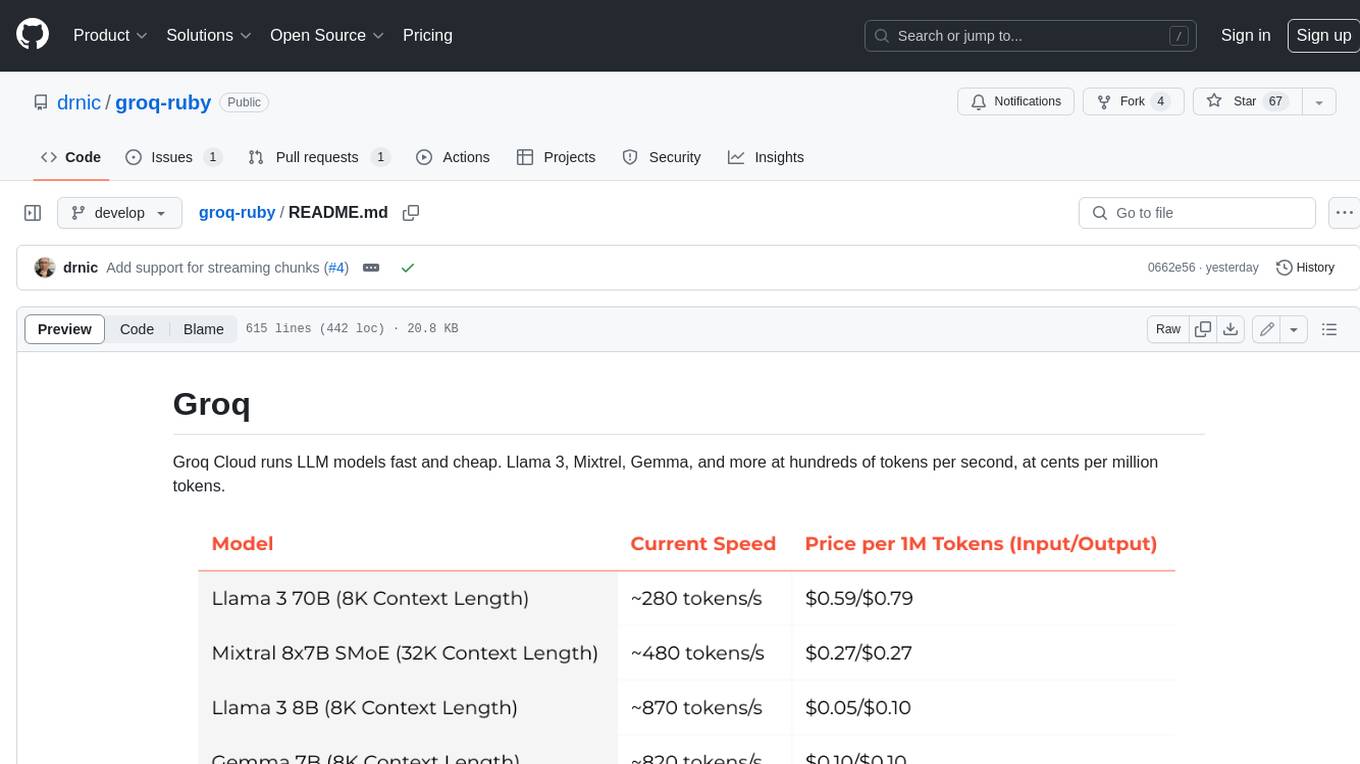

groq-ruby

Groq Cloud runs LLM models fast and cheap. Llama 3, Mixtral, Gemma, and more at hundreds of tokens per second, at cents per million tokens.

tiny-ai-client

Tiny AI Client is a lightweight tool designed for easy usage and switching of Large Language Models (LLMs) with support for vision and tool usage. It aims to provide a simple and intuitive interface for interacting with various LLMs, allowing users to easily set and change models, send messages, use tools, and handle vision tasks. The core logic of the tool is kept minimal and easy to understand, with separate modules for vision and tool usage utilities. Users can interact with the tool through simple Python scripts, passing model names, messages, tools, and images as required.

langevals

LangEvals is an all-in-one Python library for testing and evaluating LLM models. It can be used in notebooks for exploration, in pytest for writing unit tests, or as a server API for live evaluations and guardrails. The library is modular, with 20+ evaluators including Ragas for RAG quality, OpenAI Moderation, and Azure Jailbreak detection. LangEvals powers LangWatch evaluations and provides tools for batch evaluations on notebooks and unit test evaluations with PyTest. It also offers LangEvals evaluators for LLM-as-a-Judge scenarios and out-of-the-box evaluators for language detection and answer relevancy checks.

motorhead

Motorhead is a memory and information retrieval server for LLMs. It provides three simple APIs to assist with memory handling in chat applications using LLMs. The first API, GET /sessions/:id/memory, returns messages up to a maximum window size. The second API, POST /sessions/:id/memory, allows you to send an array of messages to Motorhead for storage. The third API, DELETE /sessions/:id/memory, deletes the session's message list. Motorhead also features incremental summarization, where it processes half of the maximum window size of messages and summarizes them when the maximum is reached. Additionally, it supports searching by text query using vector search. Motorhead is configurable through environment variables, including the maximum window size, whether to enable long-term memory, the model used for incremental summarization, the server port, your OpenAI API key, and the Redis URL.

chatmemory

ChatMemory is a simple yet powerful long-term memory manager that facilitates communication between AI and users. It organizes conversation data into history, summary, and knowledge entities, enabling quick retrieval of context and generation of clear, concise answers. The tool leverages vector search on summaries/knowledge and detailed history to provide accurate responses. It balances speed and accuracy by using lightweight retrieval and fallback detailed search mechanisms, ensuring efficient memory management and response generation beyond mere data retrieval.

VedAstro

VedAstro is an open-source Vedic astrology tool that provides accurate astrological predictions and data. It offers a user-friendly website, a chat API, an open API, a JavaScript SDK, a Swiss Ephemeris API, and a machine learning table generator. VedAstro is free to use and is constantly being updated with new features and improvements.

marqo

Marqo is more than a vector database: it is an end-to-end vector search engine for both text and images. Vector generation, storage and retrieval are handled out of the box through a single API. No need to bring your own embeddings.

CoPilot

TigerGraph CoPilot is an AI assistant that combines graph databases and generative AI to enhance productivity across various business functions. It includes three core component services: InquiryAI for natural language assistance, SupportAI for knowledge Q&A, and QueryAI for GSQL code generation. Users can interact with CoPilot through a chat interface on TigerGraph Cloud and APIs. CoPilot requires LLM services for beta but will support TigerGraph's LLM in future releases. It aims to improve contextual relevance and accuracy of answers to natural-language questions by building knowledge graphs and using RAG. CoPilot is extensible and can be configured with different LLM providers, graph schemas, and LangChain tools.

simpleAI

SimpleAI is a self-hosted alternative to the not-so-open AI API, focused on replicating main endpoints for LLM such as text completion, chat, edits, and embeddings. It allows quick experimentation with different models, creating benchmarks, and handling specific use cases without relying on external services. Users can integrate and declare models through gRPC, query endpoints using Swagger UI or API, and resolve common issues like CORS with FastAPI middleware. The project is open for contributions and welcomes PRs, issues, documentation, and more.

phidata

Phidata is a framework for building AI Assistants with memory, knowledge, and tools. It enables LLMs to have long-term conversations by storing chat history in a database, provides them with business context by storing information in a vector database, and enables them to take actions like pulling data from an API, sending emails, or querying a database. Memory and knowledge make LLMs smarter, while tools make them autonomous.

allms

allms is a versatile and powerful library designed to streamline the process of querying Large Language Models (LLMs). Developed by Allegro engineers, it simplifies working with LLM applications by providing a user-friendly interface, asynchronous querying, automatic retrying mechanism, error handling, and output parsing. It supports various LLM families hosted on different platforms like OpenAI, Google, Azure, and GCP. The library offers features for configuring endpoint credentials, batch querying with symbolic variables, and forcing structured output format. It also provides documentation, quickstart guides, and instructions for local development, testing, updating documentation, and making new releases.

MiniAgents

MiniAgents is an open-source Python framework designed to simplify the creation of multi-agent AI systems. It offers a parallelism and async-first design, allowing users to focus on building intelligent agents while handling concurrency challenges. The framework, built on asyncio, supports LLM-based applications with immutable messages and seamless asynchronous token and message streaming between agents.

ActionWeaver

ActionWeaver is an AI application framework designed for simplicity, relying on OpenAI and Pydantic. It supports both OpenAI API and Azure OpenAI service. The framework allows for function calling as a core feature, extensibility to integrate any Python code, function orchestration for building complex call hierarchies, and telemetry and observability integration. Users can easily install ActionWeaver using pip and leverage its capabilities to create, invoke, and orchestrate actions with the language model. The framework also provides structured extraction using Pydantic models and allows for exception handling customization. Contributions to the project are welcome, and users are encouraged to cite ActionWeaver if found useful.

npcsh

`npcsh` is a python-based command-line tool designed to integrate Large Language Models (LLMs) and Agents into one's daily workflow by making them available and easily configurable through the command line shell. It leverages the power of LLMs to understand natural language commands and questions, execute tasks, answer queries, and provide relevant information from local files and the web. Users can also build their own tools and call them like macros from the shell. `npcsh` allows users to take advantage of agents (i.e. NPCs) through a managed system, tailoring NPCs to specific tasks and workflows. The tool is extensible with Python, providing useful functions for interacting with LLMs, including explicit coverage for popular providers like ollama, anthropic, openai, gemini, deepseek, and openai-like providers. Users can set up a flask server to expose their NPC team for use as a backend service, run SQL models defined in their project, execute assembly lines, and verify the integrity of their NPC team's interrelations. Users can execute bash commands directly, use favorite command-line tools like VIM, Emacs, ipython, sqlite3, git, pipe the output of these commands to LLMs, or pass LLM results to bash commands.

For similar tasks


golf

Golf is a simple command-line tool for calculating the distance between two geographic coordinates. It uses the Haversine formula to accurately determine the distance between two points on the Earth's surface. This tool is useful for developers working on location-based applications or projects that require distance calculations. With Golf, users can easily input latitude and longitude coordinates and get the precise distance in kilometers or miles. The tool is lightweight, easy to use, and can be integrated into various programming workflows.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

LocalAI

LocalAI is a free and open-source OpenAI alternative that acts as a drop-in replacement REST API compatible with OpenAI (Elevenlabs, Anthropic, etc.) API specifications for local AI inferencing. It allows users to run LLMs, generate images, audio, and more locally or on-premises with consumer-grade hardware, supporting multiple model families and not requiring a GPU. LocalAI offers features such as text generation with GPTs, text-to-audio, audio-to-text transcription, image generation with stable diffusion, OpenAI functions, embeddings generation for vector databases, constrained grammars, downloading models directly from Huggingface, and a Vision API. It provides a detailed step-by-step introduction in its Getting Started guide and supports community integrations such as custom containers, WebUIs, model galleries, and various bots for Discord, Slack, and Telegram. LocalAI also offers resources like an LLM fine-tuning guide, instructions for local building and Kubernetes installation, projects integrating LocalAI, and a how-tos section curated by the community. It encourages users to cite the repository when utilizing it in downstream projects and acknowledges the contributions of various software from the community.

AiTreasureBox

AiTreasureBox is a versatile AI tool that provides a collection of pre-trained models and algorithms for various machine learning tasks. It simplifies the process of implementing AI solutions by offering ready-to-use components that can be easily integrated into projects. With AiTreasureBox, users can quickly prototype and deploy AI applications without the need for extensive knowledge in machine learning or deep learning. The tool covers a wide range of tasks such as image classification, text generation, sentiment analysis, object detection, and more. It is designed to be user-friendly and accessible to both beginners and experienced developers, making AI development more efficient and accessible to a wider audience.

glide

Glide is a cloud-native LLM gateway that provides a unified REST API for accessing various large language models (LLMs) from different providers. It handles LLMOps tasks such as model failover, caching, key management, and more, making it easy to integrate LLMs into applications. Glide supports popular LLM providers like OpenAI, Anthropic, Azure OpenAI, AWS Bedrock (Titan), Cohere, Google Gemini, OctoML, and Ollama. It offers high availability, performance, and observability, and provides SDKs for Python and NodeJS to simplify integration.

jupyter-ai

Jupyter AI connects generative AI with Jupyter notebooks. It provides a user-friendly and powerful way to explore generative AI models in notebooks and improve your productivity in JupyterLab and the Jupyter Notebook. Specifically, Jupyter AI offers:

- An `%%ai` magic that turns the Jupyter notebook into a reproducible generative AI playground. This works anywhere the IPython kernel runs (JupyterLab, Jupyter Notebook, Google Colab, Kaggle, VSCode, etc.).

- A native chat UI in JupyterLab that enables you to work with generative AI as a conversational assistant.

- Support for a wide range of generative model providers, including AI21, Anthropic, AWS, Cohere, Gemini, Hugging Face, NVIDIA, and OpenAI.

- Local model support through GPT4All, enabling use of generative AI models on consumer grade machines with ease and privacy.

langchain_dart

LangChain.dart is a Dart port of the popular LangChain Python framework created by Harrison Chase. LangChain provides a set of ready-to-use components for working with language models and a standard interface for chaining them together to formulate more advanced use cases (e.g. chatbots, Q&A with RAG, agents, summarization, extraction, etc.). The components can be grouped into a few core modules:

- **Model I/O:** LangChain offers a unified API for interacting with various LLM providers (e.g. OpenAI, Google, Mistral, Ollama, etc.), allowing developers to switch between them with ease. Additionally, it provides tools for managing model inputs (prompt templates and example selectors) and parsing the resulting model outputs (output parsers).

- **Retrieval:** assists in loading user data (via document loaders), transforming it (with text splitters), extracting its meaning (using embedding models), storing (in vector stores) and retrieving it (through retrievers) so that it can be used to ground the model's responses (i.e. Retrieval-Augmented Generation or RAG).

- **Agents:** "bots" that leverage LLMs to make informed decisions about which available tools (such as web search, calculators, database lookup, etc.) to use to accomplish the designated task.

The different components can be composed together using the LangChain Expression Language (LCEL).

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM:

- Set LLM usage limits for users on different pricing tiers

- Track LLM usage on a per user and per organization basis

- Block or redact requests containing PIIs

- Improve LLM reliability with failovers, retries and caching

- Distribute API keys with rate limits and cost limits for internal development/production use cases

- Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.