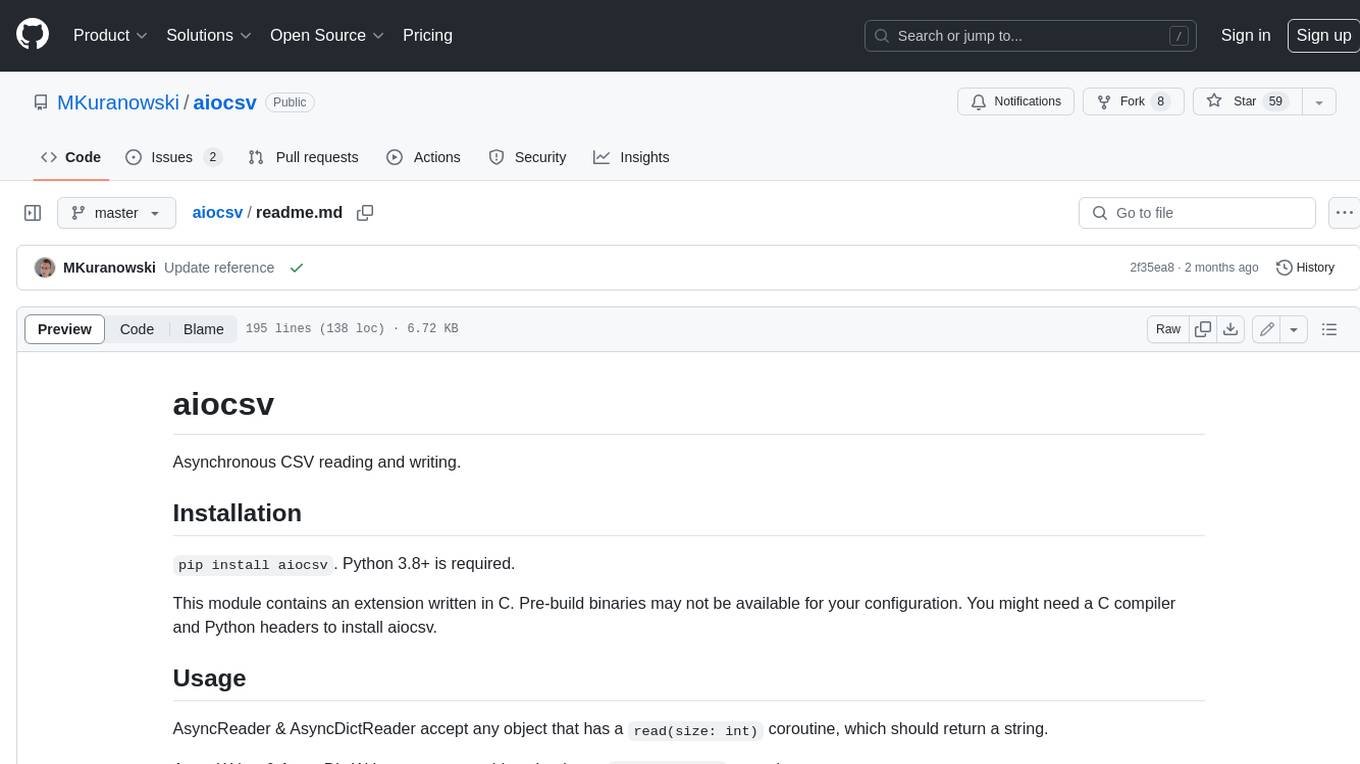

aiocsv

Python: Asynchronous CSV reading/writing

Stars: 71

aiocsv is a Python module that provides asynchronous CSV reading and writing. It is designed to be a drop-in replacement for Python's built-in csv module, with the added benefit of reading and writing CSV files asynchronously. This makes it a good fit for applications that need to process large CSV files efficiently.

README:

Asynchronous CSV reading and writing.

Install with pip install aiocsv. Python 3.8+ is required.

This module contains an extension written in C. Pre-built binaries may not be available for your configuration. You might need a C compiler and Python headers to install aiocsv.

AsyncReader & AsyncDictReader accept any object that has a read(size: int) coroutine, which should return a string.

AsyncWriter & AsyncDictWriter accept any object that has a write(b: str) coroutine.

Reading is implemented using a custom CSV parser, which should behave exactly like the CPython parser.

Writing is implemented using the synchronous csv.writer and csv.DictWriter objects - the serializers write data to a StringIO, and that buffer is then rewritten to the underlying asynchronous file.
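The buffering approach described above can be sketched with the standard library alone. This is a minimal illustration of the technique, not aiocsv's actual implementation; `MemoryAsyncFile` and `BufferedAsyncWriter` are hypothetical names introduced only for this example.

```python
import asyncio
import csv
import io
from typing import Any, Iterable, List


class MemoryAsyncFile:
    """Hypothetical in-memory stand-in for an asynchronous file."""

    def __init__(self) -> None:
        self.chunks: List[str] = []

    async def write(self, data: str) -> None:
        self.chunks.append(data)


class BufferedAsyncWriter:
    """Serializes rows with csv.writer into a StringIO, then forwards the text."""

    def __init__(self, asyncfile: MemoryAsyncFile, **csv_kwargs: Any) -> None:
        self._file = asyncfile
        self._buffer = io.StringIO(newline="")
        self._writer = csv.writer(self._buffer, **csv_kwargs)

    async def writerow(self, row: Iterable[Any]) -> None:
        self._writer.writerow(row)    # synchronous serialization into the buffer
        data = self._buffer.getvalue()
        self._buffer.seek(0)          # reset the buffer for the next row
        self._buffer.truncate(0)
        await self._file.write(data)  # asynchronous write of the serialized text


async def demo() -> str:
    target = MemoryAsyncFile()
    writer = BufferedAsyncWriter(target, dialect="unix")
    await writer.writerow(["name", "age"])
    await writer.writerow(["John", 26])
    return "".join(target.chunks)


output = asyncio.run(demo())
print(output)
```

Because serialization is delegated to csv.writer, quoting and line terminators follow the chosen dialect; only the final write touches the asynchronous file.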

Example usage with aiofiles.

    import asyncio
    import csv

    import aiofiles
    from aiocsv import AsyncReader, AsyncDictReader, AsyncWriter, AsyncDictWriter

    async def main():
        # simple reading
        async with aiofiles.open("some_file.csv", mode="r", encoding="utf-8", newline="") as afp:
            async for row in AsyncReader(afp):
                print(row)  # row is a list

        # dict reading, tab-separated
        async with aiofiles.open("some_other_file.tsv", mode="r", encoding="utf-8", newline="") as afp:
            async for row in AsyncDictReader(afp, delimiter="\t"):
                print(row)  # row is a dict

        # simple writing, "unix"-dialect
        async with aiofiles.open("new_file.csv", mode="w", encoding="utf-8", newline="") as afp:
            writer = AsyncWriter(afp, dialect="unix")
            await writer.writerow(["name", "age"])
            await writer.writerows([
                ["John", 26], ["Sasha", 42], ["Hana", 37]
            ])

        # dict writing, all quoted, "NULL" for missing fields
        async with aiofiles.open("new_file2.csv", mode="w", encoding="utf-8", newline="") as afp:
            writer = AsyncDictWriter(afp, ["name", "age"], restval="NULL", quoting=csv.QUOTE_ALL)
            await writer.writeheader()
            await writer.writerow({"name": "John", "age": 26})
            await writer.writerows([
                {"name": "Sasha", "age": 42},
                {"name": "Hana"}
            ])

    asyncio.run(main())

aiocsv strives to be a drop-in replacement for Python's builtin csv module. However, there are 3 notable differences:

- Readers accept objects with async read methods, instead of an AsyncIterable over lines from a file.
- AsyncDictReader.fieldnames can be None - use await AsyncDictReader.get_fieldnames() instead.
- Changes to csv.field_size_limit are not picked up by existing Reader instances. The field size limit is cached on Reader instantiation to avoid expensive function calls on each character of the input.

Other, minor, differences include:

- AsyncReader.line_num, AsyncDictReader.line_num and AsyncDictReader.dialect are not settable,
- AsyncDictReader.reader is of AsyncReader type,
- AsyncDictWriter.writer is of AsyncWriter type,
- AsyncDictWriter provides an extra, read-only dialect property.

    AsyncReader(
        asyncfile: aiocsv.protocols.WithAsyncRead,
        dialect: str | csv.Dialect | Type[csv.Dialect] = "excel",
        **csv_dialect_kwargs: Unpack[aiocsv.protocols.CsvDialectKwargs],
    )

An object that iterates over records in the given asynchronous CSV file. Additional keyword arguments are understood as dialect parameters.

Iterating over this object returns parsed CSV rows (List[str]).

Methods:

- __aiter__(self) -> self
- async __anext__(self) -> List[str]

Read-only properties:

- dialect (aiocsv.protocols.DialectLike): The dialect used when parsing
- line_num (int): The number of lines read from the source file. This coincides with a 1-based index of the line number of the last line of the recently parsed record.
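Since the parser is meant to behave like CPython's, the line_num semantics can be illustrated with the synchronous csv.reader. The sketch below uses only the standard library rather than aiocsv itself, and the sample data is made up for the example. A quoted field spanning two physical lines makes the record index and line_num diverge.

```python
import csv
import io

# The second record contains a quoted field with an embedded newline,
# so it occupies physical lines 2 and 3 of the input.
data = 'id,comment\n1,"first line\nsecond line"\n2,plain\n'
reader = csv.reader(io.StringIO(data, newline=""))

line_numbers = []
for row in reader:
    # line_num is the number of physical lines consumed so far
    line_numbers.append((row[0], reader.line_num))

print(line_numbers)
```

After the second record, line_num is 3 rather than 2, because it counts lines read from the source and not records returned.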

    AsyncDictReader(
        asyncfile: aiocsv.protocols.WithAsyncRead,
        fieldnames: Optional[Sequence[str]] = None,
        restkey: Optional[str] = None,
        restval: Optional[str] = None,
        dialect: str | csv.Dialect | Type[csv.Dialect] = "excel",
        **csv_dialect_kwargs: Unpack[aiocsv.protocols.CsvDialectKwargs],
    )

An object that iterates over records in the given asynchronous CSV file. All arguments work exactly the same way as in csv.DictReader.

Iterating over this object returns parsed CSV rows (Dict[str, str]).

Methods:

- __aiter__(self) -> self
- async __anext__(self) -> Dict[str, str]
- async get_fieldnames(self) -> List[str]

Properties:

- fieldnames (List[str] | None): field names used when converting rows to dictionaries

  ⚠️ Unlike csv.DictReader, this property can't read the fieldnames if they are missing - it's not possible to await on the header row in a property getter. Use await reader.get_fieldnames().

      reader = csv.DictReader(some_file)
      reader.fieldnames  # ["cells", "from", "the", "header"]

      areader = aiocsv.AsyncDictReader(same_file_but_async)
      areader.fieldnames  # ⚠️ None
      await areader.get_fieldnames()  # ["cells", "from", "the", "header"]

- restkey (str | None): If a row has more cells than the header, all remaining cells are stored under this key in the returned dictionary. Defaults to None.
- restval (str | None): If a row has fewer cells than the header, then missing keys will use this value. Defaults to None.
- reader: Underlying aiocsv.AsyncReader instance
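Because these arguments are documented as working the same way as in csv.DictReader, the effect of restkey and restval can be shown with the synchronous class. This is a standard-library sketch with made-up sample data; the key name "overflow" is chosen only for illustration.

```python
import csv
import io

# First data row has two extra cells, second data row is missing "age".
data = "name,age\nJohn,26,extra1,extra2\nHana\n"
reader = csv.DictReader(io.StringIO(data), restkey="overflow", restval="NULL")
rows = list(reader)

for row in rows:
    print(row)
```

Extra cells are collected into a list under the restkey, while missing cells are filled with restval.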

Read-only properties:

- dialect (aiocsv.protocols.DialectLike): Link to self.reader.dialect - the current csv.Dialect
- line_num (int): The number of lines read from the source file. This coincides with a 1-based index of the line number of the last line of the recently parsed record.

    AsyncWriter(
        asyncfile: aiocsv.protocols.WithAsyncWrite,
        dialect: str | csv.Dialect | Type[csv.Dialect] = "excel",
        **csv_dialect_kwargs: Unpack[aiocsv.protocols.CsvDialectKwargs],
    )

An object that writes csv rows to the given asynchronous file. In this object "row" is a sequence of values.

Additional keyword arguments are passed to the underlying csv.writer instance.

Methods:

- async writerow(self, row: Iterable[Any]) -> None: Writes one row to the specified file.
- async writerows(self, rows: Iterable[Iterable[Any]]) -> None: Writes multiple rows to the specified file.

Read-only properties:

- dialect (aiocsv.protocols.DialectLike): Link to the underlying csv.writer's dialect attribute

    AsyncDictWriter(
        asyncfile: aiocsv.protocols.WithAsyncWrite,
        fieldnames: Sequence[str],
        restval: Any = "",
        extrasaction: Literal["raise", "ignore"] = "raise",
        dialect: str | csv.Dialect | Type[csv.Dialect] = "excel",
        **csv_dialect_kwargs: Unpack[aiocsv.protocols.CsvDialectKwargs],
    )

An object that writes csv rows to the given asynchronous file. In this object "row" is a mapping from fieldnames to values.

Additional keyword arguments are passed to the underlying csv.DictWriter instance.

Methods:

- async writeheader(self) -> None: Writes header row to the specified file.
- async writerow(self, row: Mapping[str, Any]) -> None: Writes one row to the specified file.
- async writerows(self, rows: Iterable[Mapping[str, Any]]) -> None: Writes multiple rows to the specified file.

Properties:

- fieldnames (Sequence[str]): Sequence of keys to identify the order of values when writing rows to the underlying file
- restval (Any): Placeholder value used when a key from fieldnames is missing in a row, defaults to ""
- extrasaction (Literal["raise", "ignore"]): Action to take when there are keys in a row which are not present in fieldnames. Defaults to "raise", which causes ValueError to be raised on extra keys; may also be set to "ignore" to ignore any extra keys
- writer: Link to the underlying AsyncWriter

Read-only properties:

- dialect (aiocsv.protocols.DialectLike): Link to the underlying csv.writer's dialect attribute
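Since additional arguments are passed to an underlying csv.DictWriter, the behavior of restval and extrasaction can be previewed with the synchronous class. This is a standard-library sketch, not aiocsv code; the "city" key and the sample rows are made up for the example.

```python
import csv
import io

buffer = io.StringIO(newline="")
writer = csv.DictWriter(buffer, ["name", "age"], restval="NULL", extrasaction="ignore")
writer.writeheader()
writer.writerow({"name": "Hana"})                               # "age" is missing, so restval is used
writer.writerow({"name": "John", "age": 26, "city": "Berlin"})  # "city" is silently dropped
output = buffer.getvalue()
print(output)

# With the default extrasaction="raise", an unknown key is an error.
strict = csv.DictWriter(io.StringIO(newline=""), ["name", "age"])
try:
    strict.writerow({"name": "John", "city": "Berlin"})
    raised = False
except ValueError:
    raised = True
print(raised)
```

The default "raise" setting is the safer choice when a stray key most likely indicates a bug in the code that builds the rows.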

aiocsv.protocols.WithAsyncRead: A typing.Protocol describing an asynchronous file, which can be read.

aiocsv.protocols.WithAsyncWrite: A typing.Protocol describing an asynchronous file, which can be written to.

aiocsv.protocols.DialectLike: Type of an instantiated dialect property. Thank CPython for an incredible mess of having unrelated and disjoint csv.Dialect and _csv.Dialect classes.

aiocsv.protocols.CsvDialectArg: Type of the dialect argument, as used in the csv module.

aiocsv.protocols.CsvDialectKwargs: Keyword arguments used by the csv module to override the dialect settings during reader/writer instantiation.
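A structural protocol for a readable asynchronous file might look roughly like the sketch below. The real definitions live in aiocsv.protocols and may differ; `SketchAsyncRead`, `StringAsyncFile` and `read_all` are hypothetical names, and the example assumes that an empty string signals the end of input, as with regular text files.

```python
import asyncio
from typing import List, Protocol, runtime_checkable


@runtime_checkable
class SketchAsyncRead(Protocol):
    """Hypothetical protocol: any object with an async read(size) returning str."""

    async def read(self, size: int) -> str: ...


class StringAsyncFile:
    """In-memory object that satisfies the protocol without inheriting from it."""

    def __init__(self, text: str) -> None:
        self._text = text
        self._pos = 0

    async def read(self, size: int) -> str:
        chunk = self._text[self._pos:self._pos + size]
        self._pos += len(chunk)
        return chunk


async def read_all(source: SketchAsyncRead, chunk_size: int = 4) -> str:
    parts: List[str] = []
    while True:
        chunk = await source.read(chunk_size)
        if not chunk:  # assumption: an empty string means end of input
            break
        parts.append(chunk)
    return "".join(parts)


source = StringAsyncFile("a,b\n1,2\n")
matches_protocol = isinstance(source, SketchAsyncRead)  # checks method presence only
content = asyncio.run(read_all(source))
print(matches_protocol, repr(content))
```

Structural typing is what lets readers and writers work with aiofiles handles, network streams, or test doubles alike, as long as the required coroutine is present.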

Contributions are welcome, however please open an issue beforehand. aiocsv is meant as a replacement for the built-in csv, so any features not present in the latter will be rejected.

To create a wheel (and a source tarball), run python -m build.

For local development, use a virtual environment.

pip install --editable . will build the C extension and make it available for the current venv. This is required for running the tests. However, due to the mess of Python packaging, this will force an optimized build without debugging symbols. If you need to debug the C part of aiocsv and build the library with e.g. debugging symbols, the only sane way is to run python setup.py build --debug and manually copy the shared object/DLL from build/lib*/aiocsv to aiocsv.

This project uses pytest with pytest-asyncio for testing. Run pytest after installing the library in the manner explained above.

This library uses black and isort for formatting and pyright in strict mode for type checking.

For the C part of the library, please use clang-format for formatting and clang-tidy for linting; however, these are not yet integrated into the CI.

pip install -r requirements.dev.txt will pull all of the development tools mentioned above, however this might not be necessary depending on your setup. For example, if you use VS Code with the Python extension, pyright is already bundled and doesn't need to be installed again.

In VS Code, use the Python, Pylance (should be installed automatically alongside the Python extension), black and isort extensions.

You will need to install all dev dependencies from requirements.dev.txt, except for pyright.

Recommended .vscode/settings.json:

    {
        "C_Cpp.codeAnalysis.clangTidy.enabled": true,
        "python.testing.pytestArgs": [
            "."
        ],
        "python.testing.unittestEnabled": false,
        "python.testing.pytestEnabled": true,
        "[python]": {
            "editor.formatOnSave": true,
            "editor.codeActionsOnSave": {
                "source.organizeImports": "always"
            }
        },
        "[c]": {
            "editor.formatOnSave": true
        }
    }

For the C part of the library, the C/C++ extension is sufficient.

Ensure that your system has Python headers installed. Usually a separate package like python3-dev needs to be installed; consult your system repositories on that. .vscode/c_cpp_properties.json needs to manually include Python headers under includePath. On my particular system this config file looks like this:

    {
        "configurations": [
            {
                "name": "Linux",
                "includePath": [
                    "${workspaceFolder}/**",
                    "/usr/include/python3.11"
                ],
                "defines": [],
                "compilerPath": "/usr/bin/clang",
                "cStandard": "c17",
                "cppStandard": "c++17",
                "intelliSenseMode": "linux-clang-x64"
            }
        ],
        "version": 4
    }
