Open-DocLLM

None

Stars: 124

Open-DocLLM is an open-source project that addresses data extraction and processing challenges using OCR and LLM technologies. It consists of two main layers: OCR for reading document content and LLM for extracting specific content in a structured manner. The project offers a larger context window size compared to JP Morgan's DocLLM and integrates tools like Tesseract OCR and Mistral for efficient data analysis. Users can run the models on-premises using LLM studio or Ollama, and the project includes a FastAPI app for testing purposes.

README:

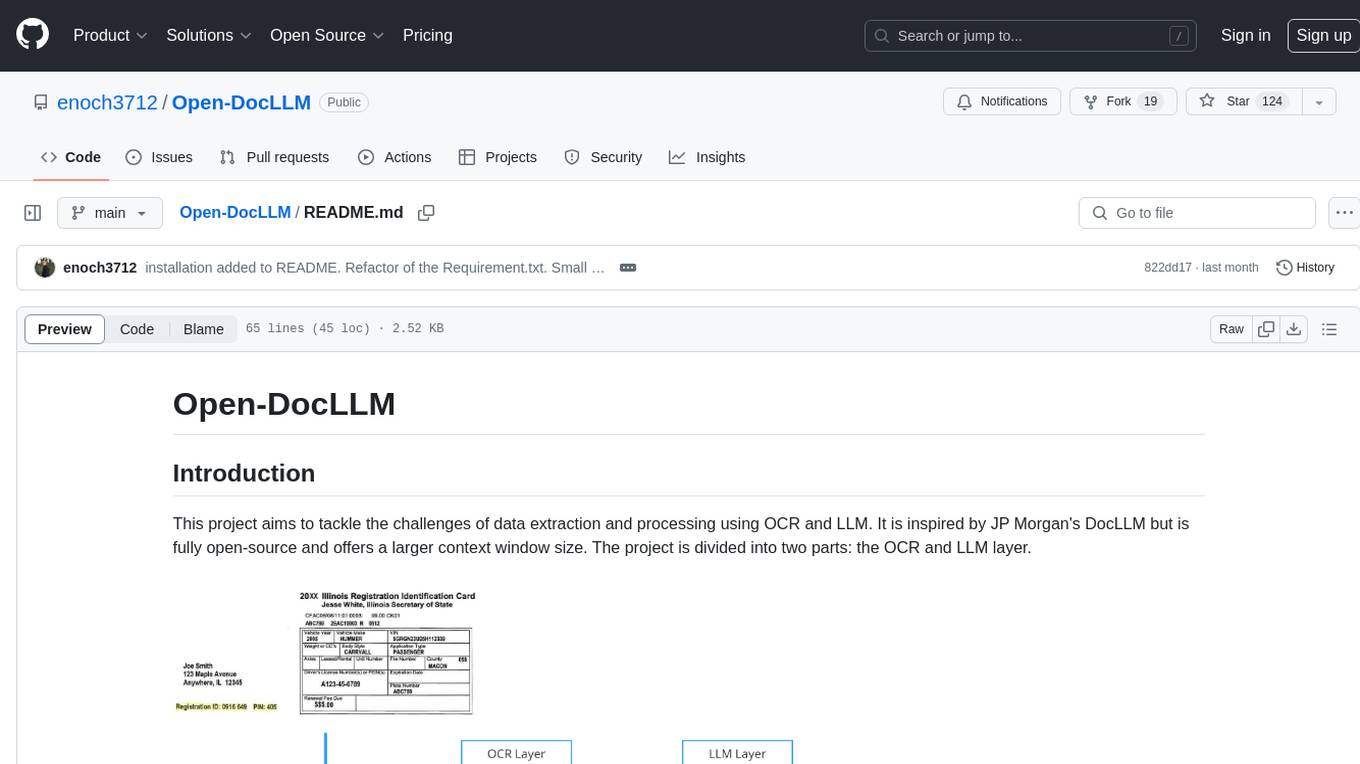

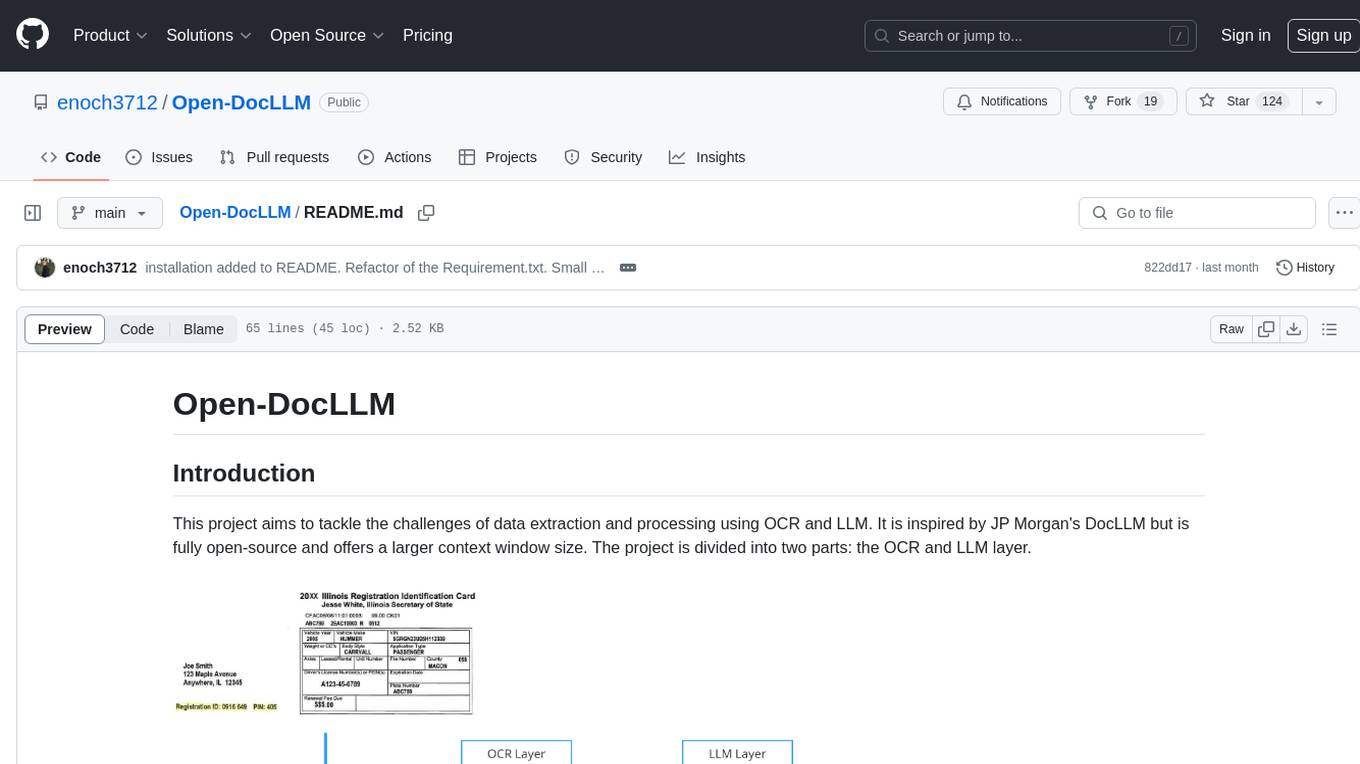

This project aims to tackle the challenges of data extraction and processing using OCR and LLM. It is inspired by JP Morgan's DocLLM but is fully open-source and offers a larger context window size. The project is divided into two parts: the OCR and LLM layer.

The OCR layer is responsible for reading all the content from a document. It involves the following steps:

-

Convert pages to images: Any type of file is converted into an image so that all the content in the document can be read.

-

Preprocess image for OCR: The image is adjusted to improve its quality and readability.

-

Tesseract OCR: The Tesseract OCR, the most popular open-source OCR in the world, is used to read the content from the images.

The LLM layer is responsible for extracting specific content from the document in a structured way. It involves defining an extraction contract and extracting the JSON data.

You can run the models on-premises using LLM studio or Ollama. This project uses LlamaIndex and Ollama.

The repo includes a FastAPI app with one endpoint for testing. Make sure to point to the proper Tesseract executable and change the key in the config.py file.

-

Install Tessaract https://github.com/tesseract-ocr/tesseract

-

Install the required Python packages.

pip install -r requirements.txt- Run fast api

uvicorn main:app --reload- go to the Swgger page: http://localhost:8000/docs

- Build the Docker image.

docker build -t your-image-name .- Run the Docker container.

docker run -p 8000:8000 your-image-name- go to the Swgger page: http://localhost:8000/docs

The project also explores advanced cases like a 1 million token context using LLM Lingua and Mistral Yarn 128k context window.

The integration of OCR and LLM technologies in this project marks a pivotal advancement in analyzing unstructured data. The combination of open-source projects like Tesseract and Mistral makes a perfect implementation that could be used in an on-premise use case.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for Open-DocLLM

Similar Open Source Tools

Open-DocLLM

Open-DocLLM is an open-source project that addresses data extraction and processing challenges using OCR and LLM technologies. It consists of two main layers: OCR for reading document content and LLM for extracting specific content in a structured manner. The project offers a larger context window size compared to JP Morgan's DocLLM and integrates tools like Tesseract OCR and Mistral for efficient data analysis. Users can run the models on-premises using LLM studio or Ollama, and the project includes a FastAPI app for testing purposes.

Chinese-Tiny-LLM

Chinese-Tiny-LLM is a repository containing procedures for cleaning Chinese web corpora and pre-training code. It introduces CT-LLM, a 2B parameter language model focused on the Chinese language. The model primarily uses Chinese data from a 1,200 billion token corpus, showing excellent performance in Chinese language tasks. The repository includes tools for filtering, deduplication, and pre-training, aiming to encourage further research and innovation in language model development.

kdbai-samples

KDB.AI is a time-based vector database that allows developers to build scalable, reliable, and real-time applications by providing advanced search, recommendation, and personalization for Generative AI applications. It supports multiple index types, distance metrics, top-N and metadata filtered retrieval, as well as Python and REST interfaces. The repository contains samples demonstrating various use-cases such as temporal similarity search, document search, image search, recommendation systems, sentiment analysis, and more. KDB.AI integrates with platforms like ChatGPT, Langchain, and LlamaIndex. The setup steps require Unix terminal, Python 3.8+, and pip installed. Users can install necessary Python packages and run Jupyter notebooks to interact with the samples.

SuperKnowa

SuperKnowa is a fast framework to build Enterprise RAG (Retriever Augmented Generation) Pipelines at Scale, powered by watsonx. It accelerates Enterprise Generative AI applications to get prod-ready solutions quickly on private data. The framework provides pluggable components for tackling various Generative AI use cases using Large Language Models (LLMs), allowing users to assemble building blocks to address challenges in AI-driven text generation. SuperKnowa is battle-tested from 1M to 200M private knowledge base & scaled to billions of retriever tokens.

conversational-agent-langchain

This repository contains a Rest-Backend for a Conversational Agent that allows embedding documents, semantic search, QA based on documents, and document processing with Large Language Models. It uses Aleph Alpha and OpenAI Large Language Models to generate responses to user queries, includes a vector database, and provides a REST API built with FastAPI. The project also features semantic search, secret management for API keys, installation instructions, and development guidelines for both backend and frontend components.

graphrag-local-ollama

GraphRAG Local Ollama is a repository that offers an adaptation of Microsoft's GraphRAG, customized to support local models downloaded using Ollama. It enables users to leverage local models with Ollama for large language models (LLMs) and embeddings, eliminating the need for costly OpenAPI models. The repository provides a simple setup process and allows users to perform question answering over private text corpora by building a graph-based text index and generating community summaries for closely-related entities. GraphRAG Local Ollama aims to improve the comprehensiveness and diversity of generated answers for global sensemaking questions over datasets.

PulsarRPA

PulsarRPA is a high-performance, distributed, open-source Robotic Process Automation (RPA) framework designed to handle large-scale RPA tasks with ease. It provides a comprehensive solution for browser automation, web content understanding, and data extraction. PulsarRPA addresses challenges of browser automation and accurate web data extraction from complex and evolving websites. It incorporates innovative technologies like browser rendering, RPA, intelligent scraping, advanced DOM parsing, and distributed architecture to ensure efficient, accurate, and scalable web data extraction. The tool is open-source, customizable, and supports cutting-edge information extraction technology, making it a preferred solution for large-scale web data extraction.

DistiLlama

DistiLlama is a Chrome extension that leverages a locally running Large Language Model (LLM) to perform various tasks, including text summarization, chat, and document analysis. It utilizes Ollama as the locally running LLM instance and LangChain for text summarization. DistiLlama provides a user-friendly interface for interacting with the LLM, allowing users to summarize web pages, chat with documents (including PDFs), and engage in text-based conversations. The extension is easy to install and use, requiring only the installation of Ollama and a few simple steps to set up the environment. DistiLlama offers a range of customization options, including the choice of LLM model and the ability to configure the summarization chain. It also supports multimodal capabilities, allowing users to interact with the LLM through text, voice, and images. DistiLlama is a valuable tool for researchers, students, and professionals who seek to leverage the power of LLMs for various tasks without compromising data privacy.

aigt

AIGT is a repository containing scripts for deep learning in guided medical interventions, focusing on ultrasound imaging. It provides a complete workflow from formatting and annotations to real-time model deployment. Users can set up an Anaconda environment, run Slicer notebooks, acquire tracked ultrasound data, and process exported data for training. The repository includes tools for segmentation, image export, and annotation creation.

dataherald

Dataherald is a natural language-to-SQL engine built for enterprise-level question answering over structured data. It allows you to set up an API from your database that can answer questions in plain English. You can use Dataherald to: * Allow business users to get insights from the data warehouse without going through a data analyst * Enable Q+A from your production DBs inside your SaaS application * Create a ChatGPT plug-in from your proprietary data

HuggingFists

HuggingFists is a low-code data flow tool that enables convenient use of LLM and HuggingFace models. It provides functionalities similar to Langchain, allowing users to design, debug, and manage data processing workflows, create and schedule workflow jobs, manage resources environment, and handle various data artifact resources. The tool also offers account management for users, allowing centralized management of data source accounts and API accounts. Users can access Hugging Face models through the Inference API or locally deployed models, as well as datasets on Hugging Face. HuggingFists supports breakpoint debugging, branch selection, function calls, workflow variables, and more to assist users in developing complex data processing workflows.

LangSim

LangSim is a tool developed to address the challenge of using simulation tools in computational chemistry and materials science, which typically require cryptic input files or programming experience. The tool provides a Large Language Model (LLM) extension with agents to couple the LLM to scientific simulation codes and calculate physical properties from a natural language interface. It aims to simplify the process of interacting with simulation tools by enabling users to query the large language model directly from a Python environment or a web-based interface.

wandb

Weights & Biases (W&B) is a platform that helps users build better machine learning models faster by tracking and visualizing all components of the machine learning pipeline, from datasets to production models. It offers tools for tracking, debugging, evaluating, and monitoring machine learning applications. W&B provides integrations with popular frameworks like PyTorch, TensorFlow/Keras, Hugging Face Transformers, PyTorch Lightning, XGBoost, and Sci-Kit Learn. Users can easily log metrics, visualize performance, and compare experiments using W&B. The platform also supports hosting options in the cloud or on private infrastructure, making it versatile for various deployment needs.

vertex-ai-creative-studio

GenMedia Creative Studio is an application showcasing the capabilities of Google Cloud Vertex AI generative AI creative APIs. It includes features like Gemini for prompt rewriting and multimodal evaluation of generated images. The app is built with Mesop, a Python-based UI framework, enabling rapid development of web and internal apps. The Experimental folder contains stand-alone applications and upcoming features demonstrating cutting-edge generative AI capabilities, such as image generation, prompting techniques, and audio/video tools.

bocoel

BoCoEL is a tool that leverages Bayesian Optimization to efficiently evaluate large language models by selecting a subset of the corpus for evaluation. It encodes individual entries into embeddings, uses Bayesian optimization to select queries, retrieves from the corpus, and provides easily managed evaluations. The tool aims to reduce computation costs during evaluation with a dynamic budget, supporting models like GPT2, Pythia, and LLAMA through integration with Hugging Face transformers and datasets. BoCoEL offers a modular design and efficient representation of the corpus to enhance evaluation quality.

LlamaIndexTS

LlamaIndex.TS is a data framework for your LLM application. Use your own data with large language models (LLMs, OpenAI ChatGPT and others) in Typescript and Javascript.

For similar tasks

Open-DocLLM

Open-DocLLM is an open-source project that addresses data extraction and processing challenges using OCR and LLM technologies. It consists of two main layers: OCR for reading document content and LLM for extracting specific content in a structured manner. The project offers a larger context window size compared to JP Morgan's DocLLM and integrates tools like Tesseract OCR and Mistral for efficient data analysis. Users can run the models on-premises using LLM studio or Ollama, and the project includes a FastAPI app for testing purposes.

Awesome-AI

Awesome AI is a repository that collects and shares resources in the fields of large language models (LLM), AI-assisted programming, AI drawing, and more. It explores the application and development of generative artificial intelligence. The repository provides information on various AI tools, models, and platforms, along with tutorials and web products related to AI technologies.

Qmedia

QMedia is an open-source multimedia AI content search engine designed specifically for content creators. It provides rich information extraction methods for text, image, and short video content. The tool integrates unstructured text, image, and short video information to build a multimodal RAG content Q&A system. Users can efficiently search for image/text and short video materials, analyze content, provide content sources, and generate customized search results based on user interests and needs. QMedia supports local deployment for offline content search and Q&A for private data. The tool offers features like content cards display, multimodal content RAG search, and pure local multimodal models deployment. Users can deploy different types of models locally, manage language models, feature embedding models, image models, and video models. QMedia aims to spark new ideas for content creation and share AI content creation concepts in an open-source manner.

aws-ai-intelligent-document-processing

This repository is part of Intelligent Document Processing with AWS AI Services workshop. It aims to automate the extraction of information from complex content in various document formats such as insurance claims, mortgages, healthcare claims, contracts, and legal contracts using AWS Machine Learning services like Amazon Textract and Amazon Comprehend. The repository provides hands-on labs to familiarize users with these AI services and build solutions to automate business processes that rely on manual inputs and intervention across different file types and formats.

Scrapegraph-LabLabAI-Hackathon

ScrapeGraphAI is a web scraping Python library that utilizes LangChain, LLM, and direct graph logic to create scraping pipelines. Users can specify the information they want to extract, and the library will handle the extraction process. The tool is designed to simplify web scraping tasks by providing a streamlined and efficient approach to data extraction.

parsera

Parsera is a lightweight Python library designed for scraping websites using LLMs. It offers simplicity and efficiency by minimizing token usage, enhancing speed, and reducing costs. Users can easily set up and run the tool to extract specific elements from web pages, generating JSON output with relevant data. Additionally, Parsera supports integration with various chat models, such as Azure, expanding its functionality and customization options for web scraping tasks.

Scrapegraph-demo

ScrapeGraphAI is a web scraping Python library that utilizes LangChain, LLM, and direct graph logic to create scraping pipelines. Users can specify the information they want to extract, and the library will handle the extraction process. This repository contains an official demo/trial for the ScrapeGraphAI library, showcasing its capabilities in web scraping tasks. The tool is designed to simplify the process of extracting data from websites by providing a user-friendly interface and powerful scraping functionalities.

you2txt

You2Txt is a tool developed for the Vercel + Nvidia 2-hour hackathon that converts any YouTube video into a transcribed .txt file. The project won first place in the hackathon and is hosted at you2txt.com. Due to rate limiting issues with YouTube requests, it is recommended to run the tool locally. The project was created using Next.js, Tailwind, v0, and Claude, and can be built and accessed locally for development purposes.

For similar jobs

lollms-webui

LoLLMs WebUI (Lord of Large Language Multimodal Systems: One tool to rule them all) is a user-friendly interface to access and utilize various LLM (Large Language Models) and other AI models for a wide range of tasks. With over 500 AI expert conditionings across diverse domains and more than 2500 fine tuned models over multiple domains, LoLLMs WebUI provides an immediate resource for any problem, from car repair to coding assistance, legal matters, medical diagnosis, entertainment, and more. The easy-to-use UI with light and dark mode options, integration with GitHub repository, support for different personalities, and features like thumb up/down rating, copy, edit, and remove messages, local database storage, search, export, and delete multiple discussions, make LoLLMs WebUI a powerful and versatile tool.

Azure-Analytics-and-AI-Engagement

The Azure-Analytics-and-AI-Engagement repository provides packaged Industry Scenario DREAM Demos with ARM templates (Containing a demo web application, Power BI reports, Synapse resources, AML Notebooks etc.) that can be deployed in a customer’s subscription using the CAPE tool within a matter of few hours. Partners can also deploy DREAM Demos in their own subscriptions using DPoC.

minio

MinIO is a High Performance Object Storage released under GNU Affero General Public License v3.0. It is API compatible with Amazon S3 cloud storage service. Use MinIO to build high performance infrastructure for machine learning, analytics and application data workloads.

mage-ai

Mage is an open-source data pipeline tool for transforming and integrating data. It offers an easy developer experience, engineering best practices built-in, and data as a first-class citizen. Mage makes it easy to build, preview, and launch data pipelines, and provides observability and scaling capabilities. It supports data integrations, streaming pipelines, and dbt integration.

AiTreasureBox

AiTreasureBox is a versatile AI tool that provides a collection of pre-trained models and algorithms for various machine learning tasks. It simplifies the process of implementing AI solutions by offering ready-to-use components that can be easily integrated into projects. With AiTreasureBox, users can quickly prototype and deploy AI applications without the need for extensive knowledge in machine learning or deep learning. The tool covers a wide range of tasks such as image classification, text generation, sentiment analysis, object detection, and more. It is designed to be user-friendly and accessible to both beginners and experienced developers, making AI development more efficient and accessible to a wider audience.

tidb

TiDB is an open-source distributed SQL database that supports Hybrid Transactional and Analytical Processing (HTAP) workloads. It is MySQL compatible and features horizontal scalability, strong consistency, and high availability.

airbyte

Airbyte is an open-source data integration platform that makes it easy to move data from any source to any destination. With Airbyte, you can build and manage data pipelines without writing any code. Airbyte provides a library of pre-built connectors that make it easy to connect to popular data sources and destinations. You can also create your own connectors using Airbyte's no-code Connector Builder or low-code CDK. Airbyte is used by data engineers and analysts at companies of all sizes to build and manage their data pipelines.

labelbox-python

Labelbox is a data-centric AI platform for enterprises to develop, optimize, and use AI to solve problems and power new products and services. Enterprises use Labelbox to curate data, generate high-quality human feedback data for computer vision and LLMs, evaluate model performance, and automate tasks by combining AI and human-centric workflows. The academic & research community uses Labelbox for cutting-edge AI research.