AIHub

A client that brings together the capabilities of multiple large-model providers, with rich personalization features. Currently supported: OpenAI, Ollama, Google Gemini, iFlytek Spark, Baidu Wenxin, Alibaba Tongyi, Tiangong, Moonshot AI, Zhipu, StepFun, and DeepSeek 🎉🎉🎉. The UI is also available in English.

Stars: 78

AIHub is a client that integrates the capabilities of multiple large models so that users can quickly build their own personalized AI assistants. It offers rich configuration options, text and image conversations, AI drawing, custom plugin installation, personal knowledge base building, AI calendar generation, and AI mini programs, with more features under development. Users can download the application package from the release section, work around macOS installation issues, and contribute ideas by submitting issues. The README also covers installing dependencies, running in development mode, and building for Windows, macOS, and Linux.

README:

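Easily connect to large model APIs from multiple vendors.

Multi-language and multi-theme configuration.

Supports text conversations, image conversations, and AI drawing.

Install custom plugins for your AI assistant to extend what it can do.

Built on LangChain. A few simple steps are enough to set up a personal knowledge base.

Generate weekly, monthly, and annual reports with one click.

Rich support for AI mini programs.

If you have a good idea, feel free to submit an issue.

The application package is not signed or notarized. If it fails to install properly, you can clone the code and package it locally.

Open a terminal, enter the following command, and run it:

sudo xattr -d com.apple.quarantine /Applications/xxxx.app

Note: replace /Applications/xxxx.app with the path to your app.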

$ yarn

$ yarn dev

# For Windows

$ yarn build:win

# For macOS

$ yarn build:mac

# For Linux

$ yarn build:linux
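The three build commands differ only in the script suffix, so a wrapper could choose one from the host OS. The following is a minimal sketch, not part of the AIHub repository: `pick_build_script` is a hypothetical helper name, and treating `MINGW*`, `MSYS*`, and `CYGWIN*` values of `uname -s` as Windows is an assumption about Git Bash-style shells.

```shell
#!/bin/sh
# Hypothetical helper (not from the AIHub repo): maps a `uname -s` value
# to one of the build script names listed in the README.
pick_build_script() {
  case "$1" in
    Darwin) echo "build:mac" ;;
    Linux) echo "build:linux" ;;
    # Assumption: Git Bash, MSYS2, and Cygwin report names with these prefixes.
    MINGW*|MSYS*|CYGWIN*) echo "build:win" ;;
    *)
      echo "unsupported OS: $1" >&2
      return 1
      ;;
  esac
}

# Usage (left as a comment so sourcing this file does not start a build):
#   yarn "$(pick_build_script "$(uname -s)")"
```

Keeping the mapping in a function that only prints the script name makes it easy to check without actually running a build.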

Alternative AI tools for AIHub

Similar Open Source Tools

mushroom

MRCMS is a Java-based content management system that uses data model + template + plugin implementation, providing built-in article model publishing functionality. The goal is to quickly build small to medium websites.

FinVeda

FinVeda is a dynamic financial literacy app that aims to solve the problem of low financial literacy rates in India by providing a platform for financial education. It features an AI chatbot, finance blogs, market trends analysis, SIP calculator, and finance quiz to help users learn finance with finesse. The app is free and open-source, licensed under the GNU General Public License v3.0. FinVeda was developed at IIT Jammu's Udyamitsav'24 Hackathon, where it won first place in the GenAI track and third place overall.

azooKey-Desktop

azooKey-Desktop is an open-source Japanese input system for macOS that incorporates the high-precision neural kana-kanji conversion engine 'Zenzai'. It offers features such as neural kana-kanji conversion, profile prompt, history learning, user dictionary, integration with personal optimization system 'Tuner', 'nice feeling conversion' with LLM, live conversion, and native support for AZIK. The tool is currently in alpha version, and its operation is not guaranteed. Users can install it via `.pkg` file or Homebrew. Development contributions are welcome, and the project has received support from the Information-technology Promotion Agency, Japan (IPA) for the 2024 fiscal year's untapped IT human resources discovery and nurturing project.

AlphaAvatar

AlphaAvatar is a powerful tool for creating customizable avatars with AI-generated faces. It provides a user-friendly interface to design unique characters for various purposes such as gaming, virtual reality, social media, and more. With advanced AI algorithms, users can easily generate realistic and diverse avatars to enhance their projects and engage with their audience.

HyperChat

HyperChat is an open Chat client that utilizes various LLM APIs to enhance the Chat experience and offer productivity tools through the MCP protocol. It supports multiple LLMs like OpenAI, Claude, Qwen, Deepseek, GLM, and Ollama. The platform includes a built-in MCP plugin market for easy installation and also allows manual installation of third-party MCPs. Features include Windows and macOS support, resource support, tools support, English and Chinese language support, a built-in MCP client 'hypertools', 'fetch' + 'search', Bot support, Artifacts rendering, KaTeX for mathematical formulas, and WebDAV synchronization. Future plans include a permission pop-up, scheduled tasks support, Projects + RAG support, tools implementation by LLM, and a local shell + nodejs + js on web runtime environment.

Java-Interview-Tutorial

Java-Interview-Tutorial is a repository containing resources and tutorials for Java interview preparation. It provides guidance on setting up the project locally, adjusting image paths, and submitting articles. The repository also includes instructions for configuring the project and using Git GUI tools for managing content. Users can learn about Java concurrency programming and navigate through the content easily. The repository emphasizes clean article titles and content formatting to ensure proper display on the website.

ChatGPT-Next-Web

ChatGPT Next Web is a well-designed cross-platform ChatGPT web UI tool that supports Claude, GPT4, and Gemini Pro models. It allows users to deploy their private ChatGPT applications with ease. The tool offers features like one-click deployment, compact client for Linux/Windows/MacOS, compatibility with self-deployed LLMs, privacy-first approach with local data storage, markdown support, responsive design, fast loading speed, prompt templates, awesome prompts, chat history compression, multilingual support, and more.

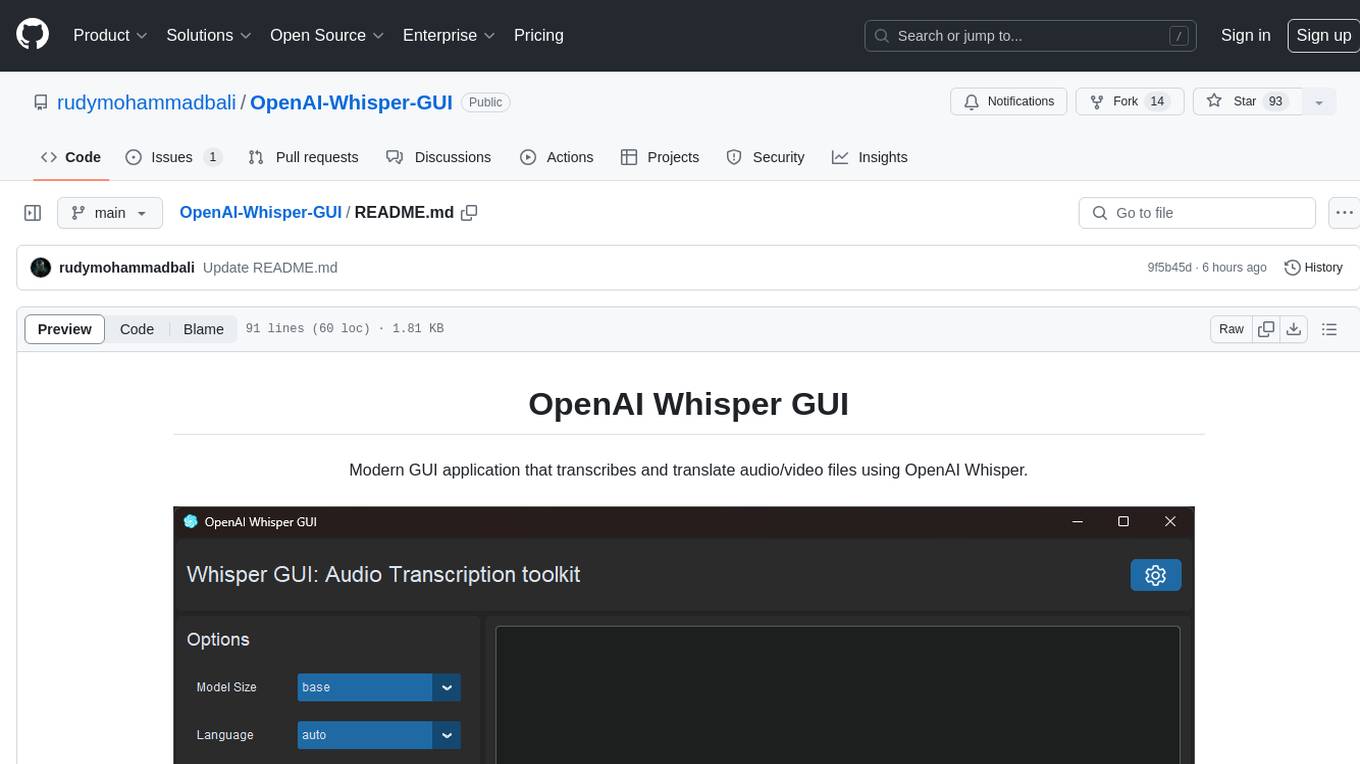

OpenAI-Whisper-GUI

OpenAI Whisper GUI is a GUI application for transcribing and translating audio/video files using OpenAI Whisper. It features a modern UI with light/dark mode, the ability to export transcribed text, add subtitles to videos, and more. The latest version updates widgets, layouts, and themes, adds a config handler, GPU info retrieval, a new app logo, and a settings interface, refactors the code, and fixes the 'CUDA not found' warning message. Users can install the tool by cloning the GitHub repository and running the setup.py and main.py scripts. For more information, users can visit the OpenAI Whisper GitHub repository.

lively

Lively Wallpaper is a tool that allows users to set animated desktop wallpapers, bringing their desktop to life. It supports various types of wallpapers including video/GIF, webpage, and application/games. Users can also use any wallpaper as a screensaver, control Lively with command line arguments, and leverage the Lively API for developers to create interactive wallpapers. The tool offers features such as minimal webpage renderer, hardware-accelerated video playback, and integration with Machine Learning inference for dynamic wallpapers. Lively is designed for Windows, is fully open-source and free, and supports Shadertoy.com URLs as wallpapers.

himarket

HiMarket is an out-of-the-box AI open platform solution that can be used to build enterprise-level AI capability markets and developer ecosystem centers. It consists of three core components tailored to different roles within the enterprise:

1. AI open platform management backend (for administrators/operators): packages diverse AI capabilities such as model services, MCP Server, and Agent into standardized 'AI products' in API form, with comprehensive documentation and examples, for one-click publishing to the portal.
2. AI open platform portal (for developers/internal users): a 'storefront' where developers can register, create consumers, obtain credentials, browse and subscribe to AI products, test online, and monitor their own call status and costs.
3. AI Gateway: a subproject of the Higress community, the Higress AI Gateway handles authentication, security, flow control, protocol conversion, and observability for all AI calls.

aircrackauto

AirCrackAuto is a tool that automates the aircrack-ng process for Wi-Fi hacking. It is designed to make it easier for users to crack Wi-Fi passwords by automating the process of capturing packets, generating wordlists, and launching attacks. AirCrackAuto is a powerful tool that can be used to crack Wi-Fi passwords in a matter of minutes.

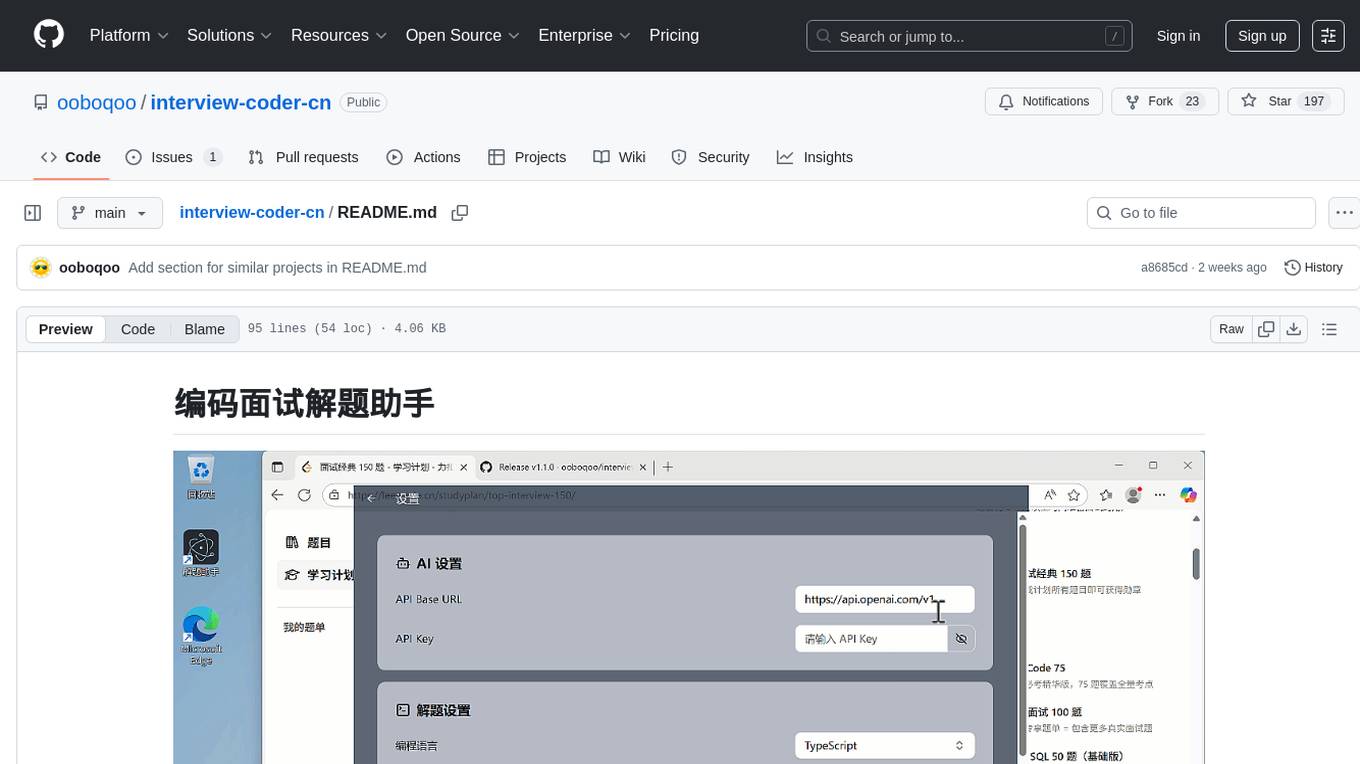

interview-coder-cn

This is a coding problem-solving assistant for Chinese users, tailored to the domestic AI ecosystem, simple and easy to use. It provides real-time problem-solving ideas and code analysis for coding interviews, avoiding detection during screen sharing. Users can also extend its functionality for other scenarios by customizing prompt words. The tool supports various programming languages and has stealth capabilities to hide its interface from interviewers even when screen sharing.

Chat2DB

Chat2DB is an AI-driven data development and analysis platform that enables users to communicate with databases using natural language. It supports a wide range of databases, including MySQL, PostgreSQL, Oracle, SQLServer, SQLite, MariaDB, ClickHouse, DM, Presto, DB2, OceanBase, Hive, KingBase, MongoDB, Redis, and Snowflake. Chat2DB provides a user-friendly interface that allows users to query databases, generate reports, and explore data using natural language commands. It also offers a variety of features to help users improve their productivity, such as auto-completion, syntax highlighting, and error checking.

openchamber

OpenChamber is a web and desktop interface for the OpenCode AI coding agent, designed to work alongside the OpenCode TUI. The project was built entirely with AI coding agents under supervision, serving as a proof of concept that AI agents can create usable software. It offers features like integrated terminal, Git operations with AI commit message generation, smart tool visualization, permission management, multi-agent runs, task tracker UI, model selection UX, UI scaling controls, session auto-cleanup, and memory optimizations. OpenChamber provides cross-device continuity, remote access, and a visual alternative for developers preferring GUI workflows.

ShitCodify

ShitCodify is an AI-powered tool that transforms normal, readable, and maintainable code into hard-to-understand, hard-to-maintain 'shit code'. It uses large language models like GPT-4 to analyze code and apply various 'anti-patterns' and bad practices to reduce code readability and maintainability while keeping the code functional.

For similar tasks

jvm-openai

jvm-openai is a minimalistic unofficial OpenAI API client for the JVM, written in Java. It serves as a Java client for OpenAI API with a focus on simplicity and minimal dependencies. The tool provides support for various OpenAI APIs and endpoints, including Audio, Chat, Embeddings, Fine-tuning, Batch, Files, Uploads, Images, Models, Moderations, Assistants, Threads, Messages, Runs, Run Steps, Vector Stores, Vector Store Files, Vector Store File Batches, Invites, Users, Projects, Project Users, Project Service Accounts, Project API Keys, and Audit Logs. Users can easily integrate this tool into their Java projects to interact with OpenAI services efficiently.

second-brain-ai-assistant-course

This open-source course teaches how to build an advanced RAG and LLM system using LLMOps and ML systems best practices. It helps you create an AI assistant that leverages your personal knowledge base to answer questions, summarize documents, and provide insights. The course covers topics such as LLM system architecture, pipeline orchestration, large-scale web crawling, model fine-tuning, and advanced RAG features. It is suitable for ML/AI engineers, data and software engineers, and data scientists looking to level up to production AI systems. The course is free, with minimal costs for tools like OpenAI's API and Hugging Face's Dedicated Endpoints. Participants will build two separate Python applications: one for the offline ML pipelines and one for the online inference pipeline.

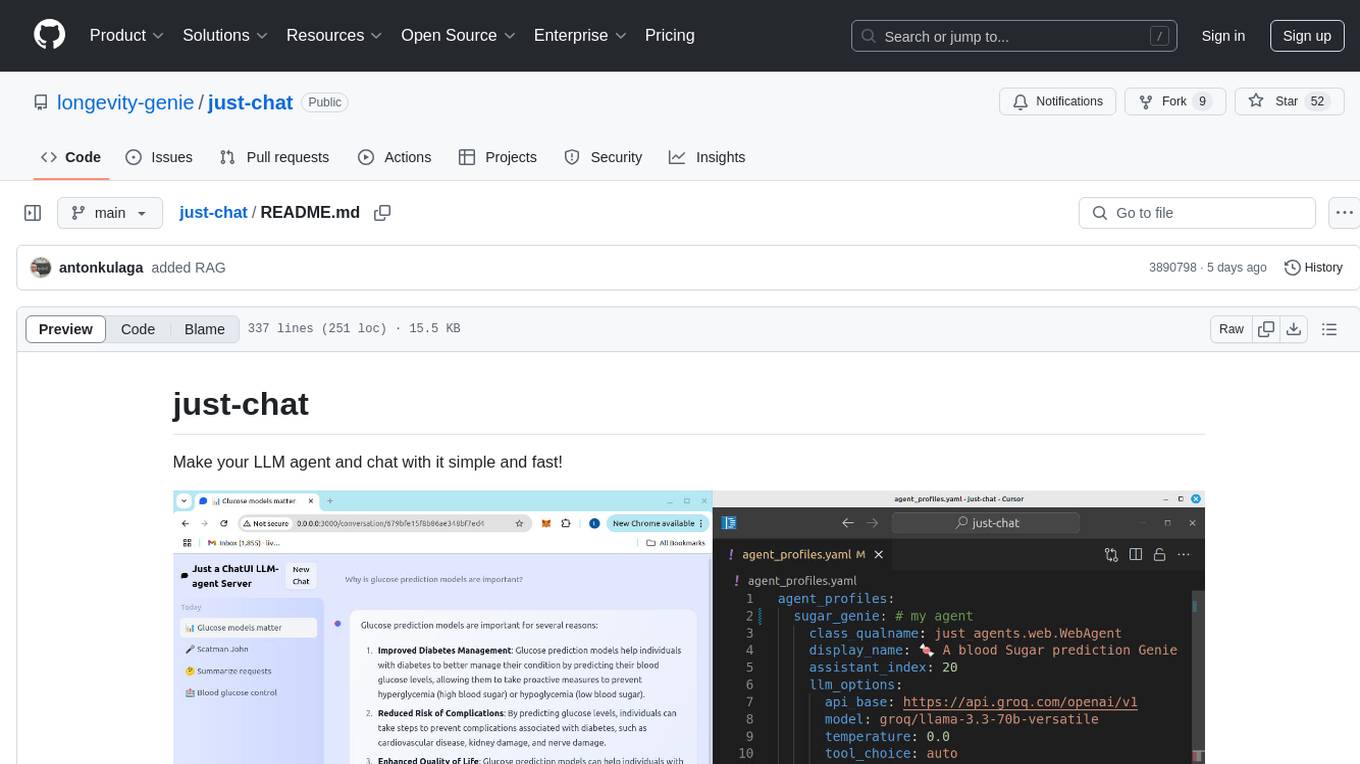

just-chat

Just-Chat is a containerized application that allows users to easily set up and chat with their AI agent. Users can customize their AI assistant using a YAML file, add new capabilities with Python tools, and interact with the agent through a chat web interface. The tool supports various modern models like DeepSeek Reasoner, ChatGPT, LLAMA3.3, etc. Users can also use semantic search capabilities with MeiliSearch to find and reference relevant information based on meaning. Just-Chat requires Docker or Podman for operation and provides detailed installation instructions for both Linux and Windows users.

vLLM-metax

vLLM MetaX is a hardware plugin for running vLLM seamlessly on MetaX GPU, providing near-native CUDA experiences on MetaX Hardware with MACA. It follows the vLLM plugin RFCs by default to ensure hardware features and functionality support on integration of the MetaX GPU with vLLM. The plugin is recommended for supporting the MetaX backend within the vLLM community. Prerequisites include MetaX C-series hardware, Linux OS, Python >= 3.9, < 3.12, vLLM (same version as vllm-metax), and Docker support. The tool currently supports starting on docker images released by the MetaX development community.

termax

Termax is an LLM agent in your terminal that converts natural language to commands. Its features:

- Personalized Experience: Optimize the command generation with RAG.
- Various LLMs Support: OpenAI GPT, Anthropic Claude, Google Gemini, Mistral AI, and more.
- Shell Extensions: Plugin with popular shells like `zsh`, `bash` and `fish`.
- Cross Platform: Able to run on Windows, macOS, and Linux.

HyperChat

HyperChat is an open Chat client that utilizes various LLM APIs to enhance the Chat experience and offer productivity tools through the MCP protocol. It supports multiple LLMs like OpenAI, Claude, Qwen, Deepseek, GLM, and Ollama. The platform includes a built-in MCP plugin market for easy installation and also allows manual installation of third-party MCPs. Features include Windows and macOS support, resource support, tools support, English and Chinese language support, a built-in MCP client 'hypertools', 'fetch' + 'search', Bot support, Artifacts rendering, KaTeX for mathematical formulas, and WebDAV synchronization. Future plans include a permission pop-up, scheduled tasks support, Projects + RAG support, tools implementation by LLM, and a local shell + nodejs + js on web runtime environment.

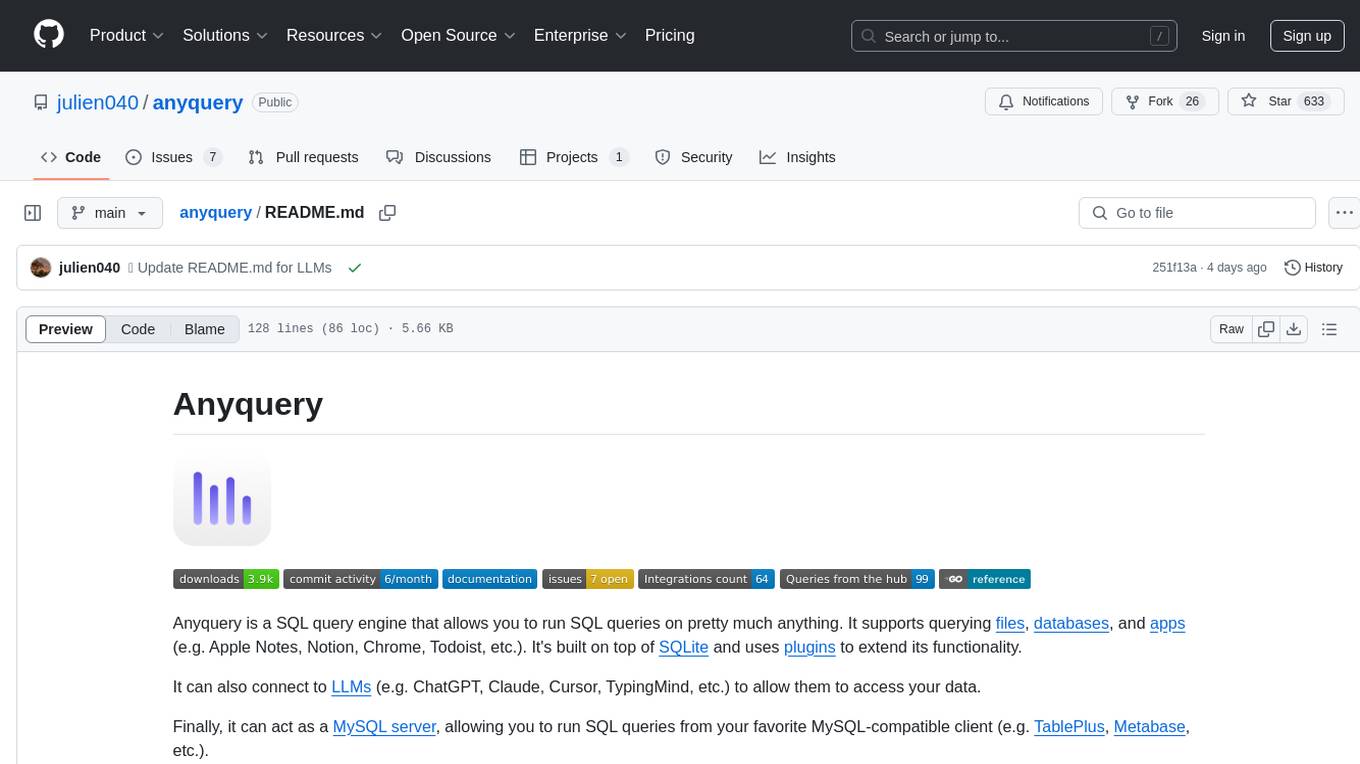

anyquery

Anyquery is a SQL query engine built on SQLite that allows users to run SQL queries on various data sources like files, databases, and apps. It can connect to LLMs to access data and act as a MySQL server for running queries. The tool is extensible through plugins and supports various installation methods like Homebrew, APT, YUM/DNF, Scoop, Winget, and Chocolatey.

For similar jobs

promptflow

**Prompt flow** is a suite of development tools designed to streamline the end-to-end development cycle of LLM-based AI applications, from ideation, prototyping, testing, evaluation to production deployment and monitoring. It makes prompt engineering much easier and enables you to build LLM apps with production quality.

deepeval

DeepEval is a simple-to-use, open-source LLM evaluation framework specialized for unit testing LLM outputs. It incorporates various metrics such as G-Eval, hallucination, answer relevancy, RAGAS, etc., and runs locally on your machine for evaluation. It provides a wide range of ready-to-use evaluation metrics, allows for creating custom metrics, integrates with any CI/CD environment, and enables benchmarking LLMs on popular benchmarks. DeepEval is designed for evaluating RAG and fine-tuning applications, helping users optimize hyperparameters, prevent prompt drifting, and transition from OpenAI to hosting their own Llama2 with confidence.

MegaDetector

MegaDetector is an AI model that identifies animals, people, and vehicles in camera trap images (which also makes it useful for eliminating blank images). This model is trained on several million images from a variety of ecosystems. MegaDetector is just one of many tools that aim to make conservation biologists more efficient with AI. If you want to learn about other ways to use AI to accelerate camera trap workflows, check out our overview of the field, affectionately titled "Everything I know about machine learning and camera traps".

leapfrogai

LeapfrogAI is a self-hosted AI platform designed to be deployed in air-gapped, resource-constrained environments. It brings sophisticated AI solutions to these environments by hosting all the necessary components of an AI stack, including vector databases, model backends, API, and UI. LeapfrogAI's API closely matches that of OpenAI, allowing tools built for OpenAI/ChatGPT to function seamlessly with a LeapfrogAI backend. It provides several backends for various use cases, including llama-cpp-python, whisper, text-embeddings, and vllm. LeapfrogAI leverages Chainguard's apko to harden base Python images, ensuring the latest supported Python versions are used by the other components of the stack. The LeapfrogAI SDK provides a standard set of protobufs and Python utilities for implementing backends and gRPC. LeapfrogAI offers UI options for common use cases like chat, summarization, and transcription. It can be deployed and run locally via UDS and Kubernetes, built out using Zarf packages. LeapfrogAI is supported by a community of users and contributors, including Defense Unicorns, Beast Code, Chainguard, Exovera, Hypergiant, Pulze, SOSi, United States Navy, United States Air Force, and United States Space Force.

llava-docker

This Docker image for LLaVA (Large Language and Vision Assistant) provides a convenient way to run LLaVA locally or on RunPod. LLaVA is a powerful AI tool that combines natural language processing and computer vision capabilities. With this Docker image, you can easily access LLaVA's functionalities for various tasks, including image captioning, visual question answering, text summarization, and more. The image comes pre-installed with LLaVA v1.2.0, Torch 2.1.2, xformers 0.0.23.post1, and other necessary dependencies. You can customize the model used by setting the MODEL environment variable. The image also includes a Jupyter Lab environment for interactive development and exploration. Overall, this Docker image offers a comprehensive and user-friendly platform for leveraging LLaVA's capabilities.

carrot

The 'carrot' repository on GitHub provides a list of free and user-friendly ChatGPT mirror sites for easy access. The repository includes sponsored sites offering various GPT models and services. Users can find and share sites, report errors, and access stable and recommended sites for ChatGPT usage. The repository also includes a detailed list of ChatGPT sites, their features, and accessibility options, making it a valuable resource for ChatGPT users seeking free and unlimited GPT services.

TrustLLM

TrustLLM is a comprehensive study of trustworthiness in LLMs, including principles for different dimensions of trustworthiness, an established benchmark, evaluation and analysis of trustworthiness for mainstream LLMs, and discussion of open challenges and future directions. Specifically, we first propose a set of principles for trustworthy LLMs that span eight different dimensions. Based on these principles, we further establish a benchmark across six dimensions including truthfulness, safety, fairness, robustness, privacy, and machine ethics. We then present a study evaluating 16 mainstream LLMs in TrustLLM, consisting of over 30 datasets. The document explains how to use the trustllm Python package to assess the trustworthiness of your LLM more quickly. For more details about TrustLLM, please refer to the project website.

AI-YinMei

AI-YinMei is a development tool for AI virtual streamers (Vtubers), in its NVIDIA GPU version. It supports knowledge-base chat through fastgpt, with a complete LLM stack of [fastgpt] + [one-api] + [Xinference]. It can reply to bilibili livestream bullet comments and greet viewers as they enter the stream. Speech synthesis is available through Microsoft edge-tts, Bert-VITS2, and GPT-SoVITS, and expressions are controlled through Vtuber Studio. It supports stable-diffusion-webui drawing with output to an OBS live room, NSFW detection for generated images (public-NSFW-y-distinguish), search and image search through duckduckgo (requires a VPN or proxy), and Baidu image search (no VPN or proxy needed). It also supports an AI reply chat box [html plug-in], AI singing with Auto-Convert-Music, a playlist [html plug-in], dancing, expression video playback, head-patting and gift-smashing actions, automatically starting to dance while singing, automatically looping sway motions during chat and singing, multi-scene switching, background music switching, automatic day/night scene switching, and open-ended singing and drawing requests, where the AI judges the content automatically.