retro-aim-server

Self-hostable instant messaging server compatible with classic AIM and ICQ clients. (Independently developed, not affiliated with or endorsed by AOL)

Stars: 933

Retro AIM Server is an instant messaging server that revives AOL Instant Messenger clients from the 2000s. It supports Windows AIM client versions 5.0-5.9, away messages, buddy icons, buddy list, chat rooms, instant messaging, user profiles, blocking/visibility toggle/idle notification, and warning. The Management API provides functionality for administering the server, including listing users, creating users, changing passwords, and listing active sessions.

README:

Retro AIM Server is an open-source instant messaging server compatible with classic AIM and ICQ clients.

| Disclaimer |

|---|

| This project is an independent, open-source initiative and is not affiliated with, endorsed by, or associated with AOL or Yahoo! Inc. This project is entirely non-commercial and does not generate any revenue or accept donations. |

The following features are supported:

AIM

- [x] Windows AIM Clients: v1.x-v5.x, v6.x-v7.x

- [x] Away Messages

- [x] Buddy Icons (v4.x, v5.x)

- [x] Buddy List

- [x] Chat Rooms

- [x] Public & Private Chat Exchanges

- [x] Instant Messaging

- [x] User Profiles

- [x] Privacy (allow or block specific users)

- [x] Warning

- [x] User Directory Search

- [x] TOC Protocol Clients: Quick Buddy, gaim, TiK

- [x] File Sharing

- LAN Only: Direct Connect, Get File

- Lan/Internet: Send File

ICQ

- [x] Windows ICQ Clients: 2000b (more to come soon)

- [x] Instant Messaging

- [x] Profiles

- [x] User Search

- [x] Presence Statuses

- [x] Offline Messaging

Get up and running with Retro AIM Server using one of these handy server quickstart guides:

Don't have AIM installed yet? Check out the AIM Client Setup Guide.

...how about ICQ? Check out the ICQ Client Setup Guide.

This project is under active development. Contributions are welcome!

Follow this guide to learn how to compile and run Retro AIM Server.

Check out the Retro AIM Server Discord server to get help or find out how to get involved.

The Management API provides functionality for administering the server (see OpenAPI spec). The following shows you how to run these commands via the command line.

Run these commands from PowerShell, not Command Prompt.

Invoke-WebRequest -Uri http://localhost:8080/user -Method GetInvoke-WebRequest -Uri http://localhost:8080/user `

-Body '{"screen_name":"MyScreenName", "password":"thepassword"}' `

-Method Post `

-ContentType "application/json"Invoke-WebRequest -Uri http://localhost:8080/user `

-Body '{"screen_name": "user123"}' `

-Method Delete `

-ContentType "application/json"Invoke-WebRequest -Uri http://localhost:8080/user/password `

-Body '{"screen_name":"MyScreenName", "password":"thenewpassword"}' `

-Method Put `

-ContentType "application/json"This request lists sessions for all logged in users.

Invoke-WebRequest -Uri http://localhost:8080/session -Method GetInvoke-WebRequest -Uri http://localhost:8080/chat/room/public `

-Body '{"name":"Office Hijinks"}' `

-Method Post `

-ContentType "application/json"Invoke-WebRequest -Uri http://localhost:8080/chat/room/public -Method Getcurl http://localhost:8080/usercurl -d'{"screen_name":"MyScreenName", "password":"thepassword"}' http://localhost:8080/usercurl -d'{"screen_name":"100003", "password":"thepassw"}' http://localhost:8080/usercurl -X DELETE -d '{"screen_name": "user123"}' http://localhost:8080/usercurl -X PUT -d'{"screen_name":"MyScreenName", "password":"thenewpassword"}' http://localhost:8080/user/passwordThis request lists sessions for all logged in users.

curl http://localhost:8080/sessioncurl -d'{"name":"Office Hijinks"}' http://localhost:8080/chat/room/publiccurl http://localhost:8080/chat/room/public- aim-oscar-server is another cool open source AIM server project.

- NINA Wiki is an indispensable source for figuring out the OSCAR API.

- libpurple is also an invaluable OSCAR reference (especially version 2.10.6-1).

Retro AIM Server is licensed under the MIT license.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for retro-aim-server

Similar Open Source Tools

retro-aim-server

Retro AIM Server is an instant messaging server that revives AOL Instant Messenger clients from the 2000s. It supports Windows AIM client versions 5.0-5.9, away messages, buddy icons, buddy list, chat rooms, instant messaging, user profiles, blocking/visibility toggle/idle notification, and warning. The Management API provides functionality for administering the server, including listing users, creating users, changing passwords, and listing active sessions.

ChatGPT-Next-Web

ChatGPT Next Web is a well-designed cross-platform ChatGPT web UI tool that supports Claude, GPT4, and Gemini Pro models. It allows users to deploy their private ChatGPT applications with ease. The tool offers features like one-click deployment, compact client for Linux/Windows/MacOS, compatibility with self-deployed LLMs, privacy-first approach with local data storage, markdown support, responsive design, fast loading speed, prompt templates, awesome prompts, chat history compression, multilingual support, and more.

Kord-Ai

Kord-Ai is a WhatsApp bot designed to automate interactions on WhatsApp by executing predefined commands or responding to user inputs. It can handle tasks like sending messages, sharing media, and managing group activities, providing convenience and efficiency for users and businesses. The bot offers features for deployment on various platforms, including Heroku, Replit, Koyeb, Glitch, Codespace, Render, Railway, VPS, and PC. Users can deploy the bot by obtaining a session ID, forking the repository, setting configurations in the Config.js file, and starting/stopping the bot using npm commands. It is important to note that Kord-Ai is a bot created by M3264, not affiliated with WhatsApp, and users should be cautious in its usage.

TalkWithGemini

Talk With Gemini is a web application that allows users to deploy their private Gemini application for free with one click. It supports Gemini Pro and Gemini Pro Vision models. The application features talk mode for direct communication with Gemini, visual recognition for understanding picture content, full Markdown support, automatic compression of chat records, privacy and security with local data storage, well-designed UI with responsive design, fast loading speed, and multi-language support. The tool is designed to be user-friendly and versatile for various deployment options and language preferences.

klavis

Klavis AI is a production-ready solution for managing Multiple Communication Protocol (MCP) servers. It offers self-hosted solutions and a hosted service with enterprise OAuth support. With Klavis AI, users can easily deploy and manage over 50 MCP servers for various services like GitHub, Gmail, Google Sheets, YouTube, Slack, and more. The tool provides instant access to MCP servers, seamless authentication, and integration with AI frameworks, making it ideal for individuals and businesses looking to streamline their communication and data management workflows.

HyperChat

HyperChat is an open Chat client that utilizes various LLM APIs to enhance the Chat experience and offer productivity tools through the MCP protocol. It supports multiple LLMs like OpenAI, Claude, Qwen, Deepseek, GLM, Ollama. The platform includes a built-in MCP plugin market for easy installation and also allows manual installation of third-party MCPs. Features include Windows and MacOS support, resource support, tools support, English and Chinese language support, built-in MCP client 'hypertools', 'fetch' + 'search', Bot support, Artifacts rendering, KaTeX for mathematical formulas, WebDAV synchronization, and a MCP plugin market. Future plans include permission pop-up, scheduled tasks support, Projects + RAG support, tools implementation by LLM, and a local shell + nodejs + js on web runtime environment.

llama-api-server

This project aims to create a RESTful API server compatible with the OpenAI API using open-source backends like llama/llama2. With this project, various GPT tools/frameworks can be compatible with your own model. Key features include: - **Compatibility with OpenAI API**: The API server follows the OpenAI API structure, allowing seamless integration with existing tools and frameworks. - **Support for Multiple Backends**: The server supports both llama.cpp and pyllama backends, providing flexibility in model selection. - **Customization Options**: Users can configure model parameters such as temperature, top_p, and top_k to fine-tune the model's behavior. - **Batch Processing**: The API supports batch processing for embeddings, enabling efficient handling of multiple inputs. - **Token Authentication**: The server utilizes token authentication to secure access to the API. This tool is particularly useful for developers and researchers who want to integrate large language models into their applications or explore custom models without relying on proprietary APIs.

WilliamButcherBot

WilliamButcherBot is a Telegram Group Manager Bot and Userbot written in Python using Pyrogram. It provides features for managing Telegram groups and users, with ready-to-use methods available. The bot requires Python 3.9, Telegram API Key, Telegram Bot Token, and MongoDB URI. Users can install it locally or on a VPS, run it directly, generate Pyrogram session for Heroku, or use Docker for deployment. Additionally, users can write new modules to extend the bot's functionality by adding them to the wbb/modules/ directory.

R2R

R2R (RAG to Riches) is a fast and efficient framework for serving high-quality Retrieval-Augmented Generation (RAG) to end users. The framework is designed with customizable pipelines and a feature-rich FastAPI implementation, enabling developers to quickly deploy and scale RAG-based applications. R2R was conceived to bridge the gap between local LLM experimentation and scalable production solutions. **R2R is to LangChain/LlamaIndex what NextJS is to React**. A JavaScript client for R2R deployments can be found here. ### Key Features * **🚀 Deploy** : Instantly launch production-ready RAG pipelines with streaming capabilities. * **🧩 Customize** : Tailor your pipeline with intuitive configuration files. * **🔌 Extend** : Enhance your pipeline with custom code integrations. * **⚖️ Autoscale** : Scale your pipeline effortlessly in the cloud using SciPhi. * **🤖 OSS** : Benefit from a framework developed by the open-source community, designed to simplify RAG deployment.

telegram-deepseek-bot

This repository contains a Telegram bot built with Golang that integrates with DeepSeek API to provide AI-powered responses. The bot supports streaming replies, making interactions feel more natural and dynamic. It offers features like AI responses, streaming output, command handling, and easy deployment. Users can configure the bot via environment variables for customization. The bot can be deployed locally or on a cloud server, and it supports custom commands and real-time responses.

genius-ai

Genius is a modern Next.js 14 SaaS AI platform that provides a comprehensive folder structure for app development. It offers features like authentication, dashboard management, landing pages, API integration, and more. The platform is built using React JS, Next JS, TypeScript, Tailwind CSS, and integrates with services like Netlify, Prisma, MySQL, and Stripe. Genius enables users to create AI-powered applications with functionalities such as conversation generation, image processing, code generation, and more. It also includes features like Clerk authentication, OpenAI integration, Replicate API usage, Aiven database connectivity, and Stripe API/webhook setup. The platform is fully configurable and provides a seamless development experience for building AI-driven applications.

ClaudeBar

ClaudeBar is a macOS menu bar application that monitors AI coding assistant usage quotas. It allows users to keep track of their usage of Claude, Codex, Gemini, GitHub Copilot, Antigravity, and Z.ai at a glance. The application offers multi-provider support, real-time quota tracking, multiple themes, visual status indicators, system notifications, auto-refresh feature, and keyboard shortcuts for quick access. Users can customize monitoring by toggling individual providers on/off and receive alerts when quota status changes. The tool requires macOS 15+, Swift 6.2+, and CLI tools installed for the providers to be monitored.

conduit

Conduit is an open-source, cross-platform mobile application for Open-WebUI, providing a native mobile experience for interacting with your self-hosted AI infrastructure. It supports real-time chat, model selection, conversation management, markdown rendering, theme support, voice input, file uploads, multi-modal support, secure storage, folder management, and tools invocation. Conduit offers multiple authentication flows and follows a clean architecture pattern with Riverpod for state management, Dio for HTTP networking, WebSocket for real-time streaming, and Flutter Secure Storage for credential management.

browser4

Browser4 is a lightning-fast, coroutine-safe browser designed for AI integration with large language models. It offers ultra-fast automation, deep web understanding, and powerful data extraction APIs. Users can automate the browser, extract data at scale, and perform tasks like summarizing products, extracting product details, and finding specific links. The tool is developer-friendly, supports AI-powered automation, and provides advanced features like X-SQL for precise data extraction. It also offers RPA capabilities, browser control, and complex data extraction with X-SQL. Browser4 is suitable for web scraping, data extraction, automation, and AI integration tasks.

z-ai-sdk-python

Z.ai Open Platform Python SDK is the official Python SDK for Z.ai's large model open interface, providing developers with easy access to Z.ai's open APIs. The SDK offers core features like chat completions, embeddings, video generation, audio processing, assistant API, and advanced tools. It supports various functionalities such as speech transcription, text-to-video generation, image understanding, and structured conversation handling. Developers can customize client behavior, configure API keys, and handle errors efficiently. The SDK is designed to simplify AI interactions and enhance AI capabilities for developers.

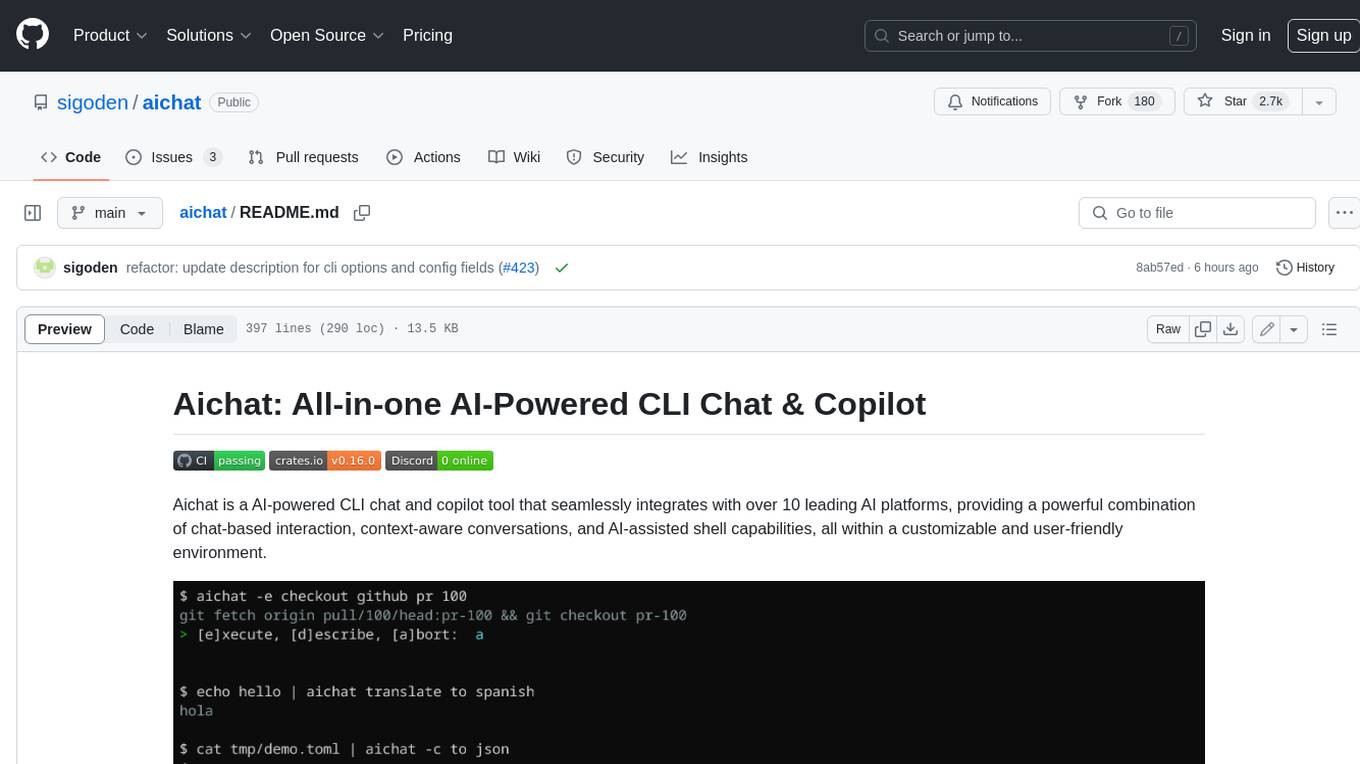

aichat

Aichat is an AI-powered CLI chat and copilot tool that seamlessly integrates with over 10 leading AI platforms, providing a powerful combination of chat-based interaction, context-aware conversations, and AI-assisted shell capabilities, all within a customizable and user-friendly environment.

For similar tasks

retro-aim-server

Retro AIM Server is an instant messaging server that revives AOL Instant Messenger clients from the 2000s. It supports Windows AIM client versions 5.0-5.9, away messages, buddy icons, buddy list, chat rooms, instant messaging, user profiles, blocking/visibility toggle/idle notification, and warning. The Management API provides functionality for administering the server, including listing users, creating users, changing passwords, and listing active sessions.

For similar jobs

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

ai-on-gke

This repository contains assets related to AI/ML workloads on Google Kubernetes Engine (GKE). Run optimized AI/ML workloads with Google Kubernetes Engine (GKE) platform orchestration capabilities. A robust AI/ML platform considers the following layers: Infrastructure orchestration that support GPUs and TPUs for training and serving workloads at scale Flexible integration with distributed computing and data processing frameworks Support for multiple teams on the same infrastructure to maximize utilization of resources

tidb

TiDB is an open-source distributed SQL database that supports Hybrid Transactional and Analytical Processing (HTAP) workloads. It is MySQL compatible and features horizontal scalability, strong consistency, and high availability.

nvidia_gpu_exporter

Nvidia GPU exporter for prometheus, using `nvidia-smi` binary to gather metrics.

tracecat

Tracecat is an open-source automation platform for security teams. It's designed to be simple but powerful, with a focus on AI features and a practitioner-obsessed UI/UX. Tracecat can be used to automate a variety of tasks, including phishing email investigation, evidence collection, and remediation plan generation.

openinference

OpenInference is a set of conventions and plugins that complement OpenTelemetry to enable tracing of AI applications. It provides a way to capture and analyze the performance and behavior of AI models, including their interactions with other components of the application. OpenInference is designed to be language-agnostic and can be used with any OpenTelemetry-compatible backend. It includes a set of instrumentations for popular machine learning SDKs and frameworks, making it easy to add tracing to your AI applications.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

kong

Kong, or Kong API Gateway, is a cloud-native, platform-agnostic, scalable API Gateway distinguished for its high performance and extensibility via plugins. It also provides advanced AI capabilities with multi-LLM support. By providing functionality for proxying, routing, load balancing, health checking, authentication (and more), Kong serves as the central layer for orchestrating microservices or conventional API traffic with ease. Kong runs natively on Kubernetes thanks to its official Kubernetes Ingress Controller.