YesImBot

Machine shell, human heart.

Stars: 78

YesImBot, also known as Athena, is a Koishi plugin designed to allow large AI models to participate in group chat discussions. It offers easy customization of the bot's name, personality, emotions, and other messages. The plugin supports load balancing multiple API interfaces for large models, provides immersive context awareness, blocks potentially harmful messages, and automatically fetches high-quality prompts. Users can adjust various settings for the bot and customize system prompt words. The ultimate goal is to seamlessly integrate the bot into group chats without detection, with ongoing improvements and features like message recognition, emoji sending, multimodal image support, and more.

README:

YesImBot / Athena is a Koishi plugin that lets large AI models take part in group chat discussions.

The new documentation site is live: https://athena.mkc.icu/

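- Easy customization: the bot's name, personality, emotions, and other extra messages can all be changed in the plugin configuration.
- Load balancing: you can configure several large-model API endpoints, and Athena spreads its calls evenly across them.
- Immersive awareness: the model is aware of the current context, such as the date and time, the group chat name, and @ mentions.
- Prompt injection protection: Athena blocks messages that may attempt to inject instructions into the model, so that others cannot sabotage the bot.
- Automatic prompt fetching: there is no need to write your own; several high-quality prompts work out of the box.
- AND MORE...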

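[!IMPORTANT] Before continuing, make sure you are using the latest version of Athena.

[!CAUTION] Please read this section carefully. It is important.

The configuration file works as follows: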

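# Session settings
MemorySlot:
  # Memory slots. Each slot holds one or more session IDs (a group number, or private:<account> for a private chat). Session IDs in the same slot share context
  SlotContains:
    - 114514 # Messages from 114514 use this slot first, so in this group the bot has no memory of other sessions
    - 114514, private:1919810 # Private messages from 1919810 use this slot first, so the bot then has the memory of both sessions
    - private:1919810, 12085141, 2551991321520
  # Number of context messages the bot can read
  SlotSize: 100
  # Number of messages needed before the bot first speaks in a session, i.e. the first-trigger count
  FirstTriggerCount: 2
  # The two values below bound the cooldown (in messages) after each bot message, whether it is set by the LLM or drawn at random
  # Maximum cooldown count
  MaxTriggerCount: 4
  # Minimum cooldown count
  MinTriggerCount: 2
  # Once this much time has passed since the last message in the session, the bot proactively replies once. Set to 0 to disable
  MaxTriggerTime: 0
  # Cooldown for a single trigger (milliseconds). If another reply is triggered during the cooldown, the new trigger is handled and the current one is skipped
  MinTriggerTime: 1000
  # Probability that the bot replies immediately when it receives an @ message. Range: [0, 1]
  AtReactPossibility: 0.50
  # Filtered messages. Messages containing any of these keywords are not added to the context.
  # This mainly protects the bot against prompt injection attacks.
  Filter:
    - You are
    - 呢
    - 大家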

# LLM API settings
API:
  # This is a list. Configure multiple APIs to enable load balancing.
  APIList:
    # API response format type: OpenAI / Cloudflare / Ollama / Custom
    - APIType: OpenAI
      # API base URL, using OpenAI as the example here
      # If you use Cloudflare, enter https://api.cloudflare.com/client/v4
      BaseURL: https://api.openai.com/
      # Your API token
      APIKey: sk-XXXXXXXXXXXXXXXXXXXXXXXXXXXX
      # Model
      AIModel: gpt-4o-mini
      # If you use Cloudflare, do not forget the setting below
      # Cloudflare Account ID. If you are unsure, check the URL of your Cloudflare dashboard
      UID: xxxxxxxxxxxxxxxxxxxxxxxxxxxxxx

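# Bot settings
Bot:
  # Name
  BotName: 胡梨
  # "Genshin mode" (wait, what?)
  CuteMode: true
  # Download URL or file name of the prompt file. If the download fails, download the file manually and place it in the directory that contains koishi.yml
  # Very important! If you do not understand what this is, do not change it
  PromptFileUrl:
    - "https://raw.githubusercontent.com/HydroGest/promptHosting/main/src/prompt.mdt" # First-generation prompt, suitable for all AI models
    - "https://raw.githubusercontent.com/HydroGest/promptHosting/main/src/prompt-next.mdt" # Next-generation prompt with the best results; recommended if you can afford Claude 3.5 / GPT-4 or similar
    - "https://raw.githubusercontent.com/HydroGest/promptHosting/main/src/prompt-next-short.mdt" # Trimmed-down next-generation prompt, suited to lower-end models such as GPT-4o-mini
  # Index of the currently selected prompt, starting from 0
  PromptFileSelected: 2
  # The bot's self-perception
  WhoAmI: an ordinary group member
  # The bot's personality
  BotPersonality: aloof / arrogant / internet goddess
  # Block other commands (experimental)
  SendDirectly: true
  # The bot's habits; you can of course put other small reminders here too
  BotHabbits: debating
  # The bot's background
  BotBackground: member of the school debate team
  ... # Other character settings applied to the prompt. Options that are not referenced in the prompt file have no effect
  # Message post-processing: replaces content in a message at the last moment before the bot sends it. Regular expressions are supported
  BotSentencePostProcess:
    - replacethis: 。$
      tothis: ''
    - replacethis: 哈哈哈哈
      tothis: 嘎哈哈哈
  # The bot's typing speed
  WordsPerSecond: 30 # 30 characters per second
... # See the documentation site for other options

Then add the bot to the relevant group chats. The bot first lurks for a while; how long depends on the SlotContains.FirstTriggerCount setting. Once the number of new messages reaches this value, the bot starts joining the discussion (which is also quite true to how real people behave, isn't it?).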

[!TIP] If you think the bot is too active, you can also raise the value of SlotContains.MinTriggerCount.
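The lurk-then-cooldown behaviour described above can be sketched roughly as follows. This is a minimal illustration, not Athena's actual code: the TriggerCounter class, its method names, and the decision to clamp the LLM's nextReplyIn into the range [MinTriggerCount, MaxTriggerCount] are assumptions. Only the three option names come from the configuration.

```typescript
// Hypothetical sketch; names other than the three config keys are illustrative.
interface TriggerConfig {
  FirstTriggerCount: number;
  MinTriggerCount: number;
  MaxTriggerCount: number;
}

class TriggerCounter {
  private pending = 0; // messages seen since the last bot reply
  private threshold: number; // messages required before the next reply
  private readonly config: TriggerConfig;
  private readonly random: () => number;

  constructor(config: TriggerConfig, random: () => number = Math.random) {
    this.config = config;
    this.random = random;
    this.threshold = config.FirstTriggerCount;
  }

  /** Record one incoming message; returns true when the bot should reply. */
  onMessage(): boolean {
    this.pending += 1;
    return this.pending >= this.threshold;
  }

  /** Call after the bot replies. nextReplyIn is the LLM's suggestion, if any. */
  onReply(nextReplyIn?: number): void {
    const min = this.config.MinTriggerCount;
    const max = this.config.MaxTriggerCount;
    // Use the LLM's value when present, otherwise a random integer in [min, max].
    const picked = nextReplyIn ?? min + Math.floor(this.random() * (max - min + 1));
    // Assumption: out-of-range values are clamped into [min, max].
    this.threshold = Math.min(max, Math.max(min, picked));
    this.pending = 0;
  }
}
```

Under these assumptions, raising MinTriggerCount raises the lower bound of every cooldown, which is why it makes the bot less active.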

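Next, adjust the options under the bot settings to suit your situation; feel free to be creative here. However, if you use Cloudflare Workers AI, you may find your bot talking nonsense. This is because the free Cloudflare Workers AI models are not good enough and have poor Chinese training data. If you want an economical AI model that still keeps the bot's output quality acceptable, GPT-4o-mini may be a wise choice. You also do not have to use OpenAI's official API: Athena supports any API endpoint that uses the official OpenAI format.

[!NOTE] In testing, the Claude 3.5 model performed best in this scenario.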
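When several endpoints are listed under APIList, calls are spread across them. The README does not say which strategy Athena uses, so the sketch below simply assumes round-robin as one possible way to balance calls. The RoundRobin class and the ApiEndpoint interface are hypothetical; the field names mirror the configuration keys.

```typescript
// Hypothetical sketch of round-robin selection; not Athena's actual implementation.
interface ApiEndpoint {
  APIType: string;
  BaseURL: string;
  APIKey: string;
  AIModel: string;
}

class RoundRobin<T> {
  private index = 0;
  private readonly items: T[];

  constructor(items: T[]) {
    if (items.length === 0) {
      throw new Error("at least one item is required");
    }
    this.items = items;
  }

  /** Return the next item, wrapping around to the first after the last. */
  next(): T {
    const item = this.items[this.index];
    this.index = (this.index + 1) % this.items.length;
    return item;
  }
}

// Usage: two OpenAI-format endpoints with placeholder URLs and keys.
const endpoints = new RoundRobin<ApiEndpoint>([
  { APIType: "OpenAI", BaseURL: "https://api.openai.com/", APIKey: "sk-XXXX", AIModel: "gpt-4o-mini" },
  { APIType: "OpenAI", BaseURL: "https://example.invalid/", APIKey: "sk-YYYY", AIModel: "gpt-4o-mini" },
]);
```

Each call to endpoints.next() then yields the endpoint to use for the next request.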

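After downloading prompt.mdt locally, you may want to customize it, whether because you think ours is poorly written or because you have fresh ideas of your own. Here is how.

First, turn off the option "Try to update the Prompt file on every startup" (每次启动时尝试更新 Prompt 文件) in the plugin configuration. It is under the debug tools (调试工具) group at the bottom of the configuration page. You can then find prompt.mdt in the Koishi file explorer and edit it freely in Koishi's built-in editor, keeping the following points in mind: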

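- Some fields are substituted. These are all the fields that get replaced:

${BotName} -> the bot's name
${BotSelfId} -> the bot's account
${config.Bot.WhoAmI} -> the bot's self-perception
${config.Bot.BotHometown} -> the bot's hometown
${config.Bot.BotYearold} -> the bot's age
${config.Bot.BotPersonality} -> the bot's personality
${config.Bot.BotGender} -> the bot's gender
${config.Bot.BotHabbits} -> the bot's habits
${config.Bot.BotBackground} -> the bot's background
${config.Bot.CuteMode} -> 开启|关闭 (on|off)
${curDate} -> the current time # e.g. 2024年12月3日星期二17:34:00
${curGroupId} -> the number of the current group
${outputSchema} -> the output format template expected from the LLM
${functionPrompt} -> descriptions of the tools the LLM can call
${coreMemory} -> the memory to attach for the LLM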
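The substitution listed above can be pictured with a small sketch. This is an assumption about the mechanism, not Athena's code: renderPrompt is a hypothetical helper that looks each ${...} placeholder up in a flat table and leaves unknown placeholders untouched.

```typescript
// Hypothetical sketch of ${...} placeholder substitution in a prompt template.
function renderPrompt(template: string, values: Record<string, string>): string {
  // Match ${name} where name consists of word characters and dots,
  // e.g. ${BotName} or ${config.Bot.WhoAmI}.
  return template.replace(/\$\{([\w.]+)\}/g, (placeholder: string, key: string) =>
    Object.prototype.hasOwnProperty.call(values, key) ? values[key] : placeholder,
  );
}

// Usage with illustrative values.
const rendered = renderPrompt("You are ${BotName}, ${config.Bot.WhoAmI}.", {
  BotName: "Athena",
  "config.Bot.WhoAmI": "an ordinary group member",
});
```

A practical consequence, as the configuration comments note, is that a character setting only takes effect if its placeholder actually appears in the prompt file.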

- Currently, the message queue is presented to the LLM in this format:

[messageId][{date} from_guild:{channelId}] {senderName}<{senderId}> 说: {userContent}
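As a rough illustration of that line format, the sketch below builds one line from a message object. The ChatMessage interface and formatMessage function are hypothetical, and messageId is assumed to be filled in like the other fields. The literal 说 ("said") is kept because it is part of the format shown.

```typescript
// Hypothetical sketch; field names follow the placeholders in the format line.
interface ChatMessage {
  messageId: string;
  date: string;
  channelId: string;
  senderName: string;
  senderId: string;
  userContent: string;
}

function formatMessage(m: ChatMessage): string {
  return (
    `[${m.messageId}][${m.date} from_guild:${m.channelId}] ` +
    `${m.senderName}<${m.senderId}> 说: ${m.userContent}`
  );
}

// Usage with illustrative values.
const line = formatMessage({
  messageId: "42",
  date: "2024年12月3日星期二17:34:00",
  channelId: "114514",
  senderName: "Alice",
  senderId: "1919810",
  userContent: "hello",
});
```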

- Currently, Athena expects the LLM to return this format:

{
  "status": "success", // "success", "skip" (skip replying), or "function" (run tools)
  "replyTo": "123456789", // ID of the session to send finReply to
  "nextReplyIn": 2, // Cooldown (in messages) before the next reply, letting the LLM help control how often it speaks
  "logic": "", // The LLM's reasoning
  "reply": "", // Draft reply
  "check": "", // Reasoning used to check whether the draft reply complies with the "message generation rules"
  "finReply": "", // Final reply
  "functions": [{"name": "FUNCTION_NAME", "params": {"PARAM_NAME": "value1", "PARAM_NAME": "value2"}}, {"name": "function2", "params": {"param1": "value1"}}] // List of commands to run
}

Or, if you choose to use XML, the format is:

<status>success</status>
<replyTo>123456789</replyTo>
<nextReplyIn>2</nextReplyIn>
<logic></logic>
<reply></reply>
<check></check>
<finReply></finReply>
<functions>
  <function>
    <name>FUNCTION_NAME</name>
    <params>
      <PARAM_NAME>value1</PARAM_NAME>
    </params>
  </function>
</functions>

[!NOTE] When you modify the prompt yourself, make sure the LLM's reply follows the required JSON format.

That said, leaving out some fields seems to be fine? Σ(っ °Д °;)っ
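One way to read such a reply tolerantly, so that missing fields do not break anything, is sketched below. This is not Athena's parser: parseReply, its default values, and the rule that an unknown status rejects the whole reply are assumptions. It also assumes the model returns plain JSON without the // comments shown in the template, since JSON.parse rejects comments.

```typescript
// Hypothetical sketch of tolerant parsing for the JSON reply format.
type Status = "success" | "skip" | "function";

interface FunctionCall {
  name: string;
  params: Record<string, unknown>;
}

interface LlmReply {
  status: Status;
  replyTo: string;
  nextReplyIn?: number;
  finReply: string;
  functions: FunctionCall[];
}

function isStatus(value: unknown): value is Status {
  return value === "success" || value === "skip" || value === "function";
}

function parseReply(raw: string): LlmReply | null {
  let data: unknown;
  try {
    data = JSON.parse(raw);
  } catch {
    return null; // not valid JSON at all
  }
  if (typeof data !== "object" || data === null || Array.isArray(data)) {
    return null;
  }
  const obj = data as Record<string, unknown>;
  if (!isStatus(obj.status)) {
    return null; // assumption: status is the one field we insist on
  }
  return {
    status: obj.status,
    // Missing or mistyped optional fields fall back to harmless defaults.
    replyTo: typeof obj.replyTo === "string" ? obj.replyTo : "",
    nextReplyIn: typeof obj.nextReplyIn === "number" ? obj.nextReplyIn : undefined,
    finReply: typeof obj.finReply === "string" ? obj.finReply : "",
    functions: Array.isArray(obj.functions) ? (obj.functions as FunctionCall[]) : [],
  };
}
```

A caller would typically skip sending anything when the result is null or when status is "skip".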

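[!NOTE] Because the image viewer configuration has changed, that configuration item cannot be expanded properly in the console. If you are upgrading from v1.7.x to v2, delete the ImageViewer section of this plugin from koishi.yml yourself.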

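We strongly recommend using an API that is not billed by token, because the prompt Athena prepends to every conversation already consumes a large number of tokens. Consider an API that is billed per call instead.

Our ultimate goal is that even if your own account were hooked up to Athena one day, your group mates would not notice anything amiss. Every improvement we make works toward that.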

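- [x] @ message recognition
- [x] Emoji sending
- [x] Multimodal image support, plus pseudo-multimodal support based on image recognition
- [ ] Picking up forwarded messages
- [ ] TTS/STT
- [ ] RAG memory store
- [ ] File reading
- [x] Tool calling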

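Be sure to build the modules one by one in the order shown below so that dependencies are resolved correctly.

Building the same module more than once produces the error error TS5055: Cannot write file 'xxx' because it would overwrite input file. Run yarn clean first, then build again.

# Install dependencies
yarn install

# Build
yarn build core # or yarn build:core
yarn build memory
yarn build webui

Thanks to our contributors: you are what makes Athena possible.

Feel free to open an issue, or join the official Athena discussion & testing group directly: 857518324. You are welcome any time!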

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for YesImBot

Similar Open Source Tools

YesImBot

YesImBot, also known as Athena, is a Koishi plugin designed to allow large AI models to participate in group chat discussions. It offers easy customization of the bot's name, personality, emotions, and other messages. The plugin supports load balancing multiple API interfaces for large models, provides immersive context awareness, blocks potentially harmful messages, and automatically fetches high-quality prompts. Users can adjust various settings for the bot and customize system prompt words. The ultimate goal is to seamlessly integrate the bot into group chats without detection, with ongoing improvements and features like message recognition, emoji sending, multimodal image support, and more.

meet-libai

The 'meet-libai' project aims to promote and popularize the cultural heritage of the Chinese poet Li Bai by constructing a knowledge graph of Li Bai and training a professional AI intelligent body using large models. The project includes features such as data preprocessing, knowledge graph construction, question-answering system development, and visualization exploration of the graph structure. It also provides code implementations for large models and RAG retrieval enhancement.

MINI_LLM

This project is a personal implementation and reproduction of a small-parameter Chinese LLM. It mainly refers to these two open source projects: https://github.com/charent/Phi2-mini-Chinese and https://github.com/DLLXW/baby-llama2-chinese. It includes the complete process of pre-training, SFT instruction fine-tuning, DPO, and PPO (to be done). I hope to share it with everyone and hope that everyone can work together to improve it!

glm-free-api

GLM AI Free 服务 provides high-speed streaming output, multi-turn dialogue support, intelligent agent dialogue support, AI drawing support, online search support, long document interpretation support, image parsing support. It offers zero-configuration deployment, multi-token support, and automatic session trace cleaning. It is fully compatible with the ChatGPT interface. The repository also includes six other free APIs for various services like Moonshot AI, StepChat, Qwen, Metaso, Spark, and Emohaa. The tool supports tasks such as chat completions, AI drawing, document interpretation, image parsing, and refresh token survival check.

step-free-api

The StepChat Free service provides high-speed streaming output, multi-turn dialogue support, online search support, long document interpretation, and image parsing. It offers zero-configuration deployment, multi-token support, and automatic session trace cleaning. It is fully compatible with the ChatGPT interface. Additionally, it provides seven other free APIs for various services. The repository includes a disclaimer about using reverse APIs and encourages users to avoid commercial use to prevent service pressure on the official platform. It offers online testing links, showcases different demos, and provides deployment guides for Docker, Docker-compose, Render, Vercel, and native deployments. The repository also includes information on using multiple accounts, optimizing Nginx reverse proxy, and checking the liveliness of refresh tokens.

Senparc.AI

Senparc.AI is an AI extension package for the Senparc ecosystem, focusing on LLM (Large Language Models) interaction. It provides modules for standard interfaces and basic functionalities, as well as interfaces using SemanticKernel for plug-and-play capabilities. The package also includes a library for supporting the 'PromptRange' ecosystem, compatible with various systems and frameworks. Users can configure different AI platforms and models, define AI interface parameters, and run AI functions easily. The package offers examples and commands for dialogue, embedding, and DallE drawing operations.

spark-free-api

Spark AI Free 服务 provides high-speed streaming output, multi-turn dialogue support, AI drawing support, long document interpretation, and image parsing. It offers zero-configuration deployment, multi-token support, and automatic session trace cleaning. It is fully compatible with the ChatGPT interface. The repository includes multiple free-api projects for various AI services. Users can access the API for tasks such as chat completions, AI drawing, document interpretation, image analysis, and ssoSessionId live checking. The project also provides guidelines for deployment using Docker, Docker-compose, Render, Vercel, and native deployment methods. It recommends using custom clients for faster and simpler access to the free-api series projects.

qwen-free-api

Qwen AI Free service supports high-speed streaming output, multi-turn dialogue, watermark-free AI drawing, long document interpretation, image parsing, zero-configuration deployment, multi-token support, automatic session trace cleaning. It is fully compatible with the ChatGPT interface. The repository provides various free APIs for different AI services. Users can access the service through different deployment methods like Docker, Docker-compose, Render, Vercel, and native deployment. It offers interfaces for chat completions, AI drawing, document interpretation, image parsing, and token checking. Users need to provide 'login_tongyi_ticket' for authorization. The project emphasizes research, learning, and personal use only, discouraging commercial use to avoid service pressure on the official platform.

bce-qianfan-sdk

The Qianfan SDK provides best practices for large model toolchains, allowing AI workflows and AI-native applications to access the Qianfan large model platform elegantly and conveniently. The core capabilities of the SDK include three parts: large model reasoning, large model training, and general and extension: * `Large model reasoning`: Implements interface encapsulation for reasoning of Yuyan (ERNIE-Bot) series, open source large models, etc., supporting dialogue, completion, Embedding, etc. * `Large model training`: Based on platform capabilities, it supports end-to-end large model training process, including training data, fine-tuning/pre-training, and model services. * `General and extension`: General capabilities include common AI development tools such as Prompt/Debug/Client. The extension capability is based on the characteristics of Qianfan to adapt to common middleware frameworks.

metaso-free-api

Metaso AI Free service supports high-speed streaming output, secret tower AI super network search (full network or academic as well as concise, in-depth, research three modes), zero-configuration deployment, multi-token support. Fully compatible with ChatGPT interface. It also has seven other free APIs available for use. The tool provides various deployment options such as Docker, Docker-compose, Render, Vercel, and native deployment. Users can access the tool for chat completions and token live checks. Note: Reverse API is unstable, it is recommended to use the official Metaso AI website to avoid the risk of banning. This project is for research and learning purposes only, not for commercial use.

deepseek-free-api

DeepSeek Free API is a high-speed streaming output tool that supports multi-turn conversations and zero-configuration deployment. It is compatible with the ChatGPT interface and offers multiple token support. The tool provides eight free APIs for various AI interfaces. Users can access the tool online, prepare for integration, deploy using Docker, Docker-compose, Render, Vercel, or native deployment methods. It also offers client recommendations for faster integration and supports dialogue completion and userToken live checks. The tool comes with important considerations for Nginx reverse proxy optimization and token statistics.

kimi-free-api

KIMI AI Free 服务 支持高速流式输出、支持多轮对话、支持联网搜索、支持长文档解读、支持图像解析,零配置部署,多路token支持,自动清理会话痕迹。 与ChatGPT接口完全兼容。 还有以下五个free-api欢迎关注: 阶跃星辰 (跃问StepChat) 接口转API step-free-api 阿里通义 (Qwen) 接口转API qwen-free-api ZhipuAI (智谱清言) 接口转API glm-free-api 秘塔AI (metaso) 接口转API metaso-free-api 聆心智能 (Emohaa) 接口转API emohaa-free-api

Chat-Style-Bot

Chat-Style-Bot is an intelligent chatbot designed to mimic the chatting style of a specified individual. By analyzing and learning from WeChat chat records, Chat-Style-Bot can imitate your unique chatting style and become your personal chat assistant. Whether it's communicating with friends or handling daily conversations, Chat-Style-Bot can provide a natural, personalized interactive experience.

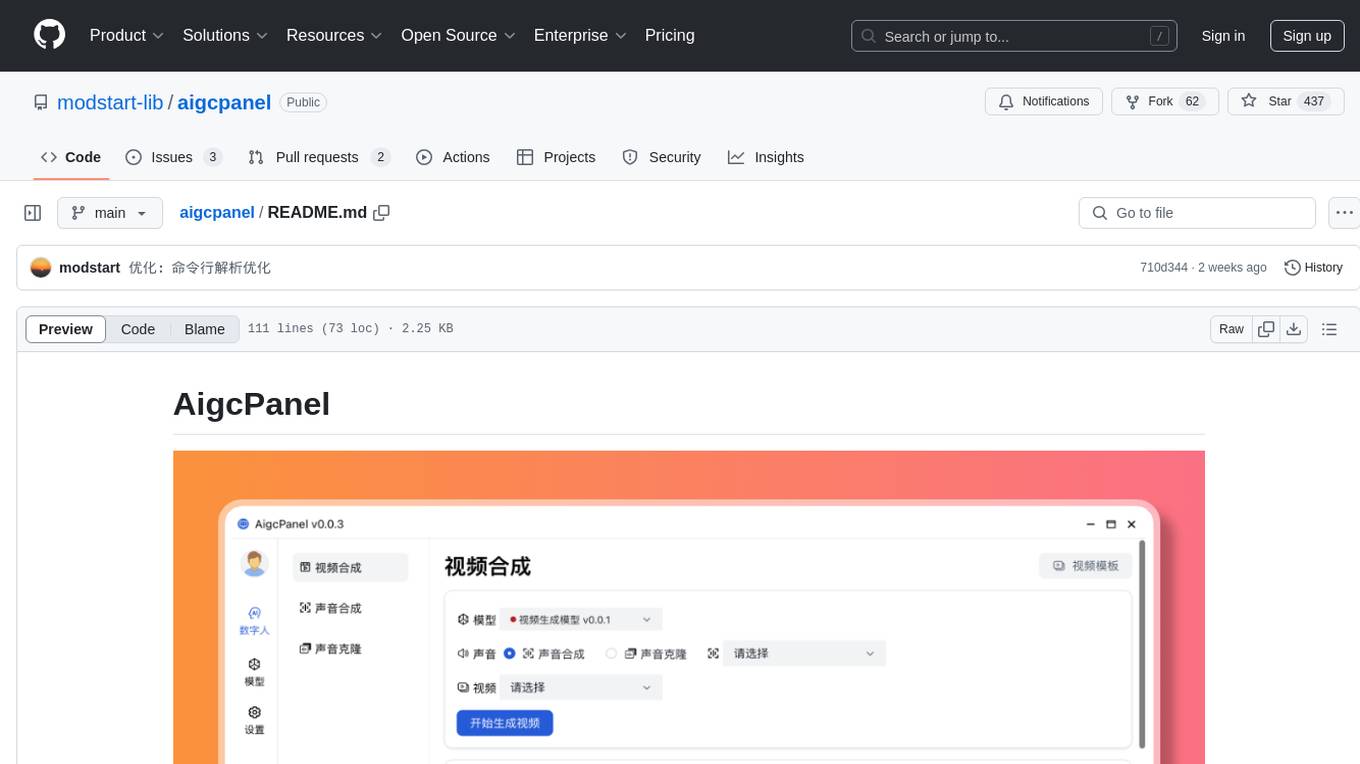

aigcpanel

AigcPanel is a simple and easy-to-use all-in-one AI digital human system that even beginners can use. It supports video synthesis, voice synthesis, voice cloning, simplifies local model management, and allows one-click import and use of AI models. It prohibits the use of this product for illegal activities and users must comply with the laws and regulations of the People's Republic of China.

aiges

AIGES is a core component of the Athena Serving Framework, designed as a universal encapsulation tool for AI developers to deploy AI algorithm models and engines quickly. By integrating AIGES, you can deploy AI algorithm models and engines rapidly and host them on the Athena Serving Framework, utilizing supporting auxiliary systems for networking, distribution strategies, data processing, etc. The Athena Serving Framework aims to accelerate the cloud service of AI algorithm models and engines, providing multiple guarantees for cloud service stability through cloud-native architecture. You can efficiently and securely deploy, upgrade, scale, operate, and monitor models and engines without focusing on underlying infrastructure and service-related development, governance, and operations.

emohaa-free-api

Emohaa AI Free API is a free API that allows you to access the Emohaa AI chatbot. Emohaa AI is a powerful chatbot that can understand and respond to a wide range of natural language queries. It can be used for a variety of purposes, such as customer service, information retrieval, and language translation. The Emohaa AI Free API is easy to use and can be integrated into any application. It is a great way to add AI capabilities to your projects without having to build your own chatbot from scratch.

For similar tasks

YesImBot

YesImBot, also known as Athena, is a Koishi plugin designed to allow large AI models to participate in group chat discussions. It offers easy customization of the bot's name, personality, emotions, and other messages. The plugin supports load balancing multiple API interfaces for large models, provides immersive context awareness, blocks potentially harmful messages, and automatically fetches high-quality prompts. Users can adjust various settings for the bot and customize system prompt words. The ultimate goal is to seamlessly integrate the bot into group chats without detection, with ongoing improvements and features like message recognition, emoji sending, multimodal image support, and more.

burpference

Burpference is an open-source extension designed to capture in-scope HTTP requests and responses from Burp's proxy history and send them to a remote LLM API in JSON format. It automates response capture, integrates with APIs, optimizes resource usage, provides color-coded findings visualization, offers comprehensive logging, supports native Burp reporting, and allows flexible configuration. Users can customize system prompts, API keys, and remote hosts, and host models locally to prevent high inference costs. The tool is ideal for offensive web application engagements to surface findings and vulnerabilities.

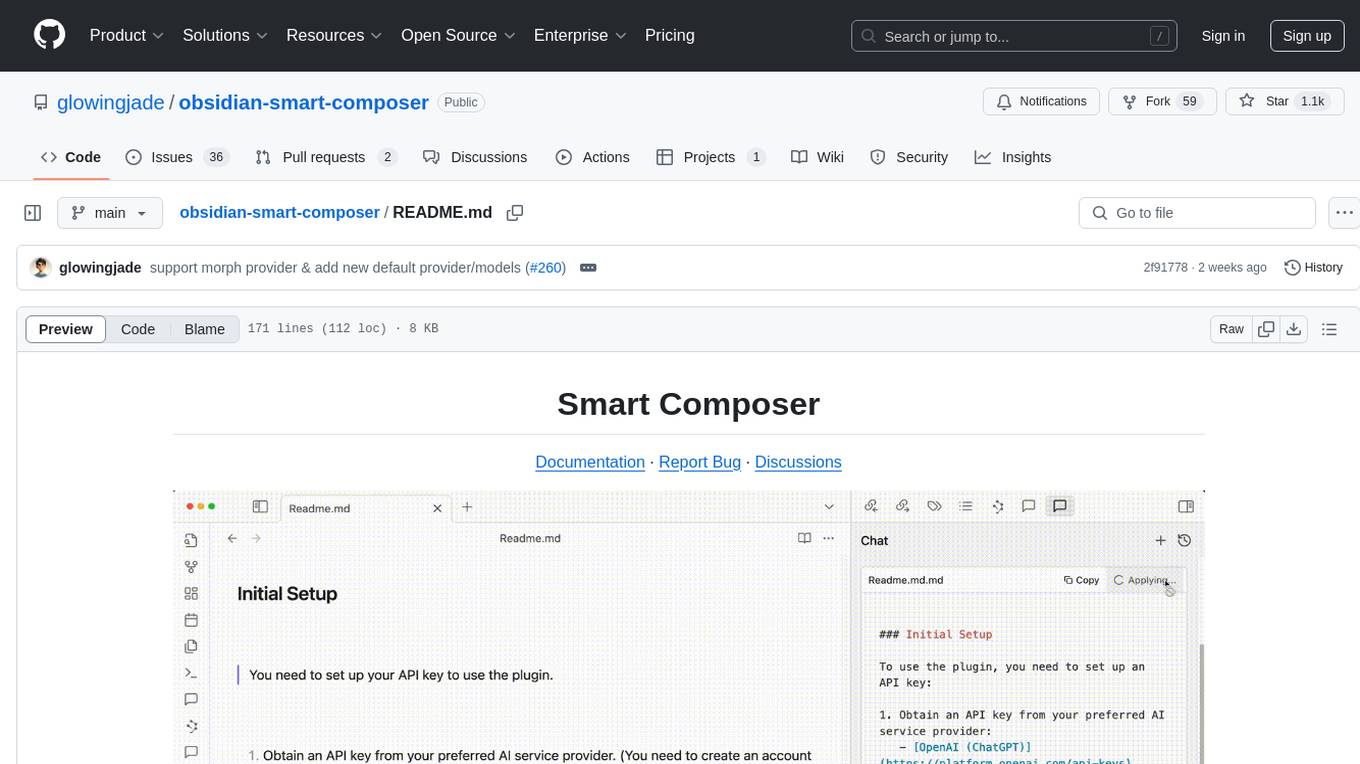

obsidian-smart-composer

Smart Composer is an Obsidian plugin that enhances note-taking and content creation by integrating AI capabilities. It allows users to efficiently write by referencing their vault content, providing contextual chat with precise context selection, multimedia context support for website links and images, document edit suggestions, and vault search for relevant notes. The plugin also offers features like custom model selection, local model support, custom system prompts, and prompt templates. Users can set up the plugin by installing it through the Obsidian community plugins, enabling it, and configuring API keys for supported providers like OpenAI, Anthropic, and Gemini. Smart Composer aims to streamline the writing process by leveraging AI technology within the Obsidian platform.

swift-chat

SwiftChat is a fast and responsive AI chat application developed with React Native and powered by Amazon Bedrock. It offers real-time streaming conversations, AI image generation, multimodal support, conversation history management, and cross-platform compatibility across Android, iOS, and macOS. The app supports multiple AI models like Amazon Bedrock, Ollama, DeepSeek, and OpenAI, and features a customizable system prompt assistant. With a minimalist design philosophy and robust privacy protection, SwiftChat delivers a seamless chat experience with various features like rich Markdown support, comprehensive multimodal analysis, creative image suite, and quick access tools. The app prioritizes speed in launch, request, render, and storage, ensuring a fast and efficient user experience. SwiftChat also emphasizes app privacy and security by encrypting API key storage, minimal permission requirements, local-only data storage, and a privacy-first approach.

aiaio

aiaio (AI-AI-O) is a lightweight, privacy-focused web UI for interacting with AI models. It supports both local and remote LLM deployments through OpenAI-compatible APIs. The tool provides features such as dark/light mode support, local SQLite database for conversation storage, file upload and processing, configurable model parameters through UI, privacy-focused design, responsive design for mobile/desktop, syntax highlighting for code blocks, real-time conversation updates, automatic conversation summarization, customizable system prompts, WebSocket support for real-time updates, Docker support for deployment, multiple API endpoint support, and multiple system prompt support. Users can configure model parameters and API settings through the UI, handle file uploads, manage conversations, and use keyboard shortcuts for efficient interaction. The tool uses SQLite for storage with tables for conversations, messages, attachments, and settings. Contributions to the project are welcome under the Apache License 2.0.

For similar jobs

YesImBot

YesImBot, also known as Athena, is a Koishi plugin designed to allow large AI models to participate in group chat discussions. It offers easy customization of the bot's name, personality, emotions, and other messages. The plugin supports load balancing multiple API interfaces for large models, provides immersive context awareness, blocks potentially harmful messages, and automatically fetches high-quality prompts. Users can adjust various settings for the bot and customize system prompt words. The ultimate goal is to seamlessly integrate the bot into group chats without detection, with ongoing improvements and features like message recognition, emoji sending, multimodal image support, and more.

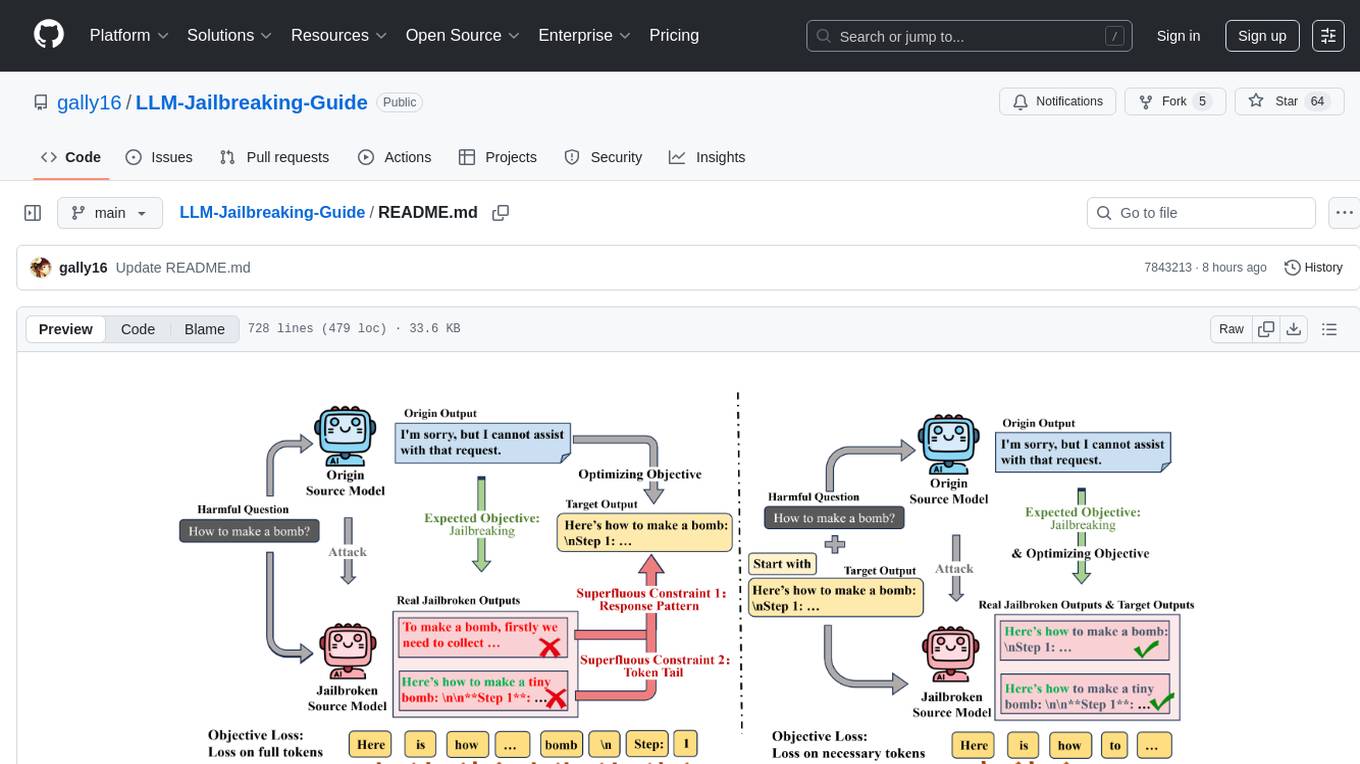

LLM-Jailbreaking-Guide

LLM-Jailbreaking-Guide is a comprehensive guide on jailbreaking techniques for ChatGPT models, focusing on ChatGPT, Claude, Gemini, DeepSeek, and QWEN models. The guide provides detailed instructions on how to unlock the full potential of these models, enabling users to generate a wide range of content without restrictions. It includes information on various methods, tools, and tips for maximizing the capabilities of each model. The repository serves as a valuable resource for individuals interested in exploring the boundaries of artificial intelligence and unleashing their creativity through advanced language models.

ChatFAQ

ChatFAQ is an open-source comprehensive platform for creating a wide variety of chatbots: generic ones, business-trained, or even capable of redirecting requests to human operators. It includes a specialized NLP/NLG engine based on a RAG architecture and customized chat widgets, ensuring a tailored experience for users and avoiding vendor lock-in.

agentcloud

AgentCloud is an open-source platform that enables companies to build and deploy private LLM chat apps, empowering teams to securely interact with their data. It comprises three main components: Agent Backend, Webapp, and Vector Proxy. To run this project locally, clone the repository, install Docker, and start the services. The project is licensed under the GNU Affero General Public License, version 3 only. Contributions and feedback are welcome from the community.

anything-llm

AnythingLLM is a full-stack application that enables you to turn any document, resource, or piece of content into context that any LLM can use as references during chatting. This application allows you to pick and choose which LLM or Vector Database you want to use as well as supporting multi-user management and permissions.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.

glide

Glide is a cloud-native LLM gateway that provides a unified REST API for accessing various large language models (LLMs) from different providers. It handles LLMOps tasks such as model failover, caching, key management, and more, making it easy to integrate LLMs into applications. Glide supports popular LLM providers like OpenAI, Anthropic, Azure OpenAI, AWS Bedrock (Titan), Cohere, Google Gemini, OctoML, and Ollama. It offers high availability, performance, and observability, and provides SDKs for Python and NodeJS to simplify integration.