Neosgenesis

https://dev.to/answeryt/the-demo-spell-and-production-dilemma-of-ai-agents-how-i-built-a-self-learning-agent-system-4okk

Stars: 1251

Neogenesis System is an advanced AI decision-making framework that enables agents to 'think about how to think'. It implements a metacognitive approach with real-time learning, tool integration, and multi-LLM support, allowing AI to make expert-level decisions in complex environments. Key features include metacognitive intelligence, tool-enhanced decisions, real-time learning, aha-moment breakthroughs, experience accumulation, and multi-LLM support.

README:

Neogenesis System is an advanced AI decision-making framework that enables agents to "think about how to think". Unlike traditional question-answer systems, it implements a metacognitive approach with real-time learning, tool integration, and multi-LLM support, allowing AI to make expert-level decisions in complex environments.

- 🧠 Metacognitive Intelligence: AI that thinks about "how to think"

- 🔧 Tool-Enhanced Decisions: Dynamic tool integration during decision-making

- 🔬 Real-time Learning: Learns during the thinking phase, not just after execution

- 💡 Aha-Moment Breakthroughs: Creative problem-solving when stuck

- 🏆 Experience Accumulation: Builds reusable decision templates from success

- 🤖 Multi-LLM Support: OpenAI, Anthropic, DeepSeek, Ollama with auto-failover

Traditional AI: Think → Execute → Learn

Neogenesis: Think → Verify → Learn → Optimize → Decide (all during the thinking phase)

graph LR

A[Seed Generation] --> B[Verification]

B --> C[Path Generation]

C --> D[Learning & Optimization]

D --> E[Final Decision]

D --> C

style D fill:#fff9c4

Value: AI learns and optimizes before execution, avoiding costly mistakes and improving decision quality.
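The Seed → Verify → Path Generation → Learning & Optimization → Decision flow, including the loop from learning back to path generation, can be sketched as a plain Python loop. This is a minimal illustration under stated assumptions, not the project's code: `Path`, `decide`, the callables passed in, and the confidence-threshold stopping rule are all hypothetical, and the learning step is reduced to remembering the best-scoring path seen so far.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class Path:
    description: str
    score: float  # hypothetical estimated quality in [0, 1]


def decide(
    seed: str,
    verify: Callable[[str], bool],
    generate_paths: Callable[[str, int], List[Path]],
    confidence_threshold: float = 0.8,
    max_rounds: int = 3,
) -> Path:
    """Hypothetical seed -> verify -> generate -> optimize -> decide loop."""
    if not verify(seed):
        raise ValueError("seed failed verification")
    best: Optional[Path] = None
    for round_no in range(max_rounds):
        # Path generation; later rounds could use what earlier rounds revealed.
        paths = generate_paths(seed, round_no)
        candidate = max(paths, key=lambda p: p.score)
        # "Learning & optimization", simplified to keeping the best path so far.
        if best is None or candidate.score > best.score:
            best = candidate
        if best.score >= confidence_threshold:
            break  # confident enough: stop looping back to path generation
    if best is None:
        raise ValueError("max_rounds must be >= 1")
    return best


calls: List[int] = []


def toy_generator(seed: str, round_no: int) -> List[Path]:
    # Stand-in for LLM-based path generation: quality improves each round.
    calls.append(round_no)
    return [Path(f"{seed}-round{round_no}", 0.3 + 0.3 * round_no), Path("fallback", 0.1)]


chosen = decide("microservices", verify=lambda s: bool(s), generate_paths=toy_generator)
print(chosen.description)
```

The loop stops as soon as a path clears the threshold, which is the "optimize before execution" idea in miniature: extra generation rounds are spent only while confidence is low.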

- Experience Accumulation: Learns which decision strategies work best in different contexts

- Golden Templates: Automatically identifies and reuses successful reasoning patterns

- Exploration vs Exploitation: Balances trying new approaches vs using proven methods
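Balancing exploration and exploitation is the classic multi-armed bandit problem, and the README later lists Thompson Sampling among its core algorithms. The sketch below is a textbook Beta-Bernoulli Thompson sampler over decision strategies, assuming each use of a strategy simply succeeds or fails; the strategy names and success rates are made up, and the class is not the project's `MABConverger`.

```python
import random
from typing import Dict, List


class ThompsonStrategySelector:
    """Beta-Bernoulli Thompson sampling over named decision strategies."""

    def __init__(self, strategies: List[str], seed: int = 0) -> None:
        # Beta(1, 1) prior: a uniform belief about each strategy's success rate.
        self.alpha: Dict[str, float] = {s: 1.0 for s in strategies}
        self.beta: Dict[str, float] = {s: 1.0 for s in strategies}
        self.rng = random.Random(seed)

    def select(self) -> str:
        # Draw a plausible success rate per strategy and pick the highest draw.
        # Uncertain strategies produce spread-out draws, so they still get explored.
        samples = {s: self.rng.betavariate(self.alpha[s], self.beta[s]) for s in self.alpha}
        return max(samples, key=samples.get)

    def update(self, strategy: str, success: bool) -> None:
        # Successes raise alpha, failures raise beta (posterior update).
        if success:
            self.alpha[strategy] += 1
        else:
            self.beta[strategy] += 1

    def mean(self, strategy: str) -> float:
        return self.alpha[strategy] / (self.alpha[strategy] + self.beta[strategy])


def simulate(rounds: int = 500) -> Dict[str, int]:
    true_rates = {"analytical": 0.8, "creative": 0.3, "systematic": 0.5}  # made-up rates
    selector = ThompsonStrategySelector(list(true_rates))
    env = random.Random(1)
    counts = {s: 0 for s in true_rates}
    for _ in range(rounds):
        choice = selector.select()
        counts[choice] += 1
        selector.update(choice, env.random() < true_rates[choice])
    return counts


counts = simulate()
print(counts)
```

Over time the best strategy dominates the selections, while weaker ones are still tried occasionally whenever their posterior leaves room for surprise.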

When conventional approaches fail, the system automatically:

- Activates creative problem-solving mode

- Generates unconventional thinking paths

- Breaks through decision deadlocks with innovative solutions
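The README does not define what counts as being "stuck". One plausible trigger, sketched here purely as an assumption, is a run of rounds in which even the best path's confidence stays below a floor; `AhaMomentDetector`, `confidence_floor`, and `patience` are hypothetical names.

```python
from collections import deque
from typing import Deque


class AhaMomentDetector:
    """Hypothetical stall detector: signal creative mode after repeated low-confidence rounds."""

    def __init__(self, confidence_floor: float = 0.4, patience: int = 3) -> None:
        self.confidence_floor = confidence_floor
        self.patience = patience
        self.recent: Deque[float] = deque(maxlen=patience)  # sliding window of best confidences

    def observe(self, best_confidence: float) -> bool:
        """Record this round's best-path confidence; return True if creative mode should activate."""
        self.recent.append(best_confidence)
        stalled = len(self.recent) == self.patience and all(
            c < self.confidence_floor for c in self.recent
        )
        if stalled:
            self.recent.clear()  # give the creative round a fresh window
        return stalled


detector = AhaMomentDetector(confidence_floor=0.4, patience=3)
signals = [detector.observe(c) for c in [0.3, 0.35, 0.2, 0.5, 0.1]]
print(signals)
```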

- Real-time Information: Integrates web search and verification tools during thinking

- Dynamic Tool Selection: Hybrid MAB+LLM approach for optimal tool choice

- Unified Tool Interface: LangChain-inspired tool abstraction for extensibility
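A unified tool interface usually means an abstract base class plus a registry that looks tools up by name and wraps every call in a uniform result object. The sketch below is an assumption modeled on names that appear in this README (`BaseTool`, `ToolRegistry`, and the `success` / `data` / `error_message` fields used by the later `execute_tool` example); it is not the project's actual implementation, and `EchoSearchTool` is a hypothetical offline stand-in for a web search tool.

```python
from abc import ABC, abstractmethod
from dataclasses import dataclass, field
from typing import Any, Dict, List, Optional


@dataclass
class ToolResult:
    success: bool
    data: Dict[str, Any] = field(default_factory=dict)
    error_message: Optional[str] = None


class BaseTool(ABC):
    name: str = "base"
    description: str = ""

    @abstractmethod
    def run(self, **kwargs: Any) -> ToolResult:
        """Execute the tool; concrete tools declare their own keyword arguments."""


class ToolRegistry:
    def __init__(self) -> None:
        self._tools: Dict[str, BaseTool] = {}
        self.calls = 0
        self.successes = 0

    def register(self, tool: BaseTool) -> None:
        if tool.name in self._tools:
            raise ValueError(f"tool {tool.name!r} already registered")
        self._tools[tool.name] = tool

    def list_tools(self) -> List[str]:
        return sorted(self._tools)

    def execute(self, name: str, **kwargs: Any) -> ToolResult:
        tool = self._tools.get(name)
        if tool is None:
            return ToolResult(success=False, error_message=f"unknown tool: {name}")
        self.calls += 1
        try:
            result = tool.run(**kwargs)
        except Exception as exc:  # surface tool crashes as failed results
            result = ToolResult(success=False, error_message=str(exc))
        self.successes += int(result.success)
        return result


class EchoSearchTool(BaseTool):
    """Hypothetical stand-in for a web search tool; returns fake results offline."""

    name = "web_search"
    description = "Toy search tool"

    def run(self, query: str, max_results: int = 3) -> ToolResult:
        results = [f"{query} #{i}" for i in range(max_results)]
        return ToolResult(success=True, data={"results": results})


registry = ToolRegistry()
registry.register(EchoSearchTool())
search_result = registry.execute("web_search", query="latest trends in cloud computing", max_results=2)
missing = registry.execute("idea_verification", idea="blockchain supply chain")
print(registry.list_tools(), search_result.success, missing.error_message)
```

Returning a failed `ToolResult` instead of raising keeps the decision loop running when a tool is missing or crashes, and the registry's call and success counters are the kind of statistics a bandit-based tool selector could learn from.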

- Python 3.8 or higher

- pip package manager

# Clone repository

git clone https://github.com/your-repo/neogenesis-system.git

cd neogenesis-system

# Create and activate virtual environment (recommended)

python -m venv venv

source venv/bin/activate # Windows: venv\Scripts\activate

# Install dependencies

pip install -r requirements.txt

Create a .env file in the project root:

# Configure one or more LLM providers (system auto-detects available ones)

DEEPSEEK_API_KEY="your_deepseek_api_key"

OPENAI_API_KEY="your_openai_api_key"

ANTHROPIC_API_KEY="your_anthropic_api_key"

# Launch demo menu

python start_demo.py

# Quick simulation demo (no API key needed)

python quick_demo.py

# Full interactive demo

python run_demo.py

from neogenesis_system.core.neogenesis_planner import NeogenesisPlanner
from neogenesis_system.cognitive_engine.reasoner import PriorReasoner
from neogenesis_system.cognitive_engine.path_generator import PathGenerator
from neogenesis_system.cognitive_engine.mab_converger import MABConverger

# Initialize components
planner = NeogenesisPlanner(
    prior_reasoner=PriorReasoner(),
    path_generator=PathGenerator(),
    mab_converger=MABConverger()
)

# Create a decision plan
plan = planner.create_plan(
    query="Design a scalable microservices architecture",
    memory=None,
    context={"domain": "system_design", "complexity": "high"}
)

print(f"Plan: {plan.thought}")
print(f"Actions: {len(plan.actions)}")

| Metric | Performance | Description |
|---|---|---|
| 🎯 Decision Accuracy | 85%+ | Based on validation data |
| ⚡ Response Time | 2-5 sec | Full five-stage process |
| 🧠 Path Generation | 95%+ | Success rate |
| 💡 Innovation Rate | 15%+ | Aha-moment breakthroughs |
| 🔧 Tool Integration | 92%+ | Success rate |
| 🤖 Multi-LLM Reliability | 99%+ | Provider failover |

MIT License - see LICENSE file.

- OpenAI, Anthropic, DeepSeek: LLM providers

- LangChain: Tool ecosystem inspiration

- Multi-Armed Bandit Theory: Algorithmic foundation

- Metacognitive Theory: Architecture inspiration

Email: [email protected]

🌟 If this project helps you, please give us a Star!

- 🔗 Node Coordination: Synchronize state across multiple Neogenesis instances

- 📡 Event Broadcasting: Real-time state change notifications

- ⚖️ Conflict Resolution: Intelligent merging of concurrent state modifications

- 🔄 Consensus Protocols: Ensure state consistency in distributed environments
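The README does not say how concurrent modifications are merged. As a deliberately simple, swapped-in illustration of conflict resolution, the sketch below uses a last-writer-wins rule with a deterministic tie-break, so every node that merges the same inputs reaches the same state. `VersionedValue` and `merge_states` are hypothetical names, and real clusters should not rely on wall-clock timestamps alone because clocks drift between machines.

```python
from dataclasses import dataclass
from typing import Any, Dict


@dataclass(frozen=True)
class VersionedValue:
    value: Any
    timestamp: float  # when the write happened (wall clock: an assumption, see above)
    node_id: str      # which node wrote it; used to break timestamp ties


def merge_states(
    local: Dict[str, VersionedValue], remote: Dict[str, VersionedValue]
) -> Dict[str, VersionedValue]:
    """Last-writer-wins merge; ties on timestamp go to the larger node_id."""
    merged = dict(local)
    for key, incoming in remote.items():
        current = merged.get(key)
        if current is None or (incoming.timestamp, incoming.node_id) > (current.timestamp, current.node_id):
            merged[key] = incoming
    return merged


node_1 = {
    "chosen_path": VersionedValue({"id": 5, "confidence": 0.93}, timestamp=100.0, node_id="node_1"),
    "session_id": VersionedValue("global_decision_001", timestamp=90.0, node_id="node_1"),
}
node_2 = {
    "chosen_path": VersionedValue({"id": 7, "confidence": 0.95}, timestamp=105.0, node_id="node_2"),
}
merged = merge_states(node_1, node_2)
print(merged["chosen_path"].value)
```

Because the comparison key `(timestamp, node_id)` is a total order, the merge is commutative: merging A into B gives the same result as merging B into A.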

import asyncio
import time

from neogenesis_system.langchain_integration.distributed_state import DistributedStateManager

# Configure distributed coordination
distributed_state = DistributedStateManager(
    node_id="neogenesis_node_1",
    cluster_nodes=["node_1:8001", "node_2:8002", "node_3:8003"],
    consensus_protocol="raft"
)

async def main():
    # Distribute decision state across cluster (await is only valid inside a coroutine)
    await distributed_state.broadcast_decision_update({
        "session_id": "global_decision_001",
        "chosen_path": {"id": 5, "confidence": 0.93},
        "timestamp": time.time()
    })

asyncio.run(main())

advanced_chains.py & chains.py - Sophisticated workflow orchestration:

- 🔄 Sequential Chains: Linear execution with state passing

- 🌟 Parallel Chains: Concurrent execution with result aggregation

- 🔀 Conditional Chains: Dynamic routing based on intermediate results

- 🔁 Loop Chains: Iterative processing with convergence criteria

- 🌳 Tree Chains: Hierarchical decision trees with pruning strategies

- 📊 Chain Analytics: Performance monitoring and bottleneck identification

- 🎯 Dynamic Routing: Intelligent path selection based on context

- ⚡ Parallel Execution: Multi-threaded chain processing

- 🛡️ Error Recovery: Graceful handling of chain failures with retry mechanisms
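Sequential chains pass accumulated state from step to step, while parallel chains run several steps on the same input and merge their outputs. The sketch below shows that composition pattern with plain `asyncio`; the `sequential` / `parallel` helpers and the toy steps are hypothetical and stand in for the project's `AdvancedChainComposer`, whose internals the README does not show.

```python
import asyncio
from typing import Any, Awaitable, Callable, Dict, List

State = Dict[str, Any]
Step = Callable[[State], Awaitable[State]]


def sequential(steps: List[Step]) -> Step:
    async def run(state: State) -> State:
        for step in steps:
            update = await step(state)
            state = {**state, **update}  # each step sees everything produced so far
        return state
    return run


def parallel(steps: List[Step]) -> Step:
    async def run(state: State) -> State:
        # All branches receive the same input and run concurrently.
        results = await asyncio.gather(*(step(state) for step in steps))
        merged: State = {}
        for partial in results:  # aggregate outputs in declaration order
            merged.update(partial)
        return merged
    return run


async def analyze_problem(state: State) -> State:
    return {"problem": f"scope of {state['project']}"}


async def evaluate_architecture(state: State) -> State:
    await asyncio.sleep(0.01)  # stand-in for an LLM or tool call
    return {"architecture": "microservices fits"}


async def assess_security(state: State) -> State:
    await asyncio.sleep(0.01)
    return {"security": "no blockers"}


async def recommend(state: State) -> State:
    return {"recommendation": f"proceed with {state['project']}"}


workflow = sequential([analyze_problem, parallel([evaluate_architecture, assess_security]), recommend])
result = asyncio.run(workflow({"project": "cloud_migration"}))
print(result)
```

Because a composed chain has the same type as a single step, a parallel sub-chain can sit inside a sequential chain, mirroring the `technical_analysis` sub-chain in the example that follows.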

import asyncio

from neogenesis_system.langchain_integration.advanced_chains import AdvancedChainComposer

# Create sophisticated decision workflow
composer = AdvancedChainComposer()

# Define parallel analysis chains
technical_analysis = composer.create_parallel_chain([
    "architecture_evaluation",
    "performance_analysis",
    "security_assessment"
])

# Define sequential decision chain
decision_workflow = composer.create_sequential_chain([
    "problem_analysis",
    technical_analysis,  # Parallel sub-chain
    "cost_benefit_analysis",
    "risk_assessment",
    "final_recommendation"
])

async def main():
    # Execute with state persistence (execute_chain is awaited, so call it inside a coroutine)
    return await composer.execute_chain(
        chain=decision_workflow,
        input_data={"project": "cloud_migration", "scale": "enterprise"},
        persist_state=True,
        session_id="migration_decision_001"
    )

result = asyncio.run(main())

execution_engines.py - High-performance parallel processing:

- 🎯 Task Scheduling: Intelligent workload distribution

- ⚡ Parallel Processing: Multi-core and distributed execution

- 📊 Resource Management: CPU, memory, and network optimization

- 🔄 Fault Tolerance: Automatic retry and failure recovery
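The example below configures `max_workers`, `execution_timeout`, and `retry_strategy="exponential_backoff"`. As a rough sketch of what those three settings usually mean (an assumption, not the engine's actual code), here is bounded concurrency with an `asyncio.Semaphore`, a per-task timeout with `asyncio.wait_for`, and retries whose delay doubles after each failure, with random jitter. `with_retries`, `run_parallel`, and `make_path_evaluator` are hypothetical names.

```python
import asyncio
import random
from typing import Awaitable, Callable, Dict, List, TypeVar

T = TypeVar("T")


async def with_retries(fn: Callable[[], Awaitable[T]], attempts: int = 4, base_delay: float = 0.1) -> T:
    if attempts < 1:
        raise ValueError("attempts must be >= 1")
    for attempt in range(attempts):
        try:
            return await fn()
        except Exception:
            if attempt == attempts - 1:
                raise  # out of retries: propagate the last error
            # Exponential backoff with jitter: roughly 0.1s, 0.2s, 0.4s, ...
            await asyncio.sleep(base_delay * (2 ** attempt) * random.uniform(0.5, 1.0))
    raise RuntimeError("unreachable")


async def run_parallel(
    tasks: List[Callable[[], Awaitable[T]]], max_workers: int = 8, timeout: float = 300.0
) -> List[T]:
    semaphore = asyncio.Semaphore(max_workers)

    async def guarded(fn: Callable[[], Awaitable[T]]) -> T:
        async with semaphore:  # at most max_workers tasks in flight
            return await asyncio.wait_for(with_retries(fn), timeout)

    # gather preserves input order in its result list
    return await asyncio.gather(*(guarded(fn) for fn in tasks))


def make_path_evaluator(path_id: int, failures: int) -> Callable[[], Awaitable[Dict[str, float]]]:
    remaining = {"failures": failures}

    async def evaluate() -> Dict[str, float]:
        await asyncio.sleep(0)
        if remaining["failures"] > 0:  # simulate transient errors
            remaining["failures"] -= 1
            raise ConnectionError(f"transient failure on path {path_id}")
        return {"path_id": path_id, "score": 1.0 / path_id}

    return evaluate


results = asyncio.run(
    run_parallel([make_path_evaluator(i, failures=i - 1) for i in (1, 2, 3)], max_workers=2)
)
print(results)
```

Jitter spreads retries out so that many tasks failing at once do not all hit the same provider again at the same moment.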

import asyncio

from neogenesis_system.langchain_integration.execution_engines import ParallelExecutionEngine

# Configure high-performance execution
engine = ParallelExecutionEngine(
    max_workers=8,
    execution_timeout=300,
    retry_strategy="exponential_backoff"
)

# Execute multiple decision paths in parallel
paths_to_evaluate = [
    {"path_id": 1, "strategy": "microservices_approach"},
    {"path_id": 2, "strategy": "monolithic_approach"},
    {"path_id": 3, "strategy": "hybrid_approach"}
]

async def main():
    return await engine.execute_parallel(
        tasks=paths_to_evaluate,
        evaluation_function="evaluate_architecture_path"
    )

results = asyncio.run(main())

tools.py - Comprehensive LangChain-compatible tool library:

- 🔍 Research Tools: Advanced web search, academic paper retrieval, market analysis

- 💾 Data Tools: Database queries, file processing, API integrations

- 🧮 Analysis Tools: Statistical analysis, ML model inference, data visualization

- 🔄 Workflow Tools: Task automation, notification systems, reporting generators

To use the LangChain integration features:

# Install core LangChain integration dependencies

pip install langchain langchain-community

# Install storage backend dependencies

pip install lmdb # For LMDB high-performance storage

pip install redis # For Redis distributed storage

pip install sqlalchemy # For enhanced SQL operations

# Install distributed coordination dependencies

pip install aioredis # For async Redis operations (aioredis is deprecated; redis-py 4.2+ includes it as redis.asyncio)

pip install consul # For service discovery (optional)

from neogenesis_system.langchain_integration import (
    create_neogenesis_chain,
    PersistentStateManager,
    AdvancedChainComposer
)
from langchain.chains import SequentialChain

# Create LangChain-compatible Neogenesis chain
neogenesis_chain = create_neogenesis_chain(
    storage_backend="lmdb",
    enable_distributed_state=True,
    session_persistence=True
)

# Use as a standard LangChain component and integrate with existing workflows.
# preprocessing_chain and postprocessing_chain stand for chains you have already defined.
full_workflow = SequentialChain(
    chains=[
        preprocessing_chain,   # Standard LangChain chain
        neogenesis_chain,      # Our intelligent decision engine
        postprocessing_chain   # Standard LangChain chain
    ],
    input_variables=["input", "context"]  # SequentialChain requires the initial input keys
)

# Execute with persistent state
result = full_workflow.run({
    "input": "Design scalable microservices architecture",
    "context": {"team_size": 15, "timeline": "6_months"}

})

import asyncio

from neogenesis_system.langchain_integration.coordinators import EnterpriseCoordinator

# Configure enterprise-grade decision workflow
coordinator = EnterpriseCoordinator(
    storage_config={
        "backend": "lmdb",
        "encryption": True,
        "backup_enabled": True
    },
    distributed_config={
        "cluster_size": 3,
        "consensus_protocol": "raft"
    }
)

async def main():
    # Execute complex business decision
    return await coordinator.execute_enterprise_decision(
        query="Should we acquire startup company TechCorp for $50M?",
        context={
            "industry": "fintech",
            "company_stage": "series_b",
            "financial_position": "strong",
            "strategic_goals": ["market_expansion", "talent_acquisition"]
        },
        analysis_depth="comprehensive",
        stakeholder_perspectives=["ceo", "cto", "cfo", "head_of_strategy"]
    )

decision_result = asyncio.run(main())

# Access persistent decision history
decision_history = coordinator.get_decision_history(
    filters={"domain": "mergers_acquisitions", "timeframe": "last_year"}
)

| LangChain Integration Metric | Performance | Description |
|---|---|---|
| 🏪 Storage Backend Latency | <2ms | LMDB read/write operations |
| 🔄 State Transaction Speed | <5ms | ACID transaction completion |
| 📡 Distributed Sync Latency | <50ms | Cross-node state synchronization |
| ⚡ Parallel Chain Execution | 4x faster | Compared to sequential execution |
| 💾 Storage Compression Ratio | 60-80% | Space savings with GZIP compression |
| 🛡️ State Consistency Rate | 99.9%+ | Distributed state accuracy |
| 🔧 Tool Integration Success | 95%+ | LangChain tool compatibility |

Neogenesis System uses a modular, extensible architecture: each component has a clear responsibility, and components are wired together through dependency injection.
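Dependency injection here means a coordinator receives its collaborators instead of constructing them, which is what the earlier `NeogenesisPlanner(prior_reasoner=..., path_generator=..., mab_converger=...)` call suggests. A minimal sketch of the pattern with `typing.Protocol`, assuming simplified one-method interfaces: `SeedReasoner`, `PathProducer`, `PathChooser`, `Planner`, and the stub classes are hypothetical and far simpler than the real components.

```python
from dataclasses import dataclass
from typing import List, Protocol


class SeedReasoner(Protocol):
    def seed(self, query: str) -> str: ...


class PathProducer(Protocol):
    def generate(self, seed: str) -> List[str]: ...


class PathChooser(Protocol):
    def choose(self, paths: List[str]) -> str: ...


@dataclass
class Planner:
    """Hypothetical planner that is handed its collaborators rather than building them."""

    reasoner: SeedReasoner
    path_producer: PathProducer
    chooser: PathChooser

    def plan(self, query: str) -> str:
        seed = self.reasoner.seed(query)
        paths = self.path_producer.generate(seed)
        return self.chooser.choose(paths)


# Deterministic test doubles: no LLM calls, so the planner can be unit-tested offline.
class StubReasoner:
    def seed(self, query: str) -> str:
        return f"seed({query})"


class StubProducer:
    def generate(self, seed: str) -> List[str]:
        return [f"{seed}:conservative", f"{seed}:aggressive"]


class FirstPathChooser:
    def choose(self, paths: List[str]) -> str:
        return paths[0]


planner = Planner(StubReasoner(), StubProducer(), FirstPathChooser())
print(planner.plan("scale the API"))  # seed(scale the API):conservative
```

Any object with matching methods satisfies a `Protocol`, so swapping an LLM-backed component for a stub (or a different bandit algorithm) requires no change to `Planner`.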

graph TD

subgraph "Launch & Demo Layer"

UI["Demo & Interactive Interface"]

end

subgraph "Core Control Layer"

MC["MainController - Five-stage Process Coordination"]

end

subgraph "LangChain Integration Layer"

LC_AD["LangChain Adapters - LangChain Compatibility"]

LC_PS["PersistentStorage - Multi-Backend Storage"]

LC_SM["StateManagement - ACID Transactions"]

LC_DS["DistributedState - Multi-Node Sync"]

LC_AC["AdvancedChains - Chain Workflows"]

LC_EE["ExecutionEngines - Parallel Processing"]

LC_CO["Coordinators - Chain Coordination"]

LC_TO["LangChain Tools - Extended Tool Library"]

end

subgraph "Decision Logic Layer"

PR["PriorReasoner - Quick Heuristic Analysis"]

RAG["RAGSeedGenerator - RAG-Enhanced Seed Generation"]

PG["PathGenerator - Multi-path Thinking Generation"]

MAB["MABConverger - Meta-MAB & Learning"]

end

subgraph "Tool Abstraction Layer"

TR["ToolRegistry - Unified Tool Management"]

WST["WebSearchTool - Web Search Tool"]

IVT["IdeaVerificationTool - Idea Verification Tool"]

end

subgraph "Tools & Services Layer"

LLM["LLMManager - Multi-LLM Provider Management"]

SC["SearchClient - Web Search & Verification"]

PO["PerformanceOptimizer - Parallelization & Caching"]

CFG["Configuration - Main/Demo Configuration"]

end

subgraph "Storage Backends"

FS["FileSystem - Versioned Storage"]

SQL["SQLite - ACID Database"]

LMDB["LMDB - High-Performance KV"]

MEM["Memory - In-Memory Cache"]

REDIS["Redis - Distributed Cache"]

end

subgraph "LLM Providers Layer"

OAI["OpenAI - GPT-3.5/4/4o"]

ANT["Anthropic - Claude-3 Series"]

DS["DeepSeek - deepseek-chat/coder"]

OLL["Ollama - Local Models"]

AZ["Azure OpenAI - Enterprise Models"]

end

UI --> MC

MC --> LC_AD

LC_AD --> LC_CO

LC_CO --> LC_AC

LC_CO --> LC_EE

LC_AC --> LC_SM

LC_SM --> LC_PS

LC_DS --> LC_SM

LC_PS --> FS

LC_PS --> SQL

LC_PS --> LMDB

LC_PS --> MEM

LC_PS --> REDIS

MC --> PR

MC --> RAG

MC --> PG

MC --> MAB

MC --> TR

MAB --> LC_SM

RAG --> TR

RAG --> LLM

PG --> LLM

MAB --> PG

MC --> PO

TR --> WST

TR --> IVT

TR --> LC_TO

WST --> SC

IVT --> SC

LLM --> OAI

LLM --> ANT

LLM --> DS

LLM --> OLL

LLM --> AZ

style LC_AD fill:#e3f2fd,stroke:#1976d2,stroke-width:2px

style LC_PS fill:#fff3e0,stroke:#f57c00,stroke-width:2px

style LC_SM fill:#f3e5f5,stroke:#7b1fa2,stroke-width:2px

style LC_DS fill:#e8f5e8,stroke:#388e3c,stroke-width:2px

Component Description:

- MainController: System commander, responsible for orchestrating the complete five-stage decision process with tool-enhanced verification capabilities

- RAGSeedGenerator / PriorReasoner: Decision starting point, responsible for generating high-quality "thinking seeds"

- PathGenerator: System's "divergent thinking" module, generating diverse solutions based on seeds

- MABConverger: System's "convergent thinking" and "learning" module, responsible for evaluation, selection, and learning from experience

- LangChain Adapters: Compatibility layer enabling seamless integration with existing LangChain workflows and components

- PersistentStorage: Multi-backend storage engine supporting FileSystem, SQLite, LMDB, Memory, and Redis with enterprise features

- StateManagement: Professional state management with ACID transactions, checkpointing, and branch management

- DistributedState: Multi-node state coordination with consensus protocols for enterprise deployment

- AdvancedChains: Sophisticated chain composition supporting sequential, parallel, conditional, and tree-based workflows

- ExecutionEngines: High-performance parallel processing framework with intelligent task scheduling and fault tolerance

- Coordinators: Multi-chain coordination system managing complex workflow orchestration and resource allocation

- LangChain Tools: Extended tool ecosystem with advanced research, data processing, analysis, and workflow capabilities

- ToolRegistry: LangChain-inspired unified tool management system, providing centralized registration, discovery, and execution of tools

- WebSearchTool / IdeaVerificationTool: Specialized tools implementing the BaseTool interface for web search and idea verification capabilities

- LLMManager: Universal LLM interface manager, providing unified access to multiple AI providers with intelligent routing and fallback

- Tool Layer: Provides reusable underlying capabilities such as multi-LLM management, search engines, performance optimizers

- FileSystem: Hierarchical storage with versioning, backup, and metadata management

- SQLite: ACID-compliant relational database for complex queries and structured data

- LMDB: Lightning-fast memory-mapped database optimized for high-performance scenarios

- Memory: In-memory storage for caching and testing scenarios

- Redis: Distributed caching and session storage for enterprise scalability
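Multiple storage backends behind one interface is a strategy pattern: callers use `put`/`get` and a factory picks the implementation by name, much like `storage_backend="lmdb"` in the examples. The sketch below is an assumption limited to the two backends Python ships with (in-memory and SQLite via the standard `sqlite3` module); `StorageBackend`, `MemoryBackend`, `SQLiteBackend`, and `make_backend` are hypothetical names, not the project's classes.

```python
import json
import sqlite3
from abc import ABC, abstractmethod
from typing import Any, Dict, Optional


class StorageBackend(ABC):
    @abstractmethod
    def put(self, key: str, value: Dict[str, Any]) -> None: ...

    @abstractmethod
    def get(self, key: str) -> Optional[Dict[str, Any]]: ...


class MemoryBackend(StorageBackend):
    def __init__(self) -> None:
        self._data: Dict[str, str] = {}

    def put(self, key: str, value: Dict[str, Any]) -> None:
        self._data[key] = json.dumps(value)  # store a serialized copy, as a real backend would

    def get(self, key: str) -> Optional[Dict[str, Any]]:
        raw = self._data.get(key)
        return None if raw is None else json.loads(raw)


class SQLiteBackend(StorageBackend):
    def __init__(self, path: str = ":memory:") -> None:
        self._conn = sqlite3.connect(path)
        with self._conn:
            self._conn.execute("CREATE TABLE IF NOT EXISTS kv (key TEXT PRIMARY KEY, value TEXT NOT NULL)")

    def put(self, key: str, value: Dict[str, Any]) -> None:
        # The connection context manager commits on success and rolls back on error.
        with self._conn:
            self._conn.execute(
                "INSERT OR REPLACE INTO kv (key, value) VALUES (?, ?)", (key, json.dumps(value))
            )

    def get(self, key: str) -> Optional[Dict[str, Any]]:
        row = self._conn.execute("SELECT value FROM kv WHERE key = ?", (key,)).fetchone()
        return None if row is None else json.loads(row[0])


def make_backend(name: str) -> StorageBackend:
    backends = {"memory": MemoryBackend, "sqlite": SQLiteBackend}
    return backends[name]()


for backend_name in ("memory", "sqlite"):
    store = make_backend(backend_name)
    store.put("session_001", {"chosen_path": {"id": 5, "confidence": 0.93}})
    print(backend_name, store.get("session_001"))
```

LMDB or Redis backends would slot in the same way as further `StorageBackend` subclasses registered in the factory.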

Core Technologies:

- Core Language: Python 3.8+

- AI Engines: Multi-LLM Support (OpenAI, Anthropic, DeepSeek, Ollama, Azure OpenAI)

- LangChain Integration: Full LangChain compatibility with custom adapters, chains, and tools

- Tool Architecture: LangChain-inspired unified tool abstraction with BaseTool interface, ToolRegistry management, and dynamic tool discovery

- Core Algorithms: Meta Multi-Armed Bandit (Thompson Sampling, UCB, Epsilon-Greedy), Retrieval-Augmented Generation (RAG), Tool-Enhanced Decision Making

- Storage Backends: Multi-backend support (LMDB, SQLite, FileSystem, Memory, Redis) with enterprise features

- State Management: ACID transactions, distributed state coordination, and persistent workflows

- External Services: DuckDuckGo Search, Multi-provider LLM APIs, Tool-enhanced web verification

LangChain Integration Stack:

- Framework: LangChain, LangChain-Community for ecosystem compatibility

- Storage Engines: LMDB (high-performance), SQLite (ACID compliance), Redis (distributed caching)

- State Systems: Custom transaction management, distributed consensus protocols

- Chain Types: Sequential, Parallel, Conditional, Loop, and Tree-based chain execution

- Execution: Multi-threaded parallel processing with intelligent resource management

Key Libraries:

- Core: requests, numpy, typing, dataclasses, abc, asyncio

- AI/LLM: openai, anthropic, langchain, langchain-community

- Storage: lmdb, sqlite3, redis, sqlalchemy

- Search: duckduckgo-search, web scraping utilities

- Performance: threading, multiprocessing, caching mechanisms

- Distributed: aioredis, consul (optional), network coordination

- Python 3.8 or higher

- pip package manager

- Clone Repository

git clone https://github.com/your-repo/neogenesis-system.git
cd neogenesis-system

- Install Dependencies

# (Recommended) Create and activate virtual environment
python -m venv venv
source venv/bin/activate # on Windows: venv\Scripts\activate

# Install core dependencies
pip install -r requirements.txt

# (Optional) Install additional LLM provider libraries for enhanced functionality
pip install openai # For OpenAI GPT models
pip install anthropic # For Anthropic Claude models
# Note: DeepSeek support is included in core dependencies

# (Optional) Install LangChain integration dependencies for advanced features
pip install langchain langchain-community # Core LangChain integration
pip install lmdb # High-performance LMDB storage
pip install redis # Distributed caching and state
pip install sqlalchemy # Enhanced SQL operations
pip install aioredis # Async Redis for distributed coordination (deprecated; redis-py 4.2+ includes it as redis.asyncio)

- Configure API Keys (Optional but Recommended)

Create a .env file in the project root directory and configure your preferred LLM provider API keys:

# Configure one or more LLM providers (the system will auto-detect available ones)
DEEPSEEK_API_KEY="your_deepseek_api_key"
OPENAI_API_KEY="your_openai_api_key"
ANTHROPIC_API_KEY="your_anthropic_api_key"

# For Azure OpenAI (optional)
AZURE_OPENAI_API_KEY="your_azure_openai_key"
AZURE_OPENAI_ENDPOINT="https://your-resource.openai.azure.com"

Note: You need to configure only one provider. The system automatically:

- Detects available providers based on configured API keys

- Selects the best available provider automatically

- Falls back to other providers if the primary one fails

Without any keys, the system will run in limited simulation mode.
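Provider auto-detection and failover can be approximated in a few lines: check which API-key variables are set, then try providers in order until one answers. This is a sketch under assumptions: the priority order in `PROVIDER_ENV_VARS` and the "first configured provider wins" rule are guesses (the README only says the best available provider is chosen), and the client callables stand in for real SDK calls.

```python
import os
from typing import Callable, Dict, List, Mapping

# Assumed priority order; the env-var names mirror the .env example above.
PROVIDER_ENV_VARS = {
    "deepseek": "DEEPSEEK_API_KEY",
    "openai": "OPENAI_API_KEY",
    "anthropic": "ANTHROPIC_API_KEY",
    "azure_openai": "AZURE_OPENAI_API_KEY",
}


def detect_providers(env: Mapping[str, str] = os.environ) -> List[str]:
    """Return providers whose API key is set and non-empty, in priority order."""
    return [name for name, var in PROVIDER_ENV_VARS.items() if env.get(var)]


def call_with_fallback(prompt: str, providers: List[str], clients: Dict[str, Callable[[str], str]]) -> str:
    errors = []
    for name in providers:
        try:
            return clients[name](prompt)
        except Exception as exc:  # any provider error triggers fallback to the next one
            errors.append(f"{name}: {exc}")
    # No keys or every provider failed: this is where a simulation mode could take over.
    raise RuntimeError("all providers failed: " + "; ".join(errors))


def _flaky_openai(prompt: str) -> str:
    raise TimeoutError("simulated outage")


demo_env = {"OPENAI_API_KEY": "sk-test", "ANTHROPIC_API_KEY": "test", "DEEPSEEK_API_KEY": ""}
available = detect_providers(demo_env)
answer = call_with_fallback(
    "hello", available, {"openai": _flaky_openai, "anthropic": lambda p: f"anthropic: {p}"}
)
print(available, answer)
```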

We provide several demo modes that let you observe the AI's thinking process firsthand.

# Launch menu to select experience mode

python start_demo.py

# (Recommended) Run quick simulation demo directly, no configuration needed

python quick_demo.py

# Run complete interactive demo connected to real system

python run_demo.py

import os

from dotenv import load_dotenv

from meta_mab.controller import MainController

# Load environment variables

load_dotenv()

# Initialize controller (auto-detects available LLM providers)

controller = MainController()

# The system automatically selects the best available LLM provider

# You can check which providers are available

status = controller.get_llm_provider_status()

print(f"Available providers: {status['healthy_providers']}/{status['total_providers']}")

# Pose a complex question

query = "Design a scalable, low-cost cloud-native tech stack for a startup tech company"

context = {"domain": "cloud_native_architecture", "company_stage": "seed"}

# Get AI's decision (automatically uses the best available provider)

decision_result = controller.make_decision(user_query=query, execution_context=context)

# View the final chosen thinking path

chosen_path = decision_result.get('chosen_path')

if chosen_path:
    print(f"🚀 AI's chosen thinking path: {chosen_path.path_type}")
    print(f"📝 Core approach: {chosen_path.description}")

# (Optional) Switch to a specific provider

controller.switch_llm_provider("openai") # or "anthropic", "deepseek", etc.

# (Optional) Provide execution result feedback to help AI learn

controller.update_performance_feedback(

decision_result=decision_result,

execution_success=True,

execution_time=12.5,

user_satisfaction=0.9,

rl_reward=0.85

)

print("\n✅ AI has received feedback and completed learning!")

# Tool Integration Examples

print("\n" + "="*50)

print("🔧 Tool-Enhanced Decision Making Examples")

print("="*50)

# Check available tools

from meta_mab.utils.tool_abstraction import list_available_tools, get_registry_stats

tools = list_available_tools()

stats = get_registry_stats()

print(f"📊 Available tools: {len(tools)} ({', '.join(tools)})")

print(f"📈 Tool registry stats: {stats['total_tools']} tools, {stats['success_rate']:.1%} success rate")

# Direct tool usage example

from meta_mab.utils.tool_abstraction import execute_tool

search_result = execute_tool("web_search", query="latest trends in cloud computing 2024", max_results=3)

if search_result and search_result.success:
    print(f"🔍 Web search successful: Found {len(search_result.data.get('results', []))} results")
else:
    print(f"❌ Web search failed: {search_result.error_message if search_result else 'No result'}")

# Tool-enhanced verification example

verification_result = execute_tool("idea_verification",

idea="Implement blockchain-based supply chain tracking for food safety",

context={"industry": "food_tech", "scale": "enterprise"})

if verification_result and verification_result.success:
    analysis = verification_result.data.get('analysis', {})
    print(f"💡 Idea verification: Feasibility score {analysis.get('feasibility_score', 0):.2f}")
else:
    print(f"❌ Idea verification failed: {verification_result.error_message if verification_result else 'No result'}")

| Metric | Performance | Description |
|---|---|---|
| 🎯 Decision Accuracy | 85%+ | Based on historical validation data |
| ⚡ Average Response Time | 2-5 seconds | Including complete five-stage processing |
| 🧠 Path Generation Success Rate | 95%+ | Diverse thinking path generation |
| 🏆 Golden Template Hit Rate | 60%+ | Successful experience reuse efficiency |
| 💡 Aha-Moment Trigger Rate | 15%+ | Innovation breakthrough scenario percentage |
| 🔧 Tool Integration Success Rate | 92%+ | Tool-enhanced verification reliability |
| 🔍 Tool Discovery Accuracy | 88%+ | Correct tool selection for context |
| 🚀 Tool-Enhanced Decision Quality | +25% | Improvement over non-tool decisions |
| 🎯 Hybrid Selection Accuracy | 94%+ | MAB+LLM fusion mode precision |
| 🧠 Cold-Start Detection Rate | 96%+ | Accurate unfamiliar tool identification |
| ⚡ Experience Mode Efficiency | +40% | Performance boost for familiar tools |
| 🔍 Exploration Mode Success | 89%+ | LLM-guided tool discovery effectiveness |
| 📈 Learning Convergence Speed | 3-5 uses | MAB optimization learning curve |
| 🤖 Provider Availability | 99%+ | Multi-LLM fallback reliability |
| 🔄 Automatic Fallback Success | 98%+ | Seamless provider switching rate |
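The metrics above mention cold-start detection, an LLM-guided exploration mode, and an experience mode for familiar tools, which matches the "Hybrid MAB+LLM" tool selection listed among the features. A minimal sketch of that split, under assumptions: `HybridToolSelector`, the `cold_start_uses` cutoff, and the `llm_pick` callback (a stand-in for an actual LLM call) are hypothetical, and UCB1 is used for the bandit side only because the README lists UCB among its algorithms.

```python
import math
from typing import Callable, Dict, List


class HybridToolSelector:
    """Hypothetical: ask an LLM about unfamiliar tools, use UCB1 once every tool has history."""

    def __init__(
        self,
        tools: List[str],
        llm_pick: Callable[[str, List[str]], str],
        cold_start_uses: int = 3,
    ) -> None:
        if cold_start_uses < 1:
            raise ValueError("cold_start_uses must be >= 1")  # UCB1 needs at least one use per tool
        self.tools = tools
        self.llm_pick = llm_pick
        self.cold_start_uses = cold_start_uses
        self.uses: Dict[str, int] = {t: 0 for t in tools}
        self.reward_sum: Dict[str, float] = {t: 0.0 for t in tools}

    def select(self, query: str) -> str:
        cold = [t for t in self.tools if self.uses[t] < self.cold_start_uses]
        if cold:
            # Exploration mode: too little history, let the LLM reason about the cold tools.
            return self.llm_pick(query, cold)
        total = sum(self.uses.values())

        def ucb1(tool: str) -> float:
            # Experience mode: mean reward plus an uncertainty bonus.
            n = self.uses[tool]
            return self.reward_sum[tool] / n + math.sqrt(2 * math.log(total) / n)

        return max(self.tools, key=ucb1)

    def update(self, tool: str, reward: float) -> None:
        self.uses[tool] += 1
        self.reward_sum[tool] += reward


selector = HybridToolSelector(
    ["web_search", "idea_verification"], llm_pick=lambda q, candidates: candidates[0], cold_start_uses=1
)
first = selector.select("adopt Kubernetes?")
selector.update(first, 1.0)
second = selector.select("adopt Kubernetes?")
selector.update(second, 0.0)
third = selector.select("adopt Kubernetes?")
print(first, second, third)
```

The cold-start check keeps the bandit from dividing by zero uses and delegates unfamiliar tools to the model, while accumulated rewards take over once every tool has been tried.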

| LangChain Integration Metric | Performance | Description |
|---|---|---|
| 🏪 Storage Backend Latency | <2ms | LMDB read/write operations |
| 🔄 State Transaction Speed | <5ms | ACID transaction completion |
| 📡 Distributed Sync Latency | <50ms | Cross-node state synchronization |
| ⚡ Parallel Chain Execution | 4x faster | Compared to sequential execution |
| 💾 Storage Compression Ratio | 60-80% | Space savings with GZIP compression |
| 🛡️ State Consistency Rate | 99.9%+ | Distributed state accuracy |
| 🔧 Tool Integration Success | 95%+ | LangChain tool compatibility |
| 🌐 Chain Composition Success | 98%+ | Complex workflow execution reliability |
| 📊 Workflow Persistence Rate | 99.5%+ | State recovery after failures |
| ⚖️ Load Balancing Efficiency | 92%+ | Distributed workload optimization |
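A compression figure such as "60-80% space savings" only means something for a particular payload. As a worked example (an assumption about how such a figure could be measured, not the project's benchmark), space savings = 1 - compressed_size / original_size, computed here with Python's standard `gzip` module on a made-up, repetitive decision-history payload. Tiny payloads can even grow, because gzip adds a fixed header and trailer.

```python
import gzip
import json


def compression_savings(payload: dict) -> float:
    """Fraction of bytes saved by gzip: 1 - compressed_size / original_size."""
    raw = json.dumps(payload).encode("utf-8")
    packed = gzip.compress(raw)
    return 1 - len(packed) / len(raw)


# Made-up decision history with the repetition typical of logged sessions.
state = {
    "history": [
        {"path": "microservices_approach", "confidence": 0.9, "step": i} for i in range(200)
    ]
}
print(f"{compression_savings(state):.0%} saved")
print(f"{compression_savings({'a': 1}):.0%} saved on a tiny payload")
```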

# Run all tests

python -m pytest tests/

# Run unit test examples

python tests/examples/simple_test_example.py

# Run performance tests

python tests/unit/test_performance.py

# Verify MAB algorithm convergence

python tests/unit/test_mab_converger.py

# Verify path generation robustness

python tests/unit/test_path_creation_robustness.py

# Verify RAG seed generation

python tests/unit/test_rag_seed_generator.py

# Product strategy decisions

result = controller.make_decision(

"How to prioritize features for our SaaS product for next quarter?",

execution_context={

"industry": "software",

"stage": "growth",

"constraints": ["budget_limited", "team_capacity"]

}

)

# Architecture design decisions

result = controller.make_decision(

"Design a real-time recommendation system supporting tens of millions of concurrent users",

execution_context={

"domain": "system_architecture",

"scale": "large",

"requirements": ["real_time", "high_availability"]

}

)

# Market analysis decisions

result = controller.make_decision(

"Analyze competitive landscape and opportunities in the AI tools market",

execution_context={

"analysis_type": "market_research",

"time_horizon": "6_months",

"focus": ["opportunities", "threats"]

}

)

# Tool-enhanced technical decisions with real-time information gathering

result = controller.make_decision(

"Should we adopt Kubernetes for our microservices architecture?",

execution_context={

"domain": "system_architecture",

"team_size": "10_engineers",

"current_stack": ["docker", "aws"],

"constraints": ["learning_curve", "migration_complexity"]

}

)

# The system automatically:

# 1. Uses WebSearchTool to gather latest Kubernetes trends and best practices

# 2. Applies IdeaVerificationTool to validate feasibility based on team constraints

# 3. Integrates real-time information into decision-making process

# 4. Provides evidence-based recommendations with source citations

print(f"Tool-enhanced decision: {result.get('chosen_path', {}).get('description', 'N/A')}")

print(f"Tools used: {result.get('tools_used', [])}")

print(f"Information sources: {result.get('verification_sources', [])}")

# Check available providers and their status

status = controller.get_llm_provider_status()

print(f"Healthy providers: {status['healthy_providers']}")

# Switch to a specific provider for particular tasks

controller.switch_llm_provider("anthropic") # Use Claude for complex reasoning

result_reasoning = controller.make_decision("Complex philosophical analysis...")

controller.switch_llm_provider("deepseek") # Use DeepSeek for coding tasks

result_coding = controller.make_decision("Optimize this Python algorithm...")

controller.switch_llm_provider("openai") # Use GPT for general tasks

result_general = controller.make_decision("Business strategy planning...")

# Get cost and usage statistics

cost_summary = controller.get_llm_cost_summary()

print(f"Total cost: ${cost_summary['total_cost_usd']:.4f}")

print(f"Requests by provider: {cost_summary['cost_by_provider']}")

# Run health check on all providers

health_status = controller.run_llm_health_check()

print(f"Provider health: {health_status}")

from neogenesis_system.langchain_integration import (

create_neogenesis_chain,

StateManager,

DistributedStateManager

)

# Create enterprise-grade persistent workflow

state_manager = StateManager(storage_backend="lmdb", enable_encryption=True)

neogenesis_chain = create_neogenesis_chain(

state_manager=state_manager,

enable_persistence=True,

session_id="enterprise_decision_2024"

)

# Execute long-running decision process with state persistence

result = neogenesis_chain.execute({

"query": "Develop comprehensive digital transformation strategy",

"context": {

"industry": "manufacturing",

"company_size": "enterprise",

"timeline": "3_years",

"budget": "10M_USD",

"current_state": "legacy_systems"

}

})

# Access persistent decision history

decision_timeline = state_manager.get_decision_timeline("enterprise_decision_2024")

print(f"Decision milestones: {len(decision_timeline)} checkpoints")

import asyncio

from neogenesis_system.langchain_integration.advanced_chains import AdvancedChainComposer
from neogenesis_system.langchain_integration.execution_engines import ParallelExecutionEngine

# Configure parallel analysis workflow
composer = AdvancedChainComposer()
execution_engine = ParallelExecutionEngine(max_workers=6)

# Create specialized analysis chains
market_analysis_chain = composer.create_analysis_chain("market_research")
technical_analysis_chain = composer.create_analysis_chain("technical_feasibility")
financial_analysis_chain = composer.create_analysis_chain("financial_modeling")
risk_analysis_chain = composer.create_analysis_chain("risk_assessment")

# Execute parallel comprehensive analysis
parallel_analysis = composer.create_parallel_chain([
    market_analysis_chain,
    technical_analysis_chain,
    financial_analysis_chain,
    risk_analysis_chain
])

async def main():
    # Run analysis with persistent state and error recovery
    return await execution_engine.execute_chain(
        chain=parallel_analysis,
        input_data={
            "project": "AI-powered customer service platform",
            "market": "enterprise_software",
            "timeline": "18_months"
        },
        persist_state=True,
        enable_recovery=True
    )

result = asyncio.run(main())

print(f"Analysis completed: {result['analysis_summary']}")

print(f"Execution time: {result['execution_time']:.2f}s")

import asyncio

from neogenesis_system.langchain_integration.distributed_state import DistributedStateManager
from neogenesis_system.langchain_integration.coordinators import ClusterCoordinator

# Configure distributed decision cluster
distributed_state = DistributedStateManager(
    node_id="decision_node_1",
    cluster_nodes=["node_1:8001", "node_2:8002", "node_3:8003"],
    consensus_protocol="raft"
)

cluster_coordinator = ClusterCoordinator(
    distributed_state=distributed_state,
    load_balancing="intelligent"
)

async def main():
    # Execute distributed decision with consensus
    return await cluster_coordinator.execute_distributed_decision(
        query="Should we enter the European market with our fintech product?",
        context={
            "industry": "fintech",
            "target_markets": ["germany", "france", "uk"],
            "regulatory_complexity": "high",
            "competition_level": "intense"
        },
        require_consensus=True,
        min_node_agreement=2
    )

decision_result = asyncio.run(main())

# Access cluster decision metrics

cluster_stats = cluster_coordinator.get_cluster_stats()

print(f"Nodes participated: {cluster_stats['active_nodes']}")

print(f"Consensus achieved: {cluster_stats['consensus_reached']}")

print(f"Decision confidence: {decision_result['confidence']:.2f}")

from langchain.chains import SequentialChain, LLMChain
from langchain.prompts import PromptTemplate
from neogenesis_system.langchain_integration.adapters import NeogenesisLangChainAdapter

# `llm` is any LangChain LLM you have already configured; it is not defined in this snippet

# Create standard LangChain components
prompt = PromptTemplate(template="Analyze the market for {product}", input_variables=["product"])
market_chain = LLMChain(llm=llm, prompt=prompt, output_key="market_analysis")

# Create Neogenesis intelligent decision chain
neogenesis_adapter = NeogenesisLangChainAdapter(
    storage_backend="lmdb",
    enable_advanced_reasoning=True
)
neogenesis_chain = neogenesis_adapter.create_decision_chain()

# Follow-up LangChain processing. SequentialChain rejects a step whose output key
# already exists, so market_chain cannot simply be reused here; use a separate chain.
# Assumption: neogenesis_chain emits a "decision" key; adjust to the adapter's actual output.
followup_prompt = PromptTemplate(
    template="Write a final recommendation for {product} based on: {decision}",
    input_variables=["product", "decision"]
)
followup_chain = LLMChain(llm=llm, prompt=followup_prompt, output_key="final_recommendation")

# Combine in standard LangChain workflow
complete_workflow = SequentialChain(
    chains=[
        market_chain,      # Standard LangChain analysis
        neogenesis_chain,  # Intelligent Neogenesis decision-making
        followup_chain     # Follow-up LangChain processing
    ],
    input_variables=["product"],
    output_variables=["final_recommendation"]
)

# Execute integrated workflow
result = complete_workflow.run({
    "product": "AI-powered legal document analyzer"
})

print(f"Integrated analysis result: {result}")

We warmly welcome community contributions! Bug fixes, feature suggestions, and code submissions all help make Neogenesis System better.

- 🐛 Bug Reports: Submit issues when you find problems

- ✨ Feature Suggestions: Propose new feature ideas

- 📝 Documentation Improvements: Enhance documentation and examples

- 🔧 Code Contributions: Submit Pull Requests

- 🔨 Tool Development: Create new tools implementing the BaseTool interface

- 🧪 Tool Testing: Help test and validate tool integrations
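To give a feel for what a tool contribution involves, here is a minimal sketch of a tool class. It does not use Neogenesis's real `BaseTool`: the abstract base class, the `ToolResult` container, the `name`/`description` attributes and the `execute` method below are hypothetical stand-ins, so check the project's actual interface before writing a real tool.

```python
from abc import ABC, abstractmethod
from dataclasses import dataclass
from typing import Any


@dataclass
class ToolResult:
    """Hypothetical result container; the real framework may use its own type."""
    success: bool
    data: Any = None
    error: str = ""


class BaseTool(ABC):
    """Hypothetical stand-in for the Neogenesis BaseTool interface."""

    name: str = "base_tool"
    description: str = ""

    @abstractmethod
    def execute(self, **kwargs: Any) -> ToolResult:
        """Run the tool with keyword arguments and return a ToolResult."""


class WordCountTool(BaseTool):
    """Example tool: counts the words in a piece of text."""

    name = "word_count"
    description = "Counts the words in the given text."

    def execute(self, **kwargs: Any) -> ToolResult:
        text = kwargs.get("text")
        # Validate input instead of raising, so the caller can react to failures
        if not isinstance(text, str):
            return ToolResult(success=False, error="'text' must be a string")
        return ToolResult(success=True, data=len(text.split()))


if __name__ == "__main__":
    tool = WordCountTool()
    print(tool.execute(text="Think about how to think"))
```

Returning a result object with a `success` flag, rather than raising, keeps tool failures visible to whatever decision logic calls the tool; the real framework may handle errors differently.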

# 1. Fork and clone project

git clone https://github.com/your-username/neogenesis-system.git

# 2. Create development branch

git checkout -b feature/your-feature-name

# 3. Install development dependencies

pip install -r requirements-dev.txt

# 4. Run tests to ensure baseline functionality

python -m pytest tests/

# 5. Develop new features...

# 6. Submit Pull Request

Please refer to CONTRIBUTING.md for detailed guidelines.

This project is open-sourced under the MIT License. See LICENSE file for details.

Neogenesis System is independently developed by the author.

- 📧 Email Contact: This project is still in development. If you are interested in the project or would like to use it commercially, please contact: [email protected]

🌟 If this project helps you, please give us a Star!


Alternative AI tools for Neosgenesis

Similar Open Source Tools


sgr-deep-research

This repository contains a deep learning research project focused on natural language processing tasks. It includes implementations of various state-of-the-art models and algorithms for text classification, sentiment analysis, named entity recognition, and more. The project aims to provide a comprehensive resource for researchers and developers interested in exploring deep learning techniques for NLP applications.

ai-counsel

AI Counsel is a true deliberative consensus MCP server where AI models engage in actual debate, refine positions across multiple rounds, and converge with voting and confidence levels. It features two modes (quick and conference), mixed adapters (CLI tools and HTTP services), auto-convergence, structured voting, semantic grouping, model-controlled stopping, evidence-based deliberation, local model support, data privacy, context injection, semantic search, fault tolerance, and full transcripts. Users can run local and cloud models to deliberate on various questions, ground decisions in reality by querying code and files, and query past decisions for analysis. The tool is designed for critical technical decisions requiring multi-model deliberation and consensus building.

alphora

Alphora is a full-stack framework for building production AI agents, providing agent orchestration, prompt engineering, tool execution, memory management, streaming, and deployment with an async-first, OpenAI-compatible design. It offers features like agent derivation, reasoning-action loop, async streaming, visual debugger, OpenAI compatibility, multimodal support, tool system with zero-config tools and type safety, prompt engine with dynamic prompts, memory and storage management, sandbox for secure execution, deployment as API, and more. Alphora allows users to build sophisticated AI agents easily and efficiently.

FDAbench

FDABench is a benchmark tool designed for evaluating data agents' reasoning ability over heterogeneous data in analytical scenarios. It offers 2,007 tasks across various data sources, domains, difficulty levels, and task types. The tool provides ready-to-use data agent implementations, a DAG-based evaluation system, and a framework for agent-expert collaboration in dataset generation. Key features include data agent implementations, comprehensive evaluation metrics, multi-database support, different task types, extensible framework for custom agent integration, and cost tracking. Users can set up the environment using Python 3.10+ on Linux, macOS, or Windows. FDABench can be installed with a one-command setup or manually. The tool supports API configuration for LLM access and offers quick start guides for database download, dataset loading, and running examples. It also includes features like dataset generation using the PUDDING framework, custom agent integration, evaluation metrics like accuracy and rubric score, and a directory structure for easy navigation.

headroom

Headroom is a tool designed to optimize the context layer of Large Language Model (LLM) applications by compressing redundant boilerplate outputs. It intercepts context from tool outputs, logs, search results, and intermediate agent steps, stabilizes dynamic content like timestamps and UUIDs, removes low-signal content, and preserves original data for retrieval only when the LLM needs it. It keeps provider caching efficient by aligning prompts for cache hits. The tool works as a transparent proxy with zero code changes, offering significant savings in token count and enabling reversible compression for content types such as code, logs, JSON, and images. Headroom integrates with frameworks like LangChain, Agno, and MCP, supporting features like memory, retrievers, agents, and more.

llamafarm

LlamaFarm is a comprehensive AI framework that empowers users to build powerful AI applications locally, with full control over costs and deployment options. It provides modular components for RAG systems, vector databases, model management, prompt engineering, and fine-tuning. Users can create differentiated AI products without needing extensive ML expertise, using simple CLI commands and YAML configs. The framework supports local-first development, production-ready components, strategy-based configuration, and deployment anywhere from laptops to the cloud.

ai-coders-context

The @ai-coders/context repository provides the Ultimate MCP for AI Agent Orchestration, Context Engineering, and Spec-Driven Development. It simplifies context engineering for AI by offering a universal process called PREVC, which consists of Planning, Review, Execution, Validation, and Confirmation steps. The tool aims to address the problem of context fragmentation by introducing a single `.context/` directory that works universally across different tools. It enables users to create structured documentation, generate agent playbooks, manage workflows, provide on-demand expertise, and sync across various AI tools. The tool follows a structured, spec-driven development approach to improve AI output quality and ensure reproducible results across projects.

zeroclaw

ZeroClaw is a fast, small, and fully autonomous AI assistant infrastructure built with Rust. It features a lean runtime, cost-efficient deployment, fast cold starts, and a portable architecture. It is secure by design, fully swappable, and offers OpenAI-compatible provider support. The tool is designed for low-cost boards and small cloud instances, with a memory footprint of less than 5MB. It suits tasks such as deploying AI assistants and swapping providers, channels, and tools, with every component pluggable.

z-ai-sdk-python

Z.ai Open Platform Python SDK is the official Python SDK for Z.ai's large model open interface, providing developers with easy access to Z.ai's open APIs. The SDK offers core features like chat completions, embeddings, video generation, audio processing, assistant API, and advanced tools. It supports various functionalities such as speech transcription, text-to-video generation, image understanding, and structured conversation handling. Developers can customize client behavior, configure API keys, and handle errors efficiently. The SDK is designed to simplify AI interactions and enhance AI capabilities for developers.

Bindu

Bindu is an operating layer for AI agents that provides identity, communication, and payment capabilities. It delivers a production-ready service with a convenient API to connect, authenticate, and orchestrate agents across distributed systems using open protocols: A2A, AP2, and X402. Built with a distributed architecture, Bindu makes it fast to develop and easy to integrate with any AI framework. Transform any agent framework into a fully interoperable service for communication, collaboration, and commerce in the Internet of Agents.

dexto

Dexto is a lightweight runtime for creating and running AI agents that turn natural language into real-world actions. It serves as the missing intelligence layer for building AI applications, standalone chatbots, or as the reasoning engine inside larger products. Dexto features a powerful CLI and Web UI for running AI agents, supports multiple interfaces, allows hot-swapping of LLMs from various providers, connects to remote tool servers via the Model Context Protocol, is config-driven with version-controlled YAML, offers production-ready core features, extensibility for custom services, and enables multi-agent collaboration via MCP and A2A.

connectonion

ConnectOnion is a simple, elegant open-source framework for production-ready AI agents. It provides a platform for creating and using AI agents with a focus on simplicity and efficiency. The framework allows users to easily add tools, debug agents, make them production-ready, and enable multi-agent capabilities. ConnectOnion offers a simple API, is production-ready with battle-tested models, and is open-source under the MIT license. It features a plugin system for adding reflection and reasoning capabilities, interactive debugging for easy troubleshooting, and no boilerplate code for seamless scaling from prototypes to production systems.

flyte-sdk

Flyte 2 SDK is a pure Python tool for type-safe, distributed orchestration of agents, ML pipelines, and more. It allows users to write data pipelines, ML training jobs, and distributed compute in Python without any DSL constraints. With features like async-first parallelism and fine-grained observability, Flyte 2 offers a seamless workflow experience. Users can leverage core concepts like TaskEnvironments for container configuration, pure Python workflows for flexibility, and async parallelism for distributed execution. Advanced features include sub-task observability with tracing and remote task execution. The tool also provides native Jupyter integration for running and monitoring workflows directly from notebooks. Configuration and deployment are made easy with configuration files and commands for deploying and running workflows. Flyte 2 is licensed under the Apache 2.0 License.

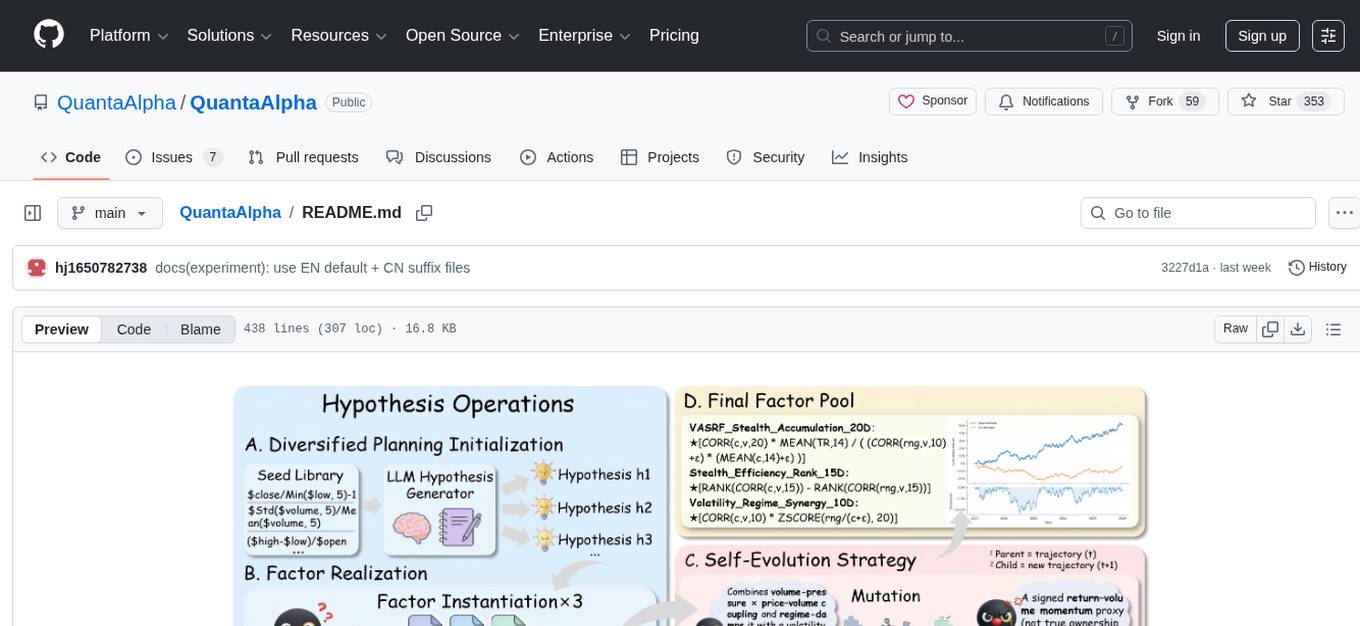

QuantaAlpha

QuantaAlpha is a framework designed for factor mining in quantitative alpha research. It combines LLM intelligence with evolutionary strategies to automatically mine, evolve, and validate alpha factors through self-evolving trajectories. The framework provides a trajectory-based approach with diversified planning initialization and structured hypothesis-code constraint. Users can describe their research direction and observe the automatic factor mining process. QuantaAlpha aims to transform how quantitative alpha factors are discovered by leveraging advanced technologies and self-evolving methodologies.

adk-rust

ADK-Rust is a comprehensive and production-ready Rust framework for building AI agents. It features type-safe agent abstractions with async execution and event streaming, multiple agent types including LLM agents, workflow agents, and custom agents, realtime voice agents with bidirectional audio streaming, a tool ecosystem with function tools, Google Search, and MCP integration, production features like session management, artifact storage, memory systems, and REST/A2A APIs, and a developer-friendly experience with interactive CLI, working examples, and comprehensive documentation. The framework follows a clean layered architecture and is production-ready and actively maintained.


For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models, covering everything from images to sound.

kaito

Kaito is an operator that automates the deployment of AI/ML inference models in a Kubernetes cluster. It manages large model files using container images, removes the need to tune deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Kaito greatly simplifies the workflow of onboarding large AI inference models in Kubernetes.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI red teaming tasks so operators can focus on more complicated and time-consuming work, and it can identify security harms such as misuse (e.g., malware generation, jailbreaking) and privacy harms (e.g., identity theft). The goal is to give researchers a baseline of how well their model and entire inference pipeline perform against different harm categories, and to let them compare that baseline to future iterations of their model. This provides empirical data on how well the model is doing today and helps detect any performance degradation introduced by future changes.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features:

- Self-contained, with no need for a DBMS or cloud service.

- OpenAPI interface, easy to integrate with existing infrastructure (e.g., Cloud IDE).

- Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.