sgr-deep-research

Hybrid Schema-Guided Reasoning (SGR) agentic system design, created by the neuraldeep community.

Creator of the SGR concept: https://abdullin.com/schema-guided-reasoning/demo

Schema-Guided Reasoning (SGR) is a technique that guides large language models (LLMs) to produce structured, clear, and predictable outputs by enforcing reasoning through

Stars: 471


README:

Web Interface Video

https://github.com/user-attachments/assets/9e1c46c0-0c13-45dd-8b35-a3198f946451

Terminal CLI Video

https://github.com/user-attachments/assets/a5e34116-7853-43c2-ba93-2db811b8584a

Production-ready open-source system for automated research using Schema-Guided Reasoning (SGR). Features real-time streaming responses, OpenAI-compatible API, and comprehensive research capabilities with agent interruption support.

| Agent | SGR Implementation | ReasoningTool | Tools | API Requests | Selection Mechanism |
|---|---|---|---|---|---|
| 1. SGR-Agent | Structured Output | ❌ Built into schema | 6 basic | 1 | SO Union Type |
| 2. FCAgent | ❌ Absent | ❌ Absent | 6 basic | 1 | FC "required" |
| 3. HybridSGRAgent | FC Tool enforced | ✅ First step FC | 7 (6 + ReasoningTool) | 2 | FC → FC TOP AGENT |
| 4. OptionalSGRAgent | FC Tool optional | ✅ At model’s choice | 7 (6 + ReasoningTool) | 1–2 | FC "auto" |
| 5. ReasoningFC_SO | FC → SO → FC auto | ✅ FC enforced | 7 (6 + ReasoningTool) | 3 | FC → SO → FC auto |
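One way to read the Selection Mechanism column is as different values of the `tool_choice` field in an OpenAI-style chat completions request. The sketch below illustrates that reading only and is not the project's actual code: `make_tool`, `build_request`, the mode names, and the `web_search` and `reasoning` tool names are hypothetical.

```python
from typing import Any


def make_tool(name: str, description: str) -> dict[str, Any]:
    """Build a minimal function-tool definition (hypothetical, empty parameters)."""
    return {
        "type": "function",
        "function": {
            "name": name,
            "description": description,
            "parameters": {"type": "object", "properties": {}},
        },
    }


# Placeholder tools; the real project defines its own schemas.
RESEARCH_TOOLS = [make_tool("web_search", "Search the web")]
REASONING_TOOL = make_tool("reasoning", "Write down the reasoning for the next step")


def build_request(mode: str, messages: list[dict[str, str]]) -> dict[str, Any]:
    """Build a chat-completions payload for one of three selection modes."""
    if mode == "required":  # FC "required": the model must call some tool
        return {"messages": messages, "tools": RESEARCH_TOOLS, "tool_choice": "required"}
    if mode == "auto":  # FC "auto": the model may also answer in plain text
        return {
            "messages": messages,
            "tools": RESEARCH_TOOLS + [REASONING_TOOL],
            "tool_choice": "auto",
        }
    if mode == "forced_reasoning":  # enforced first step: only the reasoning tool is allowed
        return {
            "messages": messages,
            "tools": [REASONING_TOOL],
            "tool_choice": {"type": "function", "function": {"name": "reasoning"}},
        }
    raise ValueError(f"unknown mode: {mode}")
```

With `"auto"` the model is free to skip tools entirely, which is the failure mode discussed later for smaller models; the forced variant removes that choice for the first request.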

This project is built by the community with pure enthusiasm as an open-source initiative:

- SGR Concept Creator: @abdullin - Original Schema-Guided Reasoning concept

- Project Coordinator & Vision: @VaKovaLskii - Team coordination and project direction

- Lead Core Developer: @virrius - Complete system rewrite and core implementation

- API Development: Pavel Zloi - OpenAI-compatible API layer

- Hybrid FC Mode: @Shadekss - Dmitry Sirakov [Shade] - SGR integration into Function Calling for Agentic-capable models

- DevOps & Deployment: @mixaill76 - Infrastructure and build management

All development is driven by pure enthusiasm and open-source community collaboration. We welcome contributors of all skill levels!

First, install UV (a modern Python package manager):

# Install UV
curl -LsSf https://astral.sh/uv/install.sh | sh
# or on Windows:
# powershell -ExecutionPolicy ByPass -c "irm https://astral.sh/uv/install.ps1 | iex"

Then set up and run the server locally:

# 1. Setup configuration
cp config.yaml.example config.yaml
# Edit config.yaml with your API keys

# 2. Change to src directory and install dependencies
cd src
uv sync

# 3. Run the server
uv run python sgr_deep_research

Alternatively, deploy with Docker:

# 1. Setup configuration
cp config.yaml.example config.yaml
# Edit config.yaml with your API keys

# 2. Go to the services folder
cd services

# 3. Build the Docker images
docker-compose build

# 4. Deploy with Docker Compose
docker-compose up -d

# 5. Check health
curl http://localhost:8010/health

🚀 Python OpenAI Client Examples - Complete integration guide with streaming & clarifications

Simple Python examples for using the OpenAI client with the SGR Deep Research system.

pip install openai

Simple research query without clarifications.

from openai import OpenAI

# Initialize client
client = OpenAI(
    base_url="http://localhost:8010/v1",
    api_key="dummy",  # Not required for local server
)

# Make research request
response = client.chat.completions.create(
    model="sgr-agent",
    messages=[{"role": "user", "content": "Research BMW X6 2025 prices in Russia"}],
    stream=True,
    temperature=0.4,
)

# Print streaming response
for chunk in response:
    if chunk.choices[0].delta.content:
        print(chunk.choices[0].delta.content, end="")

Handle agent clarification requests and continue conversation.

import json
from openai import OpenAI

client = OpenAI(base_url="http://localhost:8010/v1", api_key="dummy")

# Step 1: Initial research request
print("Starting research...")
response = client.chat.completions.create(
    model="sgr-agent",
    messages=[{"role": "user", "content": "Research AI market trends"}],
    stream=True,
    temperature=0,
)

agent_id = None
clarification_questions = []

# Process streaming response
for chunk in response:
    # Extract agent ID from model field
    if chunk.model and chunk.model.startswith("sgr_agent_"):
        agent_id = chunk.model
        print(f"\nAgent ID: {agent_id}")

    # Check for clarification requests
    if chunk.choices[0].delta.tool_calls:
        for tool_call in chunk.choices[0].delta.tool_calls:
            if tool_call.function and tool_call.function.name == "clarification":
                args = json.loads(tool_call.function.arguments)
                clarification_questions = args.get("questions", [])

    # Print content
    if chunk.choices[0].delta.content:
        print(chunk.choices[0].delta.content, end="")

# Step 2: Handle clarification if needed
if clarification_questions and agent_id:
    print(f"\n\nClarification needed:")
    for i, question in enumerate(clarification_questions, 1):
        print(f"{i}. {question}")

    # Provide clarification
    clarification = "Focus on LLM market trends for 2024-2025, global perspective"
    print(f"\nProviding clarification: {clarification}")

    # Continue with agent ID
    response = client.chat.completions.create(
        model=agent_id,  # Use agent ID as model
        messages=[{"role": "user", "content": clarification}],
        stream=True,
        temperature=0,
    )

    # Print final response
    for chunk in response:
        if chunk.choices[0].delta.content:
            print(chunk.choices[0].delta.content, end="")

print("\n\nResearch completed!")

- Replace `localhost:8010` with your server URL

- The `api_key` can be any string for a local server

- Agent ID is returned in the `model` field during streaming

- Clarification questions are sent via `tool_calls` with function name `clarification`

- Use the agent ID as the model name to continue the conversation
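OpenAI-style streams may split a tool call's `arguments` string across several chunks, in which case calling `json.loads` on a single chunk would fail. Whether this server always sends the clarification arguments in one piece is not stated here, so the sketch below takes the defensive route and merges fragments by `index` before parsing. `collect_tool_calls` and `fake_chunk` are hypothetical helpers, and the fake chunks only imitate the attributes the OpenAI client exposes.

```python
import json
from types import SimpleNamespace


def collect_tool_calls(chunks):
    """Merge streamed tool-call deltas into complete calls, keyed by index."""
    calls = {}
    for chunk in chunks:
        if not chunk.choices:
            continue
        for delta in chunk.choices[0].delta.tool_calls or []:
            call = calls.setdefault(delta.index, {"name": "", "arguments": ""})
            if delta.function and delta.function.name:
                call["name"] = delta.function.name
            if delta.function and delta.function.arguments:
                call["arguments"] += delta.function.arguments
    # Parse only after the stream has ended, when each JSON string is complete.
    return [
        {"name": call["name"], "arguments": json.loads(call["arguments"] or "{}")}
        for _, call in sorted(calls.items())
    ]


def fake_chunk(index, name=None, arguments=None):
    """Stand-in for a streaming chunk, for demonstration only."""
    function = SimpleNamespace(name=name, arguments=arguments)
    delta = SimpleNamespace(tool_calls=[SimpleNamespace(index=index, function=function)])
    return SimpleNamespace(choices=[SimpleNamespace(delta=delta)])


stream = [
    fake_chunk(0, name="clarification", arguments='{"questions": ["Which '),
    fake_chunk(0, arguments='segment?"]}'),
]
result = collect_tool_calls(stream)
print(result[0]["arguments"]["questions"])  # → ['Which segment?']
```

In a real client, the same function could be fed the chunks collected from `client.chat.completions.create(..., stream=True)`.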

⚡ cURL API Examples - Direct HTTP requests with agent interruption & clarification flow

The system provides a fully OpenAI-compatible API with advanced agent interruption and clarification capabilities.
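Clients that do not use the OpenAI SDK can read the stream directly. The sketch below assumes the server follows the usual OpenAI streaming convention of `data: {...}` lines terminated by `data: [DONE]`, which this README does not spell out. `iter_sse_events` and `find_agent_id` are hypothetical helper names.

```python
import json
from typing import Iterable, Iterator, Optional


def iter_sse_events(lines: Iterable[str]) -> Iterator[dict]:
    """Yield decoded JSON payloads from `data:` lines, stopping at [DONE]."""
    for line in lines:
        line = line.strip()
        if not line.startswith("data:"):
            continue  # skip blank separator lines and comments
        payload = line[len("data:"):].strip()
        if payload == "[DONE]":
            break
        yield json.loads(payload)


def find_agent_id(events: Iterable[dict], prefix: str = "sgr_agent_") -> Optional[str]:
    """Return the first `model` value that looks like an agent ID, else None."""
    for event in events:
        model = event.get("model") or ""
        if model.startswith(prefix):
            return model
    return None


sample = [
    'data: {"model": "sgr_agent_b84d5a01-c394-4499-97be-dad6a5d2cb86", "choices": []}',
    "",
    "data: [DONE]",
]
print(find_agent_id(iter_sse_events(sample)))
```

The returned ID can then be sent back as the `model` value of a follow-up request to continue the same agent session.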

curl -X POST "http://localhost:8010/v1/chat/completions" \
  -H "Content-Type: application/json" \
  -d '{
    "model": "sgr_agent",
    "messages": [{"role": "user", "content": "Research BMW X6 2025 prices in Russia"}],
    "stream": true,
    "max_tokens": 1500,
    "temperature": 0.4
  }'

When the agent needs clarification, it returns a unique agent ID in the streaming response model field. You can then continue the conversation using this agent ID.

curl -X POST "http://localhost:8010/v1/chat/completions" \
  -H "Content-Type: application/json" \
  -d '{
    "model": "sgr_agent",
    "messages": [{"role": "user", "content": "Research AI market trends"}],
    "stream": true,
    "max_tokens": 1500,
    "temperature": 0
  }'

The streaming response includes the agent ID in the model field:

{
  "model": "sgr_agent_b84d5a01-c394-4499-97be-dad6a5d2cb86",
  "choices": [{
    "delta": {
      "tool_calls": [{
        "function": {
          "name": "clarification",
          "arguments": "{\"questions\":[\"Which specific AI market segment are you interested in (LLM, computer vision, robotics)?\", \"What time period should I focus on (2024, next 5 years)?\", \"Are you looking for global trends or specific geographic regions?\", \"Do you need technical analysis or business/investment perspective?\"]}"
        }
      }]
    }
  }]
}

Continue the conversation by passing the agent ID as the model:

curl -X POST "http://localhost:8010/v1/chat/completions" \
  -H "Content-Type: application/json" \
  -d '{
    "model": "sgr_agent_b84d5a01-c394-4499-97be-dad6a5d2cb86",
    "messages": [{"role": "user", "content": "Focus on LLM market trends for 2024-2025, global perspective, business analysis"}],
    "stream": true,
    "max_tokens": 1500,
    "temperature": 0
  }'

# Get all active agents
curl http://localhost:8010/agents

# Get specific agent state
curl http://localhost:8010/agents/{agent_id}/state

# Direct clarification endpoint
curl -X POST "http://localhost:8010/agents/{agent_id}/provide_clarification" \
  -H "Content-Type: application/json" \
  -d '{
    "messages": [{"role": "user", "content": "Focus on luxury models only"}],
    "stream": true
  }'

The following diagram shows the complete SGR agent workflow with interruption and clarification support:

sequenceDiagram

participant Client

participant API as FastAPI Server

participant Agent as SGR Agent

participant LLM as LLM

participant Tools as Research Tools

Note over Client, Tools: SGR Deep Research - Agent Workflow

Client->>API: POST /v1/chat/completions<br/>{"model": "sgr_agent", "messages": [...]}

API->>Agent: Create new SGR Agent<br/>with unique ID

Note over Agent: State: INITED

Agent->>Agent: Initialize context<br/>and conversation history

loop SGR Reasoning Loop (max 6 steps)

Agent->>Agent: Prepare tools based on<br/>current context limits

Agent->>LLM: Structured Output Request<br/>with NextStep schema

LLM-->>API: Streaming chunks

API-->>Client: SSE stream with<br/>agent_id in model field

LLM->>Agent: Parsed NextStep result

alt Tool: Clarification

Note over Agent: State: WAITING_FOR_CLARIFICATION

Agent->>Tools: Execute clarification tool

Tools->>API: Return clarifying questions

API-->>Client: Stream clarification questions

Client->>API: POST /v1/chat/completions<br/>{"model": "agent_id", "messages": [...]}

API->>Agent: provide_clarification()

Note over Agent: State: RESEARCHING

Agent->>Agent: Add clarification to context

else Tool: GeneratePlan

Agent->>Tools: Execute plan generation

Tools->>Agent: Research plan created

else Tool: WebSearch

Agent->>Tools: Execute web search

Tools->>Tools: Tavily API call

Tools->>Agent: Search results + sources

Agent->>Agent: Update context with sources

else Tool: AdaptPlan

Agent->>Tools: Execute plan adaptation

Tools->>Agent: Updated research plan

else Tool: CreateReport

Agent->>Tools: Execute report creation

Tools->>Tools: Generate comprehensive<br/>report with citations

Tools->>Agent: Final research report

else Tool: ReportCompletion

Note over Agent: State: COMPLETED

Agent->>Tools: Execute completion

Tools->>Agent: Task completion status

end

Agent->>Agent: Add tool result to<br/>conversation history

API-->>Client: Stream tool execution result

break Task Completed

Agent->>Agent: Break execution loop

end

end

Agent->>API: Finish streaming

API-->>Client: Close SSE stream

Note over Client, Tools: Agent remains accessible<br/>via agent_id for further clarifications

- 🤔 Clarification - clarifying questions when unclear

- 📋 Plan Generation - research plan creation

- 🔍 Web Search - internet information search

- 🔄 Plan Adaptation - plan adaptation based on results

- 📝 Report Creation - detailed report creation

- ✅ Completion - task completion
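The loop in the diagram can be sketched as a small state machine. This is a simplified illustration, not the project's implementation: the state names come from the diagram, while `run_agent`, the `decide` callback (standing in for the LLM's NextStep choice), and the tool name strings are assumptions. The behaviour when the step limit is reached is also a guess.

```python
from enum import Enum
from typing import Callable


class AgentState(Enum):
    INITED = "inited"
    RESEARCHING = "researching"
    WAITING_FOR_CLARIFICATION = "waiting_for_clarification"
    COMPLETED = "completed"


def run_agent(decide: Callable[[list[str]], str], max_steps: int = 6):
    """Run the reasoning loop until completion, a clarification pause, or the step limit."""
    state = AgentState.INITED
    history: list[str] = []
    for _ in range(max_steps):
        state = AgentState.RESEARCHING
        tool = decide(history)  # stands in for the structured-output NextStep call
        history.append(tool)
        if tool == "clarification":
            state = AgentState.WAITING_FOR_CLARIFICATION
            break  # pause until the client sends an answer
        if tool == "report_completion":
            state = AgentState.COMPLETED
            break
    return state, history


# A scripted "model" that plans, searches, reports, and then finishes.
script = iter(["generate_plan", "web_search", "create_report", "report_completion"])
state, history = run_agent(lambda _history: next(script))
print(state.name, history)
```

Keeping the step cap outside the model's control means the loop terminates even if the model never selects the completion tool.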

Reality Check: Function Calling works great on OpenAI/Anthropic (80+ BFCL scores) but fails dramatically on local models <32B parameters when using true ReAct agents with tool_mode="auto", where the model itself decides when to call tools.

BFCL Benchmark Results for Qwen3 Models:

- Qwen3-8B (FC): Only 15% accuracy in Agentic Web Search mode (BFCL benchmark)

- Qwen3-4B (FC): Only 2% accuracy in Agentic Web Search mode

- Qwen3-1.7B (FC): Only 4.5% accuracy in Agentic Web Search mode

- Even with native FC support, smaller models struggle with deciding WHEN to call tools

- Common result: {"tool_calls": null, "content": "Text instead of tool call"}

Note: Our team is currently working on creating a specialized benchmark for SGR vs ReAct performance on smaller models. Initial testing confirms that the SGR pipeline enables even smaller models to follow complex task workflows.
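The "text instead of tool call" result described above is easy to detect on the client side. The check below is a minimal sketch that assumes the assistant message is available as a plain dict shaped like that example; `missed_tool_call` is a hypothetical helper name.

```python
from typing import Any


def missed_tool_call(message: dict[str, Any]) -> bool:
    """True when the model replied with text although a tool call was expected."""
    return not message.get("tool_calls") and bool(message.get("content"))


print(missed_tool_call({"tool_calls": None, "content": "Text instead of tool call"}))  # → True
```

A caller could use this check to retry the request with a stricter tool selection setting or to fall back to a structured-output request.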

# Phase 1: Structured Output reasoning (100% reliable)
reasoning = model.generate(format="json_schema")
# {"action": "search", "query": "BMW X6 prices", "reason": "need current data"}

# Phase 2: Deterministic execution (no model uncertainty)
result = execute_plan(reasoning.actions)

| Model Size | Recommended Approach | FC Accuracy | Why Choose This |
|---|---|---|---|
| <14B | Pure SGR + Structured Output | 15-25% | FC practically unusable |
| 14-32B | SGR + FC hybrid | 45-65% | Best of both worlds |
| 32B+ | Native FC with SGR fallback | 85%+ | FC works reliably |
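The size table can be turned into a small lookup, for example when choosing an agent type from a deployment script. This is only a sketch: `recommend_approach` is a hypothetical helper, and treating exactly 14B as "14-32B" and exactly 32B as "32B+" is an assumption about how the table's boundaries should be read.

```python
def recommend_approach(params_billion: float) -> str:
    """Map a model size in billions of parameters to the table's recommendation."""
    if params_billion < 14:
        return "Pure SGR + Structured Output"
    if params_billion < 32:
        return "SGR + FC hybrid"
    return "Native FC with SGR fallback"


for size in (8, 14, 32):
    print(size, "->", recommend_approach(size))
```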

| Use Case | Best Approach | Why |
|---|---|---|
| Data analysis & structuring | SGR | Controlled reasoning with visibility |
| Document processing | SGR | Step-by-step analysis with justification |
| Local models (<32B) | SGR | Forces reasoning regardless of model limitations |
| Multi-agent systems | Function Calling | Native agent interruption support |
| External API interactions | Function Calling | Direct tool access pattern |
| Production monitoring | SGR | All reasoning steps visible and loggable |

Initial Testing Results:

- SGR enables even small models to follow structured workflows

- SGR pipeline provides deterministic execution regardless of model size

- SGR forces reasoning steps that ReAct leaves to model discretion

Planned Benchmarking:

- We're developing a comprehensive benchmark comparing SGR vs ReAct across model sizes

- Initial testing shows promising results for SGR on models as small as 4B parameters

- Full metrics and performance comparison coming soon

The optimal solution for many production systems is a hybrid approach:

- SGR for decision making - Determine which tools to use

- Function Calling for execution - Get data and provide agent-like experience

- SGR for final processing - Structure and format results

This hybrid approach works particularly well for models in the 14-32B range, where Function Calling works sometimes but isn't fully reliable.

Bottom Line: Don't force <32B models to pretend they're GPT-4o in ReAct-style agentic workflows with tool_mode="auto". Let them think structurally through SGR, then execute deterministically.
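The "think structurally, then execute deterministically" pattern can be sketched with the standard library alone. This is an illustration under stated assumptions, not the project's code: the `NextStep` fields mirror the JSON example given earlier, while `parse_next_step`, `execute`, the allowed action names, and the stub tools are hypothetical. A real system would obtain `raw_output` from a structured-output request rather than a hard-coded string.

```python
import json
from dataclasses import dataclass
from typing import Callable


@dataclass
class NextStep:
    action: str  # which tool to run
    query: str   # argument passed to the tool
    reason: str  # the model's stated justification


ALLOWED_ACTIONS = {"search", "report"}


def parse_next_step(raw: str) -> NextStep:
    """Phase 1: validate the model's JSON against the expected schema."""
    data = json.loads(raw)
    step = NextStep(**{key: str(data[key]) for key in ("action", "query", "reason")})
    if step.action not in ALLOWED_ACTIONS:
        raise ValueError(f"unknown action: {step.action}")
    return step


# Phase 2: a plain dispatch table; no model call happens here.
TOOLS: dict[str, Callable[[str], str]] = {
    "search": lambda query: f"search results for {query!r}",
    "report": lambda query: f"report about {query!r}",
}


def execute(step: NextStep) -> str:
    """Run the tool selected in phase 1."""
    return TOOLS[step.action](step.query)


# Stand-in for a structured-output response from the model.
raw_output = '{"action": "search", "query": "BMW X6 prices", "reason": "need current data"}'
print(execute(parse_next_step(raw_output)))  # → search results for 'BMW X6 prices'
```

Because the only model-dependent part is the validated JSON, every decision can be logged and replayed without calling the model again.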

- Create config.yaml from template:

cp config.yaml.example config.yaml

- Configure API keys:

# SGR Research Agent - Configuration Template
# Production-ready configuration for Schema-Guided Reasoning
# Copy this file to config.yaml and fill in your API keys

# OpenAI API Configuration
openai:
  api_key: "your-openai-api-key-here"  # Required: Your OpenAI API key
  base_url: ""  # Optional: Alternative URL (e.g., for proxy LiteLLM/vLLM)
  model: "gpt-4o-mini"  # Model to use
  max_tokens: 8000  # Maximum number of tokens
  temperature: 0.4  # Generation temperature (0.0-1.0)
  proxy: ""  # Example: "socks5://127.0.0.1:1081" or "http://127.0.0.1:8080" or leave empty for no proxy

# Tavily Search Configuration
tavily:
  api_key: "your-tavily-api-key-here"  # Required: Your Tavily API key
  api_base_url: "https://api.tavily.com"  # Tavily API base URL

# Search Settings
search:
  max_results: 10  # Maximum number of search results

# Scraping Settings
scraping:
  enabled: false  # Enable full text scraping of found pages
  max_pages: 5  # Maximum pages to scrape per search
  content_limit: 1500  # Character limit for full content per source

# Execution Settings
execution:
  max_steps: 6  # Maximum number of execution steps
  reports_dir: "reports"  # Directory for saving reports
  logs_dir: "logs"  # Directory for saving logs

# Prompts Settings
prompts:
  prompts_dir: "prompts"  # Directory with prompts
  tool_function_prompt_file: "tool_function_prompt.txt"  # Tool function prompt file
  system_prompt_file: "system_prompt.txt"  # System prompt file

# Custom host and port
python sgr_deep_research --host 127.0.0.1 --port 8080

| Agent Model | Description |
|---|---|
| `sgr-agent` | Pure SGR (Schema-Guided Reasoning) |
| `sgr-tools-agent` | SGR + Function Calling hybrid |
| `sgr-auto-tools-agent` | SGR + Auto Function Calling |
| `sgr-so-tools-agent` | SGR + Structured Output |
| `tools-agent` | Pure Function Calling |

Get the list of available agent models:

curl http://localhost:8010/v1/models

Research reports are automatically saved to the reports/ directory in Markdown format:

reports/YYYYMMDD_HHMMSS_Task_Name.md

- 📋 Executive Summary - Key insights overview

- 🔍 Technical Analysis - Detailed findings with citations

- 📊 Key Findings - Main conclusions

- 📎 Sources - All reference links

See docs/example_report.md for a complete sample of SGR research output.
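The `reports/YYYYMMDD_HHMMSS_Task_Name.md` pattern can be reproduced as follows. This is a sketch, not the project's code: `report_path` is a hypothetical helper, and replacing every run of non-alphanumeric characters with an underscore is an assumption about how task names are sanitized.

```python
import re
from datetime import datetime
from pathlib import Path


def report_path(task_name: str, when: datetime, reports_dir: str = "reports") -> Path:
    """Build a report path of the form reports/YYYYMMDD_HHMMSS_Task_Name.md."""
    stamp = when.strftime("%Y%m%d_%H%M%S")
    # Assumed sanitization rule: collapse anything non-alphanumeric into "_".
    safe_name = re.sub(r"[^A-Za-z0-9]+", "_", task_name).strip("_")
    return Path(reports_dir) / f"{stamp}_{safe_name}.md"


path = report_path("BMW X6 2025 prices", datetime(2025, 1, 31, 9, 5, 0))
print(path.as_posix())  # → reports/20250131_090500_BMW_X6_2025_prices.md
```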

🛠️ Advanced Integration Examples - Production-ready code for streaming, monitoring & state management

import httpx

async def research_query(query: str):
    async with httpx.AsyncClient() as client:
        async with client.stream(
            "POST",
            "http://localhost:8010/v1/chat/completions",
            json={"messages": [{"role": "user", "content": query}], "stream": True},
        ) as response:
            async for chunk in response.aiter_text():
                print(chunk, end="")

curl -N -X POST "http://localhost:8010/v1/chat/completions" \
  -H "Content-Type: application/json" \
  -d '{
    "messages": [{"role": "user", "content": "Research current AI trends"}],
    "stream": true
  }'

import httpx

async def monitor_agent(agent_id: str):
    async with httpx.AsyncClient() as client:
        response = await client.get(f"http://localhost:8010/agents/{agent_id}/state")
        state = response.json()
        print(f"Task: {state['task']}")
        print(f"State: {state['state']}")
        print(f"Searches used: {state['searches_used']}")
        print(f"Sources found: {state['sources_count']}")

The SGR system excels at various research scenarios:

- Market Research: "Analyze BMW X6 2025 pricing across European markets"

- Technology Trends: "Research current developments in quantum computing"

- Competitive Analysis: "Compare features of top 5 CRM systems in 2024"

- Industry Reports: "Investigate renewable energy adoption in Germany"

Our team is actively working on several exciting enhancements to the SGR Deep Research platform:

- Implementing a hybrid SGR+FC mode directly in the current functionality

- Allowing seamless switching between SGR and Function Calling based on model capabilities

- Optimizing performance for mid-range models (14-32B parameters)

- Developing a specialized benchmark suite for comparing SGR vs ReAct approaches

- Testing across various model sizes and architectures

- Measuring performance, accuracy, and reliability metrics

- Adding support for Model Context Protocol (MCP) functionality

- Standardizing agent tooling and reasoning interfaces

- Enhancing interoperability with other agent frameworks

We welcome contributions from the community! SGR Deep Research is an open-source project designed as a production-ready service with extensible architecture.

- Fork the repository

- Create a feature branch

git checkout -b feature/your-feature-name

- Make your changes

- Test thoroughly

cd src
uv sync
uv run python sgr_deep_research  # Test your changes

- Submit a pull request

- 🧠 New reasoning schemas for specialized research domains

- 🔍 Additional search providers (Google, Bing, etc.)

- 🛠️ Tool integrations (databases, APIs, file systems)

- 📊 Enhanced reporting formats (PDF, HTML, structured data)

- 🔧 Performance optimizations and caching strategies

🧠 Production-ready Schema-Guided Reasoning for automated research!

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for sgr-deep-research

Similar Open Source Tools

sgr-deep-research

This repository contains a deep learning research project focused on natural language processing tasks. It includes implementations of various state-of-the-art models and algorithms for text classification, sentiment analysis, named entity recognition, and more. The project aims to provide a comprehensive resource for researchers and developers interested in exploring deep learning techniques for NLP applications.

Neosgenesis

Neogenesis System is an advanced AI decision-making framework that enables agents to 'think about how to think'. It implements a metacognitive approach with real-time learning, tool integration, and multi-LLM support, allowing AI to make expert-level decisions in complex environments. Key features include metacognitive intelligence, tool-enhanced decisions, real-time learning, aha-moment breakthroughs, experience accumulation, and multi-LLM support.

quantalogic

QuantaLogic is a ReAct framework for building advanced AI agents that seamlessly integrates large language models with a robust tool system. It aims to bridge the gap between advanced AI models and practical implementation in business processes by enabling agents to understand, reason about, and execute complex tasks through natural language interaction. The framework includes features such as ReAct Framework, Universal LLM Support, Secure Tool System, Real-time Monitoring, Memory Management, and Enterprise Ready components.

ai-counsel

AI Counsel is a true deliberative consensus MCP server where AI models engage in actual debate, refine positions across multiple rounds, and converge with voting and confidence levels. It features two modes (quick and conference), mixed adapters (CLI tools and HTTP services), auto-convergence, structured voting, semantic grouping, model-controlled stopping, evidence-based deliberation, local model support, data privacy, context injection, semantic search, fault tolerance, and full transcripts. Users can run local and cloud models to deliberate on various questions, ground decisions in reality by querying code and files, and query past decisions for analysis. The tool is designed for critical technical decisions requiring multi-model deliberation and consensus building.

ctinexus

CTINexus is a framework that leverages optimized in-context learning of large language models to automatically extract cyber threat intelligence from unstructured text and construct cybersecurity knowledge graphs. It processes threat intelligence reports to extract cybersecurity entities, identify relationships between security concepts, and construct knowledge graphs with interactive visualizations. The framework requires minimal configuration, with no extensive training data or parameter tuning needed.

FDAbench

FDABench is a benchmark tool designed for evaluating data agents' reasoning ability over heterogeneous data in analytical scenarios. It offers 2,007 tasks across various data sources, domains, difficulty levels, and task types. The tool provides ready-to-use data agent implementations, a DAG-based evaluation system, and a framework for agent-expert collaboration in dataset generation. Key features include data agent implementations, comprehensive evaluation metrics, multi-database support, different task types, extensible framework for custom agent integration, and cost tracking. Users can set up the environment using Python 3.10+ on Linux, macOS, or Windows. FDABench can be installed with a one-command setup or manually. The tool supports API configuration for LLM access and offers quick start guides for database download, dataset loading, and running examples. It also includes features like dataset generation using the PUDDING framework, custom agent integration, evaluation metrics like accuracy and rubric score, and a directory structure for easy navigation.

mcp-documentation-server

The mcp-documentation-server is a lightweight server application designed to serve documentation files for projects. It provides a simple and efficient way to host and access project documentation, making it easy for team members and stakeholders to find and reference important information. The server supports various file formats, such as markdown and HTML, and allows for easy navigation through the documentation. With mcp-documentation-server, teams can streamline their documentation process and ensure that project information is easily accessible to all involved parties.

pocketgroq

PocketGroq is a tool that provides advanced functionalities for text generation, web scraping, web search, and AI response evaluation. It includes features like an Autonomous Agent for answering questions, web crawling and scraping capabilities, enhanced web search functionality, and flexible integration with Ollama server. Users can customize the agent's behavior, evaluate responses using AI, and utilize various methods for text generation, conversation management, and Chain of Thought reasoning. The tool offers comprehensive methods for different tasks, such as initializing RAG, error handling, and tool management. PocketGroq is designed to enhance development processes and enable the creation of AI-powered applications with ease.

dspy.rb

DSPy.rb is a Ruby framework for building reliable LLM applications using composable, type-safe modules. It enables developers to define typed signatures and compose them into pipelines, offering a more structured approach compared to traditional prompting. The framework embraces Ruby conventions and adds innovations like CodeAct agents and enhanced production instrumentation, resulting in scalable LLM applications that are robust and efficient. DSPy.rb is actively developed, with a focus on stability and real-world feedback through the 0.x series before reaching a stable v1.0 API.

OpenViking

OpenViking is an open-source Context Database designed specifically for AI Agents. It aims to solve challenges in agent development by unifying memories, resources, and skills in a filesystem management paradigm. The tool offers tiered context loading, directory recursive retrieval, visualized retrieval trajectory, and automatic session management. Developers can interact with OpenViking like managing local files, enabling precise context manipulation and intuitive traceable operations. The tool supports various model services like OpenAI and Volcengine, enhancing semantic retrieval and context understanding for AI Agents.

DeepResearch

Tongyi DeepResearch is an agentic large language model with 30.5 billion total parameters, designed for long-horizon, deep information-seeking tasks. It demonstrates state-of-the-art performance across various search benchmarks. The model features a fully automated synthetic data generation pipeline, large-scale continual pre-training on agentic data, end-to-end reinforcement learning, and compatibility with two inference paradigms. Users can download the model directly from HuggingFace or ModelScope. The repository also provides benchmark evaluation scripts and information on the Deep Research Agent Family.

NadirClaw

NadirClaw is a powerful open-source tool designed for web scraping and data extraction. It provides a user-friendly interface for extracting data from websites with ease. With NadirClaw, users can easily scrape text, images, and other content from web pages for various purposes such as data analysis, research, and automation. The tool offers flexibility and customization options to cater to different scraping needs, making it a versatile solution for extracting data from the web. Whether you are a data scientist, researcher, or developer, NadirClaw can streamline your data extraction process and help you gather valuable insights from online sources.

dexto

Dexto is a lightweight runtime for creating and running AI agents that turn natural language into real-world actions. It serves as the missing intelligence layer for building AI applications, standalone chatbots, or as the reasoning engine inside larger products. Dexto features a powerful CLI and Web UI for running AI agents, supports multiple interfaces, allows hot-swapping of LLMs from various providers, connects to remote tool servers via the Model Context Protocol, is config-driven with version-controlled YAML, offers production-ready core features, extensibility for custom services, and enables multi-agent collaboration via MCP and A2A.

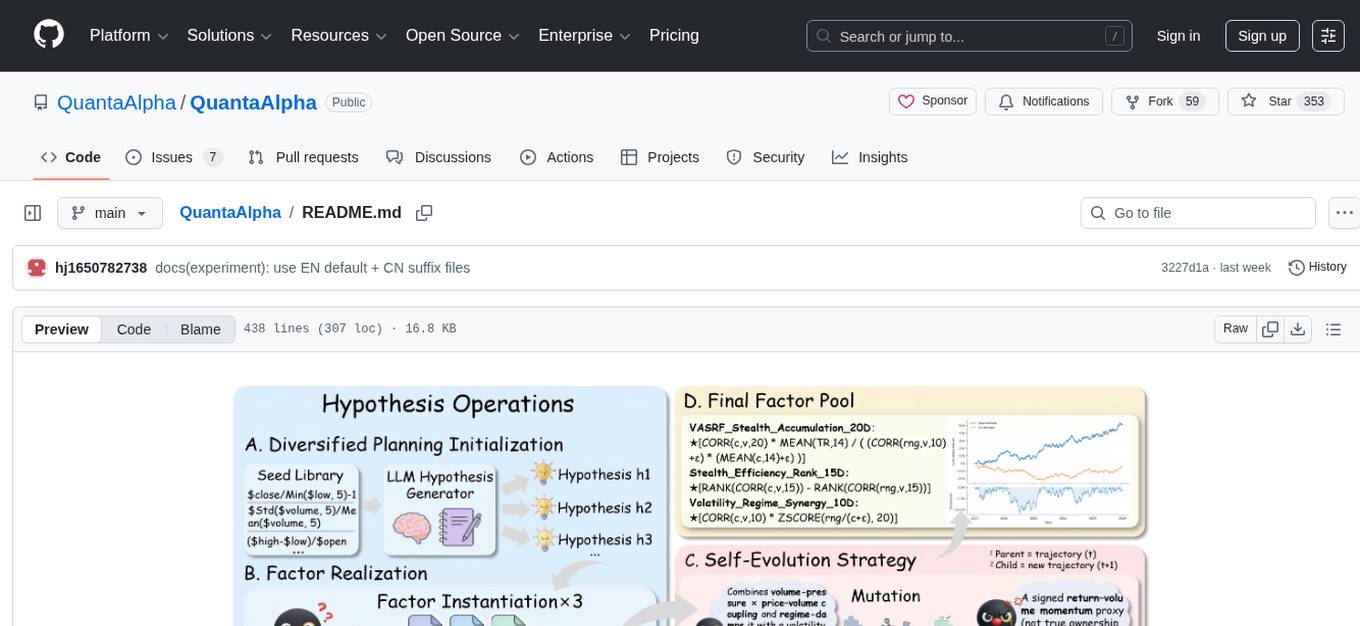

QuantaAlpha

QuantaAlpha is a framework designed for factor mining in quantitative alpha research. It combines LLM intelligence with evolutionary strategies to automatically mine, evolve, and validate alpha factors through self-evolving trajectories. The framework provides a trajectory-based approach with diversified planning initialization and structured hypothesis-code constraint. Users can describe their research direction and observe the automatic factor mining process. QuantaAlpha aims to transform how quantitative alpha factors are discovered by leveraging advanced technologies and self-evolving methodologies.

z-ai-sdk-python

Z.ai Open Platform Python SDK is the official Python SDK for Z.ai's large model open interface, providing developers with easy access to Z.ai's open APIs. The SDK offers core features like chat completions, embeddings, video generation, audio processing, assistant API, and advanced tools. It supports various functionalities such as speech transcription, text-to-video generation, image understanding, and structured conversation handling. Developers can customize client behavior, configure API keys, and handle errors efficiently. The SDK is designed to simplify AI interactions and enhance AI capabilities for developers.

logicstamp-context

LogicStamp Context is a static analyzer that extracts deterministic component contracts from TypeScript codebases, providing structured architectural context for AI coding assistants. It helps AI assistants understand architecture by extracting props, hooks, and dependencies without implementation noise. The tool works with React, Next.js, Vue, Express, and NestJS, and is compatible with various AI assistants like Claude, Cursor, and MCP agents. It offers features like watch mode for real-time updates, breaking change detection, and dependency graph creation. LogicStamp Context is a security-first tool that protects sensitive data, runs locally, and is non-opinionated about architectural decisions.

For similar tasks

nlp-llms-resources

The 'nlp-llms-resources' repository is a comprehensive resource list for Natural Language Processing (NLP) and Large Language Models (LLMs). It covers a wide range of topics including traditional NLP datasets, data acquisition, libraries for NLP, neural networks, sentiment analysis, optical character recognition, information extraction, semantics, topic modeling, multilingual NLP, domain-specific LLMs, vector databases, ethics, costing, books, courses, surveys, aggregators, newsletters, papers, conferences, and societies. The repository provides valuable information and resources for individuals interested in NLP and LLMs.

adata

AData is a free and open-source A-share database that focuses on transaction-related data. It provides comprehensive data on stocks, including basic information, market data, and sentiment analysis. AData is designed to be easy to use and integrate with other applications, making it a valuable tool for quantitative trading and AI training.

PIXIU

PIXIU is a project designed to support the development, fine-tuning, and evaluation of Large Language Models (LLMs) in the financial domain. It includes components like FinBen, a Financial Language Understanding and Prediction Evaluation Benchmark, FIT, a Financial Instruction Dataset, and FinMA, a Financial Large Language Model. The project provides open resources, multi-task and multi-modal financial data, and diverse financial tasks for training and evaluation. It aims to encourage open research and transparency in the financial NLP field.

hezar

Hezar is an all-in-one AI library designed specifically for the Persian community. It brings together various AI models and tools, making it easy to use AI with just a few lines of code. The library seamlessly integrates with Hugging Face Hub, offering a developer-friendly interface and task-based model interface. In addition to models, Hezar provides tools like word embeddings, tokenizers, feature extractors, and more. It also includes supplementary ML tools for deployment, benchmarking, and optimization.

text-embeddings-inference

Text Embeddings Inference (TEI) is a toolkit for deploying and serving open source text embeddings and sequence classification models. TEI enables high-performance extraction for popular models like FlagEmbedding, Ember, GTE, and E5. It implements features such as no model graph compilation step, Metal support for local execution on Macs, small docker images with fast boot times, token-based dynamic batching, optimized transformers code for inference using Flash Attention, Candle, and cuBLASLt, Safetensors weight loading, and production-ready features like distributed tracing with Open Telemetry and Prometheus metrics.

CodeProject.AI-Server

CodeProject.AI Server is a standalone, self-hosted, fast, free, and open-source Artificial Intelligence microserver designed for any platform and language. It can be installed locally without the need for off-device or out-of-network data transfer, providing an easy-to-use solution for developers interested in AI programming. The server includes an HTTP REST API server, backend analysis services, and the source code, enabling users to perform various AI tasks locally without relying on external services or cloud computing. Current capabilities include object detection, face detection, scene recognition, sentiment analysis, and more, with ongoing feature expansions planned. The project aims to promote AI development, simplify AI implementation, focus on core use-cases, and leverage the expertise of the developer community.

spark-nlp

Spark NLP is a state-of-the-art Natural Language Processing library built on top of Apache Spark. It provides simple, performant, and accurate NLP annotations for machine learning pipelines that scale easily in a distributed environment. Spark NLP comes with 36000+ pretrained pipelines and models in more than 200 languages. It offers tasks such as Tokenization, Word Segmentation, Part-of-Speech Tagging, Named Entity Recognition, Dependency Parsing, Spell Checking, Text Classification, Sentiment Analysis, Token Classification, Machine Translation, Summarization, Question Answering, Table Question Answering, Text Generation, Image Classification, Image to Text (captioning), Automatic Speech Recognition, Zero-Shot Learning, and many more NLP tasks. Spark NLP is the only open-source NLP library in production that offers state-of-the-art transformers such as BERT, CamemBERT, ALBERT, ELECTRA, XLNet, DistilBERT, RoBERTa, DeBERTa, XLM-RoBERTa, Longformer, ELMO, Universal Sentence Encoder, Llama-2, M2M100, BART, Instructor, E5, Google T5, MarianMT, OpenAI GPT2, Vision Transformers (ViT), OpenAI Whisper, and many more, not only to Python and R but also to the JVM ecosystem (Java, Scala, and Kotlin), at scale by extending Apache Spark natively.

scikit-llm

Scikit-LLM is a tool that seamlessly integrates powerful language models like ChatGPT into scikit-learn for enhanced text analysis tasks. It allows users to leverage large language models for various text analysis applications within the familiar scikit-learn framework. The tool simplifies the process of incorporating advanced language processing capabilities into machine learning pipelines, enabling users to benefit from the latest advancements in natural language processing.

For similar jobs

promptflow

**Prompt flow** is a suite of development tools designed to streamline the end-to-end development cycle of LLM-based AI applications, from ideation, prototyping, testing, evaluation to production deployment and monitoring. It makes prompt engineering much easier and enables you to build LLM apps with production quality.

deepeval

DeepEval is a simple-to-use, open-source LLM evaluation framework specialized for unit testing LLM outputs. It incorporates various metrics such as G-Eval, hallucination, answer relevancy, RAGAS, etc., and runs locally on your machine for evaluation. It provides a wide range of ready-to-use evaluation metrics, allows for creating custom metrics, integrates with any CI/CD environment, and enables benchmarking LLMs on popular benchmarks. DeepEval is designed for evaluating RAG and fine-tuning applications, helping users optimize hyperparameters, prevent prompt drifting, and transition from OpenAI to hosting their own Llama2 with confidence.

MegaDetector

MegaDetector is an AI model that identifies animals, people, and vehicles in camera trap images (which also makes it useful for eliminating blank images). This model is trained on several million images from a variety of ecosystems. MegaDetector is just one of many tools that aim to make conservation biologists more efficient with AI. If you want to learn about other ways to use AI to accelerate camera trap workflows, check out our survey of the field, affectionately titled "Everything I know about machine learning and camera traps".

leapfrogai

LeapfrogAI is a self-hosted AI platform designed to be deployed in air-gapped, resource-constrained environments. It brings sophisticated AI solutions to these environments by hosting all the necessary components of an AI stack, including vector databases, model backends, API, and UI. LeapfrogAI's API closely matches that of OpenAI, allowing tools built for OpenAI/ChatGPT to function seamlessly with a LeapfrogAI backend. It provides several backends for various use cases, including llama-cpp-python, whisper, text-embeddings, and vllm. LeapfrogAI leverages Chainguard's apko to harden base Python images, ensuring the latest supported Python versions are used by the other components of the stack. The LeapfrogAI SDK provides a standard set of protobufs and Python utilities for implementing backends and gRPC. LeapfrogAI offers UI options for common use-cases like chat, summarization, and transcription. It can be deployed and run locally via UDS and Kubernetes, built out using Zarf packages. LeapfrogAI is supported by a community of users and contributors, including Defense Unicorns, Beast Code, Chainguard, Exovera, Hypergiant, Pulze, SOSi, United States Navy, United States Air Force, and United States Space Force.

llava-docker

This Docker image for LLaVA (Large Language and Vision Assistant) provides a convenient way to run LLaVA locally or on RunPod. LLaVA is a powerful AI tool that combines natural language processing and computer vision capabilities. With this Docker image, you can easily access LLaVA's functionalities for various tasks, including image captioning, visual question answering, text summarization, and more. The image comes pre-installed with LLaVA v1.2.0, Torch 2.1.2, xformers 0.0.23.post1, and other necessary dependencies. You can customize the model used by setting the MODEL environment variable. The image also includes a Jupyter Lab environment for interactive development and exploration. Overall, this Docker image offers a comprehensive and user-friendly platform for leveraging LLaVA's capabilities.

carrot

The 'carrot' repository on GitHub provides a list of free and user-friendly ChatGPT mirror sites for easy access. The repository includes sponsored sites offering various GPT models and services. Users can find and share sites, report errors, and access stable and recommended sites for ChatGPT usage. The repository also includes a detailed list of ChatGPT sites, their features, and accessibility options, making it a valuable resource for ChatGPT users seeking free and unlimited GPT services.

TrustLLM

TrustLLM is a comprehensive study of trustworthiness in LLMs, including principles for different dimensions of trustworthiness, an established benchmark, evaluation and analysis of trustworthiness for mainstream LLMs, and discussion of open challenges and future directions. Specifically, we first propose a set of principles for trustworthy LLMs that span eight different dimensions. Based on these principles, we further establish a benchmark across six dimensions including truthfulness, safety, fairness, robustness, privacy, and machine ethics. We then present a study evaluating 16 mainstream LLMs in TrustLLM, consisting of over 30 datasets. The document explains how to use the trustllm Python package to help you assess the trustworthiness of your LLM more quickly. For more details about TrustLLM, please refer to the project website.

AI-YinMei

AI-YinMei is a development tool for AI virtual anchors (VTubers), targeting NVIDIA GPUs. It supports knowledge-base chat through a complete LLM stack ([fastgpt] + [one-api] + [Xinference]); integration with bilibili live streams, replying to bullet comments (danmaku) and greeting viewers who enter the room; speech synthesis via Microsoft edge-tts, Bert-VITS2, and GPT-SoVITS; expression control through Vtuber Studio; image generation with stable-diffusion-webui, output to an OBS live room; NSFW detection for generated images (public-NSFW-y-distinguish); search and image search via duckduckgo (requires a proxy or VPN) and image search via Baidu (no proxy required); an AI reply chat box and a playlist, both as HTML plug-ins; and AI singing with Auto-Convert-Music. It also supports dancing, expression video playback, head-touching and gift-smashing actions, automatic dancing when singing starts, an automatic swaying loop during chat and singing, multi-scene switching, background music switching, and automatic day/night scene switching. Singing and painting can be left open, letting the AI decide automatically based on the content.