adata

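Free, open-source A-share quantitative trading database. Focused on A-shares, focused on quant, growing toward the sun; open, clean, sustained, and powered by Ai (爱, love). Built for individual quantitative trading: defend the 3000-point level and cherish the opportunity at the bottom... [stock data, stock market data, stock quant data, stock trading data, K-line market data, stock concept data, stock data APIs, market data APIs, quant trading data] [multi-source data fusion, dynamic proxy configuration, high data availability]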

Stars: 1880

AData is a free and open-source A-share database that focuses on transaction-related data. It provides comprehensive data on stocks, including basic information, market data, and sentiment analysis. AData is designed to be easy to use and integrate with other applications, making it a valuable tool for quantitative trading and AI training.

README:

0. Introduction

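Focused on A-shares, focused on quant, growing toward the sun; open, clean, sustained, and powered by Ai (爱, love).

Focused on stock market data. To keep the data highly available, multiple data sources are fused and switched between.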
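Multi-source switching of this kind can be pictured as a failover loop: try one source and fall back to the next on error. The sketch below only illustrates the idea; it is not AData's internal code, and `sina_quotes` / `tencent_quotes` are hypothetical stand-ins for two providers (Sina and Tencent are the two sources the data list names for real-time quotes).

```python
from typing import Callable, Sequence, TypeVar

T = TypeVar("T")


def fetch_with_failover(sources: Sequence[Callable[[], T]]) -> T:
    """Try each data source in order and return the first successful result.

    `sources` are zero-argument callables, one per provider (hypothetical).
    Illustrative sketch only, not AData's own implementation.
    """
    errors = []
    for source in sources:
        try:
            return source()
        except Exception as exc:  # this source failed: record why, try the next
            errors.append(exc)
    raise RuntimeError(f"all {len(sources)} data sources failed: {errors}")


# Hypothetical providers: the first is "down", the second answers.
def sina_quotes():
    raise ConnectionError("sina unavailable")


def tencent_quotes():
    return {"000001": 12.38}


print(fetch_with_failover([sina_quotes, tencent_quotes]))
```

Keeping the sources as plain callables means the ordering (which provider is tried first) is just the order of the list.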

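Goal: support the market-data needs of individual quant traders. Many hands make light work; you are welcome to join.

The market is cold; generating heat is not easy, and keeping at it is even harder. If this project helps you, click ⭐Star at the top right. Thanks for your support ^_^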

1. Quick Start

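```shell
# First-time install
pip install adata

# Install from a specific mirror (plain-HTTP index, so pip needs --trusted-host)
pip install adata -i http://mirrors.aliyun.com/pypi/simple/ --trusted-host mirrors.aliyun.com

# Upgrade
pip install -U adata

# Upgrade from a specific mirror
pip install -U adata -i http://mirrors.aliyun.com/pypi/simple/ --trusted-host mirrors.aliyun.com
```

Note: mirrors in mainland China may lag behind when syncing; you can use the official index instead. Available indexes:

- Alibaba Cloud [recommended]: http://mirrors.aliyun.com/pypi/simple/
- Tsinghua University: https://pypi.tuna.tsinghua.edu.cn/simple
- Official index: https://pypi.org/simple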

Get all stock codes

```python
import adata

res_df = adata.stock.info.all_code()
print(res_df)
```

Example output:

```
     stock_code short_name exchange
0        001324       N长青科       SZ
1        301361       众智科技       SZ
2        300514        友讯达       SZ
...         ...        ...      ...
5490     300367        网力退       SZ
5491     300372        欣泰退       SZ
5492     300431        暴风退       SZ

[5493 rows x 3 columns]
```

After obtaining the stock codes, pass one as the `stock_code` parameter to query that stock's market data.
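Before querying quotes you may want to drop delisted stocks. In the sample output above, delisted stocks carry the suffix 退 in `short_name` (e.g. 网力退). A minimal sketch that works on plain records, which you could obtain from the DataFrame with `res_df.to_dict("records")`; treating a trailing 退 as "delisted" is an assumption drawn from the sample rows, not a documented AData rule.

```python
def drop_delisted(records, exchange=None):
    """Keep stocks whose short name does not end with '退'.

    `records` is a list of dicts with the columns from the sample output
    (stock_code, short_name, exchange). Optionally keep a single exchange.
    """
    kept = []
    for row in records:
        if row["short_name"].endswith("退"):
            continue  # assumption: a trailing 退 marks a delisted stock
        if exchange is not None and row["exchange"] != exchange:
            continue
        kept.append(row)
    return kept


# Rows copied from the sample output above.
sample = [
    {"stock_code": "001324", "short_name": "N长青科", "exchange": "SZ"},
    {"stock_code": "301361", "short_name": "众智科技", "exchange": "SZ"},
    {"stock_code": "300367", "short_name": "网力退", "exchange": "SZ"},
]
codes = [row["stock_code"] for row in drop_delisted(sample)]
print(codes)  # ['001324', '301361']
```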

```python
import adata

# k_type: K-line type: 1 = daily, 2 = weekly, 3 = monthly; default 1 (daily)
res_df = adata.stock.market.get_market(stock_code='000001', k_type=1, start_date='2021-01-01')
print(res_df)
```

Example output:

```
              trade_time   open  close  ...  pre_close stock_code  trade_date
0    2021-01-04 00:00:00  18.69  18.19  ...      18.93     000001  2021-01-04
1    2021-01-05 00:00:00  17.99  17.76  ...      18.19     000001  2021-01-05
2    2021-01-06 00:00:00  17.67  19.15  ...      17.76     000001  2021-01-06
..                   ...    ...    ...  ...        ...        ...         ...
573  2023-05-18 00:00:00  12.57  12.49  ...      12.49     000001  2023-05-18
574  2023-05-19 00:00:00  12.43  12.34  ...      12.49     000001  2023-05-19
575  2023-05-22 00:00:00  12.31  12.38  ...      12.34     000001  2023-05-22

[576 rows x 13 columns]
```

For other data, refer to the data list below and the data dictionary documentation to find the corresponding function, then read its docstring to use it correctly.
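The sample `get_market()` output includes both `close` and `pre_close`, so a daily percentage change follows directly. A minimal sketch on plain records (e.g. from `res_df.to_dict("records")`), assuming both fields are numeric; the columns hidden behind `...` may already contain a change field, so check the data dictionary before recomputing it.

```python
def add_pct_change(records):
    """Return copies of K-line rows with a 'pct_chg' field in percent.

    pct_chg = (close - pre_close) / pre_close * 100, rounded to 2 decimals.
    The input rows are not modified.
    """
    result = []
    for row in records:
        change = (row["close"] - row["pre_close"]) / row["pre_close"] * 100
        result.append({**row, "pct_chg": round(change, 2)})
    return result


# First three rows of the sample output above (other columns omitted).
sample = [
    {"trade_date": "2021-01-04", "close": 18.19, "pre_close": 18.93},
    {"trade_date": "2021-01-05", "close": 17.76, "pre_close": 18.19},
    {"trade_date": "2021-01-06", "close": 19.15, "pre_close": 17.76},
]
for row in add_pct_change(sample):
    print(row["trade_date"], row["pct_chg"])
```

On the DataFrame itself, the vectorised equivalent would be `(res_df["close"] - res_df["pre_close"]) / res_df["pre_close"] * 100`, again assuming numeric columns.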

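The project relies on public interfaces, which may impose access restrictions, so a proxy setting has been added.

```python
import adata

# Set a proxy. The proxy is a global setting; if it stops working, set it again.
# Parameters: ip, proxy_url
adata.proxy(is_proxy=True, ip='60.167.21.27:1133')
res_df = adata.stock.info.all_code()
print(res_df)
```

Notes:

- proxy_url: a URL from which proxy IPs are fetched; use either ip or proxy_url, not both.
- With proxy_url, an IP is fetched on every request; to conserve IP resources, a self-hosted proxy pool is recommended.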
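Because the proxy is global and can simply be set again once it stops working, a retry loop can swap in a fresh IP between attempts. A minimal sketch under assumptions: `next_proxy_ip` stands for your own proxy pool (hypothetical), and the only AData call it is meant to wrap is the `adata.proxy(is_proxy=True, ip=...)` form shown above. The demo uses stand-in functions and documentation-range IPs so it runs offline.

```python
from typing import Callable, TypeVar

T = TypeVar("T")


def call_with_proxy_retry(
    fetch: Callable[[], T],
    next_proxy_ip: Callable[[], str],
    set_proxy: Callable[[str], None],
    retries: int = 3,
) -> T:
    """Call `fetch`; before each retry, install a fresh proxy IP globally."""
    last_error = None
    for attempt in range(retries):
        if attempt > 0:
            set_proxy(next_proxy_ip())  # previous proxy presumed dead: replace it
        try:
            return fetch()
        except Exception as exc:
            last_error = exc
    raise RuntimeError(f"request failed after {retries} attempts") from last_error


# Offline demo: the first call fails, the second succeeds.
pool = iter(["192.0.2.1:8080", "192.0.2.2:8080"])  # hypothetical proxy pool
used = []
calls = {"n": 0}


def flaky_fetch():
    calls["n"] += 1
    if calls["n"] == 1:
        raise ConnectionError("proxy expired")
    return "ok"


print(call_with_proxy_retry(flaky_fetch, lambda: next(pool), used.append), used)
```

In real use you might pass `fetch=adata.stock.info.all_code` and `set_proxy=lambda ip: adata.proxy(is_proxy=True, ip=ip)`; keeping all three callables as parameters keeps the retry logic testable without network access.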

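2. Data List

Below is the data list for the latest version with the related APIs. For details and parameters, see the data dictionary documentation.

| Data | API | Description | Notes |
|---|---|---|---|
| A-share codes | stock.info.all_code() | All A-share code information | |
| Share capital | stock.info.get_stock_shares() | Share capital information of a single stock | Source: East Money |
| Shenwan level-1/level-2 industry | stock.info.get_industry_sw() | Shenwan (SW) level-1 and level-2 industry of a single stock | Source: Baidu |
| Concepts | | | |
| Source: Tonghuashun (THS) | | | |
| Concept codes | stock.info.all_concept_code_ths() | All A-share concept codes (THS) | Source: THS public data |
| Concept constituents | stock.info.concept_constituent_ths() | Constituent stocks of a THS concept index (THS) | Note: results contain only stock code and short name; can be queried by concept name |
| Concepts of a stock | stock.info.get_concept_ths() | Concept sectors a single stock belongs to | F10 |
| Source: East Money | | | |
| Concept codes | stock.info.all_concept_code_east() | All A-share concept codes (East Money) | Source: East Money |
| Concept constituents | stock.info.concept_constituent_east() | Constituent stocks of an East Money concept index (East Money) | Note: results contain only stock code and short name; can be queried by concept name |
| Concepts of a stock | stock.info.get_concept_east() | Concept sectors a single stock belongs to | Core themes |
| Sectors of a stock | stock.info.get_plate_east() | Sectors a single stock belongs to | Covers 1. industry, 2. regional, 3. concept sectors; a combined concept API |
| Indices | | | |
| Index codes | stock.info.all_index_code() | All index codes in the A-share market | Source: THS; THS may re-encode some codes |
| Index constituents | stock.info.index_constituent() | Constituent list of an index | |
| Other | | | |
| Trading calendar | stock.info.trade_calendar() | Stock trading-day information | Source: Shenzhen Stock Exchange |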

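| Data | API | Description | Notes |
|---|---|---|---|
| Dividends | stock.market.get_dividend() | Dividend information of a single stock | |
| Stock quotes | stock.market.get_market() | Market data for a single stock: daily, weekly, monthly K-lines | |
| | stock.market.get_market_min() | Today's intraday quotes for a single stock | Current day only |
| Real-time quotes | stock.market.list_market_current() | Latest quotes for multiple stocks | Real-time; 2 data sources: Sina and Tencent |
| | stock.market.get_market_five() | Five-level order book for a single stock | Real-time; 2 data sources: Tencent and Baidu |
| | stock.market.get_market_bar() | Tick-by-tick trades for a single stock | Real-time; source: Baidu Gushitong |
| Concept quotes (THS) | stock.market.get_market_concept_ths() | Market data for a single concept: daily, weekly, monthly K-lines | For THS concept quotes, check whether you are passing an index code or a concept code; index codes start with 8 (index_code) |
| | stock.market.get_market_concept_min_ths() | THS concept quotes: today's intraday | Current day only |
| | stock.market.get_market_concept_current_ths() | Current THS concept quotes | Real-time |
| Concept quotes (East Money) | stock.market.get_market_concept_east() | Market data for a single concept: daily, weekly, monthly K-lines | For East Money concept quotes, index codes start with BK (index_code) |
| | stock.market.get_market_concept_min_east() | East Money concept quotes: today's intraday | Current day only |
| | stock.market.get_market_concept_current_east() | Current East Money concept quotes | Real-time |
| Index quotes | stock.market.get_market_index() | Index market data: daily, weekly, monthly K-lines | |
| | stock.market.get_market_index_min() | Index quotes: today's intraday | |
| | stock.market.get_market_index_current() | Current index quotes | Real-time |
| Stock capital flow | stock.market.get_capital_flow_min() | Today's intraday capital flow for a single stock | Latest real-time data |
| | stock.market.get_capital_flow() | Capital flow for a single stock | Historical daily data |
| Concept capital flow | stock.market.all_capital_flow_east() | Capital flow over the last N days for all East Money concepts | Last 1, 5, or 10 days; source: East Money |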

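Note: concepts and indices are essentially the same thing, so the related APIs and their results are consistent. A concept is an index defined by an individual vendor, while an index is defined by an official or authoritative institution; both are baskets of stocks.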
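The table notes distinguish the two concept-quote families by code prefix: THS index codes start with `8`, East Money codes start with `BK`. A minimal sketch that picks the matching function name from that rule; codes with any other prefix are simply rejected here, and the sample codes in the test are hypothetical.

```python
def concept_market_function(index_code: str) -> str:
    """Return the AData function name for a concept's K-line quotes.

    Rule taken from the data-list notes: "BK..." -> East Money,
    "8..." -> Tonghuashun (THS). Other prefixes are rejected in this sketch.
    """
    code = index_code.strip().upper()
    if code.startswith("BK"):
        return "get_market_concept_east"
    if code.startswith("8"):
        return "get_market_concept_ths"
    raise ValueError(f"unrecognised concept/index code: {index_code!r}")
```

A call could then look like `getattr(adata.stock.market, concept_market_function(code))(index_code=code)`; the keyword name `index_code` is taken from the table notes and should be checked against each function's docstring.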

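| Data | API | Description | Notes |
|---|---|---|---|
| Core financial data | stock.finance.get_core_index() | Core financial data of a single stock | Source: East Money. Detailed data from the three main financial statements is not provided for now |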

| Data | API | Description | Notes |
|---|---|---|---|
| ETF (exchange-traded) | fund.info.all_etf_exchange_traded_info() | All ETF information in the A-share market | Source: 1. East Money |

| Data | API | Description | Notes |
|---|---|---|---|
| ETF quotes | fund.market.get_market_etf() | ETF market data: daily, weekly, monthly K-lines | Source: THS |
| | fund.market.get_market_etf_min() | ETF quotes: today's intraday | |
| | fund.market.get_market_etf_current() | Current ETF quotes | Real-time |

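| Data | API | Description | Notes |
|---|---|---|---|
| Convertible bond codes | bond.info.all_convert_code() | All convertible bond codes in the A-share market | Source: 1. THS |
| Convertible bond quotes | bond.market.list_market_current() | Latest convertible bond quotes in the A-share market | Source: Sina |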

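| Data | API | Description | Notes |
|---|---|---|---|
| Share unlocks in the last month | sentiment.stock_lifting_last_month() | List of stock lock-up expirations in the last month | Source: 1. THS |
| Market-wide margin balance | sentiment.securities_margin() | Margin trading and securities lending balances for the whole market | Source: 1. East Money |
| Northbound capital: quotes | | | |
| | sentiment.north.north_flow_current() | Current inflow quotes for northbound capital (Shanghai/Shenzhen-Hong Kong Stock Connect) | Source: 1. East Money |
| | sentiment.north.north_flow_min() | Intraday northbound capital quotes | |
| | sentiment.north.north_flow() | Historical northbound capital inflows | |
| Popularity rankings | sentiment.hot.pop_rank_100_east() | East Money popularity top 100 | Source: East Money |
| | sentiment.hot.hot_rank_100_ths() | THS hot top 100 ranking | Source: THS |
| | sentiment.hot.hot_concept_20_ths() | THS hot concept sectors top 20 ranking | Source: THS |
| | sentiment.hot.list_a_list_daily() | Dragon-Tiger list (龙虎榜) | Source: East Money |
| | sentiment.get_a_list_info() | Dragon-Tiger list details for a single stock | Source: East Money |
| Other data scheduled | TODO | | If you have relevant resources, you are welcome to contribute |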

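3. Data Sources

| Data source | Sections | Description |
|---|---|---|
| Tonghuashun (同花顺) | Data center, market center, iWencai (问财) | Making investing simpler |
| Baidu Gushitong (百度股市通) | Gushitong | Technology makes investing simpler |
| East Money (东方财富) | Data center, market center | Financial portal |
| Tencent Wealth (腾讯理财) | Market center | |
| Sina Finance (新浪财经) | Sina Finance | Web portal |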

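-------------------------------------------- Thanks to all the major platforms for providing the data --------------------------------------------

These are the projects and platforms we consulted that left a deep impression on this project; special thanks to them.

| akshare | JoinQuant (聚宽量化) | baostock | MyData |
|---|---|---|---|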

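| Status | Version | Content | Release date | Notes |
|---|---|---|---|---|
| ✅ | 0.x.x | Stocks | 2023-04-05 ~ | Preview release |
| ✅ | 1.x.x | Stocks | 2023-10-01 | China Ai (爱) stocks |
| ☑️ | 2.x.x | Funds, bonds | In development | Exchange-traded: ETFs, convertible bonds |
| ☑️ | 3.x.x | xxx | Scheduled | |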

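- About AData: we only care about data generated by trading. In the A-share market only trading data is real, and quant trading and AI training only need trading-related market data, which lets us stay truly focused. You might argue that financial data is also very useful, but it lags, may be falsified ("ZJ"), and may even be "XL", so ordinary traders can end up as bag holders. Here, financial data is used only to screen the stock pool, not as a real-time trading signal.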

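- Drawing on years of data governance experience, the functions and the data dictionary are designed around standard data storage, so you can create database tables from the data dictionary and persist the data.
- Eight years have passed since 2015; time flies, so seize the opportunity at the bottom.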
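Persisting results as described above can be as small as one table keyed by stock and date. A minimal sketch with Python's built-in `sqlite3`, assuming a hypothetical table name `stock_market_daily` and only the columns visible in the `get_market()` sample output (the full 13-column schema lives in the data dictionary and is not reproduced here). The primary key on `(stock_code, trade_date)` plus `INSERT OR REPLACE` makes re-loading the same rows idempotent.

```python
import sqlite3

# Hypothetical table; columns are the subset visible in the sample output.
DDL = """
CREATE TABLE IF NOT EXISTS stock_market_daily (
    stock_code TEXT NOT NULL,
    trade_date TEXT NOT NULL,
    trade_time TEXT,
    open       REAL,
    close      REAL,
    pre_close  REAL,
    PRIMARY KEY (stock_code, trade_date)
)
"""


def save_klines(conn, records):
    """Upsert K-line rows; loading the same rows twice adds nothing."""
    conn.execute(DDL)
    conn.executemany(
        "INSERT OR REPLACE INTO stock_market_daily "
        "(stock_code, trade_date, trade_time, open, close, pre_close) "
        "VALUES (:stock_code, :trade_date, :trade_time, :open, :close, :pre_close)",
        records,
    )
    conn.commit()


rows = [  # values copied from the get_market() sample output
    {"stock_code": "000001", "trade_date": "2021-01-04",
     "trade_time": "2021-01-04 00:00:00", "open": 18.69, "close": 18.19, "pre_close": 18.93},
    {"stock_code": "000001", "trade_date": "2021-01-05",
     "trade_time": "2021-01-05 00:00:00", "open": 17.99, "close": 17.76, "pre_close": 18.19},
]
conn = sqlite3.connect(":memory:")
save_klines(conn, rows)
save_klines(conn, rows)  # second load replaces rather than duplicates
print(conn.execute("SELECT COUNT(*) FROM stock_market_daily").fetchone()[0])
```

With a DataFrame from `get_market()`, `res_df.to_dict("records")` yields rows in this shape, as long as the extra columns are dropped or added to the table first.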

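Notes:

- A permanently free and open-source A-share database containing only trading-related data, focused on quantitative trading.
- A song for all friends in the A-share market: Xie Tianxiao (谢天笑), "向阳花" (Sunflower). May we all grow toward the sun.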

To contribute:

- Fork this repository
- Create a Feat_xxx branch
- Commit your code (keep the code style consistent with this project)
- Open a Pull Request

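Individuals and organizations that have supported the project and influenced it, including but not limited to content contributions, bug reports, and exchanges of ideas:

| Simon | bigbigbigfish | LuneZ99 | Anonymous user | thue | Triones009 |
|---|---|---|---|---|---|
| yxm0513 | hanxuanliang | akihara-sam | Andy | baei2048 | zpsakura |
| Lorry1123 | 多维人格 | | | | |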

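- Add us on WeChat with the note "Adata量化" to join the discussion group;
- Scan the QR code to follow the 向阳花策略 (Sunflower Strategy) official account, which occasionally shares quant knowledge; let's trade live and learn from each other;
- The founding discussion group and the official account were set up recently to provide a place for exchange; discussions are welcome;
- Let's defend the 3000-point level of the Shanghai Composite together until it breaks through 6124.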


Alternative AI tools for adata

Similar Open Source Tools


yudao-ui-admin-vue3

The yudao-ui-admin-vue3 repository is an open-source project focused on building a fast development platform for developers in China. It utilizes Vue3 and Element Plus to provide features such as configurable themes, internationalization, dynamic route permission generation, common component encapsulation, and rich examples. The project supports the latest front-end technologies like Vue3 and Vite4, and also includes tools like TypeScript, pinia, vueuse, vue-i18n, vue-router, unocss, iconify, and wangeditor. It offers a range of development tools and features for system functions, infrastructure, workflow management, payment systems, member centers, data reporting, e-commerce systems, WeChat public accounts, ERP systems, and CRM systems.

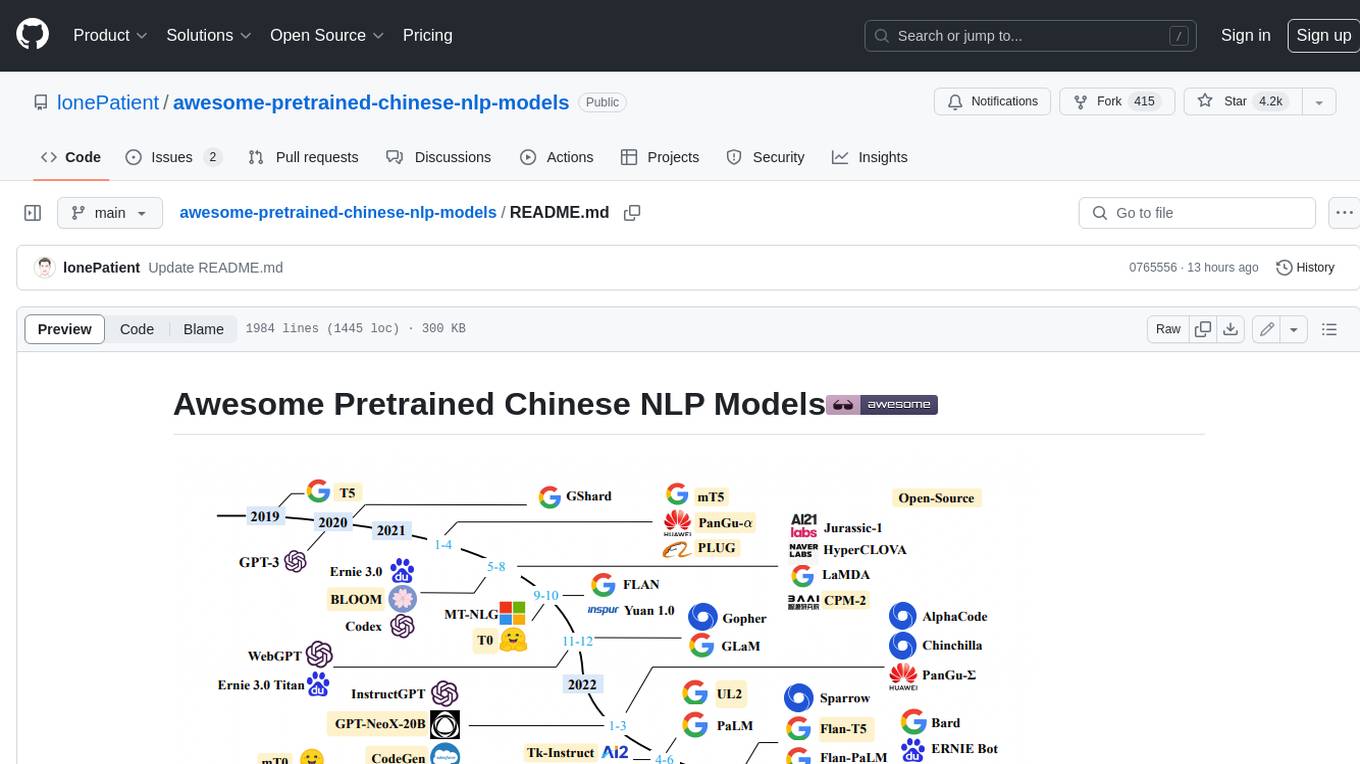

Chinese-LLaMA-Alpaca-3

Chinese-LLaMA-Alpaca-3 is a project based on Meta's latest release of the new generation open-source large model Llama-3. It is the third phase of the Chinese-LLaMA-Alpaca open-source large model series projects (Phase 1, Phase 2). This project open-sources the Chinese Llama-3 base model and the Chinese Llama-3-Instruct instruction fine-tuned large model. These models incrementally pre-train with a large amount of Chinese data on the basis of the original Llama-3 and further fine-tune using selected instruction data, enhancing Chinese basic semantics and instruction understanding capabilities. Compared to the second-generation related models, significant performance improvements have been achieved.

awesome-hosting

awesome-hosting is a curated list of hosting services sorted by minimal plan price. It includes various categories such as Web Services Platform, Backend-as-a-Service, Lambda, Node.js, Static site hosting, WordPress hosting, VPS providers, managed databases, GPU cloud services, and LLM/Inference API providers. Each category lists multiple service providers along with details on their minimal plan, trial options, free tier availability, open-source support, and specific features. The repository aims to help users find suitable hosting solutions based on their budget and requirements.

PaddleScience

PaddleScience is a scientific computing suite developed based on the deep learning framework PaddlePaddle. It utilizes the learning ability of deep neural networks and the automatic (higher-order) differentiation mechanism of PaddlePaddle to solve problems in physics, chemistry, meteorology, and other fields. It supports three solving methods: physics mechanism-driven, data-driven, and mathematical fusion, and provides basic APIs and detailed documentation for users to use and further develop.

sanic-web

Sanic-Web is a lightweight, end-to-end, and easily customizable large model application project built on technologies such as Dify, Ollama & Vllm, Sanic, and Text2SQL. It provides a one-stop solution for developing large model applications, supporting graphical data-driven Q&A using ECharts, handling table-based Q&A with CSV files, and integrating with third-party RAG systems for general knowledge Q&A. As a lightweight framework, Sanic-Web enables rapid iteration and extension to facilitate the quick implementation of large model projects.

yudao-cloud

Yudao-cloud is an open-source project designed to provide a fast development platform for developers in China. It includes various system functions, infrastructure, member center, data reports, workflow, mall system, WeChat public account, CRM, ERP, etc. The project is based on Java backend with Spring Boot and Spring Cloud Alibaba microservices architecture. It supports multiple databases, message queues, authentication systems, dynamic menu loading, SaaS multi-tenant system, code generator, real-time communication, integration with third-party services like WeChat, Alipay, and more. The project is well-documented and follows the Alibaba Java development guidelines, ensuring clean code and architecture.

BlossomLM

BlossomLM is a series of open-source conversational large language models. This project aims to provide a high-quality general-purpose SFT dataset in both Chinese and English, making fine-tuning accessible while also providing pre-trained model weights. **Hint**: BlossomLM is a personal non-commercial project.

yudao-boot-mini

yudao-boot-mini is an open-source project focused on developing a rapid development platform for developers in China. It includes features like system functions, infrastructure, member center, data reports, workflow, mall system, WeChat official account, CRM, ERP, etc. The project is based on Spring Boot with Java backend and Vue for frontend. It offers various functionalities such as user management, role management, menu management, department management, workflow management, payment system, code generation, API documentation, database documentation, file service, WebSocket integration, message queue, Java monitoring, and more. The project is licensed under the MIT License, allowing both individuals and enterprises to use it freely without restrictions.

Awesome-AGI

Awesome-AGI is a curated list of resources related to Artificial General Intelligence (AGI), including models, pipelines, applications, and concepts. It provides a comprehensive overview of the current state of AGI research and development, covering various aspects such as model training, fine-tuning, deployment, and applications in different domains. The repository also includes resources on prompt engineering, RLHF, LLM vocabulary expansion, long text generation, hallucination mitigation, controllability and safety, and text detection. It serves as a valuable resource for researchers, practitioners, and anyone interested in the field of AGI.

ruoyi-vue-pro

The ruoyi-vue-pro repository is an open-source project that provides a comprehensive development platform with various functionalities such as system features, infrastructure, member center, data reports, workflow, payment system, mall system, ERP system, CRM system, and AI big model. It is built using Java backend with Spring Boot framework and Vue frontend with different versions like Vue3 with element-plus, Vue3 with vben(ant-design-vue), and Vue2 with element-ui. The project aims to offer a fast development platform for developers and enterprises, supporting features like dynamic menu loading, button-level access control, SaaS multi-tenancy, code generator, real-time communication, integration with third-party services like WeChat, Alipay, and cloud services, and more.

carrot

The 'carrot' repository on GitHub provides a list of free and user-friendly ChatGPT mirror sites for easy access. The repository includes sponsored sites offering various GPT models and services. Users can find and share sites, report errors, and access stable and recommended sites for ChatGPT usage. The repository also includes a detailed list of ChatGPT sites, their features, and accessibility options, making it a valuable resource for ChatGPT users seeking free and unlimited GPT services.

indie-hacker-tools-plus

Indie Hacker Tools Plus is a curated repository of essential tools and technology stacks for independent developers. The repository aims to help developers enhance efficiency, save costs, and mitigate risks by using popular and validated tools. It provides a collection of tools recognized by the industry to empower developers with the most refined technical support. Developers can contribute by submitting articles, software, or resources through issues or pull requests.

pmhub

PmHub is a smart project management system based on SpringCloud, SpringCloud Alibaba, and LLM. It aims to help students quickly grasp the architecture design and development process of microservices/distributed projects. PmHub provides a platform for students to experience the transformation from monolithic to microservices architecture, understand the pros and cons of both architectures, and prepare for job interviews. It offers popular technologies like SpringCloud-Gateway, Nacos, Sentinel, and provides high-quality code, continuous integration, product design documents, and an enterprise workflow system. PmHub is suitable for beginners and advanced learners who want to master core knowledge of microservices/distributed projects.

Chinese-LLaMA-Alpaca

This project open sources the **Chinese LLaMA model and the Alpaca large model fine-tuned with instructions**, to further promote the open research of large models in the Chinese NLP community. These models **extend the Chinese vocabulary based on the original LLaMA** and use Chinese data for secondary pre-training, further enhancing the basic Chinese semantic understanding ability. At the same time, the Chinese Alpaca model further uses Chinese instruction data for fine-tuning, significantly improving the model's understanding and execution of instructions.

For similar tasks

nlp-llms-resources

The 'nlp-llms-resources' repository is a comprehensive resource list for Natural Language Processing (NLP) and Large Language Models (LLMs). It covers a wide range of topics including traditional NLP datasets, data acquisition, libraries for NLP, neural networks, sentiment analysis, optical character recognition, information extraction, semantics, topic modeling, multilingual NLP, domain-specific LLMs, vector databases, ethics, costing, books, courses, surveys, aggregators, newsletters, papers, conferences, and societies. The repository provides valuable information and resources for individuals interested in NLP and LLMs.


PIXIU

PIXIU is a project designed to support the development, fine-tuning, and evaluation of Large Language Models (LLMs) in the financial domain. It includes components like FinBen, a Financial Language Understanding and Prediction Evaluation Benchmark, FIT, a Financial Instruction Dataset, and FinMA, a Financial Large Language Model. The project provides open resources, multi-task and multi-modal financial data, and diverse financial tasks for training and evaluation. It aims to encourage open research and transparency in the financial NLP field.

hezar

Hezar is an all-in-one AI library designed specifically for the Persian community. It brings together various AI models and tools, making it easy to use AI with just a few lines of code. The library seamlessly integrates with Hugging Face Hub, offering a developer-friendly interface and task-based model interface. In addition to models, Hezar provides tools like word embeddings, tokenizers, feature extractors, and more. It also includes supplementary ML tools for deployment, benchmarking, and optimization.

text-embeddings-inference

Text Embeddings Inference (TEI) is a toolkit for deploying and serving open source text embeddings and sequence classification models. TEI enables high-performance extraction for popular models like FlagEmbedding, Ember, GTE, and E5. It implements features such as no model graph compilation step, Metal support for local execution on Macs, small docker images with fast boot times, token-based dynamic batching, optimized transformers code for inference using Flash Attention, Candle, and cuBLASLt, Safetensors weight loading, and production-ready features like distributed tracing with Open Telemetry and Prometheus metrics.

CodeProject.AI-Server

CodeProject.AI Server is a standalone, self-hosted, fast, free, and open-source Artificial Intelligence microserver designed for any platform and language. It can be installed locally without the need for off-device or out-of-network data transfer, providing an easy-to-use solution for developers interested in AI programming. The server includes a HTTP REST API server, backend analysis services, and the source code, enabling users to perform various AI tasks locally without relying on external services or cloud computing. Current capabilities include object detection, face detection, scene recognition, sentiment analysis, and more, with ongoing feature expansions planned. The project aims to promote AI development, simplify AI implementation, focus on core use-cases, and leverage the expertise of the developer community.

spark-nlp

Spark NLP is a state-of-the-art Natural Language Processing library built on top of Apache Spark. It provides simple, performant, and accurate NLP annotations for machine learning pipelines that scale easily in a distributed environment. Spark NLP comes with 36000+ pretrained pipelines and models in 200+ languages. It offers tasks such as Tokenization, Word Segmentation, Part-of-Speech Tagging, Named Entity Recognition, Dependency Parsing, Spell Checking, Text Classification, Sentiment Analysis, Token Classification, Machine Translation, Summarization, Question Answering, Table Question Answering, Text Generation, Image Classification, Image to Text (captioning), Automatic Speech Recognition, Zero-Shot Learning, and many more NLP tasks. Spark NLP is the only open-source NLP library in production that offers state-of-the-art transformers such as BERT, CamemBERT, ALBERT, ELECTRA, XLNet, DistilBERT, RoBERTa, DeBERTa, XLM-RoBERTa, Longformer, ELMO, Universal Sentence Encoder, Llama-2, M2M100, BART, Instructor, E5, Google T5, MarianMT, OpenAI GPT2, Vision Transformers (ViT), OpenAI Whisper, and many more not only to Python and R, but also to the JVM ecosystem (Java, Scala, and Kotlin) at scale by extending Apache Spark natively.

scikit-llm

Scikit-LLM is a tool that seamlessly integrates powerful language models like ChatGPT into scikit-learn for enhanced text analysis tasks. It allows users to leverage large language models for various text analysis applications within the familiar scikit-learn framework. The tool simplifies the process of incorporating advanced language processing capabilities into machine learning pipelines, enabling users to benefit from the latest advancements in natural language processing.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

agentcloud

AgentCloud is an open-source platform that enables companies to build and deploy private LLM chat apps, empowering teams to securely interact with their data. It comprises three main components: Agent Backend, Webapp, and Vector Proxy. To run this project locally, clone the repository, install Docker, and start the services. The project is licensed under the GNU Affero General Public License, version 3 only. Contributions and feedback are welcome from the community.

oss-fuzz-gen

This framework generates fuzz targets for real-world `C`/`C++` projects with various Large Language Models (LLM) and benchmarks them via the `OSS-Fuzz` platform. It manages to successfully leverage LLMs to generate valid fuzz targets (which generate non-zero coverage increase) for 160 C/C++ projects. The maximum line coverage increase is 29% from the existing human-written targets.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

Azure-Analytics-and-AI-Engagement

The Azure-Analytics-and-AI-Engagement repository provides packaged Industry Scenario DREAM Demos with ARM templates (containing a demo web application, Power BI reports, Synapse resources, AML Notebooks, etc.) that can be deployed in a customer's subscription using the CAPE tool within a few hours. Partners can also deploy DREAM Demos in their own subscriptions using DPoC.