obsidian-smart-composer

AI chat assistant for Obsidian with contextual awareness, smart writing assistance, and one-click edits. Features vault-aware conversations, semantic search, and local model support.

Stars: 1102

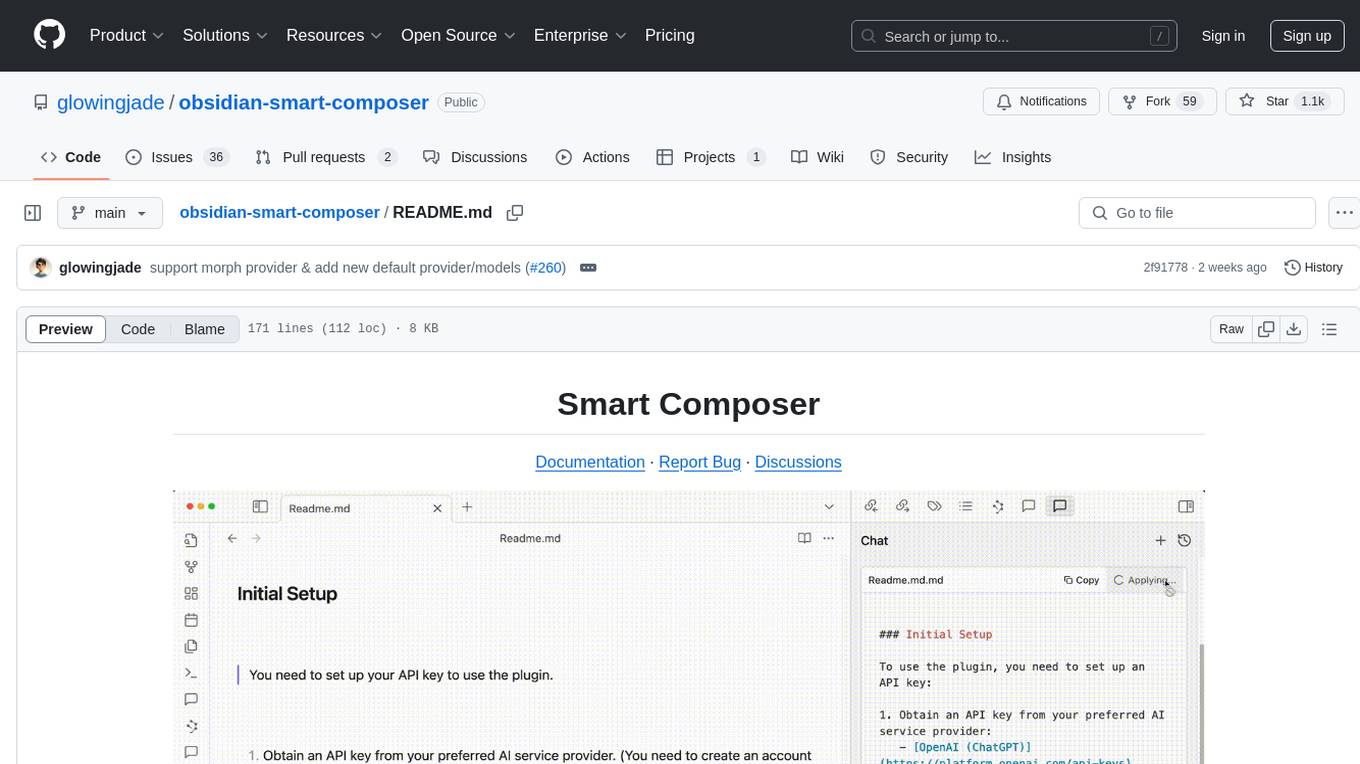

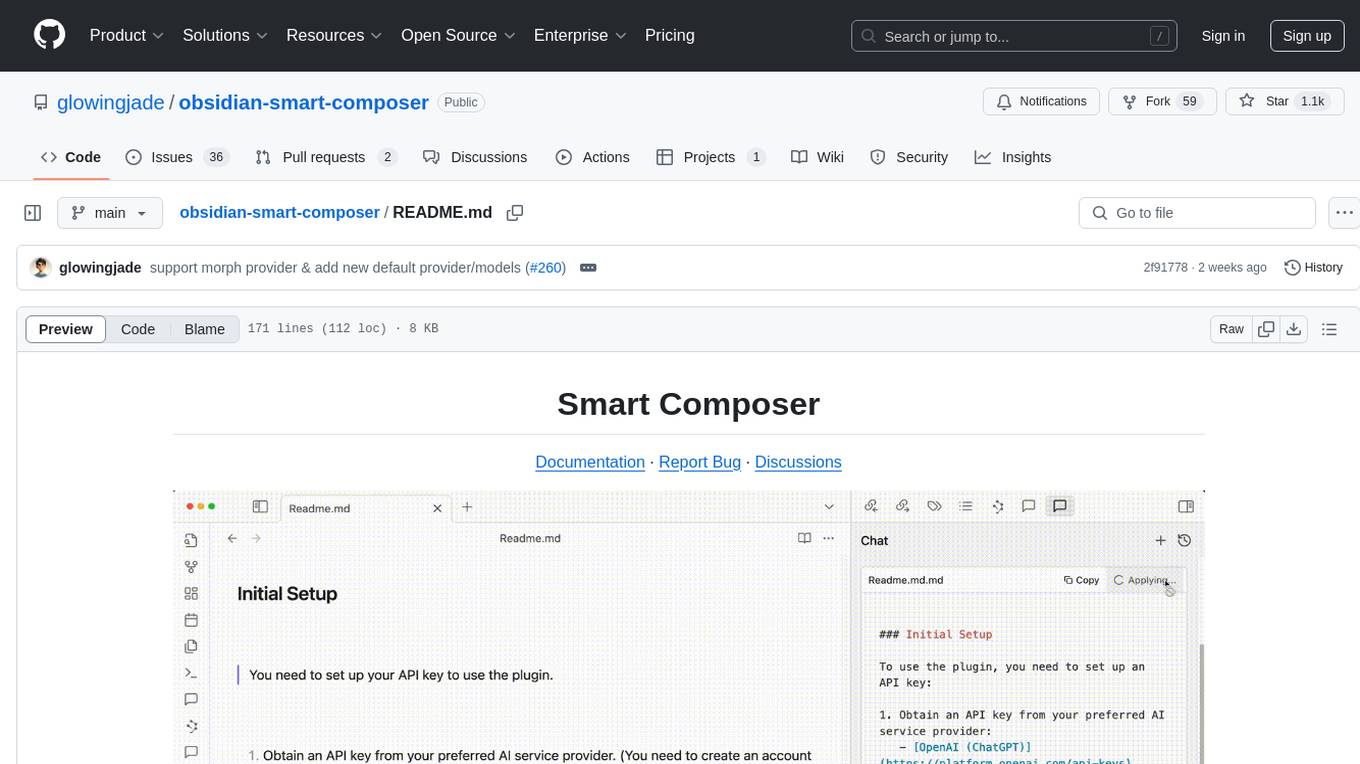

Smart Composer is an Obsidian plugin that enhances note-taking and content creation by integrating AI capabilities. It allows users to efficiently write by referencing their vault content, providing contextual chat with precise context selection, multimedia context support for website links and images, document edit suggestions, and vault search for relevant notes. The plugin also offers features like custom model selection, local model support, custom system prompts, and prompt templates. Users can set up the plugin by installing it through the Obsidian community plugins, enabling it, and configuring API keys for supported providers like OpenAI, Anthropic, and Gemini. Smart Composer aims to streamline the writing process by leveraging AI technology within the Obsidian platform.

README:

Documentation · Report Bug · Discussions

Everytime we ask ChatGPT, we need to put so much context information for each query. Why spend time putting background infos that are already in your vault?

Smart Composer is an Obsidian plugin that helps you write efficiently with AI by easily referencing your vault content. Inspired by Cursor AI and ChatGPT Canvas, this plugin unifies your note-taking and content creation process within Obsidian.

Upgrade your note-taking experience with our Contextual AI Assistant, inspired by Cursor AI. Unlike typical AI plugins, our assistant allows you to precisely select the context for your conversation.

- Type

@<fname>to choose specific files/folders as your conversation context - Get responses based on selected vault content

Now, you can add website links and images as additional context for your queries.

- Website content is automatically extracted

-

Image support: Add images directly to your chat through:

- Upload button

- Drag & drop

- Paste from clipboard

- Youtube link support: YouTube transcripts are fetched and included as context

- Coming soon: Support for external files (PDF, DOCX, ...)

Smart Composer suggests edits to your document. You can apply with a single click.

- Offers document change recommendations

- Apply suggested changes instantly

Automatically find and use relevant notes from your vault to enhance AI responses.

- Hit

Cmd+Shift+Enterto run Vault Search answer - Semantic search across your vault to find the most relevant context

-

Custom Model Selection: Use your own model by setting your API Key (stored locally). Supported providers:

- OpenAI

- Anthropic

- Google (Gemini)

- Groq

- DeepSeek

- OpenRouter

- Azure OpenAI

- Ollama

- LM Studio

- MorphLLM

- Any other OpenAI-compatible providers

- Local Model Support: Run open-source LLMs and embedding models locally with Ollama for complete privacy and offline usage.

- Custom System Prompts: Define your own system prompts that will be applied to every chat conversation.

-

Prompt Templates: Create and reuse templates for common queries by typing

/in the chat view. Perfect for standardizing repetitive tasks.- Create templates from any selected text with one click

⚠️ Important: Installer Version Requirement

Smart Composer requires a recent version of the Obsidian installer. If you experience issues with the plugin not loading properly:

First, try updating Obsidian normally at

Settings > General > Check for updates.If issues persist, manually update your Obsidian installer:

- Download the latest installer from Obsidian's download page

- Close Obsidian completely

- Run the new installer

- Open Obsidian Settings

- Navigate to "Community plugins" and click "Browse"

- Search for "Smart Composer" and click Install

- Enable the plugin in Community plugins

- Set up your API key in plugin settings

- OpenAI : ChatGPT API Keys

- Anthropic : Claude API Keys

- Gemini : Gemini API Keys

- Groq : Groq API Keys

💡 Free Option Available: While rate-limited, Gemini API provides the best performance among free models for Smart Composer. Recommended for users looking for a free option.

📚 For detailed setup instructions and documentation, please visit our Documentation.

To see our up-to-date project roadmap and progress, please check out our GitHub Projects kanban board.

Some of our planned features include:

- Support for external files (PDF, DOCX, etc.)

- Mentioning with tags or other metadata

We value your input and want to ensure you can easily share your thoughts and report any issues:

-

Bug Reports: If you encounter any bugs or unexpected behavior, please submit an issue on our GitHub Issues page. Be sure to include as much detail as possible to help us reproduce and address the problem.

-

Feature Requests: For new feature ideas or enhancements, please use our GitHub Discussions - Ideas & Feature Requests page. Create a new discussion to share your suggestions. This allows for community engagement and helps us prioritize future developments.

-

Show and Tell: We love seeing how you use Smart Composer! Share your unique use cases, workflows, or interesting applications of the plugin in the GitHub Discussions - Smart Composer Showcase page.

Your feedback and experiences are crucial in making Smart Composer better for everyone!

We welcome all kinds of contributions to Smart Composer, including bug reports, bug fixes, documentation improvements, and feature enhancements.

For major feature ideas, please create an issue first to discuss feasibility and implementation approach.

If you're interested in contributing, please refer to our CONTRIBUTING.md file for detailed information on:

- Setting up the development environment

- Our development workflow

- Working with the database schema

- The process for submitting pull requests

- Known issues and solutions for developers

These contributors were instrumental in shaping the initial vision, architecture, and design of Smart Composer:

@glowingjade (Twitter), @kevin-on, @realsnoopso (Twitter · LinkedIn), @woosukji

We also want to thank everyone else who has contributed. Your time and effort help make Smart Composer better for everyone!

This project is licensed under the MIT License.

If you find Smart Composer valuable, consider supporting its development:

Follow me on X (Twitter) @andy_suh_ for updates and announcements!

Your support helps maintain and improve this plugin. Every contribution is appreciated and makes a difference. Thank you for your support!

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for obsidian-smart-composer

Similar Open Source Tools

obsidian-smart-composer

Smart Composer is an Obsidian plugin that enhances note-taking and content creation by integrating AI capabilities. It allows users to efficiently write by referencing their vault content, providing contextual chat with precise context selection, multimedia context support for website links and images, document edit suggestions, and vault search for relevant notes. The plugin also offers features like custom model selection, local model support, custom system prompts, and prompt templates. Users can set up the plugin by installing it through the Obsidian community plugins, enabling it, and configuring API keys for supported providers like OpenAI, Anthropic, and Gemini. Smart Composer aims to streamline the writing process by leveraging AI technology within the Obsidian platform.

CodeGPT

CodeGPT is an extension for JetBrains IDEs that provides access to state-of-the-art large language models (LLMs) for coding assistance. It offers a range of features to enhance the coding experience, including code completions, a ChatGPT-like interface for instant coding advice, commit message generation, reference file support, name suggestions, and offline development support. CodeGPT is designed to keep privacy in mind, ensuring that user data remains secure and private.

ProxyAI

ProxyAI is an open-source AI copilot for JetBrains, offering advanced code assistance features powered by top-tier language models. Users can customize their coding experience, receive AI-suggested code changes, autocomplete suggestions, and context-aware naming suggestions. The tool also allows users to chat with images, reference project files and folders, web docs, git history, and search the web. ProxyAI prioritizes user privacy by not collecting sensitive information and only gathering anonymous usage data with consent.

nanobrowser

Nanobrowser is an open-source AI web automation tool that runs in your browser. It is a free alternative to OpenAI Operator with flexible LLM options and a multi-agent system. Nanobrowser offers premium web automation capabilities while keeping users in complete control, with features like a multi-agent system, interactive side panel, task automation, follow-up questions, and multiple LLM support. Users can easily download and install Nanobrowser as a Chrome extension, configure agent models, and accomplish tasks such as news summary, GitHub research, and shopping research with just a sentence. The tool uses a specialized multi-agent system powered by large language models to understand and execute complex web tasks. Nanobrowser is actively developed with plans to expand LLM support, implement security measures, optimize memory usage, enable session replay, and develop specialized agents for domain-specific tasks. Contributions from the community are welcome to improve Nanobrowser and build the future of web automation.

refact

This repository contains Refact WebUI for fine-tuning and self-hosting of code models, which can be used inside Refact plugins for code completion and chat. Users can fine-tune open-source code models, self-host them, download and upload Lloras, use models for code completion and chat inside Refact plugins, shard models, host multiple small models on one GPU, and connect GPT-models for chat using OpenAI and Anthropic keys. The repository provides a Docker container for running the self-hosted server and supports various models for completion, chat, and fine-tuning. Refact is free for individuals and small teams under the BSD-3-Clause license, with custom installation options available for GPU support. The community and support include contributing guidelines, GitHub issues for bugs, a community forum, Discord for chatting, and Twitter for product news and updates.

Director

Director is a framework to build video agents that can reason through complex video tasks like search, editing, compilation, generation, etc. It enables users to summarize videos, search for specific moments, create clips instantly, integrate GenAI projects and APIs, add overlays, generate thumbnails, and more. Built on VideoDB's 'video-as-data' infrastructure, Director is perfect for developers, creators, and teams looking to simplify media workflows and unlock new possibilities.

weam

Weam is an open source platform designed to help teams systematically adopt AI. It provides a production-ready stack with Next.js frontend and Node.js/Python backend, allowing for immediate deployment and use. Weam connects to major LLM providers, enabling easy access to the latest AI models. The platform organizes AI interactions into 'Brains' for different departments, offering customization and expansion options. Features include chat system, productivity tools, sharing & access controls, prompt library, AI agents, RAG, MCP, enterprise features, pre-built automations, and upcoming AI app solutions. Weam is free, open source, and scalable to meet growing needs.

pyqt-openai

VividNode is a cross-platform AI desktop chatbot application for LLM such as GPT, Claude, Gemini, Llama chatbot interaction and image generation. It offers customizable features, local chat history, and enhanced performance without requiring a browser. The application is powered by GPT4Free and allows users to interact with chatbots and generate images seamlessly. VividNode supports Windows, Mac, and Linux, securely stores chat history locally, and provides features like chat interface customization, image generation, focus and accessibility modes, and extensive customization options with keyboard shortcuts for efficient operations.

nexent

Nexent is a powerful tool for analyzing and visualizing network traffic data. It provides comprehensive insights into network behavior, helping users to identify patterns, anomalies, and potential security threats. With its user-friendly interface and advanced features, Nexent is suitable for network administrators, cybersecurity professionals, and anyone looking to gain a deeper understanding of their network infrastructure.

MyDeviceAI

MyDeviceAI is a personal AI assistant app for iPhone that brings the power of artificial intelligence directly to the device. It focuses on privacy, performance, and personalization by running AI models locally and integrating with privacy-focused web services. The app offers seamless user experience, web search integration, advanced reasoning capabilities, personalization features, chat history access, and broad device support. It requires macOS, Xcode, CocoaPods, Node.js, and a React Native development environment for installation. The technical stack includes React Native framework, AI models like Qwen 3 and BGE Small, SearXNG integration, Redux for state management, AsyncStorage for storage, Lucide for UI components, and tools like ESLint and Prettier for code quality.

ha-llmvision

LLM Vision is a Home Assistant integration that allows users to analyze images, videos, and camera feeds using multimodal LLMs. It supports providers such as OpenAI, Anthropic, Google Gemini, LocalAI, and Ollama. Users can input images and videos from camera entities or local files, with the option to downscale images for faster processing. The tool provides detailed instructions on setting up LLM Vision and each supported provider, along with usage examples and service call parameters.

kollektiv

Kollektiv is a Retrieval-Augmented Generation (RAG) system designed to enable users to chat with their favorite documentation easily. It aims to provide LLMs with access to the most up-to-date knowledge, reducing inaccuracies and improving productivity. The system utilizes intelligent web crawling, advanced document processing, vector search, multi-query expansion, smart re-ranking, AI-powered responses, and dynamic system prompts. The technical stack includes Python/FastAPI for backend, Supabase, ChromaDB, and Redis for storage, OpenAI and Anthropic Claude 3.5 Sonnet for AI/ML, and Chainlit for UI. Kollektiv is licensed under a modified version of the Apache License 2.0, allowing free use for non-commercial purposes.

WritingTools

Writing Tools is an Apple Intelligence-inspired application for Windows, Linux, and macOS that supercharges your writing with an AI LLM. It allows users to instantly proofread, optimize text, and summarize content from webpages, YouTube videos, documents, etc. The tool is privacy-focused, open-source, and supports multiple languages. It offers powerful features like grammar correction, content summarization, and LLM chat mode, making it a versatile writing assistant for various tasks.

chatty

Chatty is a private AI tool that runs large language models natively and privately in the browser, ensuring in-browser privacy and offline usability. It supports chat history management, open-source models like Gemma and Llama2, responsive design, intuitive UI, markdown & code highlight, chat with files locally, custom memory support, export chat messages, voice input support, response regeneration, and light & dark mode. It aims to bring popular AI interfaces like ChatGPT and Gemini into an in-browser experience.

PyWxDump

PyWxDump is a powerful tool designed to help extract and manage WeChat data efficiently. It allows users to read local databases, view chat histories, and export data in various formats such as CSV and HTML. With features like multi-account support, version compatibility, data export, AI training, and automated responses, PyWxDump offers flexibility for training AI models and developing automated replies.

WeClone

WeClone is an all-in-one solution for creating your digital twin from chat records. It allows users to fine-tune large language models using their chat history, capturing their unique style and personality to integrate into a chatbot, effectively creating a digital avatar. The tool offers digital cloning, chatbot integration, user-friendly interface for managing chat records, fine-tuning with LoRA, and cross-platform compatibility.

For similar tasks

YesImBot

YesImBot, also known as Athena, is a Koishi plugin designed to allow large AI models to participate in group chat discussions. It offers easy customization of the bot's name, personality, emotions, and other messages. The plugin supports load balancing multiple API interfaces for large models, provides immersive context awareness, blocks potentially harmful messages, and automatically fetches high-quality prompts. Users can adjust various settings for the bot and customize system prompt words. The ultimate goal is to seamlessly integrate the bot into group chats without detection, with ongoing improvements and features like message recognition, emoji sending, multimodal image support, and more.

burpference

Burpference is an open-source extension designed to capture in-scope HTTP requests and responses from Burp's proxy history and send them to a remote LLM API in JSON format. It automates response capture, integrates with APIs, optimizes resource usage, provides color-coded findings visualization, offers comprehensive logging, supports native Burp reporting, and allows flexible configuration. Users can customize system prompts, API keys, and remote hosts, and host models locally to prevent high inference costs. The tool is ideal for offensive web application engagements to surface findings and vulnerabilities.

obsidian-smart-composer

Smart Composer is an Obsidian plugin that enhances note-taking and content creation by integrating AI capabilities. It allows users to efficiently write by referencing their vault content, providing contextual chat with precise context selection, multimedia context support for website links and images, document edit suggestions, and vault search for relevant notes. The plugin also offers features like custom model selection, local model support, custom system prompts, and prompt templates. Users can set up the plugin by installing it through the Obsidian community plugins, enabling it, and configuring API keys for supported providers like OpenAI, Anthropic, and Gemini. Smart Composer aims to streamline the writing process by leveraging AI technology within the Obsidian platform.

swift-chat

SwiftChat is a fast and responsive AI chat application developed with React Native and powered by Amazon Bedrock. It offers real-time streaming conversations, AI image generation, multimodal support, conversation history management, and cross-platform compatibility across Android, iOS, and macOS. The app supports multiple AI models like Amazon Bedrock, Ollama, DeepSeek, and OpenAI, and features a customizable system prompt assistant. With a minimalist design philosophy and robust privacy protection, SwiftChat delivers a seamless chat experience with various features like rich Markdown support, comprehensive multimodal analysis, creative image suite, and quick access tools. The app prioritizes speed in launch, request, render, and storage, ensuring a fast and efficient user experience. SwiftChat also emphasizes app privacy and security by encrypting API key storage, minimal permission requirements, local-only data storage, and a privacy-first approach.

aiaio

aiaio (AI-AI-O) is a lightweight, privacy-focused web UI for interacting with AI models. It supports both local and remote LLM deployments through OpenAI-compatible APIs. The tool provides features such as dark/light mode support, local SQLite database for conversation storage, file upload and processing, configurable model parameters through UI, privacy-focused design, responsive design for mobile/desktop, syntax highlighting for code blocks, real-time conversation updates, automatic conversation summarization, customizable system prompts, WebSocket support for real-time updates, Docker support for deployment, multiple API endpoint support, and multiple system prompt support. Users can configure model parameters and API settings through the UI, handle file uploads, manage conversations, and use keyboard shortcuts for efficient interaction. The tool uses SQLite for storage with tables for conversations, messages, attachments, and settings. Contributions to the project are welcome under the Apache License 2.0.

For similar jobs

ChatFAQ

ChatFAQ is an open-source comprehensive platform for creating a wide variety of chatbots: generic ones, business-trained, or even capable of redirecting requests to human operators. It includes a specialized NLP/NLG engine based on a RAG architecture and customized chat widgets, ensuring a tailored experience for users and avoiding vendor lock-in.

anything-llm

AnythingLLM is a full-stack application that enables you to turn any document, resource, or piece of content into context that any LLM can use as references during chatting. This application allows you to pick and choose which LLM or Vector Database you want to use as well as supporting multi-user management and permissions.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

mikupad

mikupad is a lightweight and efficient language model front-end powered by ReactJS, all packed into a single HTML file. Inspired by the likes of NovelAI, it provides a simple yet powerful interface for generating text with the help of various backends.

glide

Glide is a cloud-native LLM gateway that provides a unified REST API for accessing various large language models (LLMs) from different providers. It handles LLMOps tasks such as model failover, caching, key management, and more, making it easy to integrate LLMs into applications. Glide supports popular LLM providers like OpenAI, Anthropic, Azure OpenAI, AWS Bedrock (Titan), Cohere, Google Gemini, OctoML, and Ollama. It offers high availability, performance, and observability, and provides SDKs for Python and NodeJS to simplify integration.

onnxruntime-genai

ONNX Runtime Generative AI is a library that provides the generative AI loop for ONNX models, including inference with ONNX Runtime, logits processing, search and sampling, and KV cache management. Users can call a high level `generate()` method, or run each iteration of the model in a loop. It supports greedy/beam search and TopP, TopK sampling to generate token sequences, has built in logits processing like repetition penalties, and allows for easy custom scoring.

firecrawl

Firecrawl is an API service that takes a URL, crawls it, and converts it into clean markdown. It crawls all accessible subpages and provides clean markdown for each, without requiring a sitemap. The API is easy to use and can be self-hosted. It also integrates with Langchain and Llama Index. The Python SDK makes it easy to crawl and scrape websites in Python code.