nanobrowser

Open-source Chrome extension for AI-powered web automation. Run multi-agent workflows using your own LLM API key. Alternative to OpenAI Operator.

Stars: 9751

Nanobrowser is an open-source AI web automation tool that runs in your browser. It is a free alternative to OpenAI Operator with flexible LLM options and a multi-agent system. Nanobrowser offers premium web automation capabilities while keeping users in complete control, with features like a multi-agent system, interactive side panel, task automation, follow-up questions, and multiple LLM support. Users can easily download and install Nanobrowser as a Chrome extension, configure agent models, and accomplish tasks such as news summary, GitHub research, and shopping research with just a sentence. The tool uses a specialized multi-agent system powered by large language models to understand and execute complex web tasks. Nanobrowser is actively developed with plans to expand LLM support, implement security measures, optimize memory usage, enable session replay, and develop specialized agents for domain-specific tasks. Contributions from the community are welcome to improve Nanobrowser and build the future of web automation.

README:

Nanobrowser is an open-source AI web automation tool that runs in your browser. A free alternative to OpenAI Operator with flexible LLM options and a multi-agent system.

⬇️ Get Nanobrowser from Chrome Web Store for free

👏 Join the community in Discord | X

🌟 Loving Nanobrowser? Give us a star and help spread the word!

❤️ Support the project by sponsoring us - every contribution helps keep Nanobrowser free and open source!

Nanobrowser's multi-agent system analyzing HuggingFace in real-time, with the Planner intelligently self-correcting when encountering obstacles and dynamically instructing the Navigator to adjust its approach—all running locally in your browser.

Looking for a powerful AI browser agent without the $200/month price tag of OpenAI Operator? Nanobrowser, as a Chrome extension, delivers premium web automation capabilities while keeping you in complete control:

- 100% Free - No subscription fees or hidden costs. Just install and use your own API keys, so you only pay for what you use.

- Privacy-Focused - Everything runs in your local browser. Your credentials stay with you, never shared with any cloud service.

- Flexible LLM Options - Connect to your preferred LLM providers with the freedom to choose different models for different agents.

- Fully Open Source - Complete transparency in how your browser is automated. No black boxes or hidden processes.

Note: We currently support OpenAI, Anthropic, Gemini, Ollama, Groq, Cerebras, Llama, and custom OpenAI-compatible providers. More providers will be supported.

- Multi-agent System: Specialized AI agents collaborate to accomplish complex web workflows

- Interactive Side Panel: Intuitive chat interface with real-time status updates

- Task Automation: Seamlessly automate repetitive web tasks across websites

- Follow-up Questions: Ask contextual follow-up questions about completed tasks

- Conversation History: Easily access and manage your AI agent interaction history

- Multiple LLM Support: Connect your preferred LLM providers and assign different models to different agents
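To make the multi-agent idea concrete, here is a minimal sketch of a Planner that decides the next instruction and a Navigator that executes it, with the Planner adjusting after a failed step. This is an illustration only, not Nanobrowser's implementation: the `Planner`, `Navigator`, and `runTask` names, the stub agents, and the instruction strings are all hypothetical, and real agents would call an LLM and the browser instead of the stubs.

```typescript
// Illustrative only: a toy Planner/Navigator loop, not Nanobrowser's actual code.
interface StepResult {
  instruction: string;
  ok: boolean;
}

// A planner inspects the history and returns the next instruction, or null when done.
type Planner = (task: string, history: StepResult[]) => string | null;
// A navigator tries to carry out one instruction and reports success or failure.
type Navigator = (instruction: string) => boolean;

function runTask(task: string, plan: Planner, navigate: Navigator, maxSteps = 10): StepResult[] {
  const history: StepResult[] = [];
  for (let i = 0; i < maxSteps; i++) {
    const instruction = plan(task, history);
    if (instruction === null) break; // planner considers the task complete
    history.push({ instruction, ok: navigate(instruction) });
  }
  return history;
}

// Stub planner (hypothetical): falls back to another approach when the last step failed.
const stubPlanner: Planner = (_task, history) => {
  const last = history[history.length - 1];
  if (!last) return "click the search box";
  if (!last.ok) return "open the search page directly";
  return null;
};

// Stub navigator (hypothetical): pretends that clicking fails and direct navigation works.
const stubNavigator: Navigator = (instruction) => !instruction.startsWith("click");

const log = runTask("find trending models", stubPlanner, stubNavigator);
console.log(log);
```

The `maxSteps` cap is a design choice for the sketch: it keeps a planner that never returns `null` from looping forever.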

Officially Supported:

- Chrome - Full support with all features

- Edge - Full support with all features

Not Supported:

- Firefox, Safari, and other Chromium variants (Opera, Arc, etc.)

Note: While Nanobrowser may function on other Chromium-based browsers, we recommend using Chrome or Edge for the best experience and guaranteed compatibility.

Install from Chrome Web Store (Stable Version):

- Visit the Nanobrowser Chrome Web Store page

- Click the "Add to Chrome" button

- Confirm the installation when prompted

Important Note: For the latest features, install from "Manually Install Latest Version" below, as the Chrome Web Store version may be delayed by the review process.

Configure Agent Models:

- Click the Nanobrowser icon in your toolbar to open the sidebar

- Click the Settings icon (top right)

- Add your LLM API keys

- Choose which model to use for different agents (Navigator, Planner)

To get the most recent version with all the latest features:

Download:

- Download the latest nanobrowser.zip file from the official GitHub release page.

Install:

- Unzip nanobrowser.zip.

- Open chrome://extensions/ in Chrome.

- Enable Developer mode (top right).

- Click Load unpacked (top left).

- Select the unzipped nanobrowser folder.

Configure Agent Models:

- Click the Nanobrowser icon in your toolbar to open the sidebar.

- Click the Settings icon (top right).

- Add your LLM API keys.

- Choose which model to use for different agents (Navigator, Planner).

Upgrading:

- Download the latest nanobrowser.zip file from the release page.

- Unzip and replace your existing Nanobrowser files with the new ones.

- Go to chrome://extensions/ in Chrome and click the refresh icon on the Nanobrowser card.

If you prefer to build Nanobrowser yourself, follow these steps:

Prerequisites:

Clone the Repository:

    git clone https://github.com/nanobrowser/nanobrowser.git
    cd nanobrowser

Install Dependencies:

    pnpm install

Build the Extension:

    pnpm build

Load the Extension:

- The built extension will be in the dist directory.

- Follow the installation steps from the Manually Install section to load the extension into your browser.

Development Mode (optional):

    pnpm dev

Nanobrowser allows you to configure different LLM models for each agent to balance performance and cost. Here are recommended configurations:

Better performance:

- Planner: Claude Sonnet 4
  - Better reasoning and planning capabilities

- Navigator: Claude Haiku 3.5
  - Efficient for web navigation tasks
  - Good balance of performance and cost

Cost-effective configuration:

- Planner: Claude Haiku or GPT-4o
  - Reasonable performance at lower cost
  - May require more iterations for complex tasks

- Navigator: Gemini 2.5 Flash or GPT-4o-mini
  - Lightweight and cost-efficient
  - Suitable for basic navigation tasks

Local models:

Setup Options:

- Use Ollama or other custom OpenAI-compatible providers to run models locally

- Zero API costs and complete privacy with no data leaving your machine

Recommended Models:

- Qwen3-30B-A3B-Instruct-2507

- Falcon3 10B

- Qwen 2.5 Coder 14B

- Mistral Small 24B

- Latest test results from community

- We welcome community experience sharing with other local models in our Discord

Prompt Engineering:

- Local models require more specific and cleaner prompts

- Avoid high-level, ambiguous commands

- Break complex tasks into clear, detailed steps

- Provide explicit context and constraints
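The prompt-engineering advice above can be sketched as a small helper that turns a goal into an explicit, numbered prompt with stated constraints. This is only one possible way to structure such a prompt: `TaskSpec` and `buildTaskPrompt` are hypothetical names, not part of Nanobrowser, and the steps and constraints are made-up examples for the TechCrunch task.

```typescript
// Hypothetical helper: builds an explicit, step-by-step task prompt for a local model.
interface TaskSpec {
  goal: string;
  steps: string[];
  constraints: string[];
}

function buildTaskPrompt(spec: TaskSpec): string {
  // Number the steps so the model can follow them in order.
  const steps = spec.steps.map((s, i) => `${i + 1}. ${s}`);
  const constraints = spec.constraints.map((c) => `- ${c}`);
  return [
    `Goal: ${spec.goal}`,
    "Steps:",
    ...steps,
    "Constraints:",
    ...constraints,
  ].join("\n");
}

// Example values are assumptions chosen for illustration.
const prompt = buildTaskPrompt({
  goal: "Collect the top 10 TechCrunch headlines from the last 24 hours",
  steps: [
    "Open https://techcrunch.com",
    "Scroll until at least 10 articles are visible",
    "Extract the title and publication time of each article",
    "Keep only articles published in the last 24 hours",
  ],
  constraints: ["Do not click on ads", "Return the result as a numbered list"],
});
console.log(prompt);
```

The resulting text can be pasted into the side panel in place of a one-line command.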

Note: The cost-effective configuration may produce less stable outputs and require more iterations for complex tasks.

Tip: Feel free to experiment with your own model configurations! Found a great combination? Share it with the community in our Discord to help others optimize their setup.
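As a rough way to keep track of such combinations, the recommendations above can be written down as per-agent presets. The sketch below is an assumption for illustration: `AgentModelConfig`, `presets`, and `describeConfig` are hypothetical names rather than Nanobrowser's settings schema, and the model strings are the labels used in this README, not provider API model IDs.

```typescript
// Hypothetical shape for per-agent model settings; not Nanobrowser's actual schema.
type AgentName = "planner" | "navigator";

interface ModelChoice {
  provider: string; // provider display name, e.g. "Anthropic"
  model: string; // model label as written in this README, not an API model ID
}

type AgentModelConfig = Record<AgentName, ModelChoice>;

// Presets mirroring two of the recommended combinations.
const presets: Record<string, AgentModelConfig> = {
  performance: {
    planner: { provider: "Anthropic", model: "Claude Sonnet 4" },
    navigator: { provider: "Anthropic", model: "Claude Haiku 3.5" },
  },
  costEffective: {
    planner: { provider: "OpenAI", model: "GPT-4o" },
    navigator: { provider: "Gemini", model: "Gemini 2.5 Flash" },
  },
};

// Return a human-readable summary of which model each agent uses.
function describeConfig(config: AgentModelConfig): string {
  return (Object.keys(config) as AgentName[])
    .map((agent) => `${agent}: ${config[agent].model} (${config[agent].provider})`)
    .join("; ");
}

console.log(describeConfig(presets.performance));
```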

Here are some powerful tasks you can accomplish with just a sentence:

- News Summary: "Go to TechCrunch and extract top 10 headlines from the last 24 hours"

- GitHub Research: "Look for the trending Python repositories on GitHub with most stars"

- Shopping Research: "Find a portable Bluetooth speaker on Amazon with a water-resistant design, under $50. It should have a minimum battery life of 10 hours"

We're actively developing Nanobrowser, with exciting features on the horizon. You're welcome to join us!

Check out our detailed roadmap and upcoming features in our GitHub Discussions.

We need your help to make Nanobrowser even better! Contributions of all kinds are welcome:

Share Prompts & Use Cases

- Join our Discord server.

- Share how you're using Nanobrowser. Help us build a library of useful prompts and real-world use cases.

Provide Feedback

- Try Nanobrowser and give us feedback on its performance or suggest improvements in our Discord server.

Contribute Code

- Check out our CONTRIBUTING.md for guidelines on how to contribute code to the project.

- Submit pull requests for bug fixes, features, or documentation improvements.

We believe in the power of open source and community collaboration. Join us in building the future of web automation!

If you discover a security vulnerability, please DO NOT disclose it publicly through issues, pull requests, or discussions.

Instead, please create a GitHub Security Advisory to report the vulnerability responsibly. This allows us to address the issue before it's publicly disclosed.

We appreciate your help in keeping Nanobrowser and its users safe!

Join our growing community of developers and users:

- Discord - Chat with team and community

- Twitter - Follow for updates and announcements

- GitHub Discussions - Share ideas and ask questions

Nanobrowser builds on top of other awesome open-source projects:

Huge thanks to their creators and contributors!

This project is licensed under the Apache License 2.0 - see the LICENSE file for details.

Made with ❤️ by the Nanobrowser Team.

Like Nanobrowser? Give us a star 🌟 and join us in Discord | X

We explicitly DO NOT endorse, support, or participate in any projects involving cryptocurrencies, tokens, NFTs, or other blockchain-related applications based on this codebase.

Any such derivative projects are NOT affiliated with, maintained by, or in any way connected to the official Nanobrowser project or its core team.

We assume NO LIABILITY for any losses, damages, or issues arising from the use of third-party derivative projects. Users interact with these projects at their own risk.

We reserve the right to publicly distance ourselves from any misuse or misleading use of our name, codebase, or brand.

We encourage open-source innovation but urge our community to be discerning and cautious. Please ensure you understand the risks before using any software or service built upon our codebase by independent developers.

Alternative AI tools for nanobrowser

Similar Open Source Tools

weam

Weam is an open source platform designed to help teams systematically adopt AI. It provides a production-ready stack with Next.js frontend and Node.js/Python backend, allowing for immediate deployment and use. Weam connects to major LLM providers, enabling easy access to the latest AI models. The platform organizes AI interactions into 'Brains' for different departments, offering customization and expansion options. Features include chat system, productivity tools, sharing & access controls, prompt library, AI agents, RAG, MCP, enterprise features, pre-built automations, and upcoming AI app solutions. Weam is free, open source, and scalable to meet growing needs.

LLMstudio

LLMstudio by TensorOps is a platform that offers prompt engineering tools for accessing models from providers like OpenAI, VertexAI, and Bedrock. It provides features such as Python Client Gateway, Prompt Editing UI, History Management, and Context Limit Adaptability. Users can track past runs, log costs and latency, and export history to CSV. The tool also supports automatic switching to larger-context models when needed. Coming soon features include side-by-side comparison of LLMs, automated testing, API key administration, project organization, and resilience against rate limits. LLMstudio aims to streamline prompt engineering, provide execution history tracking, and enable effortless data export, offering an evolving environment for teams to experiment with advanced language models.

plandex

Plandex is an open source, terminal-based AI coding engine designed for complex tasks. It uses long-running agents to break up large tasks into smaller subtasks, helping users work through backlogs, navigate unfamiliar technologies, and save time on repetitive tasks. Plandex supports various AI models, including OpenAI, Anthropic Claude, Google Gemini, and more. It allows users to manage context efficiently in the terminal, experiment with different approaches using branches, and review changes before applying them. The tool is platform-independent and runs from a single binary with no dependencies.

AIOStreams

AIOStreams is a versatile tool that combines streams from various addons into one platform, offering extensive customization options. Users can change result formats, filter results by various criteria, remove duplicates, prioritize services, sort results, specify size limits, and more. The tool scrapes results from selected addons, applies user configurations, and presents the results in a unified manner. It simplifies the process of finding and accessing desired content from multiple sources, enhancing user experience and efficiency.

ai_automation_suggester

An integration for Home Assistant that leverages AI models to understand your unique home environment and propose intelligent automations. By analyzing your entities, devices, areas, and existing automations, the AI Automation Suggester helps you discover new, context-aware use cases you might not have considered, ultimately streamlining your home management and improving efficiency, comfort, and convenience. The tool acts as a personal automation consultant, providing actionable YAML-based automations that can save energy, improve security, enhance comfort, and reduce manual intervention. It turns the complexity of a large Home Assistant environment into actionable insights and tangible benefits.

agent-zero

Agent Zero is a personal and organic AI framework designed to be dynamic, organically growing, and learning as you use it. It is fully transparent, readable, comprehensible, customizable, and interactive. The framework uses the computer as a tool to accomplish tasks, with no single-purpose tools pre-programmed. It emphasizes multi-agent cooperation, complete customization, and extensibility. Communication is key in this framework, allowing users to give proper system prompts and instructions to achieve desired outcomes. Agent Zero is capable of dangerous actions and should be run in an isolated environment. The framework is prompt-based, highly customizable, and requires a specific environment to run effectively.

Simplifine

Simplifine is an open-source library designed for easy LLM finetuning, enabling users to perform tasks such as supervised fine tuning, question-answer finetuning, contrastive loss for embedding tasks, multi-label classification finetuning, and more. It provides features like WandB logging, in-built evaluation tools, automated finetuning parameters, and state-of-the-art optimization techniques. The library offers bug fixes, new features, and documentation updates in its latest version. Users can install Simplifine via pip or directly from GitHub. The project welcomes contributors and provides comprehensive documentation and support for users.

refact

This repository contains Refact WebUI for fine-tuning and self-hosting of code models, which can be used inside Refact plugins for code completion and chat. Users can fine-tune open-source code models, self-host them, download and upload LoRAs, use models for code completion and chat inside Refact plugins, shard models, host multiple small models on one GPU, and connect GPT-models for chat using OpenAI and Anthropic keys. The repository provides a Docker container for running the self-hosted server and supports various models for completion, chat, and fine-tuning. Refact is free for individuals and small teams under the BSD-3-Clause license, with custom installation options available for GPU support. The community and support include contributing guidelines, GitHub issues for bugs, a community forum, Discord for chatting, and Twitter for product news and updates.

TaskingAI

TaskingAI brings Firebase's simplicity to **AI-native app development**. The platform enables the creation of GPTs-like multi-tenant applications using a wide range of LLMs from various providers. It features distinct, modular functions such as Inference, Retrieval, Assistant, and Tool, seamlessly integrated to enhance the development process. TaskingAI’s cohesive design ensures an efficient, intelligent, and user-friendly experience in AI application development.

AgentForge

AgentForge is a low-code framework tailored for the rapid development, testing, and iteration of AI-powered autonomous agents and Cognitive Architectures. It is compatible with a range of LLM models and offers flexibility to run different models for different agents based on specific needs. The framework is designed for seamless extensibility and database-flexibility, making it an ideal playground for various AI projects. AgentForge is a beta-testing ground and future-proof hub for crafting intelligent, model-agnostic autonomous agents.

CodeGPT

CodeGPT is an extension for JetBrains IDEs that provides access to state-of-the-art large language models (LLMs) for coding assistance. It offers a range of features to enhance the coding experience, including code completions, a ChatGPT-like interface for instant coding advice, commit message generation, reference file support, name suggestions, and offline development support. CodeGPT is designed to keep privacy in mind, ensuring that user data remains secure and private.

MyDeviceAI

MyDeviceAI is a personal AI assistant app for iPhone that brings the power of artificial intelligence directly to the device. It focuses on privacy, performance, and personalization by running AI models locally and integrating with privacy-focused web services. The app offers seamless user experience, web search integration, advanced reasoning capabilities, personalization features, chat history access, and broad device support. It requires macOS, Xcode, CocoaPods, Node.js, and a React Native development environment for installation. The technical stack includes React Native framework, AI models like Qwen 3 and BGE Small, SearXNG integration, Redux for state management, AsyncStorage for storage, Lucide for UI components, and tools like ESLint and Prettier for code quality.

kollektiv

Kollektiv is a Retrieval-Augmented Generation (RAG) system designed to enable users to chat with their favorite documentation easily. It aims to provide LLMs with access to the most up-to-date knowledge, reducing inaccuracies and improving productivity. The system utilizes intelligent web crawling, advanced document processing, vector search, multi-query expansion, smart re-ranking, AI-powered responses, and dynamic system prompts. The technical stack includes Python/FastAPI for backend, Supabase, ChromaDB, and Redis for storage, OpenAI and Anthropic Claude 3.5 Sonnet for AI/ML, and Chainlit for UI. Kollektiv is licensed under a modified version of the Apache License 2.0, allowing free use for non-commercial purposes.

promptbook

Promptbook is a library designed to build responsible, controlled, and transparent applications on top of large language models (LLMs). It helps users overcome limitations of LLMs like hallucinations, off-topic responses, and poor quality output by offering features such as fine-tuning models, prompt-engineering, and orchestrating multiple prompts in a pipeline. The library separates concerns, establishes a common format for prompt business logic, and handles low-level details like model selection and context size. It also provides tools for pipeline execution, caching, fine-tuning, anomaly detection, and versioning. Promptbook supports advanced techniques like Retrieval-Augmented Generation (RAG) and knowledge utilization to enhance output quality.

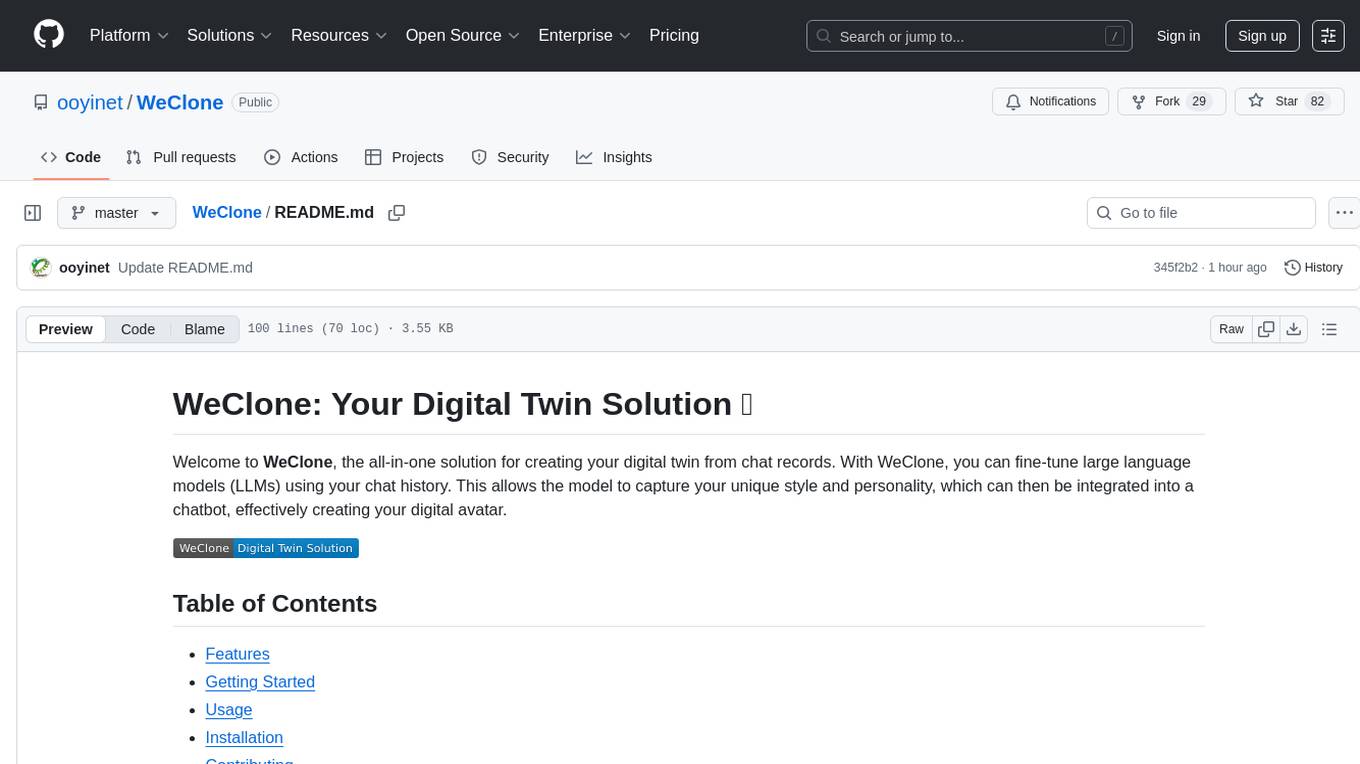

WeClone

WeClone is an all-in-one solution for creating your digital twin from chat records. It allows users to fine-tune large language models using their chat history, capturing their unique style and personality to integrate into a chatbot, effectively creating a digital avatar. The tool offers digital cloning, chatbot integration, user-friendly interface for managing chat records, fine-tuning with LoRA, and cross-platform compatibility.

For similar tasks

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.

danswer

Danswer is an open-source Gen-AI Chat and Unified Search tool that connects to your company's docs, apps, and people. It provides a Chat interface and plugs into any LLM of your choice. Danswer can be deployed anywhere and for any scale - on a laptop, on-premise, or to cloud. Since you own the deployment, your user data and chats are fully in your own control. Danswer is MIT licensed and designed to be modular and easily extensible. The system also comes fully ready for production usage with user authentication, role management (admin/basic users), chat persistence, and a UI for configuring Personas (AI Assistants) and their Prompts. Danswer also serves as a Unified Search across all common workplace tools such as Slack, Google Drive, Confluence, etc. By combining LLMs and team specific knowledge, Danswer becomes a subject matter expert for the team. Imagine ChatGPT if it had access to your team's unique knowledge! It enables questions such as "A customer wants feature X, is this already supported?" or "Where's the pull request for feature Y?"

semantic-kernel

Semantic Kernel is an SDK that integrates Large Language Models (LLMs) like OpenAI, Azure OpenAI, and Hugging Face with conventional programming languages like C#, Python, and Java. Semantic Kernel achieves this by allowing you to define plugins that can be chained together in just a few lines of code. What makes Semantic Kernel _special_ , however, is its ability to _automatically_ orchestrate plugins with AI. With Semantic Kernel planners, you can ask an LLM to generate a plan that achieves a user's unique goal. Afterwards, Semantic Kernel will execute the plan for the user.

floneum

Floneum is a graph editor that makes it easy to develop your own AI workflows. It uses large language models (LLMs) to run AI models locally, without any external dependencies or even a GPU. This makes it easy to use LLMs with your own data, without worrying about privacy. Floneum also has a plugin system that allows you to improve the performance of LLMs and make them work better for your specific use case. Plugins can be used in any language that supports web assembly, and they can control the output of LLMs with a process similar to JSONformer or guidance.

mindsdb

MindsDB is a platform for customizing AI from enterprise data. You can create, serve, and fine-tune models in real-time from your database, vector store, and application data. MindsDB "enhances" SQL syntax with AI capabilities to make it accessible for developers worldwide. With MindsDB’s nearly 200 integrations, any developer can create AI customized for their purpose, faster and more securely. Their AI systems will constantly improve themselves — using companies’ own data, in real-time.

aiscript

AiScript is a lightweight scripting language that runs on JavaScript. It supports arrays, objects, and functions as first-class citizens, and is easy to write without the need for semicolons or commas. AiScript runs in a secure sandbox environment, preventing infinite loops from freezing the host. It also allows for easy provision of variables and functions from the host.

activepieces

Activepieces is an open source replacement for Zapier, designed to be extensible through a type-safe pieces framework written in TypeScript. It features a user-friendly Workflow Builder with support for Branches, Loops, and Drag and Drop. Activepieces integrates with Google Sheets, OpenAI, Discord, and RSS, along with 80+ other integrations. The list of supported integrations continues to grow rapidly, thanks to valuable contributions from the community. Activepieces is an open ecosystem; all piece source code is available in the repository, and they are versioned and published directly to npmjs.com upon contributions. If you cannot find a specific piece on the pieces roadmap, please submit a request by visiting the following link: Request Piece. Alternatively, if you are a developer, you can quickly build your own piece using our TypeScript framework. For guidance, please refer to the following guide: Contributor's Guide.

superagent-js

Superagent is an open source framework that enables any developer to integrate production ready AI Assistants into any application in a matter of minutes.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features:

- Self-contained, with no need for a DBMS or cloud service.

- OpenAPI interface, easy to integrate with existing infrastructure (e.g., Cloud IDE).

- Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.
