semantic-kernel

Integrate cutting-edge LLM technology quickly and easily into your apps

Stars: 26288

Semantic Kernel is an SDK that integrates Large Language Models (LLMs) like OpenAI, Azure OpenAI, and Hugging Face with conventional programming languages like C#, Python, and Java. Semantic Kernel achieves this by allowing you to define plugins that can be chained together in just a few lines of code. What makes Semantic Kernel _special_, however, is its ability to _automatically_ orchestrate plugins with AI. With Semantic Kernel planners, you can ask an LLM to generate a plan that achieves a user's unique goal. Afterwards, Semantic Kernel will execute the plan for the user.

README:

Build intelligent AI agents and multi-agent systems with this enterprise-ready orchestration framework

Semantic Kernel is a model-agnostic SDK that empowers developers to build, orchestrate, and deploy AI agents and multi-agent systems. Whether you're building a simple chatbot or a complex multi-agent workflow, Semantic Kernel provides the tools you need with enterprise-grade reliability and flexibility.

- Python: 3.10+

- .NET: .NET 8.0+

- Java: JDK 17+

- OS Support: Windows, macOS, Linux

- Model Flexibility: Connect to any LLM with built-in support for OpenAI, Azure OpenAI, Hugging Face, NVidia and more

- Agent Framework: Build modular AI agents with access to tools/plugins, memory, and planning capabilities

- Multi-Agent Systems: Orchestrate complex workflows with collaborating specialist agents

- Plugin Ecosystem: Extend with native code functions, prompt templates, OpenAPI specs, or Model Context Protocol (MCP)

- Vector DB Support: Seamless integration with Azure AI Search, Elasticsearch, Chroma, and more

- Multimodal Support: Process text, vision, and audio inputs

- Local Deployment: Run with Ollama, LMStudio, or ONNX

- Process Framework: Model complex business processes with a structured workflow approach

- Enterprise Ready: Built for observability, security, and stable APIs

First, set the environment variable for your AI Services:

Azure OpenAI:

    export AZURE_OPENAI_API_KEY=AAA....

or OpenAI directly:

    export OPENAI_API_KEY=sk-...

Then install the package for your language.

Python:

    pip install semantic-kernel

.NET:

    dotnet add package Microsoft.SemanticKernel
    dotnet add package Microsoft.SemanticKernel.Agents.Core

Java: See semantic-kernel-java build for instructions.
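Before running the examples below, it can help to confirm which credentials the current shell actually exposes. The following is a minimal sketch using only the Python standard library; `detect_service` is a hypothetical helper written for illustration and is not part of Semantic Kernel. The only names taken from this README are the two environment variables.

```python
import os
from typing import Mapping, Optional


def detect_service(env: Mapping[str, str] = os.environ) -> Optional[str]:
    """Return which AI service the environment appears to be configured for.

    Hypothetical helper: checks only the two variables named in this README.
    Azure OpenAI is checked first, so it wins when both keys are set.
    """
    if env.get("AZURE_OPENAI_API_KEY"):
        return "azure_openai"
    if env.get("OPENAI_API_KEY"):
        return "openai"
    return None


if __name__ == "__main__":
    service = detect_service()
    print(service or "No API key found; set AZURE_OPENAI_API_KEY or OPENAI_API_KEY.")
```

Passing a plain dictionary instead of `os.environ` makes the check easy to exercise without touching real credentials.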

Create a simple assistant that responds to user prompts:

Python:

    import asyncio

    from semantic_kernel.agents import ChatCompletionAgent
    from semantic_kernel.connectors.ai.open_ai import AzureChatCompletion


    async def main():
        # Initialize a chat agent with basic instructions
        agent = ChatCompletionAgent(
            service=AzureChatCompletion(),
            name="SK-Assistant",
            instructions="You are a helpful assistant.",
        )

        # Get a response to a user message
        response = await agent.get_response(messages="Write a haiku about Semantic Kernel.")
        print(response.content)


    asyncio.run(main())

    # Output:
    # Language's essence,
    # Semantic threads intertwine,
    # Meaning's core revealed.

.NET:

    using Microsoft.SemanticKernel;
    using Microsoft.SemanticKernel.Agents;

    var builder = Kernel.CreateBuilder();
    builder.AddAzureOpenAIChatCompletion(
        Environment.GetEnvironmentVariable("AZURE_OPENAI_DEPLOYMENT"),
        Environment.GetEnvironmentVariable("AZURE_OPENAI_ENDPOINT"),
        Environment.GetEnvironmentVariable("AZURE_OPENAI_API_KEY")
    );
    var kernel = builder.Build();

    ChatCompletionAgent agent =
        new()
        {
            Name = "SK-Agent",
            Instructions = "You are a helpful assistant.",
            Kernel = kernel,
        };

    await foreach (AgentResponseItem<ChatMessageContent> response
        in agent.InvokeAsync("Write a haiku about Semantic Kernel."))
    {
        Console.WriteLine(response.Message);
    }

    // Output:
    // Language's essence,
    // Semantic threads intertwine,
    // Meaning's core revealed.

Enhance your agent with custom tools (plugins) and structured output:

Python:

    import asyncio
    from typing import Annotated

    from pydantic import BaseModel

    from semantic_kernel.agents import ChatCompletionAgent
    from semantic_kernel.connectors.ai.open_ai import AzureChatCompletion, OpenAIChatPromptExecutionSettings
    from semantic_kernel.functions import kernel_function, KernelArguments


    class MenuPlugin:
        @kernel_function(description="Provides a list of specials from the menu.")
        def get_specials(self) -> Annotated[str, "Returns the specials from the menu."]:
            return """
            Special Soup: Clam Chowder
            Special Salad: Cobb Salad
            Special Drink: Chai Tea
            """

        @kernel_function(description="Provides the price of the requested menu item.")
        def get_item_price(
            self, menu_item: Annotated[str, "The name of the menu item."]
        ) -> Annotated[str, "Returns the price of the menu item."]:
            return "$9.99"


    class MenuItem(BaseModel):
        price: float
        name: str


    async def main():
        # Configure structured output format
        settings = OpenAIChatPromptExecutionSettings()
        settings.response_format = MenuItem

        # Create agent with plugin and settings
        agent = ChatCompletionAgent(
            service=AzureChatCompletion(),
            name="SK-Assistant",
            instructions="You are a helpful assistant.",
            plugins=[MenuPlugin()],
            arguments=KernelArguments(settings)
        )

        response = await agent.get_response(messages="What is the price of the soup special?")
        print(response.content)

        # Output:
        # The price of the Clam Chowder, which is the soup special, is $9.99.


    asyncio.run(main())

.NET:

    using System.ComponentModel;
    using Microsoft.SemanticKernel;
    using Microsoft.SemanticKernel.Agents;
    using Microsoft.SemanticKernel.ChatCompletion;

    var builder = Kernel.CreateBuilder();
    builder.AddAzureOpenAIChatCompletion(
        Environment.GetEnvironmentVariable("AZURE_OPENAI_DEPLOYMENT"),
        Environment.GetEnvironmentVariable("AZURE_OPENAI_ENDPOINT"),
        Environment.GetEnvironmentVariable("AZURE_OPENAI_API_KEY")
    );
    var kernel = builder.Build();

    kernel.Plugins.Add(KernelPluginFactory.CreateFromType<MenuPlugin>());

    ChatCompletionAgent agent =
        new()
        {
            Name = "SK-Assistant",
            Instructions = "You are a helpful assistant.",
            Kernel = kernel,
            Arguments = new KernelArguments(new PromptExecutionSettings() { FunctionChoiceBehavior = FunctionChoiceBehavior.Auto() })
        };

    await foreach (AgentResponseItem<ChatMessageContent> response
        in agent.InvokeAsync("What is the price of the soup special?"))
    {
        Console.WriteLine(response.Message);
    }

    sealed class MenuPlugin
    {
        [KernelFunction, Description("Provides a list of specials from the menu.")]
        public string GetSpecials() =>
            """
            Special Soup: Clam Chowder
            Special Salad: Cobb Salad
            Special Drink: Chai Tea
            """;

        [KernelFunction, Description("Provides the price of the requested menu item.")]
        public string GetItemPrice(
            [Description("The name of the menu item.")]
            string menuItem) =>
            "$9.99";
    }

Build a system of specialized agents that can collaborate:

    import asyncio

    from semantic_kernel.agents import ChatCompletionAgent, ChatHistoryAgentThread
    from semantic_kernel.connectors.ai.open_ai import AzureChatCompletion, OpenAIChatCompletion

    billing_agent = ChatCompletionAgent(
        service=AzureChatCompletion(),
        name="BillingAgent",
        instructions="You handle billing issues like charges, payment methods, cycles, fees, discrepancies, and payment failures."
    )

    refund_agent = ChatCompletionAgent(
        service=AzureChatCompletion(),
        name="RefundAgent",
        instructions="Assist users with refund inquiries, including eligibility, policies, processing, and status updates.",
    )

    # The triage agent receives the two specialist agents as plugins
    triage_agent = ChatCompletionAgent(
        service=OpenAIChatCompletion(),
        name="TriageAgent",
        instructions="Evaluate user requests and forward them to BillingAgent or RefundAgent for targeted assistance."
        " Provide the full answer to the user containing any information from the agents",
        plugins=[billing_agent, refund_agent],
    )


    async def main() -> None:
        # Start without a thread, then reuse the one returned by the agent
        # so that the conversation history carries across turns
        thread: ChatHistoryAgentThread | None = None

        print("Welcome to the chat bot!\n  Type 'exit' to exit.\n  Try to get some billing or refund help.")
        while True:
            user_input = input("User:> ")

            if user_input.lower().strip() == "exit":
                print("\n\nExiting chat...")
                return

            response = await triage_agent.get_response(
                messages=user_input,
                thread=thread,
            )

            if response:
                print(f"Agent :> {response}")
                thread = response.thread

    # Agent :> I understand that you were charged twice for your subscription last month, and I'm here to assist you with resolving this issue. Here’s what we need to do next:
    # 1. **Billing Inquiry**:
    #    - Please provide the email address or account number associated with your subscription, the date(s) of the charges, and the amount charged. This will allow the billing team to investigate the discrepancy in the charges.
    # 2. **Refund Process**:
    #    - For the refund, please confirm your subscription type and the email address associated with your account.
    #    - Provide the dates and transaction IDs for the charges you believe were duplicated.
    # Once we have these details, we will be able to:
    # - Check your billing history for any discrepancies.
    # - Confirm any duplicate charges.
    # - Initiate a refund for the duplicate payment if it qualifies. The refund process usually takes 5-10 business days after approval.
    # Please provide the necessary details so we can proceed with resolving this issue for you.


    if __name__ == "__main__":
        asyncio.run(main())

- 📖 Try our Getting Started Guide or learn about Building Agents

- 🔌 Explore over 100 Detailed Samples

- 💡 Learn about core Semantic Kernel Concepts
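The plugin examples above attach descriptions to parameters and return values with `typing.Annotated`. As a rough illustration of how such metadata can be read back at runtime, here is a standard-library sketch. It shows the general Python mechanism only and makes no claim about how Semantic Kernel processes these annotations internally; `describe` is a hypothetical helper, and `get_item_price` is a plain-function stand-in for the plugin method.

```python
from typing import Annotated, Callable, get_args, get_origin, get_type_hints


def get_item_price(
    menu_item: Annotated[str, "The name of the menu item."],
) -> Annotated[str, "Returns the price of the menu item."]:
    """Plain-function stand-in for the plugin method shown earlier."""
    return "$9.99"


def describe(func: Callable) -> dict[str, tuple[str, str]]:
    """Map each annotated parameter (and 'return') to (type name, description)."""
    # include_extras=True keeps the Annotated metadata instead of stripping it.
    hints = get_type_hints(func, include_extras=True)
    described = {}
    for name, hint in hints.items():
        if get_origin(hint) is Annotated:
            base_type, *metadata = get_args(hint)
            described[name] = (base_type.__name__, str(metadata[0]))
    return described


if __name__ == "__main__":
    for name, (type_name, text) in describe(get_item_price).items():
        print(f"{name}: {type_name} - {text}")
```

Descriptions of this kind are what give a model enough context to decide which function to call and with which arguments, so it is worth keeping them short and specific.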

- Authentication Errors: Check that your API key environment variables are correctly set

- Model Availability: Verify your Azure OpenAI deployment or OpenAI model access

- Check our GitHub issues for known problems

- Search the Discord community for solutions

- Include your SDK version and full error messages when asking for help
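When asking for help, the details listed above can be gathered in one step. The sketch below uses only the Python standard library and assumes the SDK was installed under the distribution name `semantic-kernel`, as in the `pip install` command earlier. `support_info` is a hypothetical helper, not something shipped with the SDK, and it reports only whether each API key is set, never its value.

```python
import os
import platform
from importlib import metadata


def support_info(package: str = "semantic-kernel") -> dict:
    """Collect version and environment details that are safe to paste into an issue."""
    try:
        version = metadata.version(package)
    except metadata.PackageNotFoundError:
        version = "not installed"
    return {
        "package": package,
        "package_version": version,
        "python": platform.python_version(),
        "os": platform.system(),
        # Booleans only: the key values themselves are never included.
        "api_keys_set": {
            name: bool(os.environ.get(name))
            for name in ("AZURE_OPENAI_API_KEY", "OPENAI_API_KEY")
        },
    }


if __name__ == "__main__":
    for key, value in support_info().items():
        print(f"{key}: {value}")
```

If both entries under `api_keys_set` are `False`, the authentication item in the list above is the first thing to check.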

We welcome your contributions and suggestions to the SK community! One of the easiest ways to participate is to engage in discussions in the GitHub repository. Bug reports and fixes are welcome!

For new features, components, or extensions, please open an issue and discuss with us before sending a PR. This is to avoid rejection as we might be taking the core in a different direction, but also to consider the impact on the larger ecosystem.

To learn more and get started:

- Read the documentation

- Learn how to contribute to the project

- Ask questions in the GitHub discussions

- Ask questions in the Discord community

- Follow the team on our blog

This project has adopted the Microsoft Open Source Code of Conduct. For more information, see the Code of Conduct FAQ or contact [email protected] with any additional questions or comments.

Copyright (c) Microsoft Corporation. All rights reserved.

Licensed under the MIT license.

Alternative AI tools for semantic-kernel

Similar Open Source Tools

ExtractThinker

ExtractThinker is a library designed for extracting data from files and documents using Large Language Models (LLMs). It offers ORM-style interaction between files and LLMs, supporting multiple document loaders such as Tesseract OCR, Azure Form Recognizer, AWS TextExtract, and Google Document AI. Users can customize extraction using contract definitions, process documents asynchronously, handle various document formats efficiently, and split and process documents. The project is inspired by the LangChain ecosystem and focuses on Intelligent Document Processing (IDP) using LLMs to achieve high accuracy in document extraction tasks.

mobius

Mobius is an AI infra platform including realtime computing and training. It is built on Ray, a distributed computing framework, and provides a number of features that make it well-suited for online machine learning tasks. These features include:

- **Cross Language**: Mobius can run in multiple languages (only Python and Java are supported currently) with high efficiency. You can implement your operators in different languages and run them in one job.

- **Single Node Failover**: Mobius has a special failover mechanism that, in most cases, only needs to roll back the failed node itself to recover the job. This is a huge benefit if your job is sensitive to failure recovery time.

- **AutoScaling**: Mobius can generate a new graph with different configurations at runtime without stopping the job.

- **Fusion Training**: Mobius can combine TensorFlow/PyTorch and streaming to build an end-to-end online machine learning pipeline.

Mobius is still under development, but it has already been used to power a number of real-world applications, including:

- A real-time recommendation system for a major e-commerce company

- A fraud detection system for a large financial institution

- A personalized news feed for a major news organization

If you are interested in using Mobius for your own online machine learning projects, you can find more information in the documentation.

aigne-framework

AIGNE Framework is a functional AI application development framework designed to simplify and accelerate the process of building modern applications. It combines functional programming features, powerful artificial intelligence capabilities, and modular design principles to help developers easily create scalable solutions. With key features like modular design, TypeScript support, multiple AI model support, flexible workflow patterns, MCP protocol integration, code execution capabilities, and Blocklet ecosystem integration, AIGNE Framework offers a comprehensive solution for developers. The framework provides various workflow patterns such as Workflow Router, Workflow Sequential, Workflow Concurrency, Workflow Handoff, Workflow Reflection, Workflow Orchestration, Workflow Code Execution, and Workflow Group Chat to address different application scenarios efficiently. It also includes built-in MCP support for running MCP servers and integrating with external MCP servers, along with packages for core functionality, agent library, CLI, and various models like OpenAI, Gemini, Claude, and Nova.

agent-squad

Agent Squad is a flexible, lightweight open-source framework for orchestrating multiple AI agents to handle complex conversations. It intelligently routes queries, maintains context across interactions, and offers pre-built components for quick deployment. The system allows easy integration of custom agents and conversation messages storage solutions, making it suitable for various applications from simple chatbots to sophisticated AI systems, scaling efficiently.

zenml

ZenML is an extensible, open-source MLOps framework for creating portable, production-ready machine learning pipelines. By decoupling infrastructure from code, ZenML enables developers across your organization to collaborate more effectively as they develop to production.

multi-agent-orchestrator

Multi-Agent Orchestrator is a flexible and powerful framework for managing multiple AI agents and handling complex conversations. It intelligently routes queries to the most suitable agent based on context and content, supports dual language implementation in Python and TypeScript, offers flexible agent responses, context management across agents, extensible architecture for customization, universal deployment options, and pre-built agents and classifiers. It is suitable for various applications, from simple chatbots to sophisticated AI systems, accommodating diverse requirements and scaling efficiently.

LightAgent

LightAgent is a lightweight, open-source Agentic AI development framework with memory, tools, and a tree of thought. It supports multi-agent collaboration, autonomous learning, tool integration, complex task handling, and multi-model support. It also features a streaming API, tool generator, agent self-learning, adaptive tool mechanism, and more. LightAgent is designed for intelligent customer service, data analysis, automated tools, and educational assistance.

crawl4ai

Crawl4AI is a powerful and free web crawling service that extracts valuable data from websites and provides LLM-friendly output formats. It supports crawling multiple URLs simultaneously, replaces media tags with ALT, and is completely free to use and open-source. Users can integrate Crawl4AI into Python projects as a library or run it as a standalone local server. The tool allows users to crawl and extract data from specified URLs using different providers and models, with options to include raw HTML content, force fresh crawls, and extract meaningful text blocks. Configuration settings can be adjusted in the `crawler/config.py` file to customize providers, API keys, chunk processing, and word thresholds. Contributions to Crawl4AI are welcome from the open-source community to enhance its value for AI enthusiasts and developers.

datachain

DataChain is an open-source Python library for processing and curating unstructured data at scale. It supports AI-driven data curation using local ML models and LLM APIs, handles large datasets, and is Python-friendly with Pydantic objects. It excels at optimizing batch operations and is designed for offline data processing, curation, and ETL. Typical use cases include Computer Vision data curation, LLM analytics, and validation.

rag-chat

The `@upstash/rag-chat` package simplifies the development of retrieval-augmented generation (RAG) chat applications by providing Next.js compatibility with streaming support, built-in vector store, optional Redis compatibility for fast chat history management, rate limiting, and disableRag option. Users can easily set up the environment variables and initialize RAGChat to interact with AI models, manage knowledge base, chat history, and enable debugging features. Advanced configuration options allow customization of RAGChat instance with built-in rate limiting, observability via Helicone, and integration with Next.js route handlers and Vercel AI SDK. The package supports OpenAI models, Upstash-hosted models, and custom providers like TogetherAi and Replicate.

extrapolate

Extrapolate is an app that uses Artificial Intelligence to show you how your face ages over time. It generates a 3-second GIF of your aging face and allows you to store and retrieve photos from Cloudflare R2 using Workers. Users can deploy their own version of Extrapolate on Vercel by setting up ReplicateHQ and Upstash accounts, as well as creating a Cloudflare R2 instance with a Cloudflare Worker to handle uploads and reads. The tool provides a fun and interactive way to visualize the aging process through AI technology.

Agentarium

Agentarium is a powerful Python framework for managing and orchestrating AI agents with ease. It provides a flexible and intuitive way to create, manage, and coordinate interactions between multiple AI agents in various environments. The framework offers advanced agent management, robust interaction management, a checkpoint system for saving and restoring agent states, data generation through agent interactions, performance optimization, flexible environment configuration, and an extensible architecture for customization.

gpustack

GPUStack is an open-source GPU cluster manager designed for running large language models (LLMs). It supports a wide variety of hardware, scales with GPU inventory, offers lightweight Python package with minimal dependencies, provides OpenAI-compatible APIs, simplifies user and API key management, enables GPU metrics monitoring, and facilitates token usage and rate metrics tracking. The tool is suitable for managing GPU clusters efficiently and effectively.

infinity

Infinity is an AI-native database designed for LLM applications, providing incredibly fast full-text and vector search capabilities. It supports a wide range of data types, including vectors, full-text, and structured data, and offers a fused search feature that combines multiple embeddings and full text. Infinity is easy to use, with an intuitive Python API and a single-binary architecture that simplifies deployment. It achieves high performance, with 0.1 milliseconds query latency on million-scale vector datasets and up to 15K QPS.

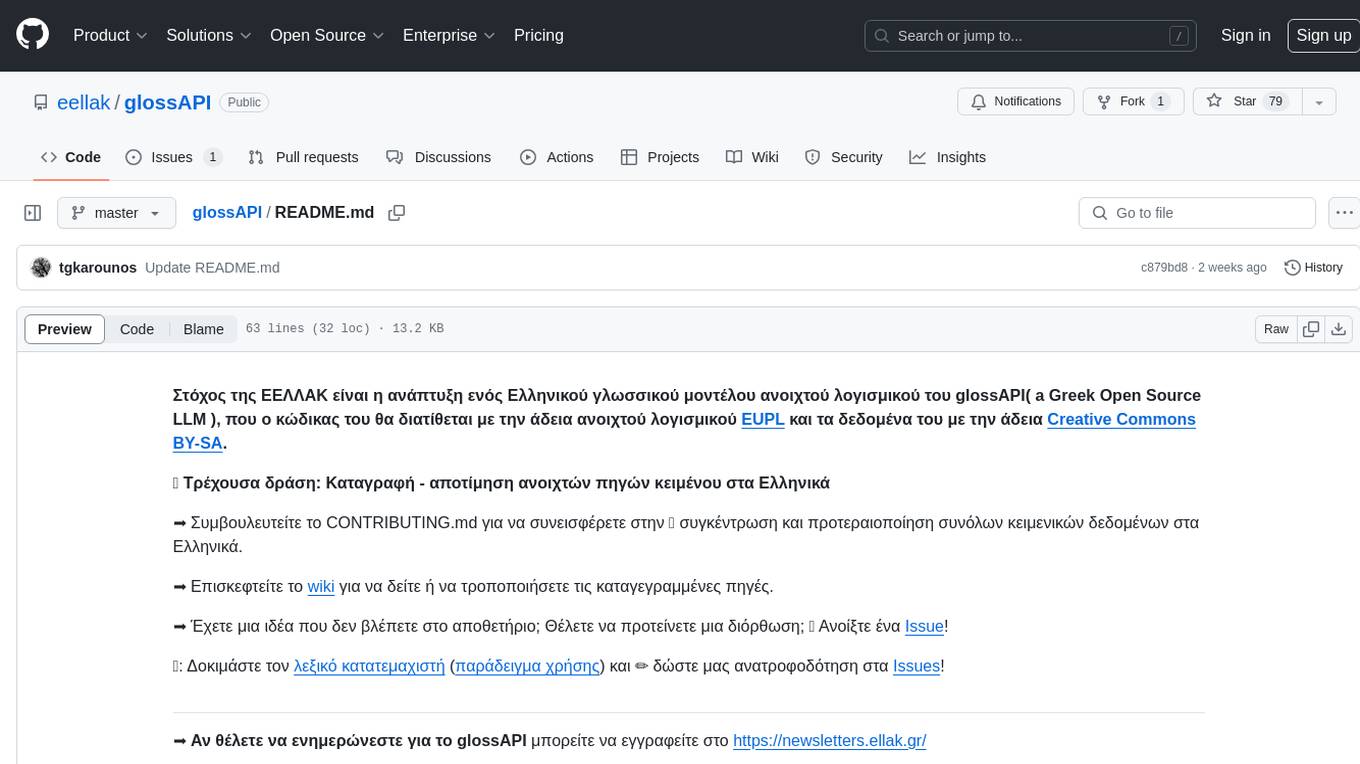

glossAPI

The glossAPI project aims to develop a Greek language model as open-source software, with code licensed under EUPL and data under Creative Commons BY-SA. The project focuses on collecting and evaluating open text sources in Greek, with efforts to prioritize and gather textual data sets. The project encourages contributions through the CONTRIBUTING.md file and provides resources in the wiki for viewing and modifying recorded sources. It also welcomes ideas and corrections through issue submissions. The project emphasizes the importance of open standards, ethically secured data, privacy protection, and addressing digital divides in the context of artificial intelligence and advanced language technologies.

For similar tasks

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.

danswer

Danswer is an open-source Gen-AI Chat and Unified Search tool that connects to your company's docs, apps, and people. It provides a Chat interface and plugs into any LLM of your choice. Danswer can be deployed anywhere and for any scale - on a laptop, on-premise, or to cloud. Since you own the deployment, your user data and chats are fully in your own control. Danswer is MIT licensed and designed to be modular and easily extensible. The system also comes fully ready for production usage with user authentication, role management (admin/basic users), chat persistence, and a UI for configuring Personas (AI Assistants) and their Prompts. Danswer also serves as a Unified Search across all common workplace tools such as Slack, Google Drive, Confluence, etc. By combining LLMs and team specific knowledge, Danswer becomes a subject matter expert for the team. Imagine ChatGPT if it had access to your team's unique knowledge! It enables questions such as "A customer wants feature X, is this already supported?" or "Where's the pull request for feature Y?"

floneum

Floneum is a graph editor that makes it easy to develop your own AI workflows. It uses large language models (LLMs) to run AI models locally, without any external dependencies or even a GPU. This makes it easy to use LLMs with your own data, without worrying about privacy. Floneum also has a plugin system that allows you to improve the performance of LLMs and make them work better for your specific use case. Plugins can be used in any language that supports web assembly, and they can control the output of LLMs with a process similar to JSONformer or guidance.

mindsdb

MindsDB is a platform for customizing AI from enterprise data. You can create, serve, and fine-tune models in real-time from your database, vector store, and application data. MindsDB "enhances" SQL syntax with AI capabilities to make it accessible for developers worldwide. With MindsDB’s nearly 200 integrations, any developer can create AI customized for their purpose, faster and more securely. Their AI systems will constantly improve themselves — using companies’ own data, in real-time.

aiscript

AiScript is a lightweight scripting language that runs on JavaScript. It supports arrays, objects, and functions as first-class citizens, and is easy to write without the need for semicolons or commas. AiScript runs in a secure sandbox environment, preventing infinite loops from freezing the host. It also allows for easy provision of variables and functions from the host.

activepieces

Activepieces is an open source replacement for Zapier, designed to be extensible through a type-safe pieces framework written in TypeScript. It features a user-friendly Workflow Builder with support for Branches, Loops, and Drag and Drop. Activepieces integrates with Google Sheets, OpenAI, Discord, and RSS, along with 80+ other integrations. The list of supported integrations continues to grow rapidly, thanks to valuable contributions from the community. Activepieces is an open ecosystem; all piece source code is available in the repository, and pieces are versioned and published directly to npmjs.com upon contribution. If you cannot find a specific piece on the pieces roadmap, please submit a request via the Request Piece link. Alternatively, if you are a developer, you can quickly build your own piece using our TypeScript framework. For guidance, please refer to the Contributor's Guide.

superagent-js

Superagent is an open source framework that enables any developer to integrate production ready AI Assistants into any application in a matter of minutes.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models, from images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features:

- Self-contained, with no need for a DBMS or cloud service.

- OpenAPI interface, easy to integrate with existing infrastructure (e.g., Cloud IDE).

- Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.