HiveChat

An AI chat application designed for small and medium-sized teams, supporting models such as DeepSeek, OpenAI, Claude, and Gemini.

Stars: 1087

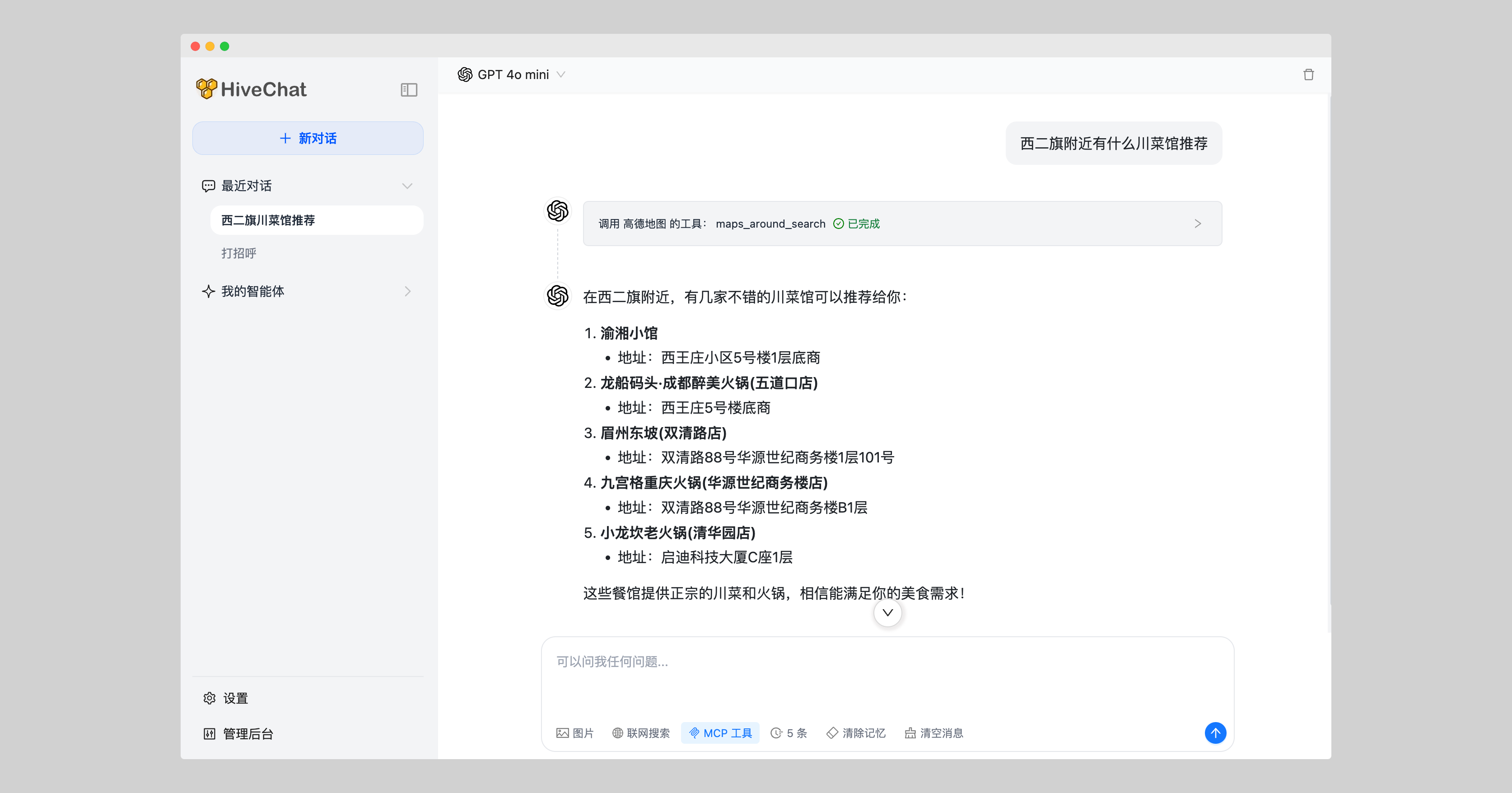

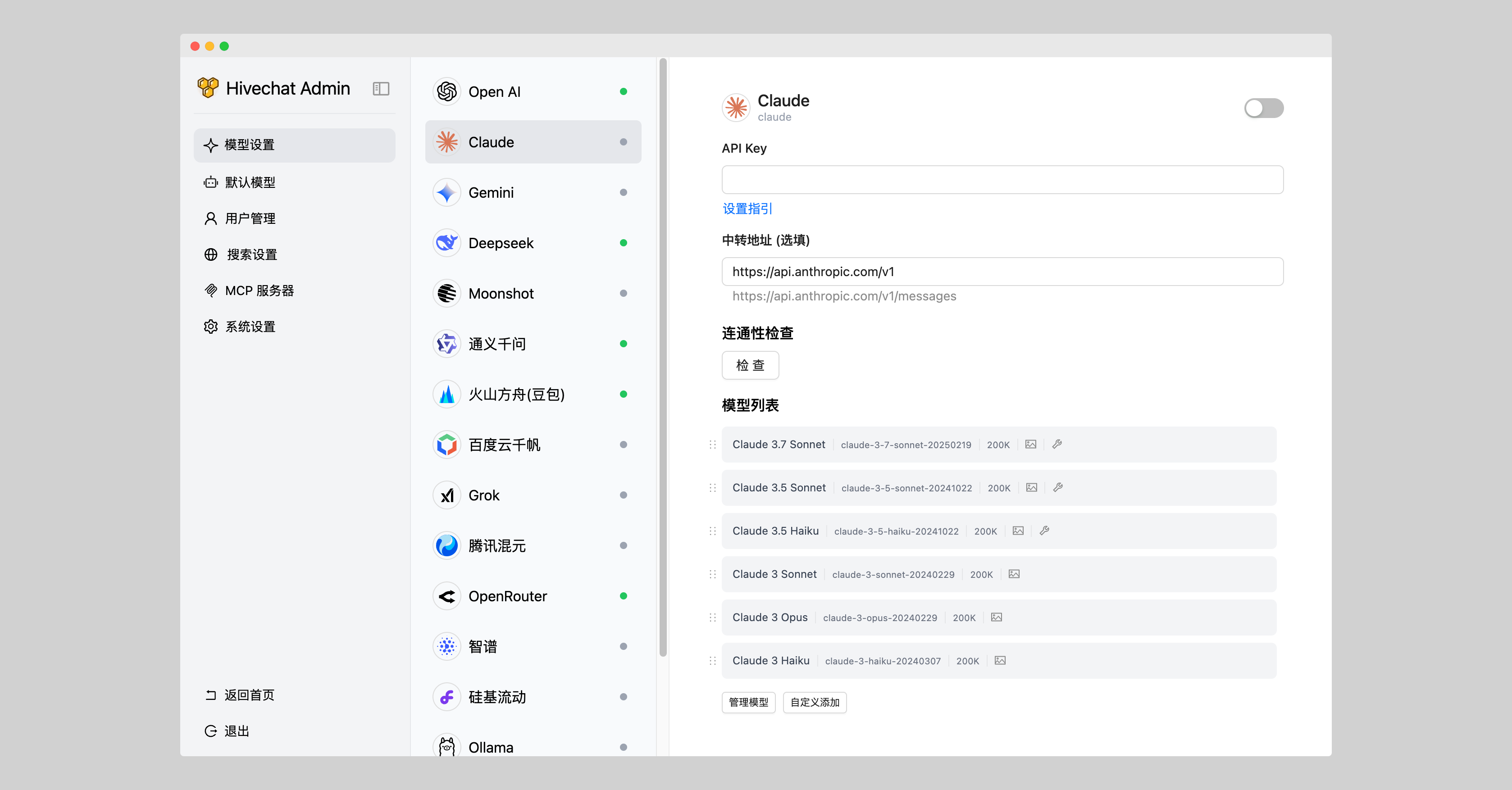

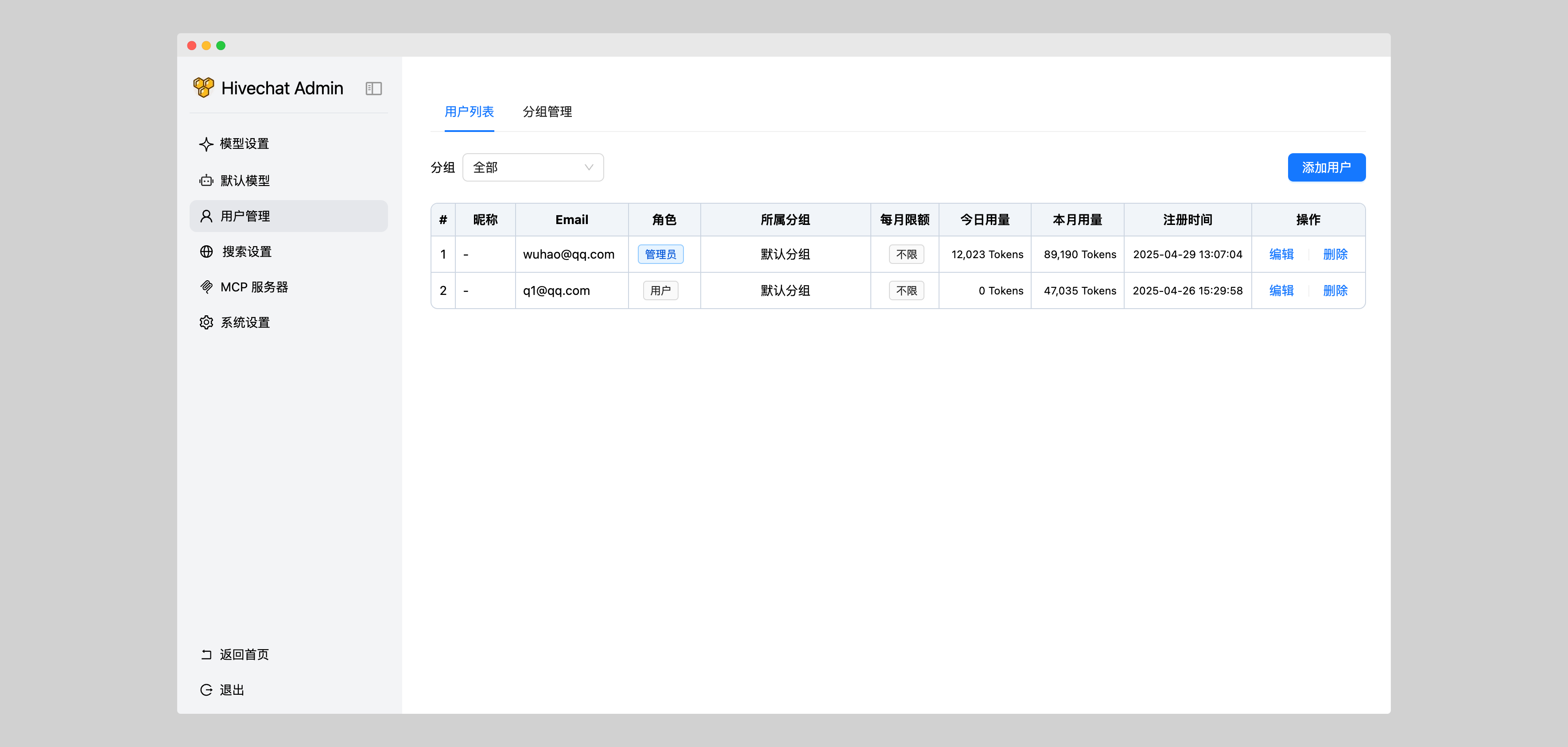

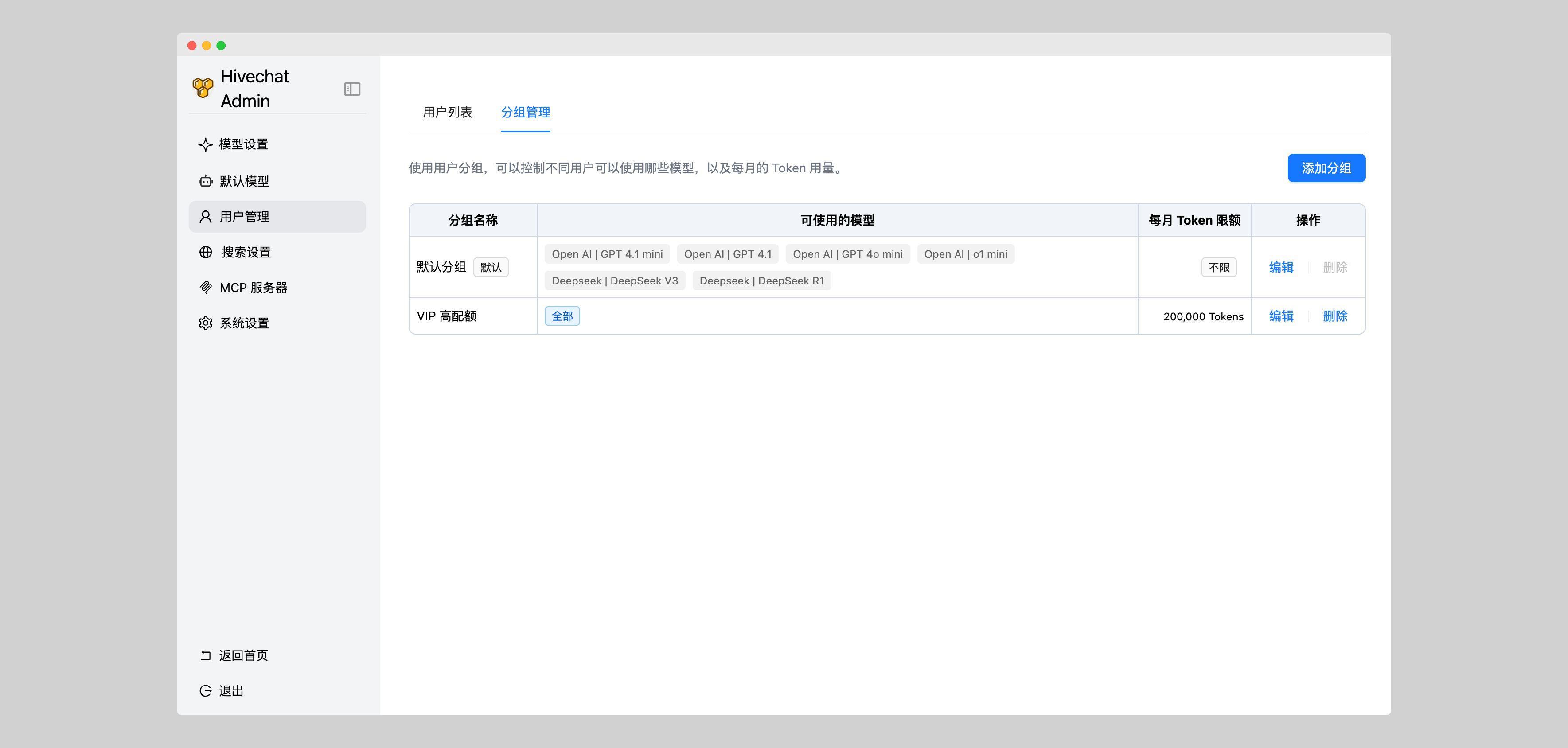

HiveChat is an AI chat application designed for small and medium-sized teams. It supports various models such as DeepSeek, OpenAI, Claude, and Gemini. A single administrator configures the system, and the entire team can then use the different AI models. It supports email, WeCom, DingTalk, or Feishu login, LaTeX and Markdown rendering, DeepSeek chain-of-thought display, image understanding, AI agents, cloud data storage, and integration with multiple large model service providers. Users start conversations by logging in, while administrators configure AI service providers, manage users, and control account registration. The technology stack includes Next.js, Tailwindcss, Auth.js, PostgreSQL, Drizzle ORM, and Ant Design.

README:

管理员一人配置,全团队轻松使用各种 AI 模型。

-

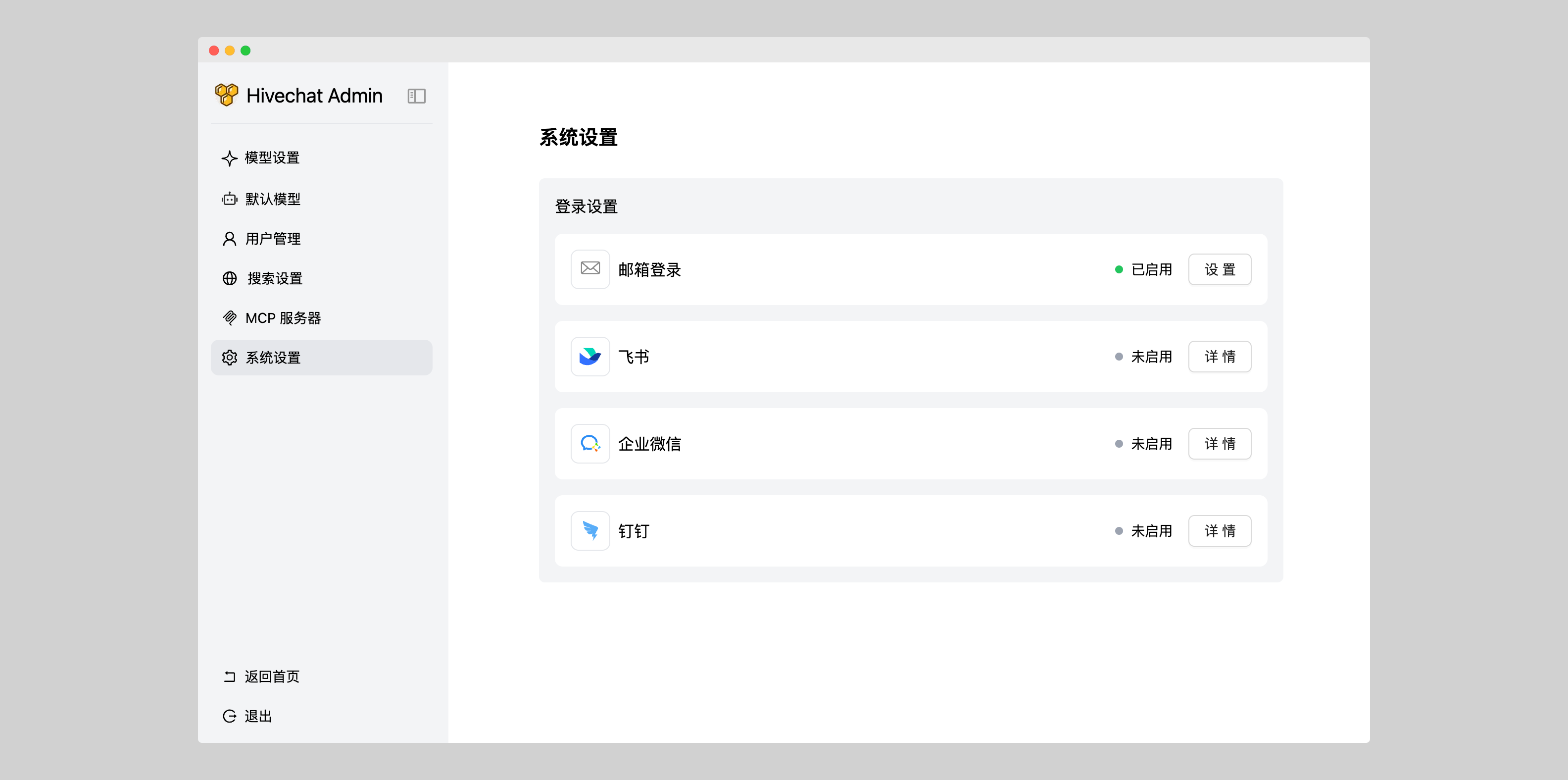

支持配置邮箱登录或企业微信、钉钉、飞书登录

-

支持分组管理用户

- 针对分组用户设置不同可使用的模型

- 针对分组用户可分别设置每月 Token 限额

-

支持配置 MCP 服务器(SSE 模式)

-

DeepSeek 思维链展示

-

LaTeX 和 Markdown 渲染

-

图像理解

-

AI 智能体

-

云端数据存储

-

支持的大模型服务商:

- Open AI

- Claude

- Gemini

- DeepSeek

- Moonshot(月之暗面)

- 火山方舟(豆包)

- 阿里百炼(千问)

- 百度千帆

- 腾讯混元

- 智谱

- Open Router

- Grok

- Ollama

- 硅基流动

-

同时支持自定义添加任意 Open AI 风格的服务商
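Before adding a custom OpenAI-style provider, it can help to confirm that its endpoint actually answers. The sketch below assumes the provider implements the OpenAI Chat Completions route (`POST <base URL>/chat/completions` with a Bearer token) and that the base URL already includes any version prefix such as `/v1`. The function names and the base URL, key, and model arguments are illustrative placeholders, not HiveChat settings.

```bash
#!/usr/bin/env bash
# Sketch: probe an OpenAI-compatible provider before adding it to HiveChat.
# Assumption: the provider serves the OpenAI Chat Completions route.
set -euo pipefail

# Join the base URL and the Chat Completions path, tolerating one trailing slash.
chat_completions_url() {
  local base="${1%/}"
  printf '%s/chat/completions\n' "$base"
}

# Send a one-message request and print the raw response plus the HTTP status.
# Arguments: base URL, API key, model name.
# The model name must not contain double quotes or backslashes (naive JSON).
probe_provider() {
  local base="$1" key="$2" model="$3"
  curl -sS "$(chat_completions_url "$base")" \
    -H "Authorization: Bearer ${key}" \
    -H "Content-Type: application/json" \
    -d "{\"model\": \"${model}\", \"messages\": [{\"role\": \"user\", \"content\": \"ping\"}]}" \
    -w '\nHTTP %{http_code}\n'
}
```

For example, `probe_provider "https://api.example.com/v1" "$API_KEY" "some-model"` should print a JSON completion and `HTTP 200` if the provider is reachable and the key is valid.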

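Log in to start chatting.

MCP usage

- Administrators configure the AI model service providers
- Users can be added manually, and account registration can be enabled or disabled, which suits small teams in companies, schools, or other organizations
- View and manage all users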

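Note: the following are demo sites, and their data may be wiped at any time.

- Cloud edition: https://www.hivechat.net/
  - Register an account to try it; multi-tenancy is supported
- Self-hosted edition, user portal: https://chat.yotuku.cn/
  - Register an account to try it
- Self-hosted edition, admin portal: https://hivechat-demo.vercel.app/
  - Email: [email protected]
  - Password: helloHivechat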

Tech stack:

- Next.js
- Tailwindcss
- Auth.js
- PostgreSQL
- Drizzle ORM
- Ant Design

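Option 1: Local deployment

Note: upgrading from an older version may involve database schema changes, so run `npm run initdb` manually to apply them. The command has no side effects even if there is nothing to update.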

- Clone this project locally

git clone https://github.com/HiveNexus/hivechat.git

- Install dependencies

cd hivechat

npm install

- Edit the local configuration file

Copy the example file to .env:

cp .env.example .env

Then edit the .env file:

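# PostgreSQL connection URL. This is an example; install PostgreSQL locally or connect to a remote instance.

# Note: local deployment does not currently support the serverless PostgreSQL offered by Vercel or Neon.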

DATABASE_URL=postgres://postgres:password@localhost/hivechat

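# Used to encrypt sensitive data such as user information. Generate a random key with openssl rand -base64 32 (32 random bytes, Base64-encoded). This is an example; replace it with your own value.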

AUTH_SECRET=hclqD3nBpMphLevxGWsUnGU6BaEa2TjrCQ77weOVpPg=

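# Admin authorization code, used to set up the administrator account after initialization. This is an example; replace it with your own value.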

ADMIN_CODE=22113344

# In production, set this to your real domain; it is used for callbacks when third-party login such as Feishu is enabled.

NEXTAUTH_URL=http://127.0.0.1:3000

# Whether to enable email login: ON to enable, OFF to disable. Enabled by default if unset.

EMAIL_AUTH_STATUS=ON

# Whether to enable Feishu login: ON to enable, OFF to disable. See Appendix 2 at the bottom for details.

FEISHU_AUTH_STATUS=OFF

FEISHU_CLIENT_ID="cli_xxxxxxxxxxxxxxxx"

FEISHU_CLIENT_SECRET="xxxxxxxxHOEWIoE7eDc1Lhc0042OXXXX"

# Whether to enable WeCom login: ON to enable, OFF to disable.

WECOM_AUTH_STATUS=OFF

WECOM_CLIENT_ID="ww728c371c2fXXXXXX"

WECOM_AGENT_ID="100XXXX"

WECOM_CLIENT_SECRET="H-7J4jzG0m1axpXLGshaCDlMOZxdjvkX6bIVLuXXXXXX"

# Whether to enable DingTalk login: ON to enable, OFF to disable.

DINGDING_AUTH_STATUS=OFF

DINGDING_CLIENT_ID="dingpcfi2kpuplXXXXXX"

DINGDING_CLIENT_SECRET="3vk9-VFCExNckqNUk_CL2F-HEgz7qGN-BimH0lZ1gUx6hWO7g_an2lnkk6XXXXXX"

- Initialize the database

npm run initdb

- Start the application

# Development

npm run dev

# Production

npm run build

npm run start

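- Initialize the administrator account

Visit http://localhost:3000/setup (substituting the domain and port you actually use) to open the administrator setup page. Once setup is complete, the system is ready to use.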

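Option 2: Docker deployment

Because of frequent recent updates, no SQL script for upgrading the database in Docker deployments is provided yet. If you are upgrading from an earlier version for testing purposes, you can delete hivechat_postgres_data under the storage volume, and the database will be re-initialized automatically. If a production Docker deployment needs an upgrade, contact the author (WeChat: wuhaoworld). Other deployment methods are not affected.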

- Clone this project locally

git clone https://github.com/HiveNexus/hivechat.git

- Edit the local configuration file

Copy the example file to .env:

cp .env.example .env

Adjust the following settings to suit your environment.

Change AUTH_SECRET and ADMIN_CODE. Be sure to set new values in production; for testing, you can leave them unchanged.
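To replace the example AUTH_SECRET and ADMIN_CODE values without editing .env by hand, a small helper script can be used. The sketch below generates AUTH_SECRET with the same `openssl rand -base64 32` command mentioned in the .env comments (32 random bytes, 44 Base64 characters) and a random 8-digit ADMIN_CODE. The helper names are hypothetical, and the digits-only ADMIN_CODE format is an assumption modeled on the example value.

```bash
#!/usr/bin/env bash
# Sketch: replace the example AUTH_SECRET and ADMIN_CODE in .env.
set -euo pipefail

# 32 random bytes, Base64-encoded (44 characters).
# Falls back to /dev/urandom + base64 when openssl is not installed.
gen_secret() {
  if command -v openssl >/dev/null 2>&1; then
    openssl rand -base64 32
  else
    head -c 32 /dev/urandom | base64
  fi
}

# Random 8-digit numeric code (assumption: digits only, like the example).
gen_admin_code() {
  local n
  n="$(od -An -N4 -tu4 /dev/urandom | tr -d ' \n')"
  printf '%08d\n' "$(( n % 100000000 ))"
}

# Set KEY=VALUE in FILE: replace an existing KEY= line, or append one.
# awk is used rather than sed because Base64 values can contain '/' and '+'.
set_env_var() {
  local key="$1" value="$2" file="$3" tmp
  touch "$file"
  tmp="$(mktemp)"
  awk -v k="$key" -v v="$value" '
    index($0, k "=") == 1 { print k "=" v; found = 1; next }
    { print }
    END { if (!found) print k "=" v }
  ' "$file" > "$tmp"
  # Note: mv gives the file the temp file's permissions (usually 0600).
  mv "$tmp" "$file"
}
```

From the project root, `set_env_var AUTH_SECRET "$(gen_secret)" .env` followed by `set_env_var ADMIN_CODE "$(gen_admin_code)" .env` overwrites the two example values and leaves every other line as it was.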

Edit the .env file:

# PostgreSQL connection URL; can be left empty for Docker deployment

DATABASE_URL=

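# Used to encrypt sensitive data such as user information. Generate a random key with openssl rand -base64 32 (32 random bytes, Base64-encoded). This is an example; replace it with your own value. For testing, you can leave it unchanged.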

AUTH_SECRET=hclqD3nBpMphLevxGWsUnGU6BaEa2TjrCQ77weOVpPg=

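# Admin authorization code, used to set up the administrator account after initialization. This is an example; replace it with your own value.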

ADMIN_CODE=22113344

# In production, set this to your real domain; it is used for callbacks when third-party login such as Feishu is enabled.

NEXTAUTH_URL=http://127.0.0.1:3000

# Whether to enable email login: ON to enable, OFF to disable. Enabled by default if unset.

EMAIL_AUTH_STATUS=ON

# Whether to enable Feishu login: ON to enable, OFF to disable. See Appendix 2 at the bottom for details.

FEISHU_AUTH_STATUS=OFF

FEISHU_CLIENT_ID="cli_xxxxxxxxxxxxxxxx"

FEISHU_CLIENT_SECRET="xxxxxxxxHOEWIoE7eDc1Lhc0042OXXXX"

# Whether to enable WeCom login: ON to enable, OFF to disable.

WECOM_AUTH_STATUS=OFF

WECOM_CLIENT_ID="ww728c371c2fXXXXXX"

WECOM_AGENT_ID="100XXXX"

WECOM_CLIENT_SECRET="H-7J4jzG0m1axpXLGshaCDlMOZxdjvkX6bIVLuXXXXXX"

# Whether to enable DingTalk login: ON to enable, OFF to disable.

DINGDING_AUTH_STATUS=OFF

DINGDING_CLIENT_ID="dingpcfi2kpuplXXXXXX"

DINGDING_CLIENT_SECRET="3vk9-VFCExNckqNUk_CL2F-HEgz7qGN-BimH0lZ1gUx6hWO7g_an2lnkk6XXXXXX"

- Start the containers

docker compose up -d

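- Initialize the administrator account

Visit http://localhost:3000/setup (substituting the domain and port you actually use) to open the administrator setup page. Once setup is complete, the system is ready to use.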
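Because `docker compose up -d` returns before the app is necessarily ready, a short polling loop can save a few failed page loads before opening the setup page. This sketch builds the setup URL from a base URL such as your NEXTAUTH_URL value (defaulting to `http://localhost:3000`, an assumption) and polls it with `curl`. The function names, retry count, and 2-second interval are arbitrary choices.

```bash
#!/usr/bin/env bash
# Sketch: wait for HiveChat to answer, then open the admin setup page.
set -euo pipefail

# Build the /setup URL from a base URL, tolerating one trailing slash.
setup_url() {
  local base="${1:-http://localhost:3000}"
  printf '%s/setup\n' "${base%/}"
}

# Poll URL up to TRIES times (default 30), 2 seconds apart.
# Without -f, curl exits 0 on any HTTP response, so an error status still counts as "up".
wait_for_app() {
  local url="$1" tries="${2:-30}" i
  for (( i = 1; i <= tries; i++ )); do
    if curl -s -o /dev/null "$url"; then
      return 0
    fi
    sleep 2
  done
  echo "no response from $url after $tries attempts" >&2
  return 1
}
```

For example, `url="$(setup_url "https://chat.example.com")"; wait_for_app "$url" && echo "Open $url"` waits up to about a minute before reporting the setup URL.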

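Option 3: Vercel deployment

Note: if upgrading from an older version to a version released after April 5, 2025 hangs, log in to the Vercel database management page manually, rename the daily_token_limit column of the group table to monthly_token_limit, and then redeploy. Because this involves a table schema change, the migration script cannot confirm or skip the step automatically, so the deployment hangs. Fresh deployments are not affected. See here for details.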
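If you have `psql` access to the database (for example, using the connection string from DATABASE_URL), the rename described above can also be run as an idempotent statement. This is a sketch, not an official migration. It assumes the table lives in the `public` schema, and it quotes `"group"` because GROUP is a reserved word in SQL. The function names and the `DRY_RUN` switch are illustrative.

```bash
#!/usr/bin/env bash
# Sketch: rename group.daily_token_limit to monthly_token_limit, only if needed.
set -euo pipefail

# Emit a PL/pgSQL block that renames the column only when the old name exists.
rename_token_limit_sql() {
  cat <<'SQL'
DO $$
BEGIN
  IF EXISTS (
    SELECT 1 FROM information_schema.columns
    WHERE table_schema = 'public'
      AND table_name = 'group'
      AND column_name = 'daily_token_limit'
  ) THEN
    ALTER TABLE public."group" RENAME COLUMN daily_token_limit TO monthly_token_limit;
  END IF;
END
$$;
SQL
}

# Run the statement against $DATABASE_URL, or only print it when DRY_RUN=1.
apply_rename() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    rename_token_limit_sql
  else
    rename_token_limit_sql | psql -v ON_ERROR_STOP=1 -d "${DATABASE_URL:?set DATABASE_URL}"
  fi
}
```

Running `DRY_RUN=1 apply_rename` first shows exactly what will be executed; running it again after the rename is harmless because the `IF EXISTS` check then finds no `daily_token_limit` column.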

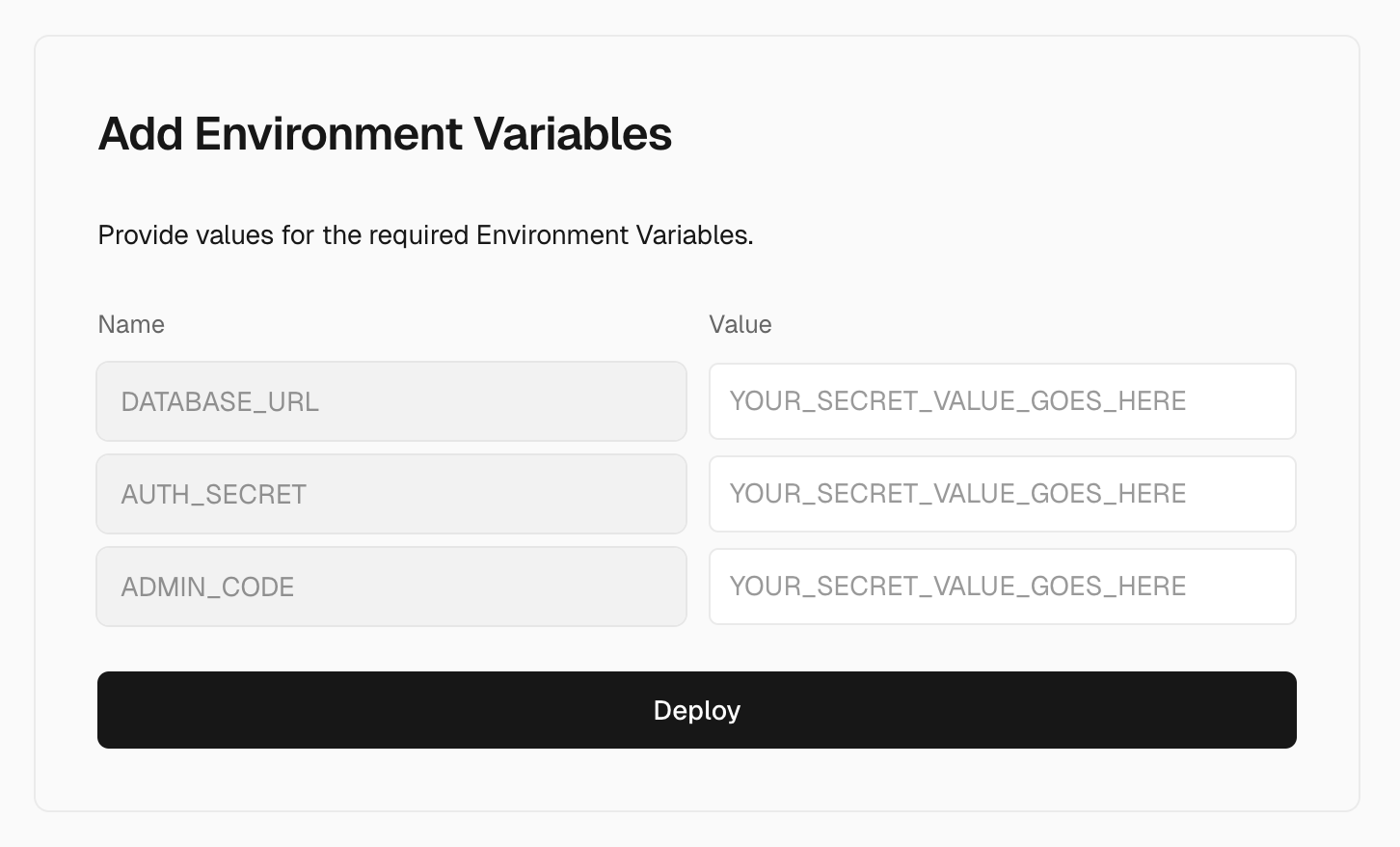

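Click the button below to start deploying.

By default, the code is cloned into your own GitHub account, after which you need to fill in the environment variables:

# PostgreSQL connection URL. Vercel provides a free hosted service; see the instructions below.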

DATABASE_URL=postgres://postgres:password@localhost/hivechat

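# Used to encrypt sensitive data such as user information. Generate a random key with openssl rand -base64 32 (32 random bytes, Base64-encoded). This is an example; replace it with your own value.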

AUTH_SECRET=hclqD3nBpMphLevxGWsUnGU6BaEa2TjrCQ77weOVpPg=

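# Admin authorization code, used to set up the administrator account after initialization. This is an example; replace it with your own value.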

ADMIN_CODE=22113344

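# In production, set this to your real domain; it is used for callbacks when third-party login such as Feishu is enabled.

# For the first deployment, you can use `https://<your Vercel project name>.vercel.app`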

NEXTAUTH_URL=https://hivechat-xxx.vercel.app

# Whether to enable email login: ON to enable, OFF to disable.

EMAIL_AUTH_STATUS=ON

# Whether to enable Feishu login: ON to enable, OFF to disable. See Appendix 2 at the bottom for details.

FEISHU_AUTH_STATUS=OFF

FEISHU_CLIENT_ID="cli_xxxxxxxxxxxxxxxx"

FEISHU_CLIENT_SECRET="xxxxxxxxHOEWIoE7eDc1Lhc0042OXXXX"

# Whether to enable WeCom login: ON to enable, OFF to disable.

WECOM_AUTH_STATUS=OFF

WECOM_CLIENT_ID="ww728c371c2fXXXXXX"

WECOM_AGENT_ID="100XXXX"

WECOM_CLIENT_SECRET="H-7J4jzG0m1axpXLGshaCDlMOZxdjvkX6bIVLuXXXXXX"

# Whether to enable DingTalk login: ON to enable, OFF to disable.

DINGDING_AUTH_STATUS=OFF

DINGDING_CLIENT_ID="dingpcfi2kpuplXXXXXX"

DINGDING_CLIENT_SECRET="3vk9-VFCExNckqNUk_CL2F-HEgz7qGN-BimH0lZ1gUx6hWO7g_an2lnkk6XXXXXX"
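The login switches above are easy to misconfigure, for example by turning a provider ON while its placeholder credentials are still in place. The sketch below checks exported environment variables under two assumptions that HiveChat itself does not state: every `*_AUTH_STATUS` should be exactly `ON` or `OFF` (or unset), and a provider set to `ON` needs a client ID and secret (plus `WECOM_AGENT_ID` for WeCom) that no longer look like the example placeholders. The function name is hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: sanity-check the *_AUTH_STATUS switches and their credentials.
# Reads variables from the current environment, for example after
#   set -a; . ./.env; set +a
set -uo pipefail

check_auth_config() {
  local errors=0 name value provider status_var
  local -a required
  # Each switch must be exactly ON or OFF, or left unset.
  for name in EMAIL_AUTH_STATUS FEISHU_AUTH_STATUS WECOM_AUTH_STATUS DINGDING_AUTH_STATUS; do
    value="${!name:-}"
    if [ -n "$value" ] && [ "$value" != "ON" ] && [ "$value" != "OFF" ]; then
      echo "$name must be ON or OFF, got '$value'" >&2
      errors=$((errors + 1))
    fi
  done
  # A provider switched ON needs credentials that are not the README placeholders
  # (heuristic: the example values contain runs of x or X).
  for provider in FEISHU WECOM DINGDING; do
    status_var="${provider}_AUTH_STATUS"
    if [ "${!status_var:-OFF}" != "ON" ]; then
      continue
    fi
    required=("${provider}_CLIENT_ID" "${provider}_CLIENT_SECRET")
    if [ "$provider" = "WECOM" ]; then
      required+=("WECOM_AGENT_ID")
    fi
    for name in "${required[@]}"; do
      value="${!name:-}"
      if [ -z "$value" ] || [[ "$value" == *xxxx* || "$value" == *XXXX* ]]; then
        echo "$name is empty or still a placeholder" >&2
        errors=$((errors + 1))
      fi
    done
  done
  # Exit status 0 only when no problems were found.
  [ "$errors" -eq 0 ]
}
```

On Vercel the same variables are entered in the project settings rather than in a file, so the check is most useful for local and Docker deployments before starting the app.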

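Upgrading

- When installing or updating on a server with npm, first run `npm run initdb` manually to check for database updates. It has no side effects even if there is nothing to update.

- When upgrading a Docker deployment to the 0.0.25 image (released 2025-06-28), update the database manually by running the following SQL: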

-- Add capability flags for built-in tools to the models table

ALTER TABLE models

ADD COLUMN built_in_image_gen boolean DEFAULT false,

ADD COLUMN built_in_web_search boolean DEFAULT false;

-- Add the openai_response value to the api_style enum type

ALTER TYPE public.api_style ADD VALUE 'openai_response';

-- Make the api_style column NOT NULL

ALTER TABLE public.llm_settings

ALTER COLUMN api_style SET NOT NULL;

- When upgrading a Docker deployment to the 0.0.26 image (released 2025-07-15), update the database manually by running the following SQL:

CREATE TYPE message_send_shortcut AS ENUM ('enter', 'ctrl_enter');

ALTER TABLE public."user"

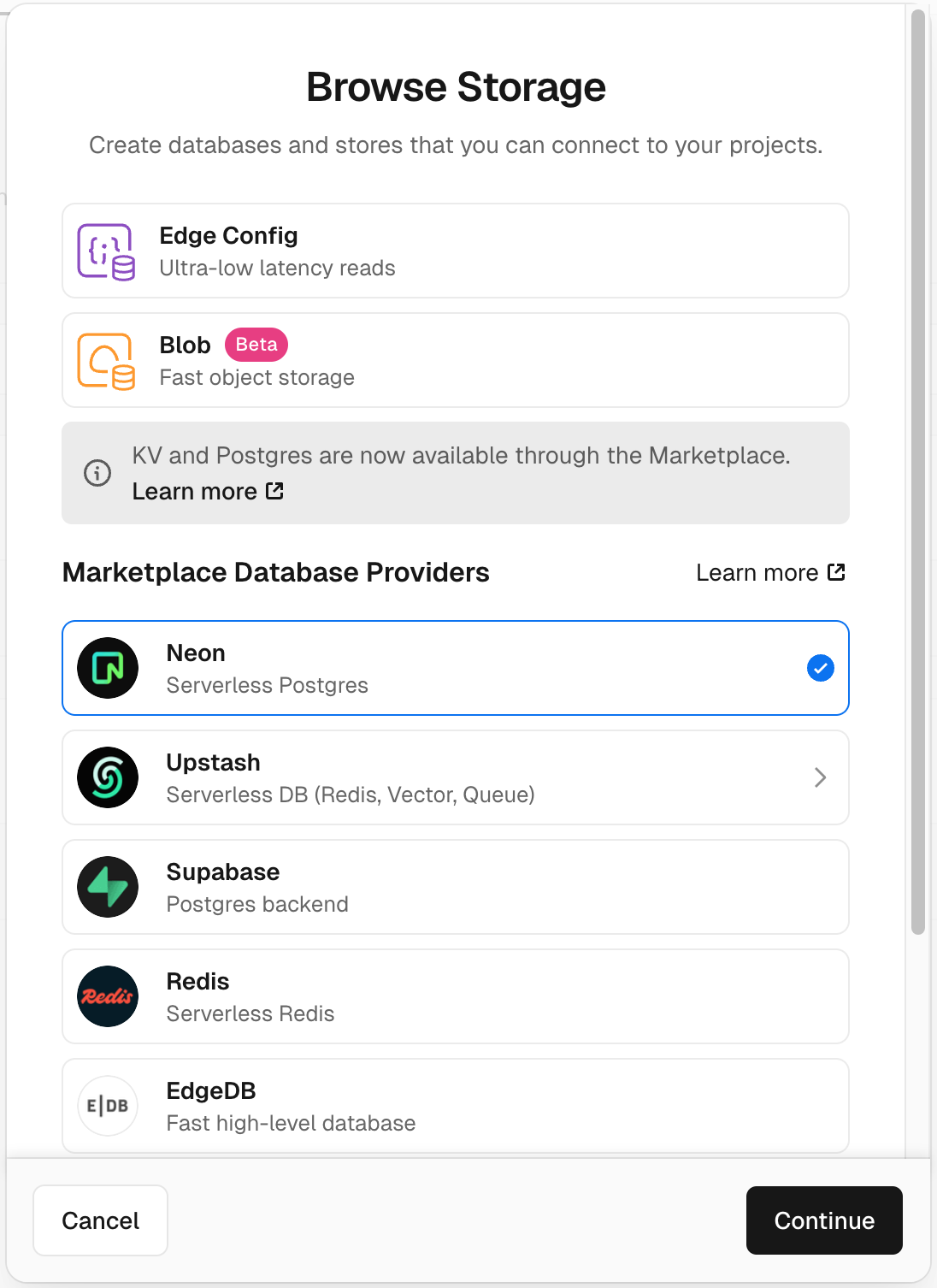

ADD COLUMN message_send_shortcut message_send_shortcut NOT NULL DEFAULT 'enter';- 在 Vercel 平台顶部导航,选择「Storage」标签,点击 Create Databse

- Select Neon (Serverless Postgres)

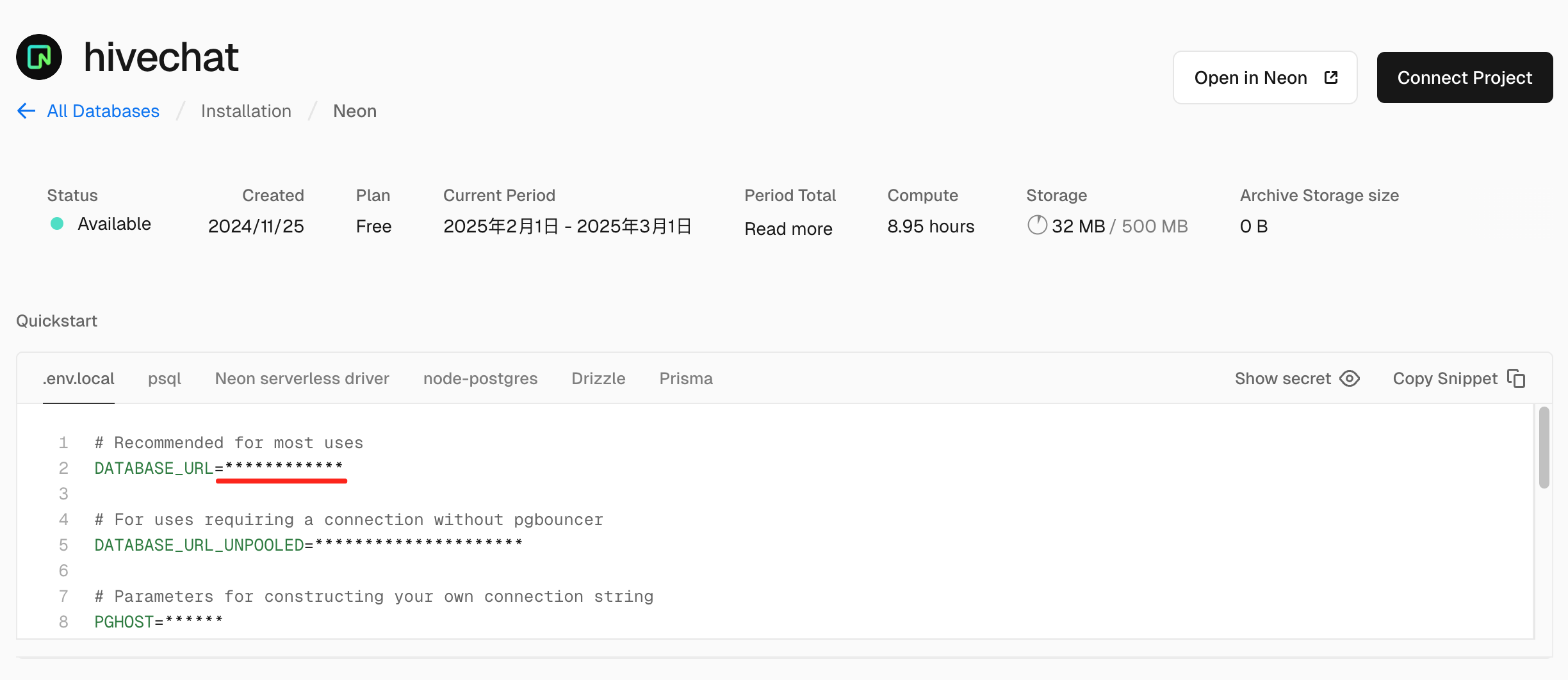

- Follow the prompts to finish creating the database, then copy the DATABASE_URL value shown here into the DATABASE_URL variable from the previous step

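- Initialize the administrator account

After installing and deploying with any of the methods above, visit http://localhost:3000/setup (substituting the domain and port you actually use) to open the administrator setup page. Once setup is complete, the system is ready to use.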
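For Docker deployments, the upgrade notes above list hand-run SQL for the 0.0.25 and 0.0.26 images. A small helper like the one below can list which of those steps apply when jumping between two image versions. It knows only the two versions named in this README and relies on `sort -V` (GNU coreutils version sort) for ordering, so treat it as a sketch; the function and variable names are hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: list the manual Docker migrations (from this README) needed
# when upgrading from image version FROM to image version TO.
set -euo pipefail

# Image versions that require hand-run SQL, per the upgrade notes.
MANUAL_SQL_VERSIONS=("0.0.25" "0.0.26")

# True if version $1 sorts strictly before version $2.
version_lt() {
  [ "$1" != "$2" ] && [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$1" ]
}

# Print each listed version V with FROM < V <= TO, in order.
pending_migrations() {
  local from="$1" to="$2" v
  for v in "${MANUAL_SQL_VERSIONS[@]}"; do
    if version_lt "$from" "$v" && ! version_lt "$to" "$v"; then
      echo "$v"
    fi
  done
}
```

For example, `pending_migrations 0.0.24 0.0.26` prints both 0.0.25 and 0.0.26, meaning both SQL snippets should be applied in that order.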

If the group chat invite has expired, add wuhaoworld on WeChat to be added to the group.


Alternative AI tools for HiveChat

Similar Open Source Tools


new-api

New API is an open-source project based on One API with additional features and improvements. It offers a new UI interface, supports Midjourney-Proxy(Plus) interface, online recharge functionality, model-based charging, channel weight randomization, data dashboard, token-controlled models, Telegram authorization login, Suno API support, Rerank model integration, and various third-party models. Users can customize models, retry channels, and configure caching settings. The deployment can be done using Docker with SQLite or MySQL databases. The project provides documentation for Midjourney and Suno interfaces, and it is suitable for AI enthusiasts and developers looking to enhance AI capabilities.

ChatPilot

ChatPilot is a chat agent tool that enables AgentChat conversations, supports Google search, URL conversation (RAG), and code interpreter functionality, replicates Kimi Chat (file, drag and drop; URL, send out), and supports OpenAI/Azure API. It is based on LangChain and implements ReAct and OpenAI Function Call for agent Q&A dialogue. The tool supports various automatic tools such as online search using Google Search API, URL parsing tool, Python code interpreter, and enhanced RAG file Q&A with query rewriting support. It also allows front-end and back-end service separation using Svelte and FastAPI, respectively. Additionally, it supports voice input/output, image generation, user management, permission control, and chat record import/export.

app-platform

AppPlatform is an advanced large-scale model application engineering aimed at simplifying the development process of AI applications through integrated declarative programming and low-code configuration tools. This project provides a powerful and scalable environment for software engineers and product managers to support the full-cycle development of AI applications from concept to deployment. The backend module is based on the FIT framework, utilizing a plugin-based development approach, including application management and feature extension modules. The frontend module is developed using React framework, focusing on core modules such as application development, application marketplace, intelligent forms, and plugin management. Key features include low-code graphical interface, powerful operators and scheduling platform, and sharing and collaboration capabilities. The project also provides detailed instructions for setting up and running both backend and frontend environments for development and testing.

cool-admin-midway

Cool-admin (midway version) is a cool open-source backend permission management system that supports modular, plugin-based, rapid CRUD development. It facilitates the quick construction and iteration of backend management systems, deployable in various ways such as serverless, docker, and traditional servers. It features AI coding for generating APIs and frontend pages, flow orchestration for drag-and-drop functionality, modular and plugin-based design for clear and maintainable code. The tech stack includes Node.js, Midway.js, Koa.js, TypeScript for backend, and Vue.js, Element-Plus, JSX, Pinia, Vue Router for frontend. It offers friendly technology choices for both frontend and backend developers, with TypeScript syntax similar to Java and PHP for backend developers. The tool is suitable for those looking for a modern, efficient, and fast development experience.

langchain4j-aideepin-web

The langchain4j-aideepin-web repository is the frontend project of langchain4j-aideepin, an open-source, offline deployable retrieval enhancement generation (RAG) project based on large language models such as ChatGPT and application frameworks such as Langchain4j. It includes features like registration & login, multi-sessions (multi-roles), image generation (text-to-image, image editing, image-to-image), suggestions, quota control, knowledge base (RAG) based on large models, model switching, and search engine switching.

evalplus

EvalPlus is a rigorous evaluation framework for LLM4Code, providing HumanEval+ and MBPP+ tests to evaluate large language models on code generation tasks. It offers precise evaluation and ranking, coding rigorousness analysis, and pre-generated code samples. Users can use EvalPlus to generate code solutions, post-process code, and evaluate code quality. The tool includes tools for code generation and test input generation using various backends.

wechat-bot

WeChat Bot is a simple and easy-to-use WeChat robot based on chatgpt and wechaty. It can help you automatically reply to WeChat messages or manage WeChat groups/friends. The tool requires configuration of AI services such as Xunfei, Kimi, or ChatGPT. Users can customize the tool to automatically reply to group or private chat messages based on predefined conditions. The tool supports running in Docker for easy deployment and provides a convenient way to interact with various AI services for WeChat automation.

emohaa-free-api

Emohaa AI Free API is a free API that allows you to access the Emohaa AI chatbot. Emohaa AI is a powerful chatbot that can understand and respond to a wide range of natural language queries. It can be used for a variety of purposes, such as customer service, information retrieval, and language translation. The Emohaa AI Free API is easy to use and can be integrated into any application. It is a great way to add AI capabilities to your projects without having to build your own chatbot from scratch.

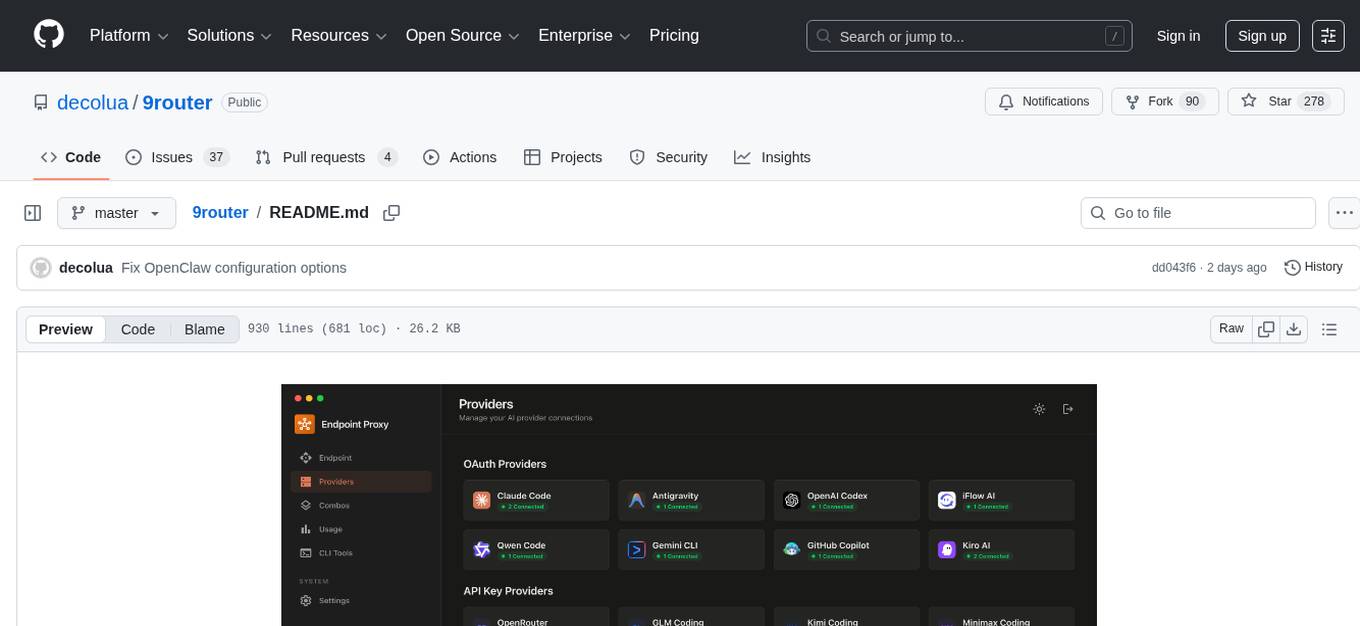

9router

9Router is a free AI router tool designed to help developers maximize their AI subscriptions, auto-route to free and cheap AI models with smart fallback, and avoid hitting limits and wasting money. It offers features like real-time quota tracking, format translation between OpenAI, Claude, and Gemini, multi-account support, auto token refresh, custom model combinations, request logging, cloud sync, usage analytics, and flexible deployment options. The tool supports various providers like Claude Code, Codex, Gemini CLI, GitHub Copilot, GLM, MiniMax, iFlow, Qwen, and Kiro, and allows users to create combos for different scenarios. Users can connect to the tool via CLI tools like Cursor, Claude Code, Codex, OpenClaw, and Cline, and deploy it on VPS, Docker, or Cloudflare Workers.

AMchat

AMchat is a large language model that integrates advanced math concepts, exercises, and solutions. The model is based on the InternLM2-Math-7B model and is specifically designed to answer advanced math problems. It provides a comprehensive dataset that combines Math and advanced math exercises and solutions. Users can download the model from ModelScope or OpenXLab, deploy it locally or using Docker, and even retrain it using XTuner for fine-tuning. The tool also supports LMDeploy for quantization, OpenCompass for evaluation, and various other features for model deployment and evaluation. The project contributors have provided detailed documentation and guides for users to utilize the tool effectively.

EasyAIVtuber

EasyAIVtuber is a tool designed to animate 2D waifus by providing features like automatic idle actions, speaking animations, head nodding, singing animations, and sleeping mode. It also offers API endpoints and a web UI for interaction. The tool requires dependencies like torch and pre-trained models for optimal performance. Users can easily test the tool using OBS and UnityCapture, with options to customize character input, output size, simplification level, webcam output, model selection, port configuration, sleep interval, and movement extension. The tool also provides an API using Flask for actions like speaking based on audio, rhythmic movements, singing based on music and voice, stopping current actions, and changing images.

meet-libai

The 'meet-libai' project aims to promote and popularize the cultural heritage of the Chinese poet Li Bai by constructing a knowledge graph of Li Bai and training a professional AI intelligent body using large models. The project includes features such as data preprocessing, knowledge graph construction, question-answering system development, and visualization exploration of the graph structure. It also provides code implementations for large models and RAG retrieval enhancement.

llm_model_hub

Model Hub V2 is a one-stop platform for model fine-tuning, deployment, and debugging without code, providing users with a visual interface to quickly validate the effects of fine-tuning various open-source models, facilitating rapid experimentation and decision-making, and lowering the threshold for users to fine-tune large models. For detailed instructions, please refer to the Feishu documentation.

botgroup.chat

botgroup.chat is a multi-person AI chat application based on React and Cloudflare Pages for free one-click deployment. It supports multiple AI roles participating in conversations simultaneously, providing an interactive experience similar to group chat. The application features real-time streaming responses, customizable AI roles and personalities, group management functionality, AI role mute function, Markdown format support, mathematical formula display with KaTeX, aesthetically pleasing UI design, and responsive design for mobile devices.

ai-wechat-bot

Gewechat is a project based on the Gewechat project to implement a personal WeChat channel, using the iPad protocol for login. It can obtain wxid and send voice messages, which is more stable than the itchat protocol. The project provides documentation for the API. Users can deploy the Gewechat service and use the ai-wechat-bot project to interface with it. Configuration parameters for Gewechat and ai-wechat-bot need to be set in the config.json file. Gewechat supports sending voice messages, with limitations on the duration of received voice messages. The project has restrictions such as requiring the server to be in the same province as the device logging into WeChat, limited file download support, and support only for text and image messages.

For similar tasks

h2ogpt

h2oGPT is an Apache V2 open-source project that allows users to query and summarize documents or chat with local private GPT LLMs. It features a private offline database of any documents (PDFs, Excel, Word, Images, Video Frames, Youtube, Audio, Code, Text, MarkDown, etc.), a persistent database (Chroma, Weaviate, or in-memory FAISS) using accurate embeddings (instructor-large, all-MiniLM-L6-v2, etc.), and efficient use of context using instruct-tuned LLMs (no need for LangChain's few-shot approach). h2oGPT also offers parallel summarization and extraction, reaching an output of 80 tokens per second with the 13B LLaMa2 model, HYDE (Hypothetical Document Embeddings) for enhanced retrieval based upon LLM responses, a variety of models supported (LLaMa2, Mistral, Falcon, Vicuna, WizardLM. With AutoGPTQ, 4-bit/8-bit, LORA, etc.), GPU support from HF and LLaMa.cpp GGML models, and CPU support using HF, LLaMa.cpp, and GPT4ALL models. Additionally, h2oGPT provides Attention Sinks for arbitrarily long generation (LLaMa-2, Mistral, MPT, Pythia, Falcon, etc.), a UI or CLI with streaming of all models, the ability to upload and view documents through the UI (control multiple collaborative or personal collections), Vision Models LLaVa, Claude-3, Gemini-Pro-Vision, GPT-4-Vision, Image Generation Stable Diffusion (sdxl-turbo, sdxl) and PlaygroundAI (playv2), Voice STT using Whisper with streaming audio conversion, Voice TTS using MIT-Licensed Microsoft Speech T5 with multiple voices and Streaming audio conversion, Voice TTS using MPL2-Licensed TTS including Voice Cloning and Streaming audio conversion, AI Assistant Voice Control Mode for hands-free control of h2oGPT chat, Bake-off UI mode against many models at the same time, Easy Download of model artifacts and control over models like LLaMa.cpp through the UI, Authentication in the UI by user/password via Native or Google OAuth, State Preservation in the UI by user/password, Linux, Docker, macOS, and Windows support, Easy Windows Installer for Windows 10 64-bit (CPU/CUDA), Easy macOS Installer for macOS (CPU/M1/M2), Inference Servers support (oLLaMa, HF TGI server, vLLM, Gradio, ExLLaMa, Replicate, OpenAI, Azure OpenAI, Anthropic), OpenAI-compliant, Server Proxy API (h2oGPT acts as drop-in-replacement to OpenAI server), Python client API (to talk to Gradio server), JSON Mode with any model via code block extraction. Also supports MistralAI JSON mode, Claude-3 via function calling with strict Schema, OpenAI via JSON mode, and vLLM via guided_json with strict Schema, Web-Search integration with Chat and Document Q/A, Agents for Search, Document Q/A, Python Code, CSV frames (Experimental, best with OpenAI currently), Evaluate performance using reward models, and Quality maintained with over 1000 unit and integration tests taking over 4 GPU-hours.

serverless-chat-langchainjs

This sample shows how to build a serverless chat experience with Retrieval-Augmented Generation using LangChain.js and Azure. The application is hosted on Azure Static Web Apps and Azure Functions, with Azure Cosmos DB for MongoDB vCore as the vector database. You can use it as a starting point for building more complex AI applications.

react-native-vercel-ai

Run Vercel AI package on React Native, Expo, Web and Universal apps. Currently React Native fetch API does not support streaming which is used as a default on Vercel AI. This package enables you to use AI library on React Native but the best usage is when used on Expo universal native apps. On mobile you get back responses without streaming with the same API of `useChat` and `useCompletion` and on web it will fallback to `ai/react`

LLamaSharp

LLamaSharp is a cross-platform library to run 🦙LLaMA/LLaVA model (and others) on your local device. Based on llama.cpp, inference with LLamaSharp is efficient on both CPU and GPU. With the higher-level APIs and RAG support, it's convenient to deploy LLM (Large Language Model) in your application with LLamaSharp.

gpt4all

GPT4All is an ecosystem to run powerful and customized large language models that work locally on consumer grade CPUs and any GPU. Note that your CPU needs to support AVX or AVX2 instructions. Learn more in the documentation. A GPT4All model is a 3GB - 8GB file that you can download and plug into the GPT4All open-source ecosystem software. Nomic AI supports and maintains this software ecosystem to enforce quality and security alongside spearheading the effort to allow any person or enterprise to easily train and deploy their own on-edge large language models.

ChatGPT-Telegram-Bot

ChatGPT Telegram Bot is a Telegram bot that provides a smooth AI experience. It supports both Azure OpenAI and native OpenAI, and offers real-time (streaming) response to AI, with a faster and smoother experience. The bot also has 15 preset bot identities that can be quickly switched, and supports custom bot identities to meet personalized needs. Additionally, it supports clearing the contents of the chat with a single click, and restarting the conversation at any time. The bot also supports native Telegram bot button support, making it easy and intuitive to implement required functions. User level division is also supported, with different levels enjoying different single session token numbers, context numbers, and session frequencies. The bot supports English and Chinese on UI, and is containerized for easy deployment.

twinny

Twinny is a free and open-source AI code completion plugin for Visual Studio Code and compatible editors. It integrates with various tools and frameworks, including Ollama, llama.cpp, oobabooga/text-generation-webui, LM Studio, LiteLLM, and Open WebUI. Twinny offers features such as fill-in-the-middle code completion, chat with AI about your code, customizable API endpoints, and support for single or multiline fill-in-middle completions. It is easy to install via the Visual Studio Code extensions marketplace and provides a range of customization options. Twinny supports both online and offline operation and conforms to the OpenAI API standard.

agnai

Agnaistic is an AI roleplay chat tool that allows users to interact with personalized characters using their favorite AI services. It supports multiple AI services, persona schema formats, and features such as group conversations, user authentication, and memory/lore books. Agnaistic can be self-hosted or run using Docker, and it provides a range of customization options through its settings.json file. The tool is designed to be user-friendly and accessible, making it suitable for both casual users and developers.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.