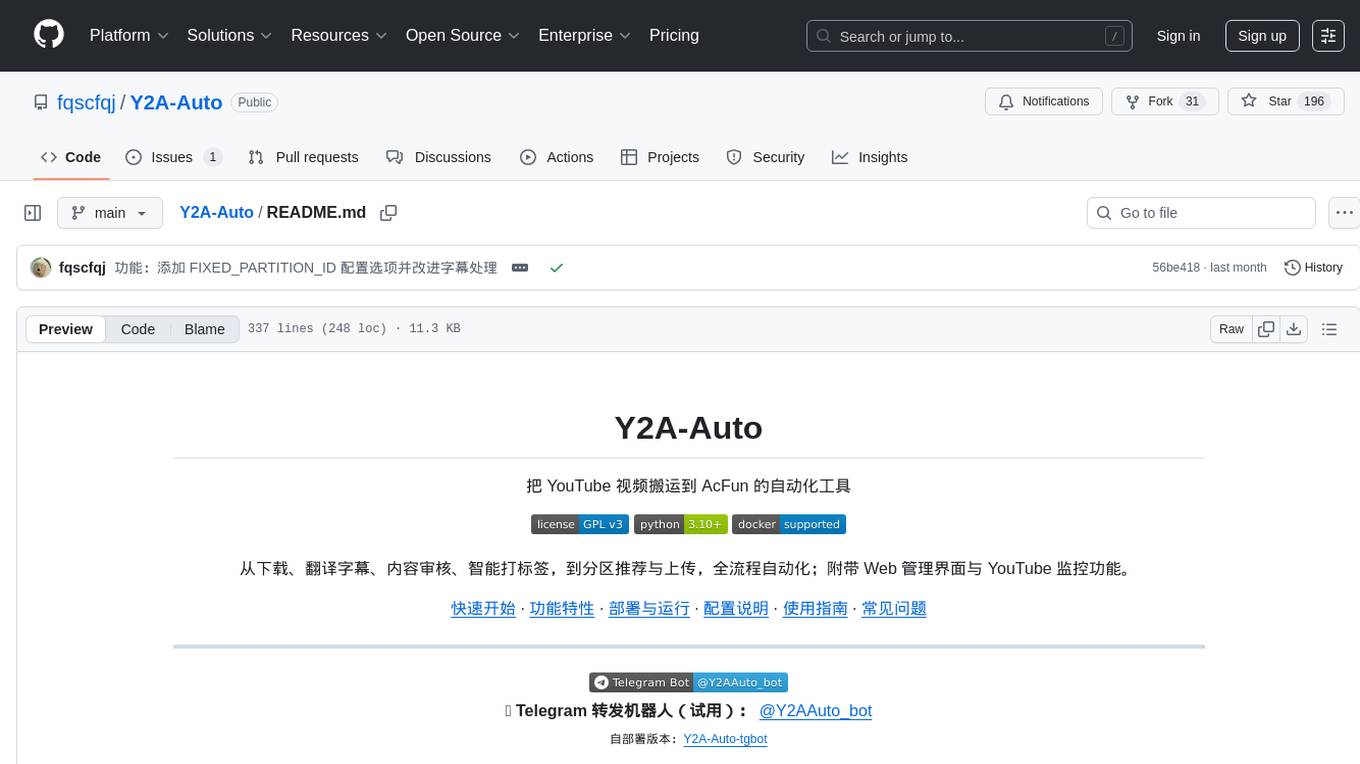

# Y2A-Auto

A YouTube-to-AcFun automated reposting tool with AI translation, subtitle generation, content moderation, and smart monitoring.


Y2A-Auto is an automation tool that transfers YouTube videos to AcFun. It automates the entire process from downloading, translating subtitles, content moderation, intelligent tagging, to partition recommendation and upload. It also includes a web management interface and YouTube monitoring feature. The tool supports features such as downloading videos and covers using yt-dlp, AI translation and embedding of subtitles, AI generation of titles/descriptions/tags, content moderation using Aliyun Green, uploading to AcFun, task management, manual review, and forced upload. It also offers settings for automatic mode, concurrency, proxies, subtitles, login protection, brute force lock, YouTube monitoring, channel/trend capturing, scheduled tasks, history records, optional GPU/hardware acceleration, and Docker deployment or local execution.

README:

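An automation tool for reposting YouTube videos to AcFun.

It automates the whole pipeline, from downloading, subtitle translation, content moderation, and smart tagging to category recommendation and upload, and ships with a web management interface and YouTube monitoring.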


📣 Telegram forwarding bot (trial): @Y2AAuto_bot

Self-hosted version: Y2A-Auto-tgbot

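## Features

- End-to-end automation
  - Download videos and covers with yt-dlp
  - Subtitle download, AI translation, and automatic quality check (QC), with optional embedding into the video
  - AI-generated titles/descriptions and tags, plus category recommendation
  - Content safety moderation (Aliyun Green)
  - Upload to AcFun (based on acfun_upload)
- Web admin panel
  - Task list, manual review, forced upload
  - Settings center (toggle auto mode, concurrency, proxy, subtitles, etc.)
  - Login protection and brute-force lockout
- YouTube monitoring
  - Channel/trending capture (requires an API key)
  - Scheduled tasks and history
- Video encoding
  - Optional GPU hardware encoding (NVIDIA, Intel, AMD)
- One-click Docker deployment, or run locally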

## Project Structure

```text
Y2A-Auto/
├─ app.py                    # Flask web entry point
├─ requirements.txt          # Dependency list
├─ Dockerfile                # Docker build
├─ docker-compose.yml        # Production / run with the pulled image
├─ docker-compose-build.yml  # Run with a locally built image
├─ Makefile                  # Common Docker management commands
├─ README.md                 # Project documentation (this file)
├─ LICENSE                   # License
├─ acfunid/                  # AcFun category mapping
│  └─ id_mapping.json
├─ build-tools/              # Packaging/build scripts
│  ├─ build_exe.py
│  ├─ build.bat
│  ├─ README.md
│  └─ setup_app.py
├─ config/                   # App configuration (generated on first run)
│  └─ config.json
├─ cookies/                  # Cookies (provide your own)
│  ├─ ac_cookies.txt
│  └─ yt_cookies.txt
├─ db/                       # SQLite database and persistent data
├─ downloads/                # Task outputs (one subdirectory per task)
├─ ffmpeg/                   # Bundled Windows/Linux ffmpeg; replace as needed
├─ fonts/                    # Fonts (used for subtitle embedding)
├─ logs/                     # Runtime and per-task logs
├─ modules/                  # Core backend modules (application logic)
│  ├─ __init__.py
│  ├─ acfun_uploader.py
│  ├─ ai_enhancer.py
│  ├─ config_manager.py
│  ├─ content_moderator.py
│  ├─ speech_recognition.py
│  ├─ subtitle_translator.py
│  ├─ subtitle_qc.py         # Subtitle QC (optional)
│  ├─ task_manager.py
│  ├─ youtube_handler.py
│  ├─ youtube_monitor.py
│  └─ utils.py
├─ static/                   # Front-end static assets (CSS/JS/icons/third-party libraries)
│  ├─ css/
│  │  └─ style.css
│  ├─ img/
│  ├─ js/
│  │  └─ main.js
│  └─ lib/
│     ├─ bootstrap/
│     │  ├─ bootstrap.bundle.min.js
│     │  ├─ bootstrap.min.css
│     │  └─ jquery.min.js
│     └─ icons/
│        └─ bootstrap-icons.css
├─ temp/                     # Temporary files and intermediate outputs
└─ templates/                # Jinja2 templates
   ├─ base.html
   ├─ edit_task.html
   ├─ index.html
   ├─ login.html
   ├─ manual_review.html
   ├─ settings.html
   ├─ tasks.html
   ├─ youtube_monitor_config.html
   ├─ youtube_monitor_history.html
   └─ youtube_monitor.html
```

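## Quick Start

Docker is recommended (no need to install Python, FFmpeg, or yt-dlp locally):

1. Prepare cookies (important)
   - Create `cookies/yt_cookies.txt` (YouTube login cookies)
   - Create `cookies/ac_cookies.txt` (AcFun login cookies)
   - Cookies can be exported with a browser extension (for example "Get cookies.txt"). Protect your privacy and never commit these files to the repository.
2. Start the service
   - With Docker and Docker Compose installed, run this in the project root:

     ```bash
     docker compose up -d
     ```
3. Open the web UI
   - Visit http://localhost:5000 in a browser.
   - On first visit, use the Settings page to enable login protection and set a password, turn on auto mode, and so on.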

The `config`, `db`, `downloads`, `logs`, `temp`, and `cookies` directories are mounted into the container, so data persists across restarts.

- Using the prebuilt image: `docker-compose.yml` already configures the port and mounted directories.
- Stop / restart / view logs:
  - Stop: `docker compose down`
  - Restart: `docker compose restart`
  - Logs: `docker compose logs -f`
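Most download and upload failures trace back to the cookie files, so it can help to confirm they parse as Netscape-format `cookies.txt` before starting the service. The sketch below uses Python's standard `http.cookiejar.MozillaCookieJar`; the helper name `count_site_cookies` is hypothetical and not part of Y2A-Auto, and treating `acfun.cn` as the AcFun cookie domain is an assumption.

```python
from http.cookiejar import MozillaCookieJar


def count_site_cookies(path: str, site: str) -> int:
    """Count unexpired cookies in a Netscape-format cookies.txt whose domain matches `site`.

    Raises FileNotFoundError if the file is missing and http.cookiejar.LoadError
    (a subclass of OSError) if it lacks the "# Netscape HTTP Cookie File" header.
    """
    jar = MozillaCookieJar()
    # ignore_discard=True keeps session cookies (empty expiry) that exports often contain;
    # expired cookies are still dropped because ignore_expires defaults to False.
    jar.load(path, ignore_discard=True)
    count = 0
    for cookie in jar:
        domain = cookie.domain.lstrip(".")
        if domain == site or domain.endswith("." + site):
            count += 1
    return count


if __name__ == "__main__":
    # acfun.cn as the AcFun cookie domain is an assumption for this sketch.
    for path, site in [("cookies/yt_cookies.txt", "youtube.com"),
                       ("cookies/ac_cookies.txt", "acfun.cn")]:
        try:
            print(f"{path}: {count_site_cookies(path, site)} cookie(s) for {site}")
        except OSError as exc:  # covers FileNotFoundError and LoadError
            print(f"{path}: could not load ({exc})")
```

A count of zero for `youtube.com` usually means the export was taken from the wrong site or while logged out.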

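## Running Locally

Prerequisites:

- Python 3.11+
- FFmpeg (the repository ships Windows/Linux builds that can be used directly)
- yt-dlp (`pip install yt-dlp`)

Steps:

```powershell
# 1) Create and activate a virtual environment (Windows PowerShell)
#    On Linux/macOS: python3.11 -m venv .venv && source .venv/bin/activate
py -3.11 -m venv .venv
.\.venv\Scripts\Activate.ps1

# 2) Install dependencies
pip install -r requirements.txt

# 3) Run the Flask app
python app.py
```

Open http://127.0.0.1:5000 to access the web UI.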
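A quick way to check the prerequisites above before running `app.py` is sketched below. The function `missing_prerequisites` is hypothetical (not part of the project); it only looks at the Python version and the `PATH`, so an `ffmpeg` miss may be harmless if the bundled `ffmpeg/` directory is used.

```python
import shutil
import sys
from typing import Callable, List, Optional, Sequence


def missing_prerequisites(
    version: Sequence[int] = tuple(sys.version_info[:2]),
    which: Callable[[str], Optional[str]] = shutil.which,
) -> List[str]:
    """Return a description of each local-run prerequisite that appears to be missing."""
    missing: List[str] = []
    major, minor = version[0], version[1]
    if (major, minor) < (3, 11):
        missing.append(f"Python 3.11+ (found {major}.{minor})")
    # Only PATH is checked; the bundled ffmpeg/ directory is not inspected here.
    for tool in ("ffmpeg", "yt-dlp"):
        if which(tool) is None:
            missing.append(f"{tool} not found on PATH")
    return missing


if __name__ == "__main__":
    problems = missing_prerequisites()
    print("\n".join(problems) if problems else "All prerequisites found.")
```

The `version` and `which` parameters exist only so the check can be exercised without touching the real environment.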

## Configuration

On first run the application generates `config/config.json`; you can also edit it by hand. Commonly used options:

```json
{
  "AUTO_MODE_ENABLED": true,
  "password_protection_enabled": true,
  "password": "set your own password",
  "YOUTUBE_COOKIES_PATH": "cookies/yt_cookies.txt",
  "ACFUN_COOKIES_PATH": "cookies/ac_cookies.txt",
  "OPENAI_API_KEY": "optional: used for titles/descriptions/tags, subtitle translation, and subtitle QC",
  "OPENAI_BASE_URL": "https://api.openai.com/v1",
  "OPENAI_MODEL_NAME": "gpt-3.5-turbo",
  "SUBTITLE_TRANSLATION_ENABLED": true,
  "SUBTITLE_TARGET_LANGUAGE": "zh",
  "SUBTITLE_QC_ENABLED": false,
  "SUBTITLE_QC_THRESHOLD": 0.6,
  "SUBTITLE_QC_SAMPLE_MAX_ITEMS": 80,
  "YOUTUBE_API_KEY": "optional: enables YouTube monitoring",
  "VIDEO_ENCODER": "auto",
  "VIDEO_CRF": 23,
  "VIDEO_PRESET": "medium",
  "VIDEO_BITRATE": ""
}
```
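If you edit `config.json` by hand, a small loader that overlays the file on defaults and rejects out-of-range values can catch typos early. This is a hypothetical sketch, not the project's `config_manager.py`; the defaults are taken from the sample above and the transcoding parameter table, and the ranges (QC threshold 0-1, CRF 0-51, encoder names) come from this README.

```python
import json
from pathlib import Path

# Illustrative defaults (from the sample config and parameter table in this README).
DEFAULTS = {
    "SUBTITLE_QC_ENABLED": False,
    "SUBTITLE_QC_THRESHOLD": 0.6,
    "SUBTITLE_QC_SAMPLE_MAX_ITEMS": 80,
    "VIDEO_ENCODER": "auto",
    "VIDEO_CRF": 23,
    "VIDEO_PRESET": "medium",
    "VIDEO_BITRATE": "",
}
VALID_ENCODERS = {"auto", "cpu", "nvidia", "intel", "amd"}


def load_config(path: str) -> dict:
    """Overlay config.json (if present) on DEFAULTS and sanity-check a few fields."""
    cfg = dict(DEFAULTS)
    p = Path(path)
    if p.exists():
        cfg.update(json.loads(p.read_text(encoding="utf-8")))
    if not 0.0 <= float(cfg["SUBTITLE_QC_THRESHOLD"]) <= 1.0:
        raise ValueError("SUBTITLE_QC_THRESHOLD must be within 0-1")
    if not 0 <= int(cfg["VIDEO_CRF"]) <= 51:
        raise ValueError("VIDEO_CRF must be within 0-51")
    if cfg["VIDEO_ENCODER"] not in VALID_ENCODERS:
        raise ValueError(f"unknown VIDEO_ENCODER: {cfg['VIDEO_ENCODER']!r}")
    return cfg


if __name__ == "__main__":
    print(load_config("config/config.json"))
```

Keys not listed in `DEFAULTS` (cookies paths, API keys, and so on) pass through unchanged.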

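### Subtitle QC configuration

When subtitle QC is enabled (`SUBTITLE_QC_ENABLED: true`), the system samples the subtitles after they are generated/translated and sends the sample to an LLM for review:

- `SUBTITLE_QC_THRESHOLD` (0-1): pass threshold; if the LLM score falls below it, the subtitles are flagged as abnormal
- `SUBTITLE_QC_SAMPLE_MAX_ITEMS`: number of subtitle entries sampled; sampling more entries reduces misjudgments
- `SUBTITLE_QC_MAX_CHARS`: maximum number of characters sent for review; longer text is truncated to limit token usage
- `SUBTITLE_QC_MODEL_NAME`: model used for QC (leave empty to reuse the subtitle translation model)

**Behavior when QC fails**:

- Subtitles are not burned in, but the subtitle files and the original video are kept
- The original video is still uploaded, and the task ends with the status "Completed"
- A "Subtitle issue" badge is shown in the task list for later investigation

Example scenarios: a video without speech that ASR misrecognizes as many repeated sentences, or an extremely poor translation. QC detects these automatically and skips burning, so the final video is not degraded.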
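The sampling, truncation, and threshold logic described above can be sketched as follows. This is an illustration under assumptions, not the code in `subtitle_qc.py`: `score_fn` is a hypothetical stand-in for the LLM review call, and the `max_chars=4000` default is invented because the README gives no default for `SUBTITLE_QC_MAX_CHARS`. Treating any API error as "pass" follows the tip that QC is skipped when the API is unavailable.

```python
import random
from typing import Callable, Optional, Sequence


def subtitle_qc_passes(
    lines: Sequence[str],
    score_fn: Callable[[str], float],
    threshold: float = 0.6,
    sample_max_items: int = 80,
    max_chars: int = 4000,  # hypothetical default
    seed: Optional[int] = None,
) -> bool:
    """Return True if subtitles may be burned in, False if QC flags them as abnormal."""
    if not lines:
        return True  # assumption: nothing to review counts as a pass
    rng = random.Random(seed)
    k = min(sample_max_items, len(lines))
    # Sample without replacement, then restore the original order for context.
    idx = sorted(rng.sample(range(len(lines)), k))
    text = "\n".join(lines[i] for i in idx)[:max_chars]  # truncate to bound token usage
    try:
        score = score_fn(text)  # expected to return a score in [0, 1]
    except Exception:
        return True  # API unavailable: skip QC and let the subtitles through
    return score >= threshold
```

On a `False` result, the documented behavior is to upload the original video without burned-in subtitles and mark the task with the "Subtitle issue" badge.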

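Tips:

- Store keys only in a secure local environment; never commit files containing keys to the repository.
- To download from YouTube through a proxy, enable the proxy in Settings and fill in the address and username/password.
- Subtitle QC requires an OpenAI API key and network access; if the API is unavailable, QC is skipped automatically and the subtitles pass.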

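## Usage Guide

1) On the home page or the Tasks page, paste a YouTube video link to add a task
2) In auto mode, the task runs through: download → (optional) transcribe/translate subtitles → generate title/description/tags → content moderation → (optional) manual review → upload to AcFun
3) On the Manual Review page, edit the title, description, tags, and category, then click "Force Upload"
4) YouTube monitoring: after enabling it in the UI and configuring an API key, add channels or keywords to monitor on a schedule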
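The stage order in step 2 can be modeled as a simple ordered list, with manual review acting as a pause point before upload. This is a hypothetical sketch to show the ordering only; the stage names, the `PipelineOptions` flags, and the `"awaiting_review"` status are invented here and are not taken from `task_manager.py`.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class PipelineOptions:
    subtitles_enabled: bool = True        # optional transcribe/translate stage
    manual_review_enabled: bool = False   # optional pause before upload


def pipeline_steps(opts: PipelineOptions) -> List[str]:
    """Return the auto-mode stages in the order listed in the usage guide."""
    steps = ["download"]
    if opts.subtitles_enabled:
        steps.append("subtitles")
    steps += ["generate_metadata", "moderation"]
    if opts.manual_review_enabled:
        steps.append("manual_review")
    steps.append("upload")
    return steps


def run_task(steps: List[str], handlers: Dict[str, Callable[[dict], None]], task: dict) -> str:
    """Run stage handlers in order; stop at manual review until a user forces the upload."""
    for step in steps:
        if step == "manual_review":
            task["status"] = "awaiting_review"
            return task["status"]
        handlers[step](task)  # an exception here leaves the task unfinished
        task.setdefault("done", []).append(step)
    task["status"] = "completed"
    return task["status"]
```

Modeling manual review as an early return keeps "Force Upload" simple: it only needs to run the remaining `upload` stage.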

Directory notes:

- `downloads/`: one subdirectory per task, containing video.mp4, cover.jpg, metadata.json, subtitles, etc.
- `logs/`: runtime logs and per-task logs (task_xxx.log)
- `db/`: SQLite database
- `cookies/`: cookies.txt files (provide your own)

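## Bundled FFmpeg

- Releases include an `ffmpeg/` directory containing the BtbN Windows build, Linux static binaries, and their licenses.
- Docker images and local builds download [BtbN/FFmpeg-Builds](https://github.com/BtbN/FFmpeg-Builds) according to `FFMPEG_VARIANT` (default `btbn`). For the smallest CPU-only build, pass `--build-arg FFMPEG_VARIANT=static` at build time to fall back to the johnvansickle static package.
- At runtime, binaries in `ffmpeg/` always take precedence; to upgrade, replace the directory and keep the license files.
- The precompiled binaries include hardware encoder support such as NVENC/QSV/VAAPI.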
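The "bundled binary first, then `PATH`" rule can be sketched like this. The function `resolve_ffmpeg` is hypothetical, and because the exact layout inside `ffmpeg/` is not documented here, the sketch searches the directory recursively rather than assuming a fixed sub-path.

```python
import os
import shutil
import sys
from pathlib import Path
from typing import Optional


def resolve_ffmpeg(bundled_dir: str = "ffmpeg") -> Optional[str]:
    """Prefer an ffmpeg executable inside bundled_dir; otherwise fall back to PATH."""
    exe = "ffmpeg.exe" if sys.platform == "win32" else "ffmpeg"
    root = Path(bundled_dir)
    if root.is_dir():
        # Layout of ffmpeg/ is an assumption, so search it recursively.
        for candidate in sorted(root.rglob(exe)):
            if candidate.is_file() and os.access(candidate, os.X_OK):
                return str(candidate)
    return shutil.which("ffmpeg")  # None if ffmpeg is not installed at all


if __name__ == "__main__":
    print(resolve_ffmpeg() or "ffmpeg not found")
```

Sorting the matches makes the choice deterministic when the directory holds more than one build.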

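## GPU Hardware Encoding Acceleration

The project supports GPU hardware encoding on NVIDIA, Intel, and AMD GPUs, which can significantly speed up transcoding when burning in subtitles.

### Video transcoding parameters

The following parameters can be configured in the "Subtitle Translation" section of the Settings page:

| Parameter | Description | Default |
|------|------|--------|
| `VIDEO_ENCODER` | Encoder: auto/cpu/nvidia/intel/amd | auto |
| `VIDEO_CRF` | Video quality (0-51; lower means higher quality) | 23 |
| `VIDEO_PRESET` | Encoding speed preset | medium |
| `VIDEO_BITRATE` | Fixed bitrate (e.g. "8M"); when set, CRF is ignored | empty |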
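To show how these parameters typically map onto an FFmpeg command, here is a sketch for the CPU path (libx264) only. The helper `x264_burn_args` is hypothetical; copying the audio stream (`-c:a copy`) is an assumption, and hardware encoders use their own rate-control options, so CRF does not carry over to them directly.

```python
from typing import List


def x264_burn_args(src: str, subs: str, dst: str, crf: int = 23,
                   preset: str = "medium", bitrate: str = "") -> List[str]:
    """Build ffmpeg arguments that burn subtitles into a video with libx264.

    If bitrate is set (e.g. "8M"), it replaces CRF, matching the table above.
    """
    args = ["ffmpeg", "-y", "-i", src,
            # The subtitles filter needs an ffmpeg built with libass;
            # paths containing ':' or quotes would need escaping.
            "-vf", f"subtitles={subs}",
            "-c:v", "libx264", "-preset", preset]
    if bitrate:
        args += ["-b:v", bitrate]
    else:
        args += ["-crf", str(crf)]
    args += ["-c:a", "copy", dst]  # assumption: keep the original audio untouched
    return args
```

The list can be passed straight to `subprocess.run(args, check=True)`, which avoids shell quoting issues with file names.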

### Docker GPU configuration

Edit `docker-compose.yml` and uncomment the section that matches your GPU:

#### NVIDIA GPU

Requires [nvidia-container-toolkit](https://docs.nvidia.com/datacenter/cloud-native/container-toolkit/install-guide.html):

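```yaml
deploy:
  resources:
    reservations:
      devices:
        - driver: nvidia
          count: all
          capabilities: [gpu, video]
```

#### Intel GPU

```yaml
devices:
  - /dev/dri:/dev/dri
group_add:
  - video
  - render
```

#### AMD GPU

```yaml
devices:
  - /dev/dri:/dev/dri
group_add:
  - video
  - render
```

### Windows

Windows users only need the latest driver for their GPU; the program detects and uses available hardware encoders automatically: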

- NVIDIA: install the GeForce/Studio driver
- Intel: install the Intel Graphics driver and the Intel Media SDK
- AMD: install the Radeon Software driver

Hardware encoder used for each GPU vendor:

| GPU vendor | Encoder | Platform |
|---|---|---|
| NVIDIA | h264_nvenc | Windows/Linux |
| Intel | h264_qsv | Windows/Linux |
| AMD | h264_amf | Windows |
| AMD | h264_vaapi | Linux |

If the selected hardware encoder is unavailable, the system automatically falls back to CPU software encoding (libx264).
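The vendor table and the libx264 fallback can be combined into a small selection routine. This is a sketch, not the project's detection code: the probe order for `auto` (NVIDIA, then Intel, then AMD) and the `"windows"`/`"linux"` platform strings are assumptions, and an encoder appearing in `ffmpeg -encoders` only means it was compiled in, not that a working GPU and driver are present (a trial encode would be a stronger check).

```python
import subprocess
from typing import Iterable, Set

# (vendor, platform) -> hardware encoder, following the table above.
HW_ENCODERS = {
    ("nvidia", "windows"): "h264_nvenc",
    ("nvidia", "linux"): "h264_nvenc",
    ("intel", "windows"): "h264_qsv",
    ("intel", "linux"): "h264_qsv",
    ("amd", "windows"): "h264_amf",
    ("amd", "linux"): "h264_vaapi",
}


def parse_encoders(text: str) -> Set[str]:
    """Extract encoder names from `ffmpeg -hide_banner -encoders` output."""
    names: Set[str] = set()
    in_table = False
    for line in text.splitlines():
        if line.strip().startswith("---"):
            in_table = True  # encoder rows follow the separator after the legend
            continue
        parts = line.split()
        if in_table and len(parts) >= 2:
            names.add(parts[1])  # row layout: flags, name, description
    return names


def listed_encoders(ffmpeg: str = "ffmpeg") -> Set[str]:
    """Ask an ffmpeg binary which encoders it was built with."""
    result = subprocess.run([ffmpeg, "-hide_banner", "-encoders"],
                            capture_output=True, text=True, check=True)
    return parse_encoders(result.stdout)


def choose_encoder(setting: str, platform: str, available: Iterable[str]) -> str:
    """Map a VIDEO_ENCODER value to an encoder name, falling back to libx264."""
    avail = set(available)
    if setting == "cpu":
        return "libx264"
    # Assumed probe order for "auto"; the project's real order may differ.
    vendors = ["nvidia", "intel", "amd"] if setting == "auto" else [setting]
    for vendor in vendors:
        encoder = HW_ENCODERS.get((vendor, platform))
        if encoder is not None and encoder in avail:
            return encoder
    return "libx264"
```

Usage: `choose_encoder("auto", "linux", listed_encoders())` returns a hardware encoder name when one is compiled in, otherwise `"libx264"`.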

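### Custom FFmpeg image

If you want full control over the FFmpeg version, you can still build a custom image following this pattern (CPU-only example):

```dockerfile
# Stage providing the FFmpeg binaries and libraries
FROM jrottenberg/ffmpeg:6.1-slim AS ffmpeg

FROM python:3.11-slim
WORKDIR /app
# Copy FFmpeg from the first stage into the Python image
COPY --from=ffmpeg /usr/local /usr/local
COPY requirements.txt .
RUN pip install --no-cache-dir -r requirements.txt
COPY . .
CMD ["python", "app.py"]
```

An image built this way needs no GPU device mounts. Alternatively, add `--build-arg FFMPEG_VARIANT=static` when building with the default Dockerfile to get a smaller CPU-only image.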

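## FAQ

- 403 / "login required" / "not a bot" errors
  - Usually caused by YouTube's anti-bot measures or permission issues. Update `cookies/yt_cookies.txt` and make sure it contains a valid youtube.com login session.
- FFmpeg / yt-dlp not found
  - Docker users can ignore this. For local runs, make sure both are on PATH: install yt-dlp with `pip install yt-dlp`, and install FFmpeg separately.
- Uploading to AcFun fails
  - Update `cookies/ac_cookies.txt`, and check on the Manual Review page that the category, title, and description comply with AcFun's rules.
- Subtitle translation is slow
  - Increase concurrency and batch size in Settings (mind the API rate limits). Video transcoding speed depends on the encoder: with CPU software encoding it is bound by CPU performance, and GPU hardware encoding can speed it up.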
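To illustrate why batch size and concurrency both matter, here is a sketch of batched, concurrent subtitle translation that preserves line order. It is not the implementation in `subtitle_translator.py`: `translate_batch` is a hypothetical wrapper around the LLM call. Larger batches mean fewer requests; higher concurrency means more requests in flight at once, which is where API rate limits apply.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List, Sequence


def translate_in_batches(
    lines: Sequence[str],
    translate_batch: Callable[[List[str]], List[str]],
    batch_size: int = 20,
    concurrency: int = 4,
) -> List[str]:
    """Split lines into batches, translate them concurrently, and keep the original order."""
    batches = [list(lines[i:i + batch_size]) for i in range(0, len(lines), batch_size)]
    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        # map() yields results in submission order, so output order matches input order.
        results = list(pool.map(translate_batch, batches))
    out: List[str] = []
    for src, dst in zip(batches, results):
        if len(dst) != len(src):
            raise ValueError("translator returned a different number of lines")
        out.extend(dst)
    return out
```

Checking that each batch returns the same number of lines guards against the LLM merging or dropping entries, which would otherwise shift every later subtitle timestamp.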

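## Contributing

- Issues and PRs are welcome; bug reports and feature suggestions are all appreciated → Issues
- Before submitting, make sure not to include personal cookies, keys, or other sensitive information.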

## Acknowledgements

Special thanks to @Aruelius's acfun_upload project, which served as an important reference for the upload implementation.

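## License

This project is open source under the GNU GPL v3. Please comply with each platform's terms of service and use it only for learning and research in compliance with them.

If you find it helpful, a Star would be appreciated ✨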

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for Y2A-Auto

Similar Open Source Tools

Y2A-Auto

Y2A-Auto is an automation tool that transfers YouTube videos to AcFun. It automates the entire process from downloading, translating subtitles, content moderation, intelligent tagging, to partition recommendation and upload. It also includes a web management interface and YouTube monitoring feature. The tool supports features such as downloading videos and covers using yt-dlp, AI translation and embedding of subtitles, AI generation of titles/descriptions/tags, content moderation using Aliyun Green, uploading to AcFun, task management, manual review, and forced upload. It also offers settings for automatic mode, concurrency, proxies, subtitles, login protection, brute force lock, YouTube monitoring, channel/trend capturing, scheduled tasks, history records, optional GPU/hardware acceleration, and Docker deployment or local execution.

goclaw

goclaw is a powerful AI Agent framework written in Go language. It provides a complete tool system for FileSystem, Shell, Web, and Browser with Docker sandbox support and permission control. The framework includes a skill system compatible with OpenClaw and AgentSkills specifications, supporting automatic discovery and environment gating. It also offers persistent session storage, multi-channel support for Telegram, WhatsApp, Feishu, QQ, and WeWork, flexible configuration with YAML/JSON support, multiple LLM providers like OpenAI, Anthropic, and OpenRouter, WebSocket Gateway, Cron scheduling, and Browser automation based on Chrome DevTools Protocol.

Lim-Code

LimCode is a powerful VS Code AI programming assistant that supports multiple AI models, intelligent tool invocation, and modular architecture. It features support for various AI channels, a smart tool system for code manipulation, MCP protocol support for external tool extension, intelligent context management, session management, and more. Users can install LimCode from the plugin store or via VSIX, or build it from the source code. The tool offers a rich set of features for AI programming and code manipulation within the VS Code environment.

openai-forward

OpenAI-Forward is an efficient forwarding service implemented for large language models. Its core features include user request rate control, token rate limiting, intelligent prediction caching, log management, and API key management, aiming to provide efficient and convenient model forwarding services. Whether proxying local language models or cloud-based language models like LocalAI or OpenAI, OpenAI-Forward makes it easy. Thanks to support from libraries like uvicorn, aiohttp, and asyncio, OpenAI-Forward achieves excellent asynchronous performance.

banana-slides

Banana-slides is a native AI-powered PPT generation application based on the nano banana pro model. It supports generating complete PPT presentations from ideas, outlines, and page descriptions. The app automatically extracts attachment charts, uploads any materials, and allows verbal modifications, aiming to truly 'Vibe PPT'. It lowers the threshold for creating PPTs, enabling everyone to quickly create visually appealing and professional presentations.

resume-design

Resume-design is an open-source and free resume design and template download website, built with Vue3 + TypeScript + Vite + Element-plus + pinia. It provides two design tools for creating beautiful resumes and a complete backend management system. The project has released two frontend versions and will integrate with a backend system in the future. Users can learn frontend by downloading the released versions or learn design tools by pulling the latest frontend code.

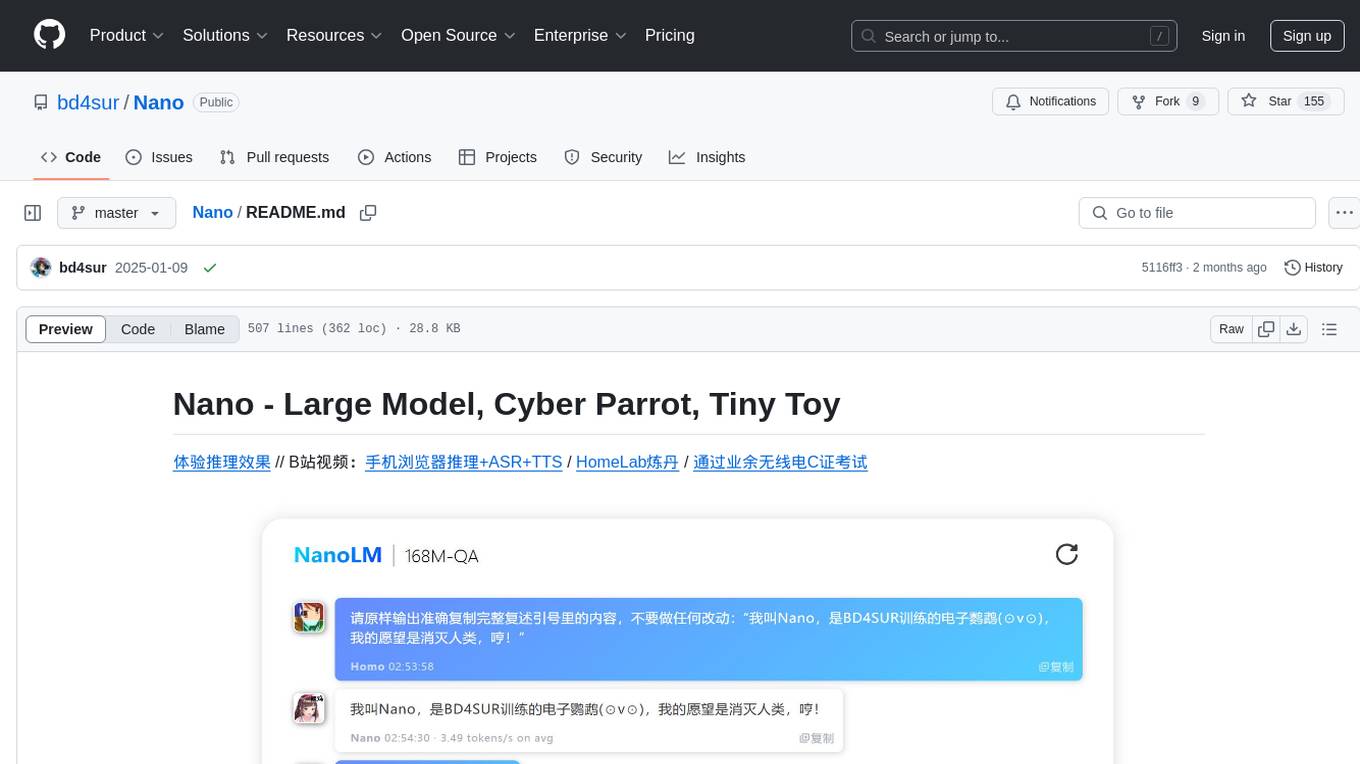

Nano

Nano is a Transformer-based autoregressive language model for personal enjoyment, research, modification, and alchemy. It aims to implement a specific and lightweight Transformer language model based on PyTorch, without relying on Hugging Face. Nano provides pre-training and supervised fine-tuning processes for models with 56M and 168M parameters, along with LoRA plugins. It supports inference on various computing devices and explores the potential of Transformer models in various non-NLP tasks. The repository also includes instructions for experiencing inference effects, installing dependencies, downloading and preprocessing data, pre-training, supervised fine-tuning, model conversion, and various other experiments.

uDesktopMascot

uDesktopMascot is an open-source project for a desktop mascot application with a theme of 'freedom of creation'. It allows users to load and display VRM or GLB/FBX model files on the desktop, customize GUI colors and background images, and access various features through a menu screen. The application supports Windows 10/11 and macOS platforms.

tradecat

TradeCat is a comprehensive data analysis and trading platform designed for cryptocurrency, stock, and macroeconomic data. It offers a wide range of features including multi-market data collection, technical indicator modules, AI analysis, signal detection engine, Telegram bot integration, and more. The platform utilizes technologies like Python, TimescaleDB, TA-Lib, Pandas, NumPy, and various APIs to provide users with valuable insights and tools for trading decisions. With a modular architecture and detailed documentation, TradeCat aims to empower users in making informed trading decisions across different markets.

TrainPPTAgent

TrainPPTAgent is an AI-based intelligent presentation generation tool. Users can input a topic and the system will automatically generate a well-structured and content-rich PPT outline and page-by-page content. The project adopts a front-end and back-end separation architecture: the front-end is responsible for interaction, outline editing, and template selection, while the back-end leverages large language models (LLM) and reinforcement learning (GRPO) to complete content generation and optimization, making the generated PPT more tailored to user goals.

hub

Hub is an open-source, high-performance LLM gateway written in Rust. It serves as a smart proxy for LLM applications, centralizing control and tracing of all LLM calls and traces. Built for efficiency, it provides a single API to connect to any LLM provider. The tool is designed to be fast, efficient, and completely open-source under the Apache 2.0 license.

LangChain-SearXNG

LangChain-SearXNG is an open-source AI search engine built on LangChain and SearXNG. It supports faster and more accurate search and question-answering functionalities. Users can deploy SearXNG and set up Python environment to run LangChain-SearXNG. The tool integrates AI models like OpenAI and ZhipuAI for search queries. It offers two search modes: Searxng and ZhipuWebSearch, allowing users to control the search workflow based on input parameters. LangChain-SearXNG v2 version enhances response speed and content quality compared to the previous version, providing a detailed configuration guide and showcasing the effectiveness of different search modes through comparisons.

MarkMap-OpenAi-ChatGpt

MarkMap-OpenAi-ChatGpt is a Vue.js-based mind map generation tool that allows users to generate mind maps by entering titles or content. The application integrates the markmap-lib and markmap-view libraries, supports visualizing mind maps, and provides functions for zooming and adapting the map to the screen. Users can also export the generated mind map in PNG, SVG, JPEG, and other formats. This project is suitable for quickly organizing ideas, study notes, project planning, etc. By simply entering content, users can get an intuitive mind map that can be continuously expanded, downloaded, and shared.

torch-rechub

Torch-RecHub is a lightweight, efficient, and user-friendly PyTorch recommendation system framework. It provides easy-to-use solutions for industrial-level recommendation systems, with features such as generative recommendation models, modular design for adding new models and datasets, PyTorch-based implementation for GPU acceleration, a rich library of 30+ classic and cutting-edge recommendation algorithms, standardized data loading, training, and evaluation processes, easy configuration through files or command-line parameters, reproducibility of experimental results, ONNX model export for production deployment, cross-engine data processing with PySpark support, and experiment visualization and tracking with integrated tools like WandB, SwanLab, and TensorBoardX.

MahoShojo-Generator

MahoShojo-Generator is a web-based AI structured generation tool that allows players to create personalized and evolving magical girls (or quirky characters) and related roles. It offers exciting cyber battles, storytelling activities, and even a ranking feature. The project also includes AI multi-channel polling, user system, public data card sharing, and sensitive word detection. It supports various functionalities such as character generation, arena system, growth and social interaction, cloud and sharing, and other features like scenario generation, tavern ecosystem linkage, and content safety measures.

sandbox

AIO Sandbox is an all-in-one agent sandbox environment that combines Browser, Shell, File, MCP operations, and VSCode Server in a single Docker container. It provides a unified, secure execution environment for AI agents and developers, with features like unified file system, multiple interfaces, secure execution, zero configuration, and agent-ready MCP-compatible APIs. The tool allows users to run shell commands, perform file operations, automate browser tasks, and integrate with various development tools and services.

For similar tasks

Y2A-Auto

Y2A-Auto is an automation tool that transfers YouTube videos to AcFun. It automates the entire process from downloading, translating subtitles, content moderation, intelligent tagging, to partition recommendation and upload. It also includes a web management interface and YouTube monitoring feature. The tool supports features such as downloading videos and covers using yt-dlp, AI translation and embedding of subtitles, AI generation of titles/descriptions/tags, content moderation using Aliyun Green, uploading to AcFun, task management, manual review, and forced upload. It also offers settings for automatic mode, concurrency, proxies, subtitles, login protection, brute force lock, YouTube monitoring, channel/trend capturing, scheduled tasks, history records, optional GPU/hardware acceleration, and Docker deployment or local execution.

Webscout

WebScout is a versatile tool that allows users to search for anything using Google, DuckDuckGo, and phind.com. It contains AI models, can transcribe YouTube videos, generate temporary email and phone numbers, has TTS support, webai (terminal GPT and open interpreter), and offline LLMs. It also supports features like weather forecasting, YT video downloading, temp mail and number generation, text-to-speech, advanced web searches, and more.

KrillinAI

KrillinAI is a video subtitle translation and dubbing tool based on AI large models, featuring speech recognition, intelligent sentence segmentation, professional translation, and one-click deployment of the entire process. It provides a one-stop workflow from video downloading to the final product, empowering cross-language cultural communication with AI. The tool supports multiple languages for input and translation, integrates features like automatic dependency installation, video downloading from platforms like YouTube and Bilibili, high-speed subtitle recognition, intelligent subtitle segmentation and alignment, custom vocabulary replacement, professional-level translation engine, and diverse external service selection for speech and large model services.

DownEdit

DownEdit is a fast and powerful program for downloading and editing videos from platforms like TikTok, Douyin, and Kuaishou. It allows users to effortlessly grab videos, make bulk edits, and utilize advanced AI features for generating videos, images, and sounds in bulk. The tool offers features like video, photo, and sound editing, downloading videos without watermarks, bulk AI generation, and AI editing for content enhancement.

AIO-Video-Downloader

AIO Video Downloader is an open-source Android application built on the robust yt-dlp backend with the help of youtubedl-android. It aims to be the most powerful download manager available, offering a clean and efficient interface while unlocking advanced downloading capabilities with minimal setup. With support for 1000+ sites and virtually any downloadable content across the web, AIO delivers a seamless yet powerful experience that balances speed, flexibility, and simplicity.

shots-studio

Shots Studio is a screenshot manager that uses on-device AI to intelligently organize and declutter your gallery. It offers AI-driven search, smart tagging, and custom collections for efficient screenshot management. Users can choose between cloud-powered AI or offline Gemma On-Device AI for privacy and speed. The tool allows users to search by content, automatically generate tags, group related screenshots, and process images without an internet connection. Shots Studio is open source, community-driven, and offers customizable AI options for personalized usage.

mindpocket

MindPocket is a fully open-source, free, multi-platform, one-click deployable personal bookmark system with AI Agent integration. It organizes bookmarks with AI-powered RAG content summarization and automatic tag generation, making it easy to find and manage saved content. The project is built using VIBE CODING principles and offers features like zero cost deployment, one-click deploy setup, multi-platform support, AI enhancement for smart tagging and summarization, and full open-source accessibility for user data ownership. The tool is designed to provide a seamless bookmarking experience across web, mobile, and browser extension platforms.

gpt-subtrans

GPT-Subtrans is an open-source subtitle translator that utilizes large language models (LLMs) as translation services. It supports translation between any language pairs that the language model supports. Note that GPT-Subtrans requires an active internet connection, as subtitles are sent to the provider's servers for translation, and their privacy policy applies.

For similar jobs

promptflow

**Prompt flow** is a suite of development tools designed to streamline the end-to-end development cycle of LLM-based AI applications, from ideation, prototyping, testing, evaluation to production deployment and monitoring. It makes prompt engineering much easier and enables you to build LLM apps with production quality.

deepeval

DeepEval is a simple-to-use, open-source LLM evaluation framework specialized for unit testing LLM outputs. It incorporates various metrics such as G-Eval, hallucination, answer relevancy, RAGAS, etc., and runs locally on your machine for evaluation. It provides a wide range of ready-to-use evaluation metrics, allows for creating custom metrics, integrates with any CI/CD environment, and enables benchmarking LLMs on popular benchmarks. DeepEval is designed for evaluating RAG and fine-tuning applications, helping users optimize hyperparameters, prevent prompt drifting, and transition from OpenAI to hosting their own Llama2 with confidence.

MegaDetector

MegaDetector is an AI model that identifies animals, people, and vehicles in camera trap images (which also makes it useful for eliminating blank images). This model is trained on several million images from a variety of ecosystems. MegaDetector is just one of many tools that aims to make conservation biologists more efficient with AI. If you want to learn about other ways to use AI to accelerate camera trap workflows, check out our of the field, affectionately titled "Everything I know about machine learning and camera traps".

leapfrogai

LeapfrogAI is a self-hosted AI platform designed to be deployed in air-gapped resource-constrained environments. It brings sophisticated AI solutions to these environments by hosting all the necessary components of an AI stack, including vector databases, model backends, API, and UI. LeapfrogAI's API closely matches that of OpenAI, allowing tools built for OpenAI/ChatGPT to function seamlessly with a LeapfrogAI backend. It provides several backends for various use cases, including llama-cpp-python, whisper, text-embeddings, and vllm. LeapfrogAI leverages Chainguard's apko to harden base python images, ensuring the latest supported Python versions are used by the other components of the stack. The LeapfrogAI SDK provides a standard set of protobuffs and python utilities for implementing backends and gRPC. LeapfrogAI offers UI options for common use-cases like chat, summarization, and transcription. It can be deployed and run locally via UDS and Kubernetes, built out using Zarf packages. LeapfrogAI is supported by a community of users and contributors, including Defense Unicorns, Beast Code, Chainguard, Exovera, Hypergiant, Pulze, SOSi, United States Navy, United States Air Force, and United States Space Force.

llava-docker

This Docker image for LLaVA (Large Language and Vision Assistant) provides a convenient way to run LLaVA locally or on RunPod. LLaVA is a powerful AI tool that combines natural language processing and computer vision capabilities. With this Docker image, you can easily access LLaVA's functionalities for various tasks, including image captioning, visual question answering, text summarization, and more. The image comes pre-installed with LLaVA v1.2.0, Torch 2.1.2, xformers 0.0.23.post1, and other necessary dependencies. You can customize the model used by setting the MODEL environment variable. The image also includes a Jupyter Lab environment for interactive development and exploration. Overall, this Docker image offers a comprehensive and user-friendly platform for leveraging LLaVA's capabilities.

carrot

The 'carrot' repository on GitHub provides a list of free and user-friendly ChatGPT mirror sites for easy access. The repository includes sponsored sites offering various GPT models and services. Users can find and share sites, report errors, and access stable and recommended sites for ChatGPT usage. The repository also includes a detailed list of ChatGPT sites, their features, and accessibility options, making it a valuable resource for ChatGPT users seeking free and unlimited GPT services.

TrustLLM

TrustLLM is a comprehensive study of trustworthiness in LLMs, including principles for different dimensions of trustworthiness, established benchmark, evaluation, and analysis of trustworthiness for mainstream LLMs, and discussion of open challenges and future directions. Specifically, we first propose a set of principles for trustworthy LLMs that span eight different dimensions. Based on these principles, we further establish a benchmark across six dimensions including truthfulness, safety, fairness, robustness, privacy, and machine ethics. We then present a study evaluating 16 mainstream LLMs in TrustLLM, consisting of over 30 datasets. The document explains how to use the trustllm python package to help you assess the performance of your LLM in trustworthiness more quickly. For more details about TrustLLM, please refer to project website.

AI-YinMei

AI-YinMei is an AI virtual anchor Vtuber development tool (N card version). It supports fastgpt knowledge base chat dialogue, a complete set of solutions for LLM large language models: [fastgpt] + [one-api] + [Xinference], supports docking bilibili live broadcast barrage reply and entering live broadcast welcome speech, supports Microsoft edge-tts speech synthesis, supports Bert-VITS2 speech synthesis, supports GPT-SoVITS speech synthesis, supports expression control Vtuber Studio, supports painting stable-diffusion-webui output OBS live broadcast room, supports painting picture pornography public-NSFW-y-distinguish, supports search and image search service duckduckgo (requires magic Internet access), supports image search service Baidu image search (no magic Internet access), supports AI reply chat box [html plug-in], supports AI singing Auto-Convert-Music, supports playlist [html plug-in], supports dancing function, supports expression video playback, supports head touching action, supports gift smashing action, supports singing automatic start dancing function, chat and singing automatic cycle swing action, supports multi scene switching, background music switching, day and night automatic switching scene, supports open singing and painting, let AI automatically judge the content.