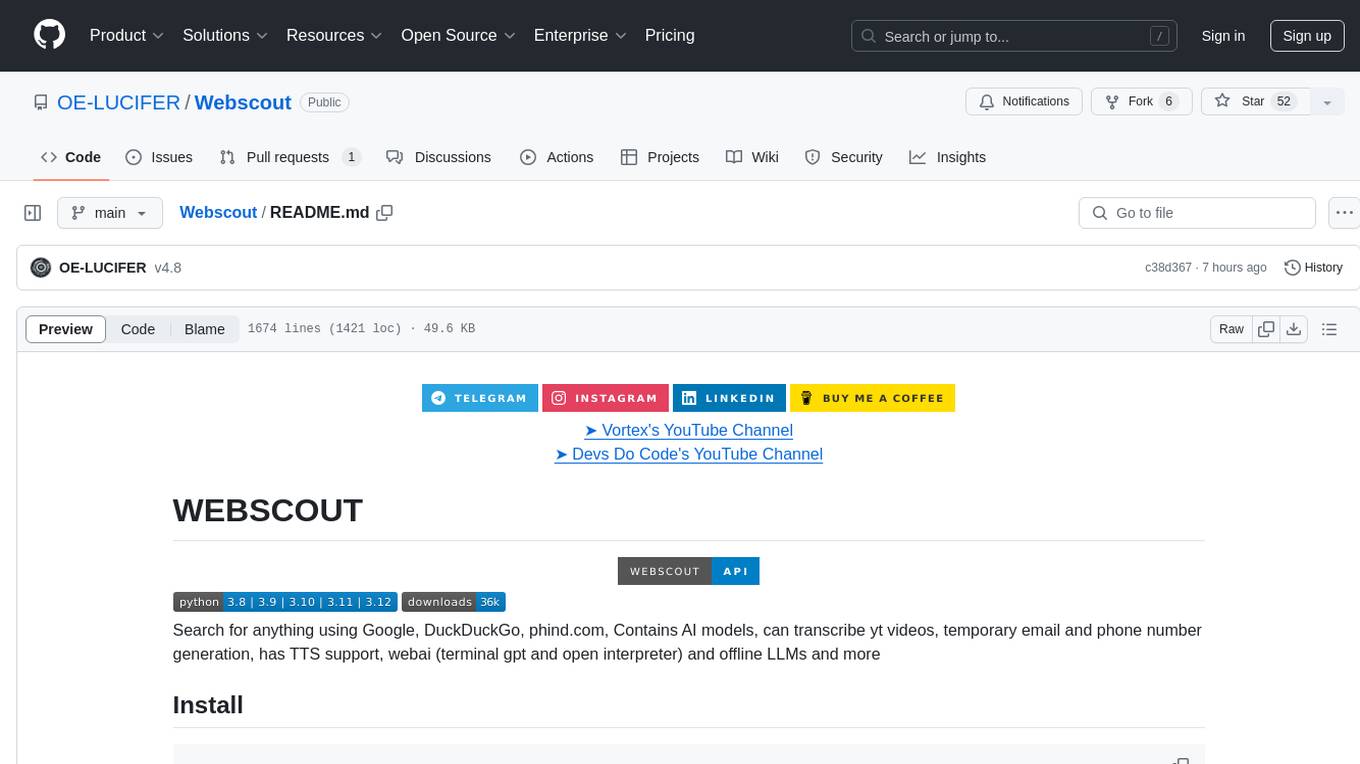

Webscout

Search for anything using Google, DuckDuckGo, and phind.com. Contains AI models, can transcribe YouTube videos, generates temporary email addresses and phone numbers, and has TTS support, webai (terminal GPT and open interpreter), and offline LLMs.

Stars: 203

WebScout is a versatile tool that allows users to search for anything using Google, DuckDuckGo, and phind.com. It contains AI models, can transcribe YouTube videos, generate temporary email and phone numbers, has TTS support, webai (terminal GPT and open interpreter), and offline LLMs. It also supports features like weather forecasting, YT video downloading, temp mail and number generation, text-to-speech, advanced web searches, and more.

README:

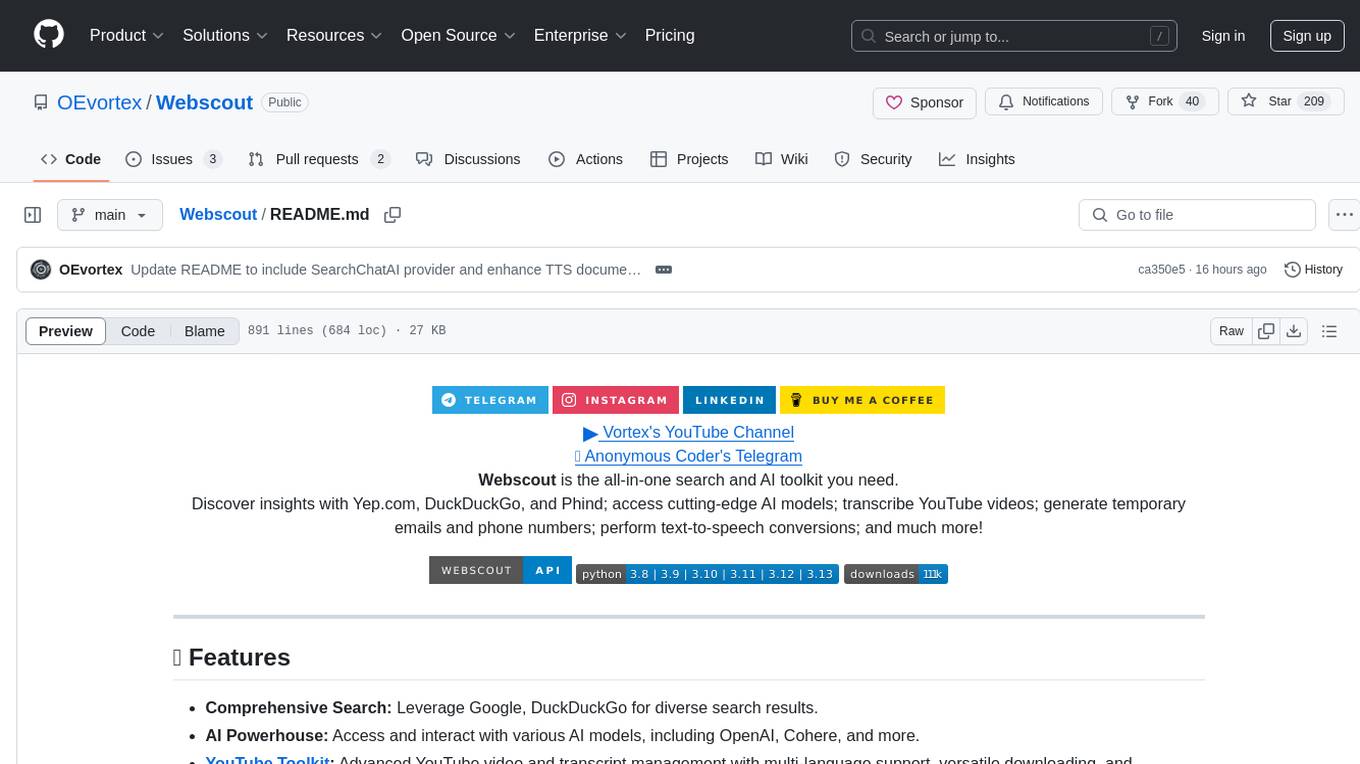

Webscout is the all-in-one search and AI toolkit you need.

Discover insights with Yep.com, DuckDuckGo, and Phind; access cutting-edge AI models; transcribe YouTube videos; generate temporary emails and phone numbers; perform text-to-speech conversions; and much more!

- Comprehensive Search: Leverage Google and DuckDuckGo for diverse search results.

- AI Powerhouse: Access and interact with various AI models, including OpenAI, Cohere, and more.

- YouTube Toolkit: Advanced YouTube video and transcript management with multi-language support, versatile downloading, and intelligent data extraction

- GitAPI: Powerful GitHub data extraction toolkit for seamless repository and user information retrieval, featuring commit tracking, issue management, and comprehensive user analytics - all without authentication requirements for public data

- Tempmail & Temp Number: Generate temporary email addresses and phone numbers for enhanced privacy.

- Text-to-Speech (TTS): Convert text into natural-sounding speech using multiple AI-powered providers like ElevenLabs, StreamElements, and Voicepods.

- GGUF Conversion & Quantization: Convert and quantize Hugging Face models to GGUF format.

- SwiftCLI: A powerful and elegant CLI framework that makes it easy to create beautiful command-line interfaces.

- LitPrinter: Provides beautiful, styled console output with rich formatting and colors

- LitLogger: Simplifies logging with customizable formats and color schemes

- LitAgent: Powerful and modern user agent generator that keeps your requests fresh and undetectable

- Text-to-Image: Generate high-quality images using a wide range of AI art providers

- Scout: Advanced web parsing and crawling library with intelligent HTML/XML parsing, web crawling, and Markdown conversion

- Awesome Prompts (Act): A curated collection of system prompts designed to transform Webscout into specialized personas, enhancing its ability to assist with specific tasks. Simply prefix your request with the act name or index number to leverage these tailored capabilities.

- Weather Toolkit: Webscout provides tools to retrieve weather information.

- AI Search: AI search providers offer powerful and flexible AI-powered search.

pip install -U webscout

python -m webscout --help

| Command | Description |
|---|---|
| python -m webscout answers -k Text | CLI function to perform an answers search using Webscout. |
| python -m webscout images -k Text | CLI function to perform an images search using Webscout. |
| python -m webscout maps -k Text | CLI function to perform a maps search using Webscout. |
| python -m webscout news -k Text | CLI function to perform a news search using Webscout. |
| python -m webscout suggestions -k Text | CLI function to perform a suggestions search using Webscout. |
| python -m webscout text -k Text | CLI function to perform a text search using Webscout. |
| python -m webscout translate -k Text | CLI function to perform a translation using Webscout. |
| python -m webscout version | Prints and returns the version of the program. |
| python -m webscout videos -k Text | CLI function to perform a videos search using DuckDuckGo API. |
| python -m webscout weather -k Text | CLI function to get weather information for a location using Webscout. |
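The commands in the table share one shape: `python -m webscout <subcommand> -k <keywords>`. As a minimal sketch, assuming you want to call the CLI from another Python script, the helper below only builds the argument list and leaves execution to `subprocess.run`. The name `build_webscout_argv` is hypothetical and not part of Webscout.

```python
import sys

# Subcommands from the table above that take a -k keyword argument.
KEYWORD_SUBCOMMANDS = {
    "answers", "images", "maps", "news", "suggestions",
    "text", "translate", "videos", "weather",
}


def build_webscout_argv(subcommand, keywords=None):
    """Return an argv list for `python -m webscout ...` (hypothetical helper)."""
    if subcommand == "version":
        return [sys.executable, "-m", "webscout", "version"]
    if subcommand not in KEYWORD_SUBCOMMANDS:
        raise ValueError(f"unknown subcommand: {subcommand!r}")
    if not keywords:
        raise ValueError("keywords are required for this subcommand")
    # A list (not a shell string) avoids quoting problems inside keywords.
    return [sys.executable, "-m", "webscout", subcommand, "-k", keywords]


argv = build_webscout_argv("text", "python programming")
print(argv[1:])
# To actually run it: subprocess.run(argv, check=True)
```

Building the list separately from running it keeps the helper easy to check without Webscout installed or network access.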

import json

import asyncio

from webscout import VNEngine

from webscout import TempMail

async def main():

vn = VNEngine()

countries = vn.get_online_countries()

if countries:

country = countries[0]['country']

numbers = vn.get_country_numbers(country)

if numbers:

number = numbers[0]['full_number']

inbox = vn.get_number_inbox(country, number)

# Serialize inbox data to JSON string

json_data = json.dumps(inbox, ensure_ascii=False, indent=4)

# Print with UTF-8 encoding

print(json_data)

async with TempMail() as client:

domains = await client.get_domains()

print("Available Domains:", domains)

email_response = await client.create_email(alias="testuser")

print("Created Email:", email_response)

messages = await client.get_messages(email_response.email)

print("Messages:", messages)

await client.delete_email(email_response.email, email_response.token)

print("Email Deleted")

if __name__ == "__main__":

asyncio.run(main())...
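The `json.dumps(inbox, ensure_ascii=False, indent=4)` call in the example above matters because SMS inboxes often contain non-ASCII text. A small sketch with made-up inbox data shows the difference; the field names and values here are hypothetical and may not match the structure the library actually returns.

```python
import json

# Hypothetical inbox entry; the real response structure may differ.
inbox = [{"from": "Service", "message": "Ihr Code: 1234, gültig für 5 Minuten"}]

# Default behaviour: non-ASCII characters are escaped as \uXXXX sequences.
escaped = json.dumps(inbox)

# ensure_ascii=False keeps the characters as-is; indent=4 pretty-prints.
readable = json.dumps(inbox, ensure_ascii=False, indent=4)

print(escaped)
print(readable)
```

Both strings decode to the same data; only the human readability of the serialized form changes.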

from webscout import YepSearch

# Initialize YepSearch

yep = YepSearch(

timeout=20, # Optional: Set custom timeout

proxies=None, # Optional: Use proxies

verify=True # Optional: SSL verification

)

# Text Search

text_results = yep.text(

keywords="artificial intelligence",

region="all", # Optional: Region for results

safesearch="moderate", # Optional: "on", "moderate", "off"

max_results=10 # Optional: Limit number of results

)

print(text_results)

# Image Search

image_results = yep.images(

keywords="nature photography",

region="all",

safesearch="moderate",

max_results=10

)

print(image_results)

# Suggestions

suggestions = yep.suggestions("hist")

print(suggestions)

from webscout import GoogleS

from rich import print

searcher = GoogleS()

results = searcher.search("HelpingAI-9B", max_results=20, extract_text=False, max_text_length=200)

for result in results:

print(result)

The WEBS and AsyncWEBS classes are used to retrieve search results from DuckDuckGo.com.

To use the AsyncWEBS class, you can perform asynchronous operations using Python's asyncio library.

To initialize an instance of the WEBS or AsyncWEBS classes, you can provide the following optional arguments:

Example - WEBS:

from webscout import WEBS

R = WEBS().text("python programming", max_results=5)

print(R)

Example - AsyncWEBS:

import asyncio

import logging

import sys

from itertools import chain

from random import shuffle

import requests

from webscout import AsyncWEBS

# If you have proxies, define them here

proxies = None

if sys.platform.lower().startswith("win"):

asyncio.set_event_loop_policy(asyncio.WindowsSelectorEventLoopPolicy())

def get_words():

word_site = "https://www.mit.edu/~ecprice/wordlist.10000"

resp = requests.get(word_site)

words = resp.text.splitlines()

return words

async def aget_results(word):

async with AsyncWEBS(proxies=proxies) as WEBS:

results = await WEBS.text(word, max_results=None)

return results

async def main():

words = get_words()

shuffle(words)

tasks = [aget_results(word) for word in words[:10]]

results = await asyncio.gather(*tasks)

print(f"Done")

for r in chain.from_iterable(results):

print(r)

logging.basicConfig(level=logging.DEBUG)

asyncio.run(main())

Important Note: The WEBS and AsyncWEBS classes should always be used as a context manager (with statement). This ensures proper resource management and cleanup, as the context manager automatically handles opening and closing the HTTP client connection.
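To illustrate why the context-manager form matters, here is a sketch that uses a stand-in client rather than the real `AsyncWEBS`. The `FakeAsyncClient` class and its `text` method are hypothetical and make no network requests. The sketch shows that `async with` releases each client even when a search raises, and that `asyncio.gather` returns results in task order.

```python
import asyncio


class FakeAsyncClient:
    """Stand-in for an HTTP-backed search client (hypothetical)."""

    open_clients = 0  # number of clients currently holding a "connection"

    async def __aenter__(self):
        FakeAsyncClient.open_clients += 1
        return self

    async def __aexit__(self, exc_type, exc, tb):
        # Runs on normal exit and also when the body raises.
        FakeAsyncClient.open_clients -= 1
        return False  # do not swallow exceptions

    async def text(self, word):
        await asyncio.sleep(0)  # yield to the event loop, as real I/O would
        if word == "boom":
            raise RuntimeError("simulated request failure")
        return [f"result for {word}"]


async def search(word):
    async with FakeAsyncClient() as client:
        return await client.text(word)


async def main():
    words = ["alpha", "boom", "gamma"]
    # return_exceptions=True returns failures as values instead of raising the first one.
    return await asyncio.gather(*(search(w) for w in words), return_exceptions=True)


results = asyncio.run(main())
print(results)
print("clients still open:", FakeAsyncClient.open_clients)
```

The counter ends at zero even though one search failed, which is the cleanup guarantee the note above describes.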

Exceptions:

- WebscoutE: Raised when there is a generic exception during the API request.
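A sketch of how a caller might handle such failures with a bounded retry. The import path of `WebscoutE` is not shown in this README, so the example defines a stand-in exception (`SearchError`) and a fake search function; both are hypothetical, as is the `with_retries` helper.

```python
import time


class SearchError(Exception):
    """Stand-in for the library's generic request exception (hypothetical)."""


def with_retries(func, attempts=3, delay=0.0):
    """Call func(); on SearchError, retry until `attempts` calls have been made."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    last_error = None
    for attempt in range(1, attempts + 1):
        try:
            return func()
        except SearchError as err:
            last_error = err
            if attempt < attempts:
                time.sleep(delay * attempt)  # simple linear backoff
    raise last_error


calls = {"count": 0}


def flaky_search():
    # Fails twice, then succeeds, to simulate a transient API error.
    calls["count"] += 1
    if calls["count"] < 3:
        raise SearchError("temporary failure")
    return ["ok"]


print(with_retries(flaky_search))
print("calls made:", calls["count"])
```

In real use, the `except` clause would name the library's own exception and `func` would wrap a call such as a text search.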

from webscout import WEBS

# Text search for 'live free or die' using DuckDuckGo.com

with WEBS() as WEBS:

for r in WEBS.text('live free or die', region='wt-wt', safesearch='off', timelimit='y', max_results=10):

print(r)

for r in WEBS.text('live free or die', region='wt-wt', safesearch='off', timelimit='y', max_results=10):

print(r)

from webscout import WEBS

# Instant answers for the query "sun" using DuckDuckGo.com

with WEBS() as WEBS:

for r in WEBS.answers("sun"):

print(r)

from webscout import WEBS

# Image search for the keyword 'butterfly' using DuckDuckGo.com

with WEBS() as WEBS:

keywords = 'butterfly'

WEBS_images_gen = WEBS.images(

keywords,

region="wt-wt",

safesearch="off",

size=None,

type_image=None,

layout=None,

license_image=None,

max_results=10,

)

for r in WEBS_images_gen:

print(r)

from webscout import WEBS

# Video search for the keyword 'tesla' using DuckDuckGo.com

with WEBS() as WEBS:

keywords = 'tesla'

WEBS_videos_gen = WEBS.videos(

keywords,

region="wt-wt",

safesearch="off",

timelimit="w",

resolution="high",

duration="medium",

max_results=10,

)

for r in WEBS_videos_gen:

print(r)

from webscout import WEBS

import datetime

def fetch_news(keywords, timelimit):

news_list = []

with WEBS() as webs_instance:

WEBS_news_gen = webs_instance.news(

keywords,

region="wt-wt",

safesearch="off",

timelimit=timelimit,

max_results=20

)

for r in WEBS_news_gen:

# Convert the date to a human-readable format using datetime

r['date'] = datetime.datetime.fromisoformat(r['date']).strftime('%B %d, %Y')

news_list.append(r)

return news_list

def _format_headlines(news_list, max_headlines: int = 100):

headlines = []

for idx, news_item in enumerate(news_list):

if idx >= max_headlines:

break

new_headline = f"{idx + 1}. {news_item['title'].strip()} "

new_headline += f"(URL: {news_item['url'].strip()}) "

new_headline += f"{news_item['body'].strip()}"

new_headline += "\n"

headlines.append(new_headline)

headlines = "\n".join(headlines)

return headlines

# Example usage

keywords = 'latest AI news'

timelimit = 'd'

news_list = fetch_news(keywords, timelimit)

# Format and print the headlines

formatted_headlines = _format_headlines(news_list)

print(formatted_headlines)

from webscout import WEBS

# Map search for the keyword 'school' in 'anantnag' using DuckDuckGo.com

with WEBS() as WEBS:

for r in WEBS.maps("school", place="anantnag", max_results=50):

print(r)

from webscout import WEBS

# Translation of the keyword 'school' to Hindi ('hi') using DuckDuckGo.com

with WEBS() as WEBS:

keywords = 'school'

r = WEBS.translate(keywords, to="hi")

print(r)

from webscout import WEBS

# Suggestions for the keyword 'fly' using DuckDuckGo.com

with WEBS() as WEBS:

for r in WEBS.suggestions("fly"):

print(r)

from webscout import WEBS

# Get weather information for a location using DuckDuckGo.com

with WEBS() as webs:

weather_data = webs.weather("New York")

print(weather_data)

Retrieve a comprehensive list of all supported LLMs.

from webscout import model

from rich import print

all_models = model.llm.list()

print("Available models:")

print(all_models)

Obtain a summary of the available LLMs, including provider details.

from webscout import model

from rich import print

summary = model.llm.summary()

print("Summary of models:")

print(summary)

Filter and display LLMs available from a specific provider.

from webscout import model

from rich import print

provider_name = "PerplexityLabs" # Example provider

available_models = model.llm.get(provider_name)

if isinstance(available_models, list):

print(f"Available models for {provider_name}: {', '.join(available_models)}")

else:

print(f"Available models for {provider_name}: {available_models}")

Retrieve a comprehensive list of all supported TTS voices.

from webscout import model

from rich import print

all_voices = model.tts.list()

print("Available TTS voices:")

print(all_voices)

Obtain a summary of the available TTS voices, including provider details.

from webscout import model

from rich import print

summary = model.tts.summary()

print("Summary of TTS voices:")

print(summary)

Filter and display TTS voices available from a specific provider.

from webscout import model

from rich import print

provider_name = "ElevenlabsTTS" # Example provider

available_voices = model.tts.get(provider_name)

if isinstance(available_voices, list):

print(f"Available voices for {provider_name}: {', '.join(available_voices)}")

elif isinstance(available_voices, dict):

print(f"Available voices for {provider_name}:")

for voice_name, voice_id in available_voices.items():

print(f" - {voice_name}: {voice_id}")

else:

print(f"Available voices for {provider_name}: {available_voices}")

from webscout import WEBS as w

R = w().chat("Who are you", model='gpt-4o-mini') # mixtral-8x7b, llama-3.1-70b, claude-3-haiku, gpt-4o-mini

print(R)

from webscout import PhindSearch

# Create an instance of the PHIND class

ph = PhindSearch()

# Define a prompt to send to the AI

prompt = "write an essay on phind"

# Use the 'ask' method to send the prompt and receive a response

response = ph.ask(prompt)

# Extract and print the message from the response

message = ph.get_message(response)

print(message)

Using Phindv2:

from webscout import Phindv2

# Create an instance of the PHIND class

ph = Phindv2()

# Define a prompt to send to the AI

prompt = ""

# Use the 'ask' method to send the prompt and receive a response

response = ph.ask(prompt)

# Extract and print the message from the response

message = ph.get_message(response)

print(message)

import webscout

from webscout import GEMINI

from rich import print

COOKIE_FILE = "cookies.json"

# Optional: Provide proxy details if needed

PROXIES = {}

# Initialize GEMINI with cookie file and optional proxies

gemini = GEMINI(cookie_file=COOKIE_FILE, proxy=PROXIES)

# Ask a question and print the response

response = gemini.chat("websearch about HelpingAI and who is its developer")

print(response)

from webscout import YEPCHAT

ai = YEPCHAT()

response = ai.chat(input(">>> "))

for chunk in response:

print(chunk, end="", flush=True)

from webscout import BLACKBOXAI

from rich import print

ai = BLACKBOXAI(

is_conversation=True,

max_tokens=800,

timeout=30,

intro=None,

filepath=None,

update_file=True,

proxies={},

history_offset=10250,

act=None,

model=None # You can specify a model if needed

)

# Define a prompt to send to the AI

prompt = "Tell me about india"

# Use the 'chat' method to send the prompt and receive a response

r = ai.chat(prompt)

print(r)

from webscout import Meta

from rich import print

# **For unauthenticated usage**

meta_ai = Meta()

# Simple text prompt

response = meta_ai.chat("What is the capital of France?")

print(response)

# Streaming response

for chunk in meta_ai.chat("Tell me a story about a cat."):

print(chunk, end="", flush=True)

# **For authenticated usage (including image generation)**

fb_email = "[email protected]"

fb_password = "qwertfdsa"

meta_ai = Meta(fb_email=fb_email, fb_password=fb_password)

# Text prompt with web search

response = meta_ai.ask("what is currently happening in bangladesh in aug 2024")

print(response["message"]) # Access the text message

print("Sources:", response["sources"]) # Access sources (if any)

# Image generation

response = meta_ai.ask("Create an image of a cat wearing a hat.")

print(response["message"]) # Print the text message from the response

for media in response["media"]:

print(media["url"]) # Access image URLs

from webscout import KOBOLDAI

# Instantiate the KOBOLDAI class with default parameters

koboldai = KOBOLDAI()

# Define a prompt to send to the AI

prompt = "What is the capital of France?"

# Use the 'ask' method to get a response from the AI

response = koboldai.ask(prompt)

# Extract and print the message from the response

message = koboldai.get_message(response)

print(message)

from webscout import REKA

a = REKA(is_conversation=True, max_tokens=8000, timeout=30,api_key="")

prompt = "tell me about india"

response_str = a.chat(prompt)

print(response_str)

from webscout import Cohere

a = Cohere(is_conversation=True, max_tokens=8000, timeout=30,api_key="")

prompt = "tell me about india"

response_str = a.chat(prompt)

print(response_str)

from webscout import DeepInfra

ai = DeepInfra(

is_conversation=True,

model= "Qwen/Qwen2-72B-Instruct",

max_tokens=800,

timeout=30,

intro=None,

filepath=None,

update_file=True,

proxies={},

history_offset=10250,

act=None,

)

prompt = "what is meaning of life"

response = ai.ask(prompt)

# Extract and print the message from the response

message = ai.get_message(response)

print(message)

from webscout import GROQ

ai = GROQ(api_key="")

response = ai.chat("What is the meaning of life?")

print(response)

#----------------------TOOL CALL------------------

from webscout import GROQ # Adjust import based on your project structure

from webscout import WEBS

import json

# Initialize the GROQ client

client = GROQ(api_key="")

MODEL = 'llama3-groq-70b-8192-tool-use-preview'

# Function to evaluate a mathematical expression

def calculate(expression):

"""Evaluate a mathematical expression"""

try:

result = eval(expression)

return json.dumps({"result": result})

except Exception as e:

return json.dumps({"error": str(e)})

# Function to perform a text search using DuckDuckGo.com

def search(query):

"""Perform a text search using DuckDuckGo.com"""

try:

results = WEBS().text(query, max_results=5)

return json.dumps({"results": results})

except Exception as e:

return json.dumps({"error": str(e)})

# Add the functions to the provider

client.add_function("calculate", calculate)

client.add_function("search", search)

# Define the tools

tools = [

{

"type": "function",

"function": {

"name": "calculate",

"description": "Evaluate a mathematical expression",

"parameters": {

"type": "object",

"properties": {

"expression": {

"type": "string",

"description": "The mathematical expression to evaluate",

}

},

"required": ["expression"],

},

}

},

{

"type": "function",

"function": {

"name": "search",

"description": "Perform a text search using DuckDuckGo.com and Yep.com",

"parameters": {

"type": "object",

"properties": {

"query": {

"type": "string",

"description": "The search query to execute",

}

},

"required": ["query"],

},

}

}

]

user_prompt_calculate = "What is 25 * 4 + 10?"

response_calculate = client.chat(user_prompt_calculate, tools=tools)

print(response_calculate)

user_prompt_search = "Find information on HelpingAI and who is its developer"

response_search = client.chat(user_prompt_search, tools=tools)

print(response_search)

from webscout import LLAMA

llama = LLAMA()

r = llama.chat("What is the meaning of life?")

print(r)

from webscout import AndiSearch

a = AndiSearch()

print(a.chat("HelpingAI-9B"))

LLAMA, C4ai, Venice, Copilot, HuggingFaceChat, TwoAI, HeckAI, AllenAI, PerplexityLabs, AkashGPT, DeepSeek, WiseCat, IBMGranite, QwenLM, ChatGPTGratis, TextPollinationsAI, GliderAI, Cohere, REKA, GROQ, AsyncGROQ, OPENAI, AsyncOPENAI, KOBOLDAI, AsyncKOBOLDAI, BLACKBOXAI, PhindSearch, GEMINI, DeepInfra, AI4Chat, Phindv2, OLLAMA, AndiSearch, PIZZAGPT, Sambanova, DARKAI, KOALA, Meta, AskMyAI, PiAI, Julius, YouChat, YEPCHAT, Cloudflare, TurboSeek, Editee, TeachAnything, AI21, Chatify, X0GPT, Cerebras, Lepton, GEMINIAPI, Cleeai, Elmo, Free2GPT, GPTWeb, Netwrck, LlamaTutor, PromptRefine, TutorAI, ChatGPTES, Bagoodex, AIMathGPT, GaurishCerebras, GeminiPro, LLMChat, Talkai, Llama3Mitril, Marcus, TypeGPT, MultiChatAI, JadveOpenAI, ChatGLM, NousHermes, FreeAIChat, ElectronHub, GithubChat, Flowith, SonusAI, UncovrAI, LabyrinthAI, WebSim, LambdaChat, ChatGPTClone

Code is similar to other providers.
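The provider examples above follow two call patterns: a one-step `chat(prompt)` that returns text, and a two-step `ask(prompt)` followed by `get_message(response)`. Below is a sketch of a helper that works with either pattern, demonstrated with stand-in providers so it runs offline. `EchoProvider`, `AskOnlyProvider`, and `get_reply` are hypothetical; real providers may differ in arguments and return types.

```python
class EchoProvider:
    """Offline stand-in mimicking the chat/ask/get_message pattern (hypothetical)."""

    def ask(self, prompt):
        # Real providers return a richer response object; a dict is assumed here.
        return {"text": f"echo: {prompt}"}

    def get_message(self, response):
        return response["text"]

    def chat(self, prompt):
        return self.get_message(self.ask(prompt))


class AskOnlyProvider:
    """Stand-in exposing only the two-step interface (hypothetical)."""

    def ask(self, prompt):
        return {"text": prompt.upper()}

    def get_message(self, response):
        return response["text"]


def get_reply(provider, prompt):
    """Return reply text from any object exposing chat() or ask() + get_message()."""
    if hasattr(provider, "chat"):
        return provider.chat(prompt)
    return provider.get_message(provider.ask(prompt))


print(get_reply(EchoProvider(), "hello"))
print(get_reply(AskOnlyProvider(), "hello"))
```

A wrapper like this makes it easier to swap one provider for another, assuming the provider exposes the same methods as the examples above.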

from webscout.LLM import LLM, VLM

# Chat with text

llm = LLM("meta-llama/Meta-Llama-3-70B-Instruct")

response = llm.chat([{"role": "user", "content": "What's good?"}])

# Chat with images

vlm = VLM("cogvlm-grounding-generalist")

response = vlm.chat([{

"role": "user",

"content": [

{"type": "image", "image_url": "cool_pic.jpg"},

{"type": "text", "text": "What's in this image?"}

]

}])

Webscout provides tools to convert and quantize Hugging Face models into the GGUF format for use with offline LLMs.

Example:

from webscout.Extra.gguf import ModelConverter

"""

Valid quantization methods:

"q2_k", "q3_k_l", "q3_k_m", "q3_k_s",

"q4_0", "q4_1", "q4_k_m", "q4_k_s",

"q5_0", "q5_1", "q5_k_m", "q5_k_s",

"q6_k", "q8_0"

"""

# Create a converter instance

converter = ModelConverter(

model_id="prithivMLmods/QWQ-500M",

quantization_methods="q2_k"

)

# Run the conversion

converter.convert()

Command Line Usage:

- GGUF Conversion:

python -m webscout.Extra.gguf convert -m "prithivMLmods/QWQ-500M" -q "q2_k"

Note:

- Replace "your_username" and "your_hf_token" with your actual Hugging Face credentials.

- The model_path in autollama is the Hugging Face model ID, and gguf_file is the GGUF file ID.
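The quantization methods and the convert command shown above can be combined into a small pre-flight check, so that a typo in the method name is caught before a long conversion starts. This is only a sketch: `build_gguf_command` is a hypothetical helper, and the set below simply copies the methods listed in the example.

```python
# Quantization methods as listed in the example above.
VALID_QUANT_METHODS = {
    "q2_k", "q3_k_l", "q3_k_m", "q3_k_s",
    "q4_0", "q4_1", "q4_k_m", "q4_k_s",
    "q5_0", "q5_1", "q5_k_m", "q5_k_s",
    "q6_k", "q8_0",
}


def build_gguf_command(model_id, method):
    """Return the argv for the convert command shown above (hypothetical helper)."""
    method = method.lower()
    if method not in VALID_QUANT_METHODS:
        raise ValueError(f"unsupported quantization method: {method!r}")
    return ["python", "-m", "webscout.Extra.gguf", "convert", "-m", model_id, "-q", method]


print(" ".join(build_gguf_command("prithivMLmods/QWQ-500M", "q2_k")))
```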

Contributions are welcome! If you'd like to contribute to Webscout, please follow these steps:

- Fork the repository.

- Create a new branch for your feature or bug fix.

- Make your changes and commit them with descriptive messages.

- Push your branch to your forked repository.

- Submit a pull request to the main repository.

- All the amazing developers who have contributed to the project!

- The open-source community for their support and inspiration.

Alternative AI tools for Webscout

Similar Open Source Tools

Webscout

Webscout is an all-in-one Python toolkit for web search, AI interaction, digital utilities, and more. It provides access to diverse search engines, cutting-edge AI models, temporary communication tools, media utilities, developer helpers, and powerful CLI interfaces through a unified library. With features like comprehensive search leveraging Google and DuckDuckGo, AI powerhouse for accessing various AI models, YouTube toolkit for video and transcript management, GitAPI for GitHub data extraction, Tempmail & Temp Number for privacy, Text-to-Speech conversion, GGUF conversion & quantization, SwiftCLI for CLI interfaces, LitPrinter for styled console output, LitLogger for logging, LitAgent for user agent generation, Text-to-Image generation, Scout for web parsing and crawling, Awesome Prompts for specialized tasks, Weather Toolkit, and AI Search Providers.

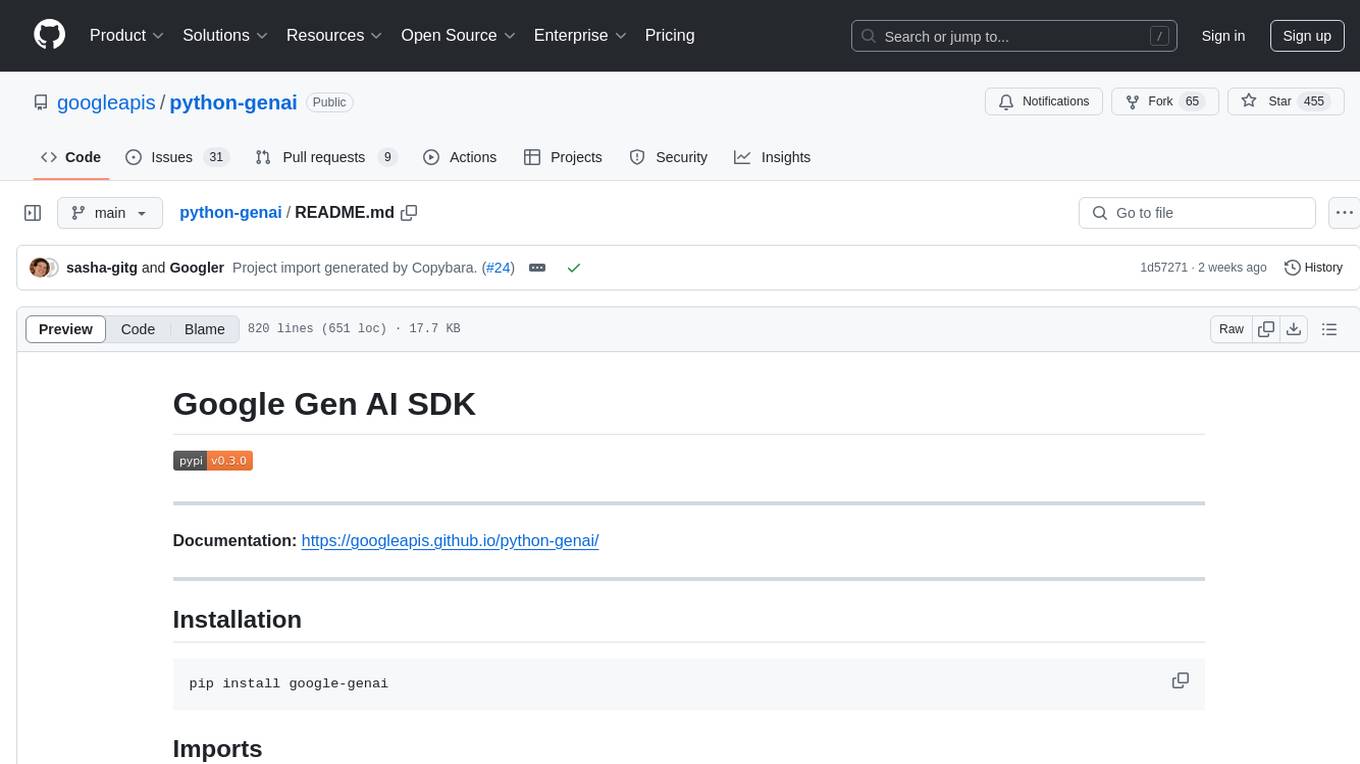

python-genai

The Google Gen AI SDK is a Python library that provides access to Google AI and Vertex AI services. It allows users to create clients for different services, work with parameter types, models, generate content, call functions, handle JSON response schemas, stream text and image content, perform async operations, count and compute tokens, embed content, generate and upscale images, edit images, work with files, create and get cached content, tune models, distill models, perform batch predictions, and more. The SDK supports various features like automatic function support, manual function declaration, JSON response schema support, streaming for text and image content, async methods, tuning job APIs, distillation, batch prediction, and more.

generative-ai-python

The Google AI Python SDK is the easiest way for Python developers to build with the Gemini API. The Gemini API gives you access to Gemini models created by Google DeepMind. Gemini models are built from the ground up to be multimodal, so you can reason seamlessly across text, images, and code.

omniai

OmniAI provides a unified Ruby API for integrating with multiple AI providers, streamlining AI development by offering a consistent interface for features such as chat, text-to-speech, speech-to-text, and embeddings. It ensures seamless interoperability across platforms and effortless switching between providers, making integrations more flexible and reliable.

capsule

Capsule is a secure and durable runtime for AI agents, designed to coordinate tasks in isolated environments. It allows for long-running workflows, large-scale processing, autonomous decision-making, and multi-agent systems. Tasks run in WebAssembly sandboxes with isolated execution, resource limits, automatic retries, and lifecycle tracking. It enables safe execution of untrusted code within AI agent systems.

clawlet

Clawlet is an ultra-lightweight and efficient personal AI assistant that comes as a single binary with no CGO, runtime, or dependencies. It features hybrid semantic memory search and is inspired by OpenClaw and nanobot. Users can easily download Clawlet from GitHub Releases and drop it on any machine to enable memory search functionality. The tool supports various LLM providers like OpenAI, OpenRouter, Anthropic, Gemini, and local endpoints. Users can configure Clawlet for memory search setup and chat app integrations for platforms like Telegram, WhatsApp, Discord, and Slack. Clawlet CLI provides commands for initializing workspace, running the agent, managing channels, scheduling jobs, and more.

ai00_server

AI00 RWKV Server is an inference API server for the RWKV language model based upon the web-rwkv inference engine. It supports VULKAN parallel and concurrent batched inference and can run on all GPUs that support VULKAN. No need for Nvidia cards!!! AMD cards and even integrated graphics can be accelerated!!! No need for bulky pytorch, CUDA and other runtime environments, it's compact and ready to use out of the box! Compatible with OpenAI's ChatGPT API interface. 100% open source and commercially usable, under the MIT license. If you are looking for a fast, efficient, and easy-to-use LLM API server, then AI00 RWKV Server is your best choice. It can be used for various tasks, including chatbots, text generation, translation, and Q&A.

model.nvim

model.nvim is a tool designed for Neovim users who want to utilize AI models for completions or chat within their text editor. It allows users to build prompts programmatically with Lua, customize prompts, experiment with multiple providers, and use both hosted and local models. The tool supports features like provider agnosticism, programmatic prompts in Lua, async and multistep prompts, streaming completions, and chat functionality in 'mchat' filetype buffer. Users can customize prompts, manage responses, and context, and utilize various providers like OpenAI ChatGPT, Google PaLM, llama.cpp, ollama, and more. The tool also supports treesitter highlights and folds for chat buffers.

ruby-openai

Use the OpenAI API with Ruby! 🤖🩵 Stream text with GPT-4, transcribe and translate audio with Whisper, or create images with DALL·E.

parrot.nvim

Parrot.nvim is a Neovim plugin that prioritizes a seamless out-of-the-box experience for text generation. It simplifies functionality and focuses solely on text generation, excluding integration of DALLE and Whisper. It supports persistent conversations as markdown files, custom hooks for inline text editing, multiple providers like Anthropic API, perplexity.ai API, OpenAI API, Mistral API, and local/offline serving via ollama. It allows custom agent definitions, flexible API credential support, and repository-specific instructions with a `.parrot.md` file. It does not have autocompletion or hidden requests in the background to analyze files.

js-genai

The Google Gen AI JavaScript SDK is an experimental SDK for TypeScript and JavaScript developers to build applications powered by Gemini. It supports both the Gemini Developer API and Vertex AI. The SDK is designed to work with Gemini 2.0 features. Users can access API features through the GoogleGenAI classes, which provide submodules for querying models, managing caches, creating chats, uploading files, and starting live sessions. The SDK also allows for function calling to interact with external systems. Users can find more samples in the GitHub samples directory.

hezar

Hezar is an all-in-one AI library designed specifically for the Persian community. It brings together various AI models and tools, making it easy to use AI with just a few lines of code. The library seamlessly integrates with Hugging Face Hub, offering a developer-friendly interface and task-based model interface. In addition to models, Hezar provides tools like word embeddings, tokenizers, feature extractors, and more. It also includes supplementary ML tools for deployment, benchmarking, and optimization.

pocketgroq

PocketGroq is a tool that provides advanced functionalities for text generation, web scraping, web search, and AI response evaluation. It includes features like an Autonomous Agent for answering questions, web crawling and scraping capabilities, enhanced web search functionality, and flexible integration with Ollama server. Users can customize the agent's behavior, evaluate responses using AI, and utilize various methods for text generation, conversation management, and Chain of Thought reasoning. The tool offers comprehensive methods for different tasks, such as initializing RAG, error handling, and tool management. PocketGroq is designed to enhance development processes and enable the creation of AI-powered applications with ease.

lagent

Lagent is a lightweight open-source framework that allows users to efficiently build large language model (LLM)-based agents. It also provides some typical tools to augment LLMs.

For similar tasks

KrillinAI

KrillinAI is a video subtitle translation and dubbing tool based on AI large models, featuring speech recognition, intelligent sentence segmentation, professional translation, and one-click deployment of the entire process. It provides a one-stop workflow from video downloading to the final product, empowering cross-language cultural communication with AI. The tool supports multiple languages for input and translation, integrates features like automatic dependency installation, video downloading from platforms like YouTube and Bilibili, high-speed subtitle recognition, intelligent subtitle segmentation and alignment, custom vocabulary replacement, professional-level translation engine, and diverse external service selection for speech and large model services.

DownEdit

DownEdit is a fast and powerful program for downloading and editing videos from platforms like TikTok, Douyin, and Kuaishou. It allows users to effortlessly grab videos, make bulk edits, and utilize advanced AI features for generating videos, images, and sounds in bulk. The tool offers features like video, photo, and sound editing, downloading videos without watermarks, bulk AI generation, and AI editing for content enhancement.

AIO-Video-Downloader

AIO Video Downloader is an open-source Android application built on the robust yt-dlp backend with the help of youtubedl-android. It aims to be the most powerful download manager available, offering a clean and efficient interface while unlocking advanced downloading capabilities with minimal setup. With support for 1000+ sites and virtually any downloadable content across the web, AIO delivers a seamless yet powerful experience that balances speed, flexibility, and simplicity.

Y2A-Auto

Y2A-Auto is an automation tool that transfers YouTube videos to AcFun. It automates the whole pipeline: downloading, subtitle translation, content moderation, intelligent tagging, partition (category) recommendation, and upload. It also includes a web management interface and a YouTube monitoring feature. Supported features include downloading videos and covers with yt-dlp, AI translation and embedding of subtitles, AI generation of titles, descriptions, and tags, content moderation through Aliyun Green, uploading to AcFun, task management, manual review, and forced upload. Settings cover automatic mode, concurrency, proxies, subtitles, login protection, brute-force lockout, YouTube monitoring, channel and trend capturing, scheduled tasks, history records, and optional GPU/hardware acceleration. It can be deployed with Docker or run locally.

youwee

Youwee is a modern, fast, and lightweight YouTube video downloader built with Tauri and React. Its features include downloading videos from various platforms, channel following, metadata fetching, live stream support, AI video summaries and processing, time-range downloads, batch and playlist downloads, audio extraction, subtitle support, a subtitle workshop, post-processing, SponsorBlock, speed limit control, a download library, and multiple themes.

sktime

sktime is a Python library for time series analysis that provides a unified interface for various time series learning tasks such as classification, regression, clustering, annotation, and forecasting. It offers time series algorithms and tools compatible with scikit-learn for building, tuning, and validating time series models. sktime aims to enhance the interoperability and usability of the time series analysis ecosystem by empowering users to apply algorithms across different tasks and providing interfaces to related libraries like scikit-learn, statsmodels, tsfresh, PyOD, and fbprophet.

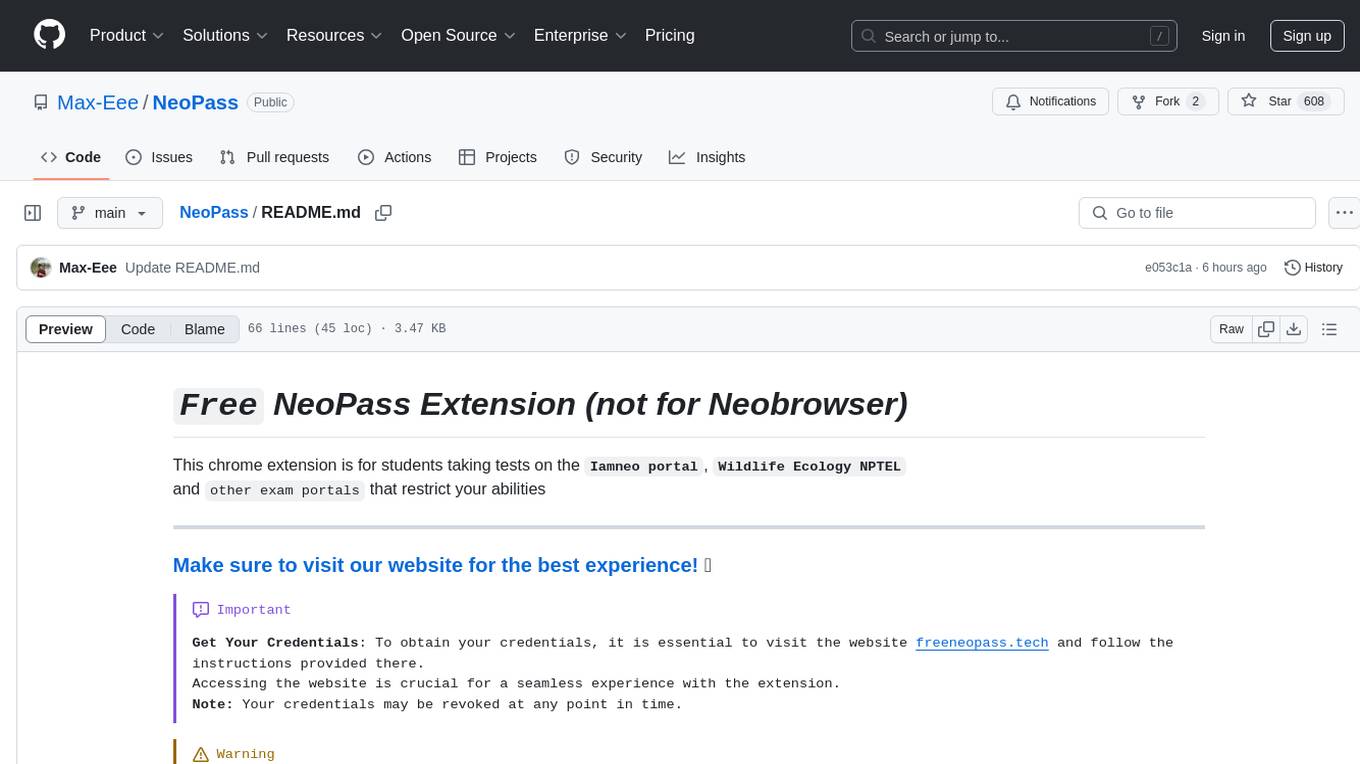

NeoPass

NeoPass is a free Chrome extension designed for students taking tests on exam portals like Iamneo and Wildlife Ecology NPTEL. It provides features such as NPTEL integration, NeoExamShield bypass, AI chatbot with stealth mode, AI search answers/code, MCQ solving, tab switching bypass, pasting when restricted, and remote logout. Users can install the extension by following simple steps and use shortcuts for quick access to features. The tool is intended for educational purposes only and promotes academic integrity.

For similar jobs

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

daily-poetry-image

Publishes a daily piece of ancient Chinese poetry with an AI-generated image powered by Bing DALL-E-3. A GitHub Action triggers the process automatically. The poetry is provided by the Today's Poem API, and the website is built with Astro.

exif-photo-blog

EXIF Photo Blog is a full-stack photo blog application built with Next.js, Vercel, and Postgres. It features built-in authentication, photo upload with EXIF extraction, photo organization by tag, infinite scroll, light/dark mode, automatic OG image generation, a CMD-K menu with photo search, experimental support for AI-generated descriptions, and support for Fujifilm simulations. The application is easy to deploy to Vercel with just a few clicks and can be customized with a variety of environment variables.

SillyTavern

SillyTavern is a user interface you can install on your computer (and Android phones) that allows you to interact with text generation AIs and chat or roleplay with characters you or the community create. SillyTavern is a fork of TavernAI 1.2.8 that is under more active development and has added many major features. At this point, the two can be thought of as completely independent programs.

Twitter-Insight-LLM

This project enables you to fetch liked tweets from Twitter (using Selenium), save them to JSON and Excel files, and perform initial data analysis and image captioning. This is part of the initial steps for a larger personal project involving Large Language Models (LLMs).

AISuperDomain

Aila Desktop Application is a powerful tool that integrates multiple leading AI models into a single desktop application. It allows users to interact with various AI models simultaneously, providing diverse responses and insights to their inquiries. With its user-friendly interface and customizable features, Aila empowers users to engage with AI seamlessly and efficiently. Whether you're a researcher, student, or professional, Aila can enhance your AI interactions and streamline your workflow.

ChatGPT-On-CS

This project is an intelligent customer service chat tool based on a large model. It supports platforms such as WeChat, Qianniu, Bilibili, Douyin Enterprise, Douyin, Doudian, Weibo chat, Xiaohongshu professional account operation, Xiaohongshu, and Zhihu. You can choose GPT3.5, GPT4.0, or Lazy Treasure Box (more platforms will be supported in the future). It can process text, voice, and pictures, access external resources such as the operating system and the Internet through plug-ins, and support enterprise AI applications customized on a company's own knowledge base.

obs-localvocal

LocalVocal is a live-streaming AI assistant plugin for OBS that transcribes speech into text and performs various language processing functions on that text using AI / LLMs (Large Language Models). It is privacy-first: all data stays on your machine, no GPU is required, there are no cloud costs, and it does not depend on a network connection, so there is no service downtime.