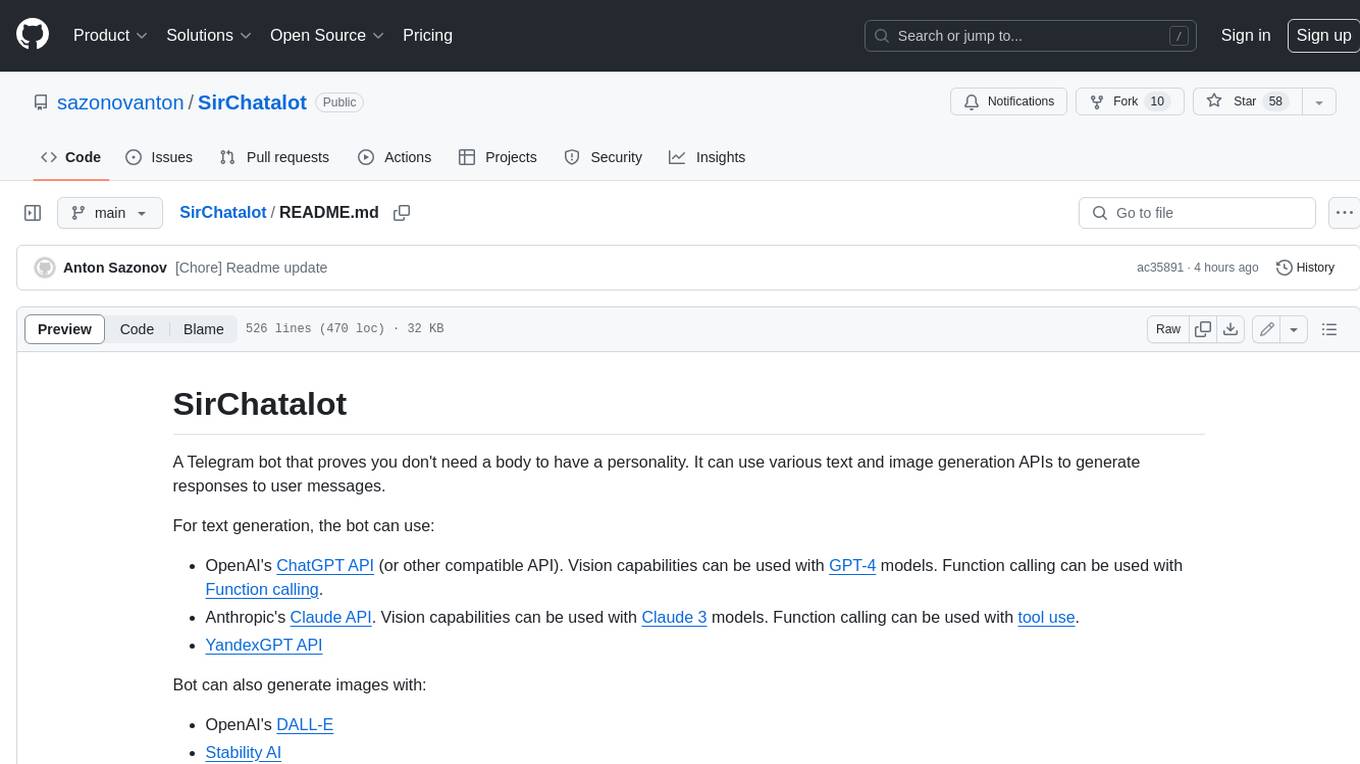

omniai

OmniAI standardizes the APIs for multiple AI providers like OpenAI's ChatGPT, Mistral's LeChat, Anthropic's Claude, Google's Gemini and DeepSeek's Chat.

Stars: 161

OmniAI provides a unified Ruby API for integrating with multiple AI providers, streamlining AI development by offering a consistent interface for features such as chat, text-to-speech, speech-to-text, and embeddings. It ensures seamless interoperability across platforms and effortless switching between providers, making integrations more flexible and reliable.

README:

OmniAI provides a unified Ruby API for integrating with multiple AI providers, including Anthropic, DeepSeek, Google, Mistral, and OpenAI. It streamlines AI development by offering a consistent interface for features such as chat, text-to-speech, speech-to-text, and embeddings—ensuring seamless interoperability across platforms. Switching between providers is effortless, making any integration more flexible and reliable.

Example #1: Chat w/ Text

This example demonstrates using OmniAI with Anthropic to ask for a joke. The response is parsed and printed.

require 'omniai/anthropic'

CLIENT = OmniAI::Anthropic::Client.new

puts "> [USER] Tell me a joke"

response = CLIENT.chat("Tell me a joke")

puts response.text

> [USER] Tell me a joke

Why don't scientists trust atoms? Because they make up everything!

Example #2: Chat w/ Prompt

This example demonstrates using OmniAI with Mistral to ask for the fastest animal. It includes a system and user message in the prompt. The response is streamed in real time.

require "omniai/mistral"

CLIENT = OmniAI::Mistral::Client.new

puts "> [SYSTEM] Respond in both English and French."

puts "> [USER] What is the fastest animal?"

CLIENT.chat(stream: $stdout) do |prompt|
  prompt.system "Respond in both English and French."
  prompt.user "What is the fastest animal?"
end

> [SYSTEM] Respond in both English and French.

> [USER] What is the fastest animal?

**English**: The peregrine falcon is generally considered the fastest animal, reaching speeds of over 390 km/h.

**French**: Le faucon pèlerin est généralement considéré comme l'animal le plus rapide, atteignant des vitesses de plus de 390 km/h.
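The `stream:` option in the example above accepts an IO, and the README also describes passing a proc that receives each chunk. As a rough sketch of how the two styles of handler might be used, the snippet below relies on a hypothetical `FakeClient` and `Chunk` struct as stand-ins (neither is part of OmniAI) so that it runs without network access or an API key.

```ruby
require "stringio"

# Stand-ins for illustration only: Chunk and FakeClient are hypothetical and
# are NOT part of OmniAI. They mimic a client that emits text chunk by chunk.
Chunk = Struct.new(:text)

class FakeClient
  def initialize(pieces)
    @pieces = pieces
  end

  # Accepts either a proc (called once per chunk) or an IO (appended to).
  def chat(_prompt, stream:)
    @pieces.each do |piece|
      chunk = Chunk.new(piece)
      if stream.respond_to?(:call)
        stream.call(chunk)
      else
        stream << chunk.text
      end
    end
    nil
  end
end

client = FakeClient.new(["Why did the ", "chicken cross ", "the road?"])

# A proc-based handler that accumulates the streamed text.
buffer = +""
collector = proc { |chunk| buffer << chunk.text }
client.chat("Tell me a joke.", stream: collector)

# An IO-based handler: StringIO plays the role of $stdout or a File here.
io = StringIO.new
client.chat("Tell me a joke.", stream: io)

puts buffer
```

A proc is handy when the text needs post-processing (buffering, filtering, forwarding to a websocket), while an IO is the shorter option when the chunks only need to be written somewhere.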

Example #3: Chat w/ Vision

This example demonstrates using OmniAI with OpenAI to prompt a “biologist” for an analysis of photos, identifying the animals within each one. A system and user message are provided, and the response is streamed in real time.

require "omniai/openai"

CLIENT = OmniAI::OpenAI::Client.new

CAT_URL = "https://images.unsplash.com/photo-1472491235688-bdc81a63246e?q=80&w=1024&h=1024&fit=crop&fm=jpg"

DOG_URL = "https://images.unsplash.com/photo-1517849845537-4d257902454a?q=80&w=1024&h=1024&fit=crop&fm=jpg"

CLIENT.chat(stream: $stdout) do |prompt|
  prompt.system("You are a helpful biologist with expertise in animals who responds with the Latin names.")
  prompt.user do |message|
    message.text("What animals are in the attached photos?")
    message.url(CAT_URL, "image/jpeg")
    message.url(DOG_URL, "image/jpeg")
  end
end

> [SYSTEM] You are a helpful biologist with expertise in animals who responds with the Latin names.

> [USER] What animals are in the attached photos?

The first photo is of a cat, *Felis Catus*.

The second photo is of a dog, *Canis Familiaris*.

Example #4: Chat w/ Tools

This example demonstrates using OmniAI with Google to ask for the weather. A tool “Weather” is provided. The tool accepts a location and a unit (Celsius or Fahrenheit), then returns the weather (a random temperature in this example). The LLM makes multiple tool-call requests and is automatically provided with each tool-call response before the result is streamed in real time.

require 'omniai/google'

CLIENT = OmniAI::Google::Client.new

TOOL = OmniAI::Tool.new(
  proc { |location:, unit: "Celsius"| "#{rand(20..50)}° #{unit} in #{location}" },
  name: "Weather",
  description: "Lookup the weather in a location",
  parameters: OmniAI::Tool::Parameters.new(
    properties: {
      location: OmniAI::Tool::Property.string(description: "e.g. Toronto"),
      unit: OmniAI::Tool::Property.string(enum: %w[Celsius Fahrenheit]),
    },
    required: %i[location]
  )
)

puts "> [SYSTEM] You are an expert in weather."

puts "> [USER] What is the weather in 'London' in Celsius and 'Madrid' in Fahrenheit?"

CLIENT.chat(stream: $stdout, tools: [TOOL]) do |prompt|
  prompt.system "You are an expert in weather."
  prompt.user 'What is the weather in "London" in Celsius and "Madrid" in Fahrenheit?'
end

> [SYSTEM] You are an expert in weather.

> [USER] What is the weather in 'London' in Celsius and 'Madrid' in Fahrenheit?

The weather is 24° Celsius in London and 42° Fahrenheit in Madrid.
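The tool in this example wraps a plain Ruby proc with keyword arguments. As a minimal sketch of how such a proc behaves when it is invoked with the arguments a model might request, the snippet below calls an equivalent proc directly. It swaps `rand` for a fixed lookup table (`TEMPERATURES`, a made-up name with made-up values) so the output is repeatable, and it does not involve OmniAI itself.

```ruby
# Made-up readings (in Celsius) so the example is deterministic.
TEMPERATURES = { "London" => 24, "Madrid" => 30 }.freeze

# Same keyword signature as the tool's proc: `location` is required and
# `unit` falls back to Celsius when omitted.
weather = proc do |location:, unit: "Celsius"|
  celsius = TEMPERATURES.fetch(location)
  degrees = unit == "Fahrenheit" ? (celsius * 9.0 / 5 + 32).round : celsius
  "#{degrees}° #{unit} in #{location}"
end

# Arguments as they might arrive from two separate tool-call requests.
calls = [
  { location: "London", unit: "Celsius" },
  { location: "Madrid", unit: "Fahrenheit" },
]

results = calls.map { |arguments| weather.call(**arguments) }
puts results
```

Declaring `unit` with a default mirrors the `required: %i[location]` setting: the model may omit the unit, but a call without a location raises an error.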

Example #5: Text-to-Speech

This example demonstrates using OmniAI with OpenAI to convert text to speech and save it to a file.

require 'omniai/openai'

CLIENT = OmniAI::OpenAI::Client.new

File.open(File.join(__dir__, 'audio.wav'), 'wb') do |file|
  CLIENT.speak('Sally sells seashells by the seashore.', format: OmniAI::Speak::Format::WAV) do |chunk|
    file << chunk
  end
end

Example #6: Speech-to-Text

This example demonstrates using OmniAI with OpenAI to convert speech to text.

require 'omniai/openai'

CLIENT = OmniAI::OpenAI::Client.new

File.open(File.join(__dir__, 'audio.wav'), 'rb') do |file|
  transcription = CLIENT.transcribe(file)
  puts(transcription.text)
end

Example #7: Embeddings

This example demonstrates using OmniAI with Mistral to generate embeddings for a dataset. It defines a set of entries (e.g. "George is a teacher." or "Ringo is a doctor.") and then compares the embeddings generated from a query (e.g. "What does George do?" or "Who is a doctor?") to rank the entries by relevance.

require 'omniai/mistral'

CLIENT = OmniAI::Mistral::Client.new

Entry = Data.define(:text, :embedding) do
  def initialize(text:)
    super(text:, embedding: CLIENT.embed(text).embedding)
  end
end

ENTRIES = [
  Entry.new(text: 'John is a musician.'),
  Entry.new(text: 'Paul is a plumber.'),
  Entry.new(text: 'George is a teacher.'),
  Entry.new(text: 'Ringo is a doctor.'),
].freeze

def search(query)
  embedding = CLIENT.embed(query).embedding

  results = ENTRIES.sort_by do |data|
    Math.sqrt(data.embedding.zip(embedding).map { |a, b| (a - b)**2 }.reduce(:+))
  end

  puts "'#{query}': '#{results.first.text}'"
end

search('What does George do?')
search('Who is a doctor?')
search('Who do you call to fix a toilet?')

'What does George do?': 'George is a teacher.'

'Who is a doctor?': 'Ringo is a doctor.'

'Who do you call to fix a toilet?': 'Paul is a plumber.'
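The ranking in this example uses the Euclidean distance between embeddings. As an illustration of the same idea on toy data, the sketch below ranks hand-written three-dimensional vectors (invented for this example, not real model output) by Euclidean distance and, as a commonly used alternative, by cosine similarity. The names `TOY_ENTRIES`, `euclidean_distance` and `cosine_similarity` are illustrative and not part of OmniAI.

```ruby
# Toy vectors invented for illustration; real embeddings have far more dimensions.
TOY_ENTRIES = {
  "John is a musician." => [0.9, 0.1, 0.0],
  "Paul is a plumber." => [0.1, 0.9, 0.1],
  "Ringo is a doctor." => [0.0, 0.2, 0.9],
}.freeze

# Straight-line distance between two vectors: smaller means more similar.
def euclidean_distance(a, b)
  Math.sqrt(a.zip(b).sum { |x, y| (x - y)**2 })
end

# Cosine of the angle between two vectors: larger means more similar.
def cosine_similarity(a, b)
  dot = a.zip(b).sum { |x, y| x * y }
  norm = ->(v) { Math.sqrt(v.sum { |x| x**2 }) }
  dot / (norm.call(a) * norm.call(b))
end

# Pretend embedding for a query such as "Who is a doctor?".
query = [0.0, 0.3, 0.8]

nearest = TOY_ENTRIES.min_by { |_text, vector| euclidean_distance(vector, query) }
most_similar = TOY_ENTRIES.max_by { |_text, vector| cosine_similarity(vector, query) }

puts nearest.first
puts most_similar.first
```

Euclidean distance is sensitive to vector length while cosine similarity only compares direction, so the two can disagree when embeddings are not normalized.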

The main omniai gem is installed with:

gem install omniai

Specific provider gems are installed with:

gem install omniai-anthropic

gem install omniai-deepseek

gem install omniai-mistral

gem install omniai-google

gem install omniai-openai

OmniAI implements APIs for a number of popular clients by default. A client can be initialized using the specific gem (e.g. omniai-openai for OmniAI::OpenAI). Vendor-specific docs can be found within each repo.
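Because each provider ships as its own gem with a matching client class, one way to switch providers is a small lookup table keyed by name. The sketch below is an assumption about how an application might organise this, not something OmniAI provides: `PROVIDERS`, `provider_for` and the `AI_PROVIDER` environment variable are illustrative names, while the require paths and class names are the ones used in this README. Loading and instantiating the client is left as a comment so the snippet runs without any provider gem installed.

```ruby
# Require path and client class name for each provider gem.
PROVIDERS = {
  "anthropic" => { path: "omniai/anthropic", client: "OmniAI::Anthropic::Client" },
  "deepseek" => { path: "omniai/deepseek", client: "OmniAI::DeepSeek::Client" },
  "google" => { path: "omniai/google", client: "OmniAI::Google::Client" },
  "mistral" => { path: "omniai/mistral", client: "OmniAI::Mistral::Client" },
  "openai" => { path: "omniai/openai", client: "OmniAI::OpenAI::Client" },
}.freeze

# Looks up a provider by name, raising a descriptive error for unknown names.
def provider_for(name)
  PROVIDERS.fetch(name.to_s.downcase) do
    raise ArgumentError, "unknown provider #{name.inspect} (expected one of: #{PROVIDERS.keys.join(', ')})"
  end
end

# AI_PROVIDER is a hypothetical environment variable chosen for this sketch.
provider = provider_for(ENV.fetch("AI_PROVIDER", "openai"))
puts "require #{provider[:path].inspect} and use #{provider[:client]}"

# With the matching gem installed, the client could then be built with:
#   require provider[:path]
#   client = Object.const_get(provider[:client]).new
```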

require 'omniai/anthropic'

client = OmniAI::Anthropic::Client.new

require 'omniai/deepseek'

client = OmniAI::DeepSeek::Client.new

require 'omniai/google'

client = OmniAI::Google::Client.new

require 'omniai/mistral'

client = OmniAI::Mistral::Client.new

require 'omniai/openai'

client = OmniAI::OpenAI::Client.new

LocalAI support is offered through OmniAI::OpenAI:

Ollama support is offered through OmniAI::OpenAI:

Logging the request / response is configurable by passing a logger into any client:

require 'omniai/openai'

require 'logger'

logger = Logger.new(STDOUT)

client = OmniAI::OpenAI::Client.new(logger:)

[INFO]: POST https://...

[INFO]: 200 OK

...
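The logger in this example is a standard Ruby `Logger`, so the usual stdlib options for level and formatting apply. The sketch below configures one that writes lines shaped like the output above. It logs hand-written messages to a `StringIO` rather than making a real request, and the URL is a placeholder.

```ruby
require "logger"
require "stringio"

# StringIO stands in for STDOUT so the result can be inspected afterwards.
output = StringIO.new

logger = Logger.new(output)
logger.level = Logger::INFO # drop DEBUG messages
logger.formatter = proc do |severity, _time, _progname, message|
  "[#{severity}]: #{message}\n"
end

# Hand-written messages for illustration; a real client would emit its own.
logger.debug("this line is filtered out by the level")
logger.info("POST https://example.com/placeholder")
logger.info("200 OK")

puts output.string
```

A logger configured this way could be passed to a client with `logger:` exactly as in the example above.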

Timeouts are configurable by passing an integer timeout (in seconds) that applies to the request / response of any API:

require 'omniai/openai'

client = OmniAI::OpenAI::Client.new(timeout: 8) # i.e. 8 seconds

Timeouts are also configurable by passing a timeout hash with read / write / connect keys:

require 'omniai/openai'

client = OmniAI::OpenAI::Client.new(timeout: {
  read: 2, # i.e. 2 seconds
  write: 3, # i.e. 3 seconds
  connect: 4, # i.e. 4 seconds
})

Clients that support chat (e.g. Anthropic w/ "Claude", Google w/ "Gemini", Mistral w/ "LeChat", OpenAI w/ "ChatGPT", etc.) generate completions using the following calls:

Generating a completion is as simple as sending in the text:

completion = client.chat('Tell me a joke.')

completion.text # 'Why don't scientists trust atoms? They make up everything!'

More complex completions are generated using a block w/ various system / user messages:

completion = client.chat do |prompt|
  prompt.system 'You are a helpful assistant with an expertise in animals.'
  prompt.user do |message|
    message.text 'What animals are in the attached photos?'
    message.url('https://.../cat.jpeg', "image/jpeg")
    message.url('https://.../dog.jpeg', "image/jpeg")
    message.file('./hamster.jpeg', "image/jpeg")
  end
end

completion.text # 'They are photos of a cat, a dog, and a hamster.'

A real-time stream of messages can be generated by passing in a proc:

stream = proc do |chunk|
  print(chunk.text) # '...'
end

client.chat('Tell me a joke.', stream:)

The stream can also be any writable IO (e.g. a File or $stdout):

client.chat('Tell me a story', stream: $stdout)

A chat can also be initialized with tools:

tool = OmniAI::Tool.new(
  proc { |location:, unit: 'Celsius'| "#{rand(20..50)}° #{unit} in #{location}" },
  name: 'Weather',
  description: 'Lookup the weather in a location',
  parameters: OmniAI::Tool::Parameters.new(
    properties: {
      location: OmniAI::Tool::Property.string(description: 'e.g. Toronto'),
      unit: OmniAI::Tool::Property.string(enum: %w[Celsius Fahrenheit]),
    },
    required: %i[location]
  )
)

client.chat('What is the weather in "London" in Celsius and "Paris" in Fahrenheit?', tools: [tool])

Clients that support transcribe (e.g. OpenAI w/ "Whisper") convert recordings to text via the following calls:

transcription = client.transcribe("example.ogg")

transcription.text # '...'

File.open("example.ogg", "rb") do |file|
  transcription = client.transcribe(file)
  transcription.text # '...'
end

Clients that support speak (e.g. OpenAI) convert text to recordings via the following calls:

File.open('example.ogg', 'wb') do |file|
  client.speak('The quick brown fox jumps over a lazy dog.', voice: 'HAL') do |chunk|
    file << chunk
  end
end

tempfile = client.speak('The quick brown fox jumps over a lazy dog.', voice: 'HAL')

tempfile.close

tempfile.unlink

Clients that support generating embeddings (e.g. OpenAI, Mistral, etc.) convert text to embeddings via the following:

response = client.embed('The quick brown fox jumps over a lazy dog')

response.usage # <OmniAI::Embed::Usage prompt_tokens=5 total_tokens=5>

response.embedding # [0.1, 0.2, ...]

Batches of text can also be converted to embeddings via the following:

response = client.embed([
  '',
  '',
])

response.usage # <OmniAI::Embed::Usage prompt_tokens=5 total_tokens=5>

response.embeddings.each do |embedding|
  embedding # [0.1, 0.2, ...]
end

OmniAI packages a basic command line interface (CLI) to allow for exploration of various APIs. Detailed CLI documentation can be found via help:

omniai --help

omniai chat "What is the coldest place on earth?"

The coldest place on earth is Antarctica.

omniai chat --provider="openai" --model="gpt-4" --temperature="0.5"

Type 'exit' or 'quit' to abort.

# What is the warmest place on earth?

The warmest place on earth is Africa.

omniai embed "The quick brown fox jumps over a lazy dog."

0.0

...

omniai embed --provider="openai" --model="text-embedding-ada-002"

Type 'exit' or 'quit' to abort.

# The quick brown fox jumps over a lazy dog.

0.0

...


Alternative AI tools for omniai

Similar Open Source Tools

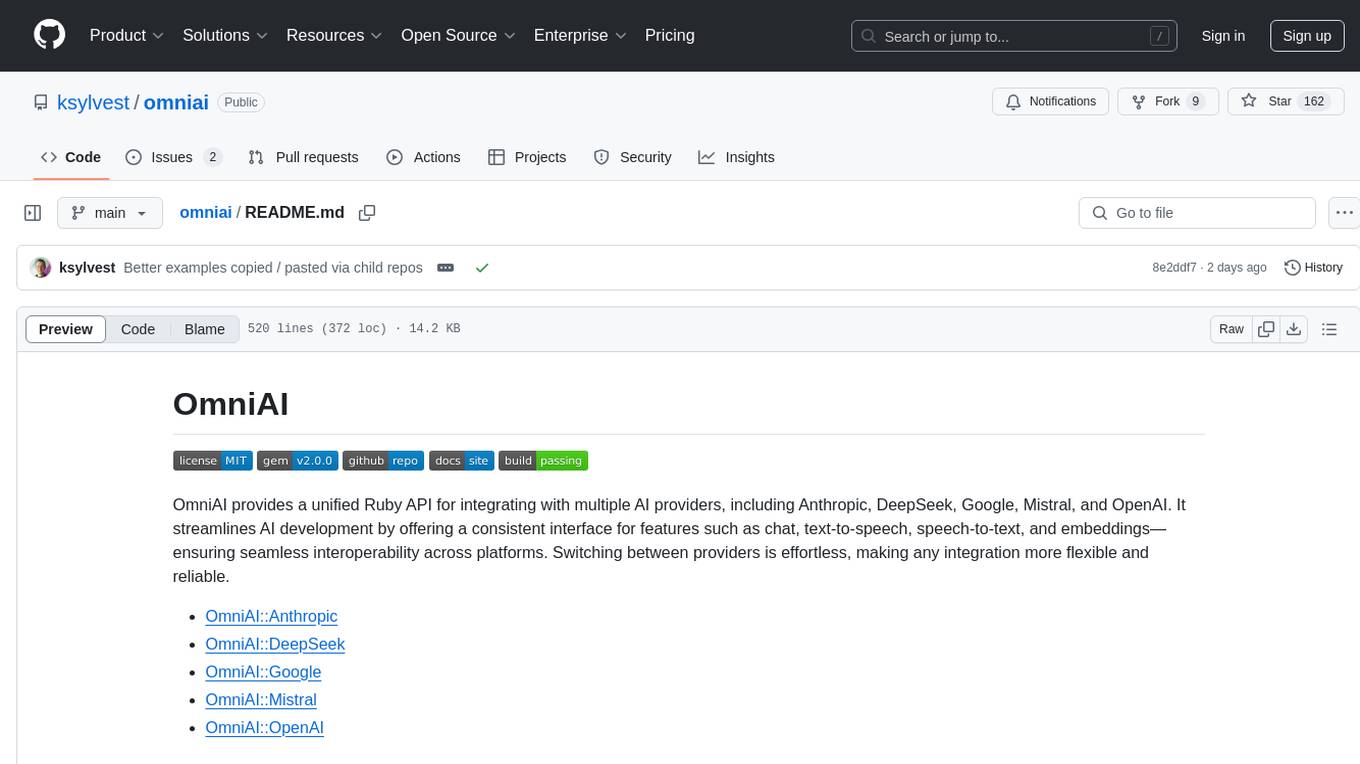

omniai

OmniAI provides a unified Ruby API for integrating with multiple AI providers, streamlining AI development by offering a consistent interface for features such as chat, text-to-speech, speech-to-text, and embeddings. It ensures seamless interoperability across platforms and effortless switching between providers, making integrations more flexible and reliable.

python-genai

The Google Gen AI SDK is a Python library that provides access to Google AI and Vertex AI services. It allows users to create clients for different services, work with parameter types, models, generate content, call functions, handle JSON response schemas, stream text and image content, perform async operations, count and compute tokens, embed content, generate and upscale images, edit images, work with files, create and get cached content, tune models, distill models, perform batch predictions, and more. The SDK supports various features like automatic function support, manual function declaration, JSON response schema support, streaming for text and image content, async methods, tuning job APIs, distillation, batch prediction, and more.

python-tgpt

Python-tgpt is a Python package that enables seamless interaction with over 45 free LLM providers without requiring an API key. It also provides image generation capabilities. The name _python-tgpt_ draws inspiration from its parent project tgpt, which operates on Golang. Through this Python adaptation, users can effortlessly engage with a number of free LLMs available, fostering a smoother AI interaction experience.

aiavatarkit

AIAvatarKit is a tool for building AI-based conversational avatars quickly. It supports various platforms like VRChat and cluster, along with real-world devices. The tool is extensible, allowing unlimited capabilities based on user needs. It requires VOICEVOX API, Google or Azure Speech Services API keys, and Python 3.10. Users can start conversations out of the box and enjoy seamless interactions with the avatars.

cursive-py

Cursive is a universal and intuitive framework for interacting with LLMs. It is extensible, allowing users to hook into any part of a completion life cycle. Users can easily describe functions that LLMs can use with any supported model. Cursive aims to bridge capabilities between different models, providing a single interface for users to choose any model. It comes with built-in token usage and costs calculations, automatic retry, and model expanding features. Users can define and describe functions, generate Pydantic BaseModels, hook into completion life cycle, create embeddings, and configure retry and model expanding behavior. Cursive supports various models from OpenAI, Anthropic, OpenRouter, Cohere, and Replicate, with options to pass API keys for authentication.

Webscout

WebScout is a versatile tool that allows users to search for anything using Google, DuckDuckGo, and phind.com. It contains AI models, can transcribe YouTube videos, generate temporary email and phone numbers, has TTS support, webai (terminal GPT and open interpreter), and offline LLMs. It also supports features like weather forecasting, YT video downloading, temp mail and number generation, text-to-speech, advanced web searches, and more.

langchainrb

Langchain.rb is a Ruby library that makes it easy to build LLM-powered applications. It provides a unified interface to a variety of LLMs, vector search databases, and other tools, making it easy to build and deploy RAG (Retrieval Augmented Generation) systems and assistants. Langchain.rb is open source and available under the MIT License.

instructor

Instructor is a popular Python library for managing structured outputs from large language models (LLMs). It offers a user-friendly API for validation, retries, and streaming responses. With support for various LLM providers and multiple languages, Instructor simplifies working with LLM outputs. The library includes features like response models, retry management, validation, streaming support, and flexible backends. It also provides hooks for logging and monitoring LLM interactions, and supports integration with Anthropic, Cohere, Gemini, Litellm, and Google AI models. Instructor facilitates tasks such as extracting user data from natural language, creating fine-tuned models, managing uploaded files, and monitoring usage of OpenAI models.

sparkle

Sparkle is a tool that streamlines the process of building AI-driven features in applications using Large Language Models (LLMs). It guides users through creating and managing agents, defining tools, and interacting with LLM providers like OpenAI. Sparkle allows customization of LLM provider settings, model configurations, and provides a seamless integration with Sparkle Server for exposing agents via an OpenAI-compatible chat API endpoint.

mediasoup-client-aiortc

mediasoup-client-aiortc is a handler for the aiortc Python library, allowing Node.js applications to connect to a mediasoup server using WebRTC for real-time audio, video, and DataChannel communication. It facilitates the creation of Worker instances to manage Python subprocesses, obtain audio/video tracks, and create mediasoup-client handlers. The tool supports features like getUserMedia, handlerFactory creation, and event handling for subprocess closure and unexpected termination. It provides custom classes for media stream and track constraints, enabling diverse audio/video sources like devices, files, or URLs. The tool enhances WebRTC capabilities in Node.js applications through seamless Python subprocess communication.

ruby-openai

Use the OpenAI API with Ruby! 🤖🩵 Stream text with GPT-4, transcribe and translate audio with Whisper, or create images with DALL·E...

top_secret

Top Secret is a Ruby gem designed to filter sensitive information from free text before sending it to external services or APIs, such as chatbots and LLMs. It provides default filters for credit cards, emails, phone numbers, social security numbers, people's names, and locations, with the ability to add custom filters. Users can configure the tool to handle sensitive information redaction, scan for sensitive data, batch process messages, and restore filtered text from external services. Top Secret uses Regex and NER filters to detect and redact sensitive information, allowing users to override default filters, disable specific filters, and add custom filters globally. The tool is suitable for applications requiring data privacy and security measures.

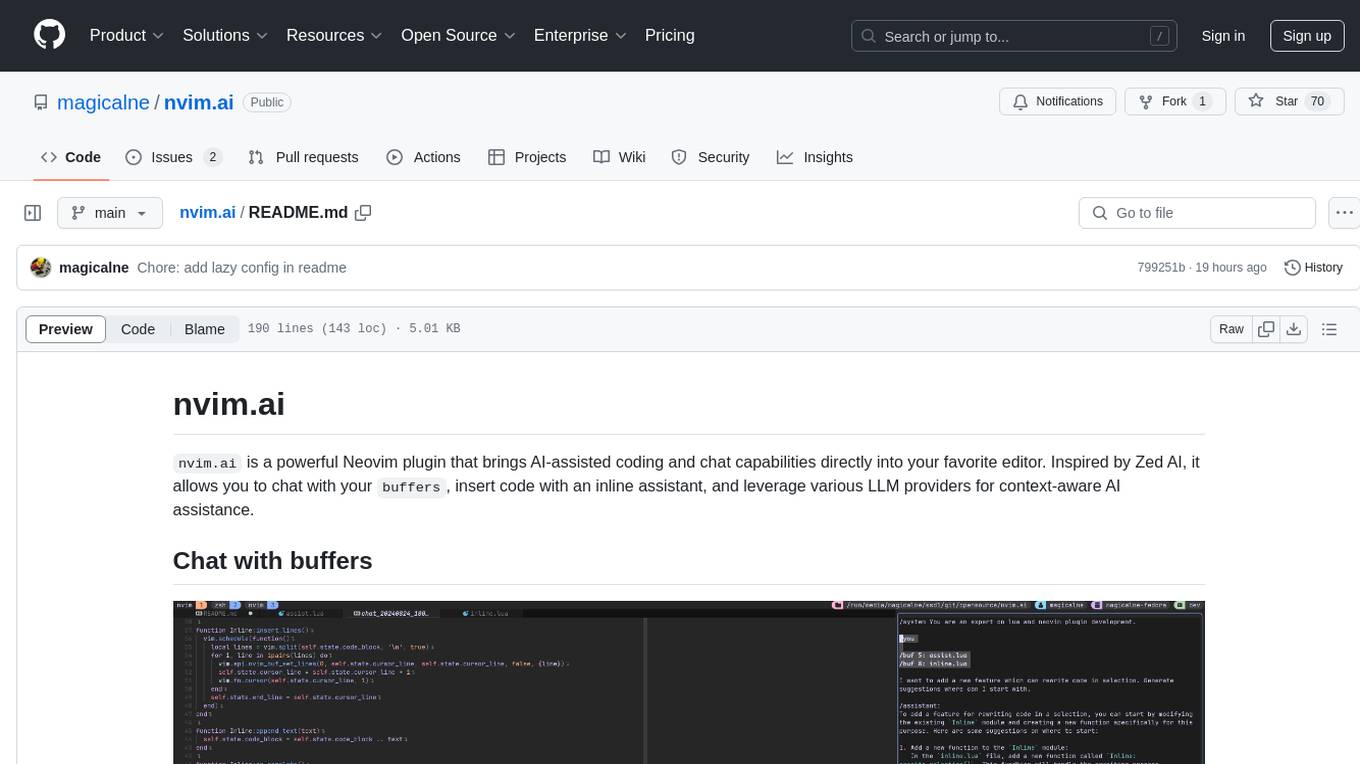

nvim.ai

nvim.ai is a powerful Neovim plugin that enables AI-assisted coding and chat capabilities within the editor. Users can chat with buffers, insert code with an inline assistant, and utilize various LLM providers for context-aware AI assistance. The plugin supports features like interacting with AI about code and documents, receiving relevant help based on current work, code insertion, code rewriting (Work in Progress), and integration with multiple LLM providers. Users can configure the plugin, add API keys to dotfiles, and integrate with nvim-cmp for command autocompletion. Keymaps are available for chat and inline assist functionalities. The chat dialog allows parsing content with keywords and supports roles like /system, /you, and /assistant. Context-aware assistance can be accessed through inline assist by inserting code blocks anywhere in the file.

instructor

Instructor is a Python library that makes it a breeze to work with structured outputs from large language models (LLMs). Built on top of Pydantic, it provides a simple, transparent, and user-friendly API to manage validation, retries, and streaming responses. Get ready to supercharge your LLM workflows!

swarmzero

SwarmZero SDK is a library that simplifies the creation and execution of AI Agents and Swarms of Agents. It supports various LLM Providers such as OpenAI, Azure OpenAI, Anthropic, MistralAI, Gemini, Nebius, and Ollama. Users can easily install the library using pip or poetry, set up the environment and configuration, create and run Agents, collaborate with Swarms, add tools for complex tasks, and utilize retriever tools for semantic information retrieval. Sample prompts are provided to help users explore the capabilities of the agents and swarms. The SDK also includes detailed examples and documentation for reference.

promptic

Promptic is a tool designed for LLM app development, providing a productive and pythonic way to build LLM applications. It leverages LiteLLM, allowing flexibility to switch LLM providers easily. Promptic focuses on building features by providing type-safe structured outputs, easy-to-build agents, streaming support, automatic prompt caching, and built-in conversation memory.

For similar tasks

SirChatalot

A Telegram bot that proves you don't need a body to have a personality. It can use various text and image generation APIs to generate responses to user messages.

For text generation, the bot can use:

* OpenAI's ChatGPT API (or another compatible API). Vision capabilities can be used with GPT-4 models, and tools are available through function calling.
* Anthropic's Claude API. Vision capabilities can be used with Claude 3 models, and tools are available through tool use.
* YandexGPT API

The bot can also generate images with:

* OpenAI's DALL-E
* Stability AI
* Yandex ART

The bot can also respond to voice messages: it converts the voice message to text and then generates a response. Speech recognition can be done using OpenAI's Whisper model; this feature requires the ffmpeg library. The bot also supports working with files (see the Files section for more details). If function calling is enabled, the bot can generate images and search the web (limited).

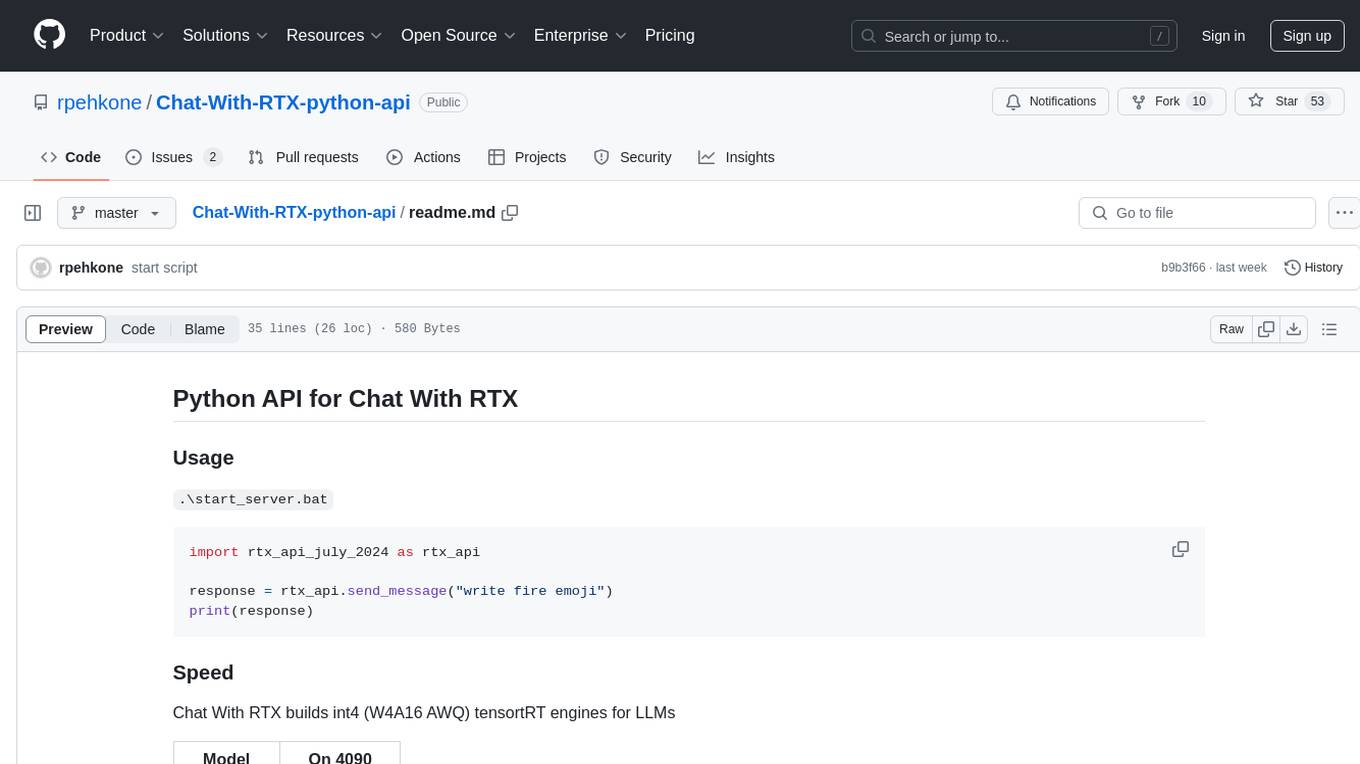

Chat-With-RTX-python-api

This repository contains a Python API for Chat With RTX, which allows users to interact with RTX models for natural language processing. The API provides functionality to send messages and receive responses from various LLM models. It also includes information on the speed of different models supported by Chat With RTX. The repository has a history of updates, including the removal of a feature and the addition of a new model for speech-to-text conversion. The repository is licensed under CC0.

LLMVoX

LLMVoX is a lightweight 30M-parameter, LLM-agnostic, autoregressive streaming Text-to-Speech (TTS) system designed to convert text outputs from Large Language Models into high-fidelity streaming speech with low latency. It achieves significantly lower Word Error Rate compared to speech-enabled LLMs while operating at comparable latency and speech quality. Key features include being lightweight & fast with only 30M parameters, LLM-agnostic for easy integration with existing models, multi-queue streaming for continuous speech generation, and multilingual support for easy adaptation to new languages.


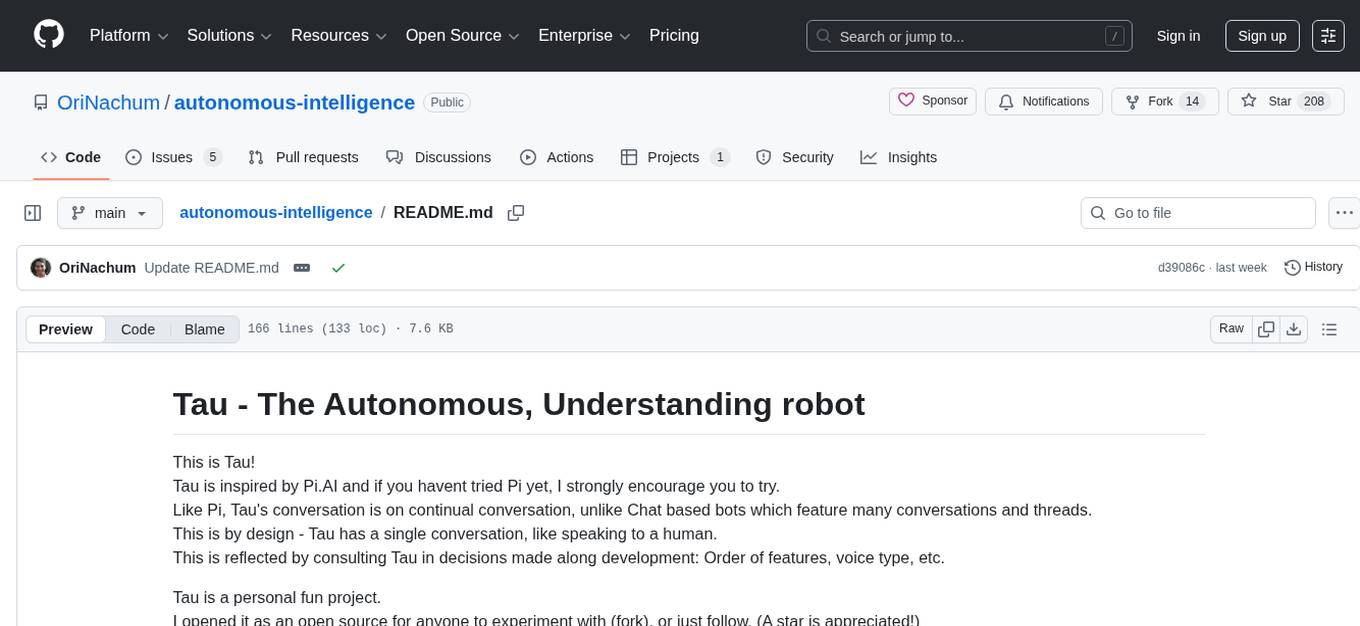

autonomous-intelligence

Tau is an autonomous robot project inspired by Pi.AI, designed for continual conversation with a single context. It features speech-based interaction, memory management, and integration with vision services. The project aims to create a local AI companion with personality, suitable for experimentation and development. Key components include long and immediate memory, speech-to-text and text-to-speech capabilities, and integration with Nvidia Jetson and Hailo vision services. Tau is open-source and encourages community contributions and experimentation.

wunjo.wladradchenko.ru

Wunjo AI is a comprehensive tool that empowers users to explore the realm of speech synthesis, deepfake animations, video-to-video transformations, and more. Its user-friendly interface and privacy-first approach make it accessible to both beginners and professionals alike. With Wunjo AI, you can effortlessly convert text into human-like speech, clone voices from audio files, create multi-dialogues with distinct voice profiles, and perform real-time speech recognition. Additionally, you can animate faces using just one photo combined with audio, swap faces in videos, GIFs, and photos, and even remove unwanted objects or enhance the quality of your deepfakes using the AI Retouch Tool. Wunjo AI is an all-in-one solution for your voice and visual AI needs, offering endless possibilities for creativity and expression.

airunner

AI Runner is a multi-modal AI interface that allows users to run open-source large language models and AI image generators on their own hardware. The tool provides features such as voice-based chatbot conversations, text-to-speech, speech-to-text, vision-to-text, text generation with large language models, image generation capabilities, image manipulation tools, utility functions, and more. It aims to provide a stable and user-friendly experience with security updates, a new UI, and a streamlined installation process. The application is designed to run offline on users' hardware without relying on a web server, offering a smooth and responsive user experience.

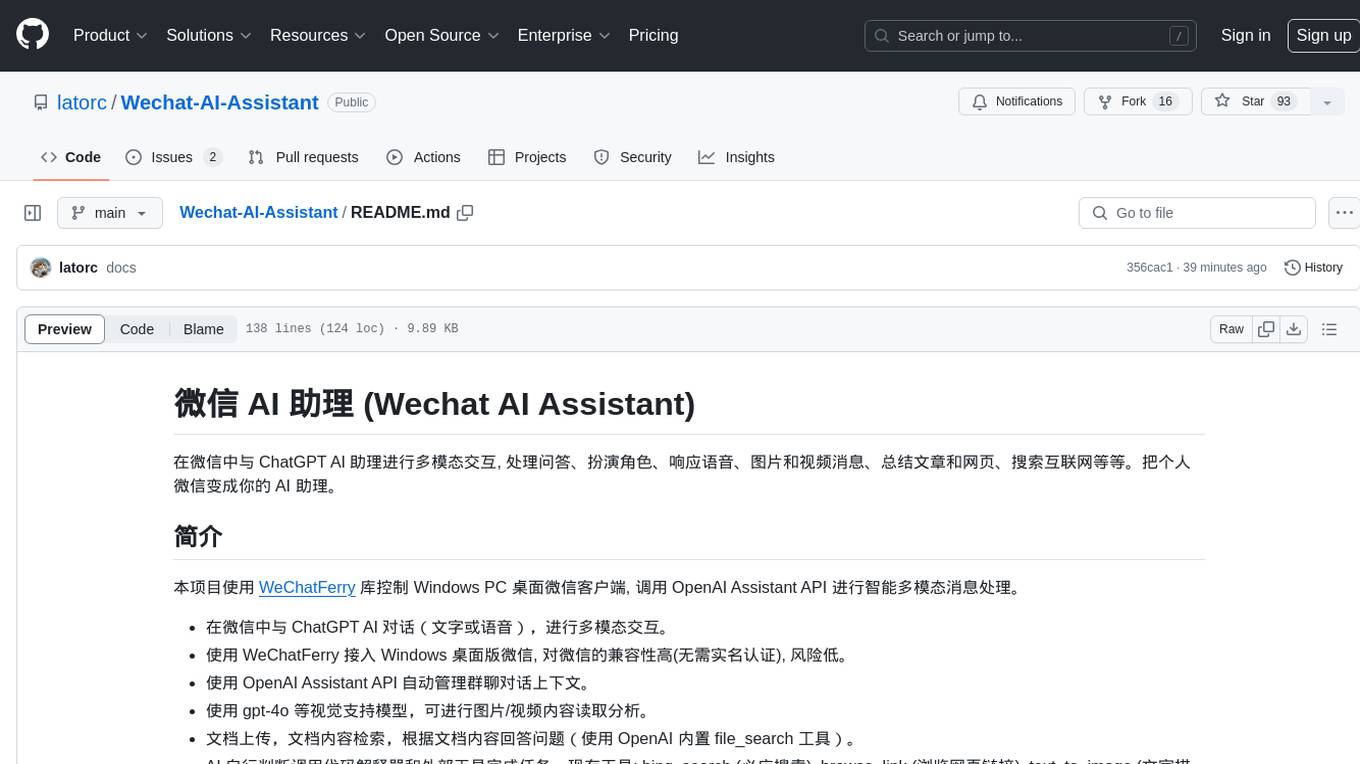

Wechat-AI-Assistant

Wechat AI Assistant is a project that enables multi-modal interaction with ChatGPT AI assistant within WeChat. It allows users to engage in conversations, role-playing, respond to voice messages, analyze images and videos, summarize articles and web links, and search the internet. The project utilizes the WeChatFerry library to control the Windows PC desktop WeChat client and leverages the OpenAI Assistant API for intelligent multi-modal message processing. Users can interact with ChatGPT AI in WeChat through text or voice, access various tools like bing_search, browse_link, image_to_text, text_to_image, text_to_speech, video_analysis, and more. The AI autonomously determines which code interpreter and external tools to use to complete tasks. Future developments include file uploads for AI to reference content, integration with other APIs, and login support for enterprise WeChat and WeChat official accounts.

For similar jobs

promptflow

**Prompt flow** is a suite of development tools designed to streamline the end-to-end development cycle of LLM-based AI applications, from ideation, prototyping, testing, evaluation to production deployment and monitoring. It makes prompt engineering much easier and enables you to build LLM apps with production quality.

deepeval

DeepEval is a simple-to-use, open-source LLM evaluation framework specialized for unit testing LLM outputs. It incorporates various metrics such as G-Eval, hallucination, answer relevancy, RAGAS, etc., and runs locally on your machine for evaluation. It provides a wide range of ready-to-use evaluation metrics, allows for creating custom metrics, integrates with any CI/CD environment, and enables benchmarking LLMs on popular benchmarks. DeepEval is designed for evaluating RAG and fine-tuning applications, helping users optimize hyperparameters, prevent prompt drifting, and transition from OpenAI to hosting their own Llama2 with confidence.

MegaDetector

MegaDetector is an AI model that identifies animals, people, and vehicles in camera trap images (which also makes it useful for eliminating blank images). This model is trained on several million images from a variety of ecosystems. MegaDetector is just one of many tools that aim to make conservation biologists more efficient with AI. If you want to learn about other ways to use AI to accelerate camera trap workflows, check out our survey of the field, affectionately titled "Everything I know about machine learning and camera traps".

leapfrogai

LeapfrogAI is a self-hosted AI platform designed to be deployed in air-gapped resource-constrained environments. It brings sophisticated AI solutions to these environments by hosting all the necessary components of an AI stack, including vector databases, model backends, API, and UI. LeapfrogAI's API closely matches that of OpenAI, allowing tools built for OpenAI/ChatGPT to function seamlessly with a LeapfrogAI backend. It provides several backends for various use cases, including llama-cpp-python, whisper, text-embeddings, and vllm. LeapfrogAI leverages Chainguard's apko to harden base python images, ensuring the latest supported Python versions are used by the other components of the stack. The LeapfrogAI SDK provides a standard set of protobuffs and python utilities for implementing backends and gRPC. LeapfrogAI offers UI options for common use-cases like chat, summarization, and transcription. It can be deployed and run locally via UDS and Kubernetes, built out using Zarf packages. LeapfrogAI is supported by a community of users and contributors, including Defense Unicorns, Beast Code, Chainguard, Exovera, Hypergiant, Pulze, SOSi, United States Navy, United States Air Force, and United States Space Force.

llava-docker

This Docker image for LLaVA (Large Language and Vision Assistant) provides a convenient way to run LLaVA locally or on RunPod. LLaVA is a powerful AI tool that combines natural language processing and computer vision capabilities. With this Docker image, you can easily access LLaVA's functionalities for various tasks, including image captioning, visual question answering, text summarization, and more. The image comes pre-installed with LLaVA v1.2.0, Torch 2.1.2, xformers 0.0.23.post1, and other necessary dependencies. You can customize the model used by setting the MODEL environment variable. The image also includes a Jupyter Lab environment for interactive development and exploration. Overall, this Docker image offers a comprehensive and user-friendly platform for leveraging LLaVA's capabilities.

carrot

The 'carrot' repository on GitHub provides a list of free and user-friendly ChatGPT mirror sites for easy access. The repository includes sponsored sites offering various GPT models and services. Users can find and share sites, report errors, and access stable and recommended sites for ChatGPT usage. The repository also includes a detailed list of ChatGPT sites, their features, and accessibility options, making it a valuable resource for ChatGPT users seeking free and unlimited GPT services.

TrustLLM

TrustLLM is a comprehensive study of trustworthiness in LLMs, including principles for different dimensions of trustworthiness, an established benchmark, evaluation and analysis of trustworthiness for mainstream LLMs, and discussion of open challenges and future directions. Specifically, we first propose a set of principles for trustworthy LLMs that span eight different dimensions. Based on these principles, we further establish a benchmark across six dimensions including truthfulness, safety, fairness, robustness, privacy, and machine ethics. We then present a study evaluating 16 mainstream LLMs in TrustLLM, consisting of over 30 datasets. The document explains how to use the trustllm Python package to help you assess the trustworthiness of your LLM more quickly. For more details about TrustLLM, please refer to the project website.

AI-YinMei

AI-YinMei is an AI virtual anchor Vtuber development tool (N card version). It supports fastgpt knowledge base chat dialogue, a complete set of solutions for LLM large language models: [fastgpt] + [one-api] + [Xinference], supports docking bilibili live broadcast barrage reply and entering live broadcast welcome speech, supports Microsoft edge-tts speech synthesis, supports Bert-VITS2 speech synthesis, supports GPT-SoVITS speech synthesis, supports expression control Vtuber Studio, supports painting stable-diffusion-webui output OBS live broadcast room, supports painting picture pornography public-NSFW-y-distinguish, supports search and image search service duckduckgo (requires magic Internet access), supports image search service Baidu image search (no magic Internet access), supports AI reply chat box [html plug-in], supports AI singing Auto-Convert-Music, supports playlist [html plug-in], supports dancing function, supports expression video playback, supports head touching action, supports gift smashing action, supports singing automatic start dancing function, chat and singing automatic cycle swing action, supports multi scene switching, background music switching, day and night automatic switching scene, supports open singing and painting, let AI automatically judge the content.