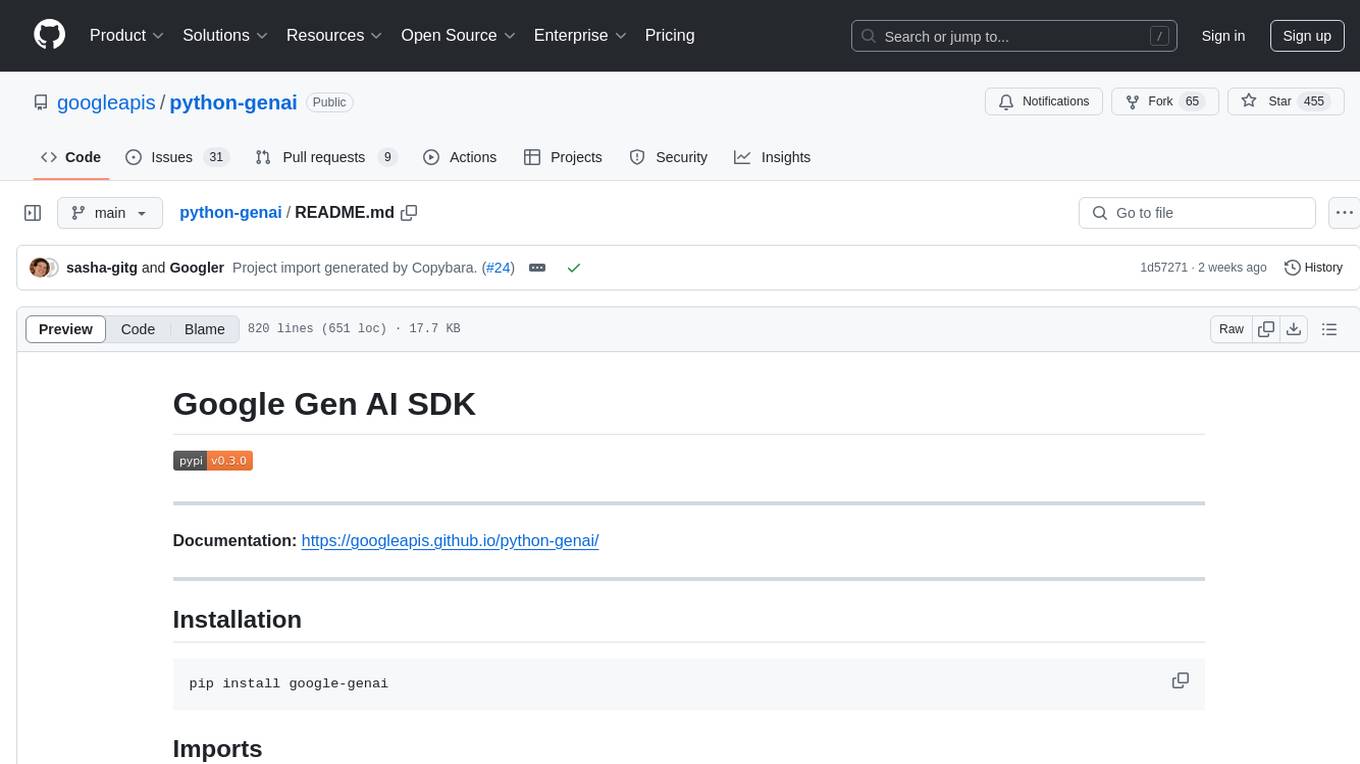

python-genai

Google Gen AI Python SDK provides an interface for developers to integrate Google's generative models into their Python applications.


The Google Gen AI SDK is a Python library that provides access to Google AI and Vertex AI services. With it you can create clients for either service, work with parameter types and models, generate content, call functions automatically or through manual declarations, constrain responses with JSON schemas, stream text and image content, run async operations, count and compute tokens, embed content, generate, upscale, and edit images, work with files, create and get cached content, tune and distill models, and run batch predictions.

README:

Documentation: https://googleapis.github.io/python-genai/

Google Gen AI Python SDK provides an interface for developers to integrate Google's generative models into their Python applications. It supports the Gemini Developer API and Vertex AI APIs.

Generative models are often unaware of recent API and SDK updates and may suggest outdated or legacy code.

We recommend using our Code Generation instructions codegen_instructions.md when generating Google Gen AI SDK code to guide your model towards using the more recent SDK features. Copy and paste the instructions into your development environment to provide the model with the necessary context.

pip install google-genai

With uv:

uv pip install google-genai

from google import genai

from google.genai import types

Please run one of the following code blocks to create a client for different services (Gemini Developer API or Vertex AI).

from google import genai

# Only run this block for Gemini Developer API

client = genai.Client(api_key='GEMINI_API_KEY')

from google import genai

# Only run this block for Vertex AI API

client = genai.Client(

vertexai=True, project='your-project-id', location='us-central1'

)

All API methods support Pydantic types and dictionaries, which you can access from google.genai.types. You can import the types module with the following:

from google.genai import types

Below is an example generate_content() call using types from the types module:

response = client.models.generate_content(

model='gemini-2.5-flash',

contents=types.Part.from_text(text='Why is the sky blue?'),

config=types.GenerateContentConfig(

temperature=0,

top_p=0.95,

top_k=20,

),

)

Alternatively, you can accomplish the same request using dictionaries instead of types:

response = client.models.generate_content(

model='gemini-2.5-flash',

contents={'text': 'Why is the sky blue?'},

config={

'temperature': 0,

'top_p': 0.95,

'top_k': 20,

},

)

(Optional) Using environment variables:

You can create a client by configuring the necessary environment variables. Configuration setup instructions depend on whether you're using the Gemini Developer API or the Gemini API in Vertex AI.

Gemini Developer API: Set the GEMINI_API_KEY or GOOGLE_API_KEY.

It will automatically be picked up by the client. It's recommended that you

set only one of those variables, but if both are set, GOOGLE_API_KEY takes

precedence.
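The precedence rule can be sketched in a few lines of plain Python. This is only an illustration of the documented behavior, not the SDK's actual implementation; resolve_api_key is a hypothetical helper name.

```python
import os
from typing import Mapping, Optional


def resolve_api_key(env: Optional[Mapping[str, str]] = None) -> Optional[str]:
    """Hypothetical helper: return the key the documented precedence would select."""
    env = os.environ if env is None else env
    # GOOGLE_API_KEY takes precedence when both variables are set.
    return env.get('GOOGLE_API_KEY') or env.get('GEMINI_API_KEY')


both = {'GEMINI_API_KEY': 'gemini-key', 'GOOGLE_API_KEY': 'google-key'}
print(resolve_api_key(both))
```

Passing a plain dictionary instead of the real environment makes the rule easy to check without touching your shell configuration.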

export GEMINI_API_KEY='your-api-key'

Gemini API on Vertex AI: Set GOOGLE_GENAI_USE_VERTEXAI, GOOGLE_CLOUD_PROJECT and GOOGLE_CLOUD_LOCATION, as shown below:

export GOOGLE_GENAI_USE_VERTEXAI=true

export GOOGLE_CLOUD_PROJECT='your-project-id'

export GOOGLE_CLOUD_LOCATION='us-central1'

from google import genai

client = genai.Client()

Explicitly close the sync client to ensure that resources, such as the underlying HTTP connections, are properly cleaned up and closed.

from google.genai import Client

client = Client()

response_1 = client.models.generate_content(

model=MODEL_ID,

contents='Hello',

)

response_2 = client.models.generate_content(

model=MODEL_ID,

contents='Ask a question',

)

# Close the sync client to release resources.

client.close()

To explicitly close the async client:

from google.genai import Client

aclient = Client(

vertexai=True, project='my-project-id', location='us-central1'

).aio

response_1 = await aclient.models.generate_content(

model=MODEL_ID,

contents='Hello',

)

response_2 = await aclient.models.generate_content(

model=MODEL_ID,

contents='Ask a question',

)

# Close the async client to release resources.

await aclient.aclose()

The sync client context manager closes the underlying sync client when the with block exits, which avoids httpx "client has been closed" errors such as issues#1763.

from google.genai import Client

with Client() as client:

    response_1 = client.models.generate_content(

        model=MODEL_ID,

        contents='Hello',

    )

    response_2 = client.models.generate_content(

        model=MODEL_ID,

        contents='Ask a question',

    )

The async client context manager closes the underlying async client when the async with block exits.

from google.genai import Client

async with Client().aio as aclient:

    response_1 = await aclient.models.generate_content(

        model=MODEL_ID,

        contents='Hello',

    )

    response_2 = await aclient.models.generate_content(

        model=MODEL_ID,

        contents='Ask a question',

    )

By default, the SDK uses the beta API endpoints provided by Google to support preview features in the APIs. The stable API endpoints can be selected by setting the API version to v1.

To set the API version use http_options. For example, to set the API version

to v1 for Vertex AI:

from google import genai

from google.genai import types

client = genai.Client(

vertexai=True,

project='your-project-id',

location='us-central1',

http_options=types.HttpOptions(api_version='v1')

)

To set the API version to v1alpha for the Gemini Developer API:

from google import genai

from google.genai import types

client = genai.Client(

api_key='GEMINI_API_KEY',

http_options=types.HttpOptions(api_version='v1alpha')

)

By default, the SDK uses httpx for both the sync and async client implementations. For faster performance, you may install google-genai[aiohttp]. The Gen AI SDK configures trust_env=True to match the default behavior of httpx. Additional arguments of aiohttp.ClientSession.request() (see _RequestOptions args) can be passed as follows:

http_options = types.HttpOptions(

async_client_args={'cookies': ..., 'ssl': ...},

)

client=Client(..., http_options=http_options)

Both the httpx and aiohttp libraries use urllib.request.getproxies to read proxy settings from environment variables. Before client initialization, you may set a proxy (and optionally SSL_CERT_FILE) through the following environment variables:

export HTTPS_PROXY='http://username:password@proxy_uri:port'

export SSL_CERT_FILE='client.pem'

If you need a socks5 proxy, httpx supports it when you pass the proxy via args to httpx.Client(). Install httpx[socks] to use it, then pass the proxy as follows:

http_options = types.HttpOptions(

client_args={'proxy': 'socks5://user:pass@host:port'},

async_client_args={'proxy': 'socks5://user:pass@host:port'},

)

client=Client(..., http_options=http_options)

In some cases you might need a custom base URL (for example, an API gateway proxy server) and need to bypass some authentication checks for project, location, or API key. You may pass the custom base URL like this:

base_url = 'https://test-api-gateway-proxy.com'

client = Client(

vertexai=True, # Currently only vertexai=True is supported

http_options={

'base_url': base_url,

'headers': {'Authorization': 'Bearer test_token'},

},

)

Parameter types can be specified as either dictionaries (TypedDict) or Pydantic Models.

Pydantic model types are available in the types module.

The client.models module exposes model inferencing and model getters.

See the 'Create a client' section above to initialize a client.

response = client.models.generate_content(

model='gemini-2.5-flash', contents='Why is the sky blue?'

)

print(response.text)

from google.genai import types

response = client.models.generate_content(

model='gemini-2.5-flash-image',

contents='A cartoon infographic for flying sneakers',

config=types.GenerateContentConfig(

response_modalities=["IMAGE"],

image_config=types.ImageConfig(

aspect_ratio="9:16",

),

),

)

for part in response.parts:

    if part.inline_data:

        generated_image = part.as_image()

        generated_image.show()

Download the file in console.

!wget -q https://storage.googleapis.com/generativeai-downloads/data/a11.txt

Python code.

file = client.files.upload(file='a11.txt')

response = client.models.generate_content(

model='gemini-2.5-flash',

contents=['Could you summarize this file?', file]

)

print(response.text)

The SDK always converts the inputs to the contents argument into list[types.Content].

The following shows some common ways to provide your inputs.

This is the canonical way to provide contents; the SDK will not do any conversion.

from google.genai import types

contents = types.Content(

role='user',

parts=[types.Part.from_text(text='Why is the sky blue?')]

)

The SDK converts this to:

[

types.Content(

role='user',

parts=[types.Part.from_text(text='Why is the sky blue?')]

)

]

contents='Why is the sky blue?'

The SDK will assume this is a text part, and it converts this into the following:

[

types.UserContent(

parts=[

types.Part.from_text(text='Why is the sky blue?')

]

)

]

Here types.UserContent is a subclass of types.Content that sets the role field to user.

contents=['Why is the sky blue?', 'Why is the cloud white?']

The SDK assumes these are 2 text parts and converts them into a single content, like the following:

[

types.UserContent(

parts=[

types.Part.from_text(text='Why is the sky blue?'),

types.Part.from_text(text='Why is the cloud white?'),

]

)

]

Here types.UserContent is a subclass of types.Content whose role field is fixed to user.

from google.genai import types

contents = types.Part.from_function_call(

name='get_weather_by_location',

args={'location': 'Boston'}

)

The SDK converts a function call part to a content with a model role:

[

types.ModelContent(

parts=[

types.Part.from_function_call(

name='get_weather_by_location',

args={'location': 'Boston'}

)

]

)

]

Here types.ModelContent is a subclass of types.Content whose role field is fixed to model.

from google.genai import types

contents = [

types.Part.from_function_call(

name='get_weather_by_location',

args={'location': 'Boston'}

),

types.Part.from_function_call(

name='get_weather_by_location',

args={'location': 'New York'}

),

]

The SDK converts a list of function call parts to a content with a model role:

[

types.ModelContent(

parts=[

types.Part.from_function_call(

name='get_weather_by_location',

args={'location': 'Boston'}

),

types.Part.from_function_call(

name='get_weather_by_location',

args={'location': 'New York'}

)

]

)

]

Here types.ModelContent is a subclass of types.Content whose role field is fixed to model.

from google.genai import types

contents = types.Part.from_uri(

file_uri='gs://generativeai-downloads/images/scones.jpg',

mime_type='image/jpeg',

)

The SDK converts all non function call parts into a content with a user role.

[

types.UserContent(parts=[

types.Part.from_uri(

file_uri='gs://generativeai-downloads/images/scones.jpg',

mime_type='image/jpeg',

)

])

]

from google.genai import types

contents = [

types.Part.from_text(text='What is this image about?'),

types.Part.from_uri(

file_uri='gs://generativeai-downloads/images/scones.jpg',

mime_type='image/jpeg',

)

]

The SDK will convert the list of parts into a content with a user role:

[

types.UserContent(

parts=[

types.Part.from_text(text='What is this image about?'),

types.Part.from_uri(

file_uri='gs://generativeai-downloads/images/scones.jpg',

mime_type='image/jpeg',

)

]

)

]

You can also provide a list of types.ContentUnion. The SDK leaves items of types.Content as is, groups consecutive non function call parts into a single types.UserContent, and groups consecutive function call parts into a single types.ModelContent.

If you put a list within a list, the inner list can only contain

types.PartUnion items. The SDK will convert the inner list into a single

types.UserContent.
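The grouping rule for mixed lists can be modeled with stand-in classes. This is a minimal sketch of the behavior described here, not the SDK's code: Part, Content, and normalize are hypothetical simplified stand-ins for the real types, and nested inner lists are not handled.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List, Optional, Union


@dataclass
class Part:
    """Simplified stand-in for types.Part."""
    text: Optional[str] = None
    function_call: Optional[Dict[str, Any]] = None


@dataclass
class Content:
    """Simplified stand-in for types.Content."""
    role: str
    parts: List[Part] = field(default_factory=list)


def normalize(items: List[Union[str, Part, Content]]) -> List[Content]:
    """Group consecutive parts by role; pass Content items through unchanged."""
    result: List[Content] = []
    open_group: Optional[Content] = None
    for item in items:
        if isinstance(item, Content):
            result.append(item)
            open_group = None  # an explicit Content ends the current group
            continue
        part = Part(text=item) if isinstance(item, str) else item
        # Function call parts belong to the model; everything else to the user.
        role = 'model' if part.function_call is not None else 'user'
        if open_group is None or open_group.role != role:
            open_group = Content(role=role)
            result.append(open_group)
        open_group.parts.append(part)
    return result


mixed = [
    'Why is the sky blue?',
    'Why is the cloud white?',
    Part(function_call={'name': 'get_weather_by_location'}),
    Content(role='user', parts=[Part(text='Thanks')]),
]
for content in normalize(mixed):
    print(content.role, len(content.parts))
```

The two strings end up in one user content, the function call part in a model content, and the explicit Content item is kept as its own entry.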

The output of the model can be influenced by several optional settings available in generate_content's config parameter. For example, increasing max_output_tokens is essential for longer model responses. To make a model more deterministic, lower the temperature parameter to reduce randomness; values near 0 minimize variability. Capabilities and parameter defaults for each model are listed in the Vertex AI docs and Gemini API docs respectively.

from google.genai import types

response = client.models.generate_content(

model='gemini-2.5-flash',

contents='high',

config=types.GenerateContentConfig(

system_instruction='I say high, you say low',

max_output_tokens=3,

temperature=0.3,

),

)

print(response.text)

To retrieve tuned models, see list tuned models.

for model in client.models.list():

    print(model)

pager = client.models.list(config={'page_size': 10})

print(pager.page_size)

print(pager[0])

pager.next_page()

print(pager[0])

async for job in await client.aio.models.list():

    print(job)

async_pager = await client.aio.models.list(config={'page_size': 10})

print(async_pager.page_size)

print(async_pager[0])

await async_pager.next_page()

print(async_pager[0])

from google.genai import types

response = client.models.generate_content(

model='gemini-2.5-flash',

contents='Say something bad.',

config=types.GenerateContentConfig(

safety_settings=[

types.SafetySetting(

category='HARM_CATEGORY_HATE_SPEECH',

threshold='BLOCK_ONLY_HIGH',

)

]

),

)

print(response.text)

You can pass a Python function directly as a tool; by default the SDK calls it automatically and sends the result back to the model.

from google.genai import types

def get_current_weather(location: str) -> str:

    """Returns the current weather.

    Args:

        location: The city and state, e.g. San Francisco, CA

    """

    return 'sunny'

response = client.models.generate_content(

model='gemini-2.5-flash',

contents='What is the weather like in Boston?',

config=types.GenerateContentConfig(tools=[get_current_weather]),

)

print(response.text)

If you pass in a Python function as a tool directly, and do not want automatic function calling, you can disable automatic function calling as follows:

from google.genai import types

response = client.models.generate_content(

model='gemini-2.5-flash',

contents='What is the weather like in Boston?',

config=types.GenerateContentConfig(

tools=[get_current_weather],

automatic_function_calling=types.AutomaticFunctionCallingConfig(

disable=True

),

),

)

With automatic function calling disabled, you will get a list of function call parts in the response:

function_calls: Optional[List[types.FunctionCall]] = response.function_calls

If you don't want to use the automatic function support, you can manually declare the function and invoke it.
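When you handle function calls yourself, your code has to route each returned call to a local function. One way to do that is a small name-to-callable registry. The sketch below is an assumption-laden illustration: TOOL_REGISTRY and dispatch are hypothetical names, and the stand-in call object only mimics the name and args attributes of a function call.

```python
from types import SimpleNamespace
from typing import Any, Callable, Dict


def get_current_weather(location: str) -> str:
    """Stand-in tool; a real implementation would query a weather service."""
    return 'sunny'


# Hypothetical registry mapping tool names to local callables.
TOOL_REGISTRY: Dict[str, Callable[..., Any]] = {
    'get_current_weather': get_current_weather,
}


def dispatch(function_call: Any) -> Dict[str, Any]:
    """Run the local function named by a function call and wrap the outcome."""
    func = TOOL_REGISTRY.get(function_call.name)
    if func is None:
        return {'error': f'unknown function: {function_call.name}'}
    try:
        return {'result': func(**(function_call.args or {}))}
    except Exception as e:  # report the failure to the model instead of raising
        return {'error': str(e)}


# Stand-in for one entry of response.function_calls.
fake_call = SimpleNamespace(name='get_current_weather', args={'location': 'Boston'})
print(dispatch(fake_call))
```

The returned dictionary has the same result/error shape that the manual function response example in this section passes back to the model.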

The following example shows how to declare a function and pass it as a tool. Then you will receive a function call part in the response.

from google.genai import types

function = types.FunctionDeclaration(

name='get_current_weather',

description='Get the current weather in a given location',

parameters_json_schema={

'type': 'object',

'properties': {

'location': {

'type': 'string',

'description': 'The city and state, e.g. San Francisco, CA',

}

},

'required': ['location'],

},

)

tool = types.Tool(function_declarations=[function])

response = client.models.generate_content(

model='gemini-2.5-flash',

contents='What is the weather like in Boston?',

config=types.GenerateContentConfig(tools=[tool]),

)

print(response.function_calls[0])

After you receive the function call part from the model, you can invoke the function, get the function response, and pass that response back to the model. The following example shows how to do it for a simple function invocation.

from google.genai import types

user_prompt_content = types.Content(

role='user',

parts=[types.Part.from_text(text='What is the weather like in Boston?')],

)

function_call_part = response.function_calls[0]

function_call_content = response.candidates[0].content

try:

    # response.function_calls holds types.FunctionCall objects, so args is read directly.

    function_result = get_current_weather(**function_call_part.args)

    function_response = {'result': function_result}

except Exception as e:  # instead of raising the exception, you can let the model handle it

    function_response = {'error': str(e)}

function_response_part = types.Part.from_function_response(

name=function_call_part.name,

response=function_response,

)

function_response_content = types.Content(

role='tool', parts=[function_response_part]

)

response = client.models.generate_content(

model='gemini-2.5-flash',

contents=[

user_prompt_content,

function_call_content,

function_response_content,

],

config=types.GenerateContentConfig(

tools=[tool],

),

)

print(response.text)

If you configure function calling mode to be ANY, then the model will always return function call parts. If you also pass a Python function as a tool, by default the SDK will perform automatic function calling until the number of remote calls exceeds the maximum remote calls for automatic function calling (10 by default).

If you'd like to disable automatic function calling in ANY mode:

from google.genai import types

def get_current_weather(location: str) -> str:

    """Returns the current weather.

    Args:

        location: The city and state, e.g. San Francisco, CA

    """

    return "sunny"

response = client.models.generate_content(

model="gemini-2.5-flash",

contents="What is the weather like in Boston?",

config=types.GenerateContentConfig(

tools=[get_current_weather],

automatic_function_calling=types.AutomaticFunctionCallingConfig(

disable=True

),

tool_config=types.ToolConfig(

function_calling_config=types.FunctionCallingConfig(mode='ANY')

),

),

)

If you'd like to allow x automatic function call turns, configure the maximum remote calls to be x + 1.
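The x + 1 rule is easy to get off by one, so it can help to keep the arithmetic in one place. The helper below is a hypothetical sketch (afc_config_for_turns is not part of the SDK) that builds the dictionary form of the config, assuming the dictionary keys mirror the automatic_function_calling and maximum_remote_calls field names used in this section.

```python
from typing import Any, Dict


def afc_config_for_turns(turns: int) -> Dict[str, Any]:
    """Hypothetical helper: config fragment allowing `turns` automatic turns."""
    if turns < 0:
        raise ValueError('turns must be non-negative')
    # Per the rule above, x turns need x + 1 remote calls.
    return {'automatic_function_calling': {'maximum_remote_calls': turns + 1}}


print(afc_config_for_turns(1))
```

The resulting fragment could be merged into the config dictionary passed to generate_content alongside tools and tool_config.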

For example, assuming you prefer 1 turn for automatic function calling:

from google.genai import types

def get_current_weather(location: str) -> str:

    """Returns the current weather.

    Args:

        location: The city and state, e.g. San Francisco, CA

    """

    return "sunny"

response = client.models.generate_content(

model="gemini-2.5-flash",

contents="What is the weather like in Boston?",

config=types.GenerateContentConfig(

tools=[get_current_weather],

automatic_function_calling=types.AutomaticFunctionCallingConfig(

maximum_remote_calls=2

),

tool_config=types.ToolConfig(

function_calling_config=types.FunctionCallingConfig(mode='ANY')

),

),

)

Built-in MCP support is an experimental feature. You can pass a local MCP server as a tool directly.

import os

import asyncio

from datetime import datetime

from mcp import ClientSession, StdioServerParameters

from mcp.client.stdio import stdio_client

from google import genai

client = genai.Client()

# Create server parameters for stdio connection

server_params = StdioServerParameters(

command="npx", # Executable

args=["-y", "@philschmid/weather-mcp"], # MCP Server

env=None, # Optional environment variables

)

async def run():

    async with stdio_client(server_params) as (read, write):

        async with ClientSession(read, write) as session:

            # Prompt to get the weather for the current day in London.

            prompt = f"What is the weather in London in {datetime.now().strftime('%Y-%m-%d')}?"

            # Initialize the connection between client and server

            await session.initialize()

            # Send request to the model with MCP function declarations

            response = await client.aio.models.generate_content(

                model="gemini-2.5-flash",

                contents=prompt,

                config=genai.types.GenerateContentConfig(

                    temperature=0,

                    tools=[session],  # uses the session, will automatically call the tool using automatic function calling

                ),

            )

            print(response.text)

# Start the asyncio event loop and run the main function

asyncio.run(run())

However you define your schema, don't duplicate it in your input prompt, including by giving examples of expected JSON output. If you do, the generated output might be lower in quality.

Schemas can be provided as standard JSON schema.

user_profile = {

'properties': {

'age': {

'anyOf': [

{'maximum': 20, 'minimum': 0, 'type': 'integer'},

{'type': 'null'},

],

'title': 'Age',

},

'username': {

'description': "User's unique name",

'title': 'Username',

'type': 'string',

},

},

'required': ['username', 'age'],

'title': 'User Schema',

'type': 'object',

}

response = client.models.generate_content(

model='gemini-2.5-flash',

contents='Give me a random user profile.',

config={

'response_mime_type': 'application/json',

'response_json_schema': user_profile

},

)

print(response.parsed)

Schemas can be provided as Pydantic Models.

from pydantic import BaseModel

from google.genai import types

class CountryInfo(BaseModel):

    name: str

    population: int

    capital: str

    continent: str

    gdp: int

    official_language: str

    total_area_sq_mi: int

response = client.models.generate_content(

model='gemini-2.5-flash',

contents='Give me information for the United States.',

config=types.GenerateContentConfig(

response_mime_type='application/json',

response_schema=CountryInfo,

),

)

print(response.text)

from google.genai import types

response = client.models.generate_content(

model='gemini-2.5-flash',

contents='Give me information for the United States.',

config=types.GenerateContentConfig(

response_mime_type='application/json',

response_schema={

'required': [

'name',

'population',

'capital',

'continent',

'gdp',

'official_language',

'total_area_sq_mi',

],

'properties': {

'name': {'type': 'STRING'},

'population': {'type': 'INTEGER'},

'capital': {'type': 'STRING'},

'continent': {'type': 'STRING'},

'gdp': {'type': 'INTEGER'},

'official_language': {'type': 'STRING'},

'total_area_sq_mi': {'type': 'INTEGER'},

},

'type': 'OBJECT',

},

),

)

print(response.text)

You can set response_mime_type to 'text/x.enum' to return one of the enum values as the response.

from enum import Enum

class InstrumentEnum(Enum):

    PERCUSSION = 'Percussion'

    STRING = 'String'

    WOODWIND = 'Woodwind'

    BRASS = 'Brass'

    KEYBOARD = 'Keyboard'

response = client.models.generate_content(

model='gemini-2.5-flash',

contents='What instrument plays multiple notes at once?',

config={

'response_mime_type': 'text/x.enum',

'response_schema': InstrumentEnum,

},

)

print(response.text)

You can also set response_mime_type to 'application/json'; the response will be identical but in quotes.
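To make the difference concrete, the sketch below maps response text from either MIME type back to an enum member. It runs on literal strings rather than real model output, and parse_instrument is a hypothetical helper, not an SDK function.

```python
import json
from enum import Enum


class InstrumentEnum(Enum):
    PERCUSSION = 'Percussion'
    STRING = 'String'
    WOODWIND = 'Woodwind'
    BRASS = 'Brass'
    KEYBOARD = 'Keyboard'


def parse_instrument(text: str, mime_type: str) -> InstrumentEnum:
    """Hypothetical helper: map response text back to an enum member."""
    # application/json wraps the value in quotes, so decode it first.
    value = json.loads(text) if mime_type == 'application/json' else text
    return InstrumentEnum(value)


print(parse_instrument('Keyboard', 'text/x.enum'))
print(parse_instrument('"Keyboard"', 'application/json'))
```

Both calls resolve to the same member; only the decoding step differs.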

from enum import Enum

class InstrumentEnum(Enum):

    PERCUSSION = 'Percussion'

    STRING = 'String'

    WOODWIND = 'Woodwind'

    BRASS = 'Brass'

    KEYBOARD = 'Keyboard'

response = client.models.generate_content(

model='gemini-2.5-flash',

contents='What instrument plays multiple notes at once?',

config={

'response_mime_type': 'application/json',

'response_schema': InstrumentEnum,

},

)

print(response.text)

Generate content in a streaming format so that the model's output streams back to you in chunks, rather than being returned as one response.
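If you also need the complete text once streaming finishes, you can accumulate the chunks as they arrive. The sketch below uses stand-in chunk objects so it runs without a client; collect_text is a hypothetical helper, and it assumes a chunk's text attribute may be empty or None for chunks that carry no text.

```python
from types import SimpleNamespace
from typing import Any, Iterable


def collect_text(chunks: Iterable[Any]) -> str:
    """Join the text of streamed chunks, skipping chunks without text."""
    return ''.join(chunk.text or '' for chunk in chunks)


# Stand-in chunks; real ones would come from client.models.generate_content_stream(...).
fake_stream = [
    SimpleNamespace(text='Once '),
    SimpleNamespace(text=None),
    SimpleNamespace(text='upon a time.'),
]
print(collect_text(fake_stream))
```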

for chunk in client.models.generate_content_stream(

    model='gemini-2.5-flash', contents='Tell me a story in 300 words.'

):

    print(chunk.text, end='')

If your image is stored in Google Cloud Storage, you can use the from_uri class method to create a Part object.

from google.genai import types

for chunk in client.models.generate_content_stream(

model='gemini-2.5-flash',

contents=[

'What is this image about?',

types.Part.from_uri(

file_uri='gs://generativeai-downloads/images/scones.jpg',

mime_type='image/jpeg',

),

],

):

print(chunk.text, end='')

If your image is stored in your local file system, you can read it in as bytes data and use the from_bytes class method to create a Part object.

from google.genai import types

YOUR_IMAGE_PATH = 'your_image_path'

YOUR_IMAGE_MIME_TYPE = 'your_image_mime_type'

with open(YOUR_IMAGE_PATH, 'rb') as f:

    image_bytes = f.read()

for chunk in client.models.generate_content_stream(

model='gemini-2.5-flash',

contents=[

'What is this image about?',

types.Part.from_bytes(data=image_bytes, mime_type=YOUR_IMAGE_MIME_TYPE),

],

):

print(chunk.text, end='')

client.aio exposes all the analogous async methods that are available on client. Note that it applies to all the modules. For example, client.aio.models.generate_content is the async version of client.models.generate_content.

response = await client.aio.models.generate_content(

model='gemini-2.5-flash', contents='Tell me a story in 300 words.'

)

print(response.text)

async for chunk in await client.aio.models.generate_content_stream(

    model='gemini-2.5-flash', contents='Tell me a story in 300 words.'

):

    print(chunk.text, end='')

response = client.models.count_tokens(

model='gemini-2.5-flash',

contents='why is the sky blue?',

)

print(response)

Compute tokens is only supported in Vertex AI.

response = client.models.compute_tokens(

model='gemini-2.5-flash',

contents='why is the sky blue?',

)

print(response)

response = await client.aio.models.count_tokens(

model='gemini-2.5-flash',

contents='why is the sky blue?',

)

print(response)

tokenizer = genai.LocalTokenizer(model_name='gemini-2.5-flash')

result = tokenizer.count_tokens("What is your name?")

tokenizer = genai.LocalTokenizer(model_name='gemini-2.5-flash')

result = tokenizer.compute_tokens("What is your name?")

response = client.models.embed_content(

model='gemini-embedding-001',

contents='why is the sky blue?',

)

print(response)

from google.genai import types

response = client.models.embed_content(

model='gemini-embedding-001',

contents=['why is the sky blue?', 'What is your age?'],

config=types.EmbedContentConfig(output_dimensionality=10),

)

print(response)

from google.genai import types

response1 = client.models.generate_images(

model='imagen-4.0-generate-001',

prompt='An umbrella in the foreground, and a rainy night sky in the background',

config=types.GenerateImagesConfig(

number_of_images=1,

include_rai_reason=True,

output_mime_type='image/jpeg',

),

)

response1.generated_images[0].image.show()

Upscale image is only supported in Vertex AI.

from google.genai import types

response2 = client.models.upscale_image(

model='imagen-4.0-upscale-preview',

image=response1.generated_images[0].image,

upscale_factor='x2',

config=types.UpscaleImageConfig(

include_rai_reason=True,

output_mime_type='image/jpeg',

),

)

response2.generated_images[0].image.show()

Edit image uses a separate model from generate and upscale.

Edit image is only supported in Vertex AI.

# Edit the generated image from above

from google.genai import types

from google.genai.types import RawReferenceImage, MaskReferenceImage

raw_ref_image = RawReferenceImage(

reference_id=1,

reference_image=response1.generated_images[0].image,

)

# Model computes a mask of the background

mask_ref_image = MaskReferenceImage(

reference_id=2,

config=types.MaskReferenceConfig(

mask_mode='MASK_MODE_BACKGROUND',

mask_dilation=0,

),

)

response3 = client.models.edit_image(

model='imagen-3.0-capability-001',

prompt='Sunlight and clear sky',

reference_images=[raw_ref_image, mask_ref_image],

config=types.EditImageConfig(

edit_mode='EDIT_MODE_INPAINT_INSERTION',

number_of_images=1,

include_rai_reason=True,

output_mime_type='image/jpeg',

),

)

response3.generated_images[0].image.show()

Support for generating videos is considered public preview.

import time

from google.genai import types

# Create operation

operation = client.models.generate_videos(

model='veo-3.1-generate-preview',

prompt='A neon hologram of a cat driving at top speed',

config=types.GenerateVideosConfig(

number_of_videos=1,

duration_seconds=5,

enhance_prompt=True,

),

)

# Poll operation

while not operation.done:

    time.sleep(20)

    operation = client.operations.get(operation)

video = operation.response.generated_videos[0].video

video.show()

import time

from google.genai import types

# Read local image (uses mimetypes.guess_type to infer mime type)

image = types.Image.from_file("local/path/file.png")

# Create operation

operation = client.models.generate_videos(

model='veo-3.1-generate-preview',

# Prompt is optional if image is provided

prompt='Night sky',

image=image,

config=types.GenerateVideosConfig(

number_of_videos=1,

duration_seconds=5,

enhance_prompt=True,

# Can also pass an Image into last_frame for frame interpolation

),

)

# Poll operation

while not operation.done:

    time.sleep(20)

    operation = client.operations.get(operation)

video = operation.response.generated_videos[0].video

video.show()

Currently, only the Gemini Developer API supports video extension on Veo 3.1 for previously generated videos. Vertex AI supports video extension on Veo 2.0.
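The polling loops in the video examples wait until the operation reports done, with no upper bound on the waiting time. A bounded variant might look like the sketch below. poll_until_done is a hypothetical helper, the 600 second default is an arbitrary assumption, and the demo uses stand-in objects instead of a real operation.

```python
import time
from types import SimpleNamespace
from typing import Any, Callable


def poll_until_done(
    operation: Any,
    refresh: Callable[[Any], Any],
    interval_s: float = 20.0,
    timeout_s: float = 600.0,
    sleep: Callable[[float], None] = time.sleep,
) -> Any:
    """Refresh `operation` until its `done` attribute is true or the timeout passes."""
    deadline = time.monotonic() + timeout_s
    while not operation.done:
        if time.monotonic() >= deadline:
            raise TimeoutError(f'operation not done after {timeout_s} seconds')
        sleep(interval_s)
        operation = refresh(operation)
    return operation


# Demo with a stand-in operation that completes on the third refresh.
refresh_calls = []


def fake_refresh(op: Any) -> Any:
    refresh_calls.append(op)
    return SimpleNamespace(done=len(refresh_calls) >= 3)


finished = poll_until_done(SimpleNamespace(done=False), fake_refresh, interval_s=0)
print(finished.done, len(refresh_calls))
```

With a real client, the call would be along the lines of operation = poll_until_done(operation, client.operations.get), reusing the refresh call from the examples in this section.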

import time

from google.genai import types

# Read local video (uses mimetypes.guess_type to infer mime type)

video = types.Video.from_file("local/path/video.mp4")

# Create operation

operation = client.models.generate_videos(

model='veo-3.1-generate-preview',

# Prompt is optional if Video is provided

prompt='Night sky',

# Input video must be in GCS for Vertex or a URI for Gemini

video=types.Video(

uri="gs://bucket-name/inputs/videos/cat_driving.mp4",

),

config=types.GenerateVideosConfig(

number_of_videos=1,

duration_seconds=5,

enhance_prompt=True,

),

)

# Poll operation

while not operation.done:

    time.sleep(20)

    operation = client.operations.get(operation)

video = operation.response.generated_videos[0].video

video.show()

Create a chat session to start a multi-turn conversation with the model. Then, call chat.send_message multiple times within the same chat session so that the model can draw on its previous responses (i.e., engage in an ongoing conversation). See the 'Create a client' section above to initialize a client.

chat = client.chats.create(model='gemini-2.5-flash')

response = chat.send_message('tell me a story')

print(response.text)

response = chat.send_message('summarize the story you told me in 1 sentence')

print(response.text)

chat = client.chats.create(model='gemini-2.5-flash')

for chunk in chat.send_message_stream('tell me a story'):

    print(chunk.text)

chat = client.aio.chats.create(model='gemini-2.5-flash')

response = await chat.send_message('tell me a story')

print(response.text)

chat = client.aio.chats.create(model='gemini-2.5-flash')

async for chunk in await chat.send_message_stream('tell me a story'):

    print(chunk.text)

Files are only supported in the Gemini Developer API. See the 'Create a client' section above to initialize a client.

!gcloud storage cp gs://cloud-samples-data/generative-ai/pdf/2312.11805v3.pdf .

!gcloud storage cp gs://cloud-samples-data/generative-ai/pdf/2403.05530.pdf .

file1 = client.files.upload(file='2312.11805v3.pdf')

file2 = client.files.upload(file='2403.05530.pdf')

print(file1)

print(file2)

file1 = client.files.upload(file='2312.11805v3.pdf')

file_info = client.files.get(name=file1.name)

file3 = client.files.upload(file='2312.11805v3.pdf')

client.files.delete(name=file3.name)

client.caches contains the control plane APIs for cached content. See the 'Create a client' section above to initialize a client.
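The cache creation example that follows passes ttl as a string of seconds with an 's' suffix ('3600s'). If your code tracks durations as timedelta values, a small conversion helper keeps that format in one place. ttl_string is a hypothetical helper, not part of the SDK.

```python
from datetime import timedelta


def ttl_string(duration: timedelta) -> str:
    """Hypothetical helper: format a duration as whole seconds plus an 's' suffix."""
    seconds = int(duration.total_seconds())
    if seconds <= 0:
        raise ValueError('ttl must be a positive duration')
    return f'{seconds}s'


print(ttl_string(timedelta(hours=1)))  # prints 3600s
```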

from google.genai import types

if client.vertexai:

    file_uris = [

        'gs://cloud-samples-data/generative-ai/pdf/2312.11805v3.pdf',

        'gs://cloud-samples-data/generative-ai/pdf/2403.05530.pdf',

    ]

else:

    file_uris = [file1.uri, file2.uri]

cached_content = client.caches.create(

model='gemini-2.5-flash',

config=types.CreateCachedContentConfig(

contents=[

types.Content(

role='user',

parts=[

types.Part.from_uri(

file_uri=file_uris[0], mime_type='application/pdf'

),

types.Part.from_uri(

file_uri=file_uris[1],

mime_type='application/pdf',

),

],

)

],

system_instruction='What is the sum of the two pdfs?',

display_name='test cache',

ttl='3600s',

),

)cached_content = client.caches.get(name=cached_content.name)from google.genai import types

response = client.models.generate_content(

model='gemini-2.5-flash',

contents='Summarize the pdfs',

config=types.GenerateContentConfig(

cached_content=cached_content.name,

),

)

print(response.text)Warning: The Interactions API is in Beta. This is a preview of an experimental feature. Features and schemas are subject to breaking changes.

The Interactions API is a unified interface for interacting with Gemini models and agents. It simplifies state management, tool orchestration, and long-running tasks.

See the documentation site for more details.

interaction = client.interactions.create(
    model='gemini-2.5-flash',
    input='Tell me a short joke about programming.'
)

print(interaction.outputs[-1].text)

The Interactions API supports server-side state management. You can continue a conversation by referencing the previous_interaction_id.

# 1. First turn
interaction1 = client.interactions.create(
    model='gemini-2.5-flash',
    input='Hi, my name is Amir.'
)

print(f"Model: {interaction1.outputs[-1].text}")

# 2. Second turn (passing previous_interaction_id)
interaction2 = client.interactions.create(
    model='gemini-2.5-flash',
    input='What is my name?',
    previous_interaction_id=interaction1.id
)

print(f"Model: {interaction2.outputs[-1].text}")

You can use specialized agents like deep-research-pro-preview-12-2025 for complex tasks.

import time

# 1. Start the Deep Research Agent
initial_interaction = client.interactions.create(
    input='Research the history of the Google TPUs with a focus on 2025 and 2026.',
    agent='deep-research-pro-preview-12-2025',
    background=True
)

print(f"Research started. Interaction ID: {initial_interaction.id}")

# 2. Poll for results
while True:
    interaction = client.interactions.get(id=initial_interaction.id)
    print(f"Status: {interaction.status}")

    if interaction.status == "completed":
        print("\nFinal Report:\n", interaction.outputs[-1].text)
        break
    elif interaction.status in ["failed", "cancelled"]:
        print(f"Failed with status: {interaction.status}")
        break

    time.sleep(10)

You can provide multimodal data (text, images, audio, etc.) in the input list.
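The example that follows expects a `base64_image` variable to exist already. A minimal sketch of one way to produce it, assuming the `data` field takes a base64-encoded string (as the variable name suggests); `encode_image_bytes` and `sample_bytes` are hypothetical names used only for illustration:

```python
import base64


def encode_image_bytes(image_bytes: bytes) -> str:
    """Return raw bytes as an ASCII base64 string that can sit in a JSON field."""
    return base64.b64encode(image_bytes).decode('ascii')


# Hypothetical stand-in for real image data, e.g. open('photo.png', 'rb').read()
sample_bytes = b'\x89PNG\r\n\x1a\n'

base64_image = encode_image_bytes(sample_bytes)
```

Decoding the string with `base64.b64decode` returns the original bytes, which is also how the image-generation example later in this section writes its output to disk.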

import base64

# Assuming you have an image loaded as bytes
# base64_image = ...

interaction = client.interactions.create(
    model='gemini-2.5-flash',
    input=[
        {'type': 'text', 'text': 'Describe the image.'},
        {'type': 'image', 'data': base64_image, 'mime_type': 'image/png'}
    ]
)

print(interaction.outputs[-1].text)

You can define custom functions for the model to use. The Interactions API handles the tool selection, and you provide the execution result back to the model.

# 1. Define the tool
def get_weather(location: str):
    """Gets the weather for a given location."""
    return f"The weather in {location} is sunny."

weather_tool = {
    'type': 'function',
    'name': 'get_weather',
    'description': 'Gets the weather for a given location.',
    'parameters': {
        'type': 'object',
        'properties': {
            'location': {'type': 'string', 'description': 'The city and state, e.g. San Francisco, CA'}
        },
        'required': ['location']
    }
}

# 2. Send the request with tools
interaction = client.interactions.create(
    model='gemini-2.5-flash',
    input='What is the weather in Mountain View, CA?',
    tools=[weather_tool]
)

# 3. Handle the tool call
for output in interaction.outputs:
    if output.type == 'function_call':
        print(f"Tool Call: {output.name}({output.arguments})")

        # Execute your actual function here
        result = get_weather(**output.arguments)

        # Send result back to the model
        interaction = client.interactions.create(
            model='gemini-2.5-flash',
            previous_interaction_id=interaction.id,
            input=[{
                'type': 'function_result',
                'name': output.name,
                'call_id': output.id,
                'result': result
            }]
        )

        print(f"Response: {interaction.outputs[-1].text}")

You can also use Google's built-in tools, such as Google Search or Code Execution.

# Google Search
interaction = client.interactions.create(
    model='gemini-2.5-flash',
    input='Who won the last Super Bowl?',
    tools=[{'type': 'google_search'}]
)

# Find the text output (not the GoogleSearchResultContent)
text_output = next((o for o in interaction.outputs if o.type == 'text'), None)

if text_output:
    print(text_output.text)

# Code Execution
interaction = client.interactions.create(
    model='gemini-2.5-flash',
    input='Calculate the 50th Fibonacci number.',
    tools=[{'type': 'code_execution'}]
)

print(interaction.outputs[-1].text)

The Interactions API can generate multimodal outputs, such as images. You must specify the response_modalities.

import base64

interaction = client.interactions.create(
    model='gemini-3-pro-image-preview',
    input='Generate an image of a futuristic city.',
    response_modalities=['IMAGE']
)

for output in interaction.outputs:
    if output.type == 'image':
        print(f"Generated image with mime_type: {output.mime_type}")

        # Save the image
        with open("generated_city.png", "wb") as f:
            f.write(base64.b64decode(output.data))

client.tunings contains tuning job APIs and supports supervised fine-tuning through tune. Only supported in Vertex AI. See the 'Create a client' section above to initialize a client.

- Vertex AI supports tuning from GCS source or from a Vertex AI Multimodal Dataset

from google.genai import types

model = 'gemini-2.5-flash'

training_dataset = types.TuningDataset(
    # or gcs_uri=my_vertex_multimodal_dataset
    gcs_uri='gs://your-gcs-bucket/your-tuning-data.jsonl',
)

# Start a tuning job
from google.genai import types

tuning_job = client.tunings.tune(
    base_model=model,
    training_dataset=training_dataset,
    config=types.CreateTuningJobConfig(
        epoch_count=1, tuned_model_display_name='test_dataset_examples model'
    ),
)

print(tuning_job)

# Get the tuning job
tuning_job = client.tunings.get(name=tuning_job.name)

print(tuning_job)

# Poll until the tuning job reaches a terminal state
import time

completed_states = set(
    [
        'JOB_STATE_SUCCEEDED',
        'JOB_STATE_FAILED',
        'JOB_STATE_CANCELLED',
    ]
)

while tuning_job.state not in completed_states:
    print(tuning_job.state)
    tuning_job = client.tunings.get(name=tuning_job.name)
    time.sleep(10)

# Use the tuned model
response = client.models.generate_content(
    model=tuning_job.tuned_model.endpoint,
    contents='why is the sky blue?',
)

print(response.text)

# Get the tuned model
tuned_model = client.models.get(model=tuning_job.tuned_model.model)

print(tuned_model)

To retrieve base models, see list base models.

# List tuned models
for model in client.models.list(config={'page_size': 10, 'query_base': False}):
    print(model)

pager = client.models.list(config={'page_size': 10, 'query_base': False})

print(pager.page_size)
print(pager[0])
pager.next_page()
print(pager[0])

# List tuned models (asynchronous)
async for job in await client.aio.models.list(config={'page_size': 10, 'query_base': False}):
    print(job)

async_pager = await client.aio.models.list(config={'page_size': 10, 'query_base': False})

print(async_pager.page_size)
print(async_pager[0])
await async_pager.next_page()
print(async_pager[0])

# Update a tuned model
from google.genai import types

model = pager[0]

model = client.models.update(
    model=model.name,
    config=types.UpdateModelConfig(
        display_name='my tuned model', description='my tuned model description'
    ),
)

print(model)

# List tuning jobs
for job in client.tunings.list(config={'page_size': 10}):
    print(job)

pager = client.tunings.list(config={'page_size': 10})

print(pager.page_size)
print(pager[0])
pager.next_page()
print(pager[0])

# List tuning jobs (asynchronous)
async for job in await client.aio.tunings.list(config={'page_size': 10}):
    print(job)

async_pager = await client.aio.tunings.list(config={'page_size': 10})

print(async_pager.page_size)
print(async_pager[0])
await async_pager.next_page()

print(async_pager[0])

Batch prediction jobs can be created on Vertex AI and on the Gemini Developer API, as the two examples below show. See the 'Create a client' section above to initialize a client.

Vertex AI:

# Specify model and source file only, destination and job display name will be auto-populated

job = client.batches.create(
    model='gemini-2.5-flash',
    src='bq://my-project.my-dataset.my-table',  # or "gs://path/to/input/data"
)

print(job)

Gemini Developer API:

# Create a batch job with inlined requests
batch_job = client.batches.create(
    model="gemini-2.5-flash",
    src=[{
        "contents": [{
            "parts": [{
                "text": "Hello!",
            }],
            "role": "user",
        }],
        "config": {"response_modalities": ["text"]},
    }],
)

print(batch_job)

To create a batch job from a file instead, first write the requests to a JSON file with one request per line, then upload it. For example, myrequests.json:

{"key":"request_1", "request": {"contents": [{"parts": [{"text": "Explain how AI works in a few words"}]}], "generation_config": {"response_modalities": ["TEXT"]}}}

{"key":"request_2", "request": {"contents": [{"parts": [{"text": "Explain how Crypto works in a few words"}]}]}}
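A request file in this one-object-per-line shape can also be generated rather than written by hand. The following is a minimal sketch using only the standard library; `write_batch_requests` is a hypothetical helper, and the `key` naming scheme simply mirrors the example file:

```python
import json


def write_batch_requests(path, prompts):
    """Write one JSON object per line, each with a unique key and a request."""
    with open(path, 'w', encoding='utf-8') as f:
        for i, prompt in enumerate(prompts, start=1):
            line = {
                'key': f'request_{i}',
                'request': {'contents': [{'parts': [{'text': prompt}]}]},
            }
            # json.dumps emits no newlines, so each request stays on its own line
            f.write(json.dumps(line) + '\n')


write_batch_requests(
    'myrequests.json',
    [
        'Explain how AI works in a few words',
        'Explain how Crypto works in a few words',
    ],
)
```

Per-request options such as `generation_config` could be added to the `request` dictionary in the same way.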

# Upload the file
file = client.files.upload(
    file='myrequests.json',
    config=types.UploadFileConfig(display_name='test-json')
)

# Create a batch job from the uploaded file, referenced by its resource name
batch_job = client.batches.create(
    model="gemini-2.5-flash",
    src=file.name,
)

# Get a job by name
job = client.batches.get(name=job.name)

print(job.state)

# Poll until the job reaches a terminal state
import time

completed_states = set(
    [
        'JOB_STATE_SUCCEEDED',
        'JOB_STATE_FAILED',
        'JOB_STATE_CANCELLED',
        'JOB_STATE_PAUSED',
    ]
)

while job.state not in completed_states:
    print(job.state)
    job = client.batches.get(name=job.name)
    time.sleep(30)
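The polling loop above runs until the job finishes, however long that takes. As a hedged sketch, the same idea can be wrapped in a helper with a timeout. `wait_for_job` is a hypothetical function, not part of the SDK; it accepts any callable taking `name=` and returning an object with a `state` attribute, so `client.batches.get` or `client.tunings.get` could be passed in:

```python
import time

# Same terminal states as the polling loop above
TERMINAL_STATES = frozenset({
    'JOB_STATE_SUCCEEDED',
    'JOB_STATE_FAILED',
    'JOB_STATE_CANCELLED',
    'JOB_STATE_PAUSED',
})


def wait_for_job(get_job, name, interval_s=30.0, timeout_s=3600.0, sleep=time.sleep):
    """Poll get_job(name=...) until a terminal state is reached or the timeout expires."""
    deadline = time.monotonic() + timeout_s
    job = get_job(name=name)
    while job.state not in TERMINAL_STATES:
        if time.monotonic() >= deadline:
            raise TimeoutError(f'{name} still in state {job.state} after {timeout_s}s')
        sleep(interval_s)
        job = get_job(name=name)
    return job

# Possible usage (assumes a client and a job created as shown above):
# job = wait_for_job(client.batches.get, job.name)
```

Passing the `sleep` function as a parameter keeps the helper easy to exercise in tests without real delays.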

# List batch jobs
for job in client.batches.list(config=types.ListBatchJobsConfig(page_size=10)):
    print(job)

pager = client.batches.list(config=types.ListBatchJobsConfig(page_size=10))

print(pager.page_size)
print(pager[0])
pager.next_page()
print(pager[0])

# List batch jobs (asynchronous)
async for job in await client.aio.batches.list(
    config=types.ListBatchJobsConfig(page_size=10)
):
    print(job)

async_pager = await client.aio.batches.list(
    config=types.ListBatchJobsConfig(page_size=10)
)

print(async_pager.page_size)
print(async_pager[0])
await async_pager.next_page()
print(async_pager[0])

# Delete the job resource
delete_job = client.batches.delete(name=job.name)

print(delete_job)

To handle errors raised by the model service, the SDK provides the APIError class.

from google.genai import errors

try:
    client.models.generate_content(
        model="invalid-model-name",
        contents="What is your name?",
    )
except errors.APIError as e:
    print(e.code)  # 404
    print(e.message)

The extra_body field in HttpOptions accepts a dictionary of additional JSON properties to include in the request body. This can be used to access new or experimental backend features that are not yet formally supported in the SDK. The structure of the dictionary must match the backend API's request structure.

- Vertex AI backend API docs: https://cloud.google.com/vertex-ai/docs/reference/rest

- Gemini API backend API docs: https://ai.google.dev/api/rest

response = client.models.generate_content(
    model="gemini-2.5-pro",
    contents="What is the weather in Boston? and how about Sunnyvale?",
    config=types.GenerateContentConfig(
        tools=[get_current_weather],
        http_options=types.HttpOptions(extra_body={'tool_config': {'function_calling_config': {'mode': 'COMPOSITIONAL'}}}),
    ),
)
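How the SDK combines extra_body with the request it builds is not spelled out here. One plausible reading of "must match the backend API's request structure" is that nested keys line up with the request body and are merged into it. The sketch below illustrates that idea in plain Python; `deep_merge` and `request_body` are hypothetical and are not the SDK's implementation:

```python
def deep_merge(base: dict, extra: dict) -> dict:
    """Return a new dict with extra merged into base, recursing into nested dicts."""
    merged = dict(base)
    for key, value in extra.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            merged[key] = deep_merge(merged[key], value)
        else:
            merged[key] = value
    return merged


# Hypothetical request body, for illustration only
request_body = {
    'contents': [{'role': 'user', 'parts': [{'text': 'What is the weather in Boston?'}]}],
    'tool_config': {'function_calling_config': {'mode': 'AUTO'}},
}

extra_body = {'tool_config': {'function_calling_config': {'mode': 'COMPOSITIONAL'}}}

merged = deep_merge(request_body, extra_body)
```

Under this reading, a key nested at the wrong level would be added as a new field instead of overriding the intended one, which is why the dictionary shape matters.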


Alternative AI tools for python-genai

Similar Open Source Tools


generative-ai-python

The Google AI Python SDK is the easiest way for Python developers to build with the Gemini API. The Gemini API gives you access to Gemini models created by Google DeepMind. Gemini models are built from the ground up to be multimodal, so you can reason seamlessly across text, images, and code.

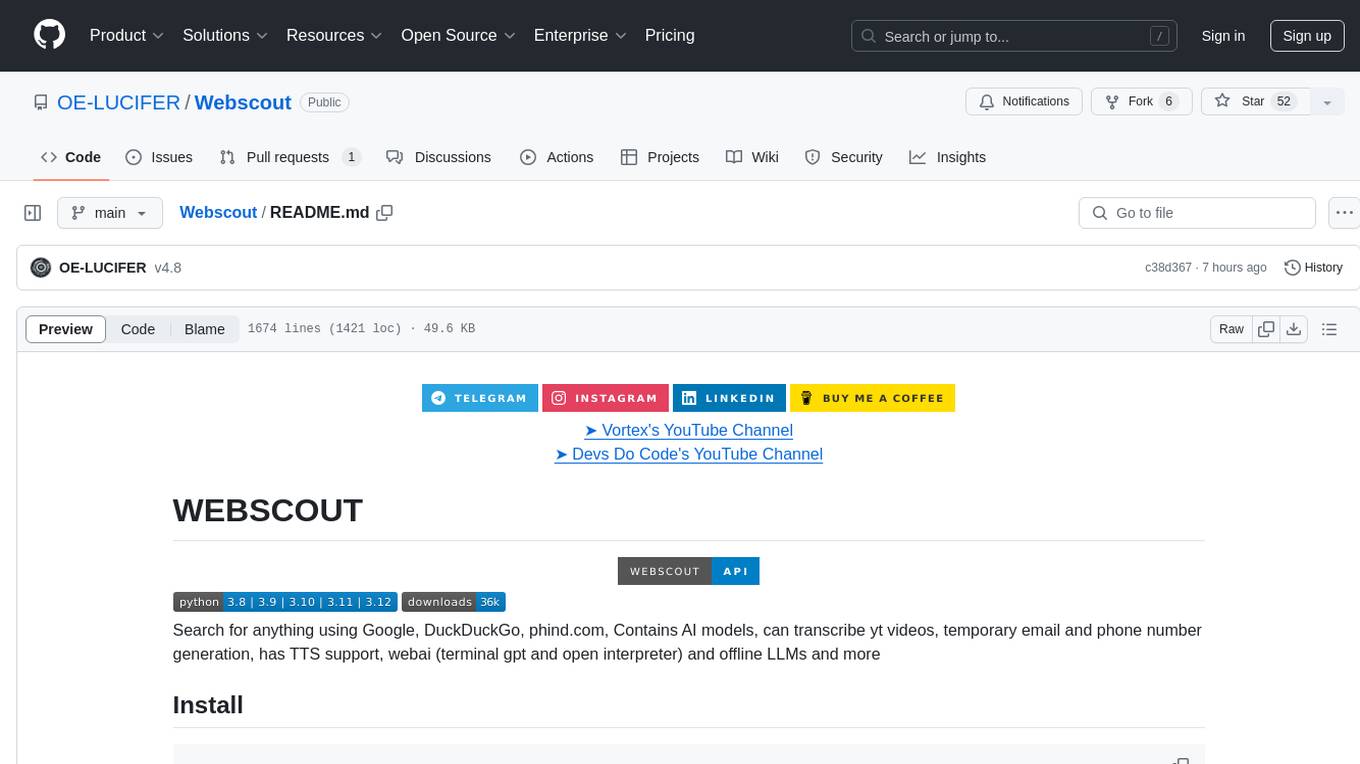

Webscout

WebScout is a versatile tool that allows users to search for anything using Google, DuckDuckGo, and phind.com. It contains AI models, can transcribe YouTube videos, generate temporary email and phone numbers, has TTS support, webai (terminal GPT and open interpreter), and offline LLMs. It also supports features like weather forecasting, YT video downloading, temp mail and number generation, text-to-speech, advanced web searches, and more.

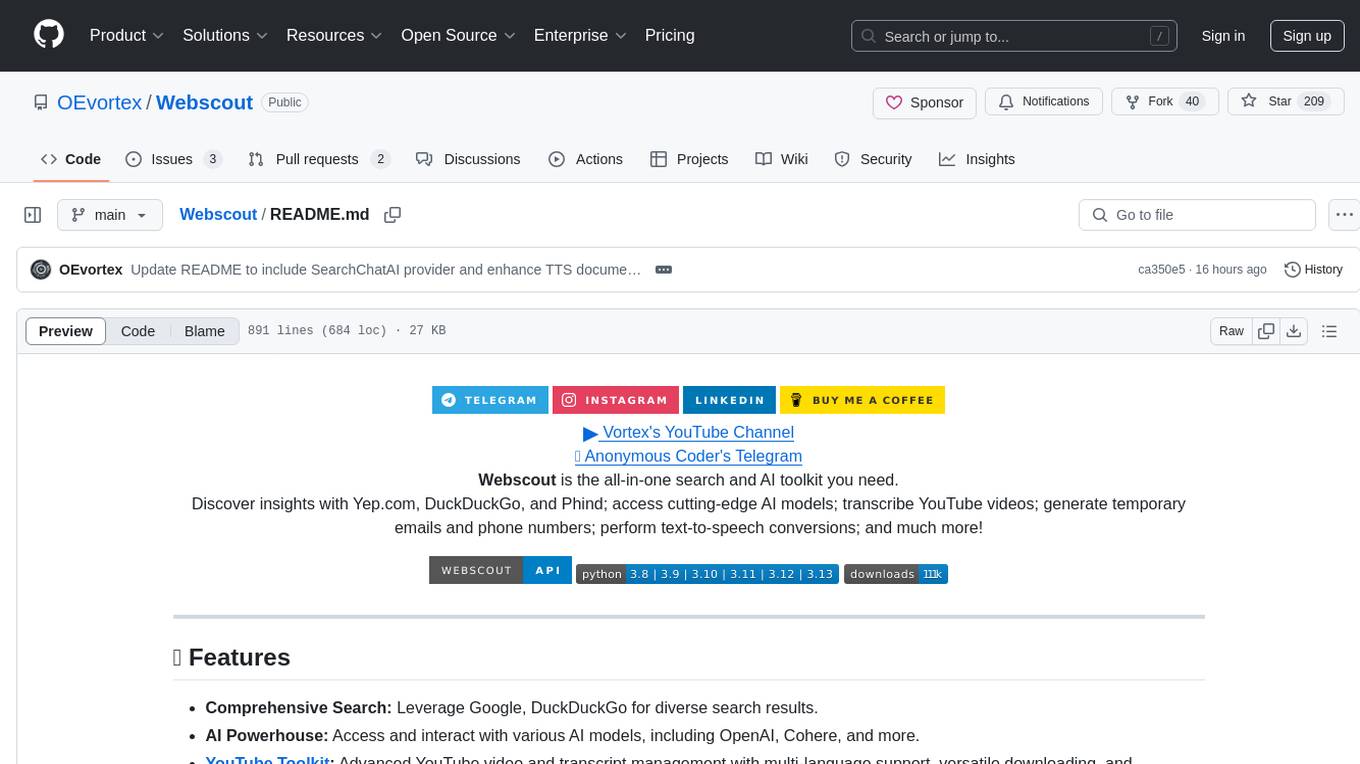

Webscout

Webscout is an all-in-one Python toolkit for web search, AI interaction, digital utilities, and more. It provides access to diverse search engines, cutting-edge AI models, temporary communication tools, media utilities, developer helpers, and powerful CLI interfaces through a unified library. With features like comprehensive search leveraging Google and DuckDuckGo, AI powerhouse for accessing various AI models, YouTube toolkit for video and transcript management, GitAPI for GitHub data extraction, Tempmail & Temp Number for privacy, Text-to-Speech conversion, GGUF conversion & quantization, SwiftCLI for CLI interfaces, LitPrinter for styled console output, LitLogger for logging, LitAgent for user agent generation, Text-to-Image generation, Scout for web parsing and crawling, Awesome Prompts for specialized tasks, Weather Toolkit, and AI Search Providers.

js-genai

The Google Gen AI JavaScript SDK is an experimental SDK for TypeScript and JavaScript developers to build applications powered by Gemini. It supports both the Gemini Developer API and Vertex AI. The SDK is designed to work with Gemini 2.0 features. Users can access API features through the GoogleGenAI classes, which provide submodules for querying models, managing caches, creating chats, uploading files, and starting live sessions. The SDK also allows for function calling to interact with external systems. Users can find more samples in the GitHub samples directory.

omniai

OmniAI provides a unified Ruby API for integrating with multiple AI providers, streamlining AI development by offering a consistent interface for features such as chat, text-to-speech, speech-to-text, and embeddings. It ensures seamless interoperability across platforms and effortless switching between providers, making integrations more flexible and reliable.

model.nvim

model.nvim is a tool designed for Neovim users who want to utilize AI models for completions or chat within their text editor. It allows users to build prompts programmatically with Lua, customize prompts, experiment with multiple providers, and use both hosted and local models. The tool supports features like provider agnosticism, programmatic prompts in Lua, async and multistep prompts, streaming completions, and chat functionality in 'mchat' filetype buffer. Users can customize prompts, manage responses, and context, and utilize various providers like OpenAI ChatGPT, Google PaLM, llama.cpp, ollama, and more. The tool also supports treesitter highlights and folds for chat buffers.

strictjson

Strict JSON is a framework designed to handle JSON outputs with complex structures, fixing issues that standard json.loads() cannot resolve. It provides functionalities for parsing LLM outputs into dictionaries, supporting various data types, type forcing, and error correction. The tool allows easy integration with OpenAI JSON Mode and offers community support through tutorials and discussions. Users can download the package via pip, set up API keys, and import functions for usage. The tool works by extracting JSON values using regex, matching output values to literals, and ensuring all JSON fields are output by LLM with optional type checking. It also supports LLM-based checks for type enforcement and error correction loops.

python-tgpt

Python-tgpt is a Python package that enables seamless interaction with over 45 free LLM providers without requiring an API key. It also provides image generation capabilities. The name _python-tgpt_ draws inspiration from its parent project tgpt, which operates on Golang. Through this Python adaptation, users can effortlessly engage with a number of free LLMs available, fostering a smoother AI interaction experience.

aiavatarkit

AIAvatarKit is a tool for building AI-based conversational avatars quickly. It supports various platforms like VRChat and cluster, along with real-world devices. The tool is extensible, allowing unlimited capabilities based on user needs. It requires VOICEVOX API, Google or Azure Speech Services API keys, and Python 3.10. Users can start conversations out of the box and enjoy seamless interactions with the avatars.

hezar

Hezar is an all-in-one AI library designed specifically for the Persian community. It brings together various AI models and tools, making it easy to use AI with just a few lines of code. The library seamlessly integrates with Hugging Face Hub, offering a developer-friendly interface and task-based model interface. In addition to models, Hezar provides tools like word embeddings, tokenizers, feature extractors, and more. It also includes supplementary ML tools for deployment, benchmarking, and optimization.

instructor

Instructor is a popular Python library for managing structured outputs from large language models (LLMs). It offers a user-friendly API for validation, retries, and streaming responses. With support for various LLM providers and multiple languages, Instructor simplifies working with LLM outputs. The library includes features like response models, retry management, validation, streaming support, and flexible backends. It also provides hooks for logging and monitoring LLM interactions, and supports integration with Anthropic, Cohere, Gemini, Litellm, and Google AI models. Instructor facilitates tasks such as extracting user data from natural language, creating fine-tuned models, managing uploaded files, and monitoring usage of OpenAI models.

sparkle

Sparkle is a tool that streamlines the process of building AI-driven features in applications using Large Language Models (LLMs). It guides users through creating and managing agents, defining tools, and interacting with LLM providers like OpenAI. Sparkle allows customization of LLM provider settings, model configurations, and provides a seamless integration with Sparkle Server for exposing agents via an OpenAI-compatible chat API endpoint.

instructor

Instructor is a Python library that makes it a breeze to work with structured outputs from large language models (LLMs). Built on top of Pydantic, it provides a simple, transparent, and user-friendly API to manage validation, retries, and streaming responses. Get ready to supercharge your LLM workflows!

xsai

xsAI is an extra-small AI SDK designed for Browser, Node.js, Deno, Bun, or Edge Runtime. It provides a series of utils to help users utilize OpenAI or OpenAI-compatible APIs. The SDK is lightweight and efficient, using a variety of methods to minimize its size. It is runtime-agnostic, working seamlessly across different environments without depending on Node.js Built-in Modules. Users can easily install specific utils like generateText or streamText, and leverage tools like weather to perform tasks such as getting the weather in a location.

For similar tasks

floneum

Floneum is a graph editor that makes it easy to develop your own AI workflows. It uses large language models (LLMs) to run AI models locally, without any external dependencies or even a GPU. This makes it easy to use LLMs with your own data, without worrying about privacy. Floneum also has a plugin system that allows you to improve the performance of LLMs and make them work better for your specific use case. Plugins can be used in any language that supports web assembly, and they can control the output of LLMs with a process similar to JSONformer or guidance.

llm-answer-engine

This repository contains the code and instructions needed to build a sophisticated answer engine that leverages the capabilities of Groq, Mistral AI's Mixtral, Langchain.JS, Brave Search, Serper API, and OpenAI. Designed to efficiently return sources, answers, images, videos, and follow-up questions based on user queries, this project is an ideal starting point for developers interested in natural language processing and search technologies.

discourse-ai

Discourse AI is a plugin for the Discourse forum software that uses artificial intelligence to improve the user experience. It can automatically generate content, moderate posts, and answer questions. This can free up moderators and administrators to focus on other tasks, and it can help to create a more engaging and informative community.

Gemini-API

Gemini-API is a reverse-engineered asynchronous Python wrapper for Google Gemini web app (formerly Bard). It provides features like persistent cookies, ImageFx support, extension support, classified outputs, official flavor, and asynchronous operation. The tool allows users to generate contents from text or images, have conversations across multiple turns, retrieve images in response, generate images with ImageFx, save images to local files, use Gemini extensions, check and switch reply candidates, and control log level.

genai-for-marketing

This repository provides a deployment guide for utilizing Google Cloud's Generative AI tools in marketing scenarios. It includes step-by-step instructions, examples of crafting marketing materials, and supplementary Jupyter notebooks. The demos cover marketing insights, audience analysis, trendspotting, content search, content generation, and workspace integration. Users can access and visualize marketing data, analyze trends, improve search experience, and generate compelling content. The repository structure includes backend APIs, frontend code, sample notebooks, templates, and installation scripts.

generative-ai-dart

The Google Generative AI SDK for Dart enables developers to utilize cutting-edge Large Language Models (LLMs) for creating language applications. It provides access to the Gemini API for generating content using state-of-the-art models. Developers can integrate the SDK into their Dart or Flutter applications to leverage powerful AI capabilities. It is recommended to use the SDK for server-side API calls to ensure the security of API keys and protect against potential key exposure in mobile or web apps.

Dough

Dough is a tool for crafting videos with AI, allowing users to guide video generations with precision using images and example videos. Users can create guidance frames, assemble shots, and animate them by defining parameters and selecting guidance videos. The tool aims to help users make beautiful and unique video creations, providing control over the generation process. Setup instructions are available for Linux and Windows platforms, with detailed steps for installation and running the app.

ChaKt-KMP

ChaKt is a multiplatform app built using Kotlin and Compose Multiplatform to demonstrate the use of Generative AI SDK for Kotlin Multiplatform to generate content using Google's Generative AI models. It features a simple chat based user interface and experience to interact with AI. The app supports mobile, desktop, and web platforms, and is built with Kotlin Multiplatform, Kotlin Coroutines, Compose Multiplatform, Generative AI SDK, Calf - File picker, and BuildKonfig. Users can contribute to the project by following the guidelines in CONTRIBUTING.md. The app is licensed under the MIT License.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features:

- Self-contained, with no need for a DBMS or cloud service.

- OpenAPI interface, easy to integrate with existing infrastructure (e.g., Cloud IDE).

- Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.