Best AI tools for< Tune Models >

20 - AI tool Sites

Sapien.io

Sapien.io is a decentralized data foundry that offers data labeling services powered by a decentralized workforce and gamified platform. The platform provides high-quality training data for large language models through a human-in-the-loop labeling process, enabling fine-tuning of datasets to build performant AI models. Sapien combines AI and human intelligence to collect and annotate various data types for any model, offering customized data collection and labeling models across industries.

Helix AI

Helix AI is a private GenAI platform that enables users to build AI applications using open source models. The platform offers tools for RAG (Retrieval-Augmented Generation) and fine-tuning, allowing deployment on-premises or in a Virtual Private Cloud (VPC). Users can access curated models, utilize Helix API tools to connect internal and external APIs, embed Helix Assistants into websites/apps for chatbot functionality, write AI application logic in natural language, and benefit from the innovative RAG system for Q&A generation. Additionally, users can fine-tune models for domain-specific needs and deploy securely on Kubernetes or Docker in any cloud environment. Helix Cloud offers free and premium tiers with GPU priority, catering to individuals, students, educators, and companies of varying sizes.

Together AI

Together AI is an AI Acceleration Cloud platform that offers fast inference, fine-tuning, and training services. It provides self-service NVIDIA GPUs, model deployment on custom hardware, AI chat app, code execution sandbox, and tools to find the right model for specific use cases. The platform also includes a model library with open-source models, documentation for developers, and resources for advancing open-source AI. Together AI enables users to leverage pre-trained models, fine-tune them, or build custom models from scratch, catering to various generative AI needs.

Welo Data

Welo Data is an AI tool that specializes in AI benchmarking, model assessment, and training high-quality datasets for AI models. The platform offers services such as supervised fine tuning, reinforcement learning with human feedback, data generation, expert evaluations, and data quality framework to support the development of world-class AI models. With over 27 years of experience, Welo Data combines language expertise and AI data to deliver exceptional training and performance evaluation solutions.

Labelbox

Labelbox is a data factory platform that empowers AI teams to manage data labeling, train models, and create better data with internet scale RLHF platform. It offers an all-in-one solution comprising tooling and services powered by a global community of domain experts. Labelbox operates a global data labeling infrastructure and operations for AI workloads, providing expert human network for data labeling in various domains. The platform also includes AI-assisted alignment for maximum efficiency, data curation, model training, and labeling services. Customers achieve breakthroughs with high-quality data through Labelbox.

Replicate

Replicate is an AI tool that allows users to run and fine-tune models, deploy custom models at scale, and generate various types of content such as images, videos, music, and text with just one line of code. It provides access to a wide range of high-quality models contributed by the community, enabling users to explore, fine-tune, and deploy AI models efficiently. Replicate aims to make AI accessible and practical for real-world applications beyond academic research and demos.

Flux LoRA Model Library

Flux LoRA Model Library is an AI tool that provides a platform for finding and using Flux LoRA models suitable for various projects. Users can browse a catalog of popular Flux LoRA models and learn about FLUX models and LoRA (Low-Rank Adaptation) technology. The platform offers resources for fine-tuning models and ensuring responsible use of generated images.

FriendliAI

FriendliAI is a generative AI infrastructure company that offers efficient, fast, and reliable generative AI inference solutions for production. Their cutting-edge technologies enable groundbreaking performance improvements, cost savings, and lower latency. FriendliAI provides a platform for building and serving compound AI systems, deploying custom models effortlessly, and monitoring and debugging model performance. The application guarantees consistent results regardless of the model used and offers seamless data integration for real-time knowledge enhancement. With a focus on security, scalability, and performance optimization, FriendliAI empowers businesses to scale with ease.

TrainEngine.ai

TrainEngine.ai is a powerful AI-powered image generation tool that allows users to create stunning, unique images from text prompts. With its advanced algorithms and vast dataset, TrainEngine.ai can generate images in a wide range of styles, from realistic to abstract, and in various formats, including photos, paintings, and illustrations. The platform is easy to use, making it accessible to both professional artists and hobbyists alike. TrainEngine.ai offers a range of features, including the ability to fine-tune models, generate unlimited AI assets, and access trending models. It also provides a marketplace where users can buy and sell AI-generated images.

FairPlay

FairPlay is a Fairness-as-a-Service solution designed for financial institutions, offering AI-powered tools to assess automated decisioning models quickly. It helps in increasing fairness and profits by optimizing marketing, underwriting, and pricing strategies. The application provides features such as Fairness Optimizer, Second Look, Customer Composition, Redline Status, and Proxy Detection. FairPlay enables users to identify and overcome tradeoffs between performance and disparity, assess geographic fairness, de-bias proxies for protected classes, and tune models to reduce disparities without increasing risk. It offers advantages like increased compliance, speed, and readiness through automation, higher approval rates with no increase in risk, and rigorous Fair Lending analysis for sponsor banks and regulators. However, some disadvantages include the need for data integration, potential bias in AI algorithms, and the requirement for technical expertise to interpret results.

Tensoic AI

Tensoic AI is an AI tool designed for custom Large Language Models (LLMs) fine-tuning and inference. It offers ultra-fast fine-tuning and inference capabilities for enterprise-grade LLMs, with a focus on use case-specific tasks. The tool is efficient, cost-effective, and easy to use, enabling users to outperform general-purpose LLMs using synthetic data. Tensoic AI generates small, powerful models that can run on consumer-grade hardware, making it ideal for a wide range of applications.

TractoAI

TractoAI is an advanced AI platform that offers deep learning solutions for various industries. It provides Batch Inference with no rate limits, DeepSeek offline inference, and helps in training open source AI models. TractoAI simplifies training infrastructure setup, accelerates workflows with GPUs, and automates deployment and scaling for tasks like ML training and big data processing. The platform supports fine-tuning models, sandboxed code execution, and building custom AI models with distributed training launcher. It is developer-friendly, scalable, and efficient, offering a solution library and expert guidance for AI projects.

Lambda Docs

Lambda Docs is an AI tool that provides cloud and hardware solutions for individuals, teams, and organizations. It offers services such as Managed Kubernetes, Preinstalled Kubernetes, Slurm, and access to GPU clusters. The platform also provides educational resources and tutorials for machine learning engineers and researchers to fine-tune models and deploy AI solutions.

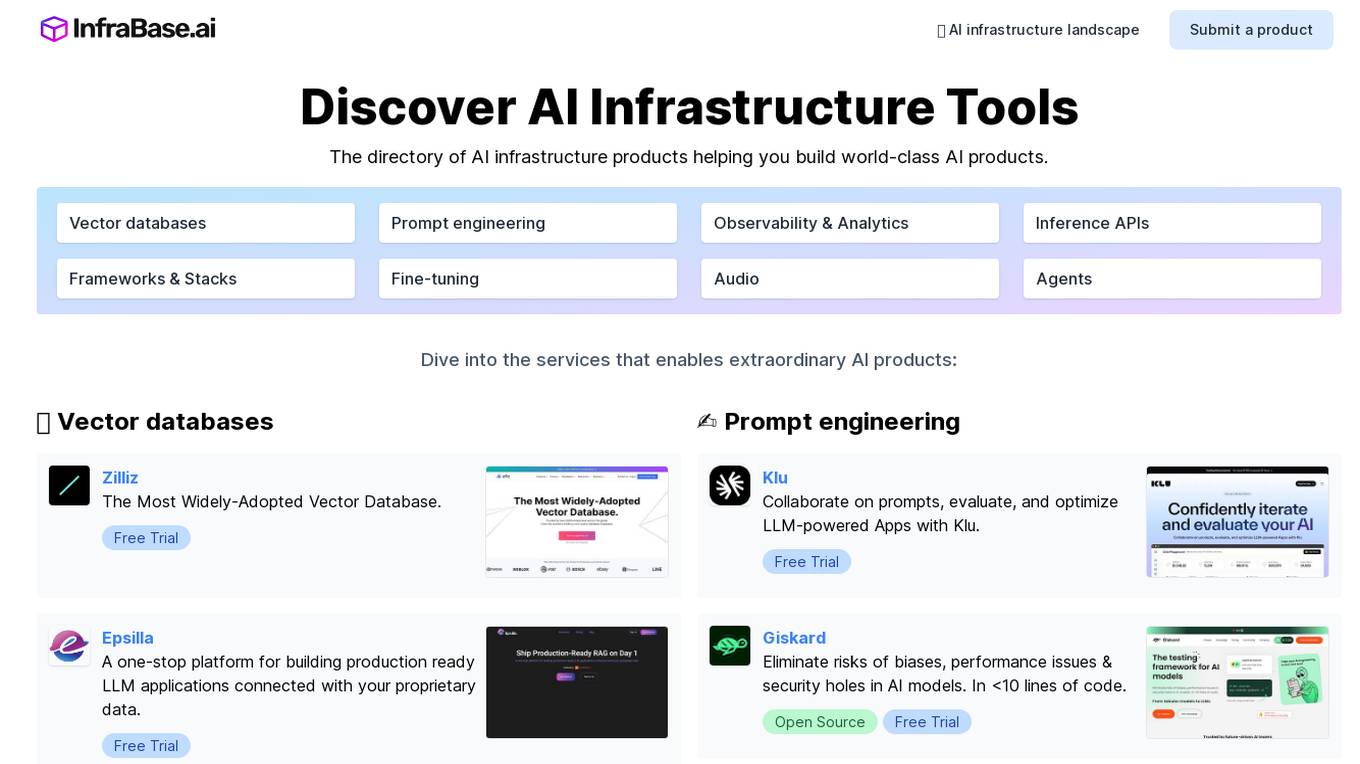

Infrabase.ai

Infrabase.ai is a directory of AI infrastructure products that helps users discover and explore a wide range of tools for building world-class AI products. The platform offers a comprehensive directory of products in categories such as Vector databases, Prompt engineering, Observability & Analytics, Inference APIs, Frameworks & Stacks, Fine-tuning, Audio, and Agents. Users can find tools for tasks like data storage, model development, performance monitoring, and more, making it a valuable resource for AI projects.

Scale AI

Scale AI is an AI tool that accelerates the development of AI applications for various sectors including enterprise, government, and automotive industries. It offers solutions for training models, fine-tuning, generative AI, and model evaluations. Scale Data Engine and GenAI Platform enable users to leverage enterprise data effectively. The platform collaborates with leading AI models and provides high-quality data for public and private sector applications.

Nscale

Nscale is a full-stack, scalable, and sustainable AI cloud platform that offers a wide range of AI services and solutions. It provides services for developing, training, tuning, and deploying AI models using on-demand services. Nscale also offers serverless inference API endpoints, fine-tuning capabilities, private cloud solutions, and various GPU clusters engineered for AI. The platform aims to simplify the journey from AI model development to production, offering a marketplace for AI/ML tools and resources. Nscale's infrastructure includes data centers powered by renewable energy, high-performance GPU nodes, and optimized networking and storage solutions.

Cerebras

Cerebras is a leading AI tool and application provider that offers cutting-edge AI supercomputers, model services, and cloud solutions for various industries. The platform specializes in high-performance computing, large language models, and AI model training, catering to sectors such as health, energy, government, and financial services. Cerebras empowers developers and researchers with access to advanced AI models, open-source resources, and innovative hardware and software development kits.

Toloka AI

Toloka AI is a data labeling platform that empowers AI development by combining human insight with machine learning models. It offers adaptive AutoML, human-in-the-loop workflows, large language models, and automated data labeling. The platform supports various AI solutions with human input, such as e-commerce services, content moderation, computer vision, and NLP. Toloka AI aims to accelerate machine learning processes by providing high-quality human-labeled data and leveraging the power of the crowd.

fal.ai

fal.ai is a generative media platform designed for developers to build the next generation of creativity. It offers lightning-fast inference with no compromise on quality, providing access to high-quality generative media models optimized by the fal Inference Engine™. The platform allows developers to fine-tune their own models, leverage real-time infrastructure for new user experiences, and scale to thousands of GPUs as needed. With a focus on developer experience, fal.ai aims to be the fastest AI tool for running diffusion models.

Together AI

Together AI is an AI tool that offers a variety of generative AI services, including serverless models, fine-tuning capabilities, dedicated endpoints, and GPU clusters. Users can run or fine-tune leading open source models with only a few lines of code. The platform provides a range of functionalities for tasks such as chat, vision, text-to-speech, code/language reranking, and more. Together AI aims to simplify the process of utilizing AI models for various applications.

3 - Open Source AI Tools

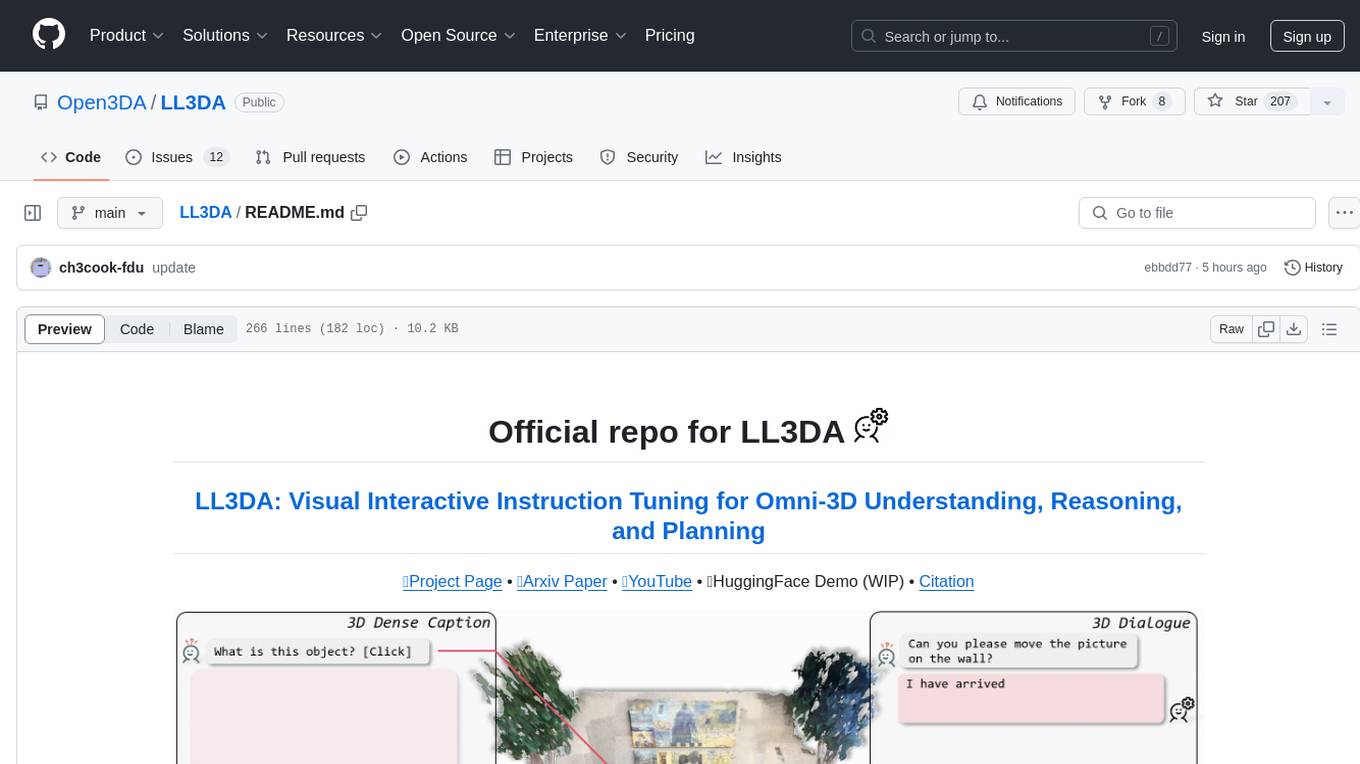

LL3DA

LL3DA is a Large Language 3D Assistant that responds to both visual and textual interactions within complex 3D environments. It aims to help Large Multimodal Models (LMM) comprehend, reason, and plan in diverse 3D scenes by directly taking point cloud input and responding to textual instructions and visual prompts. LL3DA achieves remarkable results in 3D Dense Captioning and 3D Question Answering, surpassing various 3D vision-language models. The code is fully released, allowing users to train customized models and work with pre-trained weights. The tool supports training with different LLM backends and provides scripts for tuning and evaluating models on various tasks.

python-genai

The Google Gen AI SDK is a Python library that provides access to Google AI and Vertex AI services. It allows users to create clients for different services, work with parameter types, models, generate content, call functions, handle JSON response schemas, stream text and image content, perform async operations, count and compute tokens, embed content, generate and upscale images, edit images, work with files, create and get cached content, tune models, distill models, perform batch predictions, and more. The SDK supports various features like automatic function support, manual function declaration, JSON response schema support, streaming for text and image content, async methods, tuning job APIs, distillation, batch prediction, and more.

kaito

KAITO is an operator that automates the AI/ML model inference or tuning workload in a Kubernetes cluster. It manages large model files using container images, provides preset configurations to avoid adjusting workload parameters based on GPU hardware, supports popular open-sourced inference runtimes, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry. Using KAITO simplifies the workflow of onboarding large AI inference models in Kubernetes.

20 - OpenAI Gpts

HuggingFace Helper

A witty yet succinct guide for HuggingFace, offering technical assistance on using the platform - based on their Learning Hub

Pytorch Trainer GPT

Your purpose is to create the pytorch code to train language models using pytorch

Tune Tailor: Playlist Pal

I find and create playlists based on mood, genre, and activities.

Text Tune Up GPT

I edit articles, improving clarity and respectfulness, maintaining your style.

The Name That Tune Game - from lyrics

Joyful music expert in song lyrics, offering trivia, insights, and engaging music discussions.

Joke Smith | Joke Edits for Standup Comedy

A witty editor to fine-tune stand-up comedy jokes.

Rewrite This Song: Lyrics Generator

I rewrite song lyrics to new themes, keeping the tune and essence of the original.

Dr. Tuning your Sim Racing doctor

Your quirky pit crew chief for top-notch sim racing advice

アダチさん12号(Oracle RDBMS篇)

安達孝一さんがSE時代に蓄積してきた、Oracle RDBMSのナレッジやノウハウ等 (Oracle 7/8.1.6/8.1.7/9iR1/9iR2/10gR1/10gR2/11gR2/12c/SQLチューニング) について、ご質問頂けます。また、対話内容を基に、ChatGPT(GPT-4)向けの、汎用的な質問文例も作成できます。

Drone Buddy

An FPV drone specialist aiding in building, tuning, and learning about the hobby.