strictjson

A Structured Output Framework for LLM Outputs

Stars: 336

Strict JSON is a framework designed to handle JSON outputs with complex structures, fixing issues that standard json.loads() cannot resolve. It provides functionalities for parsing LLM outputs into dictionaries, supporting various data types, type forcing, and error correction. The tool allows easy integration with OpenAI JSON Mode and offers community support through tutorials and discussions. Users can download the package via pip, set up API keys, and import functions for usage. The tool works by extracting JSON values using regex, matching output values to literals, and ensuring all JSON fields are output by the LLM, with optional type checking. It also supports LLM-based checks for type enforcement and error correction loops.

README:

- YAML is much more concise than JSON, and avoids many of the problems with quotation marks and brackets

- YAML also handles code blocks much better with the multi-line literal block scalar (|): the code following it can be totally free of unnecessary backslash escape characters, as it is not inside a string

- LLMs are now much better at interpreting YAML than when this repo was first started

- Parses the LLM output as YAML, and converts it to a dict

- Uses a concise output_format to save tokens

- Converts output_format into a pydantic schema automatically, and uses pydantic to validate the output

- Able to process datatypes: int, float, str, bool, list, dict, date, datetime, time, UUID, Decimal

- Able to process: None, Any, Union, Optional

- Default datatype when not specified is Any

- Error correction of up to num_tries times (default: 3)

from strictjson import parse_yaml

def llm(system_prompt: str, user_prompt: str) -> str:
    ''' Here, we use OpenAI for illustration, you can change it to your own local/remote LLM '''
    # ensure your LLM imports are all within this function
    from openai import OpenAI

    # define your own LLM here
    client = OpenAI()
    response = client.chat.completions.create(
        model='gpt-4o-mini',
        temperature = 0,
        messages=[
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": user_prompt}
        ]
    )
    return response.choices[0].message.content

parse_yaml(system_prompt = "Give me 5 names on a topic",
           user_prompt = "weather",
           output_format = {"Names": "Great sounding names, List[str]",
                            "Meanings": "Name and meaning, dict",
                            "Chinese Meanings": "Name and meaning in chinese, dict",
                            "Lucky Name or Number": "List[Union[int, str]]",
                            "Code": "Python code to generate 5 names"},
           llm = llm)

{'Names': ['Aurora', 'Zephyr', 'Nimbus', 'Solstice', 'Tempest'],
 'Meanings': {'Aurora': 'Dawn',
              'Zephyr': 'Gentle breeze',
              'Nimbus': 'Rain cloud',
              'Solstice': 'Sun standing still',
              'Tempest': 'Violent storm'},
 'Chinese Meanings': {'Aurora': '曙光',
                      'Zephyr': '微风',
                      'Nimbus': '雨云',
                      'Solstice': '至日',
                      'Tempest': '暴风'},
 'Lucky Name or Number': [7, '13', 3, 'Lucky', 21],
 'Code': 'import random\n\ndef generate_weather_names():\n names = ["Aurora", "Zephyr", "Nimbus", "Solstice", "Tempest"]\n return random.sample(names, 5)\n\nprint(generate_weather_names())'}
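In the concise output_format above, a field value carries an optional type after its last comma ("Great sounding names, List[str]"), and a value with no type falls back to Any. The sketch below shows one way to split such a value, assuming the type is the last comma-separated segment outside square brackets and starts with a known type name. The helpers parse_field and split_top_level are hypothetical and are not strictjson's own parser.

```python
# Hypothetical helper (not part of strictjson): split a concise
# output_format value into (description, type_hint).
KNOWN_TYPES = ("int", "float", "str", "bool", "list", "dict", "date",
               "datetime", "time", "UUID", "Decimal", "None", "Any",
               "Union", "Optional", "List", "Dict")

def split_top_level(text: str) -> list[str]:
    """Split on commas that are not nested inside [...] brackets."""
    parts, depth, current = [], 0, ""
    for ch in text:
        if ch == "[":
            depth += 1
        elif ch == "]":
            depth -= 1
        if ch == "," and depth == 0:
            parts.append(current.strip())
            current = ""
        else:
            current += ch
    parts.append(current.strip())
    return parts

def parse_field(value: str) -> tuple[str, str]:
    parts = split_top_level(value)
    last = parts[-1]
    # Treat the last segment as a type only if it starts with a known type name
    if last.split("[", 1)[0] in KNOWN_TYPES:
        return ", ".join(parts[:-1]), last
    return value, "Any"  # no type given: default to Any

print(parse_field("Great sounding names, List[str]"))  # ('Great sounding names', 'List[str]')
print(parse_field("List[Union[int, str]]"))            # ('', 'List[Union[int, str]]')
print(parse_field("Python code to generate 5 names"))  # ('Python code to generate 5 names', 'Any')
```

Tracking bracket depth is what keeps the comma inside List[Union[int, str]] from being mistaken for the description/type separator.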

For an agentic framework, do check out AgentJo, the official agentic framework built on StrictJSON. This keeps the StrictJSON repo neater, and this GitHub repo will focus on using StrictJSON for LLM output parsing.

- Ensures LLM outputs into a dictionary based on a JSON format (HUGE: nested lists and dictionaries now supported)

- Works for JSON outputs with multiple ' or " or { or } or \ or unmatched braces/brackets that may break a json.loads()

- Supports int, float, str, dict, list, array, code, Dict[], List[], Enum[], bool type forcing with LLM-based error correction, as well as LLM-based error correction using type: ensure <restriction>, and (advanced) custom user checks using custom_checks

- Easy construction of LLM-based functions using Function (Note: renamed from strict_function to keep in line with the naming convention of capitalised class groups. strict_function still works for legacy support.)

- Easy integration with OpenAI JSON Mode by setting openai_json_mode = True

- Exposes the llm variable for strict_json and Function for easy use of self-defined LLMs

- AsyncFunction and strict_json_async for async (and faster) processing

- Created: 7 Apr 2023

- Collaborators welcome

- Video tutorial (Ask Me Anything): https://www.youtube.com/watch?v=L4aytve5v1Q

- Video tutorial: https://www.youtube.com/watch?v=IjTUKAciTCg

- Discussion Channel (my discord - John's AI Group): discord.gg/bzp87AHJy5

- Download the package via command line: pip install strictjson

- Import the required functions from strictjson

- Set up the relevant API keys for your LLM if needed. Refer to Tutorial.ipynb for how to do it for Jupyter Notebooks.

- Extract JSON values as a string using a special regex (add delimiters to key to make ###key###) to split keys and values. (New!) Also works for nested datatypes by splitting recursively.

- Uses ast.literal_eval to best match the extracted output value to a literal (e.g. int, string, dict).

- Ensures that all JSON fields are output by the LLM, with optional type checking; if not, it will feed the error message to the LLM to iteratively correct its generation (default: 3 tries)
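The delimiter-and-literal_eval idea above can be sketched as follows. This is a simplified illustration, not strictjson's actual parser: the function name extract_fields is hypothetical, it assumes the LLM replied with a flat object whose keys are wrapped as '###key###', and it does not handle nesting.

```python
import ast
import re

def extract_fields(llm_output: str, keys: list[str]) -> dict:
    """Hypothetical sketch: split an LLM reply on ###key### delimiters and
    best-effort convert each value to a Python literal."""
    # Match an optional quote, the delimited key, an optional quote and the colon
    pattern = r"['\"]?###(" + "|".join(re.escape(k) for k in keys) + r")###['\"]?\s*:\s*"
    # With one capturing group, re.split returns [prefix, key1, value1, key2, value2, ...]
    pieces = re.split(pattern, llm_output)
    pairs = list(zip(pieces[1::2], pieces[2::2]))
    result = {}
    for i, (key, raw) in enumerate(pairs):
        text = raw.strip()
        if i == len(pairs) - 1 and text.endswith("}"):
            text = text[:-1].rstrip()  # drop the brace that closes the outer object
        text = text.rstrip(",").strip()
        try:
            result[key] = ast.literal_eval(text)  # e.g. '7' -> 7, "['a']" -> ['a']
        except (ValueError, SyntaxError):
            result[key] = text  # keep the raw string if it is not a valid literal
    return result

reply = "{'###Sentiment###': 'Positive', '###Adjectives###': ['beautiful', 'sunny'], '###Words###': 7}"
print(extract_fields(reply, ["Sentiment", "Adjectives", "Words"]))
# {'Sentiment': 'Positive', 'Adjectives': ['beautiful', 'sunny'], 'Words': 7}
```

Because the split happens on the unusual ###key### markers rather than on JSON punctuation, stray quotes or braces inside a value do not break key detection. Only the literal conversion step can fail, and then the raw text is kept.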

- system_prompt: Write in whatever you want the LLM to become. "You are a <purpose in life>"

- user_prompt: The user input. Later, when we use it as a function, this is the function input

- output_format: JSON of output variables in a dictionary, with the key as the output key, and the value as the output description

- The output keys will be preserved exactly, while the LLM will generate content to match the description of the value as best as possible

- llm: The LLM you want to use. Takes in system_prompt and user_prompt and outputs the LLM-generated string
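Since llm is just a callable that takes system_prompt and user_prompt and returns a string, an offline stand-in can be handy when testing prompts or error-correction behaviour without API calls. The sketch below is a hypothetical test helper (make_scripted_llm is not part of strictjson). It replays canned replies in order and records every call. It should fit anywhere an llm(system_prompt, user_prompt) -> str callable is expected, though that is an assumption rather than something the library documents.

```python
from typing import Callable

def make_scripted_llm(replies: list[str]) -> Callable[[str, str], str]:
    """Hypothetical test helper: returns an llm(system_prompt, user_prompt)
    function that replays canned replies in order and records each call."""
    calls: list[tuple[str, str]] = []
    queue = list(replies)

    def llm(system_prompt: str, user_prompt: str) -> str:
        calls.append((system_prompt, user_prompt))
        return queue.pop(0)  # raises IndexError once the script runs out

    llm.calls = calls  # expose the recorded prompts for inspection
    return llm

fake = make_scripted_llm(["first reply", "second reply"])
print(fake("You are a classifier", "It is a beautiful and sunny day"))  # first reply
print(fake.calls)
```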

from strictjson import strict_json

res = strict_json(system_prompt = 'You are a classifier',
                  user_prompt = 'It is a beautiful and sunny day',
                  output_format = {'Sentiment': 'Type of Sentiment',
                                   'Adjectives': 'Array of adjectives',
                                   'Words': 'Number of words'},
                  llm = llm)

print(res)

{'Sentiment': 'Positive', 'Adjectives': ['beautiful', 'sunny'], 'Words': 7}

- More advanced demonstration involving code that would typically break json.loads()

res = strict_json(system_prompt = 'You are a code generator, generating code to fulfil a task',
                  user_prompt = 'Given array p, output a function named func_sum to return its sum',
                  output_format = {'Elaboration': 'How you would do it',
                                   'C': 'Code',
                                   'Python': 'Code'},
                  llm = llm)

print(res)

{'Elaboration': 'Use a loop to iterate through each element in the array and add it to a running total.',

'C': 'int func_sum(int p[], int size) {\n int sum = 0;\n for (int i = 0; i < size; i++) {\n sum += p[i];\n }\n return sum;\n}',

'Python': 'def func_sum(p):\n sum = 0\n for num in p:\n sum += num\n return sum'}
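To see why code outputs like the one above trip up a plain json.loads(), the standard-library sketch below feeds it two typical LLM replies. Real newlines inside a string value are rejected by default. json.loads(..., strict=False) tolerates those, but it still fails on an unescaped double quote inside a value, a common pattern in generated C code. The helper try_parse is hypothetical and exists only for this demonstration.

```python
import json

def try_parse(text: str, **kwargs):
    """Return the parsed object, or the string 'JSONDecodeError' if json.loads fails."""
    try:
        return json.loads(text, **kwargs)
    except json.JSONDecodeError:
        return "JSONDecodeError"

# Real newlines inside a string value are control characters, which JSON forbids
newline_reply = '{"Python": "def func_sum(p):\n    return sum(p)"}'
# An unescaped double quote inside a string value ends the string early
quote_reply = '{"C": "printf("hi");"}'

print(try_parse(newline_reply))                # JSONDecodeError
print(try_parse(newline_reply, strict=False))  # parses: strict=False allows control characters
print(try_parse(quote_reply, strict=False))    # JSONDecodeError: the quote problem remains
```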

- Generally, strict_json will infer the data type of the output fields automatically

- However, if you would like very specific data types, you can force the data type using type: <data_type> at the end of the output field description

- <data_type> must be of the form int, float, str, dict, list, array, code, Dict[], List[], Array[], Enum[], bool for type checking to work

- code removes all unicode escape characters that might interfere with normal code running

- Enum and List are not case sensitive, so enum and list work just as well

- For Enum[list_of_category_names], it is best to give an "Other" category in case the LLM fails to classify correctly with the other options.

- If list or List[] is not formatted correctly in the LLM's output, we will correct it by asking the LLM to list out the elements line by line

- For dict, we can further check whether keys are present using Dict[list_of_key_names]

- Other types will first be forced by rule-based conversion; any further errors will be fed into the LLM's error feedback mechanism

- If <data_type> is not one of the specified data types, it can still be useful to shape the output for the LLM. However, no type checking will be done.

- Note: LLMs understand the word Array better than List since Array is the official JSON object type, so in the backend, any type with the word List will be converted to Array.
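The rule-based forcing step described above might look roughly like this for a few of the listed types. This is an illustrative sketch, not strictjson's code. The function coerce is hypothetical, it assumes Enum options are written as quoted strings or numbers, and it raises ValueError at the point where strictjson would hand the problem to its LLM error-feedback loop.

```python
import ast

def coerce(value, type_str: str):
    """Hypothetical rule-based type forcing for a few of the listed types.
    Raises ValueError when no rule applies; that is where an LLM-based
    error-feedback step would take over."""
    t = type_str.strip()
    lower = t.lower()  # Enum/enum and List/list are treated the same
    if lower.startswith("enum["):
        # Assumes the options are quoted strings or numbers, e.g. Enum["Pos", "Neg", "Other"]
        options = ast.literal_eval(t[t.index("["):])
        for opt in options:
            if str(value).strip().lower() == str(opt).lower():
                return opt
        raise ValueError(f"{value!r} is not one of {options}")
    if lower == "int":
        return int(str(value).strip())
    if lower == "float":
        return float(str(value).strip())
    if lower == "bool":
        if isinstance(value, bool):
            return value
        text = str(value).strip().lower()
        if text in ("true", "yes", "1"):
            return True
        if text in ("false", "no", "0"):
            return False
        raise ValueError(f"cannot read {value!r} as bool")
    if lower.startswith(("list", "array")):
        if isinstance(value, list):
            return value
        try:
            parsed = ast.literal_eval(str(value))
        except (ValueError, SyntaxError) as err:
            raise ValueError(f"cannot read {value!r} as a list") from err
        if isinstance(parsed, list):
            return parsed
        raise ValueError(f"{value!r} is not a list")
    # Unrecognised type strings only shape the prompt: no type checking
    return value

print(coerce("pos", 'Enum["Pos", "Neg", "Other"]'))  # Pos
print(coerce("7", "int"))                            # 7
print(coerce("['beautiful', 'sunny']", "List[str]")) # ['beautiful', 'sunny']
```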

- If you would like the LLM to ensure that the type is being met, use type: ensure <requirement>

- This will run an LLM to check if the requirement is met. If the requirement is not met, the LLM will generate what needs to be done to meet it, which will be fed into the error-correcting loop of strict_json

res = strict_json(system_prompt = 'You are a classifier',
                  user_prompt = 'It is a beautiful and sunny day',
                  output_format = {'Sentiment': 'Type of Sentiment, type: Enum["Pos", "Neg", "Other"]',
                                   'Adjectives': 'Array of adjectives, type: List[str]',
                                   'Words': 'Number of words, type: int',
                                   'In English': 'Whether sentence is in English, type: bool'},
                  llm = llm)

print(res)

{'Sentiment': 'Pos', 'Adjectives': ['beautiful', 'sunny'], 'Words': 7, 'In English': True}

res = strict_json(system_prompt = 'You are an expert at organising birthday parties',
                  user_prompt = 'Give me some information on how to organise a birthday',
                  output_format = {'Famous Quote about Age': 'type: ensure quote contains the word age',
                                   'Lucky draw numbers': '3 numbers from 1-50, type: List[int]',
                                   'Sample venues': 'Describe two venues, type: List[Dict["Venue", "Description"]]'},
                  llm = llm)

print(res)

Using LLM to check "The secret of staying young is to live honestly, eat slowly, and lie about your age. - Lucille Ball" to see if it adheres to "quote contains the word age" Requirement Met: True

{'Famous Quote about Age': 'The secret of staying young is to live honestly, eat slowly, and lie about your age. - Lucille Ball',

'Lucky draw numbers': [7, 21, 35],

'Sample venues': [{'Venue': 'Beachside Resort', 'Description': 'A beautiful resort with stunning views of the beach. Perfect for a summer birthday party.'}, {'Venue': 'Indoor Trampoline Park', 'Description': 'An exciting venue with trampolines and fun activities. Ideal for an active and energetic birthday celebration.'}]}
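The ensure check and the error-correcting loop described above can be sketched generically: call the LLM, run a check, and if it fails, re-prompt with the previous output and the error message, up to num_tries times. In strictjson the ensure check is itself performed by an LLM. Here a rule-based regex check stands in for it, and generate_with_retries and contains_age are hypothetical names, so treat this as an outline of the idea rather than the library's implementation.

```python
import re
from typing import Callable, Optional

def generate_with_retries(
    llm: Callable[[str, str], str],
    system_prompt: str,
    user_prompt: str,
    check: Callable[[str], Optional[str]],
    num_tries: int = 3,
) -> str:
    """Call the LLM, validate the reply, and feed any error back for another try."""
    prompt = user_prompt
    error = None
    for _ in range(num_tries):
        reply = llm(system_prompt, prompt)
        error = check(reply)
        if error is None:
            return reply
        # Re-prompt with the previous output and the error message attached
        prompt = (f"{user_prompt}\n\nPrevious output: {reply}\n"
                  f"Error: {error}\nPlease correct the output.")
    raise RuntimeError(f"Still failing after {num_tries} tries: {error}")

def contains_age(reply: str) -> Optional[str]:
    """Rule-based stand-in for an LLM-run 'ensure quote contains the word age' check."""
    if re.search(r"\bage\b", reply, re.IGNORECASE):
        return None
    return "quote must contain the word age"

# Scripted replies stand in for a real LLM: the first fails the check, the second passes
replies = iter(["Youth is wasted on the young.",
                "Age is an issue of mind over matter."])
fake_llm = lambda system_prompt, user_prompt: next(replies)

result = generate_with_retries(fake_llm, "You are a quote generator", "Give me a quote", contains_age)
print(result)  # Age is an issue of mind over matter.
```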

- Enhances strict_json() with a function-like interface for repeated use of modular LLM-based functions (or wraps external functions)

- Use angle brackets <> to enclose input variable names. The first input variable name to appear in fn_description will be the first input variable, and the second to appear will be the second input variable. For example, fn_description = 'Adds up two numbers, <var1> and <var2>' will result in a function with first input variable var1 and second input variable var2

- (Optional) If you would like greater specificity in your function's input, you can describe the variable after the : in the input variable name, e.g. <var1: an integer from 10 to 30>. Here, var1 is the input variable and an integer from 10 to 30 is the description.

- (Optional) If your description of the variable is one of int, float, str, dict, list, array, code, Dict[], List[], Array[], Enum[], bool, we will enforce type checking when generating the function inputs in the get_next_subtask method of the Agent class. Example: <var1: int>. Refer to Section 3. Type Forcing Output Variables for details.
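Picking input variables out of fn_description in order of appearance, together with any description after the colon, can be sketched with a single regular expression. The function parse_fn_description is hypothetical, and it assumes variable names are valid Python identifiers. strictjson's own parsing may differ.

```python
import re
from typing import List, Optional, Tuple

# <name> or <name: description>; the name must be a valid Python identifier
VAR_PATTERN = re.compile(r"<\s*([A-Za-z_]\w*)\s*(?::\s*([^>]*?))?\s*>")

def parse_fn_description(fn_description: str) -> List[Tuple[str, Optional[str]]]:
    """Hypothetical sketch: return (name, description) for every input
    variable, in order of first appearance in fn_description."""
    found = {}
    for match in VAR_PATTERN.finditer(fn_description):
        name, description = match.group(1), match.group(2)
        if name not in found:  # later mentions of the same variable are ignored
            found[name] = description
    return list(found.items())

print(parse_fn_description('Adds up two numbers, <var1> and <var2>'))
# [('var1', None), ('var2', None)]
print(parse_fn_description('Convert input <x: a binary number in base 2> to base 10'))
# [('x', 'a binary number in base 2')]
```

The order of the returned list is what maps positional calls such as fn(2, 10) onto var1 and var2.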

Inputs (primary):

- fn_description: String. Function description to describe the process of transforming input variables to output variables. Variables must be enclosed in <> and listed in order of appearance in the function input.

- New feature: If external_fn is provided and no fn_description is provided, then we will automatically parse out the fn_description based on the docstring of external_fn. The docstring should contain the names of all compulsory input variables

- New feature: If external_fn is provided and no output_format is provided, then we will automatically derive the output_format from the function signature

- output_format: Dict. Dictionary containing output variable names and a description for each variable.

Inputs (optional):

- examples - Dict or List[Dict]. Examples in dictionary form with the input and output variables (list if more than one)

- external_fn - Python function. If defined, instead of using the LLM to process the function, we will run the external function. If there are multiple outputs of this function, we will map them to the keys of output_format in a one-to-one fashion

- fn_name - String. If provided, this will be the name of the function. Otherwise, if external_fn is provided, it will be the name of external_fn. Otherwise, we will use the LLM to generate a function name from the fn_description

- kwargs - Dict. Additional arguments you would like to pass on to the strict_json function

Outputs: JSON of output variables in a dictionary (similar to strict_json)

from strictjson import Function

# basic configuration with variable names (in order of appearance in fn_description)
fn = Function(fn_description = 'Output a sentence with <obj> and <entity> in the style of <emotion>',
              output_format = {'output': 'sentence'},
              llm = llm)

# Use the function
fn('ball', 'dog', 'happy') # obj, entity, emotion

{'output': 'The happy dog chased the ball.'}

# Construct the function: infer pattern from just examples without description (here it is multiplication)
fn = Function(fn_description = 'Map <var1> and <var2> to output based on examples',
              output_format = {'output': 'final answer'},
              examples = [{'var1': 3, 'var2': 2, 'output': 6},
                          {'var1': 5, 'var2': 3, 'output': 15},
                          {'var1': 7, 'var2': 4, 'output': 28}],
              llm = llm)

# Use the function
fn(2, 10) # var1, var2

{'output': 20}

# Construct the function: description and examples with variable names
# variable names will be referenced in order of appearance in fn_description
fn = Function(fn_description = 'Output the sum and difference of <num1> and <num2>',
              output_format = {'sum': 'sum of two numbers',
                               'difference': 'absolute difference of two numbers'},
              examples = {'num1': 2, 'num2': 4, 'sum': 6, 'difference': 2},
              llm = llm)

# Use the function
fn(3, 4) # num1, num2

{'sum': 7, 'difference': 1}

Example Usage 4 (External Function with automatic inference of fn_description and output_format - Preferred)

# Docstring should provide all input variables, otherwise we will add them in automatically
# We will ignore shared_variables, *args and **kwargs
# No need to define llm in Function for External Functions
from typing import List

def add_number_to_list(num1: int, num_list: List[int], *args, **kwargs) -> List[int]:
    '''Adds num1 to num_list'''
    num_list.append(num1)
    return num_list

fn = Function(external_fn = add_number_to_list)

# Show the processed function docstring
print(str(fn))

# Use the function
fn(3, [2, 4, 5])

Description: Adds <num1: int> to <num_list: list>
Input: ['num1', 'num_list']
Output: {'num_list': 'Array of numbers'}

{'num_list': [2, 4, 5, 3]}
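The automatic inference in Example Usage 4 can be approximated with the standard inspect and typing modules. The sketch below collects the compulsory inputs while skipping *args, **kwargs and a parameter named shared_variables. It then rewrites each input name in the docstring as <name: type>. The helper describe_external_fn is hypothetical, and appending missing inputs to the end of the description is an assumption about how "we will add it in automatically" could work, not strictjson's documented behaviour.

```python
import inspect
import re
import typing
from typing import List

def _type_name(annotation) -> str:
    if annotation is inspect.Parameter.empty:
        return ""
    origin = typing.get_origin(annotation)  # e.g. list for List[int]
    target = origin if origin is not None else annotation
    return getattr(target, "__name__", str(target))

def describe_external_fn(fn) -> tuple[str, list[str]]:
    """Hypothetical sketch: build a <name: type> description and input list."""
    description = inspect.getdoc(fn) or fn.__name__
    inputs = []
    for name, param in inspect.signature(fn).parameters.items():
        # Skip *args, **kwargs and shared_variables
        if param.kind in (inspect.Parameter.VAR_POSITIONAL, inspect.Parameter.VAR_KEYWORD):
            continue
        if name == "shared_variables":
            continue
        inputs.append(name)
        type_name = _type_name(param.annotation)
        tag = f"<{name}: {type_name}>" if type_name else f"<{name}>"
        pattern = rf"\b{re.escape(name)}\b"
        if re.search(pattern, description):
            description = re.sub(pattern, tag, description, count=1)
        else:
            description += f", {tag}"  # assumption: append inputs missing from the docstring
    return description, inputs

def add_number_to_list(num1: int, num_list: List[int], *args, **kwargs) -> List[int]:
    '''Adds num1 to num_list'''
    num_list.append(num1)
    return num_list

print(describe_external_fn(add_number_to_list))
# ('Adds <num1: int> to <num_list: list>', ['num1', 'num_list'])
```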

Example Usage 5 (External Function with manually defined fn_description and output_format - Legacy Approach)

def binary_to_decimal(x):
    return int(str(x), 2)

# an external function with a single output variable, with an expressive variable description
fn = Function(fn_description = 'Convert input <x: a binary number in base 2> to base 10',
              output_format = {'output1': 'x in base 10'},
              external_fn = binary_to_decimal,
              llm = llm)

# Use the function
fn(10) # x

{'output1': 2}
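The one-to-one mapping of an external function's outputs onto output_format keys, mentioned under Inputs (optional), can be sketched as below. The helper map_outputs is hypothetical. The design choice here is that a single key receives the whole return value, and several keys expect a tuple of matching length.

```python
def map_outputs(result, output_format: dict) -> dict:
    """Hypothetical sketch: map an external function's return value onto the
    keys of output_format, one-to-one and in order."""
    keys = list(output_format)
    if len(keys) == 1:
        return {keys[0]: result}  # single output: the whole return value
    if not isinstance(result, tuple) or len(result) != len(keys):
        raise ValueError(f"Expected {len(keys)} outputs, got {result!r}")
    return dict(zip(keys, result))

def binary_to_decimal(x):
    return int(str(x), 2)

def sum_and_difference(num1, num2):
    return num1 + num2, abs(num1 - num2)

print(map_outputs(binary_to_decimal(10), {'output1': 'x in base 10'}))
# {'output1': 2}
print(map_outputs(sum_and_difference(3, 4), {'sum': 'sum of two numbers',
                                             'difference': 'absolute difference of two numbers'}))
# {'sum': 7, 'difference': 1}
```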

- If you want to use the OpenAI JSON Mode, you can simply add in openai_json_mode = True and set model = 'gpt-4-1106-preview' or model = 'gpt-3.5-turbo-1106' in strict_json or Function

- We will set model to gpt-3.5-turbo-1106 by default if you provide an invalid model

- This does not work with the llm variable

- Note that type checking does not work with OpenAI JSON Mode

res = strict_json(system_prompt = 'You are a classifier',
                  user_prompt = 'It is a beautiful and sunny day',
                  output_format = {'Sentiment': 'Type of Sentiment',
                                   'Adjectives': 'Array of adjectives',
                                   'Words': 'Number of words'},
                  model = 'gpt-3.5-turbo-1106', # Set the model
                  openai_json_mode = True) # Toggle this to True

print(res)

{'Sentiment': 'positive', 'Adjectives': ['beautiful', 'sunny'], 'Words': 6}

- StrictJSON supports nested outputs like nested lists and dictionaries

res = strict_json(system_prompt = 'You are a classifier',
                  user_prompt = 'It is a beautiful and sunny day',
                  output_format = {'Sentiment': ['Type of Sentiment',
                                                 'Strength of Sentiment, type: Enum[1, 2, 3, 4, 5]'],
                                   'Adjectives': "Name and Description as separate keys, type: List[Dict['Name', 'Description']]",
                                   'Words': {
                                       'Number of words': 'Word count',
                                       'Language': {
                                           'English': 'Whether it is English, type: bool',
                                           'Chinese': 'Whether it is Chinese, type: bool'
                                       },
                                       'Proper Words': 'Whether the words are proper in the native language, type: bool'
                                   }
                                  },
                  llm = llm)

print(res)

{'Sentiment': ['Positive', 3],
 'Adjectives': [{'Name': 'beautiful', 'Description': 'pleasing to the senses'}, {'Name': 'sunny', 'Description': 'filled with sunshine'}],
 'Words': {'Number of words': 6,
           'Language': {'English': True, 'Chinese': False},
           'Proper Words': True}}

- By default, strict_json returns a Python dictionary

- If you need it parsed as JSON, simply set return_as_json=True

- By default, this is set to False in order to return a Python dictionary

- AsyncFunction and strict_json_async

- These are the async equivalents of Function and strict_json

- You will need to define an LLM that can operate in async mode

- Everything is the same as the sync version of the functions, except you use the await keyword when calling AsyncFunction and strict_json_async

- Using async lets independent calls run concurrently, resulting in a much faster workflow

from strictjson import strict_json_async

async def llm_async(system_prompt: str, user_prompt: str) -> str:
    ''' Here, we use OpenAI for illustration, you can change it to your own LLM '''
    # ensure your LLM imports are all within this function
    from openai import AsyncOpenAI

    # define your own LLM here
    client = AsyncOpenAI()
    response = await client.chat.completions.create(
        model='gpt-4o-mini',
        temperature = 0,
        messages=[
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": user_prompt}
        ]
    )
    return response.choices[0].message.content

# top-level await works in Jupyter; in a plain script, call this from an async function run with asyncio.run
res = await strict_json_async(system_prompt = 'You are a classifier',
                              user_prompt = 'It is a beautiful and sunny day',
                              output_format = {'Sentiment': 'Type of Sentiment',
                                               'Adjectives': 'Array of adjectives',
                                               'Words': 'Number of words'},
                              llm = llm_async) # set this to your own LLM

print(res)

{'Sentiment': 'Positive', 'Adjectives': ['beautiful', 'sunny'], 'Words': 7}

from strictjson import AsyncFunction

fn = AsyncFunction(fn_description = 'Output a sentence with <obj> and <entity> in the style of <emotion>',
                   output_format = {'output': 'sentence'},
                   llm = llm_async) # set this to your own LLM

res = await fn('ball', 'dog', 'happy') # obj, entity, emotion

print(res)

{'output': 'The dog happily chased the ball.'}
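The speed-up from async comes from issuing independent calls concurrently. The standard-library sketch below uses a hypothetical slow_llm coroutine that just sleeps in place of a real API call. Three calls gathered together take roughly the time of one. The same asyncio.gather pattern should apply to several strict_json_async or AsyncFunction calls, but that is an assumption about your setup. In Jupyter, use await main() instead of asyncio.run(main()), since a notebook already runs an event loop.

```python
import asyncio
import time

async def slow_llm(system_prompt: str, user_prompt: str) -> str:
    """Hypothetical stand-in for an async LLM call that takes about 0.2 s."""
    await asyncio.sleep(0.2)
    return f"reply to: {user_prompt}"

async def main() -> list[str]:
    prompts = ["ball", "dog", "happy"]
    # gather runs all three calls concurrently, so total time is about 0.2 s, not 0.6 s
    return await asyncio.gather(*(slow_llm("You are helpful", p) for p in prompts))

start = time.perf_counter()
replies = asyncio.run(main())
elapsed = time.perf_counter() - start
print(replies, f"{elapsed:.2f}s")
```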


Alternative AI tools for strictjson

Similar Open Source Tools

strictjson

Strict JSON is a framework designed to handle JSON outputs with complex structures, fixing issues that standard json.loads() cannot resolve. It provides functionalities for parsing LLM outputs into dictionaries, supporting various data types, type forcing, and error correction. The tool allows easy integration with OpenAI JSON Mode and offers community support through tutorials and discussions. Users can download the package via pip, set up API keys, and import functions for usage. The tool works by extracting JSON values using regex, matching output values to literals, and ensuring all JSON fields are output by LLM with optional type checking. It also supports LLM-based checks for type enforcement and error correction loops.

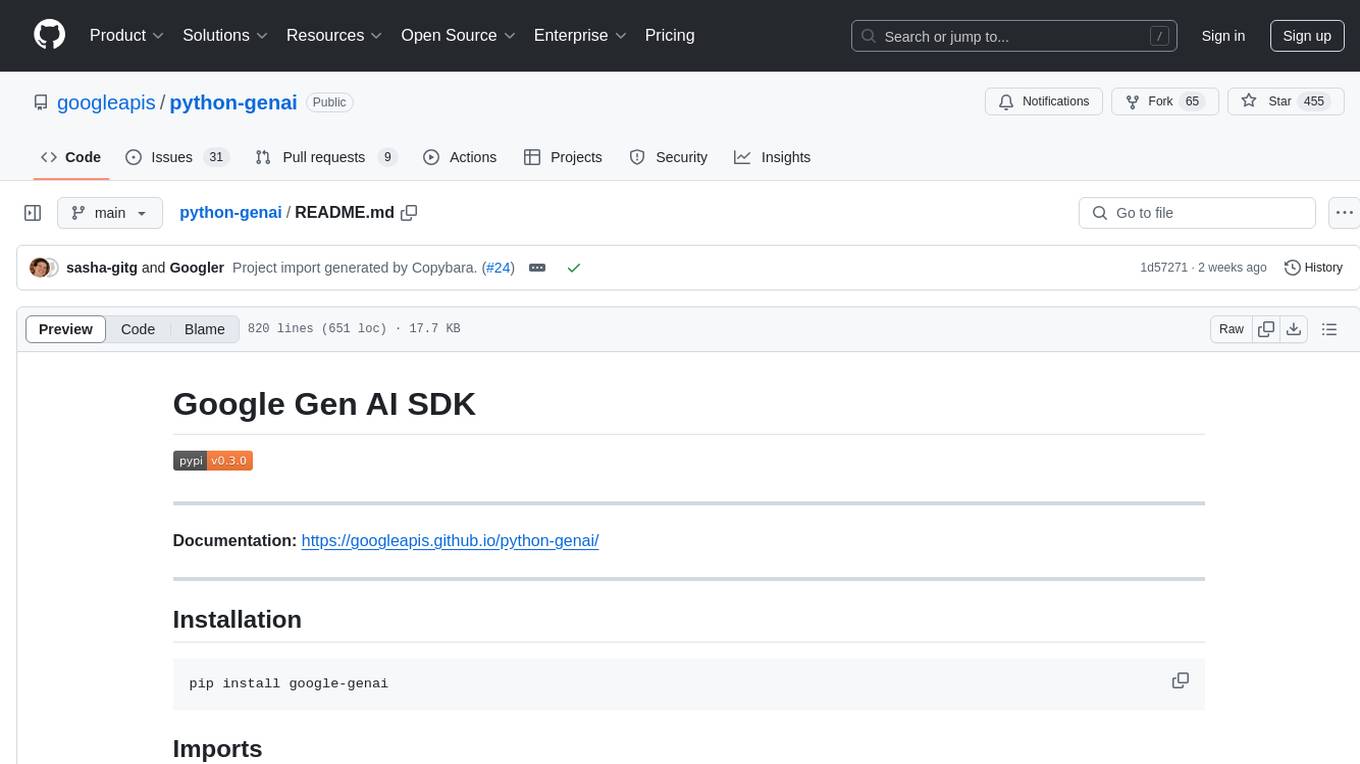

python-genai

The Google Gen AI SDK is a Python library that provides access to Google AI and Vertex AI services. It allows users to create clients for different services, work with parameter types, models, generate content, call functions, handle JSON response schemas, stream text and image content, perform async operations, count and compute tokens, embed content, generate and upscale images, edit images, work with files, create and get cached content, tune models, distill models, perform batch predictions, and more. The SDK supports various features like automatic function support, manual function declaration, JSON response schema support, streaming for text and image content, async methods, tuning job APIs, distillation, batch prediction, and more.

model.nvim

model.nvim is a tool designed for Neovim users who want to utilize AI models for completions or chat within their text editor. It allows users to build prompts programmatically with Lua, customize prompts, experiment with multiple providers, and use both hosted and local models. The tool supports features like provider agnosticism, programmatic prompts in Lua, async and multistep prompts, streaming completions, and chat functionality in 'mchat' filetype buffer. Users can customize prompts, manage responses, and context, and utilize various providers like OpenAI ChatGPT, Google PaLM, llama.cpp, ollama, and more. The tool also supports treesitter highlights and folds for chat buffers.

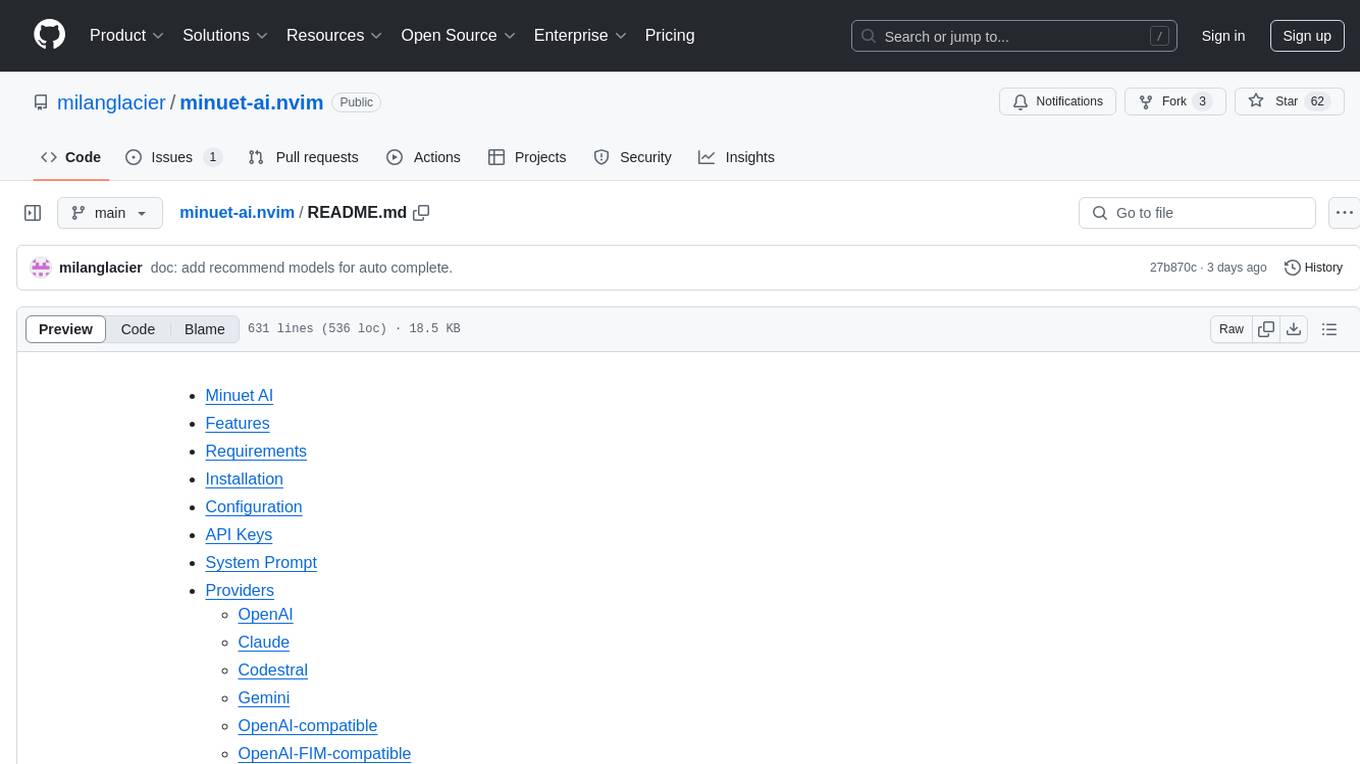

minuet-ai.nvim

Minuet AI is a Neovim plugin that integrates with nvim-cmp to provide AI-powered code completion using multiple AI providers such as OpenAI, Claude, Gemini, Codestral, and Huggingface. It offers customizable configuration options and streaming support for completion delivery. Users can manually invoke completion or use cost-effective models for auto-completion. The plugin requires API keys for supported AI providers and allows customization of system prompts. Minuet AI also supports changing providers, toggling auto-completion, and provides solutions for input delay issues. Integration with lazyvim is possible, and future plans include implementing RAG on the codebase and virtual text UI support.

omniai

OmniAI provides a unified Ruby API for integrating with multiple AI providers, streamlining AI development by offering a consistent interface for features such as chat, text-to-speech, speech-to-text, and embeddings. It ensures seamless interoperability across platforms and effortless switching between providers, making integrations more flexible and reliable.

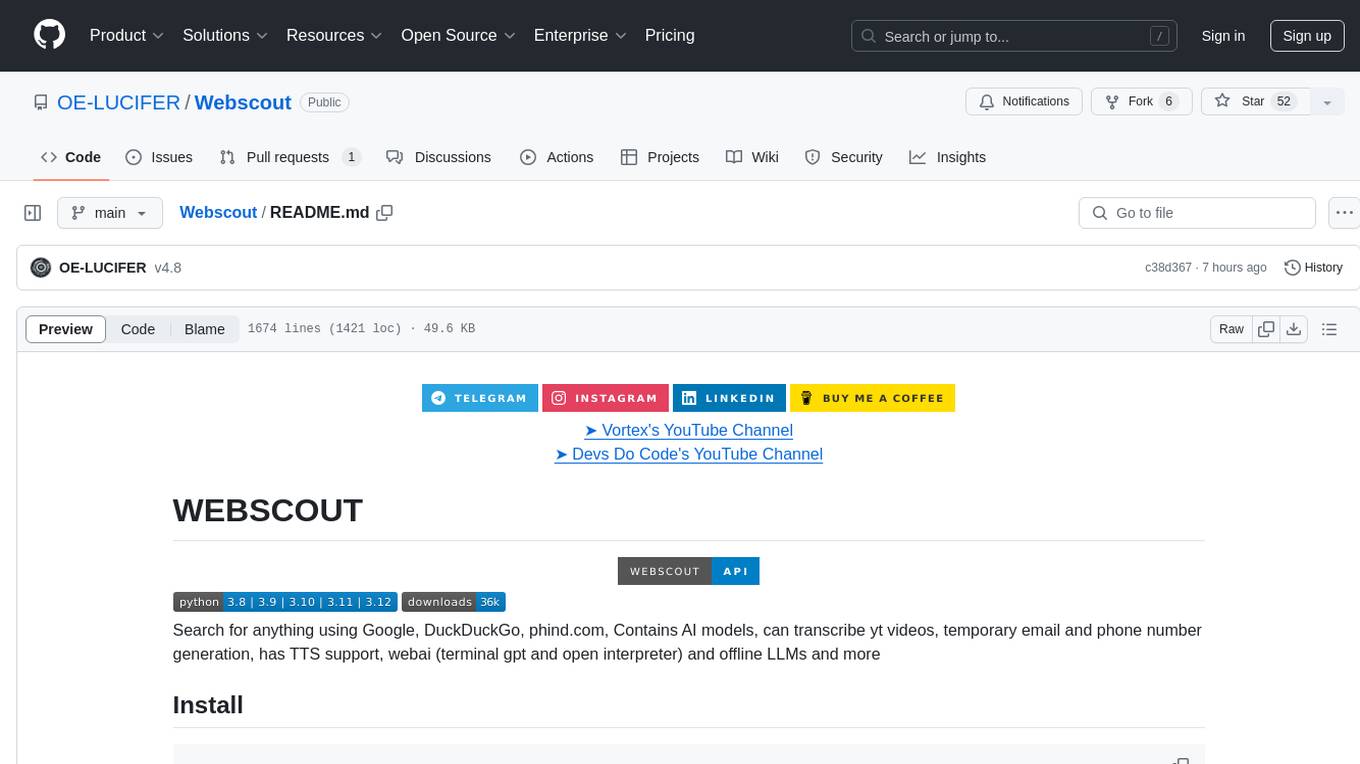

Webscout

WebScout is a versatile tool that allows users to search for anything using Google, DuckDuckGo, and phind.com. It contains AI models, can transcribe YouTube videos, generate temporary email and phone numbers, has TTS support, webai (terminal GPT and open interpreter), and offline LLMs. It also supports features like weather forecasting, YT video downloading, temp mail and number generation, text-to-speech, advanced web searches, and more.

js-genai

The Google Gen AI JavaScript SDK is an experimental SDK for TypeScript and JavaScript developers to build applications powered by Gemini. It supports both the Gemini Developer API and Vertex AI. The SDK is designed to work with Gemini 2.0 features. Users can access API features through the GoogleGenAI classes, which provide submodules for querying models, managing caches, creating chats, uploading files, and starting live sessions. The SDK also allows for function calling to interact with external systems. Users can find more samples in the GitHub samples directory.

Webscout

Webscout is an all-in-one Python toolkit for web search, AI interaction, digital utilities, and more. It provides access to diverse search engines, cutting-edge AI models, temporary communication tools, media utilities, developer helpers, and powerful CLI interfaces through a unified library. With features like comprehensive search leveraging Google and DuckDuckGo, AI powerhouse for accessing various AI models, YouTube toolkit for video and transcript management, GitAPI for GitHub data extraction, Tempmail & Temp Number for privacy, Text-to-Speech conversion, GGUF conversion & quantization, SwiftCLI for CLI interfaces, LitPrinter for styled console output, LitLogger for logging, LitAgent for user agent generation, Text-to-Image generation, Scout for web parsing and crawling, Awesome Prompts for specialized tasks, Weather Toolkit, and AI Search Providers.

instructor

Instructor is a popular Python library for managing structured outputs from large language models (LLMs). It offers a user-friendly API for validation, retries, and streaming responses. With support for various LLM providers and multiple languages, Instructor simplifies working with LLM outputs. The library includes features like response models, retry management, validation, streaming support, and flexible backends. It also provides hooks for logging and monitoring LLM interactions, and supports integration with Anthropic, Cohere, Gemini, Litellm, and Google AI models. Instructor facilitates tasks such as extracting user data from natural language, creating fine-tuned models, managing uploaded files, and monitoring usage of OpenAI models.

ax

Ax is a Typescript library that allows users to build intelligent agents inspired by agentic workflows and the Stanford DSP paper. It seamlessly integrates with multiple Large Language Models (LLMs) and VectorDBs to create RAG pipelines or collaborative agents capable of solving complex problems. The library offers advanced features such as streaming validation, multi-modal DSP, and automatic prompt tuning using optimizers. Users can easily convert documents of any format to text, perform smart chunking, embedding, and querying, and ensure output validation while streaming. Ax is production-ready, written in Typescript, and has zero dependencies.

aiavatarkit

AIAvatarKit is a tool for building AI-based conversational avatars quickly. It supports various platforms like VRChat and cluster, along with real-world devices. The tool is extensible, allowing unlimited capabilities based on user needs. It requires VOICEVOX API, Google or Azure Speech Services API keys, and Python 3.10. Users can start conversations out of the box and enjoy seamless interactions with the avatars.

generative-ai-python

The Google AI Python SDK is the easiest way for Python developers to build with the Gemini API. The Gemini API gives you access to Gemini models created by Google DeepMind. Gemini models are built from the ground up to be multimodal, so you can reason seamlessly across text, images, and code.

promptic

Promptic is a tool designed for LLM app development, providing a productive and pythonic way to build LLM applications. It leverages LiteLLM, allowing flexibility to switch LLM providers easily. Promptic focuses on building features by providing type-safe structured outputs, easy-to-build agents, streaming support, automatic prompt caching, and built-in conversation memory.

hezar

Hezar is an all-in-one AI library designed specifically for the Persian community. It brings together various AI models and tools, making it easy to use AI with just a few lines of code. The library seamlessly integrates with Hugging Face Hub, offering a developer-friendly interface and task-based model interface. In addition to models, Hezar provides tools like word embeddings, tokenizers, feature extractors, and more. It also includes supplementary ML tools for deployment, benchmarking, and optimization.

sparkle

Sparkle is a tool that streamlines the process of building AI-driven features in applications using Large Language Models (LLMs). It guides users through creating and managing agents, defining tools, and interacting with LLM providers like OpenAI. Sparkle allows customization of LLM provider settings, model configurations, and provides a seamless integration with Sparkle Server for exposing agents via an OpenAI-compatible chat API endpoint.

opencode.nvim

Opencode.nvim is a neovim frontend for Opencode, a terminal-based AI coding agent. It provides a chat interface between neovim and the Opencode AI agent, capturing editor context to enhance prompts. The plugin maintains persistent sessions for continuous conversations with the AI assistant, similar to Cursor AI.

For similar tasks


markpdfdown

MarkPDFDown is a powerful tool that leverages multimodal large language models to transcribe PDF files into Markdown format. It simplifies the process of converting PDF documents into clean, editable Markdown text by accurately extracting text, preserving formatting, and handling complex document structures including tables, formulas, and diagrams.

hayhooks

Hayhooks is a tool that simplifies the deployment and serving of Haystack pipelines as REST APIs. It allows users to wrap their pipelines with custom logic and expose them via HTTP endpoints, including OpenAI-compatible chat completion endpoints. With Hayhooks, users can easily convert their Haystack pipelines into API services with minimal boilerplate code.

langfuse-js

langfuse-js is a modular mono repo for the Langfuse JS/TS client libraries. It includes packages for Langfuse API client, tracing, OpenTelemetry export helpers, OpenAI integration, and LangChain integration. The SDK is currently in version 4 and offers universal JavaScript environments support as well as Node.js 20+. The repository provides documentation, reference materials, and development instructions for managing the monorepo with pnpm. It is licensed under MIT.

innoshop

InnoShop is an innovative open-source e-commerce system based on Laravel 12. It supports multiple languages, multiple currencies, and is integrated with OpenAI. The system features plugin mechanisms and theme template development for enhanced user experience and system extensibility. It is globally oriented, user-friendly, and based on the latest technology with deep AI integration.

ailab

The 'ailab' project is an experimental ground for code generation combining AI (especially coding agents) and Deno. It aims to manage configuration files defining coding rules and modes in Deno projects, enhancing the quality and efficiency of code generation by AI. The project focuses on defining clear rules and modes for AI coding agents, establishing best practices in Deno projects, providing mechanisms for type-safe code generation and validation, applying test-driven development (TDD) workflow to AI coding, and offering implementation examples utilizing design patterns like adapter pattern.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models, from images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features: it is self-contained, with no need for a DBMS or cloud service; it offers an OpenAPI interface that is easy to integrate with existing infrastructure (e.g. Cloud IDE); and it supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.