swarmzero

SwarmZero's SDK for building AI agents, swarms of agents and much more.

Stars: 218

SwarmZero SDK is a library that simplifies the creation and execution of AI Agents and Swarms of Agents. It supports various LLM Providers such as OpenAI, Azure OpenAI, Anthropic, MistralAI, Gemini, Nebius, and Ollama. Users can easily install the library using pip or poetry, set up the environment and configuration, create and run Agents, collaborate with Swarms, add tools for complex tasks, and utilize retriever tools for semantic information retrieval. Sample prompts are provided to help users explore the capabilities of the agents and swarms. The SDK also includes detailed examples and documentation for reference.

README:

This library provides you with an easy way to create and run AI Agents and Swarms of Agents.

Supported LLM Providers: OpenAI, Azure OpenAI, Anthropic, MistralAI, Gemini, Nebius, and Ollama.

Requirements:

- Python >= 3.11

You can install directly with pip:

pip install swarmzero

Or install with poetry:

poetry add swarmzero

Or add it to your requirements.txt file:

...

swarmzero==x.y.z

...

You need to specify an OPENAI_API_KEY in a .env file in this directory.

Make a copy of the .env.example file and rename it to .env.
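It can be useful to confirm that the key is actually visible to the process before starting an agent. The sketch below is one way to do that with the standard library only; the helper name missing_env_vars is hypothetical and not part of the SwarmZero SDK.

```python
from typing import Iterable, List, Mapping


def missing_env_vars(required: Iterable[str], env: Mapping[str, str]) -> List[str]:
    """Return the names in `required` that are absent or blank in `env`."""
    return [name for name in required if not env.get(name, "").strip()]


# Illustrative values only; in a real project pass os.environ after load_dotenv().
example_env = {"OPENAI_API_KEY": "sk-placeholder", "RPC_URL": ""}
print(missing_env_vars(["OPENAI_API_KEY", "RPC_URL"], example_env))  # prints ['RPC_URL']
```

Passing the environment in as a mapping keeps the check easy to test; calling it with os.environ gives the real result for the current process.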

To use a configuration file with your Agent, follow these steps:

- Create a Configuration File:

  - Create a TOML file (e.g., swarmzero_config.toml) in your project directory. (See swarmzero_config_example.toml).

- Create an SDK Context:

  - Create an instance of SDKContext with the path to your configuration file.

  - The SDKContext allows you to manage configurations, resources, and utilities across your SwarmZero Agents more efficiently.

from swarmzero.sdk_context import SDKContext

sdk_context = SDKContext(config_path="./swarmzero_config.toml")

- Specify the Configuration Path:

  - When creating an Agent instance, provide the relative or absolute path to your configuration file.

  - Agent will use the configuration from the SDK Context. If you have only one agent, you can pass config_path directly and the Agent will create the sdk_context for you.

from swarmzero import Agent

simple_agent = Agent(
    name="Simple Agent",
    functions=[],
    instruction="your instructions for this agent's goal",
    # sdk_context=sdk_context
    config_path="./swarmzero_config.toml"
)

More detailed examples can be found at https://github.com/swarmzero/examples

First import the Agent class:

from swarmzero import Agent

Load your environment variables:

from dotenv import load_dotenv

load_dotenv()

Then create an Agent instance:

my_agent = Agent(
    name="my_agent",
    functions=[],
    instruction="your instructions for this agent's goal",
)

Then, run your agent:

my_agent.run()

Finally, call the API endpoint, /api/v1/chat, to see the result:

curl --request POST \
  --url http://localhost:8000/api/v1/chat \
  --header 'Content-Type: multipart/form-data' \
  --form 'user_id="test"' \
  --form 'session_id="test"' \
  --form 'chat_data={ "messages": [ { "role": "user", "content": "Who is Satoshi Nakamoto?" } ] }'

You can create tools that help your agent handle more complex tasks. Here's an example:

import os
from typing import Optional, Dict

from web3 import Web3
from swarmzero import Agent
from dotenv import load_dotenv

load_dotenv()

rpc_url = os.getenv("RPC_URL")  # add an ETH Mainnet HTTP RPC URL to your `.env` file


def get_transaction_receipt(transaction_hash: str) -> Optional[Dict]:
    """
    Fetches the receipt of a specified transaction on the Ethereum blockchain and returns it as a dictionary.

    :param transaction_hash: The hash of the transaction to fetch the receipt for.
    :return: A dictionary containing the transaction receipt details, or None if the transaction cannot be found.
    """
    web3 = Web3(Web3.HTTPProvider(rpc_url))

    if not web3.is_connected():
        print("unable to connect to Ethereum")
        return None

    try:
        transaction_receipt = web3.eth.get_transaction_receipt(transaction_hash)
        return dict(transaction_receipt)
    except Exception as e:
        print(f"an error occurred: {e}")
        return None


if __name__ == "__main__":
    my_agent = Agent(
        name="my_agent",
        functions=[get_transaction_receipt]
    )

    my_agent.run()

    """
    [1] send a request:

    ```
    curl --request POST \
      --url http://localhost:8000/api/v1/chat \
      --header 'Content-Type: multipart/form-data' \
      --form 'user_id="test"' \
      --form 'session_id="test"' \
      --form 'chat_data={ "messages": [ { "role": "user", "content": "Who is the sender of this transaction - 0x5c504ed432cb51138bcf09aa5e8a410dd4a1e204ef84bfed1be16dfba1b22060" } ] }'
    ```

    [2] result:

    The address that initiated the transaction with hash 0x5c504ed432cb51138bcf09aa5e8a410dd4a1e204ef84bfed1be16dfba1b22060 is 0xA1E4380A3B1f749673E270229993eE55F35663b4.
    """

You can create a swarm of agents to collaborate on complex tasks. Here's an example of how to set up and use a swarm:

from swarmzero.swarm import Swarm
from swarmzero.agent import Agent
from swarmzero.sdk_context import SDKContext

import asyncio

# Create SDK Context
sdk_context = SDKContext(config_path="./swarmzero_config_example.toml")


def save_report():
    return "save_item_to_csv"


def search_on_web():
    return "search_on_web"


# Create individual agents
agent1 = Agent(name="Research Agent", instruction="Conduct research on given topics", sdk_context=sdk_context,
               functions=[search_on_web])

agent2 = Agent(name="Analysis Agent", instruction="Analyze data and provide insights", sdk_context=sdk_context,
               functions=[save_report])

agent3 = Agent(name="Report Agent", instruction="Compile findings into a report", sdk_context=sdk_context, functions=[])

# Create swarm
swarm = Swarm(name="Research Team", description="A swarm of agents that collaborate on research tasks",
              instruction="Be helpful and collaborative", functions=[], agents=[agent1, agent2, agent3])


async def chat_with_swarm():
    return await swarm.chat("Can you analyze the following data: [1, 2, 3, 4, 5]")


if __name__ == "__main__":
    asyncio.run(chat_with_swarm())

You can add retriever tools to create vector embeddings and retrieve semantic information. This creates a vector index for every PDF document under the 'swarmzero-data/files/user' folder, and you can filter files with the required_exts parameter.

- SwarmZero agent supports the '.md', '.mdx', '.txt', '.csv', '.docx', and '.pdf' file types.

- SwarmZero agent supports 4 types of retriever (basic, chroma, pinecone-serverless, pinecone-pod), selected with the retrieval_tool parameter.
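As a rough illustration of what filtering by extension amounts to, the sketch below keeps only the file names whose suffix appears in a required_exts list. It is not the SDK's indexing code, which this README does not show; the helper name filter_by_ext and the case-insensitive matching are assumptions made for the example.

```python
from pathlib import PurePath
from typing import Iterable, List


def filter_by_ext(filenames: Iterable[str], required_exts: Iterable[str]) -> List[str]:
    """Keep the names whose suffix is listed in `required_exts` (case-insensitive)."""
    wanted = {ext.lower() for ext in required_exts}
    return [name for name in filenames if PurePath(name).suffix.lower() in wanted]


# Hypothetical file names, standing in for the contents of the data folder.
files = ["notes.md", "paper.pdf", "guide.MD", "data.csv"]
print(filter_by_ext(files, [".md"]))  # prints ['notes.md', 'guide.MD']
```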

from swarmzero import Agent
from dotenv import load_dotenv

load_dotenv()

if __name__ == "__main__":
    my_agent = Agent(
        name="retrieve-test",
        functions=[],
        retrieve=True,
        required_exts=['.md'],
        retrieval_tool='chroma'
    )

    my_agent.run()

    """
    [1] send a request:

    ```
    curl --request POST \
      --url http://localhost:8000/api/v1/chat \
      --header 'Content-Type: multipart/form-data' \
      --form 'user_id="test"' \
      --form 'session_id="test"' \
      --form 'chat_data={ "messages": [ { "role": "user", "content": "Can you summarise the documents?" } ] }'
    ```
    """

Users of your agent/swarm may not always be familiar with its abilities. Providing sample prompts allows them to explore what you have built. Here's how to add sample prompts which they can use before committing to use your agent/swarm.

In your swarmzero_config.toml file, create a top level entry called [sample_prompts] and add a new array to the key prompts like this:

[sample_prompts]
prompts = [
    "What can you help me do?",
    "Which tools do you have access to?",
    "What are your capabilities?"
]

Sample prompts can also be set for an individual agent by adding a sample_prompts key to that agent's entry:

[target_agent_id]
model = "gpt-3.5-turbo"
timeout = 15
environment = "dev"
enable_multi_modal = true
ollama_server_url = 'http://123.456.78.90:11434'
sample_prompts = [
    "What can you help me do?",
    "Which tools do you have access to?",
    "What are your capabilities?"
]

See ./swarmzero_config_example.toml for an example configuration file.

If you want to contribute to the codebase, you need to set up your dev environment. Follow these steps:

- Create a new file called .env

- Copy the contents of .env.example into your new .env file

- API keys for third-party tools are not provided.

  - OPENAI_API_KEY from OpenAI

- If you don't have Poetry installed, you can install it using the following commands:

curl -sSL https://install.python-poetry.org | python3 -

export PATH="$HOME/.local/bin:$PATH"

- Activate the Virtual Environment created by Poetry with the following command:

poetry shell

- Install dependencies.

poetry install --no-root

- Make sure you're in the tests/ directory:

cd tests/

- Run the test suite:

pytest

- Run tests for a specific module:

pytest tests/path/to/test_module.py

- Run with verbose output:

pytest -v

- Run with a detailed output of each test (including print statements):

pytest -s

- Run with coverage report:

pip install coverage pytest-cov

pytest --cov --cov-report=html

Report file: tests/htmlcov/index.html

Open http://localhost:8000/docs with your browser to see the Swagger UI of the API.
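The curl requests shown earlier can also be issued from Python. The sketch below builds the same multipart/form-data POST to /api/v1/chat using only the standard library; the function name build_chat_request is hypothetical, and the field names (user_id, session_id, chat_data) are taken from the curl examples rather than from an API reference.

```python
import json
import urllib.request
import uuid


def build_chat_request(
    content: str,
    user_id: str = "test",
    session_id: str = "test",
    base_url: str = "http://localhost:8000",
) -> urllib.request.Request:
    """Build a multipart/form-data POST mirroring the curl examples in this README."""
    chat_data = json.dumps({"messages": [{"role": "user", "content": content}]})
    fields = {"user_id": user_id, "session_id": session_id, "chat_data": chat_data}

    # Each form field becomes one part, separated by a random boundary string.
    boundary = uuid.uuid4().hex
    parts = [
        f"--{boundary}\r\n"
        f'Content-Disposition: form-data; name="{name}"\r\n\r\n'
        f"{value}\r\n"
        for name, value in fields.items()
    ]
    parts.append(f"--{boundary}--\r\n")  # closing boundary
    body = "".join(parts).encode("utf-8")

    return urllib.request.Request(
        url=f"{base_url}/api/v1/chat",
        data=body,
        method="POST",
        headers={"Content-Type": f"multipart/form-data; boundary={boundary}"},
    )


request = build_chat_request("Who is Satoshi Nakamoto?")
print(request.full_url)  # prints http://localhost:8000/api/v1/chat
```

Once an agent is running locally, the request can be sent with urllib.request.urlopen(request) and the response body read from the returned object. The response format is not described in this README, so inspect it before parsing.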


swarmzero

SwarmZero SDK is a library that simplifies the creation and execution of AI Agents and Swarms of Agents. It supports various LLM Providers such as OpenAI, Azure OpenAI, Anthropic, MistralAI, Gemini, Nebius, and Ollama. Users can easily install the library using pip or poetry, set up the environment and configuration, create and run Agents, collaborate with Swarms, add tools for complex tasks, and utilize retriever tools for semantic information retrieval. Sample prompts are provided to help users explore the capabilities of the agents and swarms. The SDK also includes detailed examples and documentation for reference.

sparkle

Sparkle is a tool that streamlines the process of building AI-driven features in applications using Large Language Models (LLMs). It guides users through creating and managing agents, defining tools, and interacting with LLM providers like OpenAI. Sparkle allows customization of LLM provider settings, model configurations, and provides a seamless integration with Sparkle Server for exposing agents via an OpenAI-compatible chat API endpoint.

instructor

Instructor is a popular Python library for managing structured outputs from large language models (LLMs). It offers a user-friendly API for validation, retries, and streaming responses. With support for various LLM providers and multiple languages, Instructor simplifies working with LLM outputs. The library includes features like response models, retry management, validation, streaming support, and flexible backends. It also provides hooks for logging and monitoring LLM interactions, and supports integration with Anthropic, Cohere, Gemini, Litellm, and Google AI models. Instructor facilitates tasks such as extracting user data from natural language, creating fine-tuned models, managing uploaded files, and monitoring usage of OpenAI models.

deep-searcher

DeepSearcher is a tool that combines reasoning LLMs and Vector Databases to perform search, evaluation, and reasoning based on private data. It is suitable for enterprise knowledge management, intelligent Q&A systems, and information retrieval scenarios. The tool maximizes the utilization of enterprise internal data while ensuring data security, supports multiple embedding models, and provides support for multiple LLMs for intelligent Q&A and content generation. It also includes features like private data search, vector database management, and document loading with web crawling capabilities under development.

python-tgpt

Python-tgpt is a Python package that enables seamless interaction with over 45 free LLM providers without requiring an API key. It also provides image generation capabilities. The name _python-tgpt_ draws inspiration from its parent project tgpt, which operates on Golang. Through this Python adaptation, users can effortlessly engage with a number of free LLMs available, fostering a smoother AI interaction experience.

vinagent

Vinagent is a lightweight and flexible library designed for building smart agent assistants across various industries. It provides a simple yet powerful foundation for creating AI-powered customer service bots, data analysis assistants, or domain-specific automation agents. With its modular tool system, users can easily extend their agent's capabilities by integrating a wide range of tools that are self-contained, well-documented, and can be registered dynamically. Vinagent allows users to scale and adapt their agents to new tasks or environments effortlessly.

aiavatarkit

AIAvatarKit is a tool for building AI-based conversational avatars quickly. It supports various platforms like VRChat and cluster, along with real-world devices. The tool is extensible, allowing unlimited capabilities based on user needs. It requires VOICEVOX API, Google or Azure Speech Services API keys, and Python 3.10. Users can start conversations out of the box and enjoy seamless interactions with the avatars.

Lumos

Lumos is a Chrome extension powered by a local LLM co-pilot for browsing the web. It allows users to summarize long threads, news articles, and technical documentation. Users can ask questions about reviews and product pages. The tool requires a local Ollama server for LLM inference and embedding database. Lumos supports multimodal models and file attachments for processing text and image content. It also provides options to customize models, hosts, and content parsers. The extension can be easily accessed through keyboard shortcuts and offers tools for automatic invocation based on prompts.

langserve

LangServe helps developers deploy `LangChain` runnables and chains as a REST API. This library is integrated with FastAPI and uses pydantic for data validation. In addition, it provides a client that can be used to call into runnables deployed on a server. A JavaScript client is available in LangChain.js.

simpleAI

SimpleAI is a self-hosted alternative to the not-so-open AI API, focused on replicating main endpoints for LLM such as text completion, chat, edits, and embeddings. It allows quick experimentation with different models, creating benchmarks, and handling specific use cases without relying on external services. Users can integrate and declare models through gRPC, query endpoints using Swagger UI or API, and resolve common issues like CORS with FastAPI middleware. The project is open for contributions and welcomes PRs, issues, documentation, and more.

instructor

Instructor is a Python library that makes it a breeze to work with structured outputs from large language models (LLMs). Built on top of Pydantic, it provides a simple, transparent, and user-friendly API to manage validation, retries, and streaming responses. Get ready to supercharge your LLM workflows!

llm-rag-workshop

The LLM RAG Workshop repository provides a workshop on using Large Language Models (LLMs) and Retrieval-Augmented Generation (RAG) to generate and understand text in a human-like manner. It includes instructions on setting up the environment, indexing Zoomcamp FAQ documents, creating a Q&A system, and using OpenAI for generation based on retrieved information. The repository focuses on enhancing language model responses with retrieved information from external sources, such as document databases or search engines, to improve factual accuracy and relevance of generated text.

agent-mimir

Agent Mimir is a command line and Discord chat client 'agent' manager for LLM's like Chat-GPT that provides the models with access to tooling and a framework with which accomplish multi-step tasks. It is easy to configure your own agent with a custom personality or profession as well as enabling access to all tools that are compatible with LangchainJS. Agent Mimir is based on LangchainJS, every tool or LLM that works on Langchain should also work with Mimir. The tasking system is based on Auto-GPT and BabyAGI where the agent needs to come up with a plan, iterate over its steps and review as it completes the task.

letta

Letta is an open source framework for building stateful LLM applications. It allows users to build stateful agents with advanced reasoning capabilities and transparent long-term memory. The framework is white box and model-agnostic, enabling users to connect to various LLM API backends. Letta provides a graphical interface, the Letta ADE, for creating, deploying, interacting, and observing with agents. Users can access Letta via REST API, Python, Typescript SDKs, and the ADE. Letta supports persistence by storing agent data in a database, with PostgreSQL recommended for data migrations. Users can install Letta using Docker or pip, with Docker defaulting to PostgreSQL and pip defaulting to SQLite. Letta also offers a CLI tool for interacting with agents. The project is open source and welcomes contributions from the community.

scaleapi-python-client

The Scale AI Python SDK is a tool that provides a Python interface for interacting with the Scale API. It allows users to easily create tasks, manage projects, upload files, and work with evaluation tasks, training tasks, and Studio assignments. The SDK handles error handling and provides detailed documentation for each method. Users can also manage teammates, project groups, and batches within the Scale Studio environment. The SDK supports various functionalities such as creating tasks, retrieving tasks, canceling tasks, auditing tasks, updating task attributes, managing files, managing team members, and working with evaluation and training tasks.

nvim.ai

nvim.ai is a powerful Neovim plugin that enables AI-assisted coding and chat capabilities within the editor. Users can chat with buffers, insert code with an inline assistant, and utilize various LLM providers for context-aware AI assistance. The plugin supports features like interacting with AI about code and documents, receiving relevant help based on current work, code insertion, code rewriting (Work in Progress), and integration with multiple LLM providers. Users can configure the plugin, add API keys to dotfiles, and integrate with nvim-cmp for command autocompletion. Keymaps are available for chat and inline assist functionalities. The chat dialog allows parsing content with keywords and supports roles like /system, /you, and /assistant. Context-aware assistance can be accessed through inline assist by inserting code blocks anywhere in the file.

For similar tasks

swarmzero

SwarmZero SDK is a library that simplifies the creation and execution of AI Agents and Swarms of Agents. It supports various LLM Providers such as OpenAI, Azure OpenAI, Anthropic, MistralAI, Gemini, Nebius, and Ollama. Users can easily install the library using pip or poetry, set up the environment and configuration, create and run Agents, collaborate with Swarms, add tools for complex tasks, and utilize retriever tools for semantic information retrieval. Sample prompts are provided to help users explore the capabilities of the agents and swarms. The SDK also includes detailed examples and documentation for reference.

SWE-bench-Live

SWE-bench-Live is a live benchmark dataset for evaluating AI systems' ability to complete real-world software engineering tasks. It is continuously updated through an automated curation pipeline, providing the community with up-to-date task instances for rigorous and contamination-free evaluation. The dataset is designed to test the performance of various AI models on software engineering tasks and supports multiple programming languages and operating systems.

sidecar

Sidecar is the AI brains of Aide the editor, responsible for creating prompts, interacting with LLM, and ensuring seamless integration of all functionalities. It includes 'tool_box.rs' for handling language-specific smartness, 'symbol/' for smart and independent symbols, 'llm_prompts/' for creating prompts, and 'repomap' for creating a repository map using page rank on code symbols. Users can contribute by submitting bugs, feature requests, reviewing source code changes, and participating in the development workflow.

honcho

Honcho is a platform for creating personalized AI agents and LLM powered applications for end users. The repository is a monorepo containing the server/API for managing database interactions and storing application state, along with a Python SDK. It utilizes FastAPI for user context management and Poetry for dependency management. The API can be run using Docker or manually by setting environment variables. The client SDK can be installed using pip or Poetry. The project is open source and welcomes contributions, following a fork and PR workflow. Honcho is licensed under the AGPL-3.0 License.

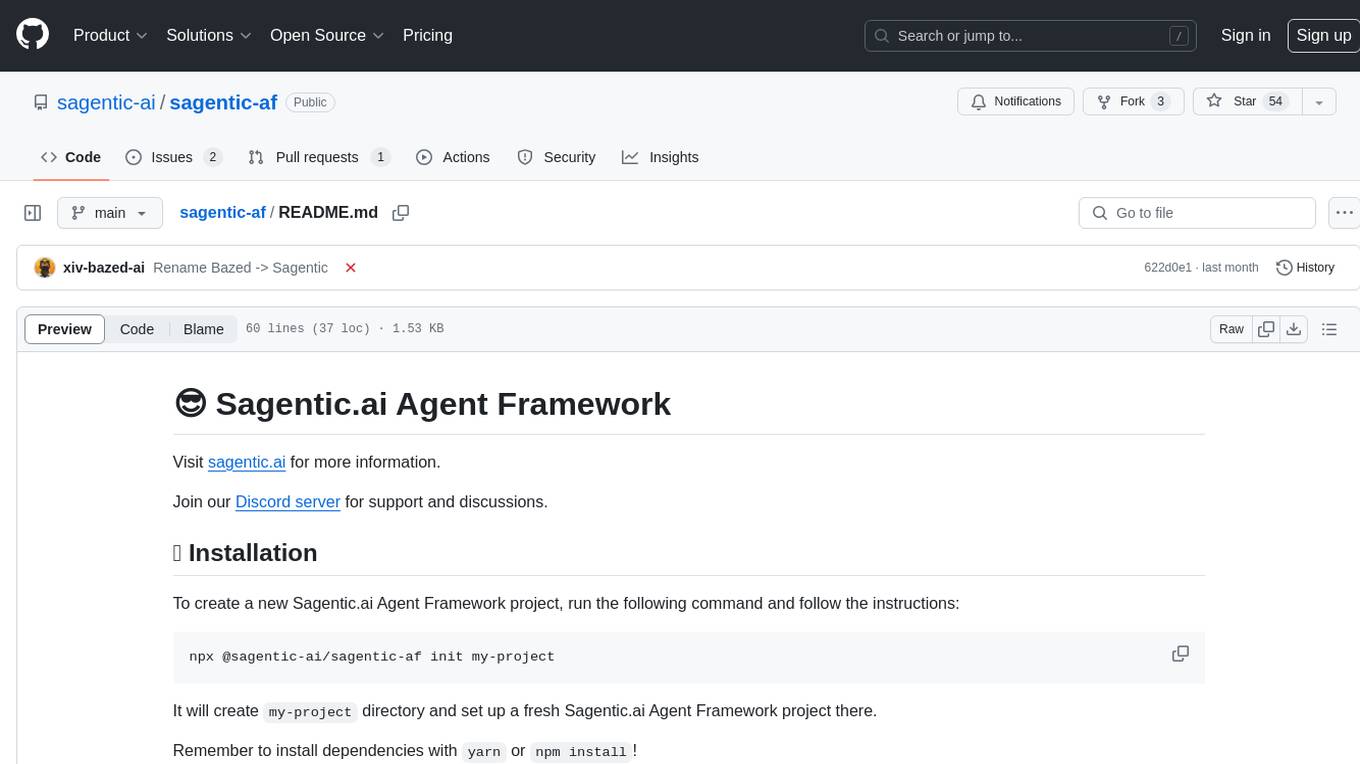

sagentic-af

Sagentic.ai Agent Framework is a tool for creating AI agents with hot reloading dev server. It allows users to spawn agents locally by calling specific endpoint. The framework comes with detailed documentation and supports contributions, issues, and feature requests. It is MIT licensed and maintained by Ahyve Inc.

tinyllm

tinyllm is a lightweight framework designed for developing, debugging, and monitoring LLM and Agent powered applications at scale. It aims to simplify code while enabling users to create complex agents or LLM workflows in production. The core classes, Function and FunctionStream, standardize and control LLM, ToolStore, and relevant calls for scalable production use. It offers structured handling of function execution, including input/output validation, error handling, evaluation, and more, all while maintaining code readability. Users can create chains with prompts, LLM models, and evaluators in a single file without the need for extensive class definitions or spaghetti code. Additionally, tinyllm integrates with various libraries like Langfuse and provides tools for prompt engineering, observability, logging, and finite state machine design.

council

Council is an open-source platform designed for the rapid development and deployment of customized generative AI applications using teams of agents. It extends the LLM tool ecosystem by providing advanced control flow and scalable oversight for AI agents. Users can create sophisticated agents with predictable behavior by leveraging Council's powerful approach to control flow using Controllers, Filters, Evaluators, and Budgets. The framework allows for automated routing between agents, comparing, evaluating, and selecting the best results for a task. Council aims to facilitate packaging and deploying agents at scale on multiple platforms while enabling enterprise-grade monitoring and quality control.

mentals-ai

Mentals AI is a tool designed for creating and operating agents that feature loops, memory, and various tools, all through straightforward markdown syntax. This tool enables you to concentrate solely on the agent’s logic, eliminating the necessity to compose underlying code in Python or any other language. It redefines the foundational frameworks for future AI applications by allowing the creation of agents with recursive decision-making processes, integration of reasoning frameworks, and control flow expressed in natural language. Key concepts include instructions with prompts and references, working memory for context, short-term memory for storing intermediate results, and control flow from strings to algorithms. The tool provides a set of native tools for message output, user input, file handling, Python interpreter, Bash commands, and short-term memory. The roadmap includes features like a web UI, vector database tools, agent's experience, and tools for image generation and browsing. The idea behind Mentals AI originated from studies on psychoanalysis executive functions and aims to integrate 'System 1' (cognitive executor) with 'System 2' (central executive) to create more sophisticated agents.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.