obs-localvocal

OBS plugin for local speech recognition and captioning using AI

Stars: 248

LocalVocal is a live-streaming AI assistant plugin for OBS that allows you to transcribe audio speech into text and perform various language processing functions on the text using AI / LLMs (Large Language Models). It's privacy-first, with all data staying on your machine, and requires no GPU, cloud costs, network, or downtime.

README:

LocalVocal live-streaming AI assistant plugin allows you to transcribe, locally on your machine, audio speech into text and perform various language processing functions on the text using AI / LLMs (Large Language Models). ✅ No GPU required, ✅ no cloud costs, ✅ no network and ✅ no downtime! Privacy first - all data stays on your machine.

If this free plugin has been valuable to you consider adding a ⭐ to this GH repo, rating it on OBS, subscribing to my YouTube channel where I post updates, and supporting my work on GitHub or Patreon 🙏

For a standalone captioning and translation free open tool consider our LexiSynth, which also does speech synthesis.

Internally the plugin is running a neural network (OpenAI Whisper) locally to predict in real time the speech and provide captions. It's using the Whisper.cpp project from ggerganov to run the Whisper network efficiently on CPUs and GPUs.

Do more with LocalVocal:

- Translate Caption any Application

- Real-time Translation with DeepL

- POST Captions to YouTube

- Local LLM Real-time Translation

Current Features:

- Transcribe audio to text in real time in 100 languages

- Display captions on screen using text sources

- Send captions to a .txt or .srt file (to read by external sources or video playback) with and without aggregation option

- Sync'ed captions with OBS recording timestamps

- Send captions on a RTMP stream to e.g. YouTube, Twitch

- Bring your own Whisper model (any GGML)

- Translate captions in real time to major languages (both Whisper built-in translation as well as NMT models with CTranslate2)

- CUDA, OpenCL, Apple Arm64, AVX & SSE acceleration support

Roadmap:

- More robust built-in translation options

- Additional output options: .vtt, .ssa, .sub, etc.

- Speaker diarization (detecting speakers in a multi-person audio stream)

Check out our other plugins:

- Background Removal removes background from webcam without a green screen.

- Detect will detect and track >80 types of objects in real-time inside OBS

- 🚧 Experimental 🚧 CleanStream for real-time filler word (uh,um) and profanity removal from live audio stream

- URL/API Source that allows fetching live data from an API and displaying it in OBS.

- Polyglot translation AI plugin for real-time, local translation to hunderds of languages

Check out the latest releases for downloads and install instructions.

The plugin ships with the Tiny.en model, and will autonomoously download other bigger Whisper models through a dropdown. However there's an option to select an external model file if you have it on disk.

Get more models from https://ggml.ggerganov.com/ and follow the instructions on whisper.cpp to create your own models or download others such as distilled models.

The plugin was built and tested on Mac OSX (Intel & Apple silicon), Windows (with and without Nvidia CUDA) and Linux.

Start by cloning this repo to a directory of your choice.

Using the CI pipeline scripts, locally you would just call the zsh script, which builds for the architecture specified in $MACOS_ARCH (either x86_64 or arm64).

$ MACOS_ARCH="x86_64" ./.github/scripts/build-macos -c ReleaseThe above script should succeed and the plugin files (e.g. obs-localvocal.plugin) will reside in the ./release/Release folder off of the root. Copy the .plugin file to the OBS directory e.g. ~/Library/Application Support/obs-studio/plugins.

To get .pkg installer file, run for example

$ ./.github/scripts/package-macos -c Release(Note that maybe the outputs will be in the Release folder and not the install folder like pakage-macos expects, so you will need to rename the folder from build_x86_64/Release to build_x86_64/install)

For successfully building on linux, first clone the repo, then from the repo directory:

$ sudo apt install -y libssl-dev

$ ./.github/scripts/build-linuxCopy the results to the standard OBS folders on Ubuntu

$ sudo cp -R release/RelWithDebInfo/lib/* /usr/lib/

$ sudo cp -R release/RelWithDebInfo/share/* /usr/share/Note: The official OBS plugins guide recommends adding plugins to the ~/.config/obs-studio/plugins folder. This has to do with the way you installed OBS.

In case the above doesn't work, attempt to copy the files to the ~/.config folder:

$ mkdir -p ~/.config/obs-studio/plugins/obs-localvocal/bin/64bit

$ cp -R release/RelWithDebInfo/lib/x86_64-linux-gnu/obs-plugins/* ~/.config/obs-studio/plugins/obs-localvocal/bin/64bit/

$ mkdir -p ~/.config/obs-studio/plugins/obs-localvocal/data

$ cp -R release/RelWithDebInfo/share/obs/obs-plugins/obs-localvocal/* ~/.config/obs-studio/plugins/obs-localvocal/data/Use the CI scripts again, for example:

> .github/scripts/Build-Windows.ps1 -Configuration ReleaseThe build should exist in the ./release folder off the root. You can manually install the files in the OBS directory.

> Copy-Item -Recurse -Force "release\Release\*" -Destination "C:\Program Files\obs-studio\"LocalVocal will now build with CUDA support automatically through a prebuilt binary of Whisper.cpp from https://github.com/occ-ai/occ-ai-dep-whispercpp. The CMake scripts will download all necessary files.

To build with cuda add CPU_OR_CUDA as an environment variable (with cpu, 12.2.0 or 11.8.0) and build regularly

> $env:CPU_OR_CUDA="12.2.0"

> .github/scripts/Build-Windows.ps1 -Configuration ReleaseFor Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for obs-localvocal

Similar Open Source Tools

obs-localvocal

LocalVocal is a live-streaming AI assistant plugin for OBS that allows you to transcribe audio speech into text and perform various language processing functions on the text using AI / LLMs (Large Language Models). It's privacy-first, with all data staying on your machine, and requires no GPU, cloud costs, network, or downtime.

obs-localvocal

LocalVocal is a Speech AI assistant OBS Plugin that enables users to transcribe speech into text and translate it into any language locally on their machine. The plugin runs OpenAI's Whisper for real-time speech processing and prediction. It supports features like transcribing audio in real-time, displaying captions on screen, sending captions to files, syncing captions with recordings, and translating captions to major languages. Users can bring their own Whisper model, filter or replace captions, and experience partial transcriptions for streaming. The plugin is privacy-focused, requiring no GPU, cloud costs, network, or downtime.

elyra

Elyra is a set of AI-centric extensions to JupyterLab Notebooks that includes features like Visual Pipeline Editor, running notebooks/scripts as batch jobs, reusable code snippets, hybrid runtime support, script editors with execution capabilities, debugger, version control using Git, and more. It provides a comprehensive environment for data scientists and AI practitioners to develop, test, and deploy machine learning models and workflows efficiently.

snipkit

SnipKit is a CLI tool designed to manage snippets efficiently, allowing users to execute saved scripts or generate new ones with the help of AI directly from the terminal. It supports loading snippets from various sources, parameter substitution, different parameter types, themes, and customization options. The tool includes an interactive chat-style interface called SnipKit Assistant for generating parameterized scripts. Users can also work with different AI providers like OpenAI, Anthropic, Google Gemini, and more. SnipKit aims to streamline script execution and script generation workflows for developers and users who frequently work with code snippets.

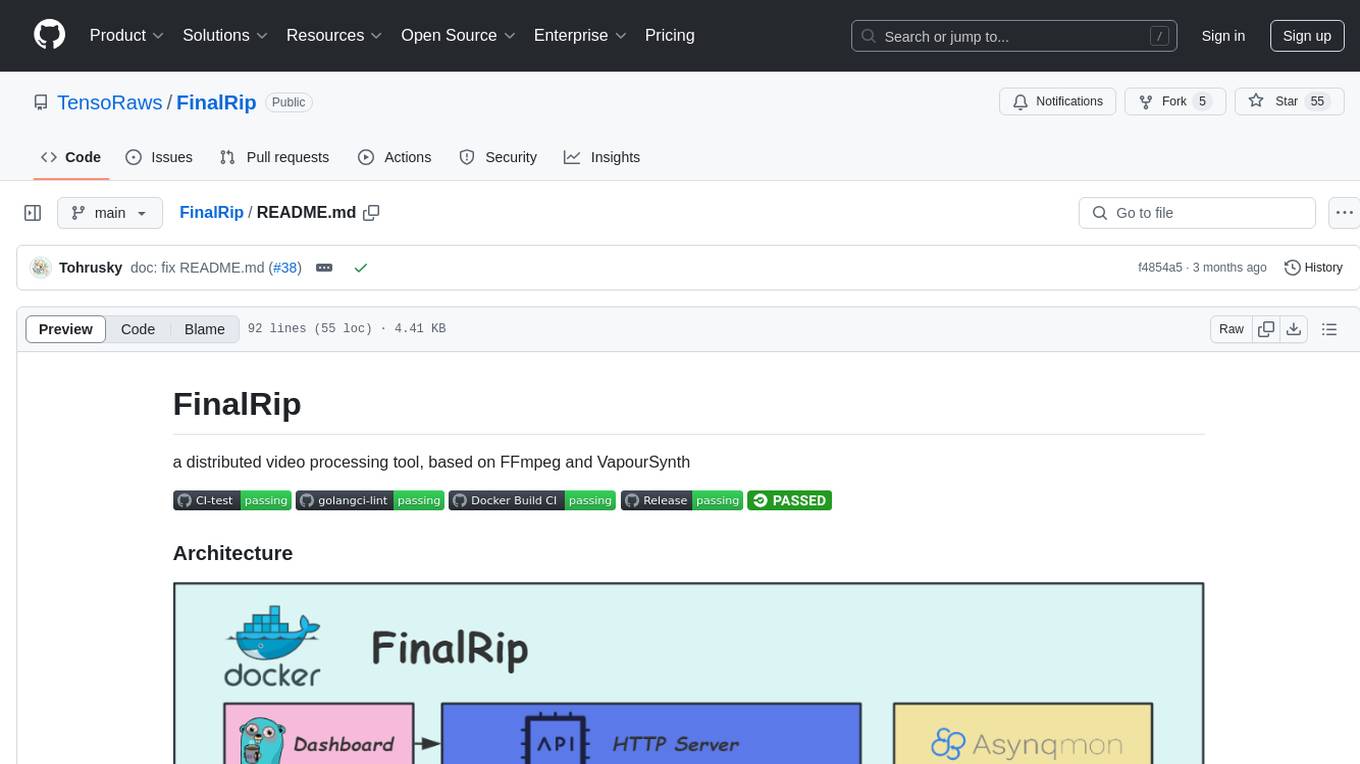

FinalRip

FinalRip is a distributed video processing tool based on FFmpeg and VapourSynth. It cuts the original video into multiple clips, processes each clip in parallel, and merges them into the final video. Users can deploy the system in a distributed way, configure settings via environment variables or remote config files, and develop/test scripts in the vs-playground environment. It supports Nvidia GPU, AMD GPU with ROCm support, and provides a dashboard for selecting compatible scripts to process videos.

ai-cli-lib

The ai-cli-lib is a library designed to enhance interactive command-line editing programs by integrating with GPT large language model servers. It allows users to obtain AI help from servers like Anthropic's or OpenAI's, or a llama.cpp server. The library acts as a command line copilot, providing natural language prompts and responses to enhance user experience and productivity. It supports various platforms such as Debian GNU/Linux, macOS, and Cygwin, and requires specific packages for installation and operation. Users can configure the library to activate during shell startup and interact with command-line programs like bash, mysql, psql, gdb, sqlite3, and bc. Additionally, the library provides options for configuring API keys, setting up llama.cpp servers, and ensuring data privacy by managing context settings.

vim-ollama

The 'vim-ollama' plugin for Vim adds Copilot-like code completion support using Ollama as a backend, enabling intelligent AI-based code completion and integrated chat support for code reviews. It does not rely on cloud services, preserving user privacy. The plugin communicates with Ollama via Python scripts for code completion and interactive chat, supporting Vim only. Users can configure LLM models for code completion tasks and interactive conversations, with detailed installation and usage instructions provided in the README.

bit

Bit is a build system that organizes source code into composable components, enabling the creation of reliable, scalable, and consistent applications. It supports the creation of reusable UI components, standard building blocks, shell applications, and atomic deployments. Bit is compatible with various tools in the JavaScript ecosystem and offers official dev environments for popular frameworks. It can be used in different codebase structures like monorepos or polyrepos, and even without repositories. Users can install Bit, create shell applications, compose components, release and deploy components, and modernize existing projects using Bit Cloud or self-hosted scopes.

SciPIP

SciPIP is a scientific paper idea generation tool powered by a large language model (LLM) designed to assist researchers in quickly generating novel research ideas. It conducts a literature review based on user-provided background information and generates fresh ideas for potential studies. The tool is designed to help researchers in various fields by providing a GUI environment for idea generation, supporting NLP, multimodal, and CV fields, and allowing users to interact with the tool through a web app or terminal. SciPIP uses Neo4j as its database and provides functionalities for generating new ideas, fetching papers, and constructing the database.

air-light

Air-light is a minimalist WordPress starter theme designed to be an ultra minimal starting point for a WordPress project. It is built to be very straightforward, backwards compatible, front-end developer friendly and modular by its structure. Air-light is free of weird "app-like" folder structures or odd syntaxes that nobody else uses. It loves WordPress as it was and as it is.

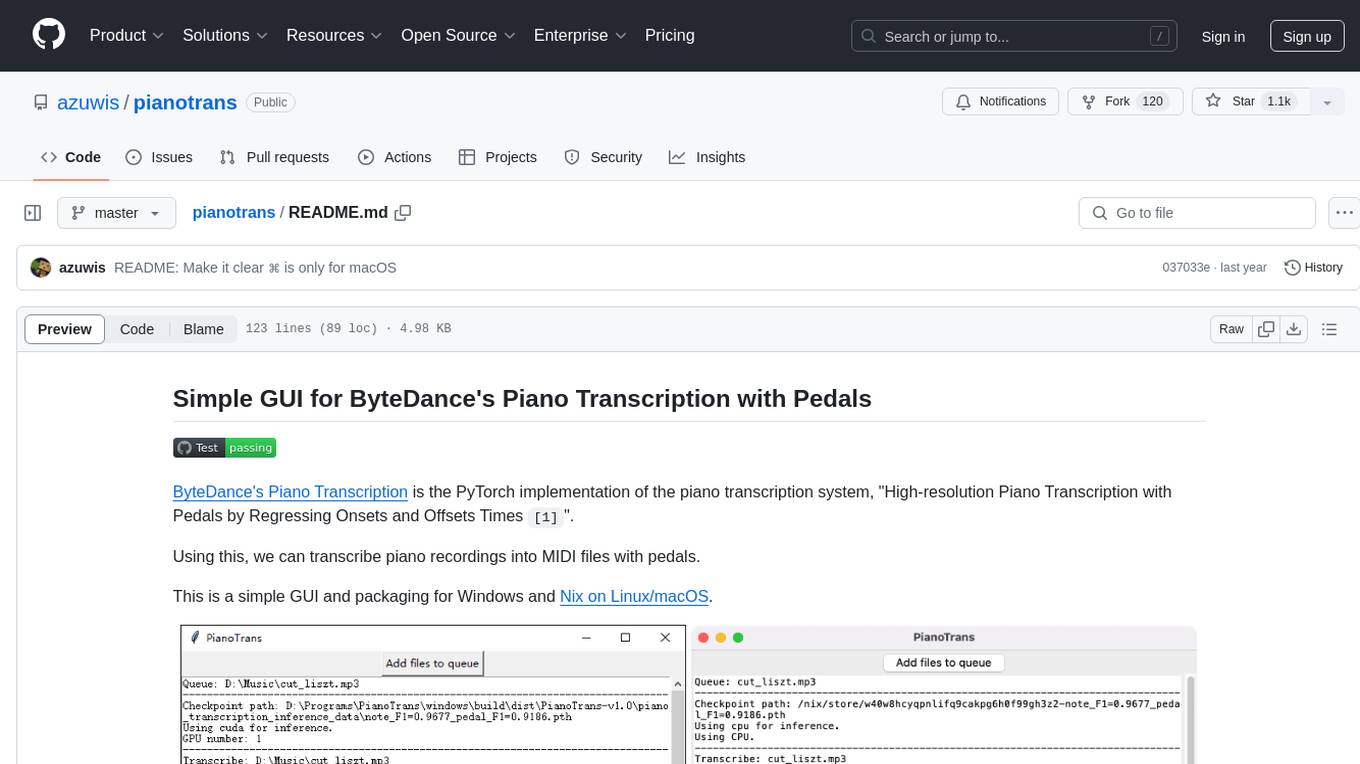

pianotrans

ByteDance's Piano Transcription is a PyTorch implementation for transcribing piano recordings into MIDI files with pedals. This repository provides a simple GUI and packaging for Windows and Nix on Linux/macOS. It supports using GPU for inference and includes CLI usage. Users can upgrade the tool and report issues to the upstream project. The tool focuses on providing MIDI files, and any other improvements to transcription results should be directed to the original project.

tgpt

tgpt is a cross-platform command-line interface (CLI) tool that allows users to interact with AI chatbots in the Terminal without needing API keys. It supports various AI providers such as KoboldAI, Phind, Llama2, Blackbox AI, and OpenAI. Users can generate text, code, and images using different flags and options. The tool can be installed on GNU/Linux, MacOS, FreeBSD, and Windows systems. It also supports proxy configurations and provides options for updating and uninstalling the tool.

stable-diffusion-discord-bot

A discord bot built to interface with the InvokeAI fork of stable-diffusion. It is a work in progress for a major rewrite of the arty project, compatible with `invokeai 5.1.1`. The bot supports various functionalities like building node graphs from job requests, refreshing renders using png metadata, removing backgrounds, job progress tracking, and LLM integration. Users can install custom invokeai nodes for advanced functionality and launch the bot natively or with docker. Patches and pull requests are welcomed.

anyquery

Anyquery is a SQL query engine built on SQLite that allows users to run SQL queries on various data sources like files, databases, and apps. It can connect to LLMs to access data and act as a MySQL server for running queries. The tool is extensible through plugins and supports various installation methods like Homebrew, APT, YUM/DNF, Scoop, Winget, and Chocolatey.

frontend

Nuclia frontend apps and libraries repository contains various frontend applications and libraries for the Nuclia platform. It includes components such as Dashboard, Widget, SDK, Sistema (design system), NucliaDB admin, CI/CD Deployment, and Maintenance page. The repository provides detailed instructions on installation, dependencies, and usage of these components for both Nuclia employees and external developers. It also covers deployment processes for different components and tools like ArgoCD for monitoring deployments and logs. The repository aims to facilitate the development, testing, and deployment of frontend applications within the Nuclia ecosystem.

aio-switch-updater

AIO-Switch-Updater is a Nintendo Switch homebrew app that allows users to download and update custom firmware, firmware files, cheat codes, and more. It supports Atmosphère, ReiNX, and SXOS on both unpatched and patched Switches. The app provides features like updating CFW with custom RCM payload, updating Hekate/payload, custom downloads, downloading firmwares and cheats, and various tools like rebooting to specific payload, changing color schemes, consulting cheat codes, and more. Users can contribute by submitting PRs and suggestions, and the app supports localization. It does not host or distribute any files and gives special thanks to contributors and supporters.

For similar tasks

obs-localvocal

LocalVocal is a live-streaming AI assistant plugin for OBS that allows you to transcribe audio speech into text and perform various language processing functions on the text using AI / LLMs (Large Language Models). It's privacy-first, with all data staying on your machine, and requires no GPU, cloud costs, network, or downtime.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

onnxruntime-genai

ONNX Runtime Generative AI is a library that provides the generative AI loop for ONNX models, including inference with ONNX Runtime, logits processing, search and sampling, and KV cache management. Users can call a high level `generate()` method, or run each iteration of the model in a loop. It supports greedy/beam search and TopP, TopK sampling to generate token sequences, has built in logits processing like repetition penalties, and allows for easy custom scoring.

khoj

Khoj is an open-source, personal AI assistant that extends your capabilities by creating always-available AI agents. You can share your notes and documents to extend your digital brain, and your AI agents have access to the internet, allowing you to incorporate real-time information. Khoj is accessible on Desktop, Emacs, Obsidian, Web, and Whatsapp, and you can share PDF, markdown, org-mode, notion files, and GitHub repositories. You'll get fast, accurate semantic search on top of your docs, and your agents can create deeply personal images and understand your speech. Khoj is self-hostable and always will be.

langchain_dart

LangChain.dart is a Dart port of the popular LangChain Python framework created by Harrison Chase. LangChain provides a set of ready-to-use components for working with language models and a standard interface for chaining them together to formulate more advanced use cases (e.g. chatbots, Q&A with RAG, agents, summarization, extraction, etc.). The components can be grouped into a few core modules: * **Model I/O:** LangChain offers a unified API for interacting with various LLM providers (e.g. OpenAI, Google, Mistral, Ollama, etc.), allowing developers to switch between them with ease. Additionally, it provides tools for managing model inputs (prompt templates and example selectors) and parsing the resulting model outputs (output parsers). * **Retrieval:** assists in loading user data (via document loaders), transforming it (with text splitters), extracting its meaning (using embedding models), storing (in vector stores) and retrieving it (through retrievers) so that it can be used to ground the model's responses (i.e. Retrieval-Augmented Generation or RAG). * **Agents:** "bots" that leverage LLMs to make informed decisions about which available tools (such as web search, calculators, database lookup, etc.) to use to accomplish the designated task. The different components can be composed together using the LangChain Expression Language (LCEL).

quivr

Quivr is a personal assistant powered by Generative AI, designed to be a second brain for users. It offers fast and efficient access to data, ensuring security and compatibility with various file formats. Quivr is open source and free to use, allowing users to share their brains publicly or keep them private. The marketplace feature enables users to share and utilize brains created by others, boosting productivity. Quivr's offline mode provides anytime, anywhere access to data. Key features include speed, security, OS compatibility, file compatibility, open source nature, public/private sharing options, a marketplace, and offline mode.

react-native-vercel-ai

Run Vercel AI package on React Native, Expo, Web and Universal apps. Currently React Native fetch API does not support streaming which is used as a default on Vercel AI. This package enables you to use AI library on React Native but the best usage is when used on Expo universal native apps. On mobile you get back responses without streaming with the same API of `useChat` and `useCompletion` and on web it will fallback to `ai/react`

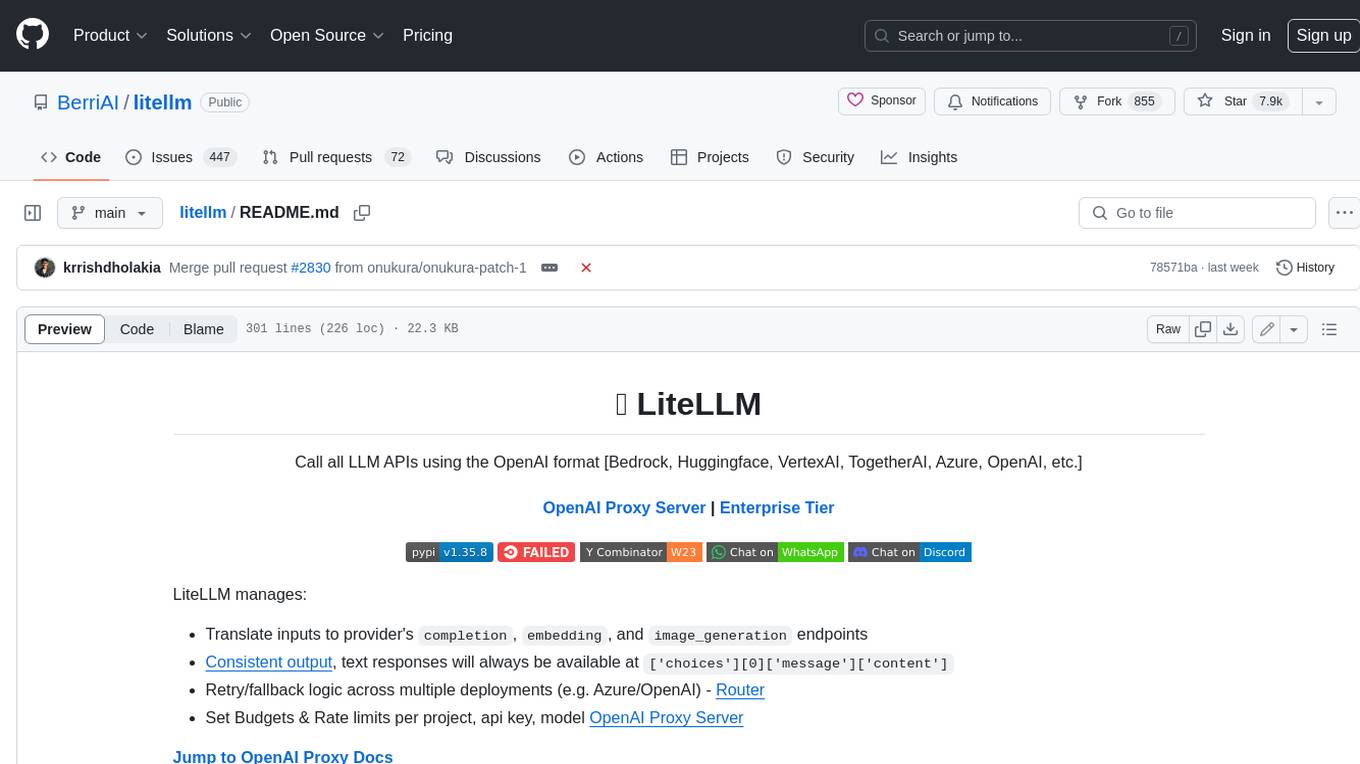

litellm

LiteLLM is a tool that allows you to call all LLM APIs using the OpenAI format. This includes Bedrock, Huggingface, VertexAI, TogetherAI, Azure, OpenAI, and more. LiteLLM manages translating inputs to provider's `completion`, `embedding`, and `image_generation` endpoints, providing consistent output, and retry/fallback logic across multiple deployments. It also supports setting budgets and rate limits per project, api key, and model.

For similar jobs

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

daily-poetry-image

Daily Chinese ancient poetry and AI-generated images powered by Bing DALL-E-3. GitHub Action triggers the process automatically. Poetry is provided by Today's Poem API. The website is built with Astro.

exif-photo-blog

EXIF Photo Blog is a full-stack photo blog application built with Next.js, Vercel, and Postgres. It features built-in authentication, photo upload with EXIF extraction, photo organization by tag, infinite scroll, light/dark mode, automatic OG image generation, a CMD-K menu with photo search, experimental support for AI-generated descriptions, and support for Fujifilm simulations. The application is easy to deploy to Vercel with just a few clicks and can be customized with a variety of environment variables.

SillyTavern

SillyTavern is a user interface you can install on your computer (and Android phones) that allows you to interact with text generation AIs and chat/roleplay with characters you or the community create. SillyTavern is a fork of TavernAI 1.2.8 which is under more active development and has added many major features. At this point, they can be thought of as completely independent programs.

Twitter-Insight-LLM

This project enables you to fetch liked tweets from Twitter (using Selenium), save it to JSON and Excel files, and perform initial data analysis and image captions. This is part of the initial steps for a larger personal project involving Large Language Models (LLMs).

AISuperDomain

Aila Desktop Application is a powerful tool that integrates multiple leading AI models into a single desktop application. It allows users to interact with various AI models simultaneously, providing diverse responses and insights to their inquiries. With its user-friendly interface and customizable features, Aila empowers users to engage with AI seamlessly and efficiently. Whether you're a researcher, student, or professional, Aila can enhance your AI interactions and streamline your workflow.

ChatGPT-On-CS

This project is an intelligent dialogue customer service tool based on a large model, which supports access to platforms such as WeChat, Qianniu, Bilibili, Douyin Enterprise, Douyin, Doudian, Weibo chat, Xiaohongshu professional account operation, Xiaohongshu, Zhihu, etc. You can choose GPT3.5/GPT4.0/ Lazy Treasure Box (more platforms will be supported in the future), which can process text, voice and pictures, and access external resources such as operating systems and the Internet through plug-ins, and support enterprise AI applications customized based on their own knowledge base.

obs-localvocal

LocalVocal is a live-streaming AI assistant plugin for OBS that allows you to transcribe audio speech into text and perform various language processing functions on the text using AI / LLMs (Large Language Models). It's privacy-first, with all data staying on your machine, and requires no GPU, cloud costs, network, or downtime.