ai-cli-lib

Add AI capabilities to any readline-enabled command-line program

Stars: 109

The ai-cli-lib is a library designed to enhance interactive command-line editing programs by integrating with GPT large language model servers. It allows users to obtain AI help from servers like Anthropic's or OpenAI's, or a llama.cpp server. The library acts as a command line copilot, providing natural language prompts and responses to enhance user experience and productivity. It supports various platforms such as Debian GNU/Linux, macOS, and Cygwin, and requires specific packages for installation and operation. Users can configure the library to activate during shell startup and interact with command-line programs like bash, mysql, psql, gdb, sqlite3, and bc. Additionally, the library provides options for configuring API keys, setting up llama.cpp servers, and ensuring data privacy by managing context settings.

README:

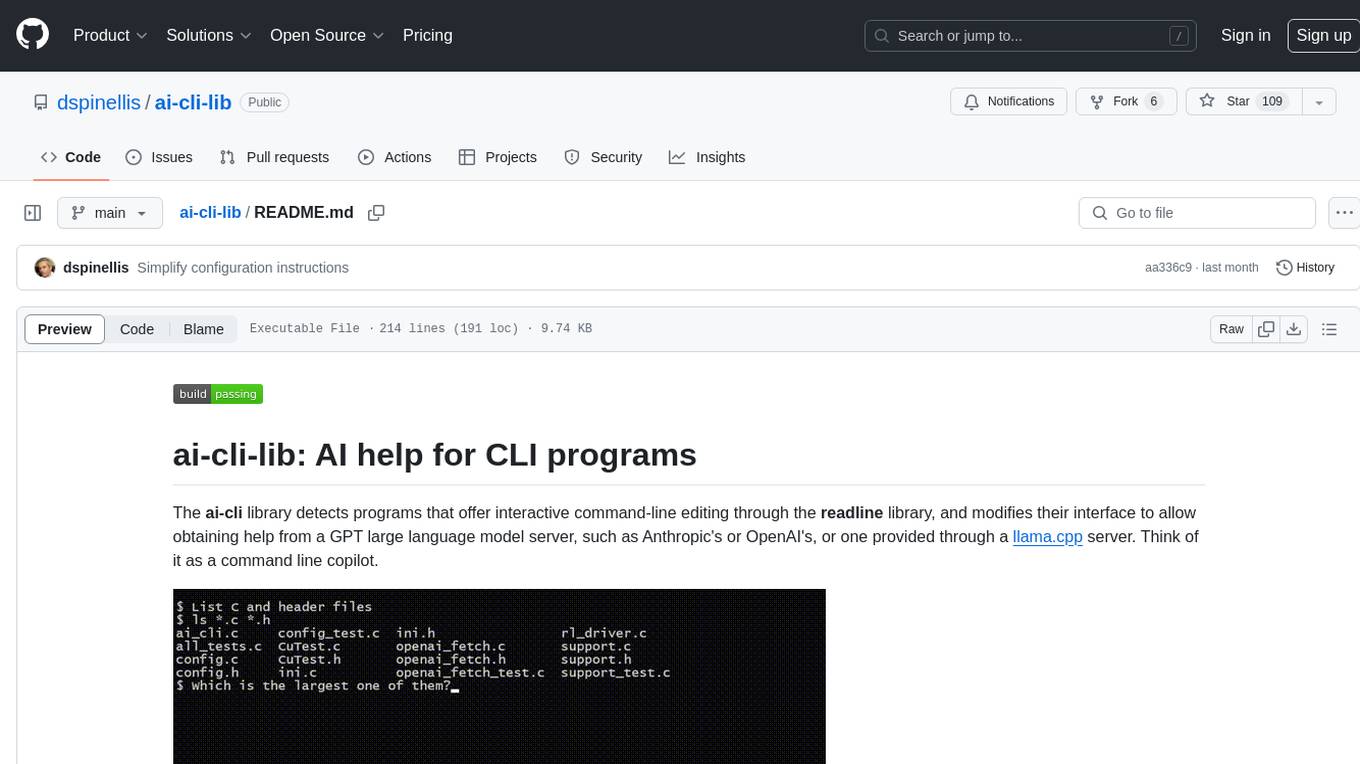

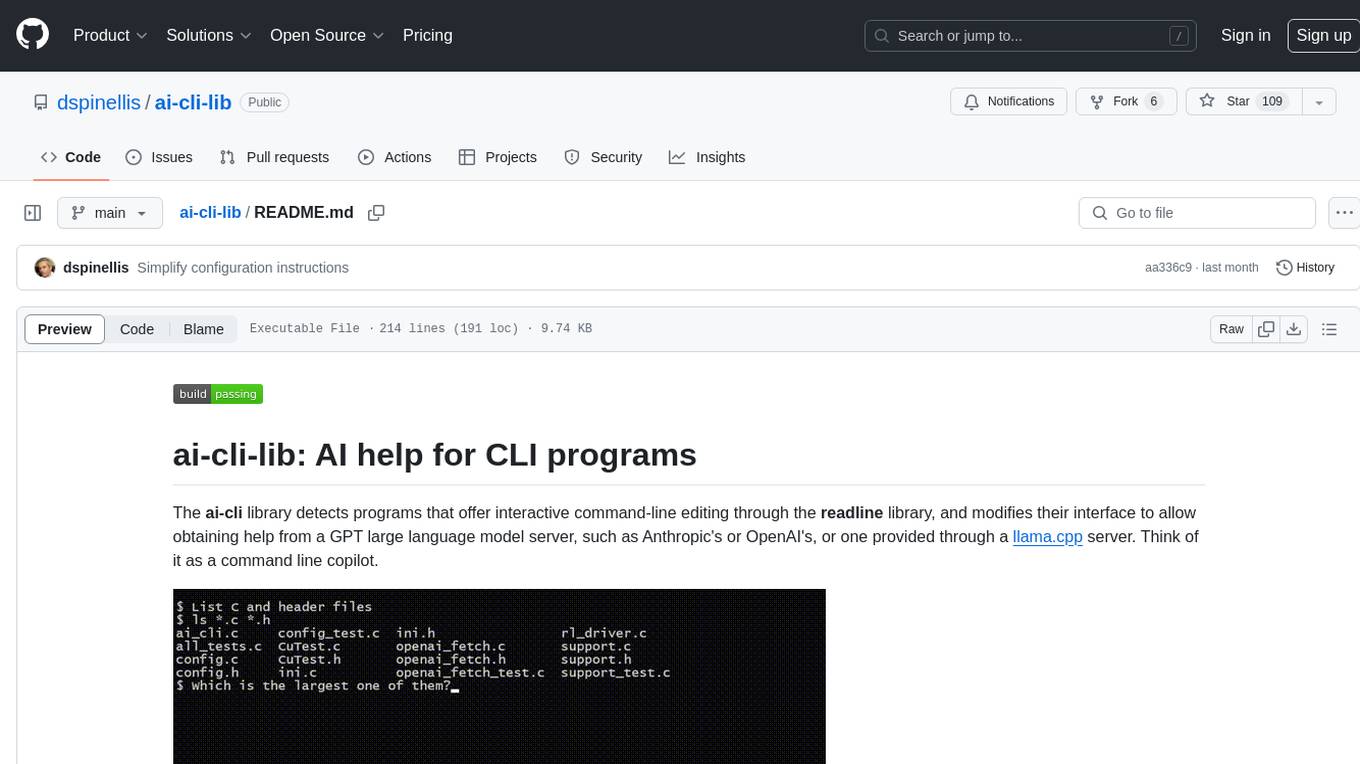

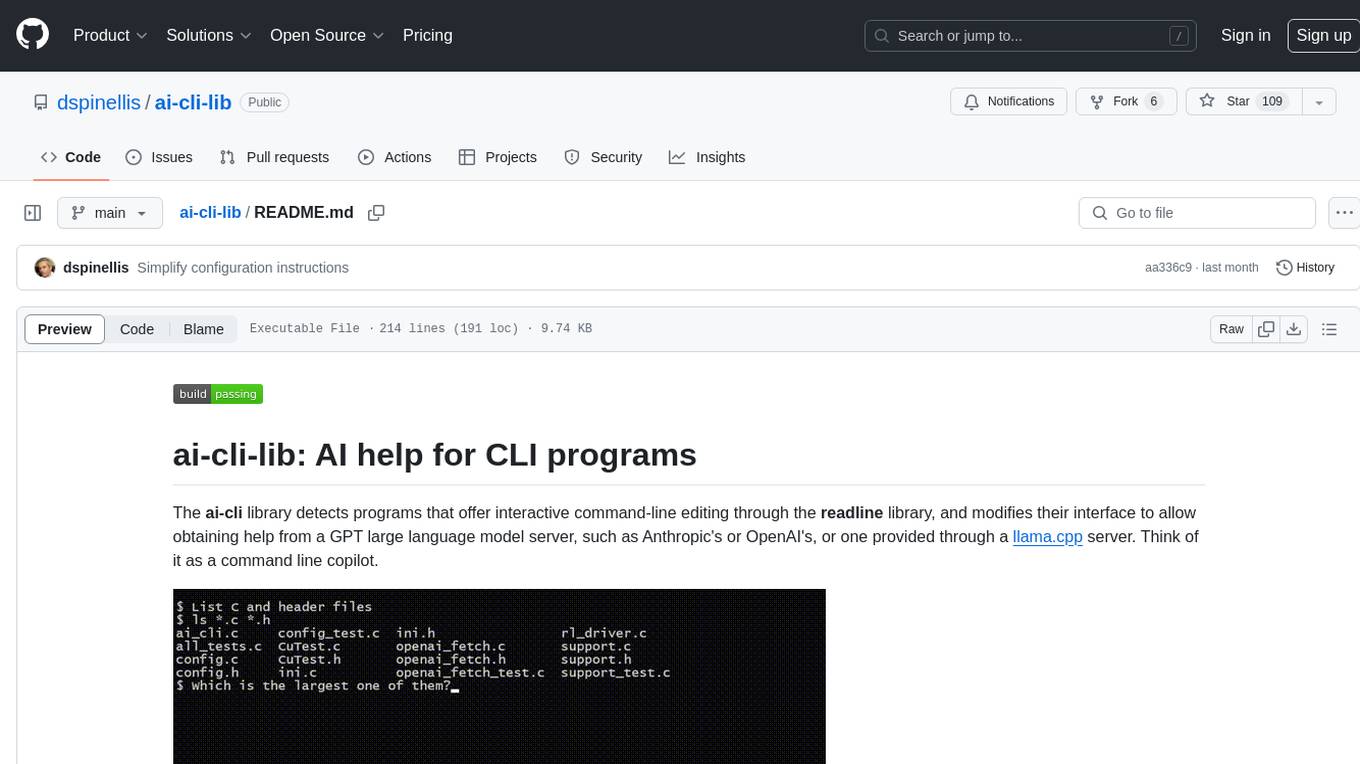

The ai-cli library detects programs that offer interactive command-line editing through the readline library, and modifies their interface to allow obtaining help from a GPT large language model server, such as Anthropic's or OpenAI's, or one provided through a llama.cpp server. Think of it as a command line copilot.

The ai-cli

library has been built and tested under the Debian GNU/Linux (bullseye)

distribution

(natively under version 11 and the x86_64 and armv7l architectures

and under Windows Subsystem for Linux version 2),

under macOS (Ventura 13.4) on the arm64 architecture

using Homebrew packages and executables linked against GNU Readline

(not the macOS-supplied editline compatibility layer),

and under Cygwin (3.4.7).

On Linux,

in addition to make, a C compiler, and the GNU C library,

the following packages are required:

libcurl4-openssl-dev

libjansson-dev

libreadline-dev.

On macOS, in addition to an Xcode installation, the following Homebrew

packages are required:

jansson

readline.

On Cygwin in addition to make, a C compiler, and the GNU C library,

the following packages are required:

libcurl-devel,

libjansson-devel,

libreadline-devel.

Package names may be different on other systems.

cd src

makecd src

make unit-testcd src

make e2e-testThis will provide you a simple read-print loop where you can test the ai-cli library's capability to link with the Readline API of third party programs.

cd src

# Global installation for all users

sudo make install

# Local installation for the user executing the command

make install PREFIX=~- Configure the ai-cli library to be activated when your bash

shell starts up by adding the following lines in your

.bashrcfile (ideally near its beginning for performance reasons). Adjust the provided path match the ai-cli library installation path; it is currently set for a local installation in your home directory.# Initialize the ai-cli library source $HOME/share/ai-cli/ai-cli-activate-bash.sh

- Alternatively, implement one of the following system-specific configurations.

- Under Linux and Cygwin set the

LD_PRELOADenvironment variable to load the library using its full path. For example, under bash runexport LD_PRELOAD=/usr/local/lib/ai_cli.so(global installation) orexport LD_PRELOAD=/home/myname/lib/ai_cli.so(local installation). - Under macOS set the

DYLD_INSERT_LIBRARIESenvironment variable to load the library using its full path. For example, under bash runexport DYLD_INSERT_LIBRARIES=/Users/myname/lib/ai_cli.dylib. Also set theDYLD_LIBRARY_PATHenvironment variable to include the Homebrew library directory, e.g.export DYLD_LIBRARY_PATH=/opt/homebrew/lib:$DYLD_LIBRARY_PATH.

- Under Linux and Cygwin set the

- Perform one of the following.

- Obtain your

Anthropic API key

or

OpenAI API key

and configure it in the

.aicliconfigfile in your home directory. This is done with akey={key}entry in the file's[anthropic]or[openai]section. In addition, addapi=anthropicorapi=openaiin the file's[general]section. See the file ai-cli-config to understand how configuration files are structured. Anthropic currently provides free trial credits to new users. Note that OpenAI API access requires a different (usage-based) subscription from the ChatGPT one. - Configure a llama.cpp server

and list its

endpoint(e.g.endpoint=http://localhost:8080/completionin the configuration file's[llamacpp]section. In addition, addapi=llamacppin the file's[general]section. In brief running a llama.cpp server involves- compiling llama.cpp (ideally with GPU support),

- downloading, converting, and quantizing suitable model files (use files with more than 7 billion parameters only on GPUs with sufficient memory to hold them),

- Running the server with a command such as

server -m models/llama-2-13b-chat/ggml-model-q4_0.gguf -c 2048 --n-gpu-layers 100.

- Obtain your

Anthropic API key

or

OpenAI API key

and configure it in the

- Run the interactive command-line programs, such as bash, mysql, psql, gdb, sqlite3, bc, as you normally would.

- If the program you want to prompt in natural language isn't linked

with the GNU Readline library, you can still make it work with Readline,

by invoking it through rlwrap.

This however looses the program-specific context provision, because

the program's name appears to The ai-cli library as

rlwrap. - To obtain AI help, enter a natural language prompt and press

^X-a(Ctrl-X followed by a) in the (default) Emacs key binding mode orVif you have configured vi key bindings. - Keep in mind that by default ai-cli-lib is sending previously entered

commands as context to the model engine you are using.

This may leak secrets that you enter, for example by setting an environment

variable to contain a key or by configuring a database password.

To avoid this problem configure the

contextsetting to zero, or use the command-line program's offered method to avoid storing an entered line. For instance, in bash you can do this by starting the line with a space character.

Note that macOS ships with the editline line-editing library,

which is currently not compatible with the ai-cli library

(it has been designed to tap onto GNU Readline).

However, Homebrew tools link with GNU Readline, so they can be used

with the ai-cli library.

To find out whether a tool you're using links with GNU Readline (libreadline)

or with editline (libedit),

use the which command to determine the command's full

path, and then the otool command to see the libraries it is linked with.

In the example below,

/usr/bin/sqlite3 isn't linked with GNU Readline,

but /opt/homebrew/opt/sqlite/bin/sqlite3 is linked with editline.

$ which sqlite3

/usr/bin/sqlite3

$ otool -L /usr/bin/sqlite3

/usr/bin/sqlite3:

/usr/lib/libncurses.5.4.dylib (compatibility version 5.4.0, current version 5.4.0)

/usr/lib/libedit.3.dylib (compatibility version 2.0.0, current version 3.0.0)

/usr/lib/libSystem.B.dylib (compatibility version 1.0.0, current version 1319.100.3)

$ otool -L /opt/homebrew/opt/sqlite/bin/sqlite3

/opt/homebrew/opt/sqlite/bin/sqlite3:

/opt/homebrew/opt/readline/lib/libreadline.8.dylib (compatibility version 8.2.0, current version 8.2.0)

/usr/lib/libncurses.5.4.dylib (compatibility version 5.4.0, current version 5.4.0)

/usr/lib/libz.1.dylib (compatibility version 1.0.0, current version 1.2.11)

/usr/lib/libSystem.B.dylib (compatibility version 1.0.0, current version 1319.100.3)

Consequently, if you want to use the capabilities of the ai-cli library, configure your system to use the Homebrew commands in preference to the ones supplied with macOS.

The ai-cli reference documentation is provided as Unix manual pages.

Contributions are welcomed through GitHub pull requests. Before working on something substantial, open an issue to signify your interest and coordinate with others. Particular useful are:

- multi-shot prompts for systems not yet supported (see the ai-cli-config file),

- support for other large language models (start from the openai_fetch.c file),

- support for other libraries (mainly editline),

- ports to other platforms and distributions.

- Diomidis Spinellis. Commands as AI Conversations. IEEE Software 40(6), November/December 2023. doi: 10.1109/MS.2023.3307170

- edX open online course on Unix tools for data, software, and production engineering

- Agarwal, Mayank et al. NeurIPS 2020 NLC2CMD Competition: Translating Natural Language to Bash Commands. ArXiv abs/2103.02523 (2021): n. pag.

- celery-ai: similar idea, but without program-specific prompts; works by monitoring keyboard events through pynput.

- ChatGDB: a gdb-specific front-end.

- ai-cli: a (similarly named) command line interface to AI models.

- Warp AI: A terminal with integrated AI capabilities.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for ai-cli-lib

Similar Open Source Tools

ai-cli-lib

The ai-cli-lib is a library designed to enhance interactive command-line editing programs by integrating with GPT large language model servers. It allows users to obtain AI help from servers like Anthropic's or OpenAI's, or a llama.cpp server. The library acts as a command line copilot, providing natural language prompts and responses to enhance user experience and productivity. It supports various platforms such as Debian GNU/Linux, macOS, and Cygwin, and requires specific packages for installation and operation. Users can configure the library to activate during shell startup and interact with command-line programs like bash, mysql, psql, gdb, sqlite3, and bc. Additionally, the library provides options for configuring API keys, setting up llama.cpp servers, and ensuring data privacy by managing context settings.

alcless

Alcoholless is a lightweight security sandbox for macOS programs, originally designed for securing Homebrew but can be used for any CLI programs. It allows AI agents to run shell commands with reduced risk of breaking the host OS. The tool creates a separate environment for executing commands, syncing changes back to the host directory upon command exit. It uses utilities like sudo, su, pam_launchd, and rsync, with potential future integration of FSKit for file syncing. The tool also generates a sudo configuration for user-specific sandbox access, enabling users to run commands as the sandbox user without a password.

browser

Lightpanda Browser is an open-source headless browser designed for fast web automation, AI agents, LLM training, scraping, and testing. It features ultra-low memory footprint, exceptionally fast execution, and compatibility with Playwright and Puppeteer through CDP. Built for performance, Lightpanda offers Javascript execution, support for Web APIs, and is optimized for minimal memory usage. It is a modern solution for web scraping and automation tasks, providing a lightweight alternative to traditional browsers like Chrome.

tgpt

tgpt is a cross-platform command-line interface (CLI) tool that allows users to interact with AI chatbots in the Terminal without needing API keys. It supports various AI providers such as KoboldAI, Phind, Llama2, Blackbox AI, and OpenAI. Users can generate text, code, and images using different flags and options. The tool can be installed on GNU/Linux, MacOS, FreeBSD, and Windows systems. It also supports proxy configurations and provides options for updating and uninstalling the tool.

backend.ai-webui

Backend.AI Web UI is a user-friendly web and app interface designed to make AI accessible for end-users, DevOps, and SysAdmins. It provides features for session management, inference service management, pipeline management, storage management, node management, statistics, configurations, license checking, plugins, help & manuals, kernel management, user management, keypair management, manager settings, proxy mode support, service information, and integration with the Backend.AI Web Server. The tool supports various devices, offers a built-in websocket proxy feature, and allows for versatile usage across different platforms. Users can easily manage resources, run environment-supported apps, access a web-based terminal, use Visual Studio Code editor, manage experiments, set up autoscaling, manage pipelines, handle storage, monitor nodes, view statistics, configure settings, and more.

elyra

Elyra is a set of AI-centric extensions to JupyterLab Notebooks that includes features like Visual Pipeline Editor, running notebooks/scripts as batch jobs, reusable code snippets, hybrid runtime support, script editors with execution capabilities, debugger, version control using Git, and more. It provides a comprehensive environment for data scientists and AI practitioners to develop, test, and deploy machine learning models and workflows efficiently.

AutoDocs

AutoDocs by Sita is a tool designed to automate documentation for any repository. It parses the repository using tree-sitter and SCIP, constructs a code dependency graph, and generates repository-wide, dependency-aware documentation and summaries. It provides a FastAPI backend for ingestion/search and a Next.js web UI for chat and exploration. Additionally, it includes an MCP server for deep search capabilities. The tool aims to simplify the process of generating accurate and high-signal documentation for codebases.

vim-ollama

The 'vim-ollama' plugin for Vim adds Copilot-like code completion support using Ollama as a backend, enabling intelligent AI-based code completion and integrated chat support for code reviews. It does not rely on cloud services, preserving user privacy. The plugin communicates with Ollama via Python scripts for code completion and interactive chat, supporting Vim only. Users can configure LLM models for code completion tasks and interactive conversations, with detailed installation and usage instructions provided in the README.

ai-containers

This repository contains Dockerfiles, scripts, yaml files, Helm charts, etc. used to scale out AI containers with versions of TensorFlow and PyTorch optimized for Intel platforms. Scaling is done with python, Docker, kubernetes, kubeflow, cnvrg.io, Helm, and other container orchestration frameworks for use in the cloud and on-premise.

frontend

Nuclia frontend apps and libraries repository contains various frontend applications and libraries for the Nuclia platform. It includes components such as Dashboard, Widget, SDK, Sistema (design system), NucliaDB admin, CI/CD Deployment, and Maintenance page. The repository provides detailed instructions on installation, dependencies, and usage of these components for both Nuclia employees and external developers. It also covers deployment processes for different components and tools like ArgoCD for monitoring deployments and logs. The repository aims to facilitate the development, testing, and deployment of frontend applications within the Nuclia ecosystem.

ai-context

AI Context is a CLI tool that generates AI-friendly markdown files from GitHub repos, local code, YouTube videos, or webpages. It supports processing local directories, GitHub repositories, YouTube transcripts, and webpages, converting them to markdown format. The tool simplifies interactions with LLMs like ChatGPT and Claude by providing a text-first context creation approach. It offers features for installation, usage, and acknowledgments, with options to process single paths, URLs, or lists of paths concurrently.

obs-localvocal

LocalVocal is a live-streaming AI assistant plugin for OBS that allows you to transcribe audio speech into text and perform various language processing functions on the text using AI / LLMs (Large Language Models). It's privacy-first, with all data staying on your machine, and requires no GPU, cloud costs, network, or downtime.

NeoGPT

NeoGPT is an AI assistant that transforms your local workspace into a powerhouse of productivity from your CLI. With features like code interpretation, multi-RAG support, vision models, and LLM integration, NeoGPT redefines how you work and create. It supports executing code seamlessly, multiple RAG techniques, vision models, and interacting with various language models. Users can run the CLI to start using NeoGPT and access features like Code Interpreter, building vector database, running Streamlit UI, and changing LLM models. The tool also offers magic commands for chat sessions, such as resetting chat history, saving conversations, exporting settings, and more. Join the NeoGPT community to experience a new era of efficiency and contribute to its evolution.

desktop

ComfyUI Desktop is a packaged desktop application that allows users to easily use ComfyUI with bundled features like ComfyUI source code, ComfyUI-Manager, and uv. It automatically installs necessary Python dependencies and updates with stable releases. The app comes with Electron, Chromium binaries, and node modules. Users can store ComfyUI files in a specified location and manage model paths. The tool requires Python 3.12+ and Visual Studio with Desktop C++ workload for Windows. It uses nvm to manage node versions and yarn as the package manager. Users can install ComfyUI and dependencies using comfy-cli, download uv, and build/launch the code. Troubleshooting steps include rebuilding modules and installing missing libraries. The tool supports debugging in VSCode and provides utility scripts for cleanup. Crash reports can be sent to help debug issues, but no personal data is included.

gitingest

GitIngest is a tool that allows users to turn any Git repository into a prompt-friendly text ingest for LLMs. It provides easy code context by generating a text digest from a git repository URL or directory. The tool offers smart formatting for optimized output format for LLM prompts and provides statistics about file and directory structure, size of the extract, and token count. GitIngest can be used as a CLI tool on Linux and as a Python package for code integration. The tool is built using Tailwind CSS for frontend, FastAPI for backend framework, tiktoken for token estimation, and apianalytics.dev for simple analytics. Users can self-host GitIngest by building the Docker image and running the container. Contributions to the project are welcome, and the tool aims to be beginner-friendly for first-time contributors with a simple Python and HTML codebase.

xlang

XLang™ is a cutting-edge language designed for AI and IoT applications, offering exceptional dynamic and high-performance capabilities. It excels in distributed computing and seamless integration with popular languages like C++, Python, and JavaScript. Notably efficient, running 3 to 5 times faster than Python in AI and deep learning contexts. Features optimized tensor computing architecture for constructing neural networks through tensor expressions. Automates tensor data flow graph generation and compilation for specific targets, enhancing GPU performance by 6 to 10 times in CUDA environments.

For similar tasks

ai-cli-lib

The ai-cli-lib is a library designed to enhance interactive command-line editing programs by integrating with GPT large language model servers. It allows users to obtain AI help from servers like Anthropic's or OpenAI's, or a llama.cpp server. The library acts as a command line copilot, providing natural language prompts and responses to enhance user experience and productivity. It supports various platforms such as Debian GNU/Linux, macOS, and Cygwin, and requires specific packages for installation and operation. Users can configure the library to activate during shell startup and interact with command-line programs like bash, mysql, psql, gdb, sqlite3, and bc. Additionally, the library provides options for configuring API keys, setting up llama.cpp servers, and ensuring data privacy by managing context settings.

aicommit2

AICommit2 is a Reactive CLI tool that streamlines interactions with various AI providers such as OpenAI, Anthropic Claude, Gemini, Mistral AI, Cohere, and unofficial providers like Huggingface and Clova X. Users can request multiple AI simultaneously to generate git commit messages without waiting for all AI responses. The tool runs 'git diff' to grab code changes, sends them to configured AI, and returns the AI-generated commit message. Users can set API keys or Cookies for different providers and configure options like locale, generate number of messages, commit type, proxy, timeout, max-length, and more. AICommit2 can be used both locally with Ollama and remotely with supported providers, offering flexibility and efficiency in generating commit messages.

TrustEval-toolkit

TrustEval-toolkit is a dynamic and comprehensive framework for evaluating the trustworthiness of Generative Foundation Models (GenFMs) across dimensions such as safety, fairness, robustness, privacy, and more. It offers features like dynamic dataset generation, multi-model compatibility, customizable metrics, metadata-driven pipelines, comprehensive evaluation dimensions, optimized inference, and detailed reports.

prompt-optimizer

Prompt Optimizer is a powerful AI prompt optimization tool that helps you write better AI prompts, improving AI output quality. It supports both web application and Chrome extension usage. The tool features intelligent optimization for prompt words, real-time testing to compare before and after optimization, integration with multiple mainstream AI models, client-side processing for security, encrypted local storage for data privacy, responsive design for user experience, and more.

youtube_summarizer

YouTube AI Summarizer is a modern Next.js-based tool for AI-powered YouTube video summarization. It allows users to generate concise summaries of YouTube videos using various AI models, with support for multiple languages and summary styles. The application features flexible API key requirements, multilingual support, flexible summary modes, a smart history system, modern UI/UX design, and more. Users can easily input a YouTube URL, select language, summary type, and AI model, and generate summaries with real-time progress tracking. The tool offers a clean, well-structured summary view, history dashboard, and detailed history view for past summaries. It also provides configuration options for API keys and database setup, along with technical highlights, performance improvements, and a modern tech stack.

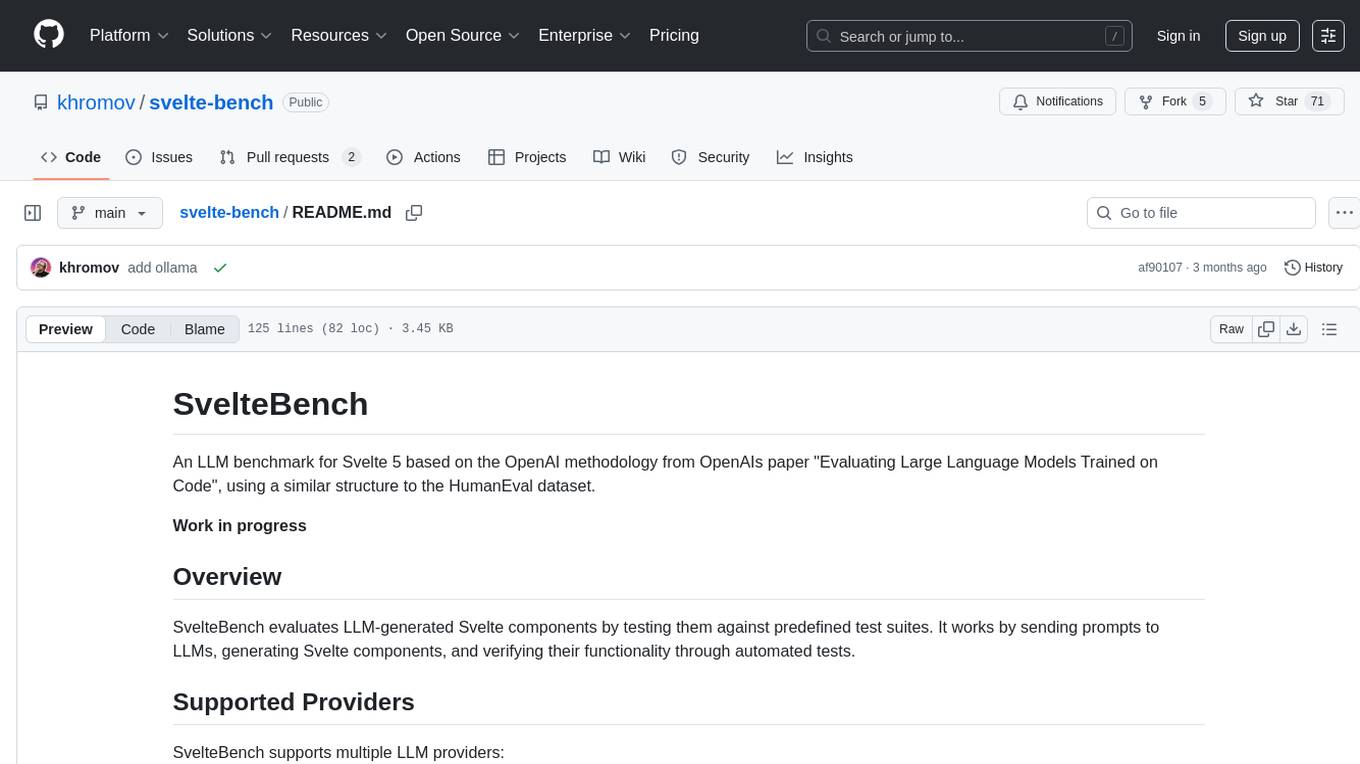

svelte-bench

SvelteBench is an LLM benchmark tool for evaluating Svelte components generated by large language models. It supports multiple LLM providers such as OpenAI, Anthropic, Google, and OpenRouter. Users can run predefined test suites to verify the functionality of the generated components. The tool allows configuration of API keys for different providers and offers debug mode for faster development. Users can provide a context file to improve component generation. Benchmark results are saved in JSON format for analysis and visualization.

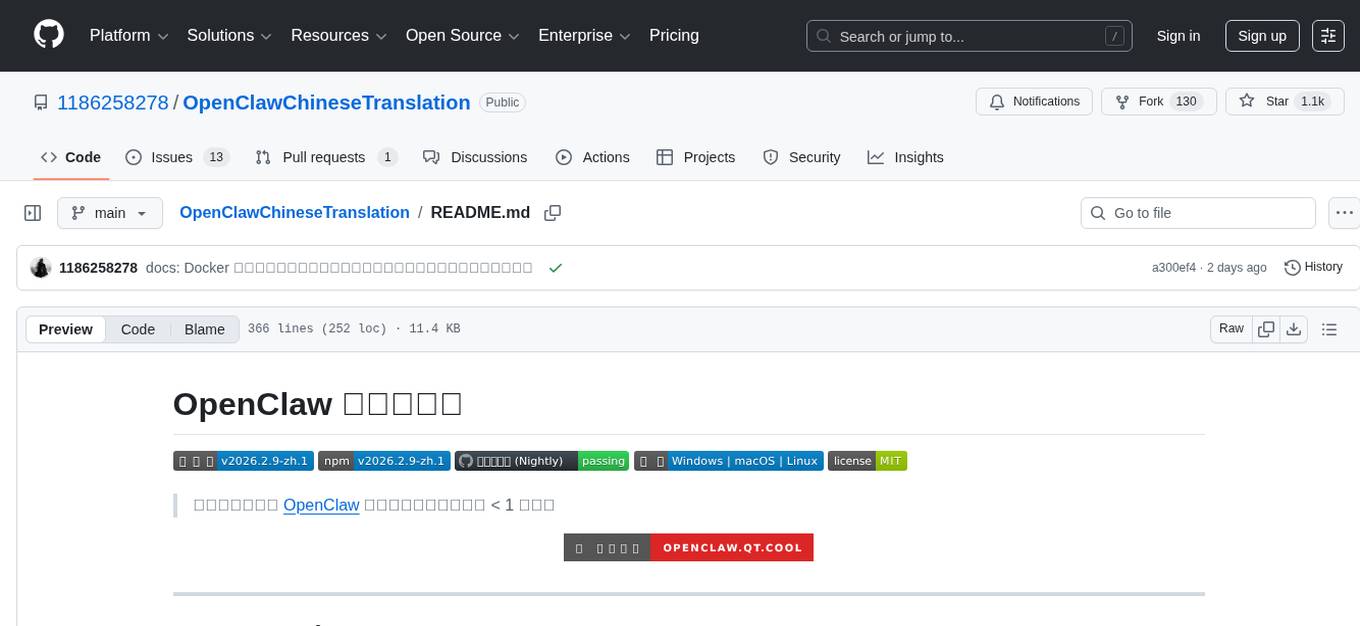

OpenClawChineseTranslation

OpenClaw Chinese Translation is a localization project that provides a fully Chinese interface for the OpenClaw open-source personal AI assistant platform. It allows users to interact with their AI assistant through chat applications like WhatsApp, Telegram, and Discord to manage daily tasks such as emails, calendars, and files. The project includes both CLI command-line and dashboard web interface fully translated into Chinese.

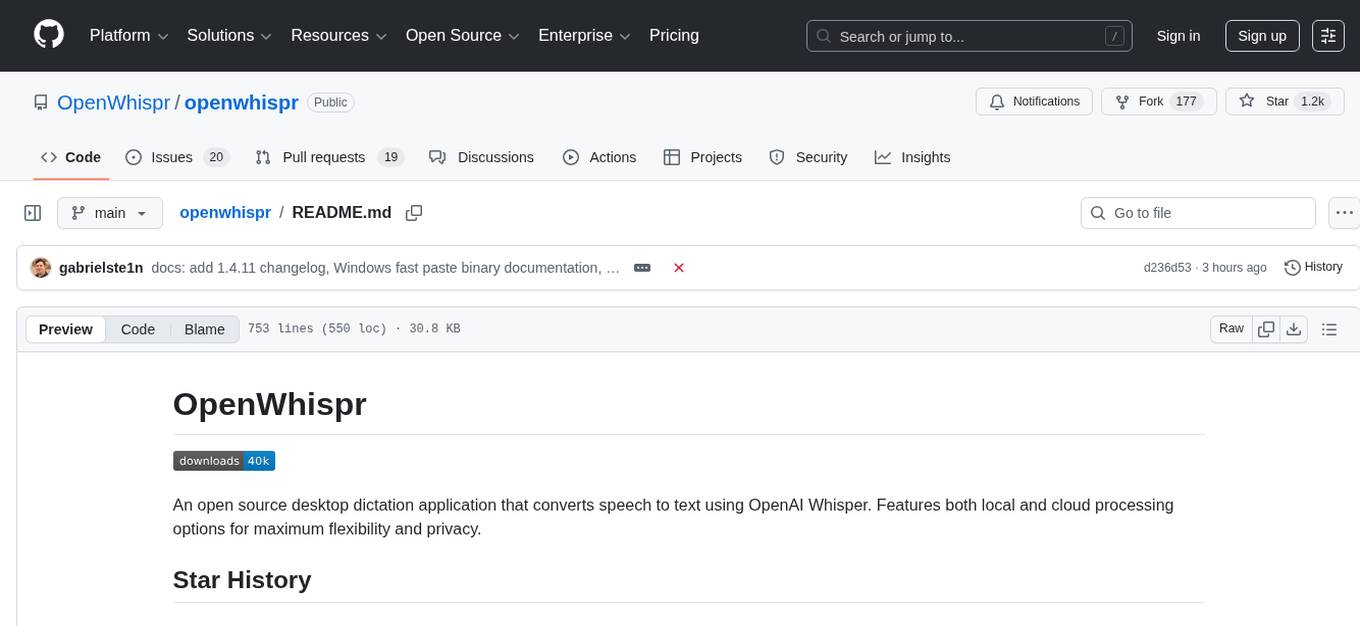

openwhispr

OpenWhispr is an open source desktop dictation application that converts speech to text using OpenAI Whisper. It features both local and cloud processing options for maximum flexibility and privacy. The application supports multiple AI providers, customizable hotkeys, agent naming, and various AI processing models. It offers a modern UI built with React 19, TypeScript, and Tailwind CSS v4, and is optimized for speed using Vite and modern tooling. Users can manage settings, view history, configure API keys, and download/manage local Whisper models. The application is cross-platform, supporting macOS, Windows, and Linux, and offers features like automatic pasting, draggable interface, global hotkeys, and compound hotkeys.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.