youtube_summarizer

A modern Next.js-based tool for AI-powered YouTube video summarization. This application allows you to generate concise summaries of YouTube videos using different AI models, with support for multiple languages and summary styles.

Stars: 86

YouTube AI Summarizer is a modern Next.js-based tool for AI-powered YouTube video summarization. It allows users to generate concise summaries of YouTube videos using various AI models, with support for multiple languages and summary styles. The application features flexible API key requirements, multilingual support, flexible summary modes, a smart history system, modern UI/UX design, and more. Users can easily input a YouTube URL, select language, summary type, and AI model, and generate summaries with real-time progress tracking. The tool offers a clean, well-structured summary view, history dashboard, and detailed history view for past summaries. It also provides configuration options for API keys and database setup, along with technical highlights, performance improvements, and a modern tech stack.

README:

Multiple AI Models: Choose your preferred AI model for summarization:

- Google Gemini 2.0 Flash (Fast and efficient)

- Groq with Llama 70B (High accuracy)

- GPT-4o-mini (Balanced performance)

Flexible API Key Requirements:

- Only one API key is required to start using the application

- Models become available based on the API keys you provide

- Mix and match different models as needed

Multilingual Support:

- Generate summaries in English and German

- Clean formatting in both languages

- Proper handling of language-specific structures

Flexible Summary Modes:

- Video Summary: Concise, structured overview

- Podcast Style: More narrative, detailed analysis

Smart History System:

- Automatic storage in SQLite database

- Quick access to previous summaries

- Unique constraint handling for video/language combinations
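The unique video/language handling can be pictured with a small in-memory stand-in for the SQLite table. This is only a sketch: `HistoryStore`, `SummaryRecord`, and their field names are hypothetical and are not taken from the project's Prisma schema. Saving a second summary for the same video and language replaces the earlier one instead of creating a duplicate row.

```typescript
// Hypothetical record shape; the real table columns may differ.
interface SummaryRecord {
  videoId: string;
  language: string;
  content: string;
  updatedAt: Date;
}

// In-memory stand-in for a table with a unique (videoId, language) pair.
class HistoryStore {
  private readonly records = new Map<string, SummaryRecord>();

  // Composite key; ":" is assumed not to occur in video IDs or language codes.
  private static keyFor(videoId: string, language: string): string {
    return `${videoId}:${language}`;
  }

  // Insert a new record or overwrite the existing one for the same pair.
  save(videoId: string, language: string, content: string): SummaryRecord {
    const record: SummaryRecord = {
      videoId,
      language,
      content,
      updatedAt: new Date(),
    };
    this.records.set(HistoryStore.keyFor(videoId, language), record);
    return record;
  }

  find(videoId: string, language: string): SummaryRecord | undefined {
    return this.records.get(HistoryStore.keyFor(videoId, language));
  }

  get size(): number {
    return this.records.size;
  }
}

// Example: the second save for ("abc123", "en") replaces the first.
const history = new HistoryStore();
history.save("abc123", "en", "First summary");
history.save("abc123", "en", "Regenerated summary");
history.save("abc123", "de", "Zusammenfassung");
```

With a real database the same effect is usually achieved with a unique index plus an upsert, so the check happens in the database rather than in application code.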

Modern UI/UX:

- Clean, responsive design with Tailwind CSS

- Automatic dark/light mode

- Progress indicators for summarization

- Beautiful markdown rendering

- Mobile-friendly interface

The main interface where users can input a YouTube URL and select their preferred language, summary type, and AI model.

Real-time progress tracking shows the current status of your summary generation, including processing stages and completion percentage.

The generated summary is displayed in a clean, well-structured format with an overview and key points from the video.

Access your previously generated summaries through the history dashboard, showing video titles and generation dates.

View complete details of past summaries, including full analysis and key points.

- Node.js 15.x or higher (for local installation)

- npm package manager (for local installation)

- Docker (optional, for containerized installation)

- API keys for the AI services

- Clone the repository:

git clone [repository-url]
cd youtube-summarizer

- Install dependencies:

npm install
# or
yarn install

- Create a .env file in the root directory:

# You only need to add the API keys for the models you want to use
# At least one API key is required
GEMINI_API_KEY="your-gemini-api-key"
GROQ_API_KEY="your-groq-api-key"
OPENAI_API_KEY="your-openai-api-key"

- Set up the database:

npx prisma generate
npx prisma db push

- Start the development server:

npm run dev
# or
yarn dev

- Clone the repository:

git clone [repository-url]

cd youtube-summarizer

- Build the Docker image:

docker build -t youtube-summarizer .

- Run the container:

docker run -d \
-p 3000:3000 \
-v ./prisma:/app/prisma \
-e GEMINI_API_KEY="your-key" \
-e GROQ_API_KEY="your-key" \
-e OPENAI_API_KEY="your-key" \
youtube-summarizer

Note for Docker installation:

- The -v ./prisma:/app/prisma flag mounts the local prisma directory into the container so the SQLite database persists between runs

- You only need to provide the API keys for the models you want to use

- At least one API key is required

- The application will be available at http://localhost:3000

The application is designed to work with partial API key configurations:

- You only need to provide API keys for the models you want to use

- The UI will automatically show which models are available based on your API keys

- You can start with just one API key and add more later

- Models without API keys will be disabled in the interface
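The partial-configuration behaviour described above could be modelled roughly as follows. This is a sketch under stated assumptions: the `MODELS` registry, the `ModelOption` shape, and the `availableModels` helper are hypothetical names chosen for illustration; only the three environment variable names come from the .env example. The environment is passed in as a plain object so the function is easy to exercise without touching the real process environment.

```typescript
// Plain map of environment variables; a real app would pass its own env object.
type EnvVars = Record<string, string | undefined>;

// Hypothetical description of a selectable model.
interface ModelOption {
  id: string;
  label: string;
  envKey: string;
}

// Illustrative registry; ids and labels are assumptions, env names are from .env.
const MODELS: ModelOption[] = [
  { id: "gemini", label: "Google Gemini 2.0 Flash", envKey: "GEMINI_API_KEY" },
  { id: "groq", label: "Groq with Llama 70B", envKey: "GROQ_API_KEY" },
  { id: "openai", label: "GPT-4o-mini", envKey: "OPENAI_API_KEY" },
];

// A model counts as available when its key is set and not blank.
function availableModels(env: EnvVars): ModelOption[] {
  return MODELS.filter((m) => (env[m.envKey] ?? "").trim().length > 0);
}

// Example: only the Gemini key is configured, so only Gemini is enabled.
const enabledModels = availableModels({ GEMINI_API_KEY: "your-gemini-api-key" });
console.log(enabledModels.map((m) => m.label));
```

Adding another key later only changes the input map; nothing else needs to be reconfigured, which matches the "start with one key, add more later" behaviour.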

The application uses Prisma with SQLite for data persistence. The configuration is defined in prisma/schema.prisma:

generator client {
  provider = "prisma-client-js"
}

datasource db {
  provider = "sqlite"
  url      = "file:./dev.db"
}

To reset the database if you encounter any issues:

# Remove the existing database
rm prisma/dev.db

# Regenerate the database
npx prisma generate
npx prisma db push

Google Gemini API Key (Good starting choice - free tier available):

- Visit Google AI Studio

- Create a new project if needed

- Generate an API key

- Free tier available with generous limits

Groq API Key:

- Go to Groq Cloud

- Sign up for an account

- Navigate to API settings

- Generate a new API key

OpenAI API Key:

- Visit OpenAI Platform

- Create an account or log in

- Go to API settings

- Generate a new API key

- Note: This service requires a paid subscription

- Previously built with Python and Streamlit

- Completely rebuilt using Next.js for better performance

- New architecture using the App Router for improved routing

- Enhanced state management and real-time updates

- Streaming responses for real-time progress updates

- Efficient chunk processing for long videos

- Smart caching of summaries

- Optimized database queries
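Chunk processing for long videos might look like the sketch below, which splits a transcript into pieces of bounded size at word boundaries so each piece can be summarized separately. The function name `chunkTranscript` and the character-based limit are assumptions made for illustration; the project may split by tokens, sentences, or timestamps instead.

```typescript
// Split text into chunks of at most maxChars characters, breaking only
// between words. A single word longer than maxChars is kept whole and
// becomes its own oversized chunk.
function chunkTranscript(text: string, maxChars: number): string[] {
  if (maxChars <= 0) {
    throw new Error("maxChars must be positive");
  }
  const words = text.split(/\s+/).filter((w) => w.length > 0);
  const chunks: string[] = [];
  let current = "";
  for (const word of words) {
    const candidate = current.length === 0 ? word : `${current} ${word}`;
    if (candidate.length > maxChars && current.length > 0) {
      // Adding this word would exceed the limit: close the current chunk.
      chunks.push(current);
      current = word;
    } else {
      current = candidate;
    }
  }
  if (current.length > 0) {
    chunks.push(current);
  }
  return chunks;
}

// Example: a short transcript split with a small limit.
const sampleChunks = chunkTranscript("welcome to the show today we talk about AI", 16);
console.log(sampleChunks);
```

Breaking at word boundaries keeps each chunk readable for the model; the per-chunk summaries can then be combined in a final pass.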

- Frontend: Next.js 15+, React, TypeScript

- Styling: Tailwind CSS, shadcn/ui components

- Database: Prisma with SQLite

- AI Integration: Multiple model support

- API: Built-in API routes with streaming support

- Visit the homepage

- Paste a YouTube URL

- Select your preferred:

- Language (English/German)

- Summary mode (Video/Podcast)

- AI model

- Click "Generate Summary"

- Watch the real-time progress

- View your formatted summary

- Access previous summaries in the history section
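Before a pasted URL can be summarized, the video ID has to be pulled out of it. The sketch below shows one way this could be done with regular expressions. `extractVideoId` is a hypothetical helper, not the project's actual code, and it assumes the 11-character ID format and only covers the `youtube.com/watch?v=` and `youtu.be/` URL shapes.

```typescript
// Return the video ID from a YouTube URL, or null if none is found.
// Only watch URLs and youtu.be short links are handled in this sketch.
function extractVideoId(url: string): string | null {
  const patterns: RegExp[] = [
    // https://www.youtube.com/watch?v=ID (v may follow other parameters)
    /youtube\.com\/watch\?(?:.*&)?v=([A-Za-z0-9_-]{11})/,
    // https://youtu.be/ID
    /youtu\.be\/([A-Za-z0-9_-]{11})/,
  ];
  for (const pattern of patterns) {
    const match = url.match(pattern);
    if (match && match[1]) {
      return match[1];
    }
  }
  return null;
}

// Example usage with a watch URL and a non-YouTube URL.
console.log(extractVideoId("https://www.youtube.com/watch?v=dQw4w9WgXcQ"));
console.log(extractVideoId("https://example.com/video"));
```

Returning `null` for unrecognized input lets the form reject a bad URL before any API call is made.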

Contributions are welcome! Please feel free to submit a Pull Request.

This project is licensed under the MIT License - see the LICENSE file for details.

If you encounter database errors like "database disk image is malformed", follow these steps:

- Stop the development server

- Delete the corrupted database:

rm prisma/dev.db

- Regenerate the database:

npx prisma generate
npx prisma db push

- Restart the development server:

npm run dev

If you encounter API errors:

- Check that all environment variables are properly set in .env

- Verify that your API keys are valid and have sufficient credits

- For history-related errors, try resetting the database as described above

"Invalid API Key" errors:

- Double-check your API keys in .env

- Make sure there are no extra spaces or quotes

- Verify the keys are active in their respective platforms
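The whitespace and quote checks above can be automated with a small helper. This is a sketch only: `checkApiKey` and the `KeyCheck` shape are hypothetical, and the function inspects the key value as the application reads it at runtime, not the raw text of the .env file. It does not contact any provider, so it cannot tell whether a key is active.

```typescript
// Result of a purely local sanity check on a key string.
interface KeyCheck {
  ok: boolean;
  problems: string[];
}

// Flag the common copy-paste mistakes: missing value, stray whitespace,
// or quote characters that ended up inside the value itself.
function checkApiKey(raw: string | undefined): KeyCheck {
  if (raw === undefined || raw.length === 0) {
    return { ok: false, problems: ["missing"] };
  }
  const problems: string[] = [];
  const trimmed = raw.trim();
  if (trimmed !== raw) {
    problems.push("leading or trailing whitespace");
  }
  if (/^["'].*["']$/.test(trimmed)) {
    problems.push("wrapped in quotes");
  }
  return { ok: problems.length === 0, problems };
}

// Example: a key that was pasted with a trailing space.
console.log(checkApiKey("your-gemini-api-key "));
```

A check like this could run once at startup and log which keys look suspicious before the first request fails.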

"Failed to fetch summaries" error:

- Usually indicates a database issue

- Follow the database reset steps above

- Check if your database has proper read/write permissions

Performance issues:

- Long videos may take more time to process

- Consider using the Gemini model for faster processing

- Check your network connection

Alternative AI tools for youtube_summarizer

Similar Open Source Tools

CursorLens

Cursor Lens is an open-source tool that acts as a proxy between Cursor and various AI providers, logging interactions and providing detailed analytics to help developers optimize their use of AI in their coding workflow. It supports multiple AI providers, captures and logs all requests, provides visual analytics on AI usage, allows users to set up and switch between different AI configurations, offers real-time monitoring of AI interactions, tracks token usage, estimates costs based on token usage and model pricing. Built with Next.js, React, PostgreSQL, Prisma ORM, Vercel AI SDK, Tailwind CSS, and shadcn/ui components.

langmanus

LangManus is a community-driven AI automation framework that combines language models with specialized tools for tasks like web search, crawling, and Python code execution. It implements a hierarchical multi-agent system with agents like Coordinator, Planner, Supervisor, Researcher, Coder, Browser, and Reporter. The framework supports LLM integration, search and retrieval tools, Python integration, workflow management, and visualization. LangManus aims to give back to the open-source community and welcomes contributions in various forms.

better-chatbot

Better Chatbot is an open-source AI chatbot designed for individuals and teams, inspired by various AI models. It integrates major LLMs, offers powerful tools like MCP protocol and data visualization, supports automation with custom agents and visual workflows, enables collaboration by sharing configurations, provides a voice assistant feature, and ensures an intuitive user experience. The platform is built with Vercel AI SDK and Next.js, combining leading AI services into one platform for enhanced chatbot capabilities.

DevoxxGenieIDEAPlugin

Devoxx Genie is a Java-based IntelliJ IDEA plugin that integrates with local and cloud-based LLM providers to aid in reviewing, testing, and explaining project code. It supports features like code highlighting, chat conversations, and adding files/code snippets to context. Users can modify REST endpoints and LLM parameters in settings, including support for cloud-based LLMs. The plugin requires IntelliJ version 2023.3.4 and JDK 17. Building and publishing the plugin is done using Gradle tasks. Users can select an LLM provider, choose code, and use commands like review, explain, or generate unit tests for code analysis.

mu

Mu is a collection of simple tools for everyday use that respect users' time by providing utilities without ads, algorithms, or tracking. It includes features like personalized dashboard, microblogging, AI-powered chat, RSS feeds with AI summaries, ad-free YouTube viewing, private messaging & email, and crypto payments. Users can self-host Mu or use the hosted version at mu.xyz. The tools are designed to be small, focused, and do one thing well, enhancing some features with AI capabilities like auto-tagging topics, summarizing articles, and providing knowledge assistance.

comfyui-web-viewer

The ComfyUI Web Viewer by vrch.ai is a real-time AI-generated interactive art framework that integrates realtime streaming into ComfyUI workflows. It supports keyboard control nodes, OSC control nodes, sound input nodes, and more, accessible from any device with a web browser. It enables real-time interaction with AI-generated content, ideal for interactive visual projects and enhancing ComfyUI workflows with efficient content management and display.

gitdiagram

GitDiagram is a tool that turns any GitHub repository into an interactive diagram for visualization in seconds. It offers instant visualization, interactivity, fast generation, customization, and API access. The tool utilizes a tech stack including Next.js, FastAPI, PostgreSQL, Claude 3.5 Sonnet, Vercel, EC2, GitHub Actions, PostHog, and Api-Analytics. Users can self-host the tool for local development and contribute to its development. GitDiagram is inspired by Gitingest and has future plans to use larger context models, allow user API key input, implement RAG with Mermaid.js docs, and include font-awesome icons in diagrams.

swark

Swark is a VS Code extension that automatically generates architecture diagrams from code using large language models (LLMs). It is directly integrated with GitHub Copilot, requires no authentication or API key, and supports all languages. Swark helps users learn new codebases, review AI-generated code, improve documentation, understand legacy code, spot design flaws, and gain test coverage insights. It saves output in a 'swark-output' folder with diagram and log files. Source code is only shared with GitHub Copilot for privacy. The extension settings allow customization for file reading, file extensions, exclusion patterns, and language model selection. Swark is open source under the GNU Affero General Public License v3.0.

SecureAI-Tools

SecureAI Tools is a private and secure AI tool that allows users to chat with AI models, chat with documents (PDFs), and run AI models locally. It comes with built-in authentication and user management, making it suitable for family members or coworkers. The tool is self-hosting optimized and provides necessary scripts and docker-compose files for easy setup in under 5 minutes. Users can customize the tool by editing the .env file and enabling GPU support for faster inference. SecureAI Tools also supports remote OpenAI-compatible APIs, with lower hardware requirements for using remote APIs only. The tool's features wishlist includes chat sharing, mobile-friendly UI, and support for more file types and markdown rendering.

aisdk-prompt-optimizer

AISDK Prompt Optimizer is an open-source tool designed to transform AI interactions by optimizing prompts. It utilizes the GEPA reflective optimizer to evolve textual components of AI systems, providing features such as reflective prompt mutation, rich textual feedback, and Pareto-based selection. Users can teach their AI desired behaviors, collect ideal samples, run optimization to generate optimized prompts, and deploy the results in their applications. The tool leverages advanced optimization algorithms to guide AI through interactive conversations and refine prompt candidates for improved performance.

next-money

Next Money Stripe Starter is a SaaS Starter project that empowers your next project with a stack of Next.js, Prisma, Supabase, Clerk Auth, Resend, React Email, Shadcn/ui, and Stripe. It seamlessly integrates these technologies to accelerate your development and SaaS journey. The project includes frameworks, platforms, UI components, hooks and utilities, code quality tools, and miscellaneous features to enhance the development experience. Created by @koyaguo in 2023 and released under the MIT license.

deep-research

Deep Research is a lightning-fast tool that uses powerful AI models to generate comprehensive research reports in just a few minutes. It leverages advanced 'Thinking' and 'Task' models, combined with an internet connection, to provide fast and insightful analysis on various topics. The tool ensures privacy by processing and storing all data locally. It supports multi-platform deployment, offers support for various large language models, web search functionality, knowledge graph generation, research history preservation, local and server API support, PWA technology, multi-key payload support, multi-language support, and is built with modern technologies like Next.js and Shadcn UI. Deep Research is open-source under the MIT License.

note-companion

Note Companion is an AI-powered Obsidian plugin that automatically organizes and formats notes. It provides organizing suggestions, custom format AI prompts, automated workflows, handwritten note digitization, audio transcription, atomic note generation, YouTube summaries, and context-aware AI chat. Key use cases include smart vault management, handwritten notes digitization, and intelligent meeting notes. The tool offers advanced features like custom AI templates and multi-modal support for processing various content types. Users can seamlessly integrate with mobile workflows and utilize iOS shortcuts for sending Apple Notes to Obsidian. Note Companion enhances productivity by streamlining note organization and management tasks with AI assistance.

Memori

Memori is a memory fabric designed for enterprise AI that seamlessly integrates into existing software and infrastructure. It is agnostic to LLM, datastore, and framework, providing support for major foundational models and databases. With features like vectorized memories, in-memory semantic search, and a knowledge graph, Memori simplifies the process of attributing LLM interactions and managing sessions. It offers Advanced Augmentation for enhancing memories at different levels and supports various platforms, frameworks, database integrations, and datastores. Memori is designed to reduce development overhead and provide efficient memory management for AI applications.

VibeSurf

VibeSurf is an open-source AI agentic browser that combines workflow automation with intelligent AI agents, offering faster, cheaper, and smarter browser automation. It allows users to create revolutionary browser workflows, run multiple AI agents in parallel, perform intelligent AI automation tasks, maintain privacy with local LLM support, and seamlessly integrate as a Chrome extension. Users can save on token costs, achieve efficiency gains, and enjoy deterministic workflows for consistent and accurate results. VibeSurf also provides a Docker image for easy deployment and offers pre-built workflow templates for common tasks.

For similar tasks

youtube_summarizer

YouTube AI Summarizer is a modern Next.js-based tool for AI-powered YouTube video summarization. It allows users to generate concise summaries of YouTube videos using various AI models, with support for multiple languages and summary styles. The application features flexible API key requirements, multilingual support, flexible summary modes, a smart history system, modern UI/UX design, and more. Users can easily input a YouTube URL, select language, summary type, and AI model, and generate summaries with real-time progress tracking. The tool offers a clean, well-structured summary view, history dashboard, and detailed history view for past summaries. It also provides configuration options for API keys and database setup, along with technical highlights, performance improvements, and a modern tech stack.

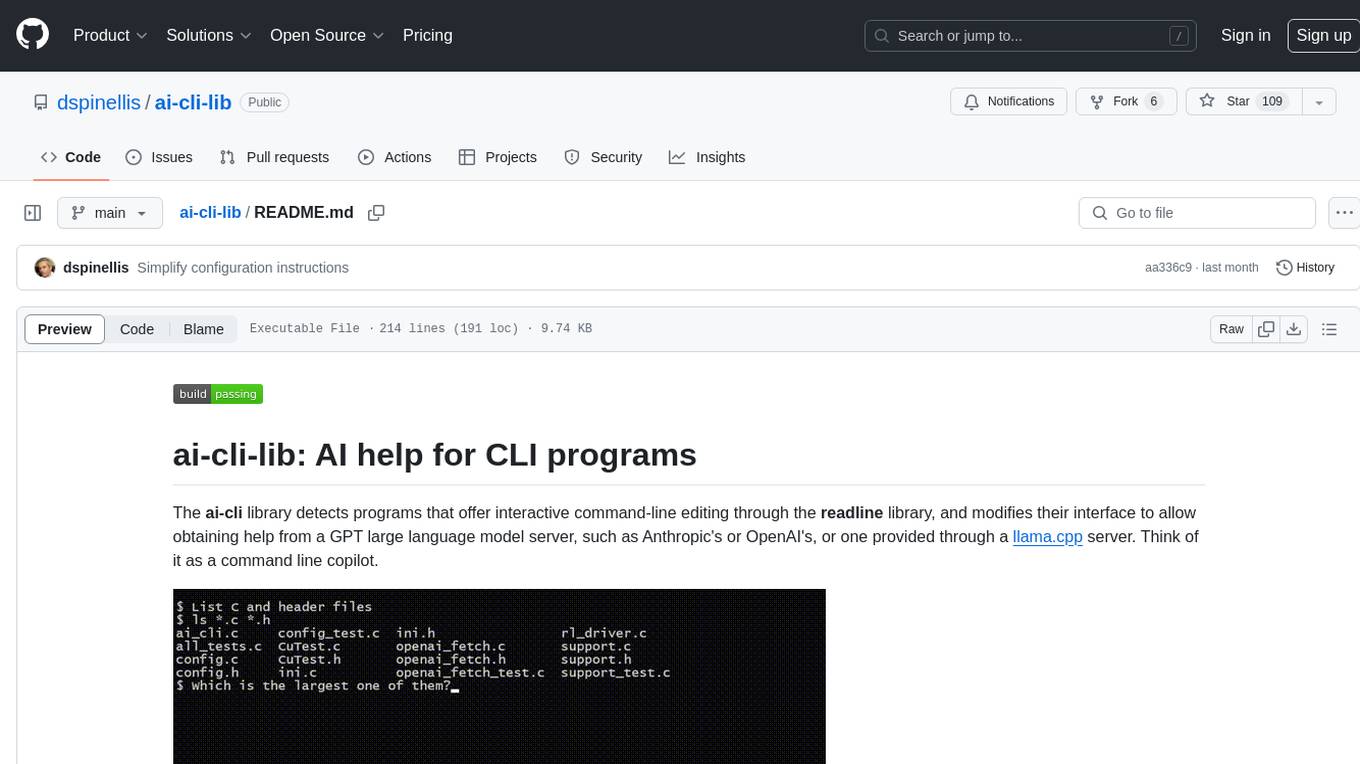

ai-cli-lib

The ai-cli-lib is a library designed to enhance interactive command-line editing programs by integrating with GPT large language model servers. It allows users to obtain AI help from servers like Anthropic's or OpenAI's, or a llama.cpp server. The library acts as a command line copilot, providing natural language prompts and responses to enhance user experience and productivity. It supports various platforms such as Debian GNU/Linux, macOS, and Cygwin, and requires specific packages for installation and operation. Users can configure the library to activate during shell startup and interact with command-line programs like bash, mysql, psql, gdb, sqlite3, and bc. Additionally, the library provides options for configuring API keys, setting up llama.cpp servers, and ensuring data privacy by managing context settings.

aicommit2

AICommit2 is a Reactive CLI tool that streamlines interactions with various AI providers such as OpenAI, Anthropic Claude, Gemini, Mistral AI, Cohere, and unofficial providers like Huggingface and Clova X. Users can request multiple AI simultaneously to generate git commit messages without waiting for all AI responses. The tool runs 'git diff' to grab code changes, sends them to configured AI, and returns the AI-generated commit message. Users can set API keys or Cookies for different providers and configure options like locale, generate number of messages, commit type, proxy, timeout, max-length, and more. AICommit2 can be used both locally with Ollama and remotely with supported providers, offering flexibility and efficiency in generating commit messages.

TrustEval-toolkit

TrustEval-toolkit is a dynamic and comprehensive framework for evaluating the trustworthiness of Generative Foundation Models (GenFMs) across dimensions such as safety, fairness, robustness, privacy, and more. It offers features like dynamic dataset generation, multi-model compatibility, customizable metrics, metadata-driven pipelines, comprehensive evaluation dimensions, optimized inference, and detailed reports.

prompt-optimizer

Prompt Optimizer is a powerful AI prompt optimization tool that helps you write better AI prompts, improving AI output quality. It supports both web application and Chrome extension usage. The tool features intelligent optimization for prompt words, real-time testing to compare before and after optimization, integration with multiple mainstream AI models, client-side processing for security, encrypted local storage for data privacy, responsive design for user experience, and more.

svelte-bench

SvelteBench is an LLM benchmark tool for evaluating Svelte components generated by large language models. It supports multiple LLM providers such as OpenAI, Anthropic, Google, and OpenRouter. Users can run predefined test suites to verify the functionality of the generated components. The tool allows configuration of API keys for different providers and offers debug mode for faster development. Users can provide a context file to improve component generation. Benchmark results are saved in JSON format for analysis and visualization.

OpenClawChineseTranslation

OpenClaw Chinese Translation is a localization project that provides a fully Chinese interface for the OpenClaw open-source personal AI assistant platform. It allows users to interact with their AI assistant through chat applications like WhatsApp, Telegram, and Discord to manage daily tasks such as emails, calendars, and files. The project includes both CLI command-line and dashboard web interface fully translated into Chinese.

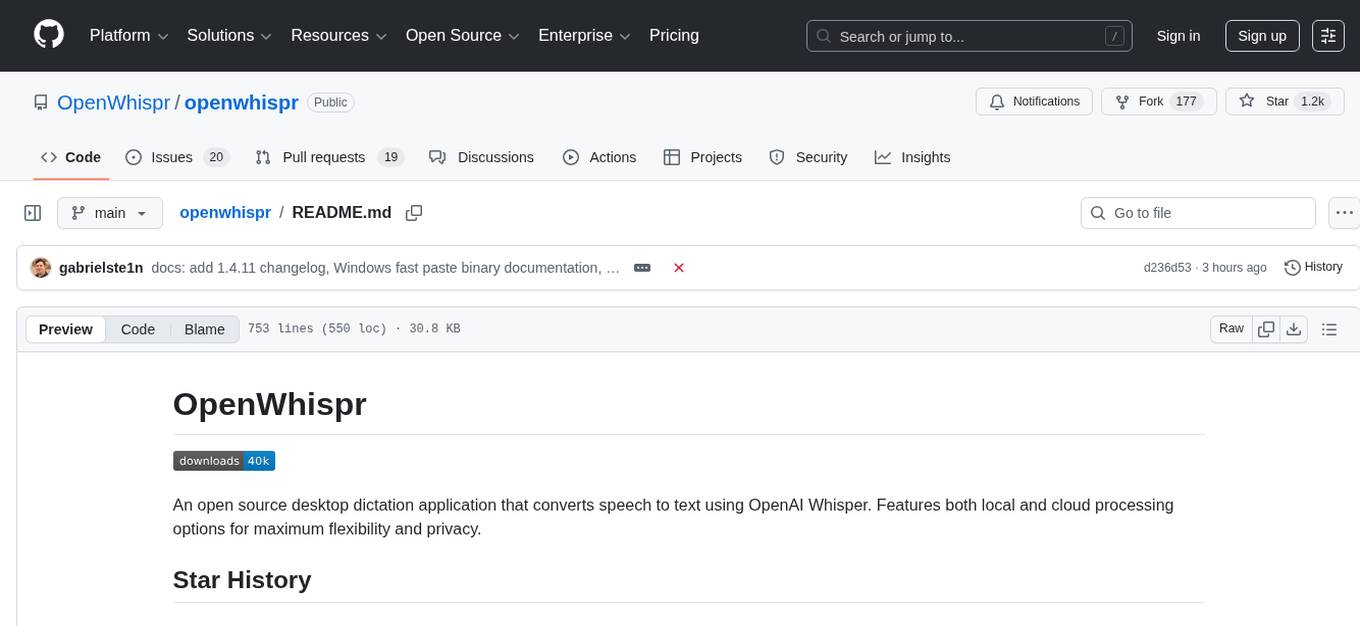

openwhispr

OpenWhispr is an open source desktop dictation application that converts speech to text using OpenAI Whisper. It features both local and cloud processing options for maximum flexibility and privacy. The application supports multiple AI providers, customizable hotkeys, agent naming, and various AI processing models. It offers a modern UI built with React 19, TypeScript, and Tailwind CSS v4, and is optimized for speed using Vite and modern tooling. Users can manage settings, view history, configure API keys, and download/manage local Whisper models. The application is cross-platform, supporting macOS, Windows, and Linux, and offers features like automatic pasting, draggable interface, global hotkeys, and compound hotkeys.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM:

- Set LLM usage limits for users on different pricing tiers

- Track LLM usage on a per user and per organization basis

- Block or redact requests containing PIIs

- Improve LLM reliability with failovers, retries and caching

- Distribute API keys with rate limits and cost limits for internal development/production use cases

- Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.