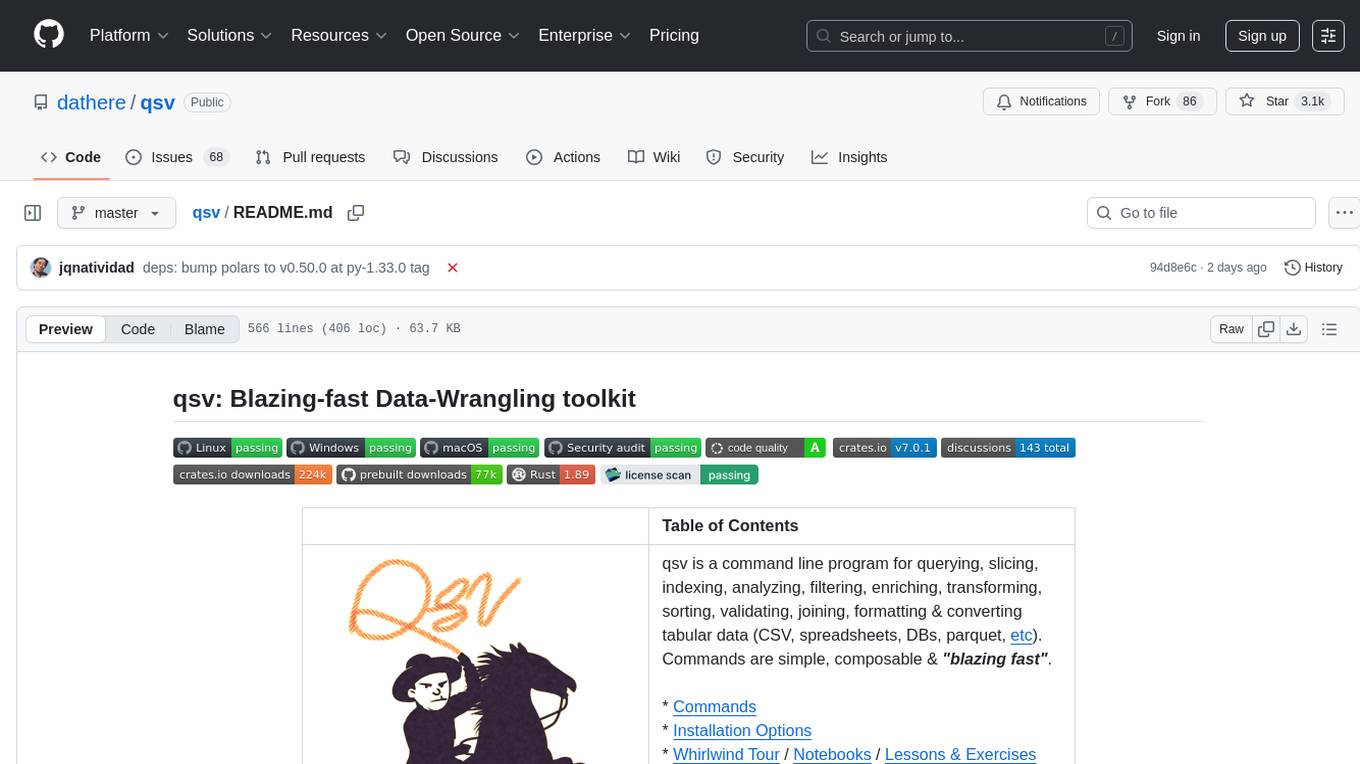

qsv

Blazing-fast Data-Wrangling toolkit

Stars: 3477

qsv is a command line program for querying, slicing, indexing, analyzing, filtering, enriching, transforming, sorting, validating, joining, formatting & converting tabular data (CSV, spreadsheets, DBs, parquet, etc). Commands are simple, composable & "blazing fast". It focuses on speed, on processing very large files, and on being a complete data-wrangling toolkit. It is designed to be portable, easy to use, secure, and easy to contribute to. qsv follows the RFC 4180 CSV standard, requires UTF-8 encoding, and supports various file formats. It has extensive shell completion support, automatic compression/decompression using Snappy, and support for environment variables and dotenv files. qsv has a comprehensive test suite and is dual-licensed under MIT or the UNLICENSE.

README:

| Table of Contents | |
|---|---|
| Hi-ho "Quicksilver" away! original logo details * Base AI-reimagined logo * Event logo archive | qsv is a command line program for querying, slicing, sorting, analyzing, filtering, enriching, transforming, validating, joining, formatting, converting, chatting, FAIRifying & documenting tabular data (CSV, Excel, etc). Commands are simple, composable & "blazing fast". * Commands * Installation Options * Whirlwind Tour / Notebooks / Lessons & Exercises * FAQ * Performance Tuning * 👉 Benchmarks 🚀 * Environment Variables * Feature Flags * Goals/Non-goals * Testing * NYC SOD 2022/csv,conf,v8/PyConUS 2025/csv,conf,v9 * "Have we achieved ACI?" blogpost series * Sponsor |

Try it out at qsv.dathere.com!

| Command | Description |
|---|---|
| apply✨ 📇🚀🧠🤖🔣👆 | Apply series of string, date, math & currency transformations to given CSV column/s. It also has some basic NLP functions (similarity, sentiment analysis, profanity, eudex, language & name gender) detection. |
| applydp✨ 📇🚀🔣👆 | applydp is a slimmed-down version of apply with only Datapusher+ relevant subcommands/operations (qsvdp binary variant only). |
| behead | Drop headers from a CSV. |
| cat 🗄️ | Concatenate CSV files by row or by column. |
| clipboard✨ 🖥️ | Provide input from the clipboard or save output to the clipboard. |
| color✨ 🐻‍❄️🖥️ | Outputs tabular data as a pretty, colorized table that always fits into the terminal. Apart from CSV and its dialects, Arrow, Avro/IPC, Parquet, JSON array & JSONL formats are supported with the "polars" feature. |
| count 📇🏎️🐻‍❄️ | Count the rows and optionally compile record width statistics of a CSV file. (11.87 seconds for a 15gb, 27m row NYC 311 dataset without an index. Instantaneous with an index.) If the polars feature is enabled, uses Polars' multithreaded, mem-mapped CSV reader for fast counts even without an index. |
| datefmt 📇🚀👆 | Formats recognized date fields (19 formats recognized) to a specified date format using strftime date format specifiers. |
| dedup 🤯🚀👆 | Remove duplicate rows (See also extdedup, extsort, sort & sortcheck commands). |
| describegpt 🌐🤖🪄🗃️📚⛩️ | Infer a "neuro-symbolic" Data Dictionary, Description & Tags or ask questions about a CSV with a configurable, Mini Jinja prompt file, using any OpenAI API-compatible LLM, including local LLMs via Ollama, Jan & LM Studio. (e.g. Markdown, JSON, TOON, Everything, Spanish, Mandarin, Controlled Tags; --prompt "What are the top 10 complaint types by community board & borough by year?" - deterministic, hallucination-free SQL RAG result; iterative, session-based SQL RAG refinement - refined SQL RAG result) |
| diff 🚀🪄 | Find the difference between two CSVs with ludicrous speed! e.g. compare two CSVs with 1M rows x 9 columns in under 600ms! |
| edit | Replace the value of a cell specified by its row and column. |
| enum 👆 | Add a new column enumerating rows by adding a column of incremental or uuid identifiers. Can also be used to copy a column or fill a new column with a constant value. |
| excel 🚀 | Exports a specified Excel/ODS sheet to a CSV file. |
| exclude 📇👆 | Removes a set of CSV data from another set based on the specified columns. |
| explode 🔣👆 | Explode rows into multiple ones by splitting a column value based on the given separator. |
| extdedup 👆 | Remove duplicate rows from an arbitrarily large CSV/text file using a memory-mapped, on-disk hash table. Unlike the dedup command, this command does not load the entire file into memory nor does it sort the deduped file. |
| extsort 🚀📇👆 | Sort an arbitrarily large CSV/text file using a multithreaded external merge sort algorithm. |
| fetch✨ 📇🧠🌐 | Send/Fetch data to/from web services for every row using HTTP Get. Comes with HTTP/2 adaptive flow control, jaq JSON query language support, dynamic throttling (RateLimit) & caching with available persistent caching using Redis or a disk-cache. |
| fetchpost✨ 📇🧠🌐⛩️ | Similar to fetch, but uses HTTP Post (HTTP GET vs POST methods). Supports HTML form (application/x-www-form-urlencoded), JSON (application/json) and custom content types - with the ability to render payloads using CSV data using the Mini Jinja template engine. |
| fill 👆 | Fill empty values. |
| fixlengths | Force a CSV to have same-length records by either padding or truncating them. |
| flatten | A flattened view of CSV records. Useful for viewing one record at a time. e.g. qsv slice -i 5 data.csv \| qsv flatten. |
| fmt | Reformat a CSV with different delimiters, record terminators or quoting rules. (Supports ASCII delimited data.) |
| foreach✨ 📇 | Execute a shell command once per record in a given CSV file. |
| frequency 📇😣🏎️👆🪄 | Build frequency distribution tables of each column. Uses multithreading to go faster if an index is present (Examples: CSV JSON TOON). |
| geocode✨ 📇🧠🌐🚀🔣👆🌎 | Geocodes a location against an updatable local copy of the Geonames cities & the Maxmind GeoLite2 databases. With caching and multi-threading, it geocodes up to 360,000 records/sec! |
| geoconvert✨ 🌎 | Convert between various spatial formats and CSV/SVG including GeoJSON, SHP, and more. |
| headers 🗄️ | Show the headers of a CSV. Or show the intersection of all headers between many CSV files. |
| index | Create an index (📇) for a CSV. This is very quick (even the 15gb, 28m row NYC 311 dataset takes all of 14 seconds to index) & provides constant time indexing/random access into the CSV. With an index, count, sample & slice work instantaneously; random access mode is enabled in luau; and multithreading (🏎️) is enabled for the frequency, split, stats, schema & tojsonl commands. |
| input | Read CSV data with special commenting, quoting, trimming, line-skipping & non-UTF8 encoding handling rules. Typically used to "normalize" a CSV for further processing with other qsv commands. |
| join 😣👆 | Inner, outer, right, cross, anti & semi joins. Automatically creates a simple, in-memory hash index to make it fast. |
| joinp✨ 🚀🐻‍❄️🪄 | Inner, outer, right, cross, anti, semi, non-equi & asof joins using the Pola.rs engine. Unlike the join command, joinp can process files larger than RAM, is multithreaded, has join key validation, a maintain row order option, pre and post-join filtering, join keys unicode normalization, supports "special" non-equi joins and asof joins (which is particularly useful for time series data) & its output columns can be coalesced. |
| json 👆 | Convert JSON array to CSV. |
| jsonl 🚀🔣 | Convert newline-delimited JSON (JSONL/NDJSON) to CSV. See tojsonl command to convert CSV to JSONL. |
| lens✨ 🗃️🐻‍❄️🖥️ | Interactively view, search & filter tabular data files using the csvlens engine. Apart from CSV and its dialects, Arrow, Avro/IPC, Parquet, JSON array & JSONL formats are supported with the "polars" feature. |
| luau ✨📇🌐🔣📚 | Create multiple new computed columns, filter rows, compute aggregations and build complex data pipelines by executing a Luau 0.708 expression/script for every row of a CSV file (sequential mode), or using random access with an index (random access mode). Can process a single Luau expression or full-fledged data-wrangling scripts using lookup tables with discrete BEGIN, MAIN and END sections. It is not just another qsv command, it is qsv's Domain-specific Language (DSL) with numerous qsv-specific helper functions to build production data pipelines. |
| moarstats 📇🏎️ | Add dozens of additional statistics, including extended outlier, robust & bivariate statistics to an existing stats CSV file. (example). |
| partition 👆 | Partition a CSV based on a column value. |
| pivotp✨ 🚀🐻‍❄️🪄 | Pivot CSV data. Features "smart" aggregation auto-selection based on data type & stats. |
| pragmastat 🤯 | Compute pragmatic statistics using the Pragmastat library. |
| pro | Interact with the qsv pro API. |
| prompt✨ 🐻‍❄️🖥️ | Open a file dialog to either pick a file as input or save output to a file. |
| pseudo 🔣👆 | Pseudonymise the value of the given column by replacing them with an incremental identifier. |
| py✨ 📇🔣 | Create a new computed column or filter rows by evaluating a Python expression on every row of a CSV file. Python's f-strings are particularly useful for extended formatting, with the ability to evaluate Python expressions as well. Requires Python 3.8 or greater. |
| rename | Rename the columns of a CSV efficiently. |
| replace 📇👆🏎️ | Replace CSV data using a regex. Applies the regex to each field individually. |
| reverse 📇🤯 | Reverse order of rows in a CSV. Unlike the sort --reverse command, it preserves the order of rows with the same key. If an index is present, it works with constant memory. Otherwise, it will load all the data into memory. |
| safenames | Modify headers of a CSV to only have "safe" names - guaranteed "database-ready"/"CKAN-ready" names. |
| sample 📇🌐🏎️ | Randomly draw rows (with optional seed) from a CSV using seven different sampling methods - reservoir (default), indexed, bernoulli, systematic, stratified, weighted & cluster sampling. Supports sampling from CSVs on remote URLs. |
| schema 📇😣🏎️👆🪄🐻‍❄️ | Infer either a JSON Schema Validation Draft 2020-12 (Example) or Polars Schema (Example) from CSV data. In JSON Schema Validation mode, it produces a .schema.json file replete with inferred data type & domain/range validation rules derived from stats. Uses multithreading to go faster if an index is present. See validate command to use the generated JSON Schema to validate if similar CSVs comply with the schema. With the --polars option, it produces a .pschema.json file that all polars commands (sqlp, joinp & pivotp) use to determine the data type of each column & to optimize performance. Both schemas are editable and can be fine-tuned. For JSON Schema, to refine the inferred validation rules. For Polars Schema, to change the inferred Polars data types. |
| search 📇🏎️👆 | Run a regex over a CSV. Applies the regex to selected fields & shows only matching rows. |
| searchset 📇🏎️👆 | Run multiple regexes over a CSV in a single pass. Applies the regexes to each field individually & shows only matching rows. |
| select 👆 | Select, re-order, reverse, duplicate or drop columns. |
| slice 📇🏎️🗃️ | Slice rows from any part of a CSV. When an index is present, this only has to parse the rows in the slice (instead of all rows leading up to the start of the slice). |
| snappy 🚀🌐 | Does streaming compression/decompression of the input using Google's Snappy framing format (more info). |
| sniff 📇🌐🤖 | Quickly sniff & infer CSV metadata (delimiter, header row, preamble rows, quote character, flexible, is_utf8, average record length, number of records, content length & estimated number of records if sniffing a CSV on a URL, number of fields, field names & data types). It is also a general mime type detector. |
| sort 🚀🤯👆 | Sorts CSV data in lexicographical, natural, numerical, reverse, unique or random (with optional seed) order (Also see extsort & sortcheck commands). |
| sortcheck 📇👆 | Check if a CSV is sorted. With the --json options, also retrieve record count, sort breaks & duplicate count. |
| split 📇🏎️ | Split one CSV file into many CSV files. It can split by number of rows, number of chunks or file size. Uses multithreading to go faster if an index is present when splitting by rows or chunks. |
| sqlp✨ 📇🚀🐻‍❄️🗄️🪄 | Run Polars SQL (a PostgreSQL dialect) queries against several CSVs, Parquet, JSONL and Arrow files - converting queries to blazing-fast Polars LazyFrame expressions, processing larger than memory CSV files. Query results can be saved in CSV, JSON, JSONL, Parquet, Apache Arrow IPC and Apache Avro formats. |
| stats 📇🤯🏎️👆🪄 | Compute summary statistics (sum, min/max/range, sort order/sortiness, min/max/sum/avg length, mean, standard error of the mean (SEM), geometric/harmonic means, stddev, variance, Coefficient of Variation (CV), nullcount, max precision, sparsity, quartiles, Interquartile Range (IQR), lower/upper fences, skewness, median, mode/s, antimode/s, cardinality & uniqueness ratio) & make GUARANTEED data type inferences (Null, String, Float, Integer, Date, DateTime, Boolean) for each column in a CSV (Example - more info). Uses multithreading to go faster if an index is present (with an index, can compile "streaming" stats on NYC's 311 data (15gb, 28m rows) in less than 7.3 seconds!). |
| table 🤯 | Align output of a CSV using elastic tabstops for viewing; or to create an "aligned TSV" file or Fixed Width Format file. To interactively view a CSV, use the lens command. |
| template 📇🚀🔣📚⛩️ | Renders a template using CSV data with the Mini Jinja template engine (Example). |
| to✨ 🚀🗄️ | Convert CSV files to PostgreSQL, SQLite, Excel (XLSX), LibreOffice Calc (ODS) and Data Package. |
| tojsonl 📇😣🚀🔣🪄🗃️ | Smartly converts CSV to a newline-delimited JSON (JSONL/NDJSON). By scanning the CSV first, it "smartly" infers the appropriate JSON data type for each column. See jsonl command to convert JSONL to CSV. |
| transpose 🤯👆 | Transpose rows/columns of a CSV. |
| validate 📇🚀🌐📚🗄️ | Validate CSV data blazingly-fast using JSON Schema Validation (Draft 2020-12) (e.g. up to 780,031 rows/second [1] using NYC's 311 schema generated by the schema command) & put invalid records into a separate file along with a detailed validation error report. Supports several custom JSON Schema formats & keywords: * currency custom format with ISO-4217 validation * dynamicEnum custom keyword that supports enum validation against a CSV on the filesystem or a URL (http/https/ckan & dathere URL schemes supported) * uniqueCombinedWith custom keyword to validate uniqueness across multiple columns for composite key validation. If no JSON schema file is provided, validates if a CSV conforms to the RFC 4180 standard and is UTF-8 encoded. |

✨: enabled by a feature flag.

📇: uses an index when available.

🤯: loads entire CSV into memory, though dedup, stats & transpose have "streaming" modes as well.

😣: uses additional memory proportional to the cardinality of the columns in the CSV.

🧠: expensive operations are memoized with available inter-session Redis/Disk caching for fetch commands.

🗄️: Extended input support.

🗃️: Limited Extended input support.

🐻‍❄️: command powered/accelerated by the Polars engine.

🤖: command uses Natural Language Processing or Generative AI.

🏎️: multithreaded and/or faster when an index (📇) is available.

🚀: multithreaded even without an index.

CKAN icon: has CKAN-aware integration options.

🌐: has web-aware options.

🔣: requires UTF-8 encoded input.

👆: has powerful column selector support. See select for syntax.

🪄: "automagical" commands that use stats and/or frequency tables to work "smarter" & "faster".

📚: has lookup table support, enabling runtime "lookups" against local or remote reference CSVs.

🌎: has geospatial capabilities.

⛩️: uses Mini Jinja template engine.

Luau icon: uses Luau 0.708 as an embedded scripting DSL.

🖥️: part of the User Interface (UI) feature group

NOTE: To install the qsv MCP Server, go here.

If you prefer to explore your data using a graphical interface instead of the command-line, feel free to try out qsv pro. Leveraging qsv, qsv pro can help you quickly analyze spreadsheet data by just dropping a file, along with many other interactive features. Learn more at qsvpro.dathere.com or download qsv pro directly by clicking one of the badges below.

Full-featured prebuilt binary variants of the latest qsv version for Linux, macOS & Windows are available for download, including binaries compiled with Rust Nightly (more info). You may click a badge below based on your platform to download a ZIP with pre-built binaries.

Prebuilt binaries for Apple Silicon, Windows for ARM, IBM Power Servers (PowerPC64 LE Linux) and IBM Z mainframes (s390x) have CPU optimizations enabled (target-cpu=native) for even more performance gains.

We do not enable CPU optimizations on prebuilt binaries on x86_64 platforms as there are too many CPU variants, which often leads to Illegal Instruction (SIGILL) faults. If you still get SIGILL faults, "portable" binaries (all CPU optimizations disabled) are also included in the release zip archives (qsv with a "p for portable" suffix - e.g. qsvp, qsvplite & qsvpdp).

For Windows, an MSI "Easy installer" for the x86_64 MSVC qsvp binary is also available. After downloading and installing the Easy installer, launch the Easy installer and click "Install qsv" to download the latest qsvp pre-built binary to a folder that is added to your PATH. Afterwards qsv should be installed and you may launch a new terminal to use qsv.

For macOS, "ad-hoc" signatures are used to sign our binaries, so you will need to set appropriate Gatekeeper security settings or run the following command to remove the quarantine attribute from qsv before you run it for the first time:

# replace qsv with qsvlite or qsvdp if you installed those binary variants

xattr -d com.apple.quarantine qsv

An additional benefit of using the prebuilt binaries is that they have the self_update feature enabled, allowing you to quickly update qsv to the latest version with a simple qsv --update. For further security, the self_update feature only fetches releases from this GitHub repo and automatically verifies the signature of the downloaded zip archive before installing the update.

ℹ️ NOTE: The luau feature is not available in musl prebuilt binaries [2].

All prebuilt binaries zip archives are signed with zipsign with the following public key qsv-zipsign-public.key. To verify the integrity of the downloaded zip archives:

# if you don't have zipsign installed yet

cargo install zipsign

# verify the integrity of the downloaded prebuilt binary zip archive

# after downloading the zip archive and the qsv-zipsign-public.key file.

# replace <PREBUILT-BINARY-ARCHIVE.zip> with the name of the downloaded zip archive

# e.g. zipsign verify zip qsv-0.118.0-aarch64-apple-darwin.zip qsv-zipsign-public.key

zipsign verify zip <PREBUILT-BINARY-ARCHIVE.zip> qsv-zipsign-public.key

qsv is also distributed by several package managers and distros.

Here are the relevant commands for installing qsv using the various package managers and distros:

# Arch Linux AUR (https://aur.archlinux.org/packages/qsv)

yay -S qsv

# Homebrew on macOS/Linux (https://formulae.brew.sh/formula/qsv#default)

brew install qsv

# MacPorts on macOS (https://ports.macports.org/port/qsv/)

sudo port install qsv

# Mise on Linux/macOS/Windows (https://mise.jdx.dev)

mise use -g qsv@latest

# Nixpkgs on Linux/macOS (https://search.nixos.org/packages?channel=unstable&show=qsv&from=0&size=50&sort=relevance&type=packages&query=qsv)

nix-shell -p qsv

# Scoop on Windows (https://scoop.sh/#/apps?q=qsv)

scoop install qsv

# Void Linux (https://voidlinux.org/packages/?arch=x86_64&q=qsv)

sudo xbps-install qsv

# Conda-forge (https://anaconda.org/conda-forge/qsv)

conda install conda-forge::qsv

Note that the qsv builds provided by these package managers/distros enable different features. Homebrew, for instance, only enables the apply and luau features; however, it does automatically install shell completion for bash, fish and zsh shells.

To find out what features are enabled in a package/distro's qsv, run qsv --version (more info).

In the true spirit of open source, these packages are maintained by volunteers who wanted to make qsv easier to install in various environments. They are much appreciated, and we loosely collaborate with the package maintainers through GitHub, but know that these packages are maintained by third-parties.

datHere also maintains a Debian package targeting the latest Ubuntu LTS on x86_64 architecture to make it easier to install qsv with DataPusher+.

To install qsv on Ubuntu/Debian:

wget -O - https://dathere.github.io/qsv-deb-releases/qsv-deb.gpg | sudo gpg --dearmor -o /usr/share/keyrings/qsv-deb.gpg

echo "deb [signed-by=/usr/share/keyrings/qsv-deb.gpg] https://dathere.github.io/qsv-deb-releases ./" | sudo tee /etc/apt/sources.list.d/qsv.list

sudo apt update

sudo apt install qsv

If you have Rust installed, you can compile from source [3]:

git clone https://github.com/dathere/qsv.git

cd qsv

cargo build --release --locked --bin qsv --features all_features

The compiled binary will end up in ./target/release/.

To compile different variants and enable optional features:

# to compile qsv with all features enabled

cargo build --release --locked --bin qsv --features feature_capable,apply,fetch,foreach,geocode,luau,polars,python,self_update,to,ui

# shorthand

cargo build --release --locked --bin qsv -F all_features

# enable all CPU optimizations for the current CPU (warning: creates non-portable binary)

CARGO_BUILD_RUSTFLAGS='-C target-cpu=native' cargo build --release --locked --bin qsv -F all_features

# or build qsv with only the fetch and foreach features enabled

cargo build --release --locked --bin qsv -F feature_capable,fetch,foreach

# for qsvlite

cargo build --release --locked --bin qsvlite -F lite

# for qsvdp

cargo build --release --locked --bin qsvdp -F datapusher_plus

ℹ️ NOTE: To build with Rust nightly, see Nightly Release Builds. The feature_capable, lite and datapusher_plus features are MUTUALLY EXCLUSIVE. See Special Build Features for more info.

There are four binary variants of qsv:

- qsv - feature-capable (✨), with the prebuilt binaries enabling all applicable features except Python [2]
- qsvpy - same as qsv but with the Python feature enabled. Three subvariants are available - qsvpy311, qsvpy312 & qsvpy313 - which are compiled with the latest patch version of Python 3.11, 3.12 & 3.13 respectively. We need to have a binary for each Python version as Python is dynamically linked (more info).
- qsvlite - all features disabled (~13% of the size of qsv). If you are migrating from xsv and want the same experience and feature set, this is the variant for you.
- qsvdp - optimized for use with DataPusher+ with only DataPusher+ relevant commands; an embedded luau interpreter; applydp, a slimmed-down version of the apply feature; the --progressbar option disabled; and the self-update only checking for new releases, requiring an explicit --update (~12% of the size of qsv).

ℹ️ NOTE: There are "portable" subvariants of qsv available with the "p" suffix - qsvp, qsvplite and qsvpdp. These subvariants are compiled without any CPU features enabled. Use these subvariants if you have an old CPU architecture or are getting "Illegal instruction (SIGILL)" errors when running the regular qsv binaries.

qsv has extensive, extendable shell completion support. It currently supports the following shells: bash, zsh, powershell, fish, nushell, fig & elvish. You may download a shell completions script for your shell by clicking one of the badges below:

To customize shell completions, see the Shell Completion documentation. If you're using Bash, you can also follow the step-by-step tutorial at 100.dathere.com to learn how to enable the Bash shell completions.

The --select option and several commands (apply, applydp, datefmt, exclude, fetchpost, replace, schema, search, searchset, select, sqlp, stats & validate) allow the user to specify regular expressions. We use the regex crate to parse, compile and execute these expressions [4].

Its syntax can be found here and "is similar to other regex engines, but it lacks several features that are not known how to implement efficiently. This includes, but is not limited to, look-around and backreferences. In exchange, all regex searches in this crate have worst case O(m * n) time complexity, where m is proportional to the size of the regex and n is proportional to the size of the string being searched."

If you want to test your regular expressions, regex101 supports the syntax used by the regex crate. Just select the "Rust" flavor.

JSON SCHEMA VALIDATION REGEX NOTE: The schema command, when inferring a JSON Schema Validation file, will derive a regex expression for the selected columns when the --pattern-columns option is used. Though the derived regex is guaranteed to work, it may not be the most efficient.

Before using the generated JSON Schema file in production with the validate command, it is recommended that users inspect and optimize the derived regex as required.

While doing so, note that the validate command in JSON Schema Validation mode can also support "fancy" regex expressions with look-around and backreferences using the --fancy-regex option.

qsv recognizes UTF-8/ASCII encoded CSV (.csv), SSV (.ssv) and TSV files (.tsv & .tab). CSV files are assumed to have "," (comma) as a delimiter, SSV files ";" (semicolon) and TSV files "\t" (tab). The delimiter is a single ASCII character that can be set with the --delimiter command-line option or the QSV_DEFAULT_DELIMITER environment variable, or automatically detected when QSV_SNIFF_DELIMITER is set.

When using the --output option, qsv will UTF-8 encode the file & automatically change the delimiter used in the generated file based on the file extension - i.e. comma for .csv, semicolon for .ssv, tab for .tsv & .tab files.
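The extension-to-delimiter rule described above can be sketched in a few lines of shell. This is only an illustration of the documented mapping, not qsv's own implementation: `delimiter_for` is a hypothetical helper, the file names are made up, and the fallback to a comma for any other extension is an assumption of this sketch.

```shell
# Hypothetical helper (not part of qsv): print the delimiter that the
# text above associates with a file extension.
delimiter_for() {
  case "$1" in
    *.tsv|*.tab) printf '\t' ;;   # tab for .tsv and .tab
    *.ssv)       printf ';'  ;;   # semicolon for .ssv
    *)           printf ','  ;;   # assumption: comma for everything else
  esac
}

# Show the mapping for a few made-up file names.
for f in data.csv data.ssv data.tsv data.tab; do
  printf '%s -> [%s]\n' "$f" "$(delimiter_for "$f")"
done
```

In qsv itself, an explicit --delimiter option or the QSV_DEFAULT_DELIMITER environment variable can be used when the extension does not reflect the actual delimiter.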

JSON files are recognized & converted to CSV with the json command.

JSONL/NDJSON files are also recognized & converted to/from CSV with the jsonl and tojsonl commands respectively.

The fetch & fetchpost commands also produce JSONL files when invoked without the --new-column option & TSV files with the --report option.

The excel, safenames, sniff, sortcheck & validate commands produce JSON files with their JSON options following the JSON API 1.1 specification, so they can return detailed machine-friendly metadata that can be used by other systems.

The schema command produces a JSON Schema Validation (Draft 2020-12) file with the ".schema.json" file extension, which can be used with the validate command to validate other CSV files with an identical schema.

The describegpt and frequency commands also both produce TOON files. TOON is a compact, human-readable encoding of the JSON data model for LLM prompts.

The excel command recognizes Excel & Open Document Spreadsheet(ODS) files (.xls, .xlsx, .xlsm, .xlsb & .ods files).

Speaking of Excel, if you're having trouble opening qsv-generated CSV files in Excel, set the QSV_OUTPUT_BOM environment variable to add a Byte Order Mark to the beginning of the generated CSV file. This is a workaround for Excel's UTF-8 encoding detection bug.

The to command converts CSVs to Excel .xlsx, LibreOffice/OpenOffice Calc .ods & Data Package formats, and populates PostgreSQL and SQLite databases.

The sqlp command returns query results in CSV, JSON, JSONL, Parquet, Apache Arrow IPC & Apache AVRO formats. Polars SQL also supports reading external files directly in various formats with its read_csv, read_ndjson, read_parquet & read_ipc table functions.

The sniff command can also detect the mime type of any file with the --no-infer or --just-mime options, whether it is local or remote (http and https schemes supported).

It can detect more than 130 file formats, including MS Office/Open Document files, JSON, XML, PDF, PNG, JPEG and specialized geospatial formats like GPX, GML, KML, TML, TMX, TSX, TTML.

Click here for a complete list.

ℹ️ NOTE: When the polars feature is enabled, qsv can also natively read .parquet, .ipc, .arrow, .json & .jsonl files.

The cat, headers, sqlp, to & validate commands have extended input support (🗄️). If the input is - or empty, the command will try to use stdin as input. If it's not, it will check if it's a directory, and if so, add all the files in the directory as input files.

If it's a file, it will first check if it has an .infile-list extension. If it does, it will load the text file and parse each line as an input file path. This is a much faster and more convenient way to process a large number of input files, without having to pass them all as separate command-line arguments. Further, the file paths can be anywhere in the file system, even on separate volumes. If an input file path is not fully qualified, it will be treated as relative to the current working directory. Empty lines and lines starting with # are ignored. Invalid file paths will be logged as warnings and skipped.

For both directory and .infile-list input, snappy compressed files with a .sz or .zip extension will be automatically decompressed.

Finally, if it's just a regular file, it will be treated as a regular input file.
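To make the .infile-list rules above concrete, here is a minimal shell sketch that builds a small list file and walks it the way the text describes: blank lines and lines starting with # are skipped, relative paths resolve against the current working directory, and invalid paths produce a warning and are skipped. The file names and the `resolve_infile_list` helper are hypothetical; this mimics the documented behavior and is not qsv's code.

```shell
# Build a throwaway .infile-list in a temp directory (all names are made up).
workdir="$(mktemp -d)"
printf 'a,b\n1,2\n' > "$workdir/jan.csv"
printf 'a,b\n3,4\n' > "$workdir/feb.csv"
cat > "$workdir/monthly.infile-list" <<EOF
# monthly extracts; comment lines and empty lines are ignored

$workdir/jan.csv
$workdir/feb.csv
$workdir/missing.csv
EOF

# Hypothetical helper: print the usable input paths from an .infile-list.
resolve_infile_list() {
  while IFS= read -r line || [ -n "$line" ]; do
    case "$line" in
      ''|'#'*) continue ;;   # skip empty lines and comments
    esac
    if [ -f "$line" ]; then  # relative paths resolve against the cwd
      printf '%s\n' "$line"
    else
      printf 'warning: skipping invalid path: %s\n' "$line" >&2
    fi
  done < "$1"
}

resolve_infile_list "$workdir/monthly.infile-list"
```

With qsv installed, the list file itself would be passed as the input to a command with extended input support, for example qsv cat rows monthly.infile-list.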

The describegpt, lens, slice & tojsonl commands have limited extended input support (🗃️). They are different in that they only process one file. If provided an .infile-list or a compressed .sz or .zip file, they will only process the first file.

qsv supports automatic compression/decompression using the Snappy frame format. Snappy was chosen instead of more popular compression formats like gzip because it was designed for high-performance streaming compression & decompression (up to 2.58 gb/sec compression, 0.89 gb/sec decompression).

For all commands except the index, extdedup & extsort commands, if the input file has an ".sz" extension, qsv will automatically do streaming decompression as it reads it. Further, if the input file has an extended CSV/TSV ".sz" extension (e.g. nyc311.csv.sz/nyc311.tsv.sz/nyc311.tab.sz), qsv will also use the file extension to determine the delimiter to use.

Similarly, if the --output file has an ".sz" extension, qsv will automatically do streaming compression as it writes it.

If the output file has an extended CSV/TSV ".sz" extension, qsv will also use the file extension to determine the delimiter to use.

Note however that compressed files cannot be indexed, so index-accelerated commands (frequency, schema, split, stats, tojsonl) will not be multithreaded. Random access is also disabled without an index, so slice will not be instantaneous and luau's random-access mode will not be available.

There is also a dedicated snappy command with four subcommands for direct snappy file operations — a multithreaded compress subcommand (4-5x faster than the built-in, single-threaded auto-compression); a decompress subcommand with detailed compression metadata; a check subcommand to quickly inspect if a file has a Snappy header; and a validate subcommand to confirm if a Snappy file is valid.

The snappy command can be used to compress/decompress ANY file, not just CSV/TSV files.

Using the snappy command, we can compress NYC's 311 data (15gb, 28m rows) to 4.95 gb in 5.77 seconds with the multithreaded compress subcommand - 2.58 gb/sec with a 0.33 (3.01:1) compression ratio. With snappy decompress, we can roundtrip decompress the same file in 16.71 seconds - 0.89 gb/sec.

Compare that to zip 3.0, which compressed the same file to 2.9 gb in 248.3 seconds on the same machine - 43x slower at 0.06 gb/sec with a 0.19 (5.17:1) compression ratio - for just an additional 14% (2.45 gb) of saved space. zip also took 4.3x longer to roundtrip decompress the same file in 72 seconds - 0.20 gb/sec.
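Some of the ratios quoted above can be rechecked from the rounded figures in the text. The sketch below uses awk for the division; `ratio` is a hypothetical helper, and the dataset is taken as exactly 15 gb, so results derived from that size may differ from the quoted figures in the last digit.

```shell
# Hypothetical helper: print NUM / DEN rounded to two decimals.
ratio() { awk -v n="$1" -v d="$2" 'BEGIN { printf "%.2f\n", n / d }'; }

ratio 4.95 15      # snappy: compressed size / original size      -> 0.33
ratio 2.9 15       # zip: compressed size / original size         -> 0.19
ratio 248.3 5.77   # zip vs snappy compression time (slowdown)    -> 43.03
ratio 72 16.71     # zip vs snappy decompression time (slowdown)  -> 4.31
```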

ℹ️ NOTE: qsv has additional compression support beyond Snappy:

The sqlp command can:

- Automatically decompress gzip, zstd and zlib compressed input files

- Automatically compress output files when using Arrow, Avro and Parquet formats (via the --format and --compression options)

When the polars feature is enabled, qsv can automatically decompress these compressed file formats:

- CSV: .csv.gz, .csv.zst, .csv.zlib

- TSV/TAB: .tsv.gz, .tsv.zst, .tsv.zlib; .tab.gz, .tab.zst, .tab.zlib

- SSV: .ssv.gz, .ssv.zst, .ssv.zlib

Commands with both Extended and Limited Extended Input support also support the .zip compressed format.

qsv follows the RFC 4180 CSV standard. However, in real life, CSV formats vary significantly & qsv is actually not strictly compliant with the specification so it can process "real-world" CSV files. qsv leverages the awesome Rust CSV crate to read/write CSV files.

Click here to find out more about how qsv conforms to the standard using this crate.

When dealing with "atypical" CSV files, you can use the input & fmt commands to normalize them to be RFC 4180-compliant.

qsv requires UTF-8 encoded input (of which ASCII is a subset).

Should you need to re-encode CSV/TSV files, you can use the input command to "lossy save" to UTF-8 - replacing invalid UTF-8 sequences with � (U+FFFD REPLACEMENT CHARACTER).

Alternatively, if you want to truly transcode to UTF-8, there are several utilities like iconv that you can use to do so on Linux/macOS & Windows.
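A minimal sketch of transcoding with iconv, assuming the source file is UTF-16LE (substitute whatever encoding your file actually uses). The file names are placeholders, and the first two commands only fabricate a small UTF-16LE sample so the example is self-contained.

```shell
# Fabricate a small UTF-16LE CSV (second row is "José,Köln") to stand in for a real input.
printf 'name,city\nJos\303\251,K\303\266ln\n' > expected_utf8.csv
iconv -f UTF-8 -t UTF-16LE expected_utf8.csv > sample_utf16le.csv

# Transcode to UTF-8, the encoding qsv requires.
# -f names the source encoding, -t the target encoding.
iconv -f UTF-16LE -t UTF-8 sample_utf16le.csv > sample_utf8.csv

# The roundtrip should reproduce the original bytes exactly.
cmp expected_utf8.csv sample_utf8.csv && echo "transcoded without loss"
```

Unlike a lossy save, this fails loudly on input that is not valid in the declared source encoding, so nothing is silently replaced with U+FFFD.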

Unlike other modern operating systems, Microsoft Windows' default encoding is UTF16-LE. This will cause problems when redirecting qsv's output to a CSV file in PowerShell & trying to open it with Excel - everything will be in the first column, as the UTF16-LE encoded CSV file will not be properly recognized by Excel.

# the following command will produce a UTF16-LE encoded CSV file on Windows

qsv stats wcp.csv > wcpstats.csv

Which is weird, since you'd think Microsoft's own Excel would properly recognize UTF16-LE encoded CSV files. Regardless, to create a properly UTF-8 encoded file on Windows, use the --output option instead:

# so instead of redirecting stdout to a file on Windows

qsv stats wcp.csv > wcpstats.csv

# do this instead, so it will be properly UTF-8 encoded

qsv stats wcp.csv --output wcpstats.csv

Alternatively, qsv can add a Byte Order Mark (BOM) to the beginning of a CSV to indicate it's UTF-8 encoded. You can do this by setting the QSV_OUTPUT_BOM environment variable to 1.

This will allow Excel on Windows to properly recognize the CSV file as UTF-8 encoded.

Note that this is not a problem with Excel on macOS, as macOS (like most other *nixes) uses UTF-8 as its default encoding.

Nor is it a problem with qsv output files produced on other operating systems, as Excel on Windows can properly recognize UTF-8 encoded CSV files.
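A UTF-8 BOM is the three-byte sequence EF BB BF at the very start of a file. The sketch below checks for it with standard tools; has_utf8_bom is a hypothetical helper name and both sample files are fabricated for the example. In practice you would point it at a file written with QSV_OUTPUT_BOM set to 1.

```shell
# Hypothetical helper: succeeds if the file begins with the UTF-8 BOM (ef bb bf).
has_utf8_bom() {
  [ "$(head -c 3 "$1" | od -An -tx1 | tr -d ' \n')" = "efbbbf" ]
}

# Two fabricated samples: one with a BOM, one without.
printf '\357\273\277field,type\n' > with_bom.csv
printf 'field,type\n' > without_bom.csv

for f in with_bom.csv without_bom.csv; do
  if has_utf8_bom "$f"; then
    echo "$f: UTF-8 BOM present"
  else
    echo "$f: no BOM"
  fi
done
```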

For complex data-wrangling tasks, you can use Luau and Python scripts.

Luau is recommended over Python for complex data-wrangling tasks as it is faster, more memory-efficient, has no external dependencies and has several data-wrangling helper functions as qsv's DSL.

See Luau vs Python for more info.

Another "interpreter" included with qsv is MiniJinja, which is used in the template and fetchpost commands.

qsv supports two memory allocators - mimalloc (default) and the standard allocator.

See Memory Allocator for more info.

It also has Out-of-Memory prevention, with two modes - NORMAL (default) & CONSERVATIVE.

See Out-of-Memory Prevention for more info.

qsv supports an extensive list of environment variables and supports .env files to set them.

For details, see Environment Variables and the dotenv.template.yaml file.

qsv has several feature flags that can be used to enable/disable optional features.

See Features for more info.

qsv's MSRV policy is to require the latest stable Rust version that is supported by Homebrew, currently

QuickSilver's goals, in priority order, are to be:

- As Fast as Possible - To do so, it has frequent releases, an aggressive MSRV policy, takes advantage of CPU features, employs various caching strategies, uses HTTP/2, and is multithreaded when possible and when it makes sense. It also uses the latest dependencies when possible, and will use Cargo patch to get unreleased fixes/features from its dependencies. See Performance for more info.

- Able to Process Very Large Files - Most qsv commands are streaming, using constant memory, and can process arbitrarily large CSV files. For those commands that require loading the entire CSV into memory (denoted by 🤯), qsv has Out-of-Memory prevention, batch processing strategies and "ext"ernal commands that use the disk to process larger than memory files. See Memory Management for more info.

- A Complete Data-Wrangling Toolkit - qsv aims to be a comprehensive data-wrangling toolkit that you can use for quick analysis and investigations, but is also robust enough for production data pipelines. Its many commands are targeted towards common data-wrangling tasks and can be combined/composed into complex data-wrangling scripts with its Luau-based DSL.

  Luau will also serve as the backbone of a whole library of qsv recipes - reusable scripts for common tasks (e.g. street-level geocoding, removing PII, data enrichment, etc.) that prompt for easily modifiable parameters.

- Composable/Interoperable - qsv is designed to be composable, with a focus on interoperability with other common CLI tools like 'awk', 'xargs', 'ripgrep', 'sed', etc., and with well known ETL/ELT tools like Airbyte, Airflow, Pentaho Kettle, etc. Its commands can be combined with other tools via pipes, and it supports other common file formats like JSON/JSONL, Parquet, Arrow IPC, Avro, Excel, ODS, PostgreSQL, SQLite, etc. See File Formats for more info.

- As Portable as Possible - qsv is designed to be portable, with installers on several platforms with an integrated self-update mechanism. In preference order, it supports Linux, macOS and Windows. See Installation Options for more info.

- As Easy to Use as Possible - qsv is designed to be easy to use. As easy-to-use that is, as command line interfaces go 🤷. Its commands have numerous options but have sensible defaults. The usage text is written for a data analyst audience, not developers; and there are numerous examples in the usage text, with the tests doubling as examples as well. With qsv pro, it has much expanded functionality while being easier to use with its Graphical User Interface.

- As Secure as Possible - qsv is designed to be secure. It has no external runtime dependencies, is written in Rust, and its codebase is automatically audited for security vulnerabilities with automated DevSkim, "cargo audit" and Codacy Github Actions workflows.

  It uses the latest stable Rust version, with an aggressive MSRV policy and the latest version of all its dependencies. It has an extensive test suite with ~2,448 tests, including several property tests which randomly generate parameters for oft-used commands.

  Its prebuilt binary archives are zipsigned, so you can verify their integrity. Its self-update mechanism automatically verifies the integrity of the prebuilt binaries archive before applying an update. See Security for more info.

- As Easy to Contribute to as Possible - qsv is designed to be easy to contribute to, with a focus on maintainability. Its modular architecture allows the easy addition of self-contained commands gated by feature flags, the source code is heavily commented, the usage text is embedded, and there are helper functions that make it easy to create additional commands and supporting tests. See Features and Contributing for more info.

QuickSilver's non-goals are to be:

- As Small as Possible - qsv is designed to be small, but not at the expense of performance, features, composability, portability, usability, security or maintainability. However, we do have a qsvlite variant that is ~13% of the size of qsv and a qsvdp variant that is ~12% of the size of qsv. Those variants, however, have reduced functionality. Further, several commands are gated behind feature flags, so you can compile qsv with only the features you need.

- Multi-lingual - qsv's usage text and messages are English-only. There are no plans to support other languages. This does not mean it can only process English input files.

  It can process well-formed CSVs in any language so long as it's UTF-8 encoded. Further, it supports alternate delimiters/separators other than comma; the apply whatlang operation detects 69 languages; and its apply thousands, currency and eudex operations support different languages and country conventions for number, currency and date parsing/formatting.

  Finally, though the default Geonames index of the geocode command is English-only, the index can be rebuilt with the geocode index-update subcommand with the --languages option to return place names in multiple languages (with support for 254 languages).

qsv has ~2,448 tests in the tests directory.

Each command has its own test suite in a separate file with the convention test_<COMMAND>.rs.

Apart from preventing regressions, the tests also serve as good illustrative examples, and are often linked from the usage text of each corresponding command.

To test each binary variant:

# to test qsv

cargo test --features all_features

# to test qsvlite

cargo test --features lite

# to test all tests with "stats" in the name with qsvlite

cargo test stats --features lite

# to test qsvdp

cargo test --features datapusher_plus

# to test a specific command

# here we test only stats and use the

# t alias for test and the -F shortcut for --features

cargo t stats -F all_features

# to test a specific command with a specific feature

# here we test only luau command with the luau feature

cargo t luau -F feature_capable,luau

# to test the count command with multiple features

# we use "test_count" as we don't want to run other tests

# that have "count" in the testname - e.g. test_geocode_countryinfo

cargo t test_count -F feature_capable,luau,polars

# to test using the standard allocator

# instead of the default mimalloc allocator

cargo t --no-default-features -F all_features

Dual-licensed under MIT or the UNLICENSE.

qsv is a fork of the popular xsv utility. Building on this solid foundation, it was forked in Sept 2021 and has since evolved to a general purpose data wrangling toolkit, adding numerous commands and features. See FAQ for more details.

| qsv was made possible by |
|---|
| Standards-based, best-of-breed, open source solutions to make your Data Useful, Usable & Used. |

This project is unrelated to Intel's Quick Sync Video.

- The luau feature is NOT enabled by default on the prebuilt binaries for musl platforms. This is because we cross-compile using GitHub Action Runners using Ubuntu 20.04 LTS with the musl libc toolchain. However, Ubuntu is a glibc-based, not a musl-based distro. We get around this by cross-compiling.

  Unfortunately, this prevents us from cross-compiling binaries with the luau feature enabled as doing so requires statically linking the host OS libc library. If you need the luau feature on musl, you will need to compile from source on your own musl-based Linux distro (e.g. Alpine, Void, etc.).

- Of course, you'll also need a linker & a C compiler. Linux users should generally install GCC or Clang, according to their distribution's documentation. For example, if you use Ubuntu, you can install the build-essential package. On macOS, you can get a C compiler by running xcode-select --install. For Windows, this means installing Visual Studio 2022. When prompted for workloads, include "Desktop Development with C++", the Windows 10 or 11 SDK & the English language pack, along with any other language packs you require.

- This is the same regex engine used by ripgrep - the blazingly fast grep replacement that powers Visual Studio Code's magical "Find in Files" feature.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for qsv

Similar Open Source Tools

qsv

qsv is a command line program for querying, slicing, indexing, analyzing, filtering, enriching, transforming, sorting, validating, joining, formatting & converting tabular data (CSV, spreadsheets, DBs, parquet, etc). Commands are simple, composable & 'blazing fast'. It is a blazing-fast data-wrangling toolkit with a focus on speed, processing very large files, and being a complete data-wrangling toolkit. It is designed to be portable, easy to use, secure, and easy to contribute to. qsv follows the RFC 4180 CSV standard, requires UTF-8 encoding, and supports various file formats. It has extensive shell completion support, automatic compression/decompression using Snappy, and supports environment variables and dotenv files. qsv has a comprehensive test suite and is dual-licensed under MIT or the UNLICENSE.

lhotse

Lhotse is a Python library designed to make speech and audio data preparation flexible and accessible. It aims to attract a wider community to speech processing tasks by providing a Python-centric design and an expressive command-line interface. Lhotse offers standard data preparation recipes, PyTorch Dataset classes for speech tasks, and efficient data preparation for model training with audio cuts. It supports data augmentation, feature extraction, and feature-space cut mixing. The tool extends Kaldi's data preparation recipes with seamless PyTorch integration, human-readable text manifests, and convenient Python classes.

debug-gym

debug-gym is a text-based interactive debugging framework designed for debugging Python programs. It provides an environment where agents can interact with code repositories, use various tools like pdb and grep to investigate and fix bugs, and propose code patches. The framework supports different LLM backends such as OpenAI, Azure OpenAI, and Anthropic. Users can customize tools, manage environment states, and run agents to debug code effectively. debug-gym is modular, extensible, and suitable for interactive debugging tasks in a text-based environment.

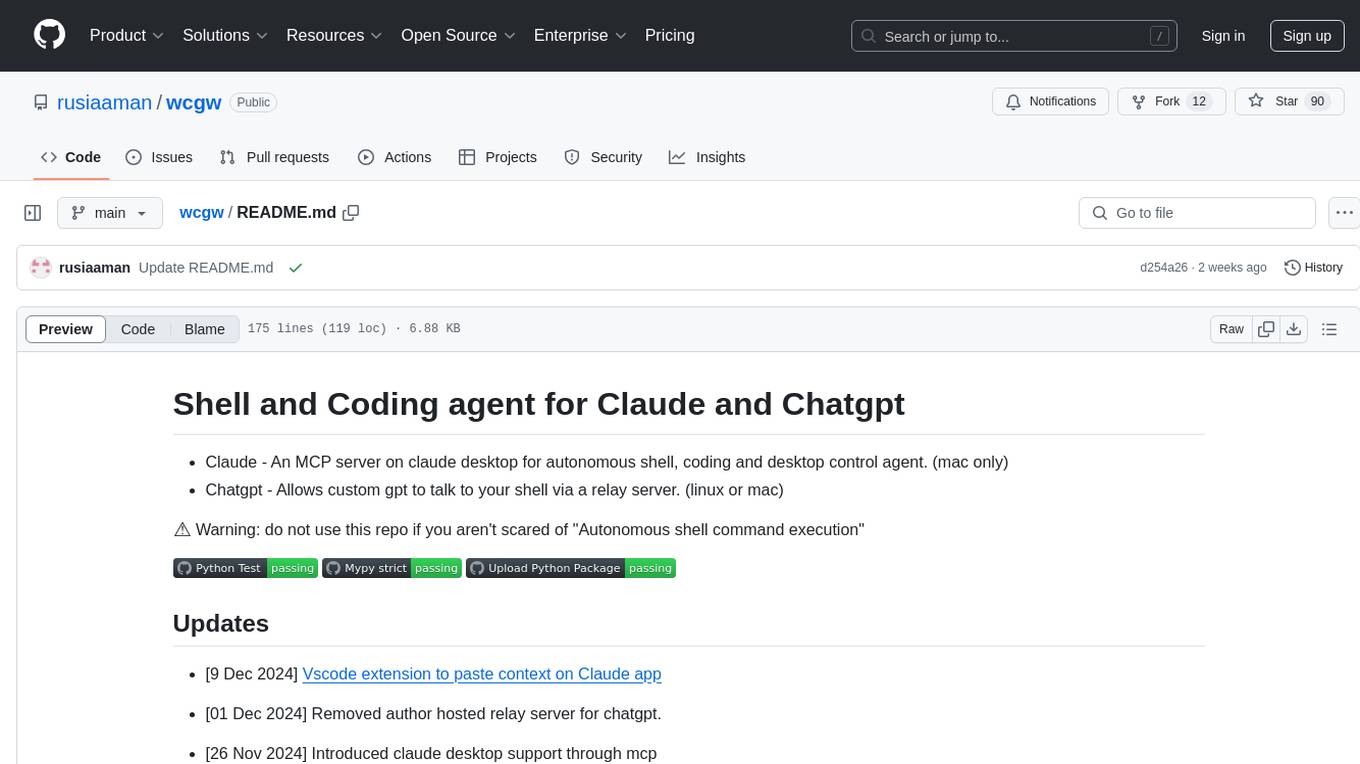

wcgw

wcgw is a shell and coding agent designed for Claude and Chatgpt. It provides full shell access with no restrictions, desktop control on Claude for screen capture and control, interactive command handling, large file editing, and REPL support. Users can use wcgw to create, execute, and iterate on tasks, such as solving problems with Python, finding code instances, setting up projects, creating web apps, editing large files, and running server commands. Additionally, wcgw supports computer use on Docker containers for desktop control. The tool can be extended with a VS Code extension for pasting context on Claude app and integrates with Chatgpt for custom GPT interactions.

safety-tooling

This repository, safety-tooling, is designed to be shared across various AI Safety projects. It provides an LLM API with a common interface for OpenAI, Anthropic, and Google models. The aim is to facilitate collaboration among AI Safety researchers, especially those with limited software engineering backgrounds, by offering a platform for contributing to a larger codebase. The repo can be used as a git submodule for easy collaboration and updates. It also supports pip installation for convenience. The repository includes features for installation, secrets management, linting, formatting, Redis configuration, testing, dependency management, inference, finetuning, API usage tracking, and various utilities for data processing and experimentation.

chatgpt-cli

ChatGPT CLI provides a powerful command-line interface for seamless interaction with ChatGPT models via OpenAI and Azure. It features streaming capabilities, extensive configuration options, and supports various modes like streaming, query, and interactive mode. Users can manage thread-based context, sliding window history, and provide custom context from any source. The CLI also offers model and thread listing, advanced configuration options, and supports GPT-4, GPT-3.5-turbo, and Perplexity's models. Installation is available via Homebrew or direct download, and users can configure settings through default values, a config.yaml file, or environment variables.

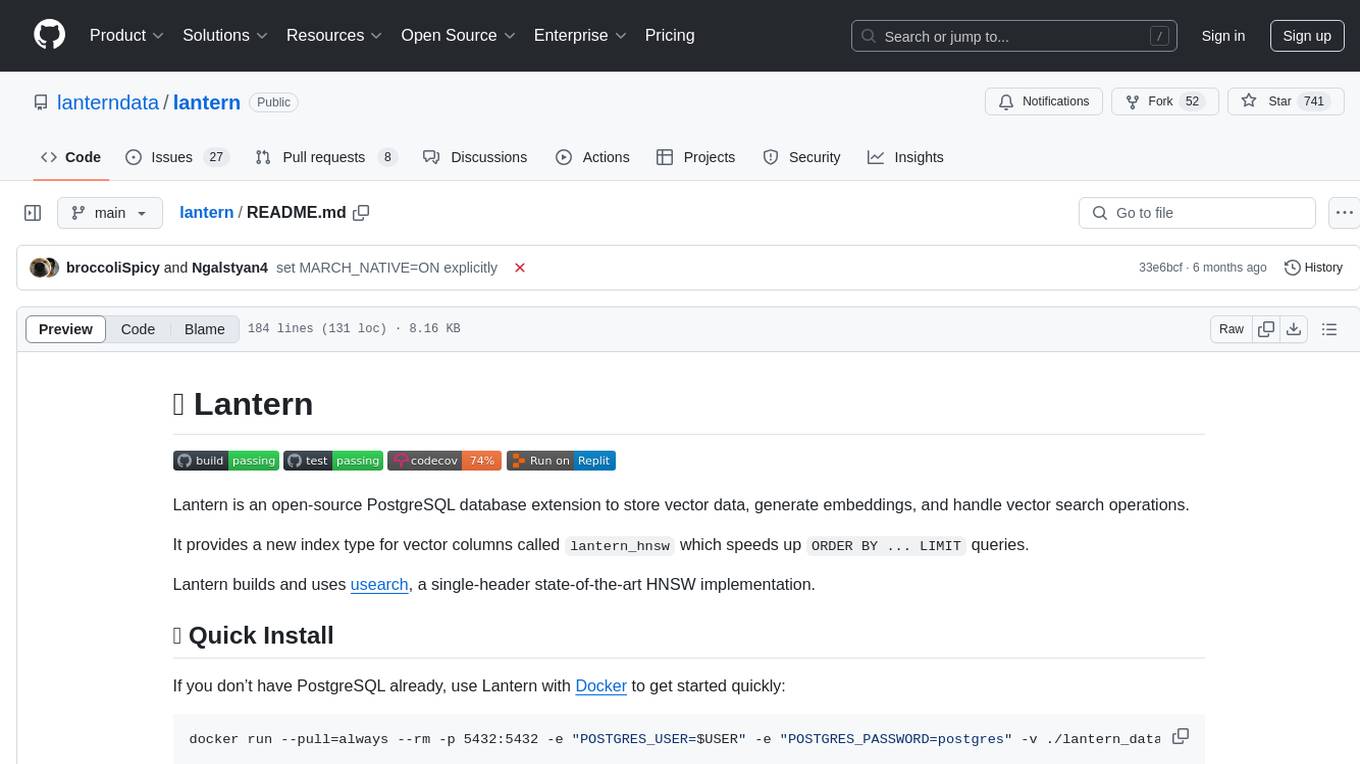

lantern

Lantern is an open-source PostgreSQL database extension designed to store vector data, generate embeddings, and handle vector search operations efficiently. It introduces a new index type called 'lantern_hnsw' for vector columns, which speeds up 'ORDER BY ... LIMIT' queries. Lantern utilizes the state-of-the-art HNSW implementation called usearch. Users can easily install Lantern using Docker, Homebrew, or precompiled binaries. The tool supports various distance functions, index construction parameters, and operator classes for efficient querying. Lantern offers features like embedding generation, interoperability with pgvector, parallel index creation, and external index graph generation. It aims to provide superior performance metrics compared to other similar tools and has a roadmap for future enhancements such as cloud-hosted version, hardware-accelerated distance metrics, industry-specific application templates, and support for version control and A/B testing of embeddings.

blinkid-android

The BlinkID Android SDK is a comprehensive solution for implementing secure document scanning and extraction. It offers powerful capabilities for extracting data from a wide range of identification documents. The SDK provides features for integrating document scanning into Android apps, including camera requirements, SDK resource pre-bundling, customizing the UX, changing default strings and localization, troubleshooting integration difficulties, and using the SDK through various methods. It also offers options for completely custom UX with low-level API integration. The SDK size is optimized for different processor architectures, and API documentation is available for reference. For any questions or support, users can contact the Microblink team at help.microblink.com.

termax

Termax is an LLM agent in your terminal that converts natural language to commands. It is featured by: - Personalized Experience: Optimize the command generation with RAG. - Various LLMs Support: OpenAI GPT, Anthropic Claude, Google Gemini, Mistral AI, and more. - Shell Extensions: Plugin with popular shells like `zsh`, `bash` and `fish`. - Cross Platform: Able to run on Windows, macOS, and Linux.

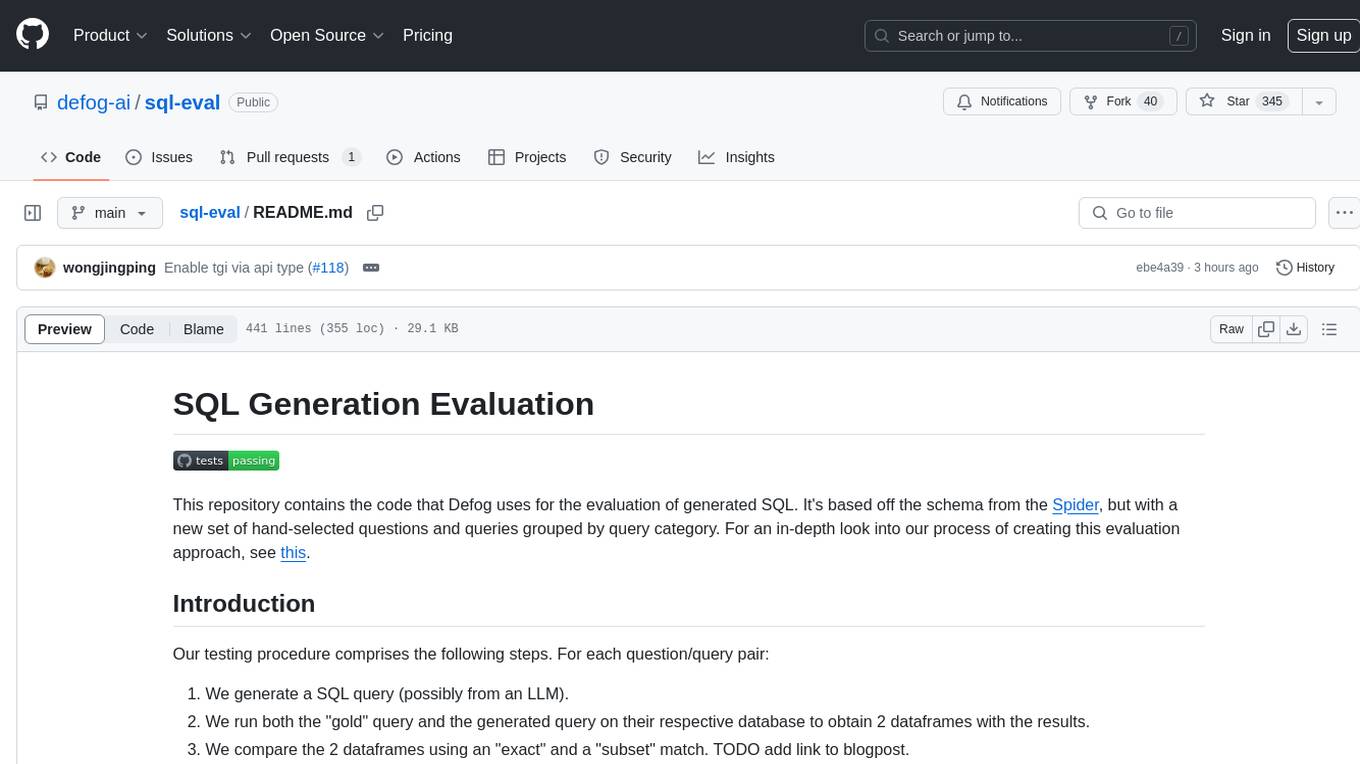

sql-eval

This repository contains the code that Defog uses for the evaluation of generated SQL. It's based off the schema from the Spider, but with a new set of hand-selected questions and queries grouped by query category. The testing procedure involves generating a SQL query, running both the 'gold' query and the generated query on their respective database to obtain dataframes with the results, comparing the dataframes using an 'exact' and a 'subset' match, logging these alongside other metrics of interest, and aggregating the results for reporting. The repository provides comprehensive instructions for installing dependencies, starting a Postgres instance, importing data into Postgres, importing data into Snowflake, using private data, implementing a query generator, and running the test with different runners.

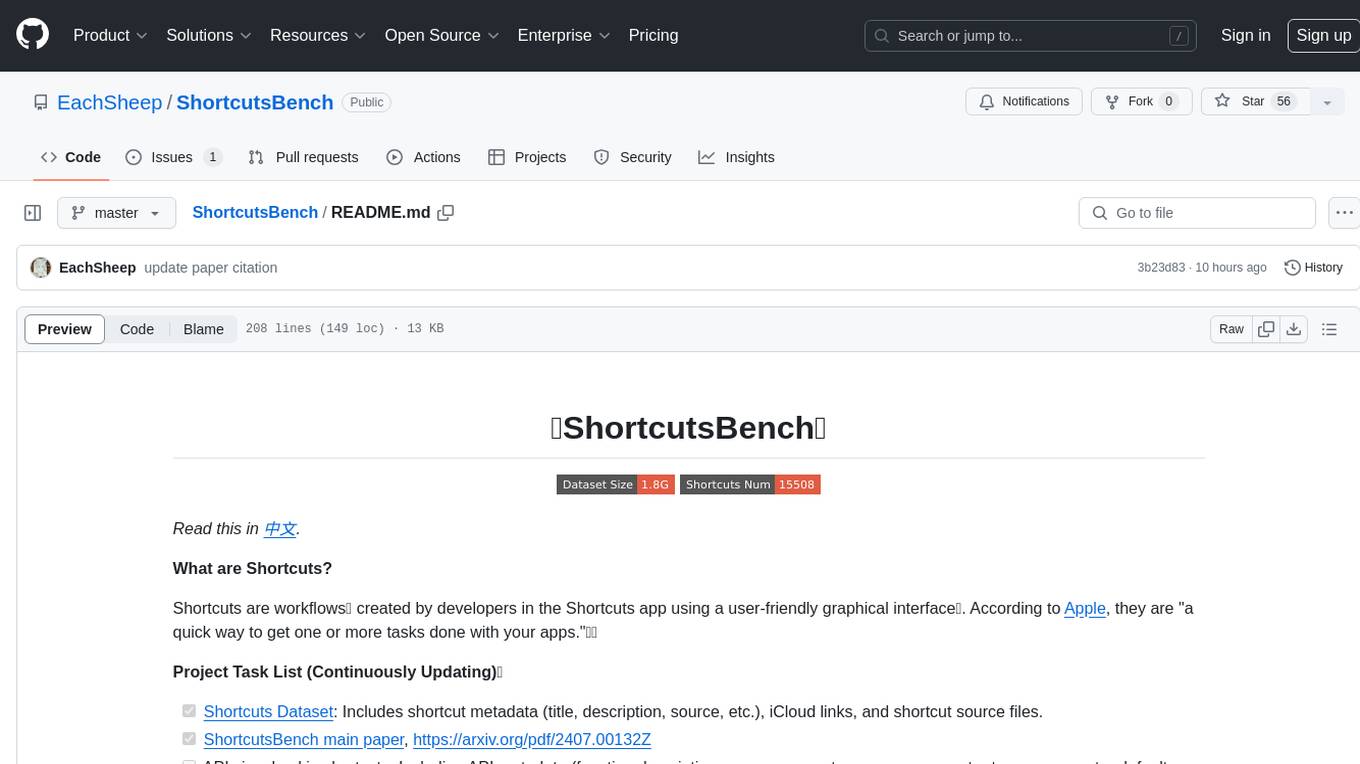

ShortcutsBench

ShortcutsBench is a project focused on collecting and analyzing workflows created in the Shortcuts app, providing a dataset of shortcut metadata, source files, and API information. It aims to study the integration of large language models with Apple devices, particularly focusing on the role of shortcuts in enhancing user experience. The project offers insights for Shortcuts users, enthusiasts, and researchers to explore, customize workflows, and study automated workflows, low-code programming, and API-based agents.

llm-memorization

The 'llm-memorization' project is a tool designed to index, archive, and search conversations with a local LLM using a SQLite database enriched with automatically extracted keywords. It aims to provide personalized context at the start of a conversation by adding memory information to the initial prompt. The tool automates queries from local LLM conversational management libraries, offers a hybrid search function, enhances prompts based on posed questions, and provides an all-in-one graphical user interface for data visualization. It supports both French and English conversations and prompts for bilingual use.

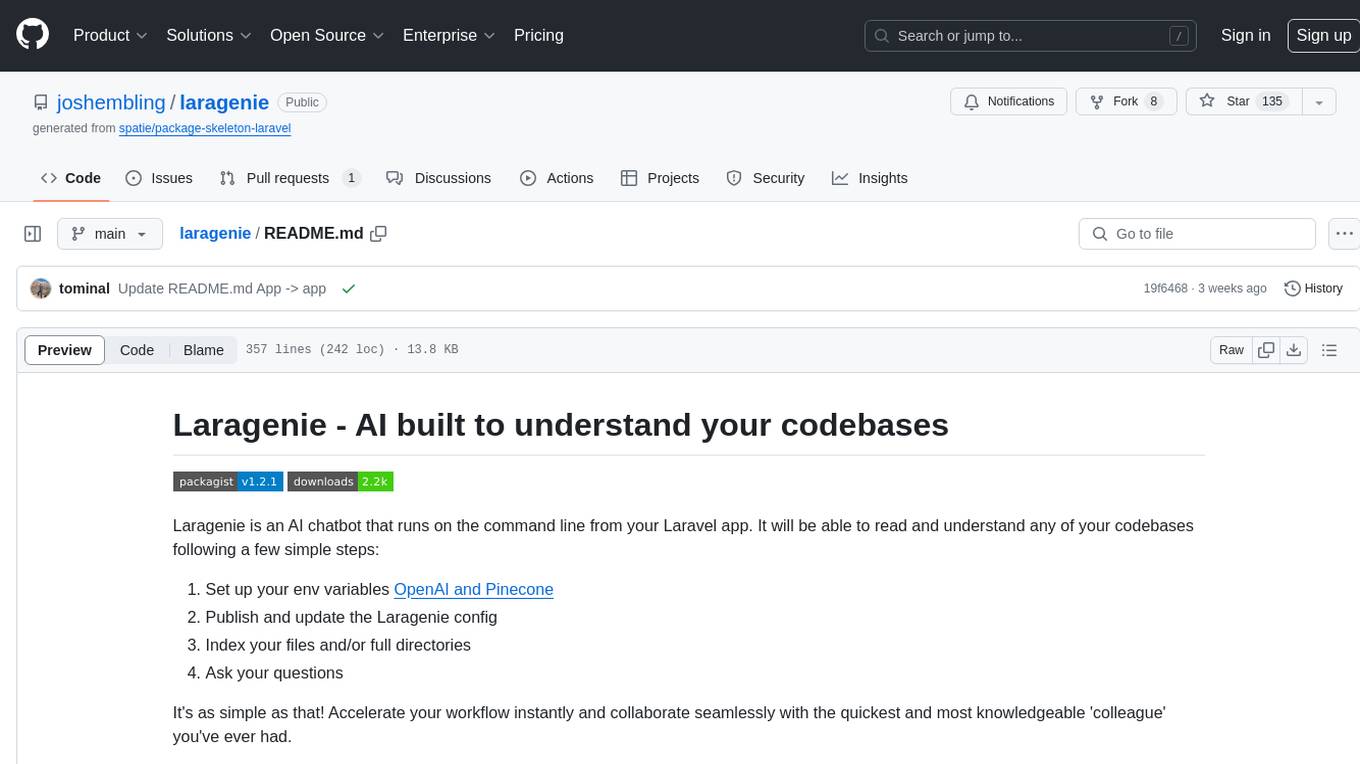

laragenie

Laragenie is an AI chatbot designed to understand and assist developers with their codebases. It runs on the command line from a Laravel app, helping developers onboard to new projects, understand codebases, and provide daily support. Laragenie accelerates workflow and collaboration by indexing files and directories, allowing users to ask questions and receive AI-generated responses. It supports OpenAI and Pinecone for processing and indexing data, making it a versatile tool for any repo in any language.

paper-qa

PaperQA is a minimal package for question and answering from PDFs or text files, providing very good answers with in-text citations. It uses OpenAI Embeddings to embed and search documents, and includes a process of embedding docs, queries, searching for top passages, creating summaries, using an LLM to re-score and select relevant summaries, putting summaries into prompt, and generating answers. The tool can be used to answer specific questions related to scientific research by leveraging citations and relevant passages from documents.

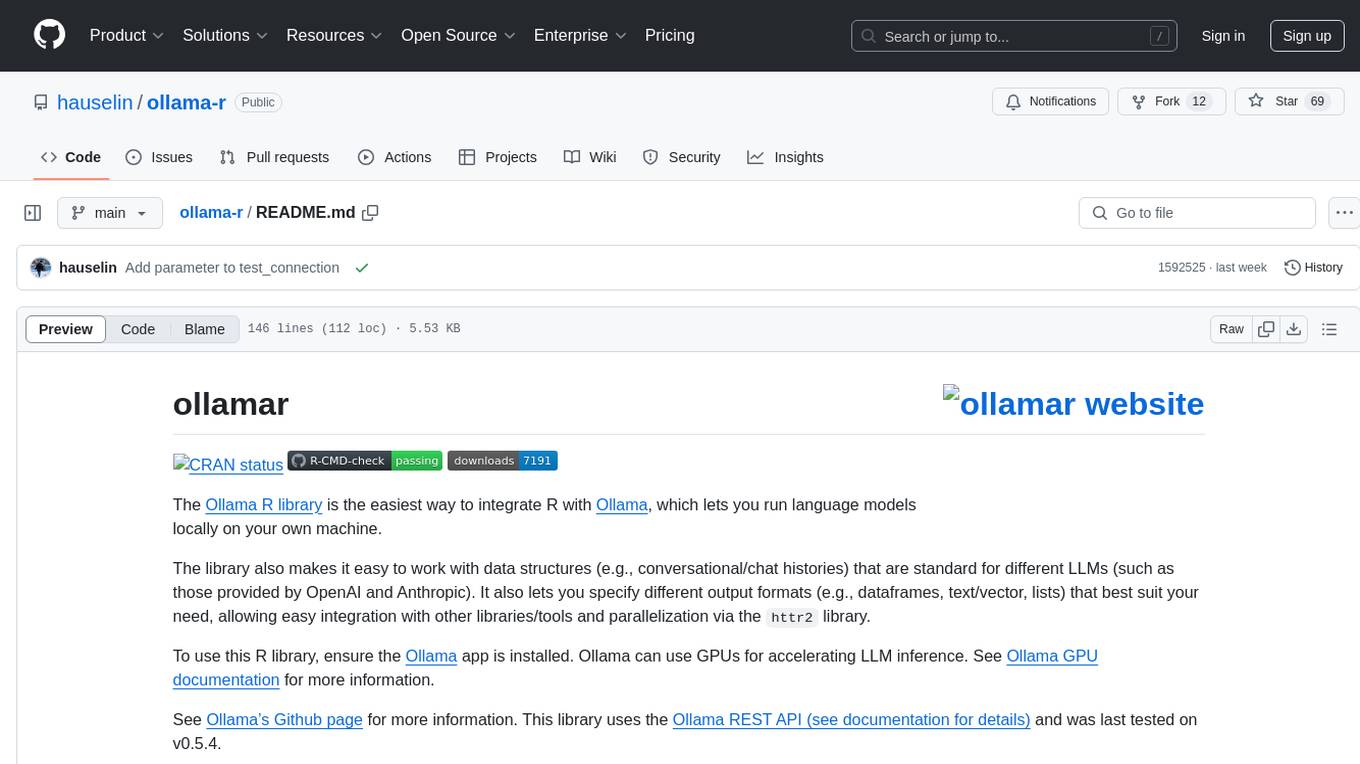

ollama-r

The Ollama R library provides an easy way to integrate R with Ollama for running language models locally on your machine. It supports working with standard data structures for different LLMs, offers various output formats, and enables integration with other libraries/tools. The library uses the Ollama REST API and requires the Ollama app to be installed, with GPU support for accelerating LLM inference. It is inspired by Ollama Python and JavaScript libraries, making it familiar for users of those languages. The installation process involves downloading the Ollama app, installing the 'ollamar' package, and starting the local server. Example usage includes testing connection, downloading models, generating responses, and listing available models.

hash

HASH is a self-building, open-source database which grows, structures and checks itself. With it, we're creating a platform for decision-making, which helps you integrate, understand and use data in a variety of different ways.

For similar tasks

Azure-Analytics-and-AI-Engagement

The Azure-Analytics-and-AI-Engagement repository provides packaged Industry Scenario DREAM Demos with ARM templates (Containing a demo web application, Power BI reports, Synapse resources, AML Notebooks etc.) that can be deployed in a customer’s subscription using the CAPE tool within a matter of few hours. Partners can also deploy DREAM Demos in their own subscriptions using DPoC.

sorrentum

Sorrentum is an open-source project that aims to combine open-source development, startups, and brilliant students to build machine learning, AI, and Web3 / DeFi protocols geared towards finance and economics. The project provides opportunities for internships, research assistantships, and development grants, as well as the chance to work on cutting-edge problems, learn about startups, write academic papers, and get internships and full-time positions at companies working on Sorrentum applications.

tidb

TiDB is an open-source distributed SQL database that supports Hybrid Transactional and Analytical Processing (HTAP) workloads. It is MySQL compatible and features horizontal scalability, strong consistency, and high availability.

zep-python

Zep is an open-source platform for building and deploying large language model (LLM) applications. It provides a suite of tools and services that make it easy to integrate LLMs into your applications, including chat history memory, embedding, vector search, and data enrichment. Zep is designed to be scalable, reliable, and easy to use, making it a great choice for developers who want to build LLM-powered applications quickly and easily.

telemetry-airflow

This repository codifies the Airflow cluster that is deployed at workflow.telemetry.mozilla.org (behind SSO) and commonly referred to as "WTMO" or simply "Airflow". Some links relevant to users and developers of WTMO: * The `dags` directory in this repository contains some custom DAG definitions * Many of the DAGs registered with WTMO don't live in this repository, but are instead generated from ETL task definitions in bigquery-etl * The Data SRE team maintains a WTMO Developer Guide (behind SSO)

mojo

Mojo is a new programming language that bridges the gap between research and production by combining Python syntax and ecosystem with systems programming and metaprogramming features. Mojo is still young, but it is designed to become a superset of Python over time.

pandas-ai

PandasAI is a Python library that makes it easy to ask questions to your data in natural language. It helps you to explore, clean, and analyze your data using generative AI.

databend

Databend is an open-source cloud data warehouse that serves as a cost-effective alternative to Snowflake. With its focus on fast query execution and data ingestion, it's designed for complex analysis of the world's largest datasets.

For similar jobs

Azure-Analytics-and-AI-Engagement

The Azure-Analytics-and-AI-Engagement repository provides packaged Industry Scenario DREAM Demos with ARM templates (Containing a demo web application, Power BI reports, Synapse resources, AML Notebooks etc.) that can be deployed in a customer’s subscription using the CAPE tool within a matter of few hours. Partners can also deploy DREAM Demos in their own subscriptions using DPoC.

skyvern

Skyvern automates browser-based workflows using LLMs and computer vision. It provides a simple API endpoint to fully automate manual workflows, replacing brittle or unreliable automation solutions. Traditional approaches to browser automations required writing custom scripts for websites, often relying on DOM parsing and XPath-based interactions which would break whenever the website layouts changed. Instead of only relying on code-defined XPath interactions, Skyvern adds computer vision and LLMs to the mix to parse items in the viewport in real-time, create a plan for interaction and interact with them. This approach gives us a few advantages: 1. Skyvern can operate on websites it’s never seen before, as it’s able to map visual elements to actions necessary to complete a workflow, without any customized code 2. Skyvern is resistant to website layout changes, as there are no pre-determined XPaths or other selectors our system is looking for while trying to navigate 3. Skyvern leverages LLMs to reason through interactions to ensure we can cover complex situations. Examples include: 1. If you wanted to get an auto insurance quote from Geico, the answer to a common question “Were you eligible to drive at 18?” could be inferred from the driver receiving their license at age 16 2. If you were doing competitor analysis, it’s understanding that an Arnold Palmer 22 oz can at 7/11 is almost definitely the same product as a 23 oz can at Gopuff (even though the sizes are slightly different, which could be a rounding error!) Want to see examples of Skyvern in action? Jump to #real-world-examples-of- skyvern

pandas-ai

PandasAI is a Python library that makes it easy to ask questions to your data in natural language. It helps you to explore, clean, and analyze your data using generative AI.

vanna

Vanna is an open-source Python framework for SQL generation and related functionality. It uses Retrieval-Augmented Generation (RAG) to train a model on your data, which can then be used to ask questions and get back SQL queries. Vanna is designed to be portable across different LLMs and vector databases, and it supports any SQL database. It is also secure and private, as your database contents are never sent to the LLM or the vector database.

databend

Databend is an open-source cloud data warehouse that serves as a cost-effective alternative to Snowflake. With its focus on fast query execution and data ingestion, it's designed for complex analysis of the world's largest datasets.

Avalonia-Assistant

Avalonia-Assistant is an open-source desktop intelligent assistant that aims to provide a user-friendly interactive experience based on the Avalonia UI framework and the integration of Semantic Kernel with OpenAI or other large LLM models. By utilizing Avalonia-Assistant, you can perform various desktop operations through text or voice commands, enhancing your productivity and daily office experience.

marvin

Marvin is a lightweight AI toolkit for building natural language interfaces that are reliable, scalable, and easy to trust. Each of Marvin's tools is simple and self-documenting, using AI to solve common but complex challenges like entity extraction, classification, and generating synthetic data. Each tool is independent and incrementally adoptable, so you can use them on their own or in combination with any other library. Marvin is also multi-modal, supporting both image and audio generation as well using images as inputs for extraction and classification. Marvin is for developers who care more about _using_ AI than _building_ AI, and we are focused on creating an exceptional developer experience. Marvin users should feel empowered to bring tightly-scoped "AI magic" into any traditional software project with just a few extra lines of code. Marvin aims to merge the best practices for building dependable, observable software with the best practices for building with generative AI into a single, easy-to-use library. It's a serious tool, but we hope you have fun with it. Marvin is open-source, free to use, and made with 💙 by the team at Prefect.

activepieces

Activepieces is an open source replacement for Zapier, designed to be extensible through a type-safe pieces framework written in Typescript. It features a user-friendly Workflow Builder with support for Branches, Loops, and Drag and Drop. Activepieces integrates with Google Sheets, OpenAI, Discord, and RSS, along with 80+ other integrations. The list of supported integrations continues to grow rapidly, thanks to valuable contributions from the community. Activepieces is an open ecosystem; all piece source code is available in the repository, and they are versioned and published directly to npmjs.com upon contributions. If you cannot find a specific piece on the pieces roadmap, please submit a request by visiting the following link: Request Piece Alternatively, if you are a developer, you can quickly build your own piece using our TypeScript framework. For guidance, please refer to the following guide: Contributor's Guide