RD-Agent

Research and development (R&D) is crucial for the enhancement of industrial productivity, especially in the AI era, where the core aspects of R&D are mainly focused on data and models. We are committed to automating these high-value generic R&D processes through R&D-Agent, which lets AI drive data-driven AI. 🔗https://aka.ms/RD-Agent-Tech-Report

Stars: 10915

RD-Agent is a tool designed to automate critical aspects of industrial R&D processes, focusing on data-driven scenarios to streamline model and data development. It aims to propose new ideas ('R') and implement them ('D') automatically, leading to solutions of significant industrial value. The tool supports scenarios like Automated Quantitative Trading, Data Mining Agent, Research Copilot, and more, with a framework to push the boundaries of research in data science. Users can create a Conda environment, install the RDAgent package from PyPI, configure a GPT model, and run various applications for tasks like quantitative trading, model evolution, medical prediction, and more. The tool is intended to enhance R&D processes and boost productivity in industrial settings.

README:

🖥️ Live Demo | 🎥 Demo Video

| 🗞️ News | 📝 Description |
|---|---|
| NeurIPS 2025 Acceptance | We are thrilled to announce that our paper R&D-Agent-Quant has been accepted to NeurIPS 2025 |
| Technical Report Release | Overall framework description and results on MLE-bench |
| R&D-Agent-Quant Release | Apply R&D-Agent to quant trading |
| MLE-Bench Results Released | R&D-Agent currently leads as the top-performing machine learning engineering agent on MLE-bench |
| Support LiteLLM Backend | We now fully support LiteLLM as our default backend for integration with multiple LLM providers. |
| General Data Science Agent | Data Science Agent |
| Kaggle Scenario release | We release Kaggle Agent, try the new features! |
| Official WeChat group release | We created a WeChat group, welcome to join! (🗪QR Code) |
| Official Discord release | We launch our first chatting channel in Discord (🗪) |
| First release | R&D-Agent is released on GitHub |

MLE-bench is a comprehensive benchmark evaluating the performance of AI agents on machine learning engineering tasks. Utilizing datasets from 75 Kaggle competitions, MLE-bench provides robust assessments of AI systems' capabilities in real-world ML engineering scenarios.

R&D-Agent currently leads as the top-performing machine learning engineering agent on MLE-bench:

| Agent | Low == Lite (%) | Medium (%) | High (%) | All (%) |
|---|---|---|---|---|
| R&D-Agent o3(R)+GPT-4.1(D) | 51.52 ± 6.9 | 19.3 ± 5.5 | 26.67 ± 0 | 30.22 ± 1.5 |
| R&D-Agent o1-preview | 48.18 ± 2.49 | 8.95 ± 2.36 | 18.67 ± 2.98 | 22.4 ± 1.1 |
| AIDE o1-preview | 34.3 ± 2.4 | 8.8 ± 1.1 | 10.0 ± 1.9 | 16.9 ± 1.1 |

Notes:

- o3(R)+GPT-4.1(D): This version is designed to both reduce the average time per loop and leverage a cost-effective combination of backend LLMs by integrating the Research Agent (o3) with the Development Agent (GPT-4.1).

- AIDE o1-preview: Represents the previously best public result on MLE-bench as reported in the original MLE-bench paper.

- The average and standard deviation for R&D-Agent o1-preview are based on 5 independent seeds, and those for R&D-Agent o3(R)+GPT-4.1(D) are based on 6 seeds.

- According to MLE-Bench, the 75 competitions are categorized into three levels of complexity: Low==Lite if we estimate that an experienced ML engineer can produce a sensible solution in under 2 hours, excluding the time taken to train any models; Medium if it takes between 2 and 10 hours; and High if it takes more than 10 hours.
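The complexity thresholds and the "mean ± standard deviation over seeds" reporting described in the notes can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function names are hypothetical, assigning the exact 2-hour and 10-hour boundaries to Medium is an assumption, the choice of the sample standard deviation is an assumption (the notes do not say which estimator is used), and the example scores are made up rather than taken from the table.

```python
from statistics import mean, stdev


def complexity_level(estimated_hours: float) -> str:
    """Map an estimated solution time (excluding model training) to a level."""
    if estimated_hours < 2:
        return "Low"      # also called "Lite"
    if estimated_hours <= 10:
        return "Medium"   # assumption: both boundary values count as Medium
    return "High"


def summarize_seeds(scores: list[float]) -> tuple[float, float]:
    """Return (mean, sample standard deviation) over independent seeds."""
    return mean(scores), stdev(scores)


# Hypothetical per-seed scores (%), for illustration only.
made_up_scores = [20.0, 22.0, 24.0]
avg, std = summarize_seeds(made_up_scores)
print(f"{avg:.2f} ± {std:.2f}")
print(complexity_level(1.5), complexity_level(6), complexity_level(12))
```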

You can inspect the detailed runs of the above results online.

For running R&D-Agent on MLE-bench, refer to MLE-bench Guide: Running ML Engineering via MLE-bench

R&D-Agent for Quantitative Finance, in short RD-Agent(Q), is the first data-centric, multi-agent framework designed to automate the full-stack research and development of quantitative strategies via coordinated factor-model co-optimization.

Extensive experiments in real stock markets show that, at a cost under $10, RD-Agent(Q) achieves approximately 2× higher ARR than benchmark factor libraries while using over 70% fewer factors. It also surpasses state-of-the-art deep time-series models under smaller resource budgets. Its alternating factor–model optimization further delivers an excellent trade-off between predictive accuracy and strategy robustness.

You can learn more details about RD-Agent(Q) through the paper and reproduce it through the documentation.

Check out our demo video showcasing the current progress of our Data Science Agent under development:

https://github.com/user-attachments/assets/3eccbecb-34a4-4c81-bce4-d3f8862f7305

R&D-Agent aims to automate the most critical and valuable aspects of the industrial R&D process, and we begin by focusing on data-driven scenarios to streamline the development of models and data. Methodologically, we have identified a framework with two key components: 'R' for proposing new ideas and 'D' for implementing them. We believe that the automatic evolution of R&D will lead to solutions of significant industrial value.

R&D is a very general scenario. R&D-Agent can serve as your

- 💰 Automatic Quant Factory (🎥Demo Video|▶️ YouTube)

- 🤖 Data Mining Agent: Iteratively proposing data & models (🎥Demo Video 1|▶️ YouTube) (🎥Demo Video 2|▶️ YouTube) and implementing them by gaining knowledge from data.

- 🦾 Research Copilot: Auto read research papers (🎥Demo Video|▶️ YouTube) / financial reports (🎥Demo Video|▶️ YouTube) and implement model structures or build datasets.

- 🤖 Kaggle Agent: Auto Model Tuning and Feature Engineering (🎥Demo Video Coming Soon...) and implementing them to achieve more in competitions.

- ...

You can click the links above to view the demo. We're continuously adding more methods and scenarios to the project to enhance your R&D processes and boost productivity.

Additionally, you can take a closer look at the examples in our 🖥️ Live Demo.

You can try the above demos by running the following commands:

Users must ensure Docker is installed before attempting most scenarios. Please refer to the official 🐳Docker page for installation instructions.

Ensure the current user can run Docker commands without using sudo. You can verify this by executing `docker run hello-world`.
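If you prefer to script this prerequisite check, the following is a minimal sketch that runs the same `docker run hello-world` command as the current user. The function name is hypothetical, the `--rm` flag (which removes the test container afterwards) and the timeout are additions not mentioned above, and this is not how `rdagent health_check` is implemented.

```python
import shutil
import subprocess


def docker_runs_without_sudo(timeout_s: float = 120.0) -> bool:
    """Return True if `docker run hello-world` succeeds for the current user."""
    if shutil.which("docker") is None:
        return False  # the Docker CLI is not on PATH
    try:
        result = subprocess.run(
            ["docker", "run", "--rm", "hello-world"],
            capture_output=True,
            timeout=timeout_s,
        )
    except (subprocess.TimeoutExpired, OSError):
        return False  # daemon unreachable, too slow, or CLI could not be started
    return result.returncode == 0
```

A `False` result usually means Docker is missing, the daemon is not running, or the user lacks permission to talk to it without sudo.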

- Create a new conda environment with Python (3.10 and 3.11 are well-tested in our CI):

conda create -n rdagent python=3.10

- Activate the environment:

conda activate rdagent

- You can directly install the R&D-Agent package from PyPI:

pip install rdagent

- If you want to try the latest version or contribute to RD-Agent, you can install it from the source and follow the development setup:

    git clone https://github.com/microsoft/RD-Agent
    cd RD-Agent
    make dev

More details can be found in the development setup.

- rdagent provides a health check that currently checks two things.

- whether the docker installation was successful.

- whether the default port used by the rdagent ui is occupied.

rdagent health_check --no-check-env

- The demos require the following abilities:

- ChatCompletion

- json_mode

- embedding query

You can set your Chat Model and Embedding Model in the following ways:

🔥 Attention: We now provide experimental support for DeepSeek models! You can use DeepSeek's official API for cost-effective and high-performance inference. See the configuration example below for DeepSeek setup.

- Using LiteLLM (Default): We now support LiteLLM as a backend for integration with multiple LLM providers. You can configure in multiple ways:

Option 1: Unified API base for both models

Configuration Example: OpenAI Setup:

    cat << EOF > .env
    # Set to any model supported by LiteLLM.
    CHAT_MODEL=gpt-4o
    EMBEDDING_MODEL=text-embedding-3-small
    # Configure unified API base
    OPENAI_API_BASE=<your_unified_api_base>
    OPENAI_API_KEY=<replace_with_your_openai_api_key>
    EOF

Configuration Example: Azure OpenAI Setup:

Before using this configuration, please confirm in advance that your Azure OpenAI API key supports embedding models.

    cat << EOF > .env
    EMBEDDING_MODEL=azure/<Model deployment supporting embedding>
    CHAT_MODEL=azure/<your deployment name>
    AZURE_API_KEY=<replace_with_your_openai_api_key>
    AZURE_API_BASE=<your_unified_api_base>
    AZURE_API_VERSION=<azure api version>
    EOF

Option 2: Separate API bases for Chat and Embedding models

    cat << EOF > .env
    # Set to any model supported by LiteLLM.
    # Configure separate API bases for chat and embedding
    # CHAT MODEL:
    CHAT_MODEL=gpt-4o
    OPENAI_API_BASE=<your_chat_api_base>
    OPENAI_API_KEY=<replace_with_your_openai_api_key>
    # EMBEDDING MODEL:
    # TAKE siliconflow as an example, you can use other providers.
    # Note: embedding requires litellm_proxy prefix
    EMBEDDING_MODEL=litellm_proxy/BAAI/bge-large-en-v1.5
    LITELLM_PROXY_API_KEY=<replace_with_your_siliconflow_api_key>
    LITELLM_PROXY_API_BASE=https://api.siliconflow.cn/v1
    EOF

Configuration Example: DeepSeek Setup:

Since many users encounter configuration errors when setting up DeepSeek, here is a complete working example:

    cat << EOF > .env
    # CHAT MODEL: Using DeepSeek Official API
    CHAT_MODEL=deepseek/deepseek-chat
    DEEPSEEK_API_KEY=<replace_with_your_deepseek_api_key>
    # EMBEDDING MODEL: Using SiliconFlow for embedding since deepseek has no embedding model.
    # Note: embedding requires litellm_proxy prefix
    EMBEDDING_MODEL=litellm_proxy/BAAI/bge-m3
    LITELLM_PROXY_API_KEY=<replace_with_your_siliconflow_api_key>
    LITELLM_PROXY_API_BASE=https://api.siliconflow.cn/v1
    EOF
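Because configuration mistakes are common, it can help to sanity-check the `.env` content before running anything. The sketch below is a hypothetical helper, not part of RD-Agent: it assumes that `CHAT_MODEL` and `EMBEDDING_MODEL` must always be set (both appear in every example above) and flags values that still look like `<...>` placeholders. The `rdagent health_check` command remains the authoritative validation.

```python
REQUIRED_KEYS = ("CHAT_MODEL", "EMBEDDING_MODEL")  # assumption based on the examples


def parse_env(text: str) -> dict[str, str]:
    """Parse simple KEY=VALUE lines, skipping blanks and '#' comments."""
    values: dict[str, str] = {}
    for raw_line in text.splitlines():
        line = raw_line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        values[key.strip()] = value.strip()
    return values


def check_env(text: str) -> list[str]:
    """Return human-readable problems found in the .env text."""
    env = parse_env(text)
    problems = [f"missing {key}" for key in REQUIRED_KEYS if not env.get(key)]
    problems += [
        f"placeholder left in {key}"
        for key, value in env.items()
        if value.startswith("<") and value.endswith(">")
    ]
    return problems


sample = """\
# CHAT MODEL: Using DeepSeek Official API
CHAT_MODEL=deepseek/deepseek-chat
DEEPSEEK_API_KEY=<replace_with_your_deepseek_api_key>
"""
print(check_env(sample))
```

For the sample above, the helper reports the missing embedding model and the API key placeholder that was never filled in.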

Notice: If you are using reasoning models that include thought processes in their responses (such as <think> tags), you need to set the following environment variable:

REASONING_THINK_RM=True
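To illustrate why this matters: a response that begins with a `<think>...</think>` block is not valid JSON until that block is removed. The snippet below is a minimal sketch of such a clean-up step using a regular expression; the function name is hypothetical and this is not necessarily how RD-Agent handles it internally when `REASONING_THINK_RM` is set.

```python
import json
import re

# Non-greedy match so that several separate <think> blocks are each removed.
_THINK_BLOCK = re.compile(r"<think>.*?</think>", re.DOTALL)


def strip_think(text: str) -> str:
    """Remove <think>...</think> sections and surrounding whitespace."""
    return _THINK_BLOCK.sub("", text).strip()


raw = '<think>First I consider the schema.\nThen I answer.</think>\n{"ok": true}'
cleaned = strip_think(raw)
print(json.loads(cleaned))
```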

You can also use a deprecated backend if you only use `OpenAI API` or `Azure OpenAI` directly. For this deprecated setting and more configuration information, please refer to the documentation.

- If your environment configuration is complete, please execute the following command to check if your configuration is valid. This step is necessary.

rdagent health_check

The 🖥️ Live Demo is implemented by the following commands (each item represents one demo; you can select the one you prefer):

- Run the Automated Quantitative Trading & Iterative Factors Model Joint Evolution: Qlib self-loop factor & model proposal and implementation application

rdagent fin_quant

- Run the Automated Quantitative Trading & Iterative Factors Evolution: Qlib self-loop factor proposal and implementation application

rdagent fin_factor

- Run the Automated Quantitative Trading & Iterative Model Evolution: Qlib self-loop model proposal and implementation application

rdagent fin_model

- Run the Automated Quantitative Trading & Factors Extraction from Financial Reports: Run the Qlib factor extraction and implementation application based on financial reports

    # 1. Generally, you can run this scenario using the following command:
    rdagent fin_factor_report --report-folder=<Your financial reports folder path>

    # 2. Specifically, you need to prepare some financial reports first. You can follow this concrete example:
    wget https://github.com/SunsetWolf/rdagent_resource/releases/download/reports/all_reports.zip
    unzip all_reports.zip -d git_ignore_folder/reports
    rdagent fin_factor_report --report-folder=git_ignore_folder/reports

- Run the Automated Model Research & Development Copilot: model extraction and implementation application

    # 1. Generally, you can run your own papers/reports with the following command:
    rdagent general_model <Your paper URL>

    # 2. Specifically, you can do it like this. For more details and additional paper examples, use `rdagent general_model -h`:
    rdagent general_model "https://arxiv.org/pdf/2210.09789"

- Run the Automated Medical Prediction Model Evolution: Medical self-loop model proposal and implementation application

    # Generally, you can run the data science program with the following command:
    rdagent data_science --competition <your competition name>

    # Specifically, you need to create a folder for storing competition files (e.g., competition description file, competition datasets, etc.), and configure the path to the folder in your environment. In addition, you need to use chromedriver when you download the competition descriptors, which you can follow for this specific example:

    # 1. Download the dataset, extract it to the target folder.
    wget https://github.com/SunsetWolf/rdagent_resource/releases/download/ds_data/arf-12-hours-prediction-task.zip
    unzip arf-12-hours-prediction-task.zip -d ./git_ignore_folder/ds_data/

    # 2. Configure environment variables in the `.env` file
    dotenv set DS_LOCAL_DATA_PATH "$(pwd)/git_ignore_folder/ds_data"
    dotenv set DS_CODER_ON_WHOLE_PIPELINE True
    dotenv set DS_IF_USING_MLE_DATA False
    dotenv set DS_SAMPLE_DATA_BY_LLM False
    dotenv set DS_SCEN rdagent.scenarios.data_science.scen.DataScienceScen

    # 3. run the application
    rdagent data_science --competition arf-12-hours-prediction-task

NOTE: For more information about the dataset, please refer to the documentation.

- Run the Automated Kaggle Model Tuning & Feature Engineering: self-loop model proposal and feature engineering implementation application

Using tabular-playground-series-dec-2021 as an example.

- Register and log in on the Kaggle website.

- Configure the Kaggle API.

(1) Click on the avatar (usually in the top right corner of the page) -> `Settings` -> `Create New Token`. A file called `kaggle.json` will be downloaded.

(2) Move `kaggle.json` to `~/.config/kaggle/`

(3) Modify the permissions of the `kaggle.json` file. Reference command: `chmod 600 ~/.config/kaggle/kaggle.json`

- Join the competition: Click `Join the competition` -> `I Understand and Accept` at the bottom of the competition details page.

    # Generally, you can run the Kaggle competition program with the following command:
    rdagent data_science --competition <your competition name>

    # 1. Configure environment variables in the `.env` file
    mkdir -p ./git_ignore_folder/ds_data
    dotenv set DS_LOCAL_DATA_PATH "$(pwd)/git_ignore_folder/ds_data"
    dotenv set DS_CODER_ON_WHOLE_PIPELINE True
    dotenv set DS_IF_USING_MLE_DATA True
    dotenv set DS_SAMPLE_DATA_BY_LLM True
    dotenv set DS_SCEN rdagent.scenarios.data_science.scen.KaggleScen

    # 2. run the application
    rdagent data_science --competition tabular-playground-series-dec-2021
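The permission step in the Kaggle API setup (mode 600 on `kaggle.json`) can be verified programmatically. The helper below is a hypothetical sketch for POSIX systems: it simply checks that neither group nor others have any permission bits on the file, which is what `chmod 600` ensures.

```python
import os
import stat


def is_owner_only(path: str) -> bool:
    """True if group and others have no permission bits set on the file."""
    mode = stat.S_IMODE(os.stat(path).st_mode)
    return (mode & 0o077) == 0


def check_kaggle_token(path: str = "~/.config/kaggle/kaggle.json") -> str:
    """Describe the state of the token file at the location used above."""
    full_path = os.path.expanduser(path)
    if not os.path.isfile(full_path):
        return "missing"
    return "ok" if is_owner_only(full_path) else "too permissive"
```

Calling `check_kaggle_token()` returns `"missing"`, `"too permissive"`, or `"ok"`, which maps directly onto steps (2) and (3) of the setup.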


- You can run the following command for our demo program to see the run logs.

rdagent ui --port 19899 --log-dir <your log folder like "log/"> --data-science

- About the `data_science` parameter: If you want to see the logs of the data science scenario, set the `data_science` parameter to `True`; otherwise set it to `False`.

- Although port 19899 is not commonly used, check whether it is occupied before you run this demo. If it is, change it to another port that is not occupied.

You can check if a port is occupied by running the following command.

rdagent health_check --no-check-env --no-check-docker
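For readers curious what a port check involves, here is a minimal standalone sketch that tries to bind the port on the local interface. The function name and the choice of `127.0.0.1` are assumptions, and this is not the implementation used by `rdagent health_check`.

```python
import socket


def port_in_use(port: int, host: str = "127.0.0.1") -> bool:
    """Return True if binding (host, port) fails, i.e. the port is taken."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as probe:
        try:
            probe.bind((host, port))
        except OSError:
            return True
        return False


if __name__ == "__main__":
    port = 19899
    state = "occupied" if port_in_use(port) else "free"
    print(f"Port {port} is {state}")
```

If the port is reported as occupied, pass a different value to `--port` when starting the UI.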

We have applied R&D-Agent to multiple valuable data-driven industrial scenarios.

In this project, we are aiming to build an Agent to automate Data-Driven R&D that can

- 📄 Read real-world material (reports, papers, etc.) and extract key formulas and descriptions of the features and models of interest, which are the key components of data-driven R&D.

- 🛠️ Implement the extracted formulas (e.g., features, factors, and models) as runnable code.

- Because LLMs have limited ability to produce a correct implementation in a single attempt, build an evolving process for the agent to improve performance by learning from feedback and knowledge.

- 💡 Propose new ideas based on current knowledge and observations.

In the two key areas of data-driven scenarios, model implementation and data building, our system aims to serve two main roles: 🦾Copilot and 🤖Agent.

- The 🦾Copilot follows human instructions to automate repetitive tasks.

- The 🤖Agent, being more autonomous, actively proposes ideas for better results in the future.

The supported scenarios are listed below:

| Scenario/Target | Model Implementation | Data Building |
|---|---|---|
| 💹 Finance | 🤖 Iteratively Proposing Ideas & Evolving | 🤖 Iteratively Proposing Ideas & Evolving; 🦾 Auto reports reading & implementation |
| 🩺 Medical | 🤖 Iteratively Proposing Ideas & Evolving | - |
| 🏭 General | 🦾 Auto paper reading & implementation; 🤖 Auto Kaggle Model Tuning | 🤖 Auto Kaggle feature Engineering |

- RoadMap: Currently, we are working hard to add new features to the Kaggle scenario.

Different scenarios vary in entrance and configuration. Please check the detailed setup tutorial in the scenarios documents.

Here is a gallery of successful explorations (5 traces shown in 🖥️ Live Demo). You can download and view the execution trace using this command from the documentation.

Please refer to 📖readthedocs_scen for more details of the scenarios.

Automating the R&D process in data science is a highly valuable yet underexplored area in industry. We propose a framework to push the boundaries of this important research field.

The research questions within this framework can be divided into three main categories:

| Research Area | Paper/Work List |
|---|---|
| Benchmark the R&D abilities | Benchmark |
| Idea proposal: Explore new ideas or refine existing ones | Research |
| Ability to realize ideas: Implement and execute ideas | Development |

We believe that the key to delivering high-quality solutions lies in the ability to evolve R&D capabilities. Agents should learn like human experts, continuously improving their R&D skills.

More documents can be found in the 📖 readthedocs.

@misc{yang2025rdagentllmagentframeworkautonomous,
  title={R&D-Agent: An LLM-Agent Framework Towards Autonomous Data Science},
  author={Xu Yang and Xiao Yang and Shikai Fang and Yifei Zhang and Jian Wang and Bowen Xian and Qizheng Li and Jingyuan Li and Minrui Xu and Yuante Li and Haoran Pan and Yuge Zhang and Weiqing Liu and Yelong Shen and Weizhu Chen and Jiang Bian},
  year={2025},
  eprint={2505.14738},
  archivePrefix={arXiv},
  primaryClass={cs.AI},
  url={https://arxiv.org/abs/2505.14738},
}

@misc{chen2024datacentric,
  title={Towards Data-Centric Automatic R&D},
  author={Haotian Chen and Xinjie Shen and Zeqi Ye and Wenjun Feng and Haoxue Wang and Xiao Yang and Xu Yang and Weiqing Liu and Jiang Bian},
  year={2024},
  eprint={2404.11276},
  archivePrefix={arXiv},
  primaryClass={cs.AI}

}

In a data mining expert's daily research and development process, they propose a hypothesis (e.g., a model structure like RNN can capture patterns in time-series data), design experiments (e.g., finance data contains time-series and we can verify the hypothesis in this scenario), implement the experiment as code (e.g., a PyTorch model structure), and then execute the code to get feedback (e.g., metrics, loss curve, etc.). The experts learn from the feedback and improve in the next iteration.

Based on the principles above, we have established a basic method framework that continuously proposes hypotheses, verifies them, and gets feedback from real-world practice. This is the first scientific research automation framework that supports linking with real-world verification.
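The propose, implement, execute, and learn-from-feedback cycle described above can be sketched as a small loop. This is a toy illustration only: the `Trace` class, `rd_loop`, and the two `toy_*` functions are hypothetical names that do not correspond to RD-Agent's actual classes, and the "hypothesis" here is just a number scored against a hidden optimum rather than a model or factor.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional, Tuple

Record = Tuple[float, float]  # (hypothesis, feedback score)


@dataclass
class Trace:
    """History of tried hypotheses and the feedback each one received."""
    history: List[Record] = field(default_factory=list)

    def best(self) -> Optional[Record]:
        return max(self.history, key=lambda r: r[1]) if self.history else None


def rd_loop(propose: Callable[[Trace], float],
            develop_and_run: Callable[[float], float],
            n_iters: int) -> Trace:
    trace = Trace()
    for _ in range(n_iters):
        hypothesis = propose(trace)                 # "R": propose a new idea
        score = develop_and_run(hypothesis)         # "D": implement and execute it
        trace.history.append((hypothesis, score))   # feedback for the next round
    return trace


# Toy stand-ins: the idea is a single number, and the feedback is how close it
# is to an optimum at 3.0 that the proposer does not know about.
def toy_propose(trace: Trace) -> float:
    best = trace.best()
    return 0.0 if best is None else best[0] + 1.0


def toy_develop_and_run(x: float) -> float:
    return -(x - 3.0) ** 2


trace = rd_loop(toy_propose, toy_develop_and_run, n_iters=6)
print("best hypothesis and score:", trace.best())
```

The point of the sketch is the data flow: each proposal is conditioned on the accumulated trace, so feedback from executed experiments shapes the next hypothesis.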

For more detail, please refer to our 🖥️ Live Demo page.

@misc{yang2024collaborative,
  title={Collaborative Evolving Strategy for Automatic Data-Centric Development},
  author={Xu Yang and Haotian Chen and Wenjun Feng and Haoxue Wang and Zeqi Ye and Xinjie Shen and Xiao Yang and Shizhao Sun and Weiqing Liu and Jiang Bian},
  year={2024},
  eprint={2407.18690},
  archivePrefix={arXiv},
  primaryClass={cs.AI}
}

@misc{li2025rdagentquantmultiagentframeworkdatacentric,
  title={R&D-Agent-Quant: A Multi-Agent Framework for Data-Centric Factors and Model Joint Optimization},
  author={Yuante Li and Xu Yang and Xiao Yang and Minrui Xu and Xisen Wang and Weiqing Liu and Jiang Bian},
  year={2025},
  eprint={2505.15155},
  archivePrefix={arXiv},
  primaryClass={q-fin.CP},
  url={https://arxiv.org/abs/2505.15155},

}

We welcome contributions and suggestions to improve R&D-Agent. Please refer to the Contributing Guide for more details on how to contribute.

Before submitting a pull request, ensure that your code passes the automatic CI checks.

This project welcomes contributions and suggestions. Contributing to this project is straightforward and rewarding. Whether it's solving an issue, addressing a bug, enhancing documentation, or even correcting a typo, every contribution is valuable and helps improve R&D-Agent.

To get started, you can explore the issues list, or search for TODO: comments in the codebase by running the command grep -r "TODO:".

Before we released R&D-Agent as an open-source project on GitHub, it was an internal project within our group. Unfortunately, the internal commit history was not preserved when we removed some confidential code. As a result, some contributions from our group members, including Haotian Chen, Wenjun Feng, Haoxue Wang, Zeqi Ye, Xinjie Shen, and Jinhui Li, were not included in the public commits.

The RD-agent is provided “as is”, without warranty of any kind, express or implied, including but not limited to the warranties of merchantability, fitness for a particular purpose and noninfringement. The RD-agent is aimed to facilitate research and development process in the financial industry and not ready-to-use for any financial investment or advice. Users shall independently assess and test the risks of the RD-agent in a specific use scenario, ensure the responsible use of AI technology, including but not limited to developing and integrating risk mitigation measures, and comply with all applicable laws and regulations in all applicable jurisdictions. The RD-agent does not provide financial opinions or reflect the opinions of Microsoft, nor is it designed to replace the role of qualified financial professionals in formulating, assessing, and approving finance products. The inputs and outputs of the RD-agent belong to the users and users shall assume all liability under any theory of liability, whether in contract, torts, regulatory, negligence, products liability, or otherwise, associated with use of the RD-agent and any inputs and outputs thereof.


Alternative AI tools for RD-Agent

Similar Open Source Tools


modelscope-agent

ModelScope-Agent is a customizable and scalable Agent framework. A single agent has abilities such as role-playing, LLM calling, tool usage, planning, and memory. It mainly has the following characteristics:

- **Simple Agent Implementation Process**: Simply specify the role instruction, LLM name, and tool name list to implement an Agent application. The framework automatically arranges workflows for tool usage, planning, and memory.

- **Rich models and tools**: The framework is equipped with rich LLM interfaces, such as Dashscope and Modelscope model interfaces, OpenAI model interfaces, etc. Built in rich tools, such as **code interpreter**, **weather query**, **text to image**, **web browsing**, etc., make it easy to customize exclusive agents.

- **Unified interface and high scalability**: The framework has clear tools and LLM registration mechanism, making it convenient for users to expand more diverse Agent applications.

- **Low coupling**: Developers can easily use built-in tools, LLM, memory, and other components without the need to bind higher-level agents.

evalverse

Evalverse is an open-source project designed to support Large Language Model (LLM) evaluation needs. It provides a standardized and user-friendly solution for processing and managing LLM evaluations, catering to AI research engineers and scientists. Evalverse supports various evaluation methods, insightful reports, and no-code evaluation processes. Users can access unified evaluation with submodules, request evaluations without code via Slack bot, and obtain comprehensive reports with scores, rankings, and visuals. The tool allows for easy comparison of scores across different models and swift addition of new evaluation tools.

AgentBench

AgentBench is a benchmark designed to evaluate Large Language Models (LLMs) as autonomous agents in various environments. It includes 8 distinct environments such as Operating System, Database, Knowledge Graph, Digital Card Game, and Lateral Thinking Puzzles. The tool provides a comprehensive evaluation of LLMs' ability to operate as agents by offering Dev and Test sets for each environment. Users can quickly start using the tool by following the provided steps, configuring the agent, starting task servers, and assigning tasks. AgentBench aims to bridge the gap between LLMs' proficiency as agents and their practical usability.

EmbodiedScan

EmbodiedScan is a holistic multi-modal 3D perception suite designed for embodied AI. It introduces a multi-modal, ego-centric 3D perception dataset and benchmark for holistic 3D scene understanding. The dataset includes over 5k scans with 1M ego-centric RGB-D views, 1M language prompts, 160k 3D-oriented boxes spanning 760 categories, and dense semantic occupancy with 80 common categories. The suite includes a baseline framework named Embodied Perceptron, capable of processing multi-modal inputs for 3D perception tasks and language-grounded tasks.

RLAIF-V

RLAIF-V is a novel framework that aligns MLLMs in a fully open-source paradigm for super GPT-4V trustworthiness. It maximally exploits open-source feedback from high-quality feedback data and online feedback learning algorithm. Notable features include achieving super GPT-4V trustworthiness in both generative and discriminative tasks, using high-quality generalizable feedback data to reduce hallucination of different MLLMs, and exhibiting better learning efficiency and higher performance through iterative alignment.

transformers

Transformers is a state-of-the-art pretrained models library that acts as the model-definition framework for machine learning models in text, computer vision, audio, video, and multimodal tasks. It centralizes model definition for compatibility across various training frameworks, inference engines, and modeling libraries. The library simplifies the usage of new models by providing simple, customizable, and efficient model definitions. With over 1M Transformers model checkpoints available, users can easily find and utilize models for their tasks.

vertex-ai-mlops

Vertex AI is a platform for end-to-end model development. It consists of core components that make the processes of MLOps possible for design patterns of all types.

basiclingua-LLM-Based-NLP

BasicLingua is a Python library that provides functionalities for linguistic tasks such as tokenization, stemming, lemmatization, and many others. It is based on the Gemini Language Model, which has demonstrated promising results in dealing with text data. BasicLingua can be used as an API or through a web demo. It is available under the MIT license and can be used in various projects.

superduperdb

SuperDuperDB is a Python framework for integrating AI models, APIs, and vector search engines directly with your existing databases, including hosting of your own models, streaming inference and scalable model training/fine-tuning. Build, deploy and manage any AI application without the need for complex pipelines, infrastructure as well as specialized vector databases, and moving our data there, by integrating AI at your data's source:

- Generative AI, LLMs, RAG, vector search

- Standard machine learning use-cases (classification, segmentation, regression, forecasting recommendation etc.)

- Custom AI use-cases involving specialized models

- Even the most complex applications/workflows in which different models work together

SuperDuperDB is **not** a database. Think `db = superduper(db)`: SuperDuperDB transforms your databases into an intelligent platform that allows you to leverage the full AI and Python ecosystem. A single development and deployment environment for all your AI applications in one place, fully scalable and easy to manage.

MLE-agent

MLE-Agent is an intelligent companion designed for machine learning engineers and researchers. It features autonomous baseline creation, integration with Arxiv and Papers with Code, smart debugging, file system organization, comprehensive tools integration, and an interactive CLI chat interface for seamless AI engineering and research workflows.

ALMA

ALMA (Advanced Language Model-based Translator) is a many-to-many LLM-based translation model that utilizes a two-step fine-tuning process on monolingual and parallel data to achieve strong translation performance. ALMA-R builds upon ALMA models with LoRA fine-tuning and Contrastive Preference Optimization (CPO) for even better performance, surpassing GPT-4 and WMT winners. The repository provides ALMA and ALMA-R models, datasets, environment setup, evaluation scripts, training guides, and data information for users to leverage these models for translation tasks.

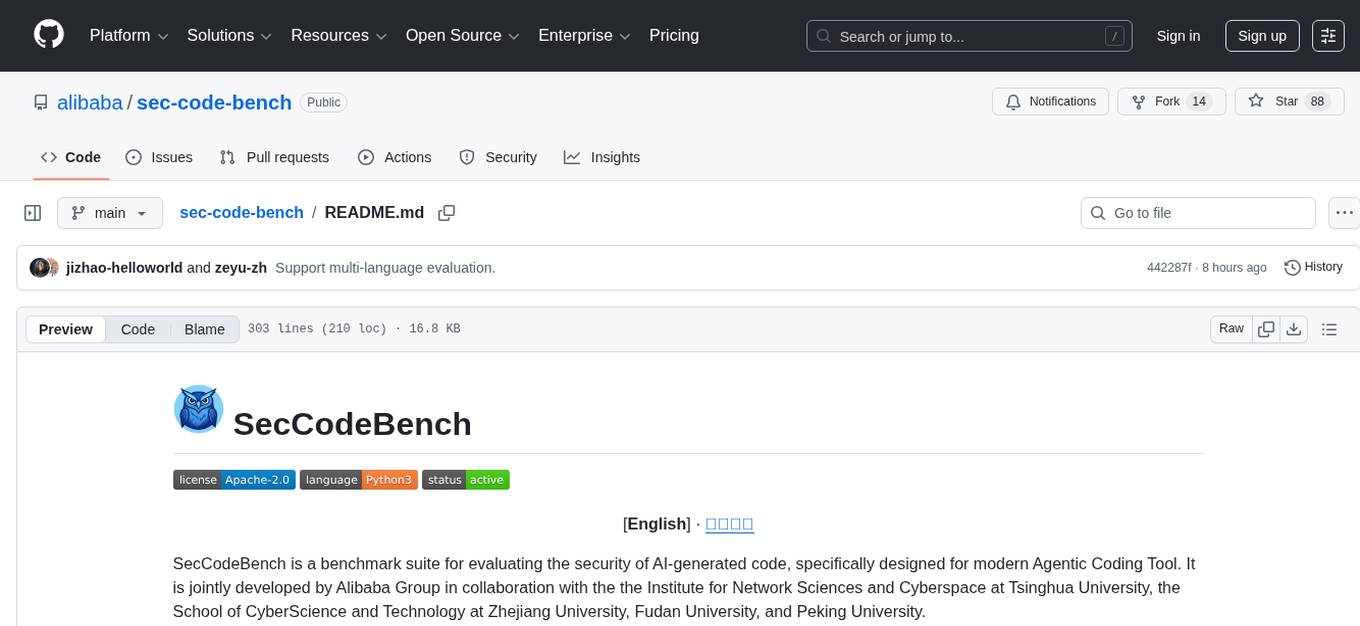

sec-code-bench

SecCodeBench is a benchmark suite for evaluating the security of AI-generated code, specifically designed for modern Agentic Coding Tools. It addresses challenges in existing security benchmarks by ensuring test case quality, employing precise evaluation methods, and covering Agentic Coding Tools. The suite includes 98 test cases across 5 programming languages, focusing on functionality-first evaluation and dynamic execution-based validation. It offers a highly extensible testing framework for end-to-end automated evaluation of agentic coding tools, generating comprehensive reports and logs for analysis and improvement.

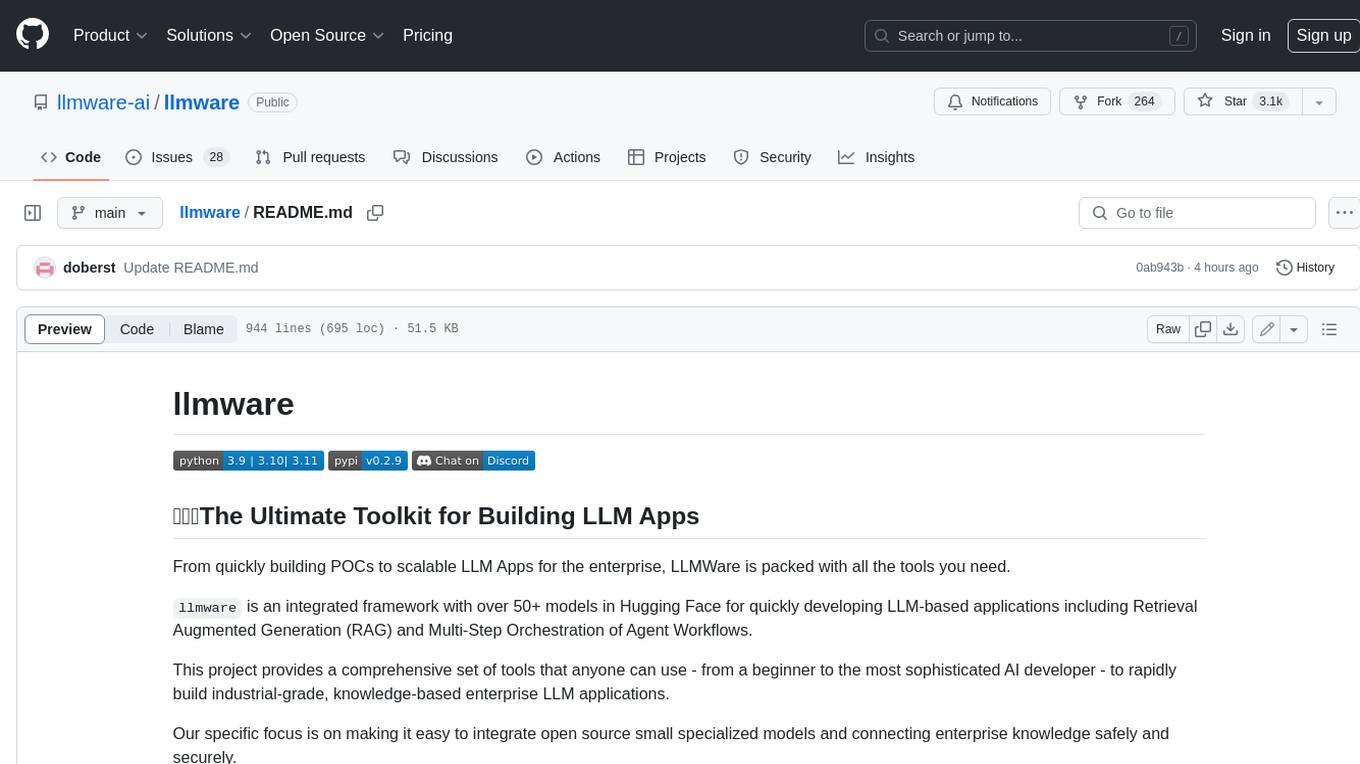

llmware

LLMWare is a framework for quickly developing LLM-based applications including Retrieval Augmented Generation (RAG) and Multi-Step Orchestration of Agent Workflows. This project provides a comprehensive set of tools that anyone can use - from a beginner to the most sophisticated AI developer - to rapidly build industrial-grade, knowledge-based enterprise LLM applications. Our specific focus is on making it easy to integrate open source small specialized models and connecting enterprise knowledge safely and securely.

LLM-Pruner

LLM-Pruner is a tool for structural pruning of large language models, allowing task-agnostic compression while retaining multi-task solving ability. It supports automatic structural pruning of various LLMs with minimal human effort. The tool is efficient, requiring only 3 minutes for pruning and 3 hours for post-training. Supported LLMs include Llama-3.1, Llama-3, Llama-2, LLaMA, BLOOM, Vicuna, and Baichuan. Updates include support for new LLMs like GQA and BLOOM, as well as fine-tuning results achieving high accuracy. The tool provides step-by-step instructions for pruning, post-training, and evaluation, along with a Gradio interface for text generation. Limitations include issues with generating repetitive or nonsensical tokens in compressed models and manual operations for certain models.

PowerInfer

PowerInfer is a high-speed Large Language Model (LLM) inference engine designed for local deployment on consumer-grade hardware, leveraging activation locality to optimize efficiency. It features a locality-centric design, hybrid CPU/GPU utilization, easy integration with popular ReLU-sparse models, and support for various platforms. PowerInfer achieves high speed with lower resource demands and is flexible for easy deployment and compatibility with existing models like Falcon-40B, Llama2 family, ProSparse Llama2 family, and Bamboo-7B.

For similar tasks

RD-Agent

RD-Agent is a tool designed to automate critical aspects of industrial R&D processes, focusing on data-driven scenarios to streamline model and data development. It aims to propose new ideas ('R') and implement them ('D') automatically, leading to solutions of significant industrial value. The tool supports scenarios like Automated Quantitative Trading, Data Mining Agent, Research Copilot, and more, with a framework to push the boundaries of research in data science. Users can create a Conda environment, install the RDAgent package from PyPI, configure a GPT model, and run various applications for tasks like quantitative trading, model evolution, medical prediction, and more. The tool is intended to enhance R&D processes and boost productivity in industrial settings.

starwhale

Starwhale is an MLOps/LLMOps platform that brings efficiency and standardization to machine learning operations. It streamlines the model development lifecycle, enabling teams to optimize workflows around key areas like model building, evaluation, release, and fine-tuning. Starwhale abstracts Model, Runtime, and Dataset as first-class citizens, providing tailored capabilities for common workflow scenarios including Models Evaluation, Live Demo, and LLM Fine-tuning. It is an open-source platform designed for clarity and ease of use, empowering developers to build customized MLOps features tailored to their needs.

transformerlab-app

Transformer Lab is an app for experimenting with Large Language Models through a simple cross-platform GUI. Its features include one-click download of popular models, finetuning across different hardware, RLHF and preference optimization, support for different operating systems, chat with models, a choice of inference engines, model evaluation, dataset building for training, embedding calculation, a full REST API, cloud deployment, model conversion across platforms, plugin support, an embedded Monaco code editor, prompt editing, and inference logs.

aisheets

Hugging Face AI Sheets is an open-source tool for building, enriching, and transforming datasets using AI models with no code. It can be deployed locally or on the Hub, providing access to thousands of open models. Users can easily generate datasets, run data generation scripts, and customize inference endpoints for text generation. The tool supports custom LLMs and offers advanced configuration options for authentication, inference, and miscellaneous settings. With AI Sheets, users can leverage the power of AI models without writing any code, making dataset management and transformation efficient and accessible.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

agentcloud

AgentCloud is an open-source platform that enables companies to build and deploy private LLM chat apps, empowering teams to securely interact with their data. It comprises three main components: Agent Backend, Webapp, and Vector Proxy. To run this project locally, clone the repository, install Docker, and start the services. The project is licensed under the GNU Affero General Public License, version 3 only. Contributions and feedback are welcome from the community.

oss-fuzz-gen

This framework generates fuzz targets for real-world `C`/`C++` projects with various Large Language Models (LLMs) and benchmarks them via the `OSS-Fuzz` platform. It has used LLMs to generate valid fuzz targets (those that produce a non-zero coverage increase) for 160 C/C++ projects. The maximum line coverage increase over the existing human-written targets is 29%.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models, from images to sound.

kaito

Kaito is an operator that automates AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, provides preset configurations so that deployment parameters need not be tuned to fit GPU hardware, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Kaito greatly simplifies the workflow of onboarding large AI inference models in Kubernetes.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks so that operators can focus on more complicated and time-consuming tasks, and it can also identify security harms such as misuse (e.g., malware generation, jailbreaking) and privacy harms (e.g., identity theft). The goal is to give researchers a baseline of how well their model and entire inference pipeline perform against different harm categories, and to let them compare that baseline to future iterations of their model. This gives them empirical data on how well their model is doing today and lets them detect any degradation of performance based on future improvements.

Azure-Analytics-and-AI-Engagement

The Azure-Analytics-and-AI-Engagement repository provides packaged Industry Scenario DREAM Demos with ARM templates (containing a demo web application, Power BI reports, Synapse resources, AML Notebooks, etc.) that can be deployed in a customer’s subscription using the CAPE tool within a few hours. Partners can also deploy DREAM Demos in their own subscriptions using DPoC.