PowerInfer

High-speed Large Language Model Serving on PCs with Consumer-grade GPUs

Stars: 7584

PowerInfer is a high-speed Large Language Model (LLM) inference engine designed for local deployment on consumer-grade hardware, leveraging activation locality to optimize efficiency. It features a locality-centric design, hybrid CPU/GPU utilization, easy integration with popular ReLU-sparse models, and support for various platforms. PowerInfer achieves high speed with lower resource demands and is flexible for easy deployment and compatibility with existing models like Falcon-40B, Llama2 family, ProSparse Llama2 family, and Bamboo-7B.

README:

PowerInfer is a CPU/GPU LLM inference engine leveraging activation locality for your device.

- [2024/6/11] We are thrilled to introduce PowerInfer-2, our highly optimized inference framework designed specifically for smartphones. With TurboSparse-Mixtral-47B, it achieves an impressive speed of 11.68 tokens per second, which is up to 22 times faster than other state-of-the-art frameworks.

- [2024/6/11] We are thrilled to present Turbo Sparse, our TurboSparse models for fast inference. With just $0.1M, we sparsified the original Mistral and Mixtral model to nearly 90% sparsity while maintaining superior performance! For a Mixtral-level model, our TurboSparse-Mixtral activates only 4B parameters!

- [2024/5/20] Competition Recruitment: CCF-TCArch Customized Computing Challenge 2024. The CCF TCARCH CCC is a national competition organized by the Technical Committee on Computer Architecture (TCARCH) of the China Computer Federation (CCF). This year's competition aims to optimize the PowerInfer inference engine using the open-source ROCm/HIP. More information about the competition can be found here.

- [2024/5/17] We now provide support for AMD devices with ROCm. (WIP for models exceeding 40B).

- [2024/3/28] We are thrilled to present Bamboo LLM, which achieves both top-level performance and unparalleled speed with PowerInfer! Experience it with Bamboo-7B Base / DPO.

- [2024/3/14] We supported ProSparse Llama 2 (7B/13B), ReLU models with ~90% sparsity, matching original Llama 2's performance (Thanks THUNLP & ModelBest)!

- [2024/1/11] We supported Windows with GPU inference!

- [2023/12/24] We released an online gradio demo for Falcon(ReLU)-40B-FP16!

- [2023/12/19] We officially released PowerInfer!

https://github.com/SJTU-IPADS/PowerInfer/assets/34213478/fe441a42-5fce-448b-a3e5-ea4abb43ba23

PowerInfer vs. llama.cpp on a single RTX 4090 (24G) running Falcon(ReLU)-40B-FP16, with an 11x speedup!

Both PowerInfer and llama.cpp were running on the same hardware and fully utilized VRAM on RTX 4090.

[!NOTE] Live Demo Online⚡️

Try out our Gradio server hosting Falcon(ReLU)-40B-FP16 on an RTX 4090!

Experimental and without warranties 🚧

We introduce PowerInfer, a high-speed Large Language Model (LLM) inference engine for a personal computer (PC) equipped with a single consumer-grade GPU. The key insight underlying the design of PowerInfer is the high locality inherent in LLM inference, characterized by a power-law distribution in neuron activation.

This distribution indicates that a small subset of neurons, termed hot neurons, are consistently activated across inputs, while the majority, cold neurons, vary based on specific inputs. PowerInfer exploits such an insight to design a GPU-CPU hybrid inference engine: hot-activated neurons are preloaded onto the GPU for fast access, while cold-activated neurons are computed on the CPU, thus significantly reducing GPU memory demands and CPU-GPU data transfers. PowerInfer further integrates adaptive predictors and neuron-aware sparse operators, optimizing the efficiency of neuron activation and computational sparsity.
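To make the hot/cold split concrete, here is a minimal sketch in Python. It is not PowerInfer's profiler or predictor: the activation counts are synthetic (a Zipf-like `1/rank` curve), and the `hot_neuron_split` helper and the 80% coverage threshold are assumptions chosen for illustration only.

```python
def hot_neuron_split(activation_counts, coverage=0.8):
    """Split neuron indices into (hot, cold).

    Neurons are ranked by how often they fired. The smallest top-ranked
    group whose counts add up to `coverage` of all activations is "hot";
    everything else is "cold". Hypothetical helper for illustration.
    """
    total = sum(activation_counts)
    ranked = sorted(range(len(activation_counts)),
                    key=lambda i: activation_counts[i], reverse=True)
    hot, running = [], 0.0
    for idx in ranked:
        if running >= coverage * total:
            break
        hot.append(idx)
        running += activation_counts[idx]
    hot_set = set(hot)
    cold = [i for i in range(len(activation_counts)) if i not in hot_set]
    return hot, cold


# Synthetic power-law counts (assumption): the neuron at rank r fires ~ 1/r times.
NUM_NEURONS = 10_000
counts = [1.0 / rank for rank in range(1, NUM_NEURONS + 1)]

hot, cold = hot_neuron_split(counts, coverage=0.8)
print(f"{len(hot)} of {NUM_NEURONS} neurons cover 80% of all activations")
```

With a distribution this skewed, a small minority of neurons accounts for most activations, which is why keeping only that group on the GPU can pay off.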

Evaluation shows that PowerInfer attains an average token generation rate of 13.20 tokens/s, with a peak of 29.08 tokens/s, across various LLMs (including OPT-175B) on a single NVIDIA RTX 4090 GPU, only 18% lower than that achieved by a top-tier server-grade A100 GPU. This significantly outperforms llama.cpp by up to 11.69x while retaining model accuracy.

PowerInfer is a high-speed and easy-to-use inference engine for deploying LLMs locally.

PowerInfer is fast with:

- Locality-centric design: Utilizes sparse activation and 'hot'/'cold' neuron concept for efficient LLM inference, ensuring high speed with lower resource demands.

- Hybrid CPU/GPU Utilization: Seamlessly integrates memory/computation capabilities of CPU and GPU for a balanced workload and faster processing.

PowerInfer is flexible and easy to use with:

- Easy Integration: Compatible with popular ReLU-sparse models.

- Local Deployment Ease: Designed and deeply optimized for local deployment on consumer-grade hardware, enabling low-latency LLM inference and serving on a single GPU.

- Backward Compatibility: While distinct from llama.cpp, you can make use of most of examples/ the same way as llama.cpp, such as server and batched generation. PowerInfer also supports inference with llama.cpp's model weights for compatibility purposes, but there will be no performance gain.

You can use these models with PowerInfer today:

- Falcon-40B

- Llama2 family

- ProSparse Llama2 family

- Bamboo-7B

We have tested PowerInfer on the following platforms:

- x86-64 CPUs with AVX2 instructions, with or without NVIDIA GPUs, under Linux.

- x86-64 CPUs with AVX2 instructions, with or without NVIDIA GPUs, under Windows.

- Apple M Chips (CPU only) on macOS. (As we do not optimize for Mac, the performance improvement is not significant now.)

And new features coming soon:

- Metal backend for sparse inference on macOS

Please kindly refer to our Project Kanban for our current focus of development.

PowerInfer requires the following dependencies:

- CMake (3.17+)

- Python (3.8+) and pip (19.3+), for converting model weights and automatic FFN offloading

git clone https://github.com/SJTU-IPADS/PowerInfer

cd PowerInfer

pip install -r requirements.txt # install Python helpers' dependencies

To build PowerInfer, run the following commands from the root directory of the project.

Using CMake(3.17+):

- If you have an NVIDIA GPU:

cmake -S . -B build -DLLAMA_CUBLAS=ON

cmake --build build --config Release

- If you have an AMD GPU:

# Replace '1100' with your card's architecture name, which you can get from rocminfo

CC=/opt/rocm/llvm/bin/clang CXX=/opt/rocm/llvm/bin/clang++ cmake -S . -B build -DLLAMA_HIPBLAS=ON -DAMDGPU_TARGETS=gfx1100

cmake --build build --config Release

- If you have just CPU:

cmake -S . -B build

cmake --build build --config Release

PowerInfer models are stored in a special format called PowerInfer GGUF, based on the GGUF format, consisting of both LLM weights and predictor weights.

You can obtain PowerInfer GGUF weights at *.powerinfer.gguf as well as profiled model activation statistics for 'hot'-neuron offloading from each Hugging Face repo below.

| Base Model | PowerInfer GGUF |
|---|---|
| LLaMA(ReLU)-2-7B | PowerInfer/ReluLLaMA-7B-PowerInfer-GGUF |
| LLaMA(ReLU)-2-13B | PowerInfer/ReluLLaMA-13B-PowerInfer-GGUF |
| Falcon(ReLU)-40B | PowerInfer/ReluFalcon-40B-PowerInfer-GGUF |
| LLaMA(ReLU)-2-70B | PowerInfer/ReluLLaMA-70B-PowerInfer-GGUF |
| ProSparse-LLaMA-2-7B | PowerInfer/ProSparse-LLaMA-2-7B-GGUF |
| ProSparse-LLaMA-2-13B | PowerInfer/ProSparse-LLaMA-2-13B-GGUF |
| Bamboo-base-7B 🌟 | PowerInfer/Bamboo-base-v0.1-gguf |
| Bamboo-DPO-7B 🌟 | PowerInfer/Bamboo-DPO-v0.1-gguf |

We recommend using huggingface-cli to download the whole model repo. For example, the following command will download PowerInfer/ReluLLaMA-7B-PowerInfer-GGUF into the ./ReluLLaMA-7B directory.

huggingface-cli download --resume-download --local-dir ReluLLaMA-7B --local-dir-use-symlinks False PowerInfer/ReluLLaMA-7B-PowerInfer-GGUF

As such, PowerInfer can automatically make use of the following directory structure for feature-complete model offloading:

.

├── *.powerinfer.gguf (Unquantized PowerInfer model)

├── *.q4.powerinfer.gguf (INT4 quantized PowerInfer model, if available)

├── activation (Profiled activation statistics for fine-grained FFN offloading)

│ ├── activation_x.pt (Profiled activation statistics for layer x)

│ └── ...

├── *.[q4].powerinfer.gguf.generated.gpuidx (Generated GPU index at runtime for corresponding model)
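If you script around a downloaded repo, a small check of this layout can catch missing files early. The sketch below is not part of PowerInfer: `find_powerinfer_model` is a hypothetical helper that only relies on the file name patterns shown in the tree above.

```python
from pathlib import Path


def find_powerinfer_model(repo_dir, prefer_quantized=True):
    """Pick a *.powerinfer.gguf file from a downloaded model repo.

    Returns (model_path, has_activation_stats). Hypothetical helper that
    only inspects file names; it does not open or validate the files.
    """
    repo = Path(repo_dir)
    # The pattern must match the whole name, so *.generated.gpuidx files are skipped.
    candidates = sorted(repo.glob("*.powerinfer.gguf"))
    if not candidates:
        raise FileNotFoundError(f"no *.powerinfer.gguf file in {repo}")

    quantized = [p for p in candidates if p.name.endswith(".q4.powerinfer.gguf")]
    unquantized = [p for p in candidates if p not in quantized]

    if prefer_quantized and quantized:
        model = quantized[0]
    elif unquantized:
        model = unquantized[0]
    else:
        model = candidates[0]

    # Profiled activation statistics live in activation/activation_x.pt.
    activation_dir = repo / "activation"
    has_stats = activation_dir.is_dir() and any(activation_dir.glob("activation_*.pt"))
    return model, has_stats
```

For example, `find_powerinfer_model("./ReluLLaMA-7B")` would return the INT4 file if one is present, plus a flag telling you whether the `activation` directory holds any per-layer statistics.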

Hugging Face limits single model weight to 50GiB. For unquantized models >= 40B, you can convert PowerInfer GGUF from the original model weights and predictor weights obtained from Hugging Face.

| Base Model | Original Model | Predictor |
|---|---|---|
| LLaMA(ReLU)-2-7B | SparseLLM/ReluLLaMA-7B | PowerInfer/ReluLLaMA-7B-Predictor |
| LLaMA(ReLU)-2-13B | SparseLLM/ReluLLaMA-13B | PowerInfer/ReluLLaMA-13B-Predictor |
| Falcon(ReLU)-40B | SparseLLM/ReluFalcon-40B | PowerInfer/ReluFalcon-40B-Predictor |
| LLaMA(ReLU)-2-70B | SparseLLM/ReluLLaMA-70B | PowerInfer/ReluLLaMA-70B-Predictor |
| ProSparse-LLaMA-2-7B | SparseLLM/ProSparse-LLaMA-2-7B | PowerInfer/ProSparse-LLaMA-2-7B-Predictor |
| ProSparse-LLaMA-2-13B | SparseLLM/ProSparse-LLaMA-2-13B | PowerInfer/ProSparse-LLaMA-2-13B-Predictor |
| Bamboo-base-7B 🌟 | PowerInfer/Bamboo-base-v0.1 | PowerInfer/Bamboo-base-v0.1-predictor |
| Bamboo-DPO-7B 🌟 | PowerInfer/Bamboo-DPO-v0.1 | PowerInfer/Bamboo-DPO-v0.1-predictor |

You can use the following command to convert the original model weights and predictor weights to PowerInfer GGUF:

# make sure that you have done `pip install -r requirements.txt`

python convert.py --outfile /PATH/TO/POWERINFER/GGUF/REPO/MODELNAME.powerinfer.gguf /PATH/TO/ORIGINAL/MODEL /PATH/TO/PREDICTOR

# python convert.py --outfile ./ReluLLaMA-70B-PowerInfer-GGUF/llama-70b-relu.powerinfer.gguf ./SparseLLM/ReluLLaMA-70B ./PowerInfer/ReluLLaMA-70B-Predictor

For the same reason, we suggest keeping the same directory structure as PowerInfer GGUF repos after conversion.
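After a conversion, a quick header check can confirm that the output file was written as GGUF at all. Standard GGUF files start with the 4-byte magic `GGUF` followed by a little-endian 32-bit version number. The sketch below assumes PowerInfer GGUF keeps that header, which this README does not state explicitly (it only says the format is based on GGUF), and `read_gguf_version` is a hypothetical helper name.

```python
import struct

GGUF_MAGIC = b"GGUF"  # first four bytes of a standard GGUF file


def read_gguf_version(path):
    """Return the GGUF version stored in the file header.

    Raises ValueError if the file is too short or the magic does not match.
    Assumption: PowerInfer GGUF files keep the standard GGUF header.
    """
    with open(path, "rb") as f:
        header = f.read(8)
    if len(header) < 8 or header[:4] != GGUF_MAGIC:
        raise ValueError(f"{path} does not look like a GGUF file")
    (version,) = struct.unpack("<I", header[4:8])
    return version
```

This only inspects the first eight bytes, so it says nothing about whether the LLM weights or predictor weights inside the file are complete.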

Convert Original models into dense GGUF models(compatible with llama.cpp)

python convert-dense.py --outfile /PATH/TO/DENSE/GGUF/REPO/MODELNAME.gguf /PATH/TO/ORIGINAL/MODEL

# python convert-dense.py --outfile ./Bamboo-DPO-v0.1-gguf/bamboo-7b-dpo-v0.1.gguf --outtype f16 ./Bamboo-DPO-v0.1

Please note that the dense GGUF models generated by convert-dense.py can be used with PowerInfer in dense inference mode, but might not work properly with llama.cpp, as we have altered the activation functions (for ReluLLaMA and ProSparse models) or the model architecture (for Bamboo models).

For CPU-only and CPU-GPU hybrid inference with all available VRAM, you can use the following instructions to run PowerInfer:

./build/bin/main -m /PATH/TO/MODEL -n $output_token_count -t $thread_num -p $prompt

# e.g.: ./build/bin/main -m ./ReluFalcon-40B-PowerInfer-GGUF/falcon-40b-relu.q4.powerinfer.gguf -n 128 -t 8 -p "Once upon a time"

# For Windows: .\build\bin\Release\main.exe -m .\ReluFalcon-40B-PowerInfer-GGUF\falcon-40b-relu.q4.powerinfer.gguf -n 128 -t 8 -p "Once upon a time"

If you want to limit the VRAM usage of GPU:

./build/bin/main -m /PATH/TO/MODEL -n $output_token_count -t $thread_num -p $prompt --vram-budget $vram_gb

# e.g.: ./build/bin/main -m ./ReluLLaMA-7B-PowerInfer-GGUF/llama-7b-relu.powerinfer.gguf -n 128 -t 8 -p "Once upon a time" --vram-budget 8

# For Windows: .\build\bin\Release\main.exe -m .\ReluLLaMA-7B-PowerInfer-GGUF\llama-7b-relu.powerinfer.gguf -n 128 -t 8 -p "Once upon a time" --vram-budget 8

Under CPU-GPU hybrid inference, PowerInfer will automatically offload all dense activation blocks to GPU, then split FFN and offload to GPU if possible.
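One way to picture splitting an FFN under a VRAM budget is a greedy placement: reserve space for the dense blocks first, then fill what is left with the most frequently activated neurons. The sketch below is only an illustration of that idea, not PowerInfer's actual offloading policy; `split_ffn_under_budget` and all of its parameters are hypothetical.

```python
def split_ffn_under_budget(activation_counts, bytes_per_neuron,
                           dense_bytes, vram_budget_bytes):
    """Greedy illustration of a budgeted GPU/CPU split for FFN neurons.

    Dense blocks are charged against the budget first. The remaining space
    is filled with the most frequently activated neurons; the rest stay on
    the CPU. Returns (gpu_neurons, cpu_neurons) as sorted index lists.
    """
    remaining = vram_budget_bytes - dense_bytes
    capacity = max(0, remaining // bytes_per_neuron)

    # Rank neuron indices from most to least frequently activated.
    ranked = sorted(range(len(activation_counts)),
                    key=lambda i: activation_counts[i], reverse=True)
    gpu_neurons = sorted(ranked[:capacity])
    cpu_neurons = sorted(ranked[capacity:])
    return gpu_neurons, cpu_neurons


# Toy numbers (assumptions): four neurons of 10 bytes each, 5 bytes of dense blocks.
gpu, cpu = split_ffn_under_budget([5, 1, 9, 3], bytes_per_neuron=10,
                                  dense_bytes=5, vram_budget_bytes=27)
print("GPU:", gpu, "CPU:", cpu)
```

Lowering the budget in this toy model simply moves the least active GPU neurons back to the CPU, which matches the intuition behind passing a smaller `--vram-budget`.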

Dense inference mode (limited support)

If you want to run PowerInfer with the dense variants of the PowerInfer model family, you can use it the same way as llama.cpp:

./build/bin/main -m /PATH/TO/DENSE/MODEL -n $output_token_count -t $thread_num -p $prompt -ngl $num_gpu_layers

# e.g.: ./build/bin/main -m ./Bamboo-base-v0.1-gguf/bamboo-7b-v0.1.gguf -n 128 -t 8 -p "Once upon a time" -ngl 12

The same applies to other examples/ such as server and batched_generation. Please note that the dense inference mode is not a "compatible mode" for all models. We have altered activation functions (for ReluLLaMA and ProSparse models) in this mode to match our model family.

PowerInfer supports serving and batched generation with the same instructions as llama.cpp. Generally, you can use the same commands as llama.cpp, except for the -ngl argument, which has been replaced by --vram-budget for PowerInfer. Please refer to the detailed instructions in each examples/ directory.
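As a rough illustration of that argument swap, the sketch below rewrites a llama.cpp-style argument list for a sparse PowerInfer run. `to_powerinfer_args` is a hypothetical helper, not something shipped with PowerInfer. It drops `-ngl` (or llama.cpp's long form `--n-gpu-layers`) together with its value and appends `--vram-budget` when a budget is given. A layer count cannot be converted into gigabytes, so the budget has to be supplied separately.

```python
def to_powerinfer_args(llama_cpp_args, vram_budget_gb=None):
    """Rewrite llama.cpp-style CLI arguments for a sparse PowerInfer run.

    Removes -ngl / --n-gpu-layers and the value that follows it, then
    appends --vram-budget if a budget (in GB) is provided.
    """
    result = []
    skip_next = False
    for arg in llama_cpp_args:
        if skip_next:          # this is the value that belonged to -ngl
            skip_next = False
            continue
        if arg in ("-ngl", "--n-gpu-layers"):
            skip_next = True
            continue
        result.append(arg)
    if vram_budget_gb is not None:
        result += ["--vram-budget", str(vram_budget_gb)]
    return result


args = to_powerinfer_args(
    ["-m", "model.powerinfer.gguf", "-ngl", "32", "-n", "128"],
    vram_budget_gb=8,
)
print(" ".join(args))
```

Dense inference mode still takes `-ngl`, so this rewrite only makes sense for PowerInfer GGUF models.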

PowerInfer has optimized quantization support for INT4(Q4_0) models. You can use the following instructions to quantize PowerInfer GGUF model:

./build/bin/quantize /PATH/TO/MODEL /PATH/TO/OUTPUT/QUANTIZED/MODEL Q4_0

# e.g.: ./build/bin/quantize ./ReluFalcon-40B-PowerInfer-GGUF/falcon-40b-relu.powerinfer.gguf ./ReluFalcon-40B-PowerInfer-GGUF/falcon-40b-relu.q4.powerinfer.gguf Q4_0

# For Windows: .\build\bin\Release\quantize.exe .\ReluFalcon-40B-PowerInfer-GGUF\falcon-40b-relu.powerinfer.gguf .\ReluFalcon-40B-PowerInfer-GGUF\falcon-40b-relu.q4.powerinfer.gguf Q4_0

Then you can use the quantized model for inference with PowerInfer with the same instructions as above.
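For intuition about what 4-bit block quantization does to the weights, here is a simplified sketch. It is not the exact Q4_0 algorithm or storage layout used by the quantize tool: it uses plain symmetric rounding with one scale per block of 32 values (the block size Q4_0 also uses), and the helper names are made up for this example.

```python
import math

BLOCK_SIZE = 32  # one shared scale per 32 weights


def quantize_block(values):
    """Map floats to 4-bit signed integers in [-8, 7] with one shared scale."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 7 if max_abs > 0 else 1.0
    quants = [max(-8, min(7, round(v / scale))) for v in values]
    return scale, quants


def dequantize_block(scale, quants):
    """Recover approximate floats from the 4-bit integers."""
    return [q * scale for q in quants]


# Toy block of weights (assumption): a sine wave sampled at 32 points.
weights = [math.sin(i) for i in range(BLOCK_SIZE)]
scale, quants = quantize_block(weights)
restored = dequantize_block(scale, quants)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(f"scale={scale:.4f}, max round-trip error={max_error:.4f}")
```

In this simplified scheme each weight is off by at most half a quantization step (`scale / 2`), which is the trade made for storing roughly 4 bits per weight instead of 16.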

We evaluated PowerInfer vs. llama.cpp on a single RTX 4090(24G) with a series of FP16 ReLU models under inputs of length 64, and the results are shown below. PowerInfer achieves up to 11x speedup on Falcon 40B and up to 3x speedup on Llama 2 70B.

The X axis indicates the output length, and the Y axis represents the speedup compared with llama.cpp. The number above each bar indicates the end-to-end generation speed (total tokens generated divided by the total prompting and generation time, in tokens/s).

We also evaluated PowerInfer on a single RTX 2080Ti(11G) with INT4 ReLU models under inputs of length 8, and the results are illustrated in the same way as above. PowerInfer achieves up to 8x speedup on Falcon 40B and up to 3x speedup on Llama 2 70B.

Please refer to our paper for more evaluation details.
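If you want to reproduce this kind of comparison on your own hardware, the end-to-end metric and the speedup can be computed as in the sketch below. The function names and the timings are made up for illustration; they are not measurements from our evaluation.

```python
def tokens_per_second(tokens_generated, prompt_seconds, generation_seconds):
    """End-to-end speed: generated tokens over prompting plus generation time."""
    total_seconds = prompt_seconds + generation_seconds
    if total_seconds <= 0:
        raise ValueError("total time must be positive")
    return tokens_generated / total_seconds


def speedup(candidate_tps, baseline_tps):
    """How many times faster the candidate is than the baseline."""
    return candidate_tps / baseline_tps


# Hypothetical timings for two engines generating 128 tokens each.
candidate = tokens_per_second(128, prompt_seconds=2.0, generation_seconds=8.0)
baseline = tokens_per_second(128, prompt_seconds=10.0, generation_seconds=54.0)
print(f"{candidate:.2f} vs {baseline:.2f} tokens/s, "
      f"speedup {speedup(candidate, baseline):.1f}x")
```

Because prompt processing time is included, short outputs are dominated by prompting cost, so compare runs with the same input and output lengths.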

- What if I encountered CUDA_ERROR_OUT_OF_MEMORY?

  - You can try to run with the --reset-gpu-index argument to rebuild the GPU index for this model to avoid any stale cache.

  - Due to our current implementation, model offloading might not be as accurate as expected. You can try --vram-budget with a slightly lower value, or --disable-gpu-index to disable FFN offloading.

- Does PowerInfer support Mistral, original Llama, Qwen, ...?

  - Currently we only support models with the ReLU/ReGLU/Squared ReLU activation function, so these models are not supported yet. It's worth mentioning that a paper has demonstrated that using the ReLU/ReGLU activation function has a negligible impact on convergence and performance.

- Why is there a noticeable downgrade in the performance metrics of our current ReLU model, particularly the 70B model?

  - In contrast to the typical requirement of around 2T tokens for LLM training, our model's fine-tuning was conducted with only 5B tokens. This insufficient retraining has resulted in the model's inability to regain its original performance. We are actively working on updating to a more capable model, so please stay tuned.

- What if...

  - Issues are welcome! Please feel free to open an issue and attach your running environment and running parameters. We will try our best to help you.

We will release the code and data in the following order, please stay tuned!

- [x] Release core code of PowerInfer, supporting Llama-2, Falcon-40B.

- [x] Support Mistral-7B (Bamboo-7B)

- [x] Support Windows

- [ ] Support text-generation-webui

- [x] Release perplexity evaluation code

- [ ] Support Metal for Mac

- [ ] Release code for OPT models

- [ ] Release predictor training code

- [x] Support online split for FFN network

- [ ] Support Multi-GPU

More technical details can be found in our paper.

If you find PowerInfer useful or relevant to your project and research, please kindly cite our paper:

@misc{song2023powerinfer,

title={PowerInfer: Fast Large Language Model Serving with a Consumer-grade GPU},

author={Yixin Song and Zeyu Mi and Haotong Xie and Haibo Chen},

year={2023},

eprint={2312.12456},

archivePrefix={arXiv},

primaryClass={cs.LG}

}

We are thankful for the easily modifiable operator library ggml and execution runtime provided by llama.cpp. We also extend our gratitude to THUNLP for their support of ReLU-based sparse models. We also appreciate the research of Deja Vu, which inspires PowerInfer.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for PowerInfer

Similar Open Source Tools

PowerInfer

PowerInfer is a high-speed Large Language Model (LLM) inference engine designed for local deployment on consumer-grade hardware, leveraging activation locality to optimize efficiency. It features a locality-centric design, hybrid CPU/GPU utilization, easy integration with popular ReLU-sparse models, and support for various platforms. PowerInfer achieves high speed with lower resource demands and is flexible for easy deployment and compatibility with existing models like Falcon-40B, Llama2 family, ProSparse Llama2 family, and Bamboo-7B.

model2vec

Model2Vec is a technique to turn any sentence transformer into a really small static model, reducing model size by 15x and making the models up to 500x faster, with a small drop in performance. It outperforms other static embedding models like GLoVe and BPEmb, is lightweight with only `numpy` as a major dependency, offers fast inference, dataset-free distillation, and is integrated into Sentence Transformers, txtai, and Chonkie. Model2Vec creates powerful models by passing a vocabulary through a sentence transformer model, reducing dimensionality using PCA, and weighting embeddings using zipf weighting. Users can distill their own models or use pre-trained models from the HuggingFace hub. Evaluation can be done using the provided evaluation package. Model2Vec is licensed under MIT.

CogVideo

CogVideo is an open-source repository that provides pretrained text-to-video models for generating videos based on input text. It includes models like CogVideoX-2B and CogVideo, offering powerful video generation capabilities. The repository offers tools for inference, fine-tuning, and model conversion, along with demos showcasing the model's capabilities through CLI, web UI, and online experiences. CogVideo aims to facilitate the creation of high-quality videos from textual descriptions, catering to a wide range of applications.

opencompass

OpenCompass is a one-stop platform for large model evaluation, aiming to provide a fair, open, and reproducible benchmark for large model evaluation. Its main features include: * Comprehensive support for models and datasets: Pre-support for 20+ HuggingFace and API models, a model evaluation scheme of 70+ datasets with about 400,000 questions, comprehensively evaluating the capabilities of the models in five dimensions. * Efficient distributed evaluation: One line command to implement task division and distributed evaluation, completing the full evaluation of billion-scale models in just a few hours. * Diversified evaluation paradigms: Support for zero-shot, few-shot, and chain-of-thought evaluations, combined with standard or dialogue-type prompt templates, to easily stimulate the maximum performance of various models. * Modular design with high extensibility: Want to add new models or datasets, customize an advanced task division strategy, or even support a new cluster management system? Everything about OpenCompass can be easily expanded! * Experiment management and reporting mechanism: Use config files to fully record each experiment, and support real-time reporting of results.

ludwig

Ludwig is a declarative deep learning framework designed for scale and efficiency. It is a low-code framework that allows users to build custom AI models like LLMs and other deep neural networks with ease. Ludwig offers features such as optimized scale and efficiency, expert level control, modularity, and extensibility. It is engineered for production with prebuilt Docker containers, support for running with Ray on Kubernetes, and the ability to export models to Torchscript and Triton. Ludwig is hosted by the Linux Foundation AI & Data.

llmware

LLMWare is a framework for quickly developing LLM-based applications including Retrieval Augmented Generation (RAG) and Multi-Step Orchestration of Agent Workflows. This project provides a comprehensive set of tools that anyone can use - from a beginner to the most sophisticated AI developer - to rapidly build industrial-grade, knowledge-based enterprise LLM applications. Our specific focus is on making it easy to integrate open source small specialized models and connecting enterprise knowledge safely and securely.

aimo-progress-prize

This repository contains the training and inference code needed to replicate the winning solution to the AI Mathematical Olympiad - Progress Prize 1. It consists of fine-tuning DeepSeekMath-Base 7B, high-quality training datasets, a self-consistency decoding algorithm, and carefully chosen validation sets. The training methodology involves Chain of Thought (CoT) and Tool Integrated Reasoning (TIR) training stages. Two datasets, NuminaMath-CoT and NuminaMath-TIR, were used to fine-tune the models. The models were trained using open-source libraries like TRL, PyTorch, vLLM, and DeepSpeed. Post-training quantization to 8-bit precision was done to improve performance on Kaggle's T4 GPUs. The project structure includes scripts for training, quantization, and inference, along with necessary installation instructions and hardware/software specifications.

hal-9100

This repository is now archived and the code is privately maintained. If you are interested in this infrastructure, please contact the maintainer directly.

basiclingua-LLM-Based-NLP

BasicLingua is a Python library that provides functionalities for linguistic tasks such as tokenization, stemming, lemmatization, and many others. It is based on the Gemini Language Model, which has demonstrated promising results in dealing with text data. BasicLingua can be used as an API or through a web demo. It is available under the MIT license and can be used in various projects.

swiftide

Swiftide is a fast, streaming indexing and query library tailored for Retrieval Augmented Generation (RAG) in AI applications. It is built in Rust, utilizing parallel, asynchronous streams for blazingly fast performance. With Swiftide, users can easily build AI applications from idea to production in just a few lines of code. The tool addresses frustrations around performance, stability, and ease of use encountered while working with Python-based tooling. It offers features like fast streaming indexing pipeline, experimental query pipeline, integrations with various platforms, loaders, transformers, chunkers, embedders, and more. Swiftide aims to provide a platform for data indexing and querying to advance the development of automated Large Language Model (LLM) applications.

magpie

This is the official repository for 'Alignment Data Synthesis from Scratch by Prompting Aligned LLMs with Nothing'. Magpie is a tool designed to synthesize high-quality instruction data at scale by extracting it directly from an aligned Large Language Models (LLMs). It aims to democratize AI by generating large-scale alignment data and enhancing the transparency of model alignment processes. Magpie has been tested on various model families and can be used to fine-tune models for improved performance on alignment benchmarks such as AlpacaEval, ArenaHard, and WildBench.

RLHF-Reward-Modeling

This repository contains code for training reward models for Deep Reinforcement Learning-based Reward-modulated Hierarchical Fine-tuning (DRL-based RLHF), Iterative Selection Fine-tuning (Rejection sampling fine-tuning), and iterative Decision Policy Optimization (DPO). The reward models are trained using a Bradley-Terry model based on the Gemma and Mistral language models. The resulting reward models achieve state-of-the-art performance on the RewardBench leaderboard for reward models with base models of up to 13B parameters.

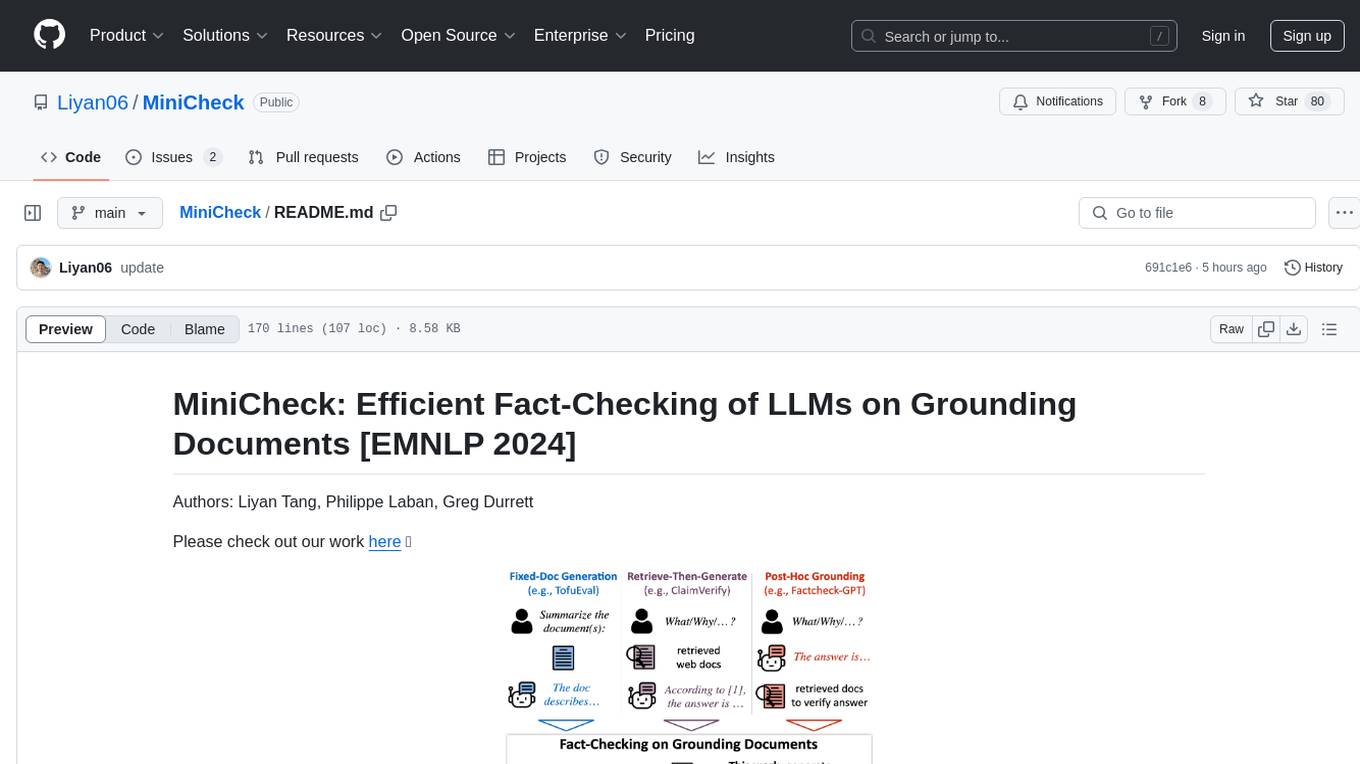

MiniCheck

MiniCheck is an efficient fact-checking tool designed to verify claims against grounding documents using large language models. It provides a sentence-level fact-checking model that can be used to evaluate the consistency of claims with the provided documents. MiniCheck offers different models, including Bespoke-MiniCheck-7B, which is the state-of-the-art and commercially usable. The tool enables users to fact-check multi-sentence claims by breaking them down into individual sentences for optimal performance. It also supports automatic prefix caching for faster inference when repeatedly fact-checking the same document with different claims.

qlib

Qlib is an open-source, AI-oriented quantitative investment platform that supports diverse machine learning modeling paradigms, including supervised learning, market dynamics modeling, and reinforcement learning. It covers the entire chain of quantitative investment, from alpha seeking to order execution. The platform empowers researchers to explore ideas and implement productions using AI technologies in quantitative investment. Qlib collaboratively solves key challenges in quantitative investment by releasing state-of-the-art research works in various paradigms. It provides a full ML pipeline for data processing, model training, and back-testing, enabling users to perform tasks such as forecasting market patterns, adapting to market dynamics, and modeling continuous investment decisions.

Reflection_Tuning

Reflection-Tuning is a project focused on improving the quality of instruction-tuning data through a reflection-based method. It introduces Selective Reflection-Tuning, where the student model can decide whether to accept the improvements made by the teacher model. The project aims to generate high-quality instruction-response pairs by defining specific criteria for the oracle model to follow and respond to. It also evaluates the efficacy and relevance of instruction-response pairs using the r-IFD metric. The project provides code for reflection and selection processes, along with data and model weights for both V1 and V2 methods.

YuE

YuE (乐) is an open-source foundation model designed for music generation, specifically transforming lyrics into full songs. It can generate complete songs in various genres and vocal styles, ensuring a polished and cohesive result. The model requires significant GPU memory for generating long sequences and recommends specific configurations for optimal performance. Users can customize the number of sessions for memory usage. The tool provides a quickstart guide for generating music using Transformers and includes tips for execution time and tag selection. The project is licensed under Creative Commons Attribution Non Commercial 4.0.

For similar tasks

PowerInfer

PowerInfer is a high-speed Large Language Model (LLM) inference engine designed for local deployment on consumer-grade hardware, leveraging activation locality to optimize efficiency. It features a locality-centric design, hybrid CPU/GPU utilization, easy integration with popular ReLU-sparse models, and support for various platforms. PowerInfer achieves high speed with lower resource demands and is flexible for easy deployment and compatibility with existing models like Falcon-40B, Llama2 family, ProSparse Llama2 family, and Bamboo-7B.

multipack_sampler

The Multipack sampler is a tool designed for padding-free distributed training of large language models. It optimizes batch processing efficiency using an approximate solution to the identical machine scheduling problem. The V2 update further enhances the packing algorithm complexity, achieving better throughput for a large number of nodes. It includes two variants for models with different attention types, aiming to balance sequence lengths and optimize packing efficiency. Users can refer to the provided benchmark for evaluating efficiency, utilization, and L^2 lag. The tool is compatible with PyTorch DataLoader and is released under the MIT license.

EScAIP

EScAIP is an Efficiently Scaled Attention Interatomic Potential that leverages a novel multi-head self-attention formulation within graph neural networks to predict energy and forces between atoms in molecules and materials. It achieves substantial gains in efficiency, at least 10x speed up in inference time and 5x less memory usage compared to existing models. EScAIP represents a philosophy towards developing general-purpose Neural Network Interatomic Potentials that achieve better expressivity through scaling and continue to scale efficiently with increased computational resources and training data.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features: * Self-contained, with no need for a DBMS or cloud service. * OpenAPI interface, easy to integrate with existing infrastructure (e.g Cloud IDE). * Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.