Best AI Tools for Optimizing Efficiency

20 AI Tool Sites

Mobileye

Mobileye is a leading developer of driver-assist and autonomous driving technologies for the automotive industry. The company has transformed driver-assist technology by using camera sensors to improve vehicle safety and efficiency. Its offerings range from cloud-enhanced driver-assist systems to fully autonomous driving, all designed to provide a seamless, natural driving experience. Because Mobileye develops both its hardware and software in-house, it follows a safe-by-design approach that prioritizes scalability and efficiency, making its technology accessible to the mass market.

Space-O Technologies

Space-O Technologies is a highly rated AI development company with more than 14 years of experience in AI software development, AI consulting, and ML development services. It specializes in deep learning, NLP, computer vision, and AutoML for both startups and enterprises. Using tools such as Python, TensorFlow, and PyTorch, it builds scalable, secure AI products intended to optimize efficiency, drive revenue growth, and sustain performance.

Decidr

Decidr is an AI-first business platform that also offers grants for product or service ideas. It embeds AI specialists into workflows so that companies can outperform traditional competitors: businesses automate processes, scale, and optimize efficiency by assigning knowledge tasks to AI roles. Users can reserve or purchase complete AI-first businesses on the platform and connect with other AI businesses to strengthen functions such as marketing, accounting, HR, and admin. Decidr uses generative AI to automate around 80% of tasks, freeing human staff for strategic roles.

Supervity

Supervity is an AI application that empowers businesses to scale operations, optimize efficiency, and achieve transformative growth with intelligent automation. It offers a range of AI agents for various industries and functions, such as finance, HR, IT, customer service, and more. Supervity's AI agents help streamline processes, automate tasks, and enhance decision-making across different business sectors.

Ever Efficient AI

Ever Efficient AI is an AI development platform that offers customized solutions to streamline business processes and drive growth. It uses a company's historical data to identify opportunities for innovation, optimize efficiency, automate tasks, and improve decision-making. Focused on AI automation, the tool aims to transform business operations by combining human expertise with artificial intelligence.

Interviewer.AI

Interviewer.AI is an end-to-end AI video interview platform that uses generative AI and explainable AI to automate writing job descriptions, craft relevant interview questions, and pre-screen and shortlist candidates. It significantly reduces the time spent on pre-interviews while providing a comprehensive evaluation of each candidate's psychological and technical attributes. The platform is designed to streamline recruitment, optimize efficiency, and help teams find the right fit.

Stack Spaces

Stack Spaces is an intelligent all-in-one workspace that aims to boost productivity by providing a central workspace and dashboard for product development. It lets teams manage knowledge, tasks, documents, and schedules in one organized, centralized place. The application integrates GPT-4 to tailor the workspace to each user through large language models and customizable widgets. Users can bring all their apps and tools into one place, ask questions, and run intelligent searches to find relevant answers and insights. Stack Spaces aims to streamline workflows, eliminate context switching, and optimize efficiency.

Intangles

Intangles is an AI-powered fleet management and predictive analytics platform. Its suite of solutions is designed to optimize fleet operations, monitor vehicle health, improve driving behavior, track fuel consumption, automate operations, and provide accurate predictive analytics. Intangles serves industries including trucking, construction, mining, farming, oil & gas, transit, marine engines, gensets, and waste management. The platform combines digital twin technology, an integrated solution, and predictive analytics. Intangles' mission is to spark a technology revolution in the mobility industry by helping businesses save time and money through predictive maintenance and real-time data insights.

Backlsh

Backlsh is an AI-powered time tracking platform designed to increase team productivity through automatic time tracking, productivity analysis, AI-generated insights, and attendance tracking. It offers personalized AI tips, app and website monitoring, and detailed performance reports. Backlsh helps businesses optimize workflow efficiency, identify disparities across the workforce, and make data-driven decisions to improve productivity. Trusted by over 10,000 users, Backlsh also highlights its support for remote collaboration.

Healthray

Healthray is a Next-Gen AI Hospital Management System that offers a comprehensive suite of healthcare software solutions, including Hospital Information Management System (HIMS), EMR Software, EHR Software, Pharmacy Management System (PMS), and Laboratory Information Management System (LIMS). The platform leverages cutting-edge AI technology to streamline operations, elevate patient care, and optimize administrative efficiency for healthcare providers. Healthray caters to a wide range of medical specialties and offers advanced functionalities to revolutionize traditional healthcare practices. With a focus on digital healthcare solutions and AI integration, Healthray aims to transform the healthcare industry by providing innovative tools for doctors and hospitals.

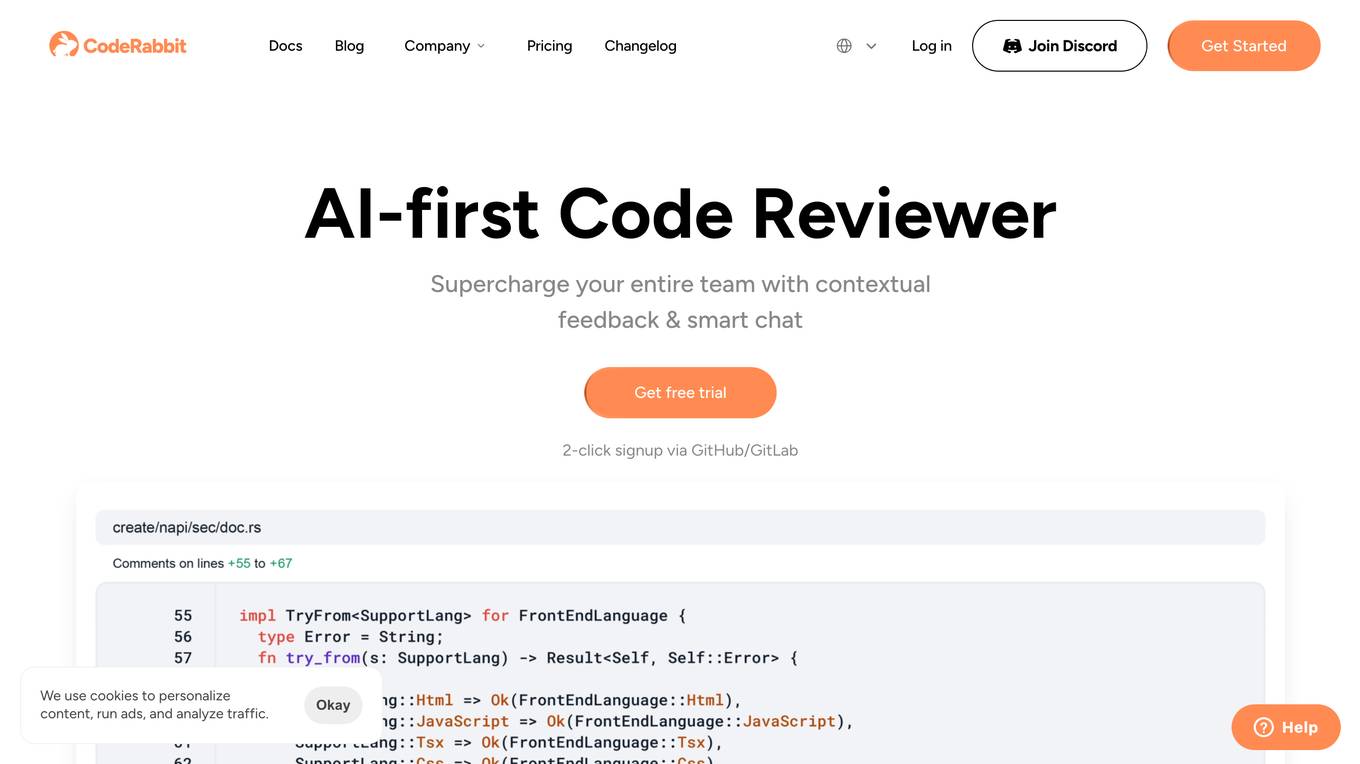

CodeRabbit

CodeRabbit is an innovative AI code review platform that streamlines and enhances the development process. By automating reviews, it dramatically improves code quality while saving valuable time for developers. The system offers detailed, line-by-line analysis, providing actionable insights and suggestions to optimize code efficiency and reliability. Trusted by hundreds of organizations and thousands of developers daily, CodeRabbit has processed millions of pull requests. Backed by CRV, CodeRabbit continues to revolutionize the landscape of AI-assisted software development.

RIOS

RIOS is an AI-powered automation tool that revolutionizes American manufacturing by leveraging robotics and AI technology. It offers flexible, reliable, and efficient robotic automation solutions that integrate seamlessly into existing production lines, helping businesses improve productivity, reduce operating expenses, and minimize risks. RIOS provides intelligent agents, machine tending, food handling, and end-of-line packout services, powered by AI and robotics. The tool aims to simplify complex manual processes, ensure total control of operations, and cut costs for businesses facing production inefficiencies and challenges in labor productivity.

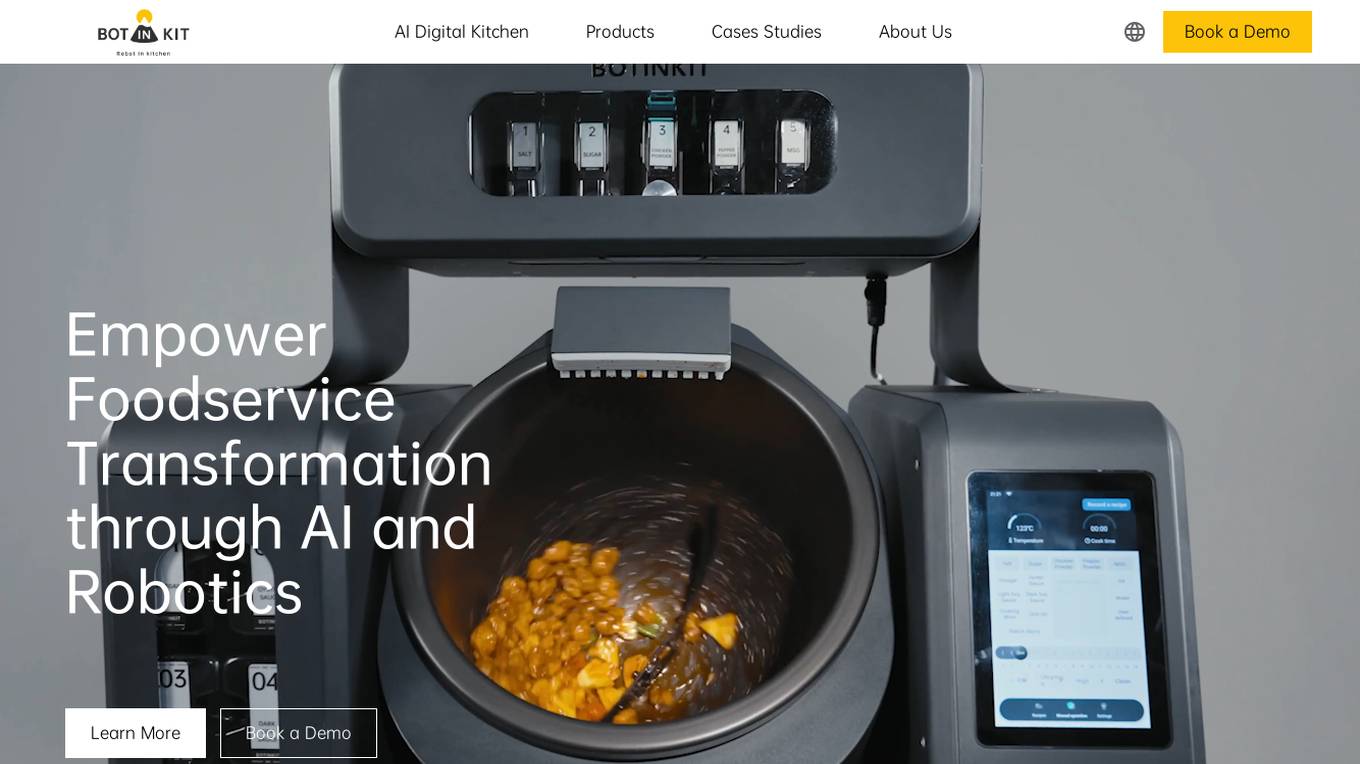

BOTINKIT

BOTINKIT is an AI-driven digital kitchen solution designed to empower foodservice transformation through AI and robotics. It helps chain restaurants globally by eliminating the reliance on skilled labor, ensuring consistent food quality, reducing kitchen labor costs, and optimizing ingredient usage. The innovative solutions offered by BOTINKIT are tailored to overcome obstacles faced by restaurants and facilitate seamless global expansion.

Nanotronics

Nanotronics is an AI-powered platform for autonomous manufacturing that revolutionizes the industry through automated optical inspection solutions. It combines computer vision, AI, and optical microscopy to ensure high-volume production with higher yields, less waste, and lower costs. Nanotronics offers products like nSpec and nControl, leading the paradigm shift in process control and transforming the entire manufacturing stack. With over 150 patents, 250+ deployments, and offices in multiple locations, Nanotronics is at the forefront of innovation in the manufacturing sector.

Allie

Allie is an AI application designed for manufacturing industries to maximize efficiency and quality in factories. It offers a comprehensive platform that connects machines, cameras, and production systems to provide real-time insights and predictive models. Allie helps in reducing downtime, increasing productivity, improving quality, and accelerating decision-making processes. It is built for enterprise use and has been chosen by industry leaders for its effectiveness in transforming manufacturing operations.

EnergyX

EnergyX is a leading provider of energy optimization solutions for the architectural industry. They offer fully integrated solutions for sustainable design, cloud-based optimization, innovation research, architectural design services, and platform financing. EnergyX focuses on delivering comprehensive services to enhance building sustainability, energy efficiency, and compliance with green building standards. With a strong emphasis on AI-driven solutions, EnergyX aims to revolutionize the construction market by providing cutting-edge technologies and sustainable practices.

Nexibeo

Nexibeo is an AI integration specialist that helps businesses integrate AI into their operations to improve productivity and efficiency. It offers custom GPTs tailored to each business, workforce training, and transparent pricing with a minimum 100% ROI. Nexibeo aims to help teams raise their performance by integrating AI into their business processes.

NVIDIA Run:ai

NVIDIA Run:ai is an enterprise platform for AI workloads and GPU orchestration. It accelerates AI and machine learning operations by addressing key infrastructure challenges through dynamic resource allocation, support for the full AI life cycle, and strategic resource management. By pooling GPU resources across environments and applying advanced orchestration, the platform significantly increases GPU efficiency and workload capacity. NVIDIA Run:ai is designed to be flexible, supporting public clouds, private clouds, hybrid environments, and on-premises data centers.

Deployment Manager

Deployment Manager is a platform for managing software deployments. It lets users control the deployment process, monitor progress, and make adjustments as needed, including pausing and resuming deployments to keep software releases smooth and efficient. The platform also provides detailed insights and analytics to help users refine their deployment strategies and improve overall efficiency.

Polymath Robotics

Polymath Robotics specializes in providing autonomy and safety systems for off-highway vehicles. Their software-first approach simplifies the development of autonomy programs, making it faster and more cost-effective. The company offers modular autonomy solutions that are sensor agnostic, vehicle agnostic, and feature onboard compute capabilities. Polymath Robotics collaborates with clients to enhance safety, efficiency, and sustainability in logistics through their advanced solutions.

3 Open-Source AI Tools

PowerInfer

PowerInfer is a high-speed large language model (LLM) inference engine designed for local deployment on consumer-grade hardware. It exploits activation locality to improve efficiency. Key features include a locality-centric design, hybrid CPU/GPU utilization, easy integration with popular ReLU-sparse models, and support for multiple platforms. PowerInfer delivers high inference speed with lower resource demands and works with existing models such as Falcon-40B, the Llama2 family, the ProSparse Llama2 family, and Bamboo-7B.
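Activation locality means that a small set of "hot" neurons is activated for most inputs while the remaining "cold" neurons fire rarely. The hybrid CPU/GPU split this enables can be sketched as follows, assuming per-neuron activation frequencies have already been profiled and every neuron has the same memory footprint: place the hottest neurons on the GPU until a memory budget is used up and leave the rest to the CPU. The helper names `split_hot_cold` and `gpu_coverage` and their parameters are hypothetical, and PowerInfer's own placement policy is more involved than this greedy rule.

```python
from typing import List, Tuple


def split_hot_cold(
    freqs: List[float], neuron_bytes: int, gpu_budget_bytes: int
) -> Tuple[List[int], List[int]]:
    """Return (gpu_neurons, cpu_neurons) as sorted index lists.

    Hypothetical helper: neurons are ranked by profiled activation frequency
    and the hottest ones are placed on the GPU until the byte budget is used.
    """
    if neuron_bytes <= 0:
        raise ValueError("neuron_bytes must be positive")
    # Rank neuron indices from most to least frequently activated.
    order = sorted(range(len(freqs)), key=lambda i: freqs[i], reverse=True)
    # Number of equal-sized neurons that fit in the GPU budget.
    capacity = max(gpu_budget_bytes, 0) // neuron_bytes
    return sorted(order[:capacity]), sorted(order[capacity:])


def gpu_coverage(freqs: List[float], gpu_neurons: List[int]) -> float:
    """Fraction of total activation mass served by GPU-resident neurons."""
    total = sum(freqs)
    return sum(freqs[i] for i in gpu_neurons) / total if total else 0.0


if __name__ == "__main__":
    freqs = [0.9, 0.05, 0.6, 0.01, 0.3]
    hot, cold = split_hot_cold(freqs, neuron_bytes=4, gpu_budget_bytes=8)
    print(hot, cold)  # [0, 2] [1, 3, 4]
    print(round(gpu_coverage(freqs, hot), 3))  # 0.806
```

In this toy example, two of five neurons fit on the GPU, yet they account for roughly 80% of all activations, which is the skew that makes a locality-centric design pay off.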

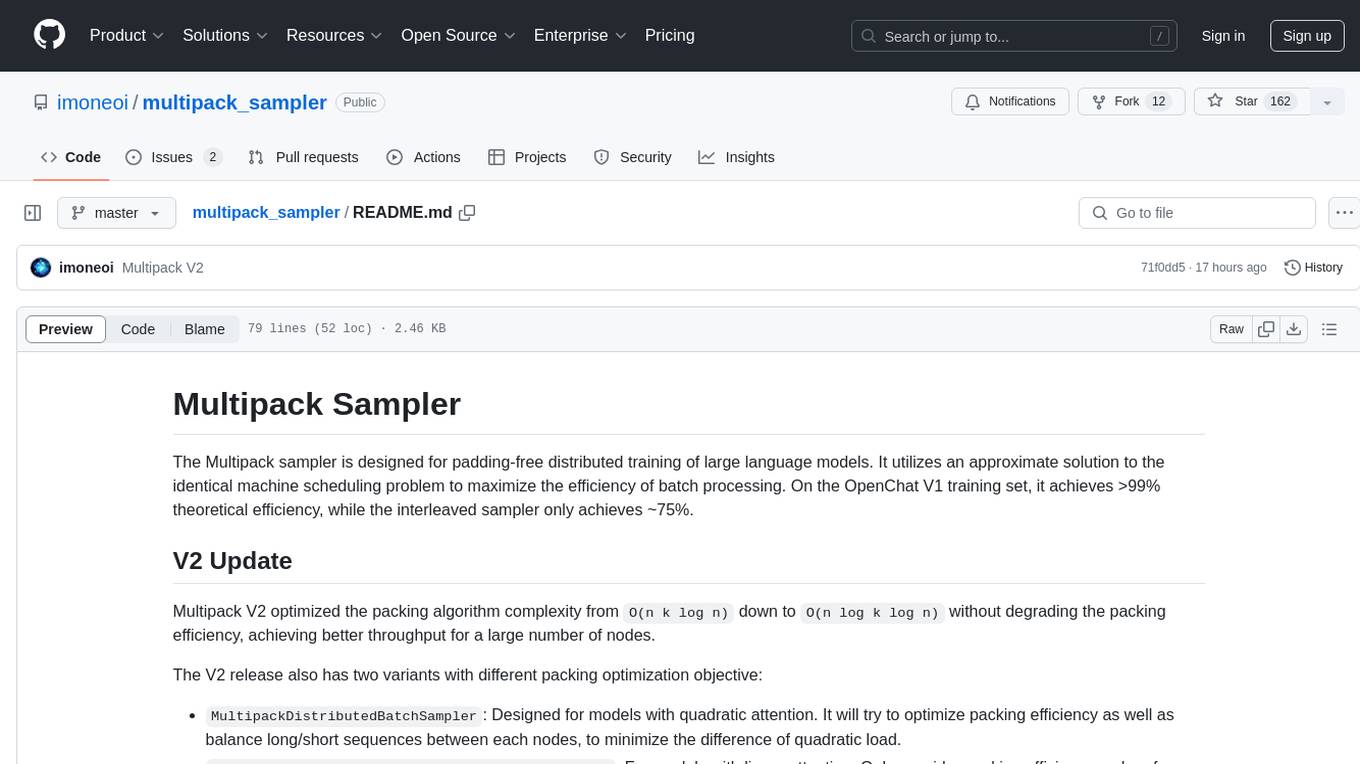

multipack_sampler

The Multipack sampler is a tool for padding-free distributed training of large language models. It improves batch-processing efficiency by using an approximate solution to the identical machine scheduling problem. The V2 update improves the complexity of the packing algorithm, achieving better throughput across a large number of nodes. It includes two variants for models with different attention types, both aiming to balance sequence lengths and optimize packing efficiency. A provided benchmark reports efficiency, utilization, and L^2 lag. The tool is compatible with the PyTorch DataLoader and is released under the MIT license.
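The identical machine scheduling idea can be illustrated with the classic longest-processing-time-first (LPT) heuristic. This is a minimal sketch, not multipack_sampler's actual code: it assumes each "machine" is a data-parallel rank and each "job" is a sequence whose cost is its token length, it ignores any per-rank token capacity a real packer must respect, and the helper names `lpt_pack` and `utilization` (including this utilization formula) are hypothetical.

```python
import heapq
from typing import List


def lpt_pack(lengths: List[int], num_ranks: int) -> List[List[int]]:
    """Assign sequence indices to ranks, longest first, each to the least-loaded rank."""
    if num_ranks <= 0:
        raise ValueError("num_ranks must be positive")
    # Min-heap of (current token load, rank id).
    heap = [(0, r) for r in range(num_ranks)]
    heapq.heapify(heap)
    buckets: List[List[int]] = [[] for _ in range(num_ranks)]
    # Process sequences from longest to shortest.
    for idx in sorted(range(len(lengths)), key=lambda i: lengths[i], reverse=True):
        load, rank = heapq.heappop(heap)
        buckets[rank].append(idx)
        heapq.heappush(heap, (load + lengths[idx], rank))
    return buckets


def utilization(lengths: List[int], buckets: List[List[int]]) -> float:
    """Total tokens divided by (ranks * heaviest rank load); 1.0 means perfect balance."""
    loads = [sum(lengths[i] for i in bucket) for bucket in buckets]
    max_load = max(loads) if loads else 0
    return sum(loads) / (len(loads) * max_load) if max_load else 1.0


if __name__ == "__main__":
    lengths = [512, 300, 200, 100, 50]
    buckets = lpt_pack(lengths, num_ranks=2)
    print(buckets)  # [[0, 4], [1, 2, 3]]
    print(round(utilization(lengths, buckets), 3))  # 0.968
```

LPT is a natural choice here because its makespan (the heaviest rank's load) is provably within a factor of 4/3 of optimal for identical machines, so no rank waits long on a straggler.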

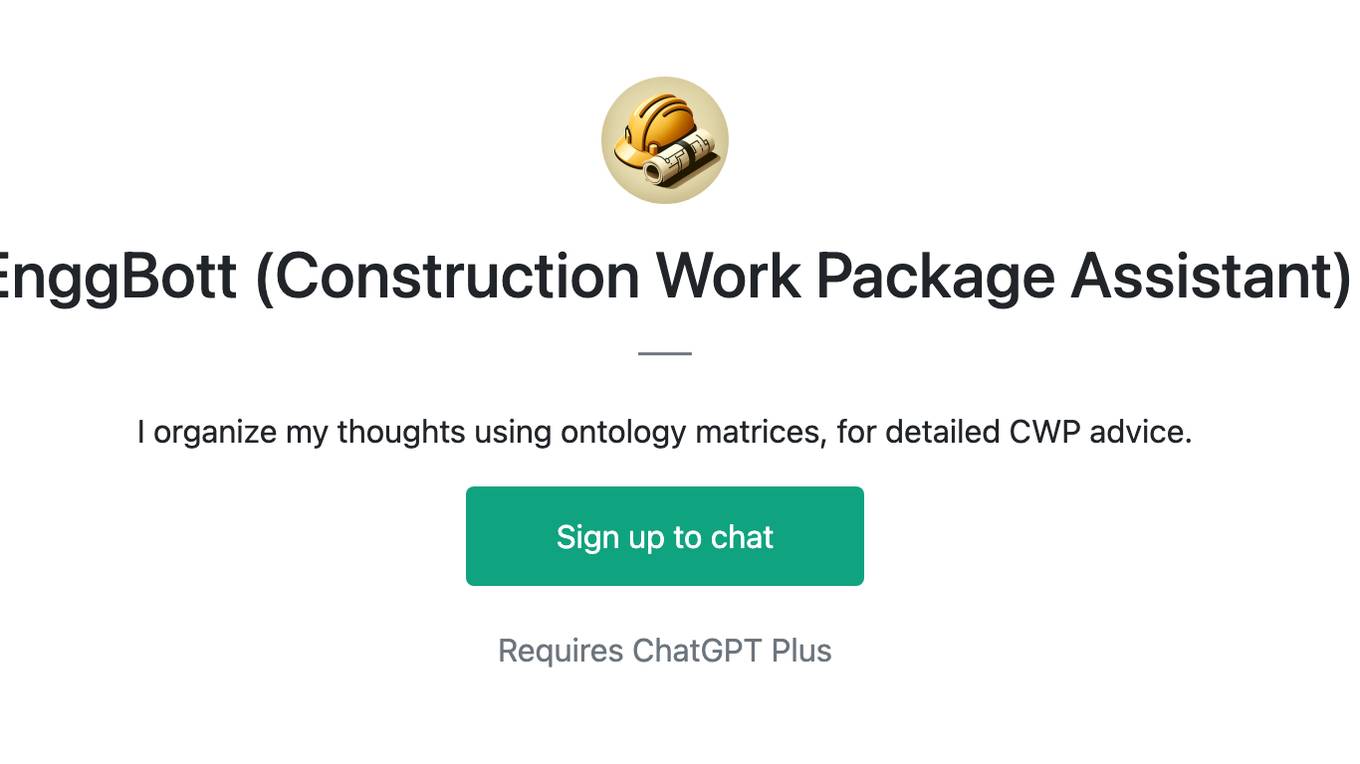

EScAIP

EScAIP (Efficiently Scaled Attention Interatomic Potential) uses a novel multi-head self-attention formulation within graph neural networks to predict the energies and forces between atoms in molecules and materials. It achieves substantial efficiency gains over existing models: at least a 10x speedup in inference time and 5x lower memory usage. EScAIP reflects a philosophy of developing general-purpose neural network interatomic potentials that gain expressivity through scaling and continue to scale efficiently with more computational resources and training data.

20 OpenAI GPTs

AI Business Transformer

Top AI for business automation, data analytics, and content creation. Optimize efficiency, gain insights, and innovate with AI Business Transformer.

StatGPT

Engineering-savvy assistant for creative solutions, accurate calculations, and detailed blueprints.

EnggBott (Construction Work Package Assistant)

I organize my thoughts using ontology matrices to provide detailed construction work package (CWP) advice.

Your Business Taxes: Guide

Insightful articles and guides on business tax strategies from AfterTaxCash. Discover expert advice and tips to optimize tax efficiency, reduce liabilities, and maximize after-tax profits for your business, and stay informed to make sound financial decisions.

Thermodynamics Advisor

Advises on thermodynamics processes to optimize system efficiency.

Supplier Relationship Management Advisor

Streamlines supplier interactions to optimize organizational efficiency and cost-effectiveness.

Software Delivery Management Advisor

Streamlines software delivery processes to optimize operational efficiency.

Solidity Contract Auditor

Auditor for Solidity contracts, focusing on security, bug-finding and gas efficiency.

Process Optimization Advisor

Improves operational efficiency by optimizing processes and reducing waste.

Process Engineering Advisor

Optimizes production processes for improved efficiency and quality.

Organizational Design Advisor

Guides organizational structure optimization for efficiency and productivity.

Staff Scheduling Advisor

Coordinates and optimizes staff schedules for operational efficiency.

Office Space Planning Advisor

Optimizes workspace layout to enhance productivity and efficiency.

Wireless Communications Advisor

Advises on wireless communication technologies to enhance organizational efficiency.

Cloud Networking Advisor

Optimizes cloud-based networks for efficient organizational operations.

Manufacturing Process Development Advisor

Optimizes manufacturing processes for efficiency and quality.