obs-urlsource

OBS plugin to fetch data from a URL or file, connect to an API or AI service, parse responses and display text, image or audio on scene

Stars: 136

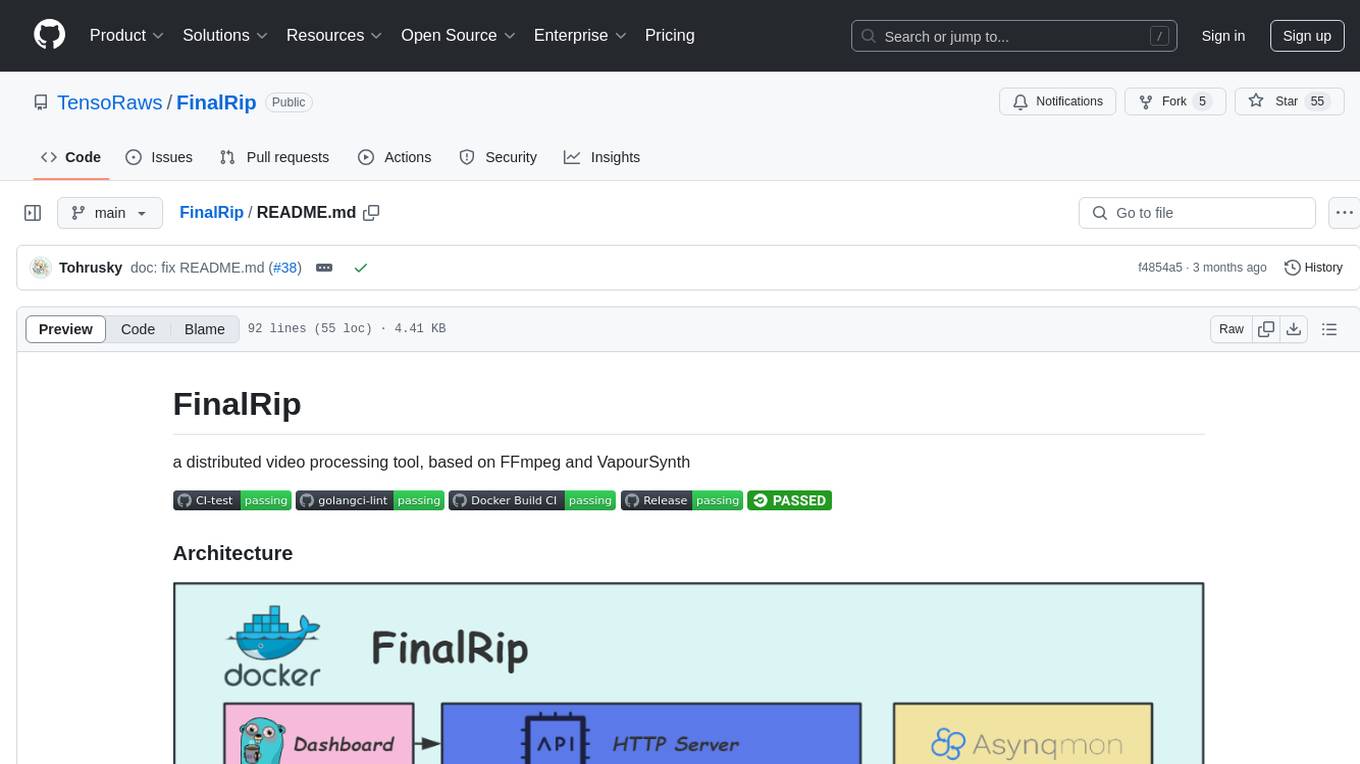

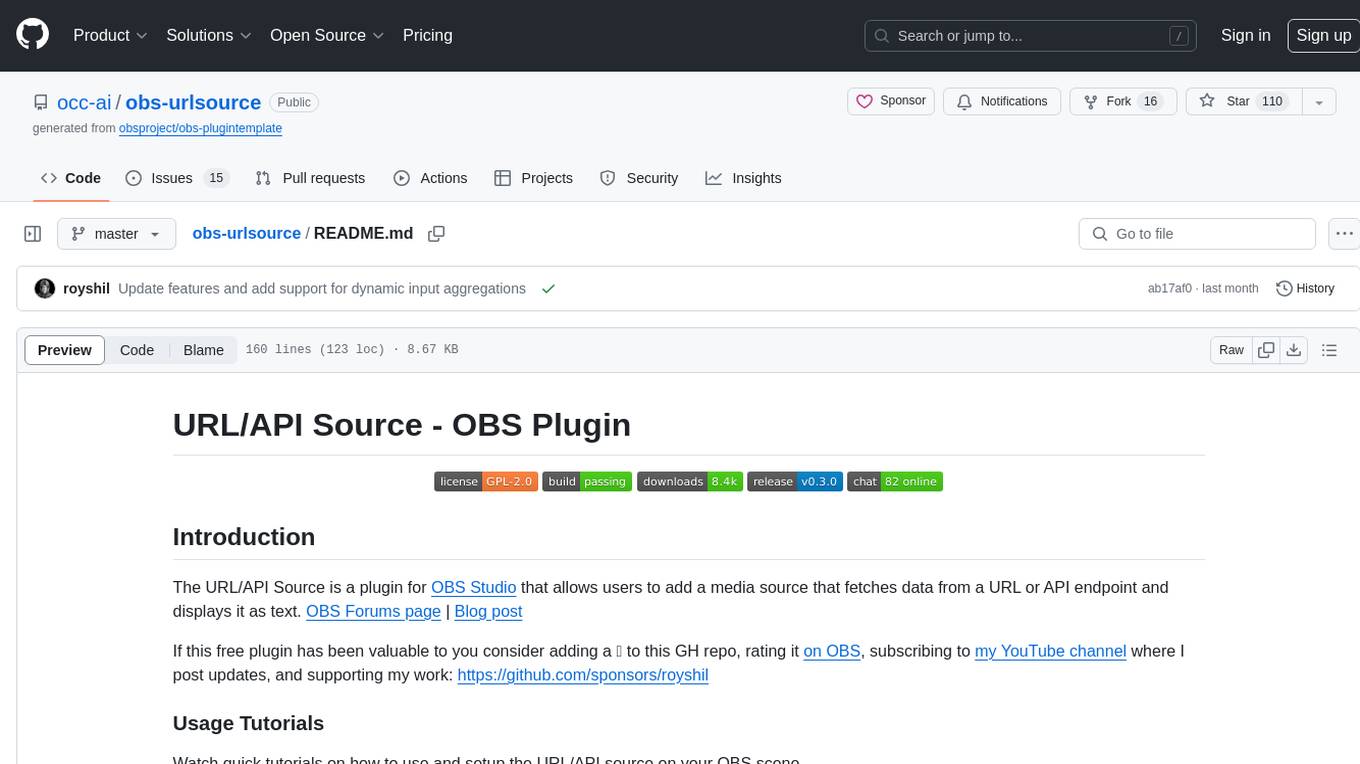

The URL/API Source is a plugin for OBS Studio that allows users to add a media source fetching data from a URL or API endpoint and displaying it as text. It supports input and output templating, various request types, output parsing (JSON, XML/HTML, Regex, CSS selectors), live data updating, output styling, and formatting. Future features include authentication, websocket support, more parsing options, request types, and output formats. The plugin is cross-platform compatible and actively maintained by the developer. Users can support the project on GitHub.

README:

The URL/API Source is a plugin for OBS Studio that allows fetcing data from a URL, API endpoint or file and displays it as text, image or even audio. OBS Forums page | Blog post

If this free plugin has been valuable to you consider adding a ⭐ to this GH repo, rating it on OBS, subscribing to my YouTube channel where I post updates, and supporting my work on GitHub, Patreon or OpenCollective

Watch quick tutorials on how to use and setup the URL/API source on your OBS scene.

Additional things to do with URL/API source to upgrade your content:

- HTML live website Scraping (18 min)

- Use ChatGPT

- Live Narration of your content with GPT

- Text-to-Speech with Coqui

- Live data from Google Sheets with API

- Send captions to YouTube API

- Get VSCode live data with API

- Translate with DeepL API

Out of ideas on what to do with URL/API Source? Here's a repo with 1,000s of public APIs https://github.com/public-apis/public-apis

The URL source supports both input and output templating using the Inja engine.

Output templates include {{output}} in case of a singular extraction from the response or {{output1}},{{output2}},... in case of multiple extracted value. In addition the {{body}} variable contains the entire body of the response in case of JSON. Advanced output templating functions can be achieved through Inja like looping over arrays, etc.

The input template works for the URL (querystring or REST path) or the POST body

Use the {{input}} variable to insert the output from a Text source. Inja advanced templates are available too.

A special function strftime is available for formatting the current time using conventions from C++ STL (strftime), as well as urlencode which is useful for dynamic input in the querystring.

The internal template renderer supports HTML4 and CSS with a reduced subset of feautures. It is quite powerful and can render tables and apply various styling to the text.

Image render is supported with the <img /> tag, and external URLs are supported as well. For example <img src="{{output}}" /> could be used to dynamically render an image URL coming from the response.

Watch an explanation of the major parts of the code and how they work together.

Features:

- HTTP request types: GET, POST

- Request headers (for e.g. API Key or Auth token)

- Request body for POST

- Multiple dynamic inputs from several Text or Image sources (base64)

- Output parsing: JSON (JSONPointer & JSONPath), XML/HTML (XPath & XQuery), Key-Value, Regex and CSS selectors

- Update timer for live updating data

- Test of the request to find the right parsing

- Output styling (font, color, etc.) and formatting (via regex post processing)

- Output Image (via image URL or image data on the response)

- Output text to external Text Source and audio to external Media Source

- Output to multiple sources with one request (Output Mapping)

- Multi-value (array, union) parsed output capture, object unpacking (via Inja)

- Dynamic input aggregations (time-based, "empty"-based)

Coming soon:

- Authentication (Basic, Digest, OAuth)

- Websocket support

- More parsing options (CSV, etc.)

- More request types (HTTP PUT / DELETE / PATCH, and GraphQL)

- More output formats (Markdown, slim, reStructured, HAML, etc.)

Check out our other plugins:

- Background Removal removes background from webcam without a green screen.

- Detect will find and track >80 types of objects in any source that provides an image in real-time

- LocalVocal speech AI assistant plugin for real-time, local transcription (captions), translation and more language functions

- Polyglot translation AI plugin for real-time, local translation to hunderds of languages

- 🚧 Experimental 🚧 CleanStream for real-time filler word (uh,um) and profanity removal from live audio stream

If you like this work, which is given to you completely free of charge, please consider supporting it on GitHub: https://github.com/sponsors/royshil

Check out the latest releases for downloads and install instructions.

The plugin was built and tested on Mac OSX (Intel & Apple silicon), Windows and Linux.

Start by cloning this repo to a directory of your choice.

Using the CI pipeline scripts, locally you would just call the zsh script. By default this builds a universal binary for both Intel and Apple Silicon. To build for a specific architecture please see .github/scripts/.build.zsh for the -arch options.

$ ./.github/scripts/build-macos -c ReleaseThe above script should succeed and the plugin files (e.g. obs-urlsource.plugin) will reside in the ./release/Release folder off of the root. Copy the .plugin file to the OBS directory e.g. ~/Library/Application Support/obs-studio/plugins.

To get .pkg installer file, run for example

$ ./.github/scripts/package-macos -c Release(Note that maybe the outputs will be in the Release folder and not the install folder like pakage-macos expects, so you will need to rename the folder from build_x86_64/Release to build_x86_64/install)

Use the CI scripts again

$ ./.github/scripts/build-linux.shCopy the results to the standard OBS folders on Ubuntu

$ sudo cp -R release/RelWithDebInfo/lib/* /usr/lib/x86_64-linux-gnu/

$ sudo cp -R release/RelWithDebInfo/share/* /usr/share/Note: The official OBS plugins guide recommends adding plugins to the ~/.config/obs-studio/plugins folder.

Use the CI scripts again, for example:

> .github/scripts/Build-Windows.ps1 -Target x64 -CMakeGenerator "Visual Studio 17 2022"The build should exist in the ./release folder off the root. You can manually install the files in the OBS directory.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for obs-urlsource

Similar Open Source Tools

obs-urlsource

The URL/API Source is a plugin for OBS Studio that allows users to add a media source fetching data from a URL or API endpoint and displaying it as text. It supports input and output templating, various request types, output parsing (JSON, XML/HTML, Regex, CSS selectors), live data updating, output styling, and formatting. Future features include authentication, websocket support, more parsing options, request types, and output formats. The plugin is cross-platform compatible and actively maintained by the developer. Users can support the project on GitHub.

obs-localvocal

LocalVocal is a live-streaming AI assistant plugin for OBS that allows you to transcribe audio speech into text and perform various language processing functions on the text using AI / LLMs (Large Language Models). It's privacy-first, with all data staying on your machine, and requires no GPU, cloud costs, network, or downtime.

obs-localvocal

LocalVocal is a Speech AI assistant OBS Plugin that enables users to transcribe speech into text and translate it into any language locally on their machine. The plugin runs OpenAI's Whisper for real-time speech processing and prediction. It supports features like transcribing audio in real-time, displaying captions on screen, sending captions to files, syncing captions with recordings, and translating captions to major languages. Users can bring their own Whisper model, filter or replace captions, and experience partial transcriptions for streaming. The plugin is privacy-focused, requiring no GPU, cloud costs, network, or downtime.

Scriberr

Scriberr is a self-hostable AI audio transcription app that utilizes open-source Whisper models from OpenAI for transcribing audio files locally on user's hardware. It offers fast transcription with customizable compute settings, local transcription on device, API endpoints for automation, and integration with other tools. Users can optionally summarize transcripts using ChatGPT or Ollama, with support for custom prompts. The app is mobile-ready, simple, and easy to use, with planned features including speaker diarization, audio recording, file actions, full text fuzzy search, tag-based organization, follow-along text with playback, edit summaries, export options, and support for other languages. Despite being in beta, Scriberr is functional and usable, albeit with some rough edges and minor bugs.

snipkit

SnipKit is a CLI tool designed to manage snippets efficiently, allowing users to execute saved scripts or generate new ones with the help of AI directly from the terminal. It supports loading snippets from various sources, parameter substitution, different parameter types, themes, and customization options. The tool includes an interactive chat-style interface called SnipKit Assistant for generating parameterized scripts. Users can also work with different AI providers like OpenAI, Anthropic, Google Gemini, and more. SnipKit aims to streamline script execution and script generation workflows for developers and users who frequently work with code snippets.

mmore

MMORE is an open-source, end-to-end pipeline for ingesting, processing, indexing, and retrieving knowledge from various file types such as PDFs, Office docs, images, audio, video, and web pages. It standardizes content into a unified multimodal format, supports distributed CPU/GPU processing, and offers hybrid dense+sparse retrieval with an integrated RAG service through CLI and APIs.

react-grab

React Grab is a tool that allows users to select context for coding agents directly from a website by pointing at any element and copying the file name, React component, and HTML source code. It enhances the performance of tools like Cursor, Claude Code, and Copilot by making them run up to 3 times faster and more accurately. Users can install React Grab, connect it to coding agents, and easily copy element contexts for pasting into their coding environment. The tool can be manually installed in various React frameworks and build tools, and it also provides an API for extending functionality with plugins, hooks, actions, themes, and custom agents.

NekoImageGallery

NekoImageGallery is an online AI image search engine that utilizes the Clip model and Qdrant vector database. It supports keyword search and similar image search. The tool generates 768-dimensional vectors for each image using the Clip model, supports OCR text search using PaddleOCR, and efficiently searches vectors using the Qdrant vector database. Users can deploy the tool locally or via Docker, with options for metadata storage using Qdrant database or local file storage. The tool provides API documentation through FastAPI's built-in Swagger UI and can be used for tasks like image search, text extraction, and vector search.

tgpt

tgpt is a cross-platform command-line interface (CLI) tool that allows users to interact with AI chatbots in the Terminal without needing API keys. It supports various AI providers such as KoboldAI, Phind, Llama2, Blackbox AI, and OpenAI. Users can generate text, code, and images using different flags and options. The tool can be installed on GNU/Linux, MacOS, FreeBSD, and Windows systems. It also supports proxy configurations and provides options for updating and uninstalling the tool.

Fabric

Fabric is an open-source framework designed to augment humans using AI by organizing prompts by real-world tasks. It addresses the integration problem of AI by creating and organizing prompts for various tasks. Users can create, collect, and organize AI solutions in a single place for use in their favorite tools. Fabric also serves as a command-line interface for those focused on the terminal. It offers a wide range of features and capabilities, including support for multiple AI providers, internationalization, speech-to-text, AI reasoning, model management, web search, text-to-speech, desktop notifications, and more. The project aims to help humans flourish by leveraging AI technology to solve human problems and enhance creativity.

chunkr

Chunkr is an open-source document intelligence API that provides a production-ready service for document layout analysis, OCR, and semantic chunking. It allows users to convert PDFs, PPTs, Word docs, and images into RAG/LLM-ready chunks. The API offers features such as layout analysis, OCR with bounding boxes, structured HTML and markdown output, and VLM processing controls. Users can interact with Chunkr through a Python SDK, enabling them to upload documents, process them, and export results in various formats. The tool also supports self-hosted deployment options using Docker Compose or Kubernetes, with configurations for different AI models like OpenAI, Google AI Studio, and OpenRouter. Chunkr is dual-licensed under the GNU Affero General Public License v3.0 (AGPL-3.0) and a commercial license, providing flexibility for different usage scenarios.

gitingest

GitIngest is a tool that allows users to turn any Git repository into a prompt-friendly text ingest for LLMs. It provides easy code context by generating a text digest from a git repository URL or directory. The tool offers smart formatting for optimized output format for LLM prompts and provides statistics about file and directory structure, size of the extract, and token count. GitIngest can be used as a CLI tool on Linux and as a Python package for code integration. The tool is built using Tailwind CSS for frontend, FastAPI for backend framework, tiktoken for token estimation, and apianalytics.dev for simple analytics. Users can self-host GitIngest by building the Docker image and running the container. Contributions to the project are welcome, and the tool aims to be beginner-friendly for first-time contributors with a simple Python and HTML codebase.

FinalRip

FinalRip is a distributed video processing tool based on FFmpeg and VapourSynth. It cuts the original video into multiple clips, processes each clip in parallel, and merges them into the final video. Users can deploy the system in a distributed way, configure settings via environment variables or remote config files, and develop/test scripts in the vs-playground environment. It supports Nvidia GPU, AMD GPU with ROCm support, and provides a dashboard for selecting compatible scripts to process videos.

anyquery

Anyquery is a SQL query engine built on SQLite that allows users to run SQL queries on various data sources like files, databases, and apps. It can connect to LLMs to access data and act as a MySQL server for running queries. The tool is extensible through plugins and supports various installation methods like Homebrew, APT, YUM/DNF, Scoop, Winget, and Chocolatey.

SciPIP

SciPIP is a scientific paper idea generation tool powered by a large language model (LLM) designed to assist researchers in quickly generating novel research ideas. It conducts a literature review based on user-provided background information and generates fresh ideas for potential studies. The tool is designed to help researchers in various fields by providing a GUI environment for idea generation, supporting NLP, multimodal, and CV fields, and allowing users to interact with the tool through a web app or terminal. SciPIP uses Neo4j as its database and provides functionalities for generating new ideas, fetching papers, and constructing the database.

refact-lsp

Refact Agent is a small executable written in Rust as part of the Refact Agent project. It lives inside your IDE to keep AST and VecDB indexes up to date, supporting connection graphs between definitions and usages in popular programming languages. It functions as an LSP server, offering code completion, chat functionality, and integration with various tools like browsers, databases, and debuggers. Users can interact with it through a Text UI in the command line.

For similar tasks

obs-urlsource

The URL/API Source is a plugin for OBS Studio that allows users to add a media source fetching data from a URL or API endpoint and displaying it as text. It supports input and output templating, various request types, output parsing (JSON, XML/HTML, Regex, CSS selectors), live data updating, output styling, and formatting. Future features include authentication, websocket support, more parsing options, request types, and output formats. The plugin is cross-platform compatible and actively maintained by the developer. Users can support the project on GitHub.

aiosqlite

aiosqlite is a Python library that provides a friendly, async interface to SQLite databases. It replicates the standard sqlite3 module but with async versions of all the standard connection and cursor methods, along with context managers for automatically closing connections and cursors. It allows interaction with SQLite databases on the main AsyncIO event loop without blocking execution of other coroutines while waiting for queries or data fetches. The library also replicates most of the advanced features of sqlite3, such as row factories and total changes tracking.

go-stock

Go-stock is a tool for analyzing stock market data using the Go programming language. It provides functionalities for fetching stock data, performing technical analysis, and visualizing trends. With Go-stock, users can easily retrieve historical stock prices, calculate moving averages, and plot candlestick charts. This tool is designed to help investors and traders make informed decisions based on data-driven insights.

AingDesk

AingDesk is a tool that allows users to deploy DeepSeek or other AI models on their computer with just one click. It features a user-friendly interface, multi-source knowledge base support, built-in chat interface, and the ability to share projects online. The tool is optimized for performance on both local and cloud environments, with a focus on hassle-free setup and extensibility through a modular architecture. The development plan includes support for third-party API integrations and local deployment of text-to-image hybrid models for creative workflows.

LotteryMaster

LotteryMaster is a tool designed to fetch lottery data, save it to Excel files, and provide analysis reports including number prediction, number recommendation, and number trends. It supports multiple platforms for access such as Web and mobile App. The tool integrates AI models like Qwen API and DeepSeek for generating analysis reports and trend analysis charts. Users can configure API parameters for controlling randomness, diversity, presence penalty, and maximum tokens. The tool also includes a frontend project based on uniapp + Vue3 + TypeScript for multi-platform applications. It provides a backend service running on Fastify with Node.js, Cheerio.js for web scraping, Pino for logging, xlsx for Excel file handling, and Jest for testing. The project is still in development and some features may not be fully implemented. The analysis reports are for reference only and do not constitute investment advice. Users are advised to use the tool responsibly and avoid addiction to gambling.

yournextstore

Your Next Store is an open-source Next.js e-commerce platform designed for AI development. It offers a Stripe-native integration, ultra-fast page loads, and typed APIs. The codebase follows consistent patterns, provides blazing fast performance with Next.js 16 and edge caching, and allows direct API integration without the need for plugins. With a focus on AI coding tools, Your Next Store offers familiar patterns, typed APIs for Commerce Kit SDK methods, and a well-defined domain for commerce data models, making it easier for AI models to generate accurate suggestions and write correct code.

venice

Venice is a derived data storage platform, providing the following characteristics: 1. High throughput asynchronous ingestion from batch and streaming sources (e.g. Hadoop and Samza). 2. Low latency online reads via remote queries or in-process caching. 3. Active-active replication between regions with CRDT-based conflict resolution. 4. Multi-cluster support within each region with operator-driven cluster assignment. 5. Multi-tenancy, horizontal scalability and elasticity within each cluster. The above makes Venice particularly suitable as the stateful component backing a Feature Store, such as Feathr. AI applications feed the output of their ML training jobs into Venice and then query the data for use during online inference workloads.

airtable

A simple Golang package to access the Airtable API. It provides functionalities to interact with Airtable such as initializing client, getting tables, listing records, adding records, updating records, deleting records, and bulk deleting records. The package is compatible with Go 1.13 and above.

For similar jobs

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

daily-poetry-image

Daily Chinese ancient poetry and AI-generated images powered by Bing DALL-E-3. GitHub Action triggers the process automatically. Poetry is provided by Today's Poem API. The website is built with Astro.

exif-photo-blog

EXIF Photo Blog is a full-stack photo blog application built with Next.js, Vercel, and Postgres. It features built-in authentication, photo upload with EXIF extraction, photo organization by tag, infinite scroll, light/dark mode, automatic OG image generation, a CMD-K menu with photo search, experimental support for AI-generated descriptions, and support for Fujifilm simulations. The application is easy to deploy to Vercel with just a few clicks and can be customized with a variety of environment variables.

SillyTavern

SillyTavern is a user interface you can install on your computer (and Android phones) that allows you to interact with text generation AIs and chat/roleplay with characters you or the community create. SillyTavern is a fork of TavernAI 1.2.8 which is under more active development and has added many major features. At this point, they can be thought of as completely independent programs.

Twitter-Insight-LLM

This project enables you to fetch liked tweets from Twitter (using Selenium), save it to JSON and Excel files, and perform initial data analysis and image captions. This is part of the initial steps for a larger personal project involving Large Language Models (LLMs).

AISuperDomain

Aila Desktop Application is a powerful tool that integrates multiple leading AI models into a single desktop application. It allows users to interact with various AI models simultaneously, providing diverse responses and insights to their inquiries. With its user-friendly interface and customizable features, Aila empowers users to engage with AI seamlessly and efficiently. Whether you're a researcher, student, or professional, Aila can enhance your AI interactions and streamline your workflow.

ChatGPT-On-CS

This project is an intelligent dialogue customer service tool based on a large model, which supports access to platforms such as WeChat, Qianniu, Bilibili, Douyin Enterprise, Douyin, Doudian, Weibo chat, Xiaohongshu professional account operation, Xiaohongshu, Zhihu, etc. You can choose GPT3.5/GPT4.0/ Lazy Treasure Box (more platforms will be supported in the future), which can process text, voice and pictures, and access external resources such as operating systems and the Internet through plug-ins, and support enterprise AI applications customized based on their own knowledge base.

obs-localvocal

LocalVocal is a live-streaming AI assistant plugin for OBS that allows you to transcribe audio speech into text and perform various language processing functions on the text using AI / LLMs (Large Language Models). It's privacy-first, with all data staying on your machine, and requires no GPU, cloud costs, network, or downtime.