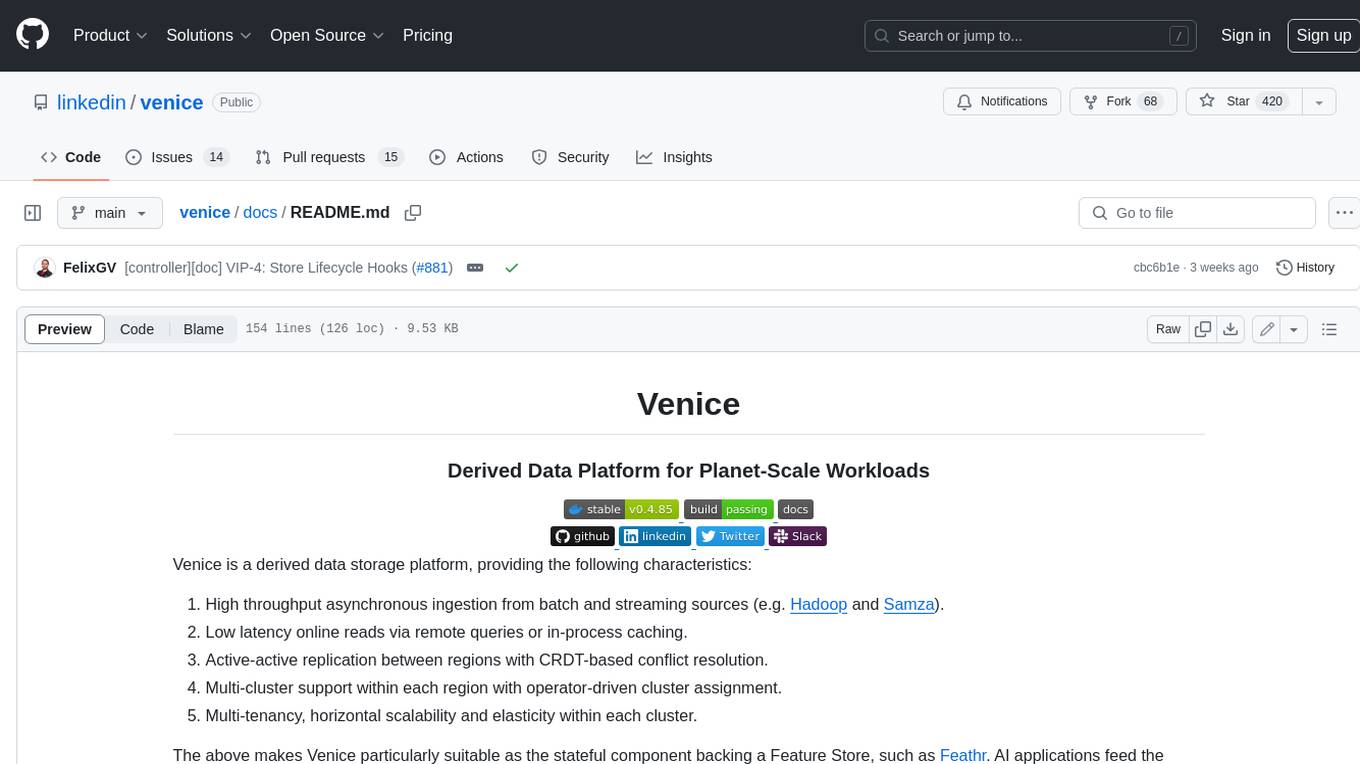

venice

Venice, Derived Data Platform for Planet-Scale Workloads.

Stars: 568

Venice is a derived data storage platform, providing the following characteristics: 1. High throughput asynchronous ingestion from batch and streaming sources (e.g. Hadoop and Samza). 2. Low latency online reads via remote queries or in-process caching. 3. Active-active replication between regions with CRDT-based conflict resolution. 4. Multi-cluster support within each region with operator-driven cluster assignment. 5. Multi-tenancy, horizontal scalability and elasticity within each cluster. The above makes Venice particularly suitable as the stateful component backing a Feature Store, such as Feathr. AI applications feed the output of their ML training jobs into Venice and then query the data for use during online inference workloads.

README:

Venice is a derived data storage platform, providing the following characteristics:

- High throughput asynchronous ingestion from batch and streaming sources (e.g. Hadoop and Samza).

- Low latency online reads via remote queries or in-process caching.

- Active-active replication between regions with CRDT-based conflict resolution.

- Multi-cluster support within each region with operator-driven cluster assignment.

- Multi-tenancy, horizontal scalability and elasticity within each cluster.

The above makes Venice particularly suitable as the stateful component backing a Feature Store, such as Feathr. AI applications feed the output of their ML training jobs into Venice and then query the data for use during online inference workloads.

Venice is a system which straddles the offline, nearline and online worlds, as illustrated below.

You can add a dependency on Venice to any Java project as specified below. Note that, currently, Venice dependencies are not published on Maven Central and therefore require adding an extra repository definition. All published jars can be seen here. Usually, the project is released a few times per week.

Add the following to your build.gradle:

repositories {

mavenCentral()

maven {

name 'VeniceJFrog'

url 'https://linkedin.jfrog.io/artifactory/venice'

}

}

dependencies {

implementation 'com.linkedin.venice:venice-client:0.4.455'

}Add the following to your pom.xml:

<project>

...

<repositories>

...

<repository>

<id>venice-jfrog</id>

<name>VeniceJFrog</name>

<url>https://linkedin.jfrog.io/artifactory/venice</url>

</repository>

</repositories>

...

<dependencies>

...

<dependency>

<groupId>com.linkedin.venice</groupId>

<artifactId>venice-client</artifactId>

<version>0.4.455</version>

<scope>compile</scope>

</dependency>

</dependencies>

</project>

From the user's perspective, Venice provides a variety of read and write APIs. These are fully decoupled from one another, in the sense that no matter which write APIs are used, any of the read APIs are available.

Furthermore, Venice provides a rich spectrum of options in terms of simplicity on one end, and sophistication on the other. It is easy to get started with the simpler APIs, and later on decide to enhance the use case via more advanced APIs, either in addition to or instead of the simpler ones. In this way, Venice can accompany users as their requirements evolve, in terms of scale, latency and functionality.

The following diagram presents these APIs and summarizes the components coming into play to make them work.

The Venice write path can be broken down into three granularities: full dataset swap, insertion of many rows into an existing dataset, and updates of some columns of some rows. All three granularities are supported by Hadoop and Samza. In addition, any service can asynchronously produce single row inserts and updates as well, using the Online Producer library. The table below summarizes the write operations supported by each platform:

| Hadoop | Samza | Any Service | |

|---|---|---|---|

| Full dataset swap | ✅ | ✅ | |

| Insertion of some rows into an existing dataset | ✅ | ✅ | ✅ |

| Updates to some columns of some rows | ✅ | ✅ | ✅ |

Moreover, the three granularities of write operations can all be mixed within a single dataset. A dataset which gets full dataset swaps in addition to row insertion or row updates is called hybrid.

As part of configuring a store to be hybrid, an important concept is the rewind time, which defines how far back should recent real-time writes be rewound and applied on top of the new generation of the dataset getting swapped in.

Leveraging this mechanism, it is possible to overlay the output of a stream processing job on top of that of a batch job. If using partial updates, then it is possible to have some of the columns be updated in real-time and some in batch, and these two sets of columns can either overlap or be disjoint, as desired.

Write Compute includes two kinds of operations, which can be performed on the value associated with a given key:

- Partial update: set the content of a field within the value.

- Collection merging: add or remove entries in a set or map.

N.B.: Currently, write compute is only supported in conjunction with active-passive replication. Support for active-active replication is under development.

Venice supports the following read APIs:

- Single get: get the value associated with a single key

- Batch get: get the values associated with a set of keys

-

Read compute: project some fields and/or compute some function on the fields of values associated with a set of

keys. When using the read compute DSL, the following functions are currently supported:

- Dot product: perform a dot product on the float vector stored in a given field, against another float vector provided as query param, and return the resulting scalar.

- Cosine similarity: perform a cosine similarity on the float vector stored in a given field, against another float vector provided as query param, and return the resulting scalar.

- Hadamard product: perform a Hadamard product on the float vector stored in a given field, against another float vector provided as query param, and return the resulting vector.

- Collection count: return the number of items in the collection stored in a given field.

There are two main modes for accessing Venice data:

-

Classical Venice (stateless): You can perform remote queries against Venice's distributed backend service. If

using read compute operations in this mode, the queries are pushed down to the backend and only the computation

results are returned to the client. There are two clients capable of such remote queries:

- Thin Client: This is the simplest client, which sends requests to the router tier, which itself sends requests to the server tier.

- Fast Client: This client is partitioning-aware, and can therefore send requests directly to the correct server instance, skipping the routing tier. Note that this client is still under development and may not be as stable nor at functional parity with the Thin Client.

- Da Vinci (stateful): Alternatively, you can eagerly load some or all partitions of the dataset and perform queries against the resulting local cache. Future updates to the data continue to be streamed in and applied to the local cache.

The table below summarizes the clients' characteristics:

| Network Hops | Typical latency (p99) | State Footprint | |

|---|---|---|---|

| Thin Client | 2 | < 10 milliseconds | Stateless |

| Fast Client | 1 | < 2 milliseconds | Minimal (routing metadata only) |

| Da Vinci Client (RAM + SSD) | 0 | < 1 millisecond | Bounded RAM, full dataset on SSD |

| Da Vinci Client (all-in-RAM) | 0 | < 10 microseconds | Full dataset in RAM |

All of these clients share the same read APIs described above. This enables users to make changes to their cost/performance tradeoff without needing to rewrite their applications.

The Open Sourcing Venice blog and conference talk are good starting points to get an overview of what use cases and scale can Venice support. For more Venice posts, talks and podcasts, see our Learn More page.

Refer to the Venice quickstart to create your own Venice cluster and play around with some features like creating a data store, batch push, incremental push, and single get. We recommend sticking to our latest stable release.

Feel free to engage with the community using our:

-

Slack workspace

- Archived and publicly searchable on Linen

-

LinkedIn group

-

GitHub issues

-

Contributor's guide

Follow us to hear more about the progress of the Venice project and community:

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for venice

Similar Open Source Tools

venice

Venice is a derived data storage platform, providing the following characteristics: 1. High throughput asynchronous ingestion from batch and streaming sources (e.g. Hadoop and Samza). 2. Low latency online reads via remote queries or in-process caching. 3. Active-active replication between regions with CRDT-based conflict resolution. 4. Multi-cluster support within each region with operator-driven cluster assignment. 5. Multi-tenancy, horizontal scalability and elasticity within each cluster. The above makes Venice particularly suitable as the stateful component backing a Feature Store, such as Feathr. AI applications feed the output of their ML training jobs into Venice and then query the data for use during online inference workloads.

magpie

This is the official repository for 'Alignment Data Synthesis from Scratch by Prompting Aligned LLMs with Nothing'. Magpie is a tool designed to synthesize high-quality instruction data at scale by extracting it directly from an aligned Large Language Models (LLMs). It aims to democratize AI by generating large-scale alignment data and enhancing the transparency of model alignment processes. Magpie has been tested on various model families and can be used to fine-tune models for improved performance on alignment benchmarks such as AlpacaEval, ArenaHard, and WildBench.

SheetCopilot

SheetCopilot is an assistant agent that manipulates spreadsheets by following user commands. It leverages Large Language Models (LLMs) to interact with spreadsheets like a human expert, enabling non-expert users to complete tasks on complex software such as Google Sheets and Excel via a language interface. The tool observes spreadsheet states, polishes generated solutions based on external action documents and error feedback, and aims to improve success rate and efficiency. SheetCopilot offers a dataset with diverse task categories and operations, supporting operations like entry & manipulation, management, formatting, charts, and pivot tables. Users can interact with SheetCopilot in Excel or Google Sheets, executing tasks like calculating revenue, creating pivot tables, and plotting charts. The tool's evaluation includes performance comparisons with leading LLMs and VBA-based methods on specific datasets, showcasing its capabilities in controlling various aspects of a spreadsheet.

mosec

Mosec is a high-performance and flexible model serving framework for building ML model-enabled backend and microservices. It bridges the gap between any machine learning models you just trained and the efficient online service API. * **Highly performant** : web layer and task coordination built with Rust 🦀, which offers blazing speed in addition to efficient CPU utilization powered by async I/O * **Ease of use** : user interface purely in Python 🐍, by which users can serve their models in an ML framework-agnostic manner using the same code as they do for offline testing * **Dynamic batching** : aggregate requests from different users for batched inference and distribute results back * **Pipelined stages** : spawn multiple processes for pipelined stages to handle CPU/GPU/IO mixed workloads * **Cloud friendly** : designed to run in the cloud, with the model warmup, graceful shutdown, and Prometheus monitoring metrics, easily managed by Kubernetes or any container orchestration systems * **Do one thing well** : focus on the online serving part, users can pay attention to the model optimization and business logic

BambooAI

BambooAI is a lightweight library utilizing Large Language Models (LLMs) to provide natural language interaction capabilities, much like a research and data analysis assistant enabling conversation with your data. You can either provide your own data sets, or allow the library to locate and fetch data for you. It supports Internet searches and external API interactions.

gepa

GEPA (Genetic-Pareto) is a framework for optimizing arbitrary systems composed of text components like AI prompts, code snippets, or textual specs against any evaluation metric. It employs LLMs to reflect on system behavior, using feedback from execution and evaluation traces to drive targeted improvements. Through iterative mutation, reflection, and Pareto-aware candidate selection, GEPA evolves robust, high-performing variants with minimal evaluations, co-evolving multiple components in modular systems for domain-specific gains. The repository provides the official implementation of the GEPA algorithm as proposed in the paper titled 'GEPA: Reflective Prompt Evolution Can Outperform Reinforcement Learning'.

kafka-ml

Kafka-ML is a framework designed to manage the pipeline of Tensorflow/Keras and PyTorch machine learning models on Kubernetes. It enables the design, training, and inference of ML models with datasets fed through Apache Kafka, connecting them directly to data streams like those from IoT devices. The Web UI allows easy definition of ML models without external libraries, catering to both experts and non-experts in ML/AI.

MegatronApp

MegatronApp is a toolchain built around the Megatron-LM training framework, offering performance tuning, slow-node detection, and training-process visualization. It includes modules like MegaScan for anomaly detection, MegaFBD for forward-backward decoupling, MegaDPP for dynamic pipeline planning, and MegaScope for visualization. The tool aims to enhance large-scale distributed training by providing valuable capabilities and insights.

mscclpp

MSCCL++ is a GPU-driven communication stack for scalable AI applications. It provides a highly efficient and customizable communication stack for distributed GPU applications. MSCCL++ redefines inter-GPU communication interfaces, delivering a highly efficient and customizable communication stack for distributed GPU applications. Its design is specifically tailored to accommodate diverse performance optimization scenarios often encountered in state-of-the-art AI applications. MSCCL++ provides communication abstractions at the lowest level close to hardware and at the highest level close to application API. The lowest level of abstraction is ultra light weight which enables a user to implement logics of data movement for a collective operation such as AllReduce inside a GPU kernel extremely efficiently without worrying about memory ordering of different ops. The modularity of MSCCL++ enables a user to construct the building blocks of MSCCL++ in a high level abstraction in Python and feed them to a CUDA kernel in order to facilitate the user's productivity. MSCCL++ provides fine-grained synchronous and asynchronous 0-copy 1-sided abstracts for communication primitives such as `put()`, `get()`, `signal()`, `flush()`, and `wait()`. The 1-sided abstractions allows a user to asynchronously `put()` their data on the remote GPU as soon as it is ready without requiring the remote side to issue any receive instruction. This enables users to easily implement flexible communication logics, such as overlapping communication with computation, or implementing customized collective communication algorithms without worrying about potential deadlocks. Additionally, the 0-copy capability enables MSCCL++ to directly transfer data between user's buffers without using intermediate internal buffers which saves GPU bandwidth and memory capacity. MSCCL++ provides consistent abstractions regardless of the location of the remote GPU (either on the local node or on a remote node) or the underlying link (either NVLink/xGMI or InfiniBand). This simplifies the code for inter-GPU communication, which is often complex due to memory ordering of GPU/CPU read/writes and therefore, is error-prone.

AgentLab

AgentLab is an open, easy-to-use, and extensible framework designed to accelerate web agent research. It provides features for developing and evaluating agents on various benchmarks supported by BrowserGym. The framework allows for large-scale parallel agent experiments using ray, building blocks for creating agents over BrowserGym, and a unified LLM API for OpenRouter, OpenAI, Azure, or self-hosted using TGI. AgentLab also offers reproducibility features, a unified LeaderBoard, and supports multiple benchmarks like WebArena, WorkArena, WebLinx, VisualWebArena, AssistantBench, GAIA, Mind2Web-live, and MiniWoB.

NineRec

NineRec is a benchmark dataset suite for evaluating transferable recommendation models. It provides datasets for pre-training and transfer learning in recommender systems, focusing on multimodal and foundation model tasks. The dataset includes user-item interactions, item texts in multiple languages, item URLs, and raw images. Researchers can use NineRec to develop more effective and efficient methods for pre-training recommendation models beyond end-to-end training. The dataset is accompanied by code for dataset preparation, training, and testing in PyTorch environment.

qlib

Qlib is an open-source, AI-oriented quantitative investment platform that supports diverse machine learning modeling paradigms, including supervised learning, market dynamics modeling, and reinforcement learning. It covers the entire chain of quantitative investment, from alpha seeking to order execution. The platform empowers researchers to explore ideas and implement productions using AI technologies in quantitative investment. Qlib collaboratively solves key challenges in quantitative investment by releasing state-of-the-art research works in various paradigms. It provides a full ML pipeline for data processing, model training, and back-testing, enabling users to perform tasks such as forecasting market patterns, adapting to market dynamics, and modeling continuous investment decisions.

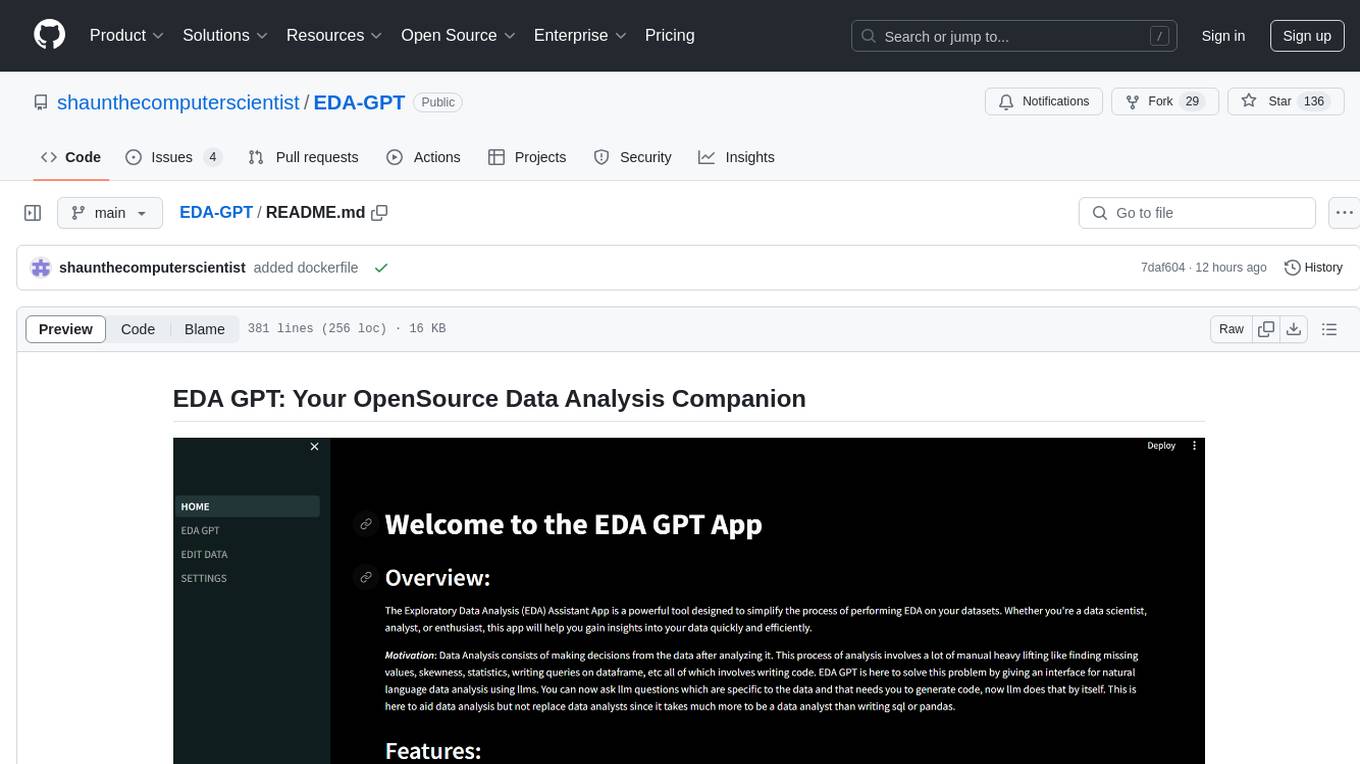

EDA-GPT

EDA GPT is an open-source data analysis companion that offers a comprehensive solution for structured and unstructured data analysis. It streamlines the data analysis process, empowering users to explore, visualize, and gain insights from their data. EDA GPT supports analyzing structured data in various formats like CSV, XLSX, and SQLite, generating graphs, and conducting in-depth analysis of unstructured data such as PDFs and images. It provides a user-friendly interface, powerful features, and capabilities like comparing performance with other tools, analyzing large language models, multimodal search, data cleaning, and editing. The tool is optimized for maximal parallel processing, searching internet and documents, and creating analysis reports from structured and unstructured data.

guidellm

GuideLLM is a powerful tool for evaluating and optimizing the deployment of large language models (LLMs). By simulating real-world inference workloads, GuideLLM helps users gauge the performance, resource needs, and cost implications of deploying LLMs on various hardware configurations. This approach ensures efficient, scalable, and cost-effective LLM inference serving while maintaining high service quality. Key features include performance evaluation, resource optimization, cost estimation, and scalability testing.

fuse-med-ml

FuseMedML is a Python framework designed to accelerate machine learning-based discovery in the medical field by promoting code reuse. It provides a flexible design concept where data is stored in a nested dictionary, allowing easy handling of multi-modality information. The framework includes components for creating custom models, loss functions, metrics, and data processing operators. Additionally, FuseMedML offers 'batteries included' key components such as fuse.data for data processing, fuse.eval for model evaluation, and fuse.dl for reusable deep learning components. It supports PyTorch and PyTorch Lightning libraries and encourages the creation of domain extensions for specific medical domains.

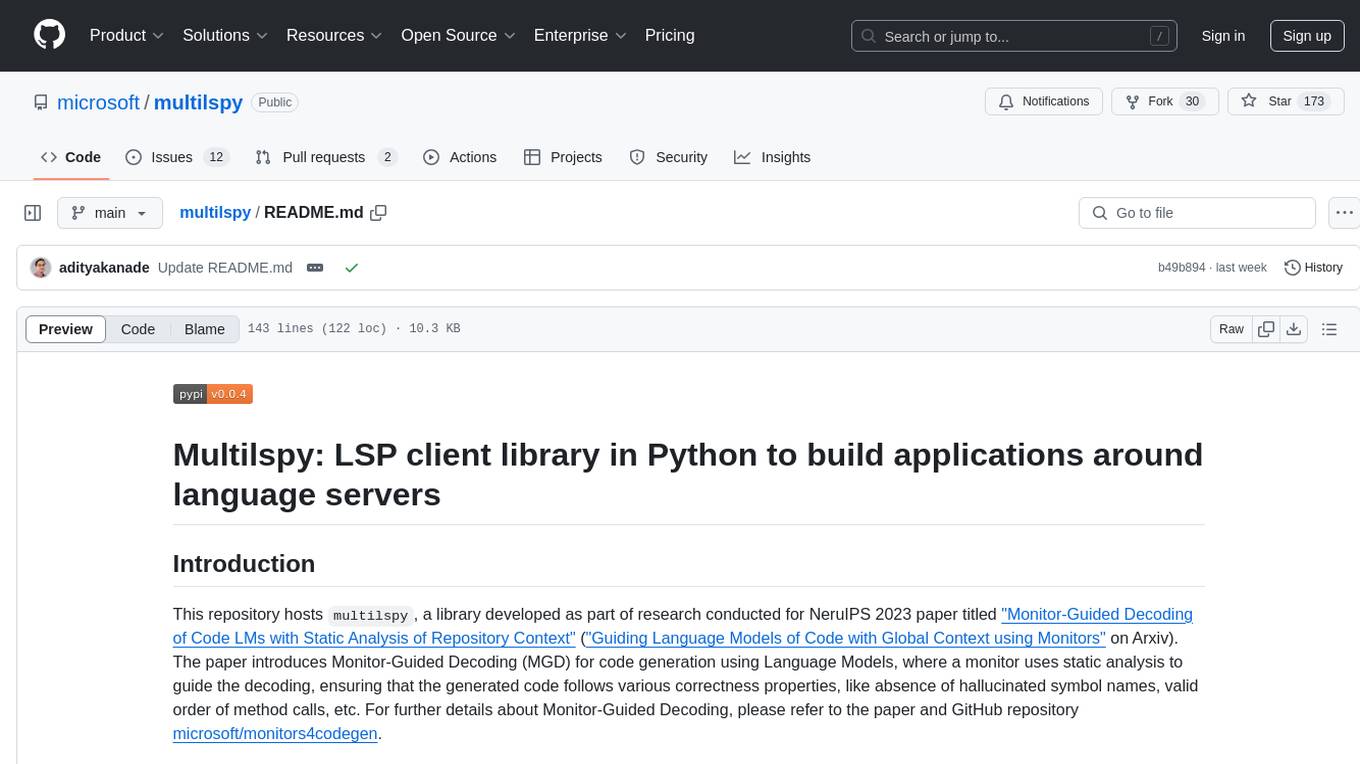

multilspy

Multilspy is a Python library developed for research purposes to facilitate the creation of language server clients for querying and obtaining results of static analyses from various language servers. It simplifies the process by handling server setup, communication, and configuration parameters, providing a common interface for different languages. The library supports features like finding function/class definitions, callers, completions, hover information, and document symbols. It is designed to work with AI systems like Large Language Models (LLMs) for tasks such as Monitor-Guided Decoding to ensure code generation correctness and boost compilability.

For similar tasks

venice

Venice is a derived data storage platform, providing the following characteristics: 1. High throughput asynchronous ingestion from batch and streaming sources (e.g. Hadoop and Samza). 2. Low latency online reads via remote queries or in-process caching. 3. Active-active replication between regions with CRDT-based conflict resolution. 4. Multi-cluster support within each region with operator-driven cluster assignment. 5. Multi-tenancy, horizontal scalability and elasticity within each cluster. The above makes Venice particularly suitable as the stateful component backing a Feature Store, such as Feathr. AI applications feed the output of their ML training jobs into Venice and then query the data for use during online inference workloads.

pinecone-ts-client

The official Node.js client for Pinecone, written in TypeScript. This client library provides a high-level interface for interacting with the Pinecone vector database service. With this client, you can create and manage indexes, upsert and query vector data, and perform other operations related to vector search and retrieval. The client is designed to be easy to use and provides a consistent and idiomatic experience for Node.js developers. It supports all the features and functionality of the Pinecone API, making it a comprehensive solution for building vector-powered applications in Node.js.

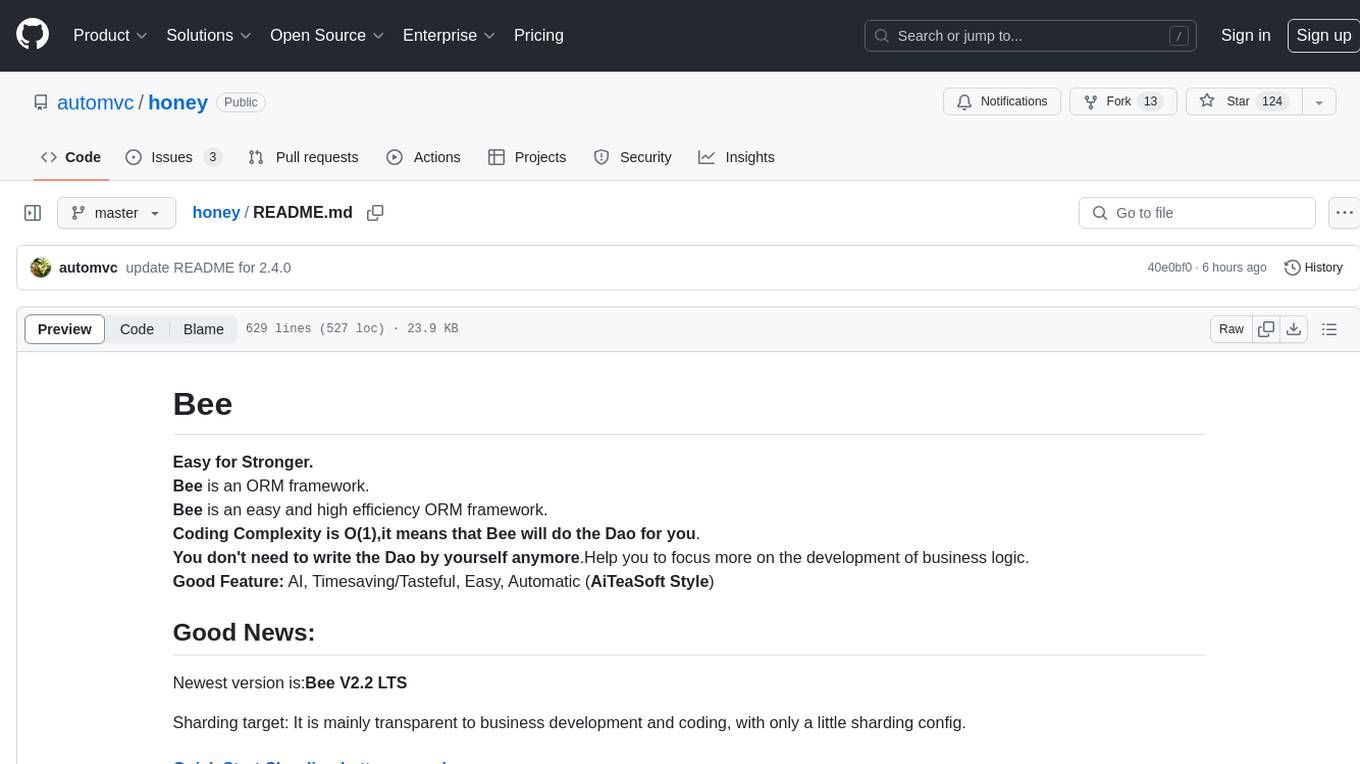

honey

Bee is an ORM framework that provides easy and high-efficiency database operations, allowing developers to focus on business logic development. It supports various databases and features like automatic filtering, partial field queries, pagination, and JSON format results. Bee also offers advanced functionalities like sharding, transactions, complex queries, and MongoDB ORM. The tool is designed for rapid application development in Java, offering faster development for Java Web and Spring Cloud microservices. The Enterprise Edition provides additional features like financial computing support, automatic value insertion, desensitization, dictionary value conversion, multi-tenancy, and more.

llama_index

LlamaIndex is a data framework for building LLM applications. It provides tools for ingesting, structuring, and querying data, as well as integrating with LLMs and other tools. LlamaIndex is designed to be easy to use for both beginner and advanced users, and it provides a comprehensive set of features for building LLM applications.

wandbot

Wandbot is a question-answering bot designed for Weights & Biases documentation. It employs Retrieval Augmented Generation with a ChromaDB backend for efficient responses. The bot features periodic data ingestion, integration with Discord and Slack, and performance monitoring through logging. It has a fallback mechanism for model selection and is evaluated based on retrieval accuracy and model-generated responses. The implementation includes creating document embeddings, constructing the Q&A RAGPipeline, model selection, deployment on FastAPI, Discord, and Slack, logging and analysis with Weights & Biases Tables, and performance evaluation.

data-engineering-zoomcamp

Data Engineering Zoomcamp is a comprehensive course covering various aspects of data engineering, including data ingestion, workflow orchestration, data warehouse, analytics engineering, batch processing, and stream processing. The course provides hands-on experience with tools like Python, Rust, Terraform, Airflow, BigQuery, dbt, PySpark, Kafka, and more. Students will learn how to work with different data technologies to build scalable and efficient data pipelines for analytics and processing. The course is designed for individuals looking to enhance their data engineering skills and gain practical experience in working with big data technologies.

stakgraph

Stakgraph is a source code parser that utilizes treesitter, LSP, and neo4j to create software knowledge graphs for AI agents. It supports various languages such as Golang, React, Ruby on Rails, Typescript, Python, Swift, Kotlin, Rust, Java, Angular, and Svelte. Users can parse repositories, link endpoints, requests, and E2E tests, and ingest data to generate comprehensive graphs. The tool leverages the Language Server Protocol for node connections in the graph, enabling seamless integration and analysis of codebases.

parseable

Parseable is a full stack observability platform designed to ingest, analyze, and extract insights from various types of telemetry data. It can be run locally, in the cloud, or as a managed service. The platform offers features like high availability, smart cache, alerts, role-based access control, OAuth2 support, and OpenTelemetry integration. Users can easily ingest data, query logs, and access the dashboard to monitor and analyze data. Parseable provides a seamless experience for observability and monitoring tasks.

For similar jobs

db2rest

DB2Rest is a modern low-code REST DATA API platform that simplifies the development of intelligent applications. It seamlessly integrates existing and new databases with language models (LMs/LLMs) and vector stores, enabling the rapid delivery of context-aware, reasoning applications without vendor lock-in.

mage-ai

Mage is an open-source data pipeline tool for transforming and integrating data. It offers an easy developer experience, engineering best practices built-in, and data as a first-class citizen. Mage makes it easy to build, preview, and launch data pipelines, and provides observability and scaling capabilities. It supports data integrations, streaming pipelines, and dbt integration.

airbyte

Airbyte is an open-source data integration platform that makes it easy to move data from any source to any destination. With Airbyte, you can build and manage data pipelines without writing any code. Airbyte provides a library of pre-built connectors that make it easy to connect to popular data sources and destinations. You can also create your own connectors using Airbyte's no-code Connector Builder or low-code CDK. Airbyte is used by data engineers and analysts at companies of all sizes to build and manage their data pipelines.

labelbox-python

Labelbox is a data-centric AI platform for enterprises to develop, optimize, and use AI to solve problems and power new products and services. Enterprises use Labelbox to curate data, generate high-quality human feedback data for computer vision and LLMs, evaluate model performance, and automate tasks by combining AI and human-centric workflows. The academic & research community uses Labelbox for cutting-edge AI research.

telemetry-airflow

This repository codifies the Airflow cluster that is deployed at workflow.telemetry.mozilla.org (behind SSO) and commonly referred to as "WTMO" or simply "Airflow". Some links relevant to users and developers of WTMO: * The `dags` directory in this repository contains some custom DAG definitions * Many of the DAGs registered with WTMO don't live in this repository, but are instead generated from ETL task definitions in bigquery-etl * The Data SRE team maintains a WTMO Developer Guide (behind SSO)

airflow

Apache Airflow (or simply Airflow) is a platform to programmatically author, schedule, and monitor workflows. When workflows are defined as code, they become more maintainable, versionable, testable, and collaborative. Use Airflow to author workflows as directed acyclic graphs (DAGs) of tasks. The Airflow scheduler executes your tasks on an array of workers while following the specified dependencies. Rich command line utilities make performing complex surgeries on DAGs a snap. The rich user interface makes it easy to visualize pipelines running in production, monitor progress, and troubleshoot issues when needed.

airbyte-platform

Airbyte is an open-source data integration platform that makes it easy to move data from any source to any destination. With Airbyte, you can build and manage data pipelines without writing any code. Airbyte provides a library of pre-built connectors that make it easy to connect to popular data sources and destinations. You can also create your own connectors using Airbyte's low-code Connector Development Kit (CDK). Airbyte is used by data engineers and analysts at companies of all sizes to move data for a variety of purposes, including data warehousing, data analysis, and machine learning.

chronon

Chronon is a platform that simplifies and improves ML workflows by providing a central place to define features, ensuring point-in-time correctness for backfills, simplifying orchestration for batch and streaming pipelines, offering easy endpoints for feature fetching, and guaranteeing and measuring consistency. It offers benefits over other approaches by enabling the use of a broad set of data for training, handling large aggregations and other computationally intensive transformations, and abstracting away the infrastructure complexity of data plumbing.