llm_model_hub

Stars: 55

Model Hub V2 is a one-stop platform for model fine-tuning, deployment, and debugging without code, providing users with a visual interface to quickly validate the effects of fine-tuning various open-source models, facilitating rapid experimentation and decision-making, and lowering the threshold for users to fine-tune large models. For detailed instructions, please refer to the Feishu documentation.

README:

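Model Hub V2 is a no-code visual platform for one-stop model fine-tuning, deployment, and debugging. It helps users quickly validate the results of fine-tuning open-source models, supports rapid experimentation and decision-making, and lowers the barrier to fine-tuning large models. For details, see the Feishu user guide.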

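- Open CloudFormation, create a stack, and upload the deployment file cloudformation-template.yaml.

- Required fields: a stack name (for example, modelhub) and an EC2 key pair (create one in the EC2 console beforehand if you do not have one).

- Fill in the other fields as needed. You can also add them later in backend/.env; after editing, restart the services for the change to take effect:

pm2 restart all

- Keep clicking Next until you reach the acknowledgement checkbox, select it, and submit.

- Wait for the stack to finish creating, find the PublicIP address in the stack Outputs tab, and open http://{ip}:3000 to reach Model Hub. The default username is demo_user.

- To get the password, open the AWS Systems Manager -> Parameter Store console, where a new parameter /modelhub/RandomPassword appears. Open it and turn on the Show decrypted value toggle to read the login password. The default username is demo_user, as noted in the previous step.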
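The console steps for finding the IP address and password can also be done from a terminal. The following is a minimal sketch, assuming the AWS CLI is installed and configured, that the stack output key is literally PublicIP, and that the parameter path is /modelhub/RandomPassword as described above. The function names are hypothetical helpers, not part of the project.

```shell
# Print the PublicIP output of a CloudFormation stack.
# Assumes the output key is named "PublicIP", as shown in the Outputs tab.
modelhub_public_ip() {
    stack_name="${1:-modelhub}"
    aws cloudformation describe-stacks \
        --stack-name "$stack_name" \
        --query "Stacks[0].Outputs[?OutputKey=='PublicIP'].OutputValue" \
        --output text
}

# Print the decrypted login password stored in Parameter Store.
modelhub_password() {
    aws ssm get-parameter \
        --name "/modelhub/RandomPassword" \
        --with-decryption \
        --query "Parameter.Value" \
        --output text
}

# Build the front-end URL from an IP address.
modelhub_url() {
    printf 'http://%s:3000\n' "$1"
}
```

For example, `modelhub_url "$(modelhub_public_ip modelhub)"` prints the address to open in a browser, and `modelhub_password` prints the password for demo_user.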

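⚠️ Note: after the stack shows as deployed, the EC2 instance still needs 8-10 minutes to run its setup scripts automatically. If the page does not load, wait 8-10 minutes and refresh.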
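Instead of refreshing the page by hand, the wait can be scripted. This is a rough sketch, assuming curl is available and that an HTTP response on port 3000 means the front end is up. The function name and the default attempt count and interval are illustrative choices, not values from the project.

```shell
# Poll a URL until it responds or the attempts run out.
# Usage: wait_for_url <url> [max_attempts] [sleep_seconds]
wait_for_url() {
    url="$1"
    max_attempts="${2:-40}"
    sleep_seconds="${3:-30}"
    attempt=1
    while [ "$attempt" -le "$max_attempts" ]; do
        # -s: silent, -f: treat HTTP errors as failure, -o /dev/null: discard the body
        if curl -sf -o /dev/null --max-time 10 "$url"; then
            echo "ready after $attempt attempt(s)"
            return 0
        fi
        attempt=$((attempt + 1))
        sleep "$sleep_seconds"
    done
    echo "not ready after $max_attempts attempt(s)" >&2
    return 1
}
```

With the defaults, `wait_for_url "http://<PublicIP>:3000"` retries every 30 seconds for up to 20 minutes, which comfortably covers the 8-10 minute setup window.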

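- GitHub is not directly accessible from China regions, so deployment takes two steps: first use CloudFormation to create the EC2 server and role, then download the project code, upload it to the EC2 server, and run the one-click install script.

- First, open CloudFormation, create a stack, and upload the deployment file cloudformation-template-cn.yaml.

- Enter a stack name (for example, modelhub) and select a key pair to use for SSH access to the EC2 instance (create one in the EC2 console beforehand if you do not have one).

- Deployment takes a few minutes. On the Outputs tab, note the EC2 PublicIP and SageMakerRoleArn values; you will need them later.

- On a machine that can reach GitHub, run the command below to download the code, package it as a zip file, and upload it to /home/ubuntu/ on the EC2 server.

⚠️ Note: the code must be downloaded with --recurse-submodule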

git clone --recurse-submodule https://github.com/aws-samples/llm_model_hub.git

- Zip the project and upload it to /home/ubuntu on the EC2 server created in step 1

zip -r llm_model_hub.zip llm_model_hub/

scp -i <path_to_your_keypair_file> llm_model_hub.zip ubuntu@<PublicIP from step 1>:~/

- From a local terminal, SSH into the EC2 instance created in step 1. Add -o ServerAliveInterval=60 so the terminal does not time out and disconnect while the install script runs

ssh -i <path_to_your_keypair_file> -o ServerAliveInterval=60 ubuntu@<PublicIP from step 1>

- After logging in to the EC2 instance, unzip the uploaded archive

sudo apt update

sudo apt install unzip

unzip llm_model_hub.zip

- Set the environment variable

export SageMakerRoleArn=<SageMakerRoleArn from the CloudFormation output in step 1, e.g. arn:aws-cn:iam::123456789012:role/sagemaker_exection_role>

- (Optional) To use SwanLab or wandb as the metrics dashboard, set the variables below. You can also add them to backend/.env later and run pm2 restart all to restart the services

export SWANLAB_API_KEY=<SWANLAB_API_KEY>

export WANDB_API_KEY=<WANDB_API_KEY>

export WANDB_BASE_URL=<WANDB_BASE_URL>

- Run the one-click deployment script

cd /home/ubuntu/llm_model_hub

bash cn-region-deploy.sh

The script takes about 40-60 minutes to finish, depending on the speed of the Docker image registry. The installation log is written to /home/ubuntu/setup.log.
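Because the deployment script runs for a long time, it may be worth checking SageMakerRoleArn before starting it. The sketch below assumes the standard IAM role ARN layout (arn:<partition>:iam::<12-digit account id>:role/<name>, with an empty region field). The function names are hypothetical and are not part of cn-region-deploy.sh.

```shell
# Return 0 if the argument looks like an IAM role ARN.
# Pattern: arn:<partition>:iam::<12-digit account id>:role/<role name>
is_role_arn() {
    printf '%s\n' "$1" | grep -Eq '^arn:(aws|aws-cn|aws-us-gov):iam::[0-9]{12}:role/.+$'
}

# Refuse to continue if SageMakerRoleArn is missing or malformed.
check_role_arn() {
    if ! is_role_arn "${SageMakerRoleArn:-}"; then
        echo "SageMakerRoleArn is missing or malformed: '${SageMakerRoleArn:-}'" >&2
        return 1
    fi
    echo "SageMakerRoleArn looks valid"
}
```

One possible use is `check_role_arn && bash cn-region-deploy.sh`, so the script only starts when the variable is set to something ARN-shaped.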

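- Access

- Once everything is deployed and the front end has started, open http://{ec2 PublicIP}:3000 in a browser. The username and random password are in /home/ubuntu/setup.log.

- If you need port forwarding, see the nginx section of the backend configuration.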

- Hardware: one EC2 instance, m5.xlarge, 200 GB EBS storage

- OS: Ubuntu 22.04

- Permissions:

- In IAM, create an EC2 role named adminrole-for-ec2.

- Select trust type: AWS service, service: EC2.

- Attach the following two policies: AmazonSageMakerFullAccess and CloudWatchLogsFullAccess.

- Attach the role to the EC2 instance.
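The role setup above can also be scripted with the AWS CLI. This is a sketch under assumptions: the trust policy uses the ec2.amazonaws.com service principal (check the correct principal for your partition), the instance profile reuses the role name, and the function names are hypothetical. The partition argument exists because managed policy ARNs begin with arn:aws-cn in China regions.

```shell
# Create the EC2 role and attach the two managed policies named above.
# Usage: create_modelhub_ec2_role [role_name] [partition]
create_modelhub_ec2_role() {
    role_name="${1:-adminrole-for-ec2}"
    partition="${2:-aws}"
    # Trust policy: let the EC2 service assume this role (principal is an assumption).
    trust_policy='{"Version":"2012-10-17","Statement":[{"Effect":"Allow","Principal":{"Service":"ec2.amazonaws.com"},"Action":"sts:AssumeRole"}]}'
    aws iam create-role \
        --role-name "$role_name" \
        --assume-role-policy-document "$trust_policy" || return 1
    for policy in AmazonSageMakerFullAccess CloudWatchLogsFullAccess; do
        aws iam attach-role-policy \
            --role-name "$role_name" \
            --policy-arn "arn:${partition}:iam::aws:policy/${policy}" || return 1
    done
}

# Wrap the role in an instance profile and associate it with an instance.
# Usage: attach_role_to_instance <instance_id> [role_name]
attach_role_to_instance() {
    instance_id="$1"
    role_name="${2:-adminrole-for-ec2}"
    aws iam create-instance-profile --instance-profile-name "$role_name" || return 1
    aws iam add-role-to-instance-profile \
        --instance-profile-name "$role_name" --role-name "$role_name" || return 1
    aws ec2 associate-iam-instance-profile \
        --instance-id "$instance_id" \
        --iam-instance-profile "Name=$role_name"
}
```

In a China region the first call would be `create_modelhub_ec2_role adminrole-for-ec2 aws-cn`; the instance ID passed to the second function comes from the EC2 console.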

{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": [
        "s3:GetObject",
        "s3:PutObject",
        "s3:DeleteObject",
        "s3:ListBucket",
        "s3:CreateBucket"
      ],
      "Resource": [
        "*"
      ]
    },
    {
      "Effect": "Allow",
      "Action": [
        "ssmmessages:CreateControlChannel"
      ],
      "Resource": [
        "*"
      ]
    }
  ]
}

- SSH into the EC2 instance.

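- In a China region, download the code manually, package it, and transfer it to the EC2 instance.

- On a machine that can reach GitHub, run the command below to download the code, package it as a zip file, and upload it to /home/ubuntu/ on the EC2 server.

- Download the code with --recurse-submodule

git clone --recurse-submodule https://github.com/aws-samples/llm_model_hub.git

unzip llm_model_hub.zip

export SageMakerRoleArn=<full ARN of the sagemaker_exection_role created in the steps above, e.g. arn:aws-cn:iam::123456789012:role/sagemaker_exection_role>

- (Optional) To use SwanLab or wandb as the metrics dashboard, set the variables below. You can also add them to backend/.env later and run pm2 restart all to restart the services

export SWANLAB_API_KEY=<SWANLAB_API_KEY>

export WANDB_API_KEY=<WANDB_API_KEY>

export WANDB_BASE_URL=<WANDB_BASE_URL>

cd /home/ubuntu/llm_model_hub

bash cn-region-deploy.sh

The script takes about 40-60 minutes to finish, depending on the speed of the Docker image registry. The installation log is written to /home/ubuntu/setup.log.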
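If the optional keys are added to backend/.env after installation, a small helper avoids duplicate entries when a key is set more than once. This is a sketch that assumes backend/.env holds plain KEY=VALUE lines; the function name is hypothetical.

```shell
# Set KEY=VALUE in an env file, replacing an existing KEY line if present.
# Usage: set_env_var <file> <key> <value>
set_env_var() {
    file="$1"
    key="$2"
    value="$3"
    touch "$file"
    tmp="$(mktemp)"
    # Keep every line that does not assign this key, then append the new value.
    grep -v "^${key}=" "$file" > "$tmp" || true
    printf '%s=%s\n' "$key" "$value" >> "$tmp"
    # Write back in place so the original file's permissions are kept.
    cat "$tmp" > "$file"
    rm -f "$tmp"
}
```

For example, `set_env_var /home/ubuntu/llm_model_hub/backend/.env WANDB_API_KEY <WANDB_API_KEY>` followed by `pm2 restart all` applies the new key.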

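- Once everything is deployed and the front end has started, open http://{ip}:3000 in a browser. The username and random password are in /home/ubuntu/setup.log.

- If you need port forwarding, see the nginx section of the backend configuration.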

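- Method 1. Download the new CloudFormation template and redeploy. A brand-new Model Hub takes about 12 minutes to deploy. (With this method, data from previous jobs is lost.)

- Method 2.

- Use the one-click upgrade script (supported since version 1.0.6):

cd /home/ubuntu/llm_model_hub/backend/

bash 03.upgrade.sh

- Method 3. Manual update:

- Update the code and rebuild the BYOC image

git pull

git submodule update --remote

cd /home/ubuntu/llm_model_hub/backend/byoc

bash build_and_push.sh

- Restart the services

pm2 restart all

- The update is complete.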
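The choice between the upgrade script and the manual steps can be sketched as below. It assumes the presence of backend/03.upgrade.sh in the checkout is a reasonable signal that the one-click script is available, and that git pull is run from the repository root. Both function names are hypothetical.

```shell
# Print which upgrade path applies to a checkout: "script" or "manual".
# Usage: upgrade_method [repo_dir]
upgrade_method() {
    repo_dir="${1:-/home/ubuntu/llm_model_hub}"
    if [ -f "$repo_dir/backend/03.upgrade.sh" ]; then
        echo "script"
    else
        echo "manual"
    fi
}

# Run the manual update steps in order, stopping at the first failure.
# The body runs in a subshell so the caller's working directory is unchanged.
manual_upgrade() (
    repo_dir="${1:-/home/ubuntu/llm_model_hub}"
    cd "$repo_dir" &&
        git pull &&
        git submodule update --remote &&
        cd backend/byoc &&
        bash build_and_push.sh &&
        pm2 restart all
)
```

Chaining the steps with `&&` means the services are only restarted when the pull, submodule update, and image build have all succeeded.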

docker exec -it hub-mysql mysql -ullmdata -pllmdata

Once inside the MySQL CLI:

use llm;

show tables;

select * from USER_TABLE;
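For a quick check without opening the interactive CLI, the same queries can be passed with the mysql client's -e option. This sketch reuses the container name, credentials, and database shown above; the wrapper function name is hypothetical.

```shell
# Run one SQL statement against the llm database non-interactively.
# Usage: hub_sql "<statement>"
hub_sql() {
    docker exec hub-mysql mysql -ullmdata -pllmdata llm -e "$1"
}
```

For example, `hub_sql "show tables;"` lists the tables and `hub_sql "select * from USER_TABLE;"` prints the user table.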

THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM, OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE SOFTWARE.

Alternative AI tools for llm_model_hub

Similar Open Source Tools

evalplus

EvalPlus is a rigorous evaluation framework for LLM4Code, providing HumanEval+ and MBPP+ tests to evaluate large language models on code generation tasks. It offers precise evaluation and ranking, coding rigorousness analysis, and pre-generated code samples. Users can use EvalPlus to generate code solutions, post-process code, and evaluate code quality. The tool includes tools for code generation and test input generation using various backends.

herc.ai

Herc.ai is a powerful library for interacting with the Herc.ai API. It offers free access to users and supports all languages. Users can benefit from Herc.ai's features unlimitedly with a one-time subscription and API key. The tool provides functionalities for question answering and text-to-image generation, with support for various models and customization options. Herc.ai can be easily integrated into CLI, CommonJS, TypeScript, and supports beta models for advanced usage. Developed by FiveSoBes and Luppux Development.

browser4

Browser4 is a lightning-fast, coroutine-safe browser designed for AI integration with large language models. It offers ultra-fast automation, deep web understanding, and powerful data extraction APIs. Users can automate the browser, extract data at scale, and perform tasks like summarizing products, extracting product details, and finding specific links. The tool is developer-friendly, supports AI-powered automation, and provides advanced features like X-SQL for precise data extraction. It also offers RPA capabilities, browser control, and complex data extraction with X-SQL. Browser4 is suitable for web scraping, data extraction, automation, and AI integration tasks.

auto-round

AutoRound is an advanced weight-only quantization algorithm for low-bits LLM inference. It competes impressively against recent methods without introducing any additional inference overhead. The method adopts sign gradient descent to fine-tune rounding values and minmax values of weights in just 200 steps, often significantly outperforming SignRound with the cost of more tuning time for quantization. AutoRound is tailored for a wide range of models and consistently delivers noticeable improvements.

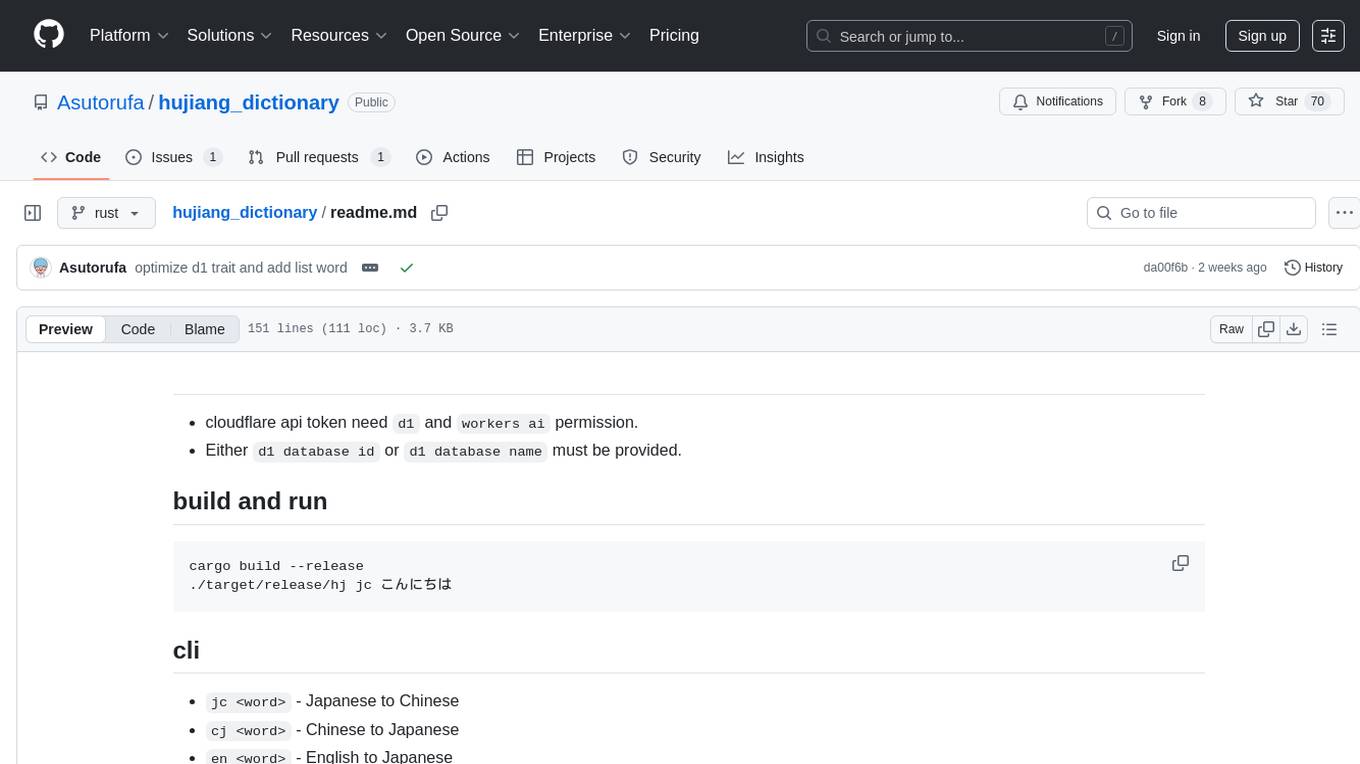

hujiang_dictionary

Hujiang Dictionary is a tool that provides translation services between Japanese, Chinese, and English. It supports various translation modes such as Japanese to Chinese, Chinese to Japanese, English to Japanese, and more. The tool utilizes cloud services like Telegram, Lambda, and Cloudflare Workers for different deployment options. Users can interact with the tool via a command-line interface (CLI) to perform translations and access online resources like weblio and Google Translate. Additionally, the tool offers a Telegram bot for users to access translation services conveniently. The tool also supports setting up and managing databases for storing translation data.

cool-admin-midway

Cool-admin (midway version) is a cool open-source backend permission management system that supports modular, plugin-based, rapid CRUD development. It facilitates the quick construction and iteration of backend management systems, deployable in various ways such as serverless, docker, and traditional servers. It features AI coding for generating APIs and frontend pages, flow orchestration for drag-and-drop functionality, modular and plugin-based design for clear and maintainable code. The tech stack includes Node.js, Midway.js, Koa.js, TypeScript for backend, and Vue.js, Element-Plus, JSX, Pinia, Vue Router for frontend. It offers friendly technology choices for both frontend and backend developers, with TypeScript syntax similar to Java and PHP for backend developers. The tool is suitable for those looking for a modern, efficient, and fast development experience.

ailoy

Ailoy is a lightweight library for building AI applications such as agent systems or RAG pipelines with ease. It enables AI features effortlessly, supporting AI models locally or via cloud APIs, multi-turn conversation, system message customization, reasoning-based workflows, tool calling capabilities, and built-in vector store support. It also supports running native-equivalent functionality in web browsers using WASM. The library is in early development stages and provides examples in the `examples` directory for inspiration on building applications with Agents.

twick

Twick is a comprehensive video editing toolkit built with modern web technologies. It is a monorepo containing multiple packages for video and image manipulation. The repository includes core utilities for media handling, a React-based canvas library for video and image editing, a video visualization and animation toolkit, a React component for video playback and control, timeline management and editing capabilities, a React-based video editor, and example implementations and usage demonstrations. Twick provides detailed API documentation and module information for developers. It offers easy integration with existing projects and allows users to build videos using the Twick Studio. The project follows a comprehensive style guide for naming conventions and code style across all packages.

qianfan-starter

WenXin-Starter is a spring-boot-starter for Baidu's 'WenXin Workshop' large model, facilitating quick integration of Baidu's AI capabilities. It provides complete integration with WenXin Workshop's official API documentation, supports WenShengTu, built-in conversation memory, and supports conversation streaming. It also supports QPS control for individual models and queuing mechanism, with upcoming plugin support.

aiotieba

Aiotieba is an asynchronous Python library for interacting with the Tieba API. It provides a comprehensive set of features for working with Tieba, including support for authentication, thread and post management, and image and file uploading. Aiotieba is well-documented and easy to use, making it a great choice for developers who want to build applications that interact with Tieba.

acte

Acte is a framework designed to build GUI-like tools for AI Agents. It aims to address the issues of cognitive load and degrees of freedom when interacting with multiple APIs in complex scenarios. By providing a graphical user interface (GUI) for Agents, Acte helps reduce cognitive load and constrain interaction, similar to how humans interact with computers through GUIs. The tool offers APIs for starting new sessions, executing actions, and displaying screens, accessible via HTTP requests or the SessionManager class.

Groq2API

Groq2API is a REST API wrapper around the Groq2 model, a large language model trained by Google. The API allows you to send text prompts to the model and receive generated text responses. The API is easy to use and can be integrated into a variety of applications.

wzry_ai

This is an open-source project for playing the game King of Glory with an artificial intelligence model. The first phase of the project has been completed, and future upgrades will be built upon this foundation. The second phase of the project has started, and progress is expected to proceed according to plan. For any questions, feel free to join the QQ exchange group: 687853827. The project aims to learn artificial intelligence and strictly prohibits cheating. Detailed installation instructions are available in the doc/README.md file. Environment installation video: (bilibili) Welcome to follow, like, tip, comment, and provide your suggestions.

ChatPilot

ChatPilot is a chat agent tool that enables AgentChat conversations, supports Google search, URL conversation (RAG), and code interpreter functionality, replicates Kimi Chat (file, drag and drop; URL, send out), and supports OpenAI/Azure API. It is based on LangChain and implements ReAct and OpenAI Function Call for agent Q&A dialogue. The tool supports various automatic tools such as online search using Google Search API, URL parsing tool, Python code interpreter, and enhanced RAG file Q&A with query rewriting support. It also allows front-end and back-end service separation using Svelte and FastAPI, respectively. Additionally, it supports voice input/output, image generation, user management, permission control, and chat record import/export.

ai00_server

AI00 RWKV Server is an inference API server for the RWKV language model based upon the web-rwkv inference engine. It supports VULKAN parallel and concurrent batched inference and can run on all GPUs that support VULKAN. No need for Nvidia cards!!! AMD cards and even integrated graphics can be accelerated!!! No need for bulky pytorch, CUDA and other runtime environments, it's compact and ready to use out of the box! Compatible with OpenAI's ChatGPT API interface. 100% open source and commercially usable, under the MIT license. If you are looking for a fast, efficient, and easy-to-use LLM API server, then AI00 RWKV Server is your best choice. It can be used for various tasks, including chatbots, text generation, translation, and Q&A.

For similar tasks

vertex-ai-samples

The Google Cloud Vertex AI sample repository contains notebooks and community content that demonstrate how to develop and manage ML workflows using Google Cloud Vertex AI.

byteir

The ByteIR Project is a ByteDance model compilation solution. ByteIR includes compiler, runtime, and frontends, and provides an end-to-end model compilation solution. Although all ByteIR components (compiler/runtime/frontends) are together to provide an end-to-end solution, and all under the same umbrella of this repository, each component technically can perform independently. The name, ByteIR, comes from a legacy purpose internally. The ByteIR project is NOT an IR spec definition project. Instead, in most scenarios, ByteIR directly uses several upstream MLIR dialects and Google Mhlo. Most of ByteIR compiler passes are compatible with the selected upstream MLIR dialects and Google Mhlo.

effort

Effort is an example implementation of the bucketMul algorithm, which allows for real-time adjustment of the number of calculations performed during inference of an LLM model. At 50% effort, it performs as fast as regular matrix multiplications on Apple Silicon chips; at 25% effort, it is twice as fast while still retaining most of the quality. Additionally, users have the option to skip loading the least important weights.

ort

Ort is an unofficial ONNX Runtime 1.17 wrapper for Rust based on the now inactive onnxruntime-rs. ONNX Runtime accelerates ML inference on both CPU and GPU.

ai-on-gke

This repository contains assets related to AI/ML workloads on Google Kubernetes Engine (GKE). Run optimized AI/ML workloads with Google Kubernetes Engine (GKE) platform orchestration capabilities. A robust AI/ML platform considers the following layers: infrastructure orchestration that supports GPUs and TPUs for training and serving workloads at scale; flexible integration with distributed computing and data processing frameworks; and support for multiple teams on the same infrastructure to maximize utilization of resources.

ray

Ray is a unified framework for scaling AI and Python applications. It consists of a core distributed runtime and a set of AI libraries for simplifying ML compute, including Data, Train, Tune, RLlib, and Serve. Ray runs on any machine, cluster, cloud provider, and Kubernetes, and features a growing ecosystem of community integrations. With Ray, you can seamlessly scale the same code from a laptop to a cluster, making it easy to meet the compute-intensive demands of modern ML workloads.

labelbox-python

Labelbox is a data-centric AI platform for enterprises to develop, optimize, and use AI to solve problems and power new products and services. Enterprises use Labelbox to curate data, generate high-quality human feedback data for computer vision and LLMs, evaluate model performance, and automate tasks by combining AI and human-centric workflows. The academic & research community uses Labelbox for cutting-edge AI research.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features: it is self-contained, with no need for a DBMS or cloud service; it has an OpenAPI interface that is easy to integrate with existing infrastructure (e.g. Cloud IDE); and it supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.