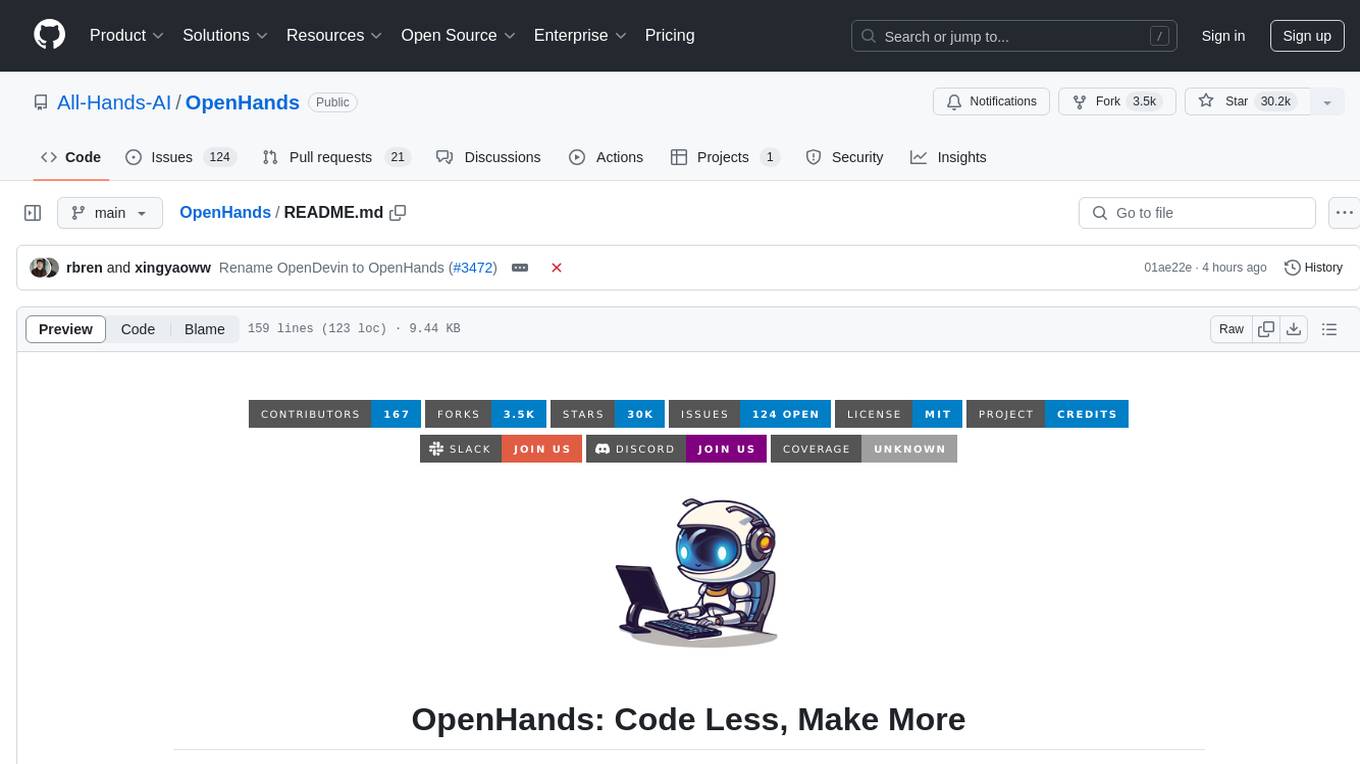

vertex-ai-samples

Notebooks, code samples, sample apps, and other resources that demonstrate how to use, develop and manage machine learning and generative AI workflows using Google Cloud Vertex AI.

Stars: 484

The Google Cloud Vertex AI sample repository contains notebooks and community content that demonstrate how to develop and manage ML workflows using Google Cloud Vertex AI.

README:

This repository contains notebooks, code samples, sample apps, and other resources that demonstrate how to use, develop and manage machine learning and generative AI workflows using Google Cloud Vertex AI.

Vertex AI is a fully-managed, unified AI development platform for building and using generative AI. This repository is designed to help you get started with Vertex AI. Whether you're new to Vertex AI or an experienced ML practitioner, you'll find valuable resources here.

For more Vertex AI Generative AI notebook samples, please visit the Vertex AI Generative AI GitHub repository.

You can explore, learn, and contribute to this repository to unleash the full potential of machine learning on Vertex AI!

Explore this repository and follow the links in the header section of each notebook to:

- Open and run the notebook in Vertex AI Workbench

See the Contributing Guide.

To get started using Vertex AI, you must have a Google Cloud project.

- If you don't have a Google Cloud project, you can learn and build on GCP for free using the Free Trial.

- Once you have a Google Cloud project, you can learn more about setting up a project and a development environment.

├── notebooks

│ ├── official - Notebooks demonstrating use of each Vertex AI service

│ │ ├── automl

│ │ ├── custom

│ │ ├── ...

│ ├── community - Notebooks contributed by the community

│ │ ├── model_garden

│ │ ├── ...

├── community-content - Sample code and tutorials contributed by the community
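As a rough illustration of how this layout can be navigated, the sketch below groups notebooks in a local clone by their product directory (for example `automl` or `custom`). The function name `notebooks_by_product` and its parameters are hypothetical helpers written for this example and are not part of the repository; it assumes only the `notebooks/<section>/<product>/` structure shown above.

```python
from collections import defaultdict
from pathlib import Path


def notebooks_by_product(repo_root, section="official"):
    """Group .ipynb paths under notebooks/<section>/ by product directory.

    Hypothetical helper: assumes the notebooks/<section>/<product>/ layout
    shown in the tree above.
    """
    base = Path(repo_root) / "notebooks" / section
    if not base.is_dir():
        # Section is missing in this checkout, so there is nothing to group.
        return {}

    grouped = defaultdict(list)
    for notebook in sorted(base.rglob("*.ipynb")):
        rel = notebook.relative_to(base)
        # Skip Jupyter autosave copies.
        if ".ipynb_checkpoints" in rel.parts:
            continue
        # Notebooks placed directly under the section have no product directory.
        product = rel.parts[0] if len(rel.parts) > 1 else "(top level)"
        grouped[product].append(rel.as_posix())
    return dict(grouped)
```

Calling `notebooks_by_product(".")` from the root of a clone returns a dictionary keyed by product directory; passing `section="community"` does the same for community-contributed notebooks.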

| Category | Product | Description |
|---|---|---|
| Model | Model Garden/ | Curated collection of first-party, open-source, and third-party models available on Vertex AI including Gemini, Gemma, Llama 3, Claude 3 and many more. |
| Data | Feature Store/ | Set up and manage online serving using Vertex AI Feature Store. |
| | datasets/ | Use BigQuery and Data Labeling service with Vertex AI. |
| Model development | automl/ | Train and make predictions on AutoML models. |
| | custom/ | Create, deploy and serve custom models on Vertex AI. |
| | ray_on_vertex_ai/ | Use Colab Enterprise and Vertex AI SDK for Python to connect to the Ray Cluster. |
| Deploy and use | prediction/ | Build, train and deploy models using prebuilt containers for custom training and prediction. |
| | model_registry/ | Use Model Registry to create and register a model. |
| | Explainable AI/ | Use Vertex Explainable AI's feature-based and example-based explanations to explain how or why a model produced a specific prediction. |
| | ml_metadata/ | Record the metadata and artifacts and query that metadata to help analyze, debug, and audit the performance of your ML system. |
| Tools | Pipelines/ | Use `Vertex AI Pipelines` and `Google Cloud Pipeline Components` to build, tune, or deploy a custom model. |

Please use the Issues page to provide feedback or submit a bug report.

This is not an officially supported Google product. The code in this repository is for demonstrative purposes only.

- Vertex AI Jupyter Notebook tutorials

- Vertex AI Generative AI GitHub repository

- Vertex AI documentation

Alternative AI tools for vertex-ai-samples

Similar Open Source Tools

piccolo

Piccolo AI is an open-source software development toolkit for constructing sensor-based AI inference models optimized to run on low-power microcontrollers and IoT edge platforms. It includes SensiML's ML Engine, Embedded ML SDK, Analytic Studio UI, and SensiML Python Client. The tool is intended for individual developers, researchers, and AI enthusiasts, offering support for time-series sensor data classification and various applications such as acoustic event detection, activity recognition, gesture detection, anomaly detection, keyword spotting, and vibration classification.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

llm-app

Pathway's LLM (Large Language Model) Apps provide a platform to quickly deploy AI applications using the latest knowledge from data sources. The Python application examples in this repository are Docker-ready, exposing an HTTP API to the frontend. These apps utilize the Pathway framework for data synchronization, API serving, and low-latency data processing without the need for additional infrastructure dependencies. They connect to document data sources like S3, Google Drive, and Sharepoint, offering features like real-time data syncing, easy alert setup, scalability, monitoring, security, and unification of application logic.

AI-Playground

AI Playground is an open-source project and AI PC starter app designed for AI image creation, image stylizing, and chatbot functionalities on a PC powered by an Intel Arc GPU. It leverages libraries from GitHub and Huggingface, providing users with the ability to create AI-generated content and interact with chatbots. The tool requires specific hardware specifications and offers packaged installers for ease of setup. Users can also develop the project environment, link it to the development environment, and utilize alternative models for different AI tasks.

Revornix

Revornix is an information management tool designed for the AI era. It allows users to conveniently integrate all visible information and generates comprehensive reports at specific times. The tool offers cross-platform availability, all-in-one content aggregation, document transformation & vectorized storage, native multi-tenancy, localization & open-source features, smart assistant & built-in MCP, seamless LLM integration, and multilingual & responsive experience for users.

embedchain

Embedchain is an Open Source Framework for personalizing LLM responses. It simplifies the creation and deployment of personalized AI applications by efficiently managing unstructured data, generating relevant embeddings, and storing them in a vector database. With diverse APIs, users can extract contextual information, find precise answers, and engage in interactive chat conversations tailored to their data. The framework follows the design principle of being 'Conventional but Configurable' to cater to both software engineers and machine learning engineers.

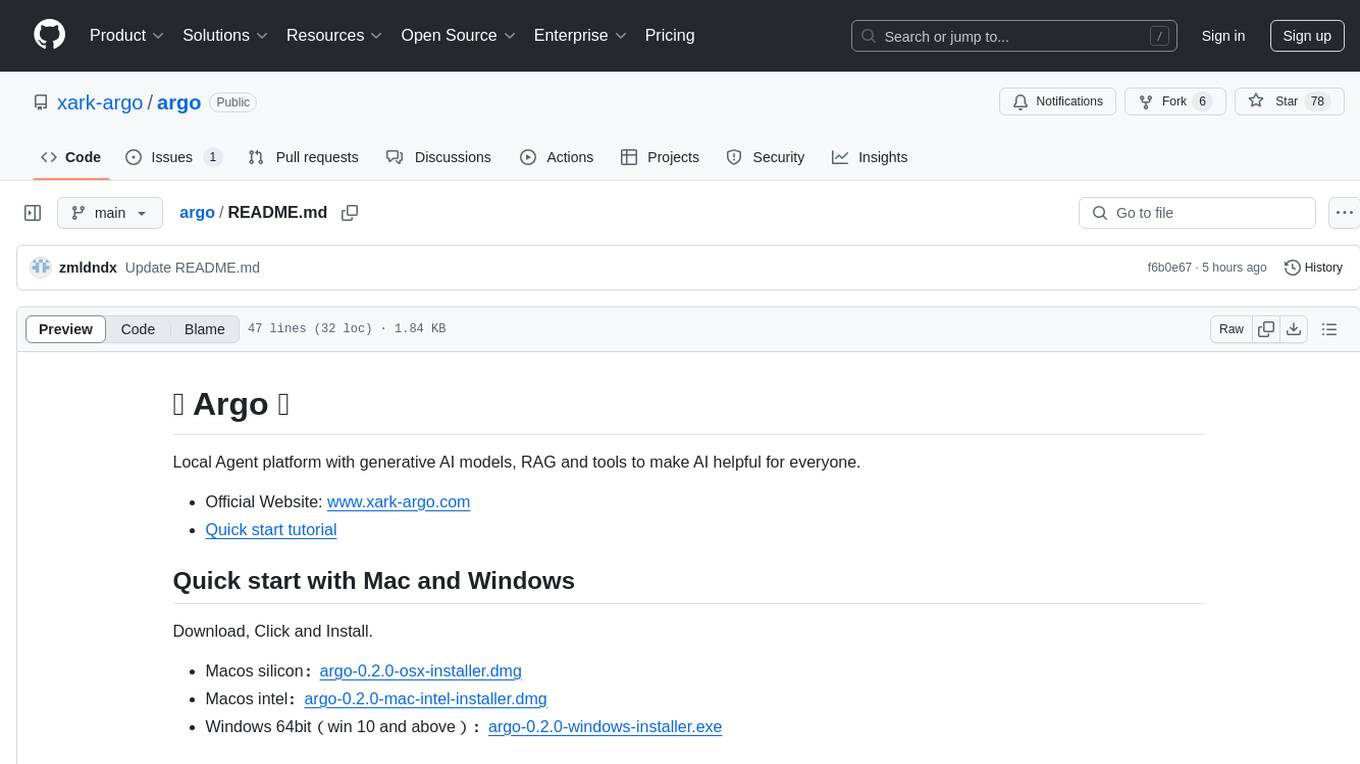

argo

Local Agent platform with generative AI models, RAG and tools to make AI helpful for everyone. Argo is a versatile tool that provides a user-friendly interface for leveraging AI capabilities. It offers a range of features and functionalities to assist users in various tasks related to artificial intelligence. The platform aims to democratize AI by simplifying complex processes and making them accessible to a wider audience. With Argo, users can easily deploy AI models, interact with generative AI technologies, and utilize a suite of tools designed to enhance their AI experience. Whether you are a beginner or an experienced AI practitioner, Argo provides a seamless environment for exploring and utilizing AI solutions.

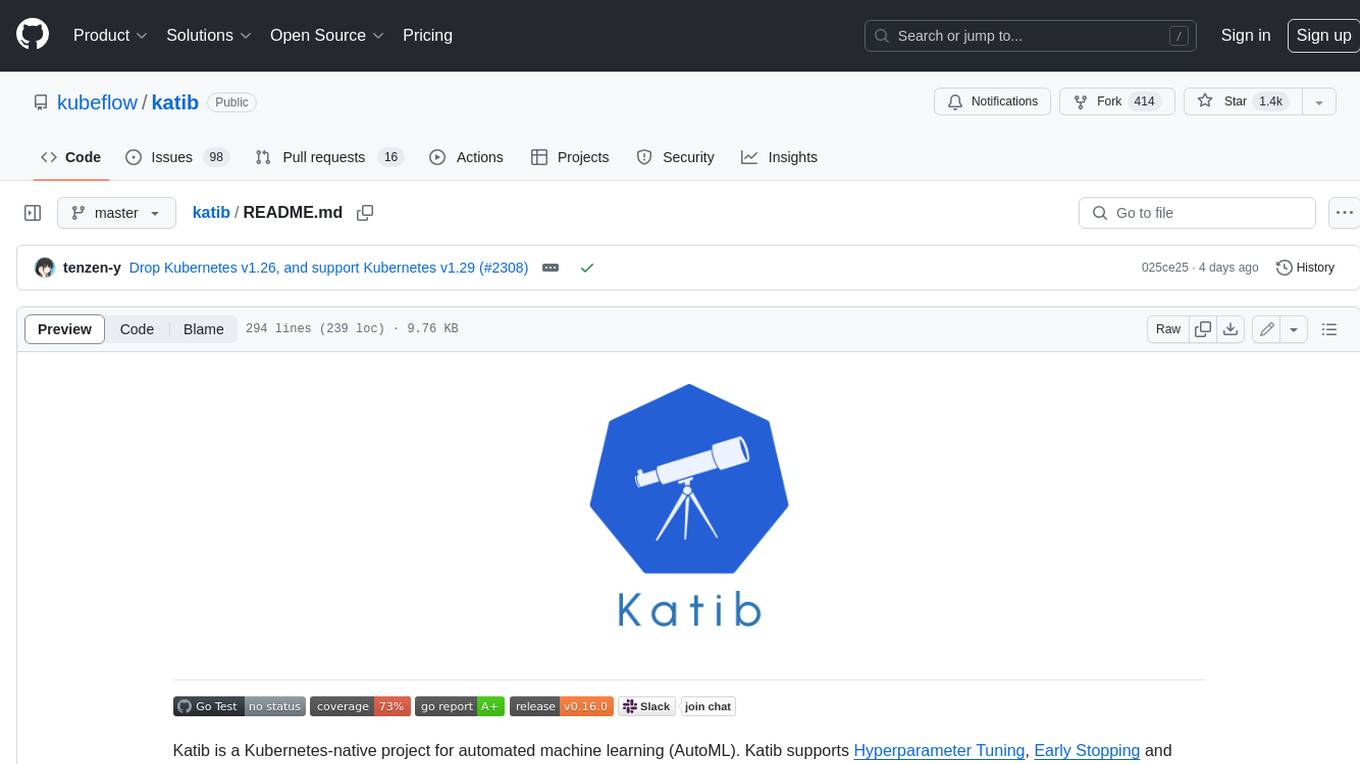

katib

Katib is a Kubernetes-native project for automated machine learning (AutoML). It supports Hyperparameter Tuning, Early Stopping and Neural Architecture Search. Katib is agnostic to machine learning (ML) frameworks: it can tune hyperparameters of applications written in any language of the user's choice and natively supports many ML frameworks, such as TensorFlow, Apache MXNet, PyTorch, XGBoost, and others. Katib can perform training jobs using any Kubernetes Custom Resources, with out-of-the-box support for Kubeflow Training Operator, Argo Workflows, Tekton Pipelines and many more.

ZetaForge

ZetaForge is an open-source AI platform designed for rapid development of advanced AI and AGI pipelines. It allows users to assemble reusable, customizable, and containerized Blocks into highly visual AI Pipelines, enabling rapid experimentation and collaboration. With ZetaForge, users can work with AI technologies in any programming language, easily modify and update AI pipelines, dive into the code whenever needed, utilize community-driven blocks and pipelines, and share their own creations. The platform aims to accelerate the development and deployment of advanced AI solutions through its user-friendly interface and community support.

second-brain-ai-assistant-course

This open-source course teaches how to build an advanced RAG and LLM system using LLMOps and ML systems best practices. It helps you create an AI assistant that leverages your personal knowledge base to answer questions, summarize documents, and provide insights. The course covers topics such as LLM system architecture, pipeline orchestration, large-scale web crawling, model fine-tuning, and advanced RAG features. It is suitable for ML/AI engineers, data and software engineers, and data scientists looking to level up to production AI systems. The course is free, with minimal costs for tools like OpenAI's API and Hugging Face's Dedicated Endpoints. Participants will build two separate Python applications: one for offline ML pipelines and one for an online inference pipeline.

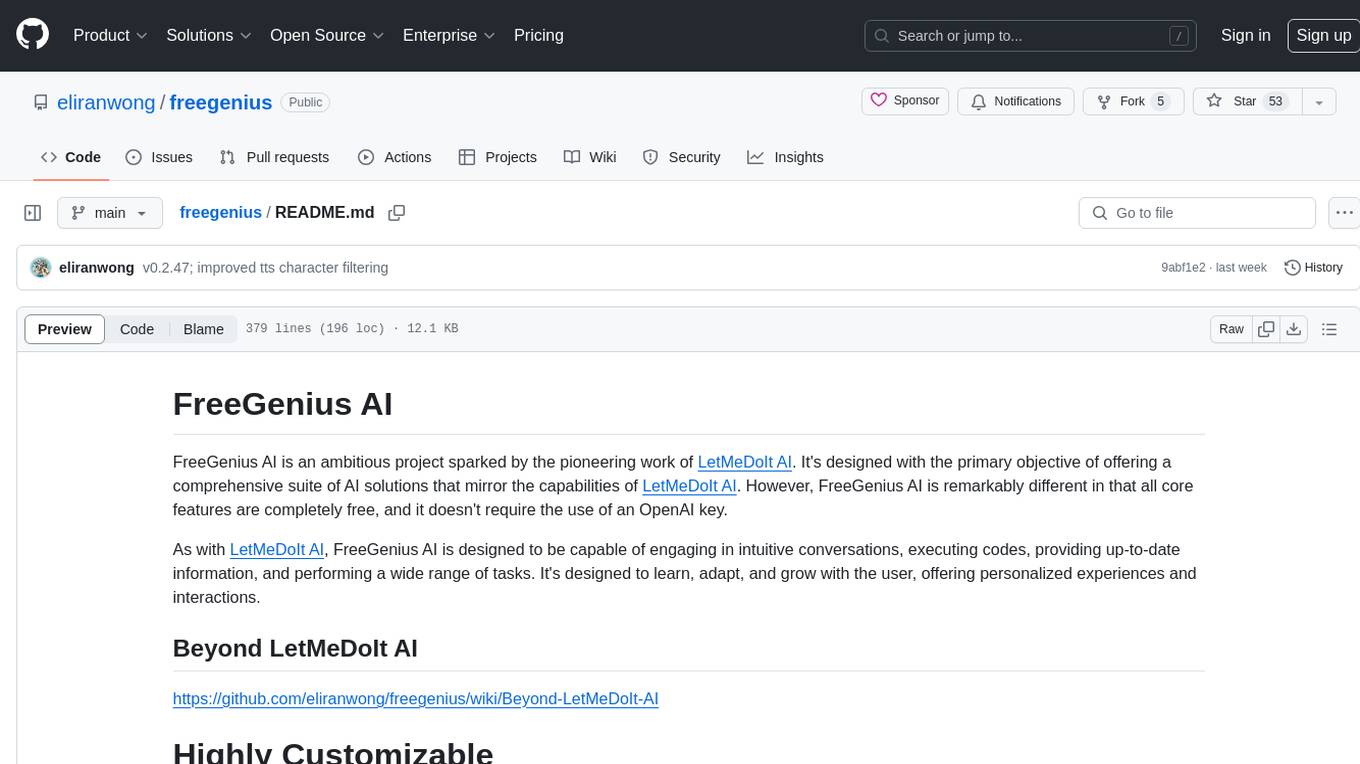

freegenius

FreeGenius AI is an ambitious project offering a comprehensive suite of AI solutions that mirror the capabilities of LetMeDoIt AI. It is designed to engage in intuitive conversations, execute codes, provide up-to-date information, and perform various tasks. The tool is free, customizable, and provides access to real-time data and device information. It aims to support offline and online backends, open-source large language models, and optional API keys. Users can use FreeGenius AI for tasks like generating tweets, analyzing audio, searching financial data, checking weather, and creating maps.

AISuperDomain

Aila Desktop Application is a powerful tool that integrates multiple leading AI models into a single desktop application. It allows users to interact with various AI models simultaneously, providing diverse responses and insights to their inquiries. With its user-friendly interface and customizable features, Aila empowers users to engage with AI seamlessly and efficiently. Whether you're a researcher, student, or professional, Aila can enhance your AI interactions and streamline your workflow.

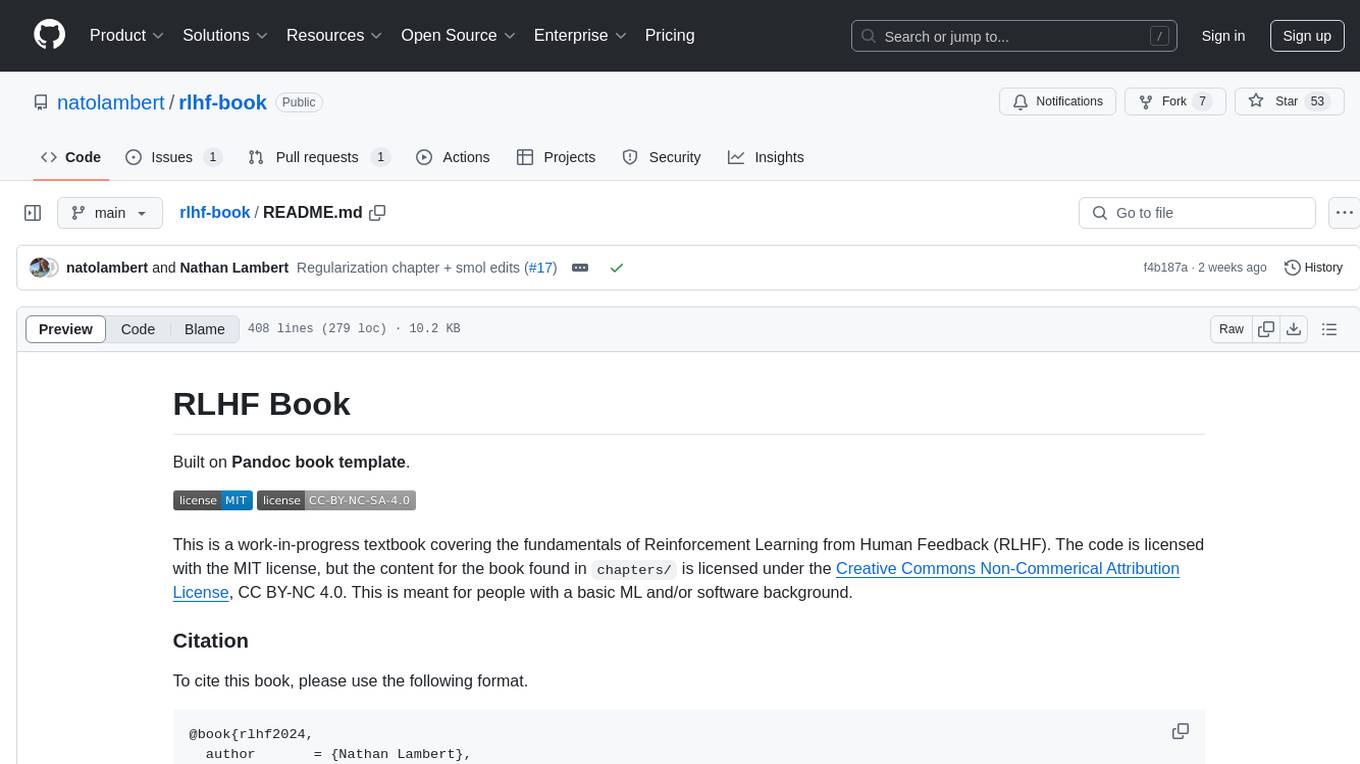

rlhf-book

RLHF Book is a work-in-progress textbook covering the fundamentals of Reinforcement Learning from Human Feedback (RLHF). It is built on the Pandoc book template and is meant for people with a basic ML and/or software background. The content for the book is licensed under the Creative Commons Non-Commercial Attribution License, CC BY-NC 4.0. The repository contains a simple template for building Pandoc documents, allowing users to compile markdown files into readable files such as PDF, EPUB, and HTML.

generative-ai-go

The Google AI Go SDK enables developers to use Google's state-of-the-art generative AI models (like Gemini) to build AI-powered features and applications. It supports use cases like generating text from text-only input, generating text from text-and-images input (multimodal), building multi-turn conversations (chat), and embedding.

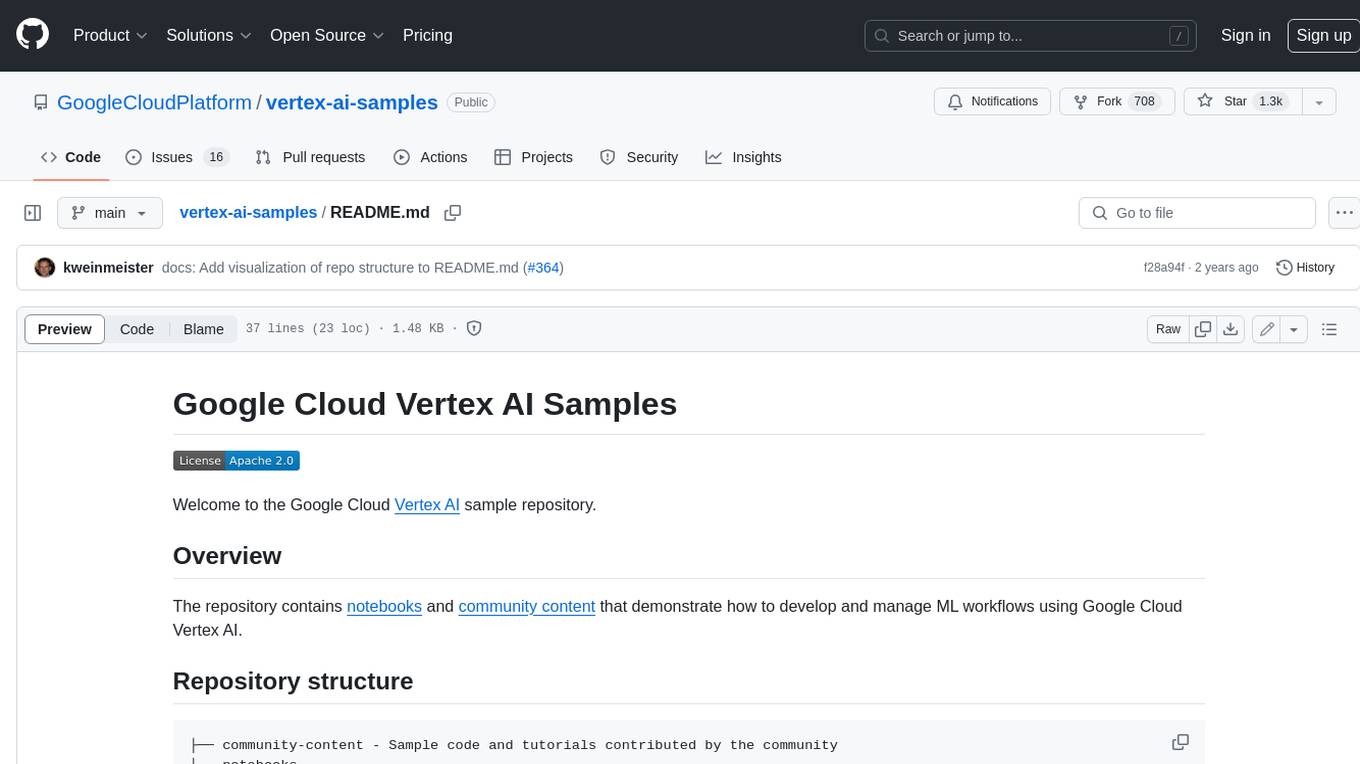

OpenHands

OpenHands (formerly OpenDevin) is a platform for autonomous software engineers powered by AI and LLMs. It allows human developers to collaborate with agents to write code, fix bugs, and ship features. The tool operates in a secured Docker sandbox and provides access to different LLM providers for advanced configuration options. Users can contribute to the project through code contributions, research and evaluation of LLMs in software engineering, and providing feedback and testing. OpenHands is community-driven and welcomes contributions from developers, researchers, and enthusiasts looking to advance software engineering with AI.

For similar tasks

byteir

The ByteIR Project is a ByteDance model compilation solution. ByteIR includes compiler, runtime, and frontends, and provides an end-to-end model compilation solution. Although all ByteIR components (compiler/runtime/frontends) are together to provide an end-to-end solution, and all under the same umbrella of this repository, each component technically can perform independently. The name, ByteIR, comes from a legacy purpose internally. The ByteIR project is NOT an IR spec definition project. Instead, in most scenarios, ByteIR directly uses several upstream MLIR dialects and Google Mhlo. Most of ByteIR compiler passes are compatible with the selected upstream MLIR dialects and Google Mhlo.

effort

Effort is an example implementation of the bucketMul algorithm, which allows for real-time adjustment of the number of calculations performed during inference of an LLM model. At 50% effort, it performs as fast as regular matrix multiplications on Apple Silicon chips; at 25% effort, it is twice as fast while still retaining most of the quality. Additionally, users have the option to skip loading the least important weights.

ort

Ort is an unofficial ONNX Runtime 1.17 wrapper for Rust based on the now inactive onnxruntime-rs. ONNX Runtime accelerates ML inference on both CPU and GPU.

llm_model_hub

Model Hub V2 is a one-stop platform for model fine-tuning, deployment, and debugging without code, providing users with a visual interface to quickly validate the effects of fine-tuning various open-source models, facilitating rapid experimentation and decision-making, and lowering the threshold for users to fine-tune large models. For detailed instructions, please refer to the Feishu documentation.

ai-on-gke

This repository contains assets related to AI/ML workloads on Google Kubernetes Engine (GKE). Run optimized AI/ML workloads with GKE platform orchestration capabilities. A robust AI/ML platform considers the following layers:

- Infrastructure orchestration that supports GPUs and TPUs for training and serving workloads at scale
- Flexible integration with distributed computing and data processing frameworks
- Support for multiple teams on the same infrastructure to maximize utilization of resources

ray

Ray is a unified framework for scaling AI and Python applications. It consists of a core distributed runtime and a set of AI libraries for simplifying ML compute, including Data, Train, Tune, RLlib, and Serve. Ray runs on any machine, cluster, cloud provider, and Kubernetes, and features a growing ecosystem of community integrations. With Ray, you can seamlessly scale the same code from a laptop to a cluster, making it easy to meet the compute-intensive demands of modern ML workloads.

labelbox-python

Labelbox is a data-centric AI platform for enterprises to develop, optimize, and use AI to solve problems and power new products and services. Enterprises use Labelbox to curate data, generate high-quality human feedback data for computer vision and LLMs, evaluate model performance, and automate tasks by combining AI and human-centric workflows. The academic & research community uses Labelbox for cutting-edge AI research.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models, from images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features:

- Self-contained, with no need for a DBMS or cloud service.
- OpenAPI interface, easy to integrate with existing infrastructure (e.g., Cloud IDE).
- Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.