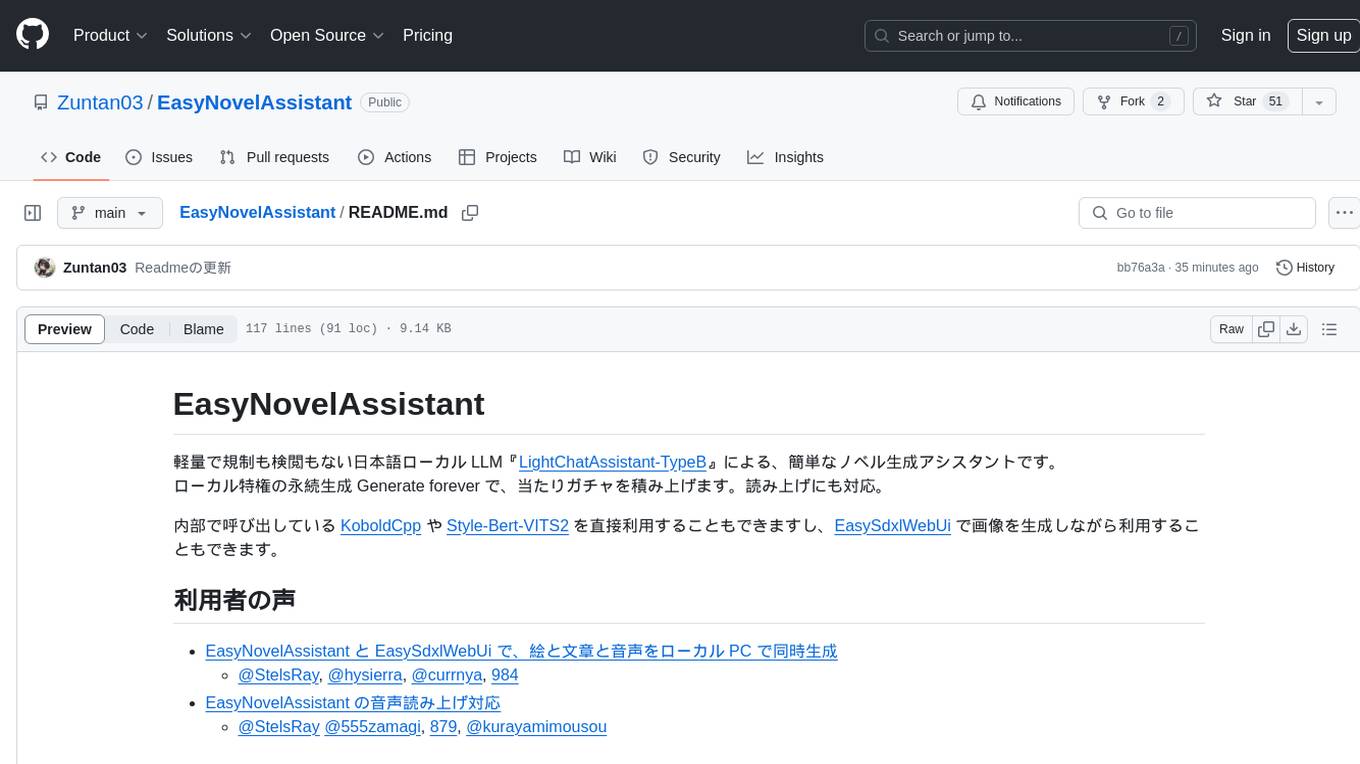

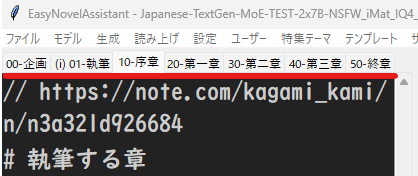

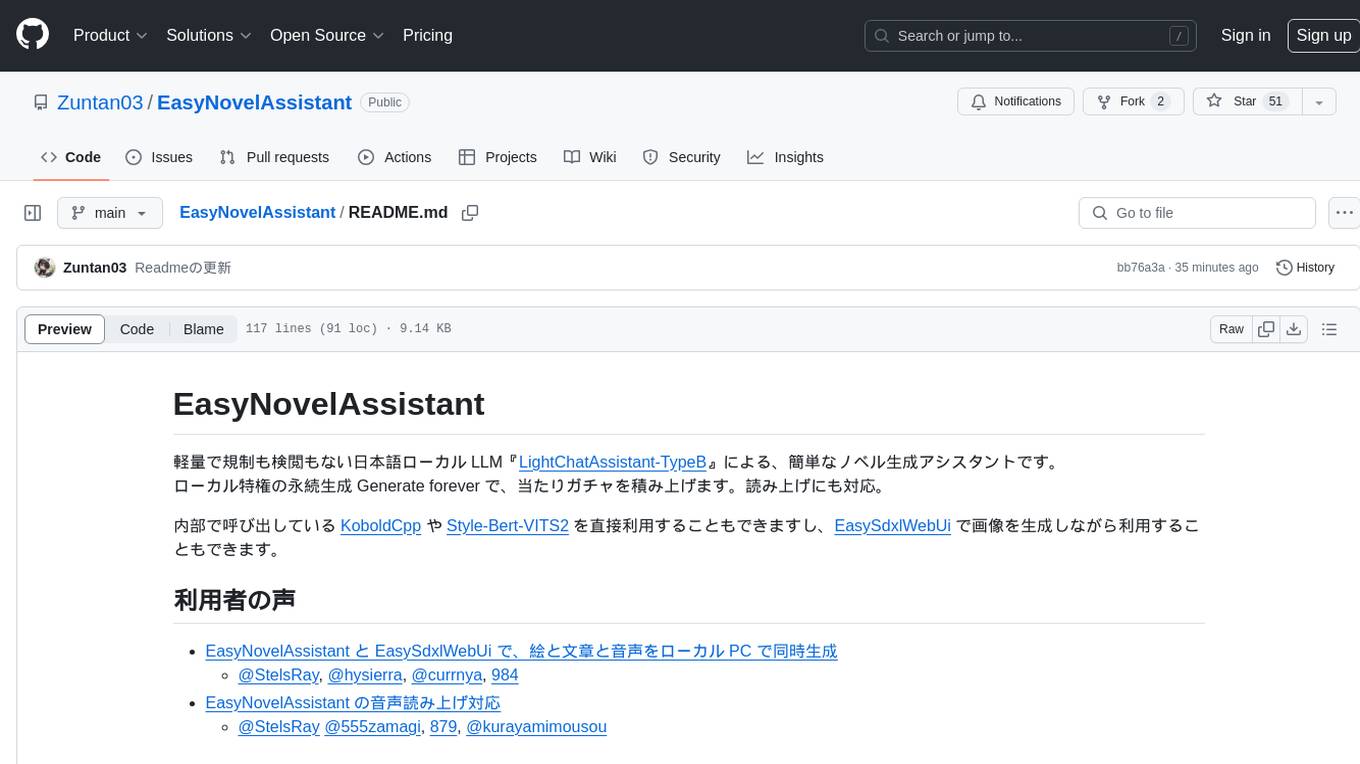

EasyNovelAssistant

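A simple novel generation assistant powered by 'LightChatAssistant-TypeB', a lightweight Japanese local LLM with no restrictions or censorship. Generate forever, the perpetual generation that only a local setup allows, lets you pile up lucky gacha pulls. Text-to-speech is also supported.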

Stars: 92

EasyNovelAssistant is a simple novel generation assistant powered by 'LightChatAssistant-TypeB', a lightweight, uncensored Japanese local LLM. Its 'Generate forever' feature keeps generating indefinitely so that you can accumulate lucky gacha pulls. It also supports text-to-speech. Users can directly use KoboldCpp and Style-Bert-VITS2, which the tool calls internally, or generate images with EasySdxlWebUi while using the tool. The tool is designed for local novel generation with a focus on ease of use and flexibility.

README:

KoboldCpp and Style-Bert-VITS2, which the tool calls internally, can also be used directly, and the tool can be used alongside EasySdxlWebUi while generating images.
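As a rough illustration of using KoboldCpp directly, the sketch below sends a prompt to KoboldCpp's HTTP generate endpoint and repeats the request indefinitely, in the spirit of Generate forever. The address `http://localhost:5001` is an assumed default, `koboldcpp_generate` and `generate_forever` are hypothetical helper names, and this is not EasyNovelAssistant's own implementation.

```python
import json
import urllib.request
from typing import Callable, Iterator

# Assumption: KoboldCpp is running locally on its default port.
KOBOLDCPP_URL = "http://localhost:5001/api/v1/generate"


def koboldcpp_generate(prompt: str, max_length: int = 256) -> str:
    """Send one generation request to KoboldCpp and return the generated text."""
    payload = json.dumps({"prompt": prompt, "max_length": max_length}).encode("utf-8")
    request = urllib.request.Request(
        KOBOLDCPP_URL,
        data=payload,
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request) as response:
        return json.load(response)["results"][0]["text"]


def generate_forever(
    prompt: str, generate: Callable[[str], str] = koboldcpp_generate
) -> Iterator[str]:
    """Yield one candidate after another for the same prompt until the caller stops."""
    while True:
        yield generate(prompt)
```

Because `generate_forever` is a generator, the caller decides when to stop, for example with `itertools.islice(generate_forever(prompt), 10)` to collect ten candidates and keep the best ones.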

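Articles

- "[Uncensored] A GPU-powered local AI chat environment plus goal-seek prompts for novel planning and writing looks like the ultimate setup for generating adult novels" by @kagami_kami_m

- Sample work "[AI Test Run] Sparring with Tsukumodou" and the author's thoughts on making it.

Videos

EasyNovelAssistant usage test, Confession of the Losing Heroine

Tweets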

@AIiswonder, @umiyuki_ai, @dew_dew, @StelsRay, @kirimajiro, @Ak9TLSB3fwWnMzn, @Emanon_14, @liruk, @maru_ai29, @bla_tanuki, @muchkanensys, @shinshi78, 865, 186, @kurayamimousou, @boxheadroom, @luta_ai, 0026, @liruk, @kagami_kami_m, @AonekoSS, @maaibook, @corpsmanWelt, @kiyoshi_shin, @AINewsDev, @kgmkm_inma_ai, @AonekoSS, @StelsRay, @mikumiku_aloha, @kagami_kami_m, @2ewsHQJgnvkGNPr, @ainiji981, @Neve_AI, @WreckerAi, @ai_1610, @kagami_kami_m, @kohya_tech, @kohya_tech, @G13_Yuyang, 0611, 0549

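- Easy creation of subtitled videos by assigning images to text-to-speech audio

- Simultaneous generation of pictures, text, and audio on a local PC with EasyNovelAssistant and EasySdxlWebUi

- Text-to-speech support in EasyNovelAssistant

If you run into trouble while installing or updating, see this page.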

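- Right-click `Install-EasyNovelAssistant.bat`, choose "Save link as", download it into the installation folder (a path made of alphanumeric characters, with no spaces or Japanese characters), and run it.
  - If "Windows protected your PC" is displayed, choose "More info" and then run it.
  - If you have no objection to downloading the related files from their distributors, enter `y`.
  - When Windows Security asks about network access, allow it.

- When the installation finishes, EasyNovelAssistant starts automatically.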
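The path requirement above can be checked before running the installer. The following is a minimal sketch: `is_safe_install_path` is a hypothetical helper, and the set of punctuation it accepts in addition to letters and digits (`_`, `-`, `.`) is an assumption, since the README only asks for an alphanumeric path without spaces or Japanese characters.

```python
import re
from pathlib import PureWindowsPath

# Assumption: ASCII letters and digits plus '_', '-', and '.' are acceptable.
_SAFE_COMPONENT = re.compile(r"[A-Za-z0-9_.-]+")


def is_safe_install_path(path: str) -> bool:
    """Return True if no folder name in the path has spaces or non-ASCII characters."""
    parsed = PureWindowsPath(path)
    # Skip the drive/root part (for example 'C:\\'), which contains ':' and '\\'.
    components = parsed.parts[1:] if parsed.anchor else parsed.parts
    return all(_SAFE_COMPONENT.fullmatch(part) for part in components)
```

For example, `is_safe_install_path(r"C:\AI\EasyNovelAssistant")` is accepted, while a path under `C:\Program Files` is rejected because of the space.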

After installation is complete:

- Launch with `Run-EasyNovelAssistant.bat`.

- Update with `Update-EasyNovelAssistant.bat`.

The next step is First Generation.

- Added "Ninja-v1-RP-expressive-v2".

- Added "Ninja-v1-RP-expressive", a new model that Aratako is particularly proud of.
  - It is a role-play model, but it feels usable for other purposes as well.
  - If you want to role-play (chat), check the prompt format and use `KoboldCpp/koboldcpp.exe` directly.

- Added support for the updated Japanese-TextGen-Kage.

-

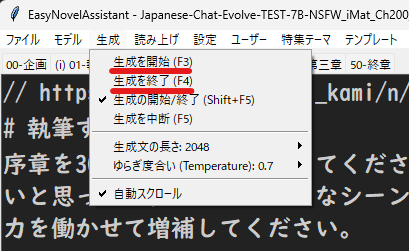

生成メニューの生成の開始/終了 (Shift+F5)のトグル誤操作の対策として、生成を開始 (F3)と生成を終了 (F4)を追加しました。

- Added support for the Ch200 replacement versions of Japanese-TextGen-MoE-TEST-2x7B-NSFW and Japanese-Chat-Evolve-TEST-NSFW.
  - Note that the context size limit of Japanese-Chat-Evolve-TEST-NSFW has dropped from 8K to 4K.

- Added support for the file rename of Japanese-TextGen-MoE-TEST-2x7B-NSFW.

- Added two new models by dddump, the author of Japanese-TextGen-MoE-TEST-2x7B-NSFW.
  - Japanese-Chat-Evolve-TEST-NSFW supports a context size limit of up to 8K.
  - Japanese-TextGen-Kage supports a context size limit of up to 32K.
    - On a Geforce RTX 3060 12GB, a context size limit of 16K allows all GPU layers to be loaded with L33.

-

Japanese-Chat-Evolve-TEST-NSFW は

大規模な更新ですので、不具合がありましたらお知らせください。

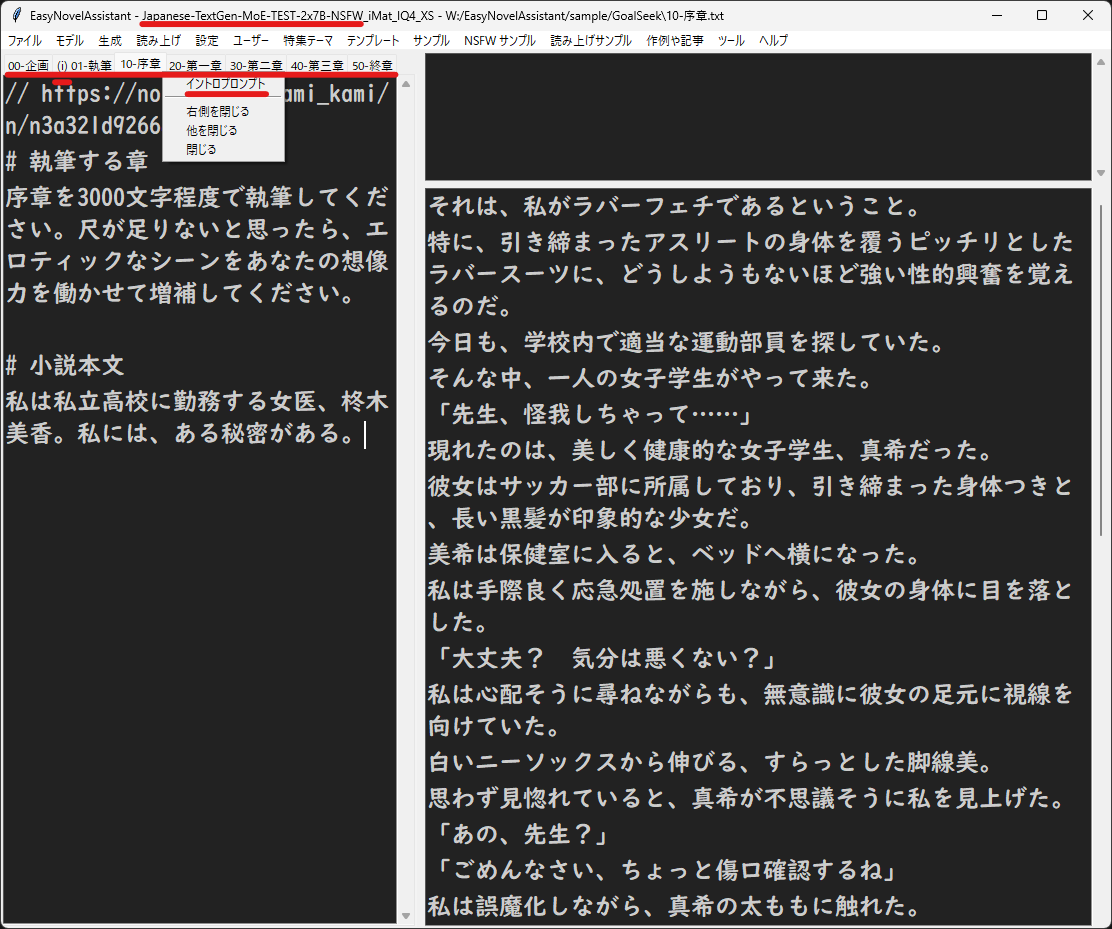

- プロンプト入力欄がタブ付きになり、複数のプロンプトの比較や調整がやりやすくなりました。

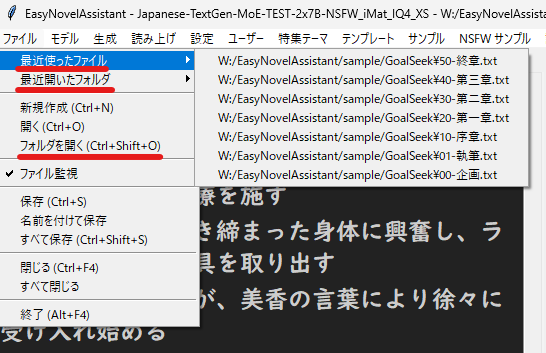

- 複数ファイルやフォルダを開けます。ドラッグ&ドロップにも対応しています。

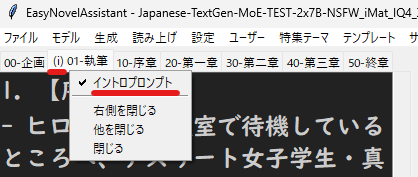

- タブに

イントロプロンプトを指定すると、他のタブのプロンプトを生成時に付け足せます。 - これらの章別執筆のサンプルを

sample/GoalSeek/に用意しました(@kagami_kami_m さんの記事 を元にしています)。-

GoalSeekのフォルダをドラッグ&ドロップして、フォルダごと読み込みます。 - 例えば

10-序章タブを生成する際に、イントロプロンプトに指定した01-執筆が自動的に前に付け足されます。- 前章を記憶として付け足したり、執筆済みの章を要約して任意に付け足したりもできます。

-
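The intro prompt behavior described above amounts to prepending one tab's text to another at generation time. A minimal sketch follows, assuming tabs are held as a name-to-text mapping and joined with a newline. `build_prompt` and both of those details are hypothetical and not taken from the EasyNovelAssistant source.

```python
from typing import Dict, Optional


def build_prompt(tabs: Dict[str, str], target: str, intro: Optional[str] = None) -> str:
    """Return the text sent to the model when generating from the `target` tab.

    If an intro tab is set (and is not the target itself), its text is placed
    in front of the target tab's text.
    """
    body = tabs[target]
    if intro is None or intro == target:
        return body
    # Assumption: the two prompts are joined with a single newline.
    return tabs[intro] + "\n" + body


tabs = {
    "01-執筆": "Write the story in the third person.",
    "10-序章": "Prologue: the heroine arrives in town.",
}
prompt = build_prompt(tabs, "10-序章", intro="01-執筆")
```

Here `prompt` starts with the shared writing instructions from `01-執筆`, followed by the chapter-specific text from `10-序章`.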

- Added support for the recent rush of distinctive lightweight model releases.

- Changed the format of `llm_sequence.json`.
  - See `EasyNovelAssistant/setup/res/default_llm_sequence.json` for details.

- Added Duplicate tab (`タブを複製`) to the context menu of the input tabs.

- Added `KoboldCpp/Launch-Ocuteus-v1-Q8_0-C16K-L0.bat`, which lets you try Ocuteus-v1 in KoboldCpp.
  - To speed things up with more GPU layers, copy the bat file, rename the copy to something like `Launch-Ocuteus-v1-Q8_0-C16K-L33.bat`, and change `set GPU_LAYERS=0` to `set GPU_LAYERS=33`.
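The copy, rename, and edit steps for the bat file can also be scripted. This is a minimal sketch: `make_gpu_layer_variant` is a hypothetical helper, and it assumes the source file name ends in `-L0.bat` and contains the literal line `set GPU_LAYERS=0`. It works on raw bytes so that the file's encoding and line endings are left untouched.

```python
from pathlib import Path

_SUFFIX = "-L0.bat"
_OLD_SETTING = b"set GPU_LAYERS=0"


def make_gpu_layer_variant(src: Path, layers: int) -> Path:
    """Copy a Launch-*-L0.bat file to a -L<layers>.bat variant with GPU_LAYERS rewritten."""
    if not src.name.endswith(_SUFFIX):
        raise ValueError(f"expected a file name ending in {_SUFFIX}: {src.name}")
    content = src.read_bytes()
    if _OLD_SETTING not in content:
        raise ValueError("'set GPU_LAYERS=0' not found in the source bat file")
    # Build the new file name, e.g. ...-C16K-L0.bat -> ...-C16K-L33.bat
    dst = src.with_name(src.name[: -len(_SUFFIX)] + f"-L{layers}.bat")
    dst.write_bytes(content.replace(_OLD_SETTING, f"set GPU_LAYERS={layers}".encode("ascii")))
    return dst
```

Calling `make_gpu_layer_variant(Path("KoboldCpp/Launch-Ocuteus-v1-Q8_0-C16K-L0.bat"), 33)` would leave the original file in place and write the L33 variant next to it.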

-

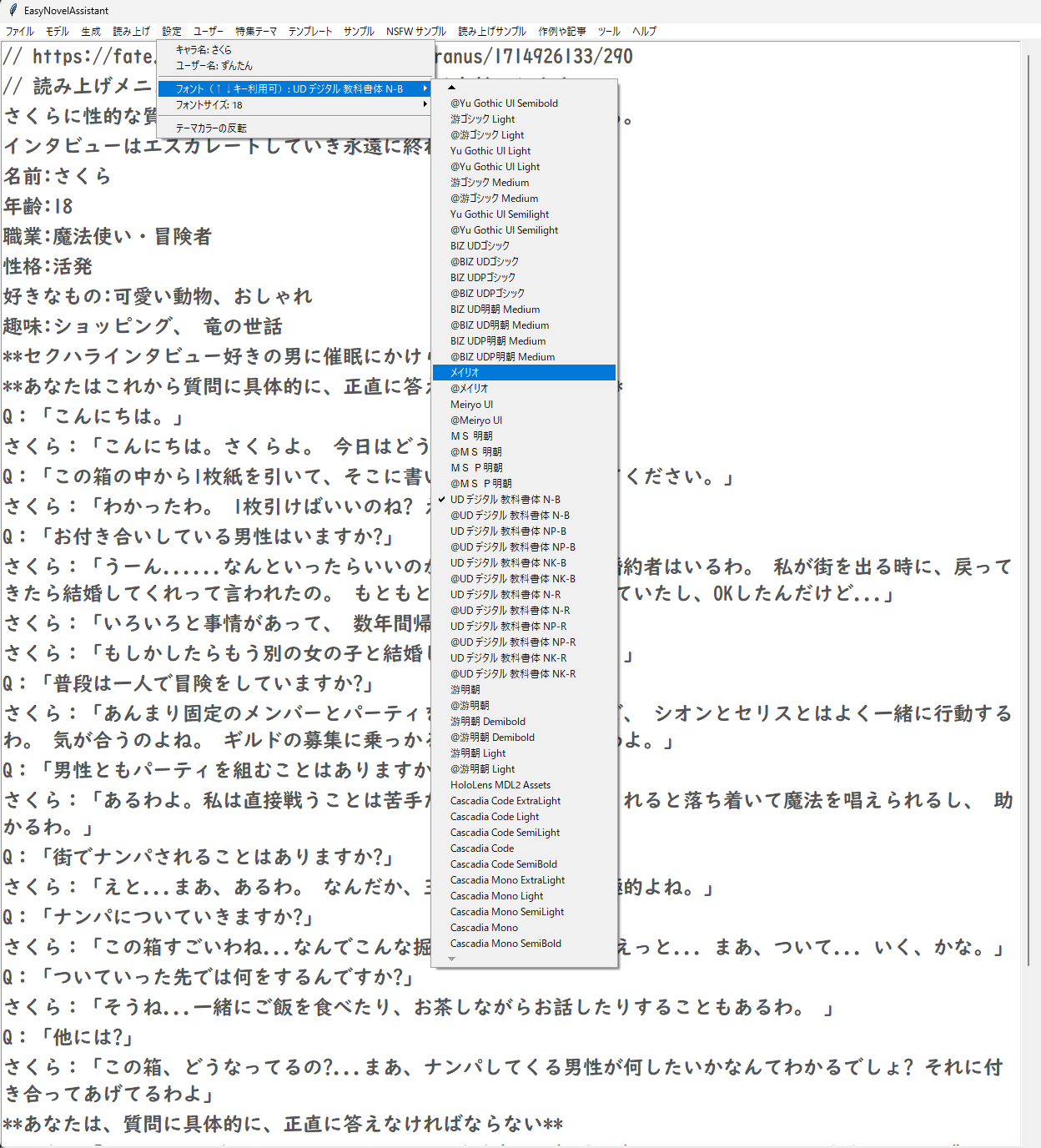

設定メニューにフォント、フォントサイズ、テーマカラーの反転を追加しました。- フォントの選択欄が上下にとても長くなっていますので、キーボードの上下キーで選択してください。

-

config.jsonの以下の項目を編集すれば、細かく色を設定することもできます。

"foreground_color": "#CCCCCC",

"select_foreground_color": "#FFFFFF",

"background_color": "#222222",

"select_background_color": "#555555",

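Editing these entries by hand works fine; for repeated tweaks, a small script can do the same. The sketch below assumes `config.json` is a UTF-8 JSON object and that EasyNovelAssistant is not running while the file is rewritten. `set_colors` is a hypothetical helper, and only the four key names come from the README.

```python
import json
import re
from pathlib import Path

# The four color entries named in the README.
COLOR_KEYS = (
    "foreground_color",
    "select_foreground_color",
    "background_color",
    "select_background_color",
)


def set_colors(config_path: Path, **colors: str) -> dict:
    """Update color entries in config.json, keeping every other setting as it is."""
    config = json.loads(config_path.read_text(encoding="utf-8"))
    for key, value in colors.items():
        if key not in COLOR_KEYS:
            raise KeyError(f"unknown color entry: {key}")
        if not re.fullmatch(r"#[0-9A-Fa-f]{6}", value):
            raise ValueError(f"expected a color like #CCCCCC, got: {value}")
        config[key] = value
    config_path.write_text(
        json.dumps(config, ensure_ascii=False, indent=4), encoding="utf-8"
    )
    return config
```

For example, `set_colors(Path("config.json"), background_color="#000000", foreground_color="#EEEEEE")` changes only those two entries.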
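- When the specified generation length (`生成文の長さ`) is at or above the context size limit (`コンテキストサイズ上限`), the generation length is now shortened automatically.
  - If you were getting text unrelated to the input area after updating, this change fixes it.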
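The README does not say by how much the generation length is shortened. One plausible reading is sketched below, where some room is always left in the context for the prompt. `clamp_generation_length` and its `reserve` parameter are hypothetical, and because of the reserve this sketch also trims requests that fall slightly below the limit, which goes a little beyond the behavior described above.

```python
def clamp_generation_length(requested: int, context_size: int, reserve: int = 512) -> int:
    """Shorten the generation length so it fits inside the context window.

    `reserve` is an assumed number of tokens kept free for the prompt.
    """
    longest_allowed = max(1, context_size - reserve)
    return min(requested, longest_allowed)
```

With these assumptions, requesting 4096 tokens with a 4096-token context is cut to 3584, while the same request with a 6144-token context is left unchanged.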

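- How to generate long text with a generation length of 4096 or more:
  - Switch the model from Vecteus (4K) to LightChatAssistant or Ninja.
  - Set the context size limit to 6144 or more.
  - Set the generation length to 4096 or more.
  - Raising the context size limit also increases VRAM usage, so if the model fails to run, lower its GPU layer count (such as L33).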

- If a `sample/user.json` file exists, it is now added as a user menu in the same way as the other `sample/*.json` files.

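- Installation and Updates
  - Detailed installation and update instructions, plus troubleshooting.

- First Generation
  - A tutorial for EasyNovelAssistant.

- Choosing a Model and GPU Layer Count
  - How to use the wide range of models efficiently.

- Tips
  - Miscellaneous small tips.

- Creating Videos
  - Easily create subtitled videos by assigning images to text-to-speech audio.

- Changelog
  - Past update history.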

The contents of this repository are under the MIT License, except for the following.

- The installer displays a list of the items it downloads.

- `EasyNovelAssistant/setup/res/tkinter-PythonSoftwareFoundationLicense.zip` is under the Python Software Foundation License.

- The JVNV derivatives downloaded by Style-Bert-VITS2 are under CC BY-SA 4.0 DEED.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for EasyNovelAssistant

Similar Open Source Tools

EasyNovelAssistant

EasyNovelAssistant is a simple novel generation assistant powered by a lightweight and uncensored Japanese local LLM 'LightChatAssistant-TypeB'. It allows for perpetual generation with 'Generate forever' feature, stacking up lucky gacha draws. It also supports text-to-speech. Users can directly utilize KoboldCpp and Style-Bert-VITS2 internally or use EasySdxlWebUi to generate images while using the tool. The tool is designed for local novel generation with a focus on ease of use and flexibility.

LinguaGacha

LinguaGacha is a next-generation text translator using AI technology. It supports one-click translation of novels, games, subtitles, and other text content in multiple languages such as Chinese, English, Japanese, Korean, and Russian. The tool offers fast translation speed, automatic terminology generation, high translation quality, and accurate text style and code reproduction. It is recommended for creating embedded Chinese translations and is compatible with various AI models and interfaces.

app-platform

AppPlatform is an advanced large-scale model application engineering aimed at simplifying the development process of AI applications through integrated declarative programming and low-code configuration tools. This project provides a powerful and scalable environment for software engineers and product managers to support the full-cycle development of AI applications from concept to deployment. The backend module is based on the FIT framework, utilizing a plugin-based development approach, including application management and feature extension modules. The frontend module is developed using React framework, focusing on core modules such as application development, application marketplace, intelligent forms, and plugin management. Key features include low-code graphical interface, powerful operators and scheduling platform, and sharing and collaboration capabilities. The project also provides detailed instructions for setting up and running both backend and frontend environments for development and testing.

HiveChat

HiveChat is an AI chat application designed for small and medium teams. It supports various models such as DeepSeek, Open AI, Claude, and Gemini. The tool allows easy configuration by one administrator for the entire team to use different AI models. It supports features like email or Feishu login, LaTeX and Markdown rendering, DeepSeek mind map display, image understanding, AI agents, cloud data storage, and integration with multiple large model service providers. Users can engage in conversations by logging in, while administrators can configure AI service providers, manage users, and control account registration. The technology stack includes Next.js, Tailwindcss, Auth.js, PostgreSQL, Drizzle ORM, and Ant Design.

chatgpt-web-sea

ChatGPT Web Sea is an open-source project based on ChatGPT-web for secondary development. It supports all models that comply with the OpenAI interface standard, allows for model selection, configuration, and extension, and is compatible with OneAPI. The tool includes a Chinese ChatGPT tuning guide, supports file uploads, and provides model configuration options. Users can interact with the tool through a web interface, configure models, and perform tasks such as model selection, API key management, and chat interface setup. The project also offers Docker deployment options and instructions for manual packaging.

wechat-bot

WeChat Bot is a simple and easy-to-use WeChat robot based on chatgpt and wechaty. It can help you automatically reply to WeChat messages or manage WeChat groups/friends. The tool requires configuration of AI services such as Xunfei, Kimi, or ChatGPT. Users can customize the tool to automatically reply to group or private chat messages based on predefined conditions. The tool supports running in Docker for easy deployment and provides a convenient way to interact with various AI services for WeChat automation.

video2blog

video2blog is an open-source project aimed at converting videos into textual notes. The tool follows a process of extracting video information using yt-dlp, downloading the video, downloading subtitles if available, translating subtitles if not in Chinese, generating Chinese subtitles using whisper if no subtitles exist, converting subtitles to articles using gemini, and manually inserting images from the video into the article. The tool provides a solution for creating blog content from video resources, enhancing accessibility and content creation efficiency.

new-api

New API is an open-source project based on One API with additional features and improvements. It offers a new UI interface, supports Midjourney-Proxy(Plus) interface, online recharge functionality, model-based charging, channel weight randomization, data dashboard, token-controlled models, Telegram authorization login, Suno API support, Rerank model integration, and various third-party models. Users can customize models, retry channels, and configure caching settings. The deployment can be done using Docker with SQLite or MySQL databases. The project provides documentation for Midjourney and Suno interfaces, and it is suitable for AI enthusiasts and developers looking to enhance AI capabilities.

AMchat

AMchat is a large language model that integrates advanced math concepts, exercises, and solutions. The model is based on the InternLM2-Math-7B model and is specifically designed to answer advanced math problems. It provides a comprehensive dataset that combines Math and advanced math exercises and solutions. Users can download the model from ModelScope or OpenXLab, deploy it locally or using Docker, and even retrain it using XTuner for fine-tuning. The tool also supports LMDeploy for quantization, OpenCompass for evaluation, and various other features for model deployment and evaluation. The project contributors have provided detailed documentation and guides for users to utilize the tool effectively.

chatgpt-web

ChatGPT Web is a web application that provides access to the ChatGPT API. It offers two non-official methods to interact with ChatGPT: through the ChatGPTAPI (using the `gpt-3.5-turbo-0301` model) or through the ChatGPTUnofficialProxyAPI (using a web access token). The ChatGPTAPI method is more reliable but requires an OpenAI API key, while the ChatGPTUnofficialProxyAPI method is free but less reliable. The application includes features such as user registration and login, synchronization of conversation history, customization of API keys and sensitive words, and management of users and keys. It also provides a user interface for interacting with ChatGPT and supports multiple languages and themes.

wealth-tracker

Wealth Tracker is a personal finance management tool designed to help users track their income, expenses, and investments in one place. With intuitive features and customizable categories, users can easily monitor their financial health and make informed decisions. The tool provides detailed reports and visualizations to analyze spending patterns and set financial goals. Whether you are budgeting, saving for a big purchase, or planning for retirement, Wealth Tracker offers a comprehensive solution to manage your money effectively.

mcp-svelte-docs

A Model Context Protocol (MCP) server providing authoritative Svelte 5 and SvelteKit definitions extracted directly from TypeScript declarations. Get precise syntax, parameters, and examples for all Svelte 5 concepts through a single, unified interface. The server offers a 'svelte_definition' tool that covers various Svelte 5 runes, modern features, event handling, migration guidance, TypeScript interfaces, and advanced patterns. It aims to provide up-to-date, type-safe, and comprehensive documentation for Svelte developers.

new-api

New API is a next-generation large model gateway and AI asset management system that provides a wide range of features, including a new UI interface, multi-language support, online recharge function, key query for usage quota, compatibility with the original One API database, model charging by usage count, channel weighted randomization, data dashboard, token grouping and model restrictions, support for various authorization login methods, support for Rerank models, OpenAI Realtime API, Claude Messages format, reasoning effort setting, content reasoning, user-specific model rate limiting, request format conversion, cache billing support, and various model support such as gpts, Midjourney-Proxy, Suno API, custom channels, Rerank models, Claude Messages format, Dify, and more.

awesome-rag

Awesome RAG is a curated list of retrieval-augmented generation (RAG) in large language models. It includes papers, surveys, general resources, lectures, talks, tutorials, workshops, tools, and other collections related to retrieval-augmented generation. The repository aims to provide a comprehensive overview of the latest advancements, techniques, and applications in the field of RAG.

emohaa-free-api

Emohaa AI Free API is a free API that allows you to access the Emohaa AI chatbot. Emohaa AI is a powerful chatbot that can understand and respond to a wide range of natural language queries. It can be used for a variety of purposes, such as customer service, information retrieval, and language translation. The Emohaa AI Free API is easy to use and can be integrated into any application. It is a great way to add AI capabilities to your projects without having to build your own chatbot from scratch.

For similar tasks

EasyNovelAssistant

EasyNovelAssistant is a simple novel generation assistant powered by a lightweight and uncensored Japanese local LLM 'LightChatAssistant-TypeB'. It allows for perpetual generation with 'Generate forever' feature, stacking up lucky gacha draws. It also supports text-to-speech. Users can directly utilize KoboldCpp and Style-Bert-VITS2 internally or use EasySdxlWebUi to generate images while using the tool. The tool is designed for local novel generation with a focus on ease of use and flexibility.

tock

Tock is an open conversational AI platform for building bots. It offers a natural language processing open source stack compatible with various tools, a user interface for building stories and analytics, a conversational DSL for different programming languages, built-in connectors for text/voice channels, toolkits for custom web/mobile integration, and the ability to deploy anywhere in the cloud or on-premise with Docker.

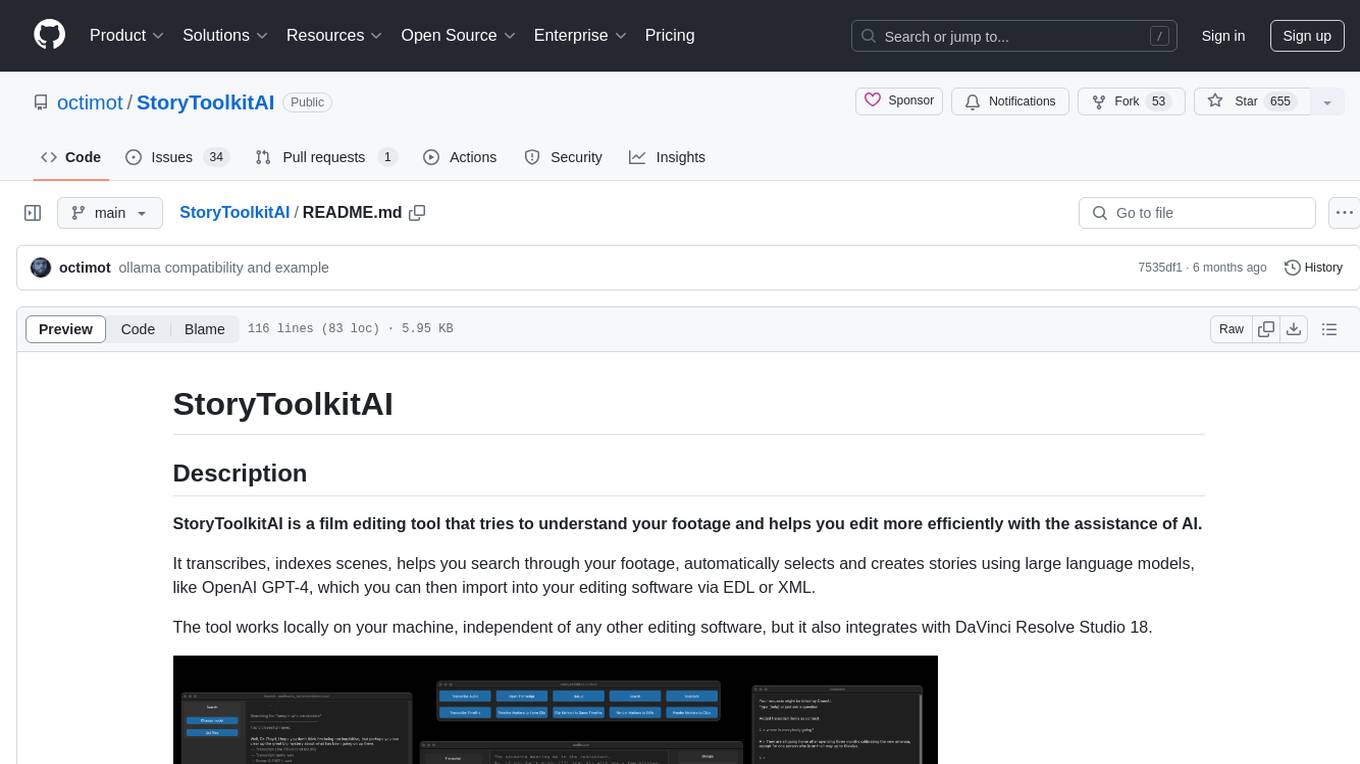

StoryToolKit

StoryToolkitAI is a film editing tool that utilizes AI to transcribe, index scenes, search through footage, and create stories. It offers features such as automatic transcription, translation, story creation, speaker detection, project file management, and more. The tool works locally on your machine and integrates with DaVinci Resolve Studio 18. It aims to streamline the editing process by leveraging AI capabilities and enhancing user efficiency.

StoryToolkitAI

StoryToolkitAI is a film editing tool that utilizes AI to transcribe, index scenes, search through footage, and create stories. It offers features like full video indexing, automatic transcriptions and translations, compatibility with OpenAI GPT and ollama, story editor for screenplay writing, speaker detection, project file management, and more. It integrates with DaVinci Resolve Studio 18 and offers planned features like automatic topic classification and integration with other AI tools. The tool is developed by Octavian Mot and is actively being updated with new features based on user needs and feedback.

aichildedu

AICHILDEDU is a microservice-based AI education platform for children that integrates LLMs, image generation, and speech synthesis to provide personalized storybook creation, intelligent conversational learning, and multimedia content generation. It offers features like personalized story generation, educational quiz creation, multimedia integration, age-appropriate content, multi-language support, user management, parental controls, and asynchronous processing. The platform follows a microservice architecture with components like API Gateway, User Service, Content Service, Learning Service, and AI Services. Technologies used include Python, FastAPI, PostgreSQL, MongoDB, Redis, LangChain, OpenAI GPT models, TensorFlow, PyTorch, Transformers, MinIO, Elasticsearch, Docker, Docker Compose, and JWT-based authentication.

Jailbreaks

Jailbreaks is a repository dedicated to organizing and curating models suitable for NSFW writing. It serves as a collection of resources for writers looking to explore adult content in a structured manner.

narratrix

NarratrixAI is an AI-powered tabletop roleplaying platform that leverages AI to create dynamic, responsive, and immersive storytelling experiences. It allows users to create their own stories, use it as character chat, or as a full tabletop RPG experience. The platform features a powerful chat system, flexible AI integration, rich character management, powerful storytelling tools, and developer-friendly customization options. Narratrix supports various AI providers through a manifest system and is built with Tauri for native performance across Windows, macOS, and Linux platforms.

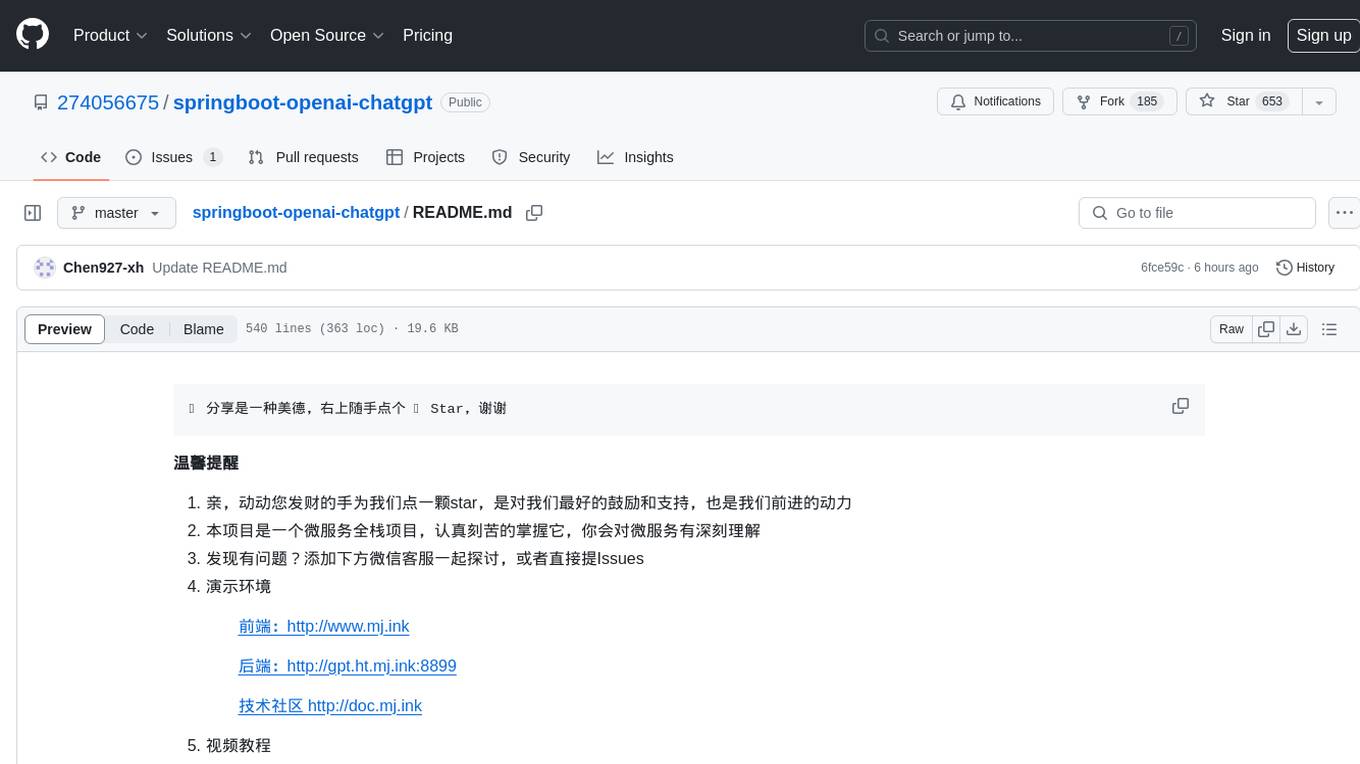

springboot-openai-chatgpt

The springboot-openai-chatgpt repository is an open-source project for a super AI brain that utilizes GPT technology to quickly generate language content such as copies, love letters, and questions. Users can input keywords to enhance work efficiency and creativity. The AI brain combines powerful question-answering systems and knowledge graphs to provide comprehensive and accurate answers. It supports programming tasks, generates code using GPT, and continuously strengthens its capabilities with growing data to provide superior intelligent applications.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features: * Self-contained, with no need for a DBMS or cloud service. * OpenAPI interface, easy to integrate with existing infrastructure (e.g Cloud IDE). * Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.