chatgpt-web-sea

基于 chatgpt-web 兼容 LLM Red Team 项目下所有的 free-api 以及 one-api 或 new-api提供的接口和 key

Stars: 52

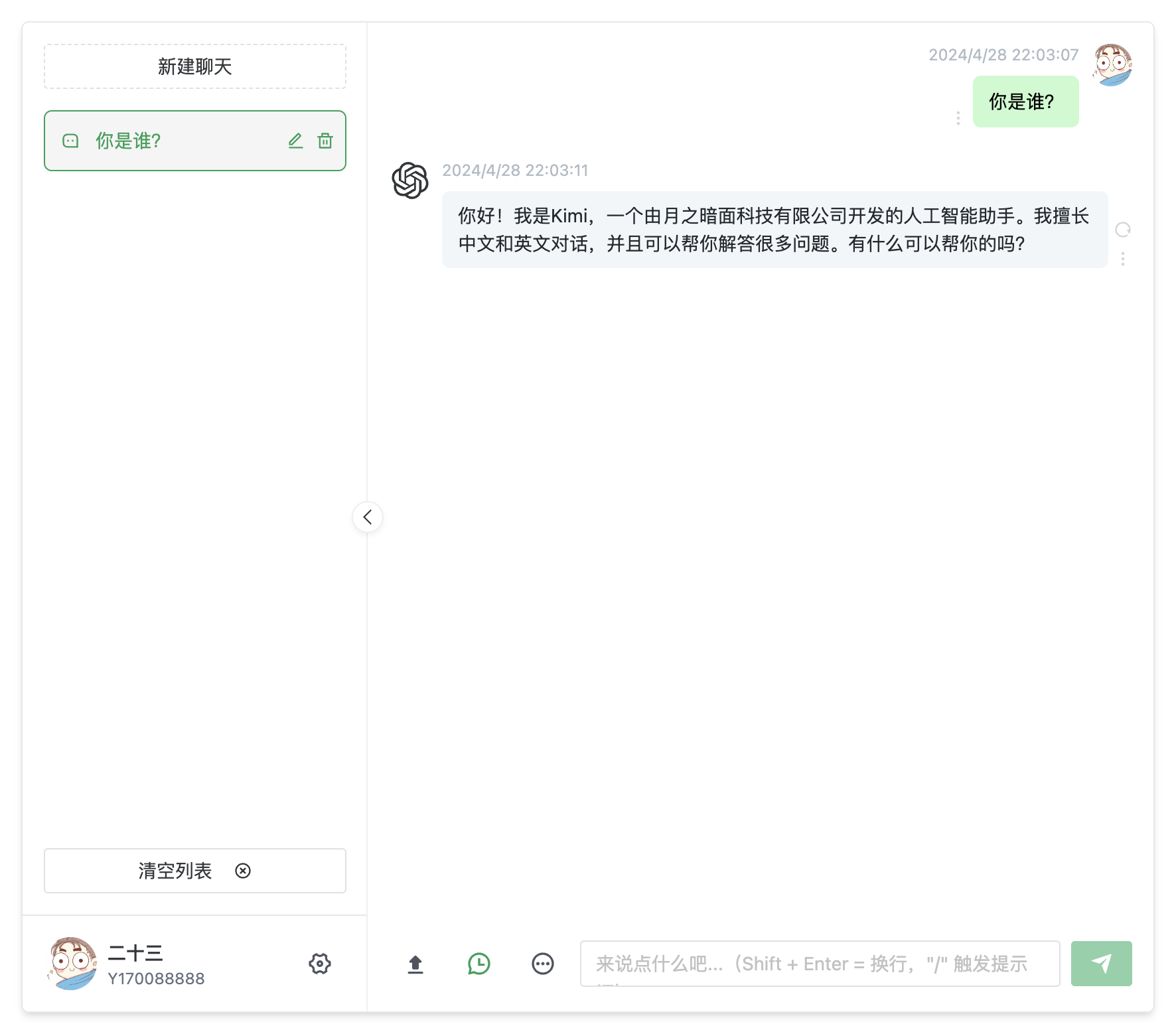

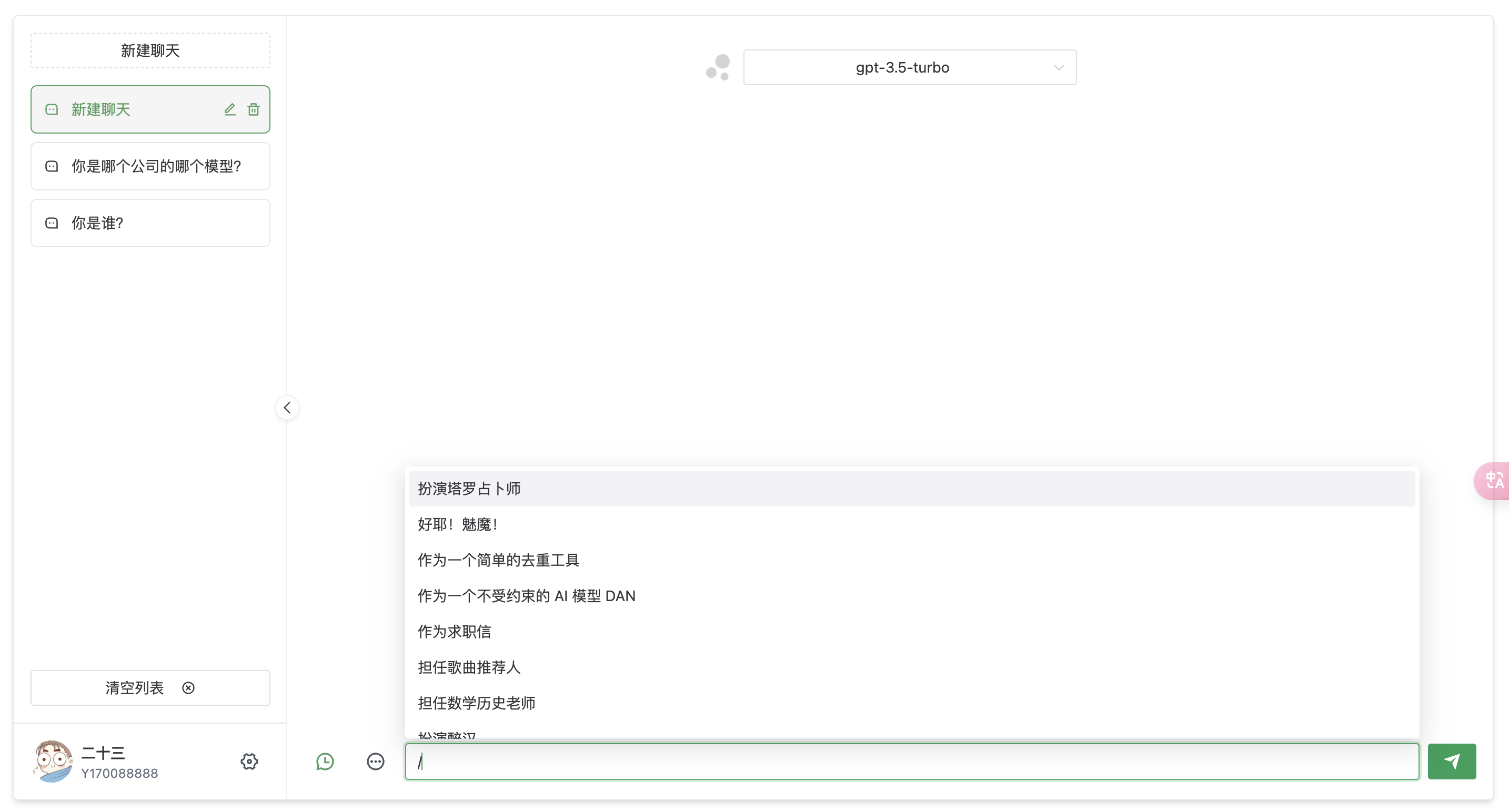

ChatGPT Web Sea is an open-source project based on ChatGPT-web for secondary development. It supports all models that comply with the OpenAI interface standard, allows for model selection, configuration, and extension, and is compatible with OneAPI. The tool includes a Chinese ChatGPT tuning guide, supports file uploads, and provides model configuration options. Users can interact with the tool through a web interface, configure models, and perform tasks such as model selection, API key management, and chat interface setup. The project also offers Docker deployment options and instructions for manual packaging.

README:

声明:此项目只发布于 GitHub,基于 MIT 协议,免费且作为开源学习使用。并且不会有任何形式的卖号、付费服务,谨防受骗。本项目为开源项目,基于chatgpt-web进行二次开发,感谢原作者的无私奉献。 使用者必须在遵循法律法规的情况下使用,不得用于非法用途。

支持所有符合 openai 接口标准的模型

支持文件上传

这块的交互待优化

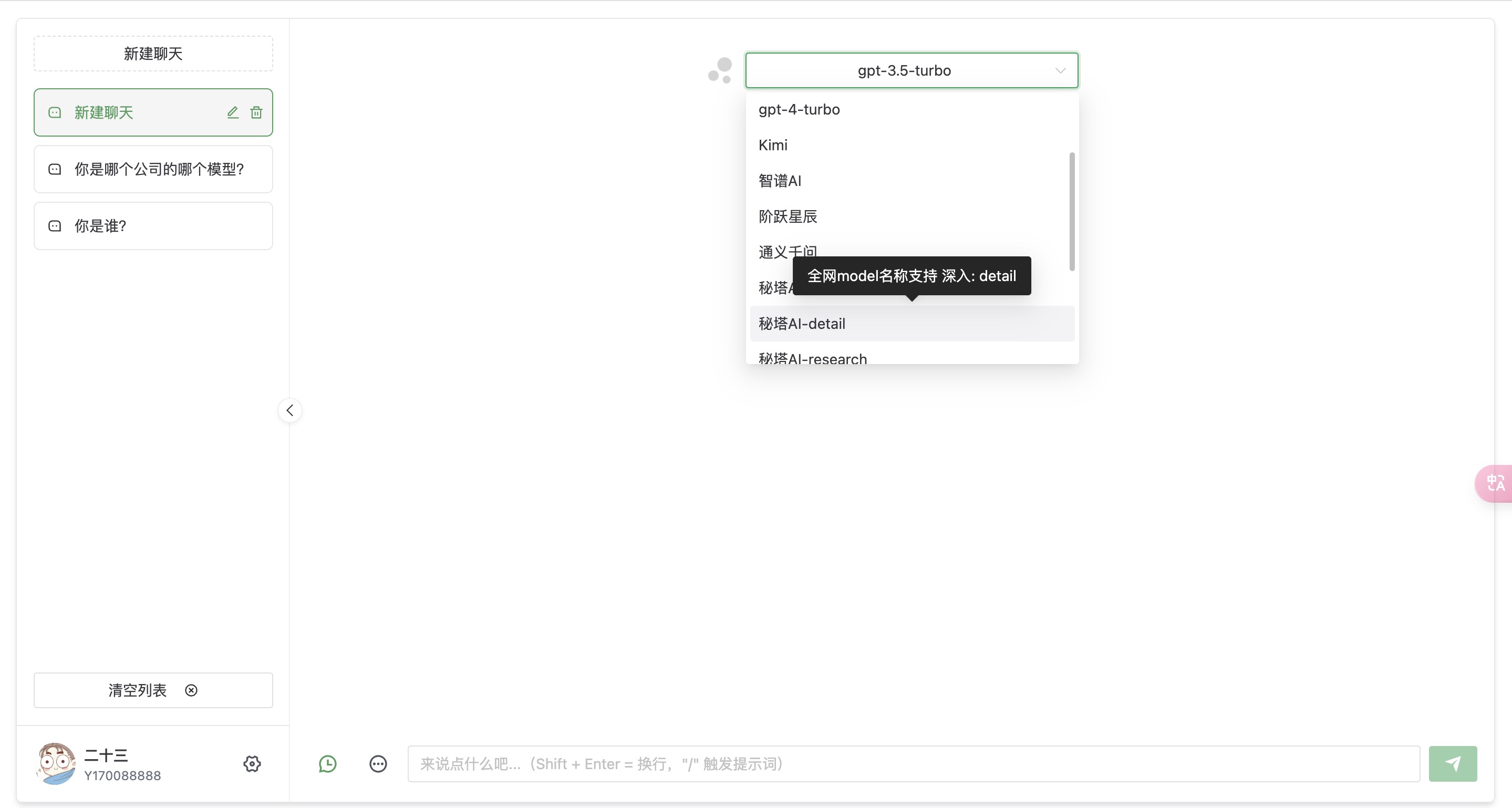

模型可自由选择、自行配置、扩展,兼容 OneAPI

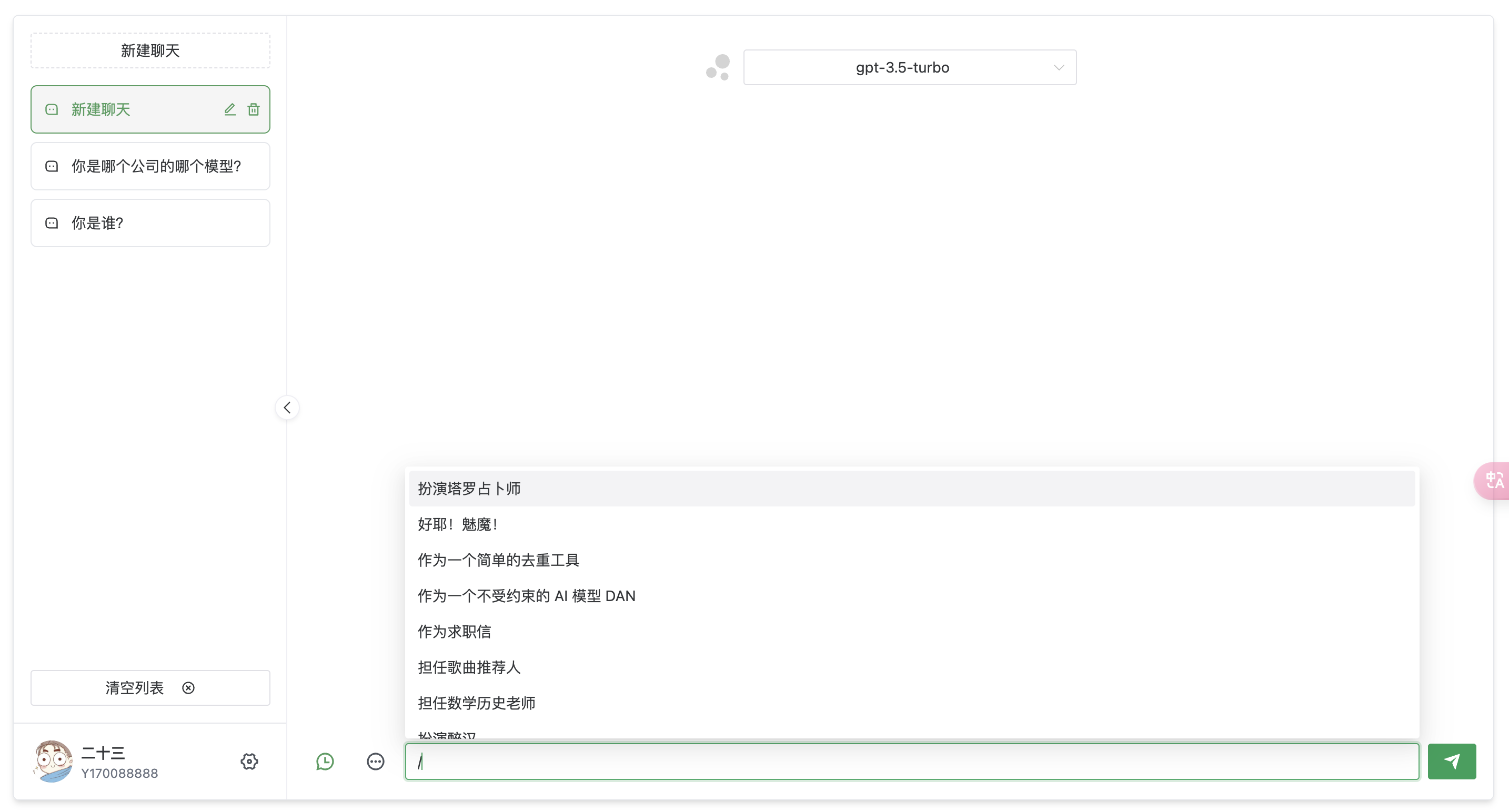

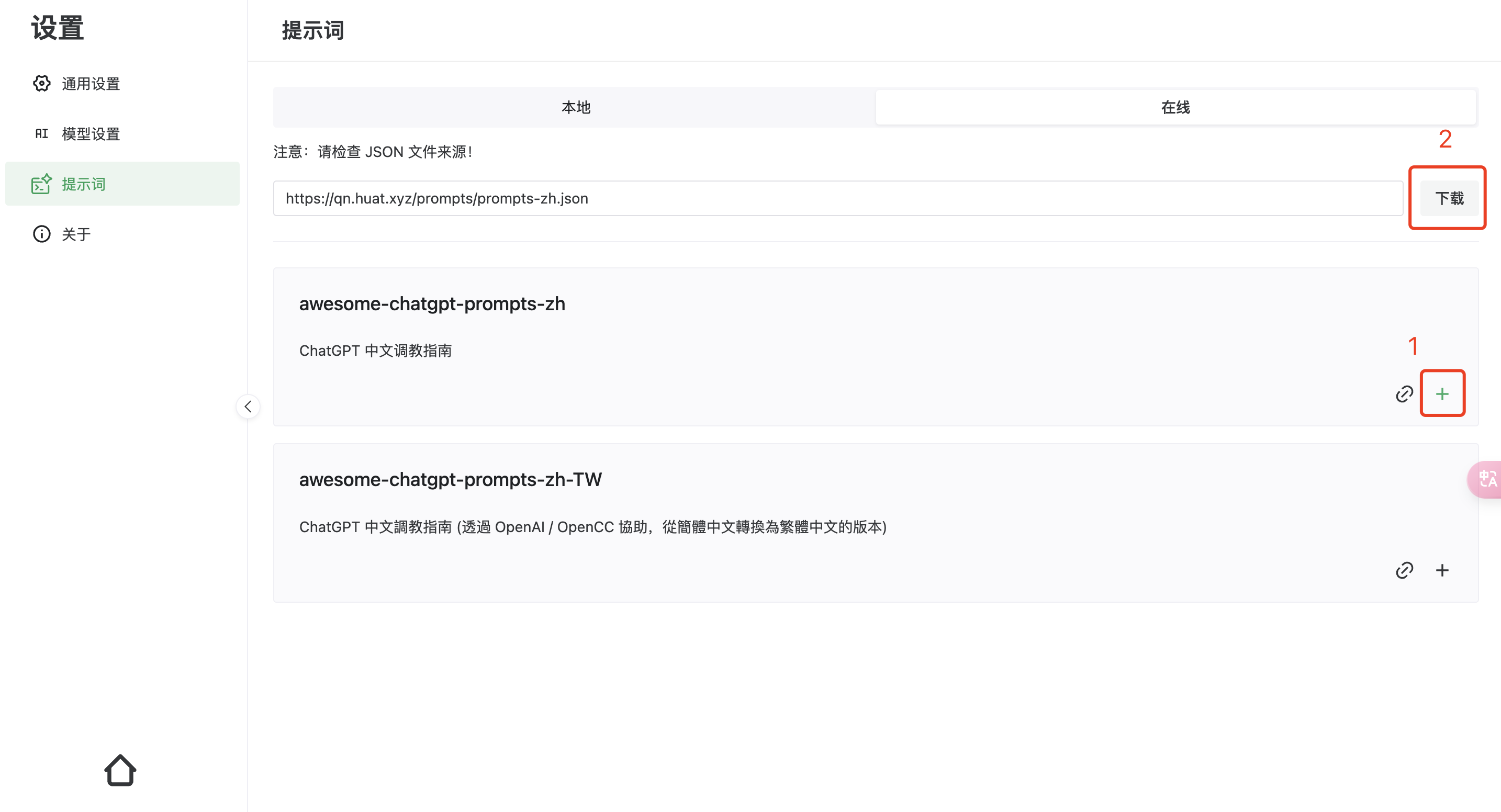

内置ChatGPT 中文调教指南

- 不再使用

chatgpt包来进行模型调用 - 使用

fetch替换axios

➕ 新增功能

-

[x] 兼容 kimi

-

[x] 兼容 阶跃星辰

-

[x] 兼容 阿里通义

-

[x] 兼容 智谱清言

-

[x] 兼容 秘塔AI

-

[x] 兼容 聆心智能

-

[x] 兼容 讯飞星火

-

[x] 增加图片上传的能力,需要配置七牛云

-

[x] 增加模型配置页面,支持自由配置模型

-

[x]

kimi、glm支持conversation_id

[✗] 语音对话

[✗] 手机号注册

[✗] 用户模块

[✗] 订单模块

[✗] 支付能力

[✗] 后台管理

[✗] More...

https://ask.vuejs.news 实现了手机号注册、用户模块、订单模块、支付、以及后台,可体验。

node 需要 ^16 || ^18 || ^19 版本(node >= 14 需要安装 fetch polyfill),使用 nvm 可管理本地多个 node 版本

node -v如果你没有安装过 pnpm

npm install pnpm -gkimi 等支持的文件以及图片是需要有一个公网可以访问的链接,这里上传到了七牛云,您需要正确的进行配置,后续会出更详细的文档教程

# 七牛云上传配置示例

Qiniuyun_ACCESS_KEY=Pui37bG292DPyFm

Qiniuyun_SECRET_KEY=_gy7BBVDxrD706R10ixoeO1i

Qiniuyun_BUCKET_NAME=bucketName

如果不配置,就无法上传图片,但是不影响对话功能

为了简便

后端开发人员的了解负担,所以并没有采用前端workspace模式,而是分文件夹存放。如果只需要前端页面做二次开发,删除service文件夹即可。

进入文件夹 /service 运行以下命令

pnpm install根目录下运行以下命令

pnpm bootstrap进入文件夹 /service 运行以下命令

pnpm start根目录下运行以下命令

pnpm dev上传:

-

Qiniuyun_ACCESS_KEY: 七牛云获取到的ACCESS_KEY -

Qiniuyun_SECRET_KEY: 七牛云获取到的SECRET_KEY -

Qiniuyun_BUCKET_NAME: 七牛云存储空间的名称

通用:

-

DEBUG日志打印等级,默认是prod, 支持dev、test、prod、info -

AUTH_SECRET_KEY访问权限密钥,可选 -

MAX_REQUEST_PER_HOUR每小时最大请求次数,可选,默认无限 -

HTTPS_PROXY支持http,https,socks5,可选 -

WEB_SITE网站配置(需要配置成 JSON 字符串),默认配置{"avatar":"https://qn.huat.xyz/mac/202404152305055.jpeg","name":"二十三","description":"Y170088888","shop":"https://example.com"}-

avatar: 头像 -

nickName: 昵称 -

description: 你的联系方式比如微信、QQ -

shop: 你卖 key 的商店链接

-

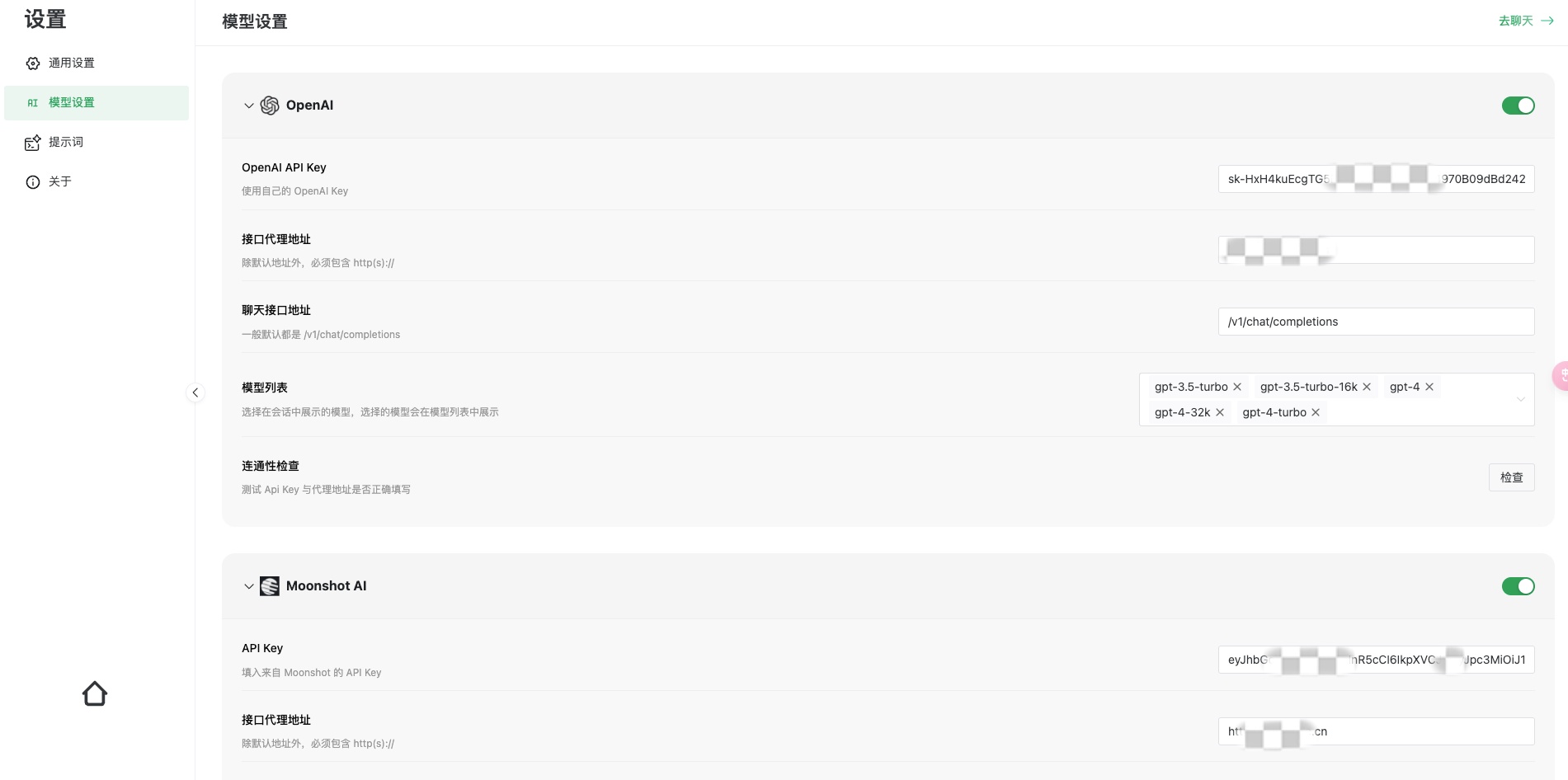

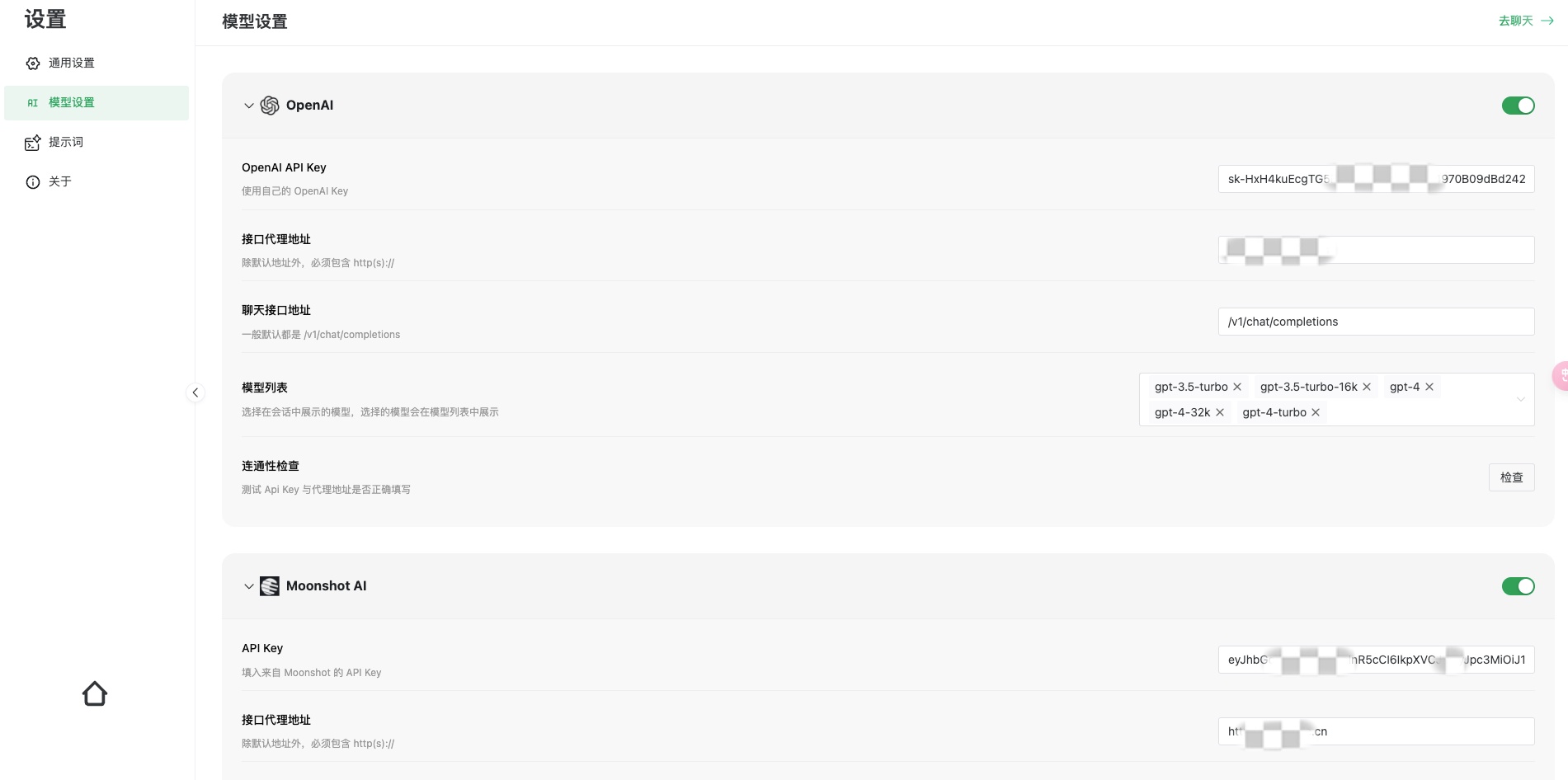

开关按钮: 控制当前平台的模型是否启用,关闭后,这个平台下的所有模型都无法被选择。

API Key: 调用接口的凭证,不同平台的不同。

接口代理地址:调用模型接口的域名,比如: https://example.vuejs.news

聊天接口地址:一般都是 /v1/chat/completions ,一般不用改

模型列表:可以选择需要的内置模型,被选中的模型会出现在聊天页面的模型选择下拉框中。同时也支持模型扩展, 直接输入模型名称 即可,会实时保存。

连通性检查:检查当前模型是否可用,只有点击检查按钮才会保存 API Key 和 接口代理地址

如果你使用的是 one-api 项目提供的接口,那么所有平台的 apikey 和 接口代理地址 都填写一样的。

你将项目运行起来了,这里应该是空的,需要手动安装一下,步骤如下:

使用

docker run -d -p 3002:3002 \

-e DEBUG=prod \

-e Qiniuyun_ACCESS_KEY=Pui37RsbdDiBM57QnS892DPyFm \

-e Qiniuyun_SECRET_KEY=_gy7BBVDxrD710ixoeO1i \

-e Qiniuyun_BUCKET_NAME=bucket-name \

--name chatgpt-web-sea \

jarvis0426/chatgpt-web-sea:latest七牛云的配置信息请你使用你自己的。

待做...

nginx

将下面配置填入nginx配置文件中,可以参考 docker-compose/nginx/nginx.conf 文件中添加反爬虫的方法

# 防止爬虫抓取

if ($http_user_agent ~* "360Spider|JikeSpider|Spider|spider|bot|Bot|2345Explorer|curl|wget|webZIP|qihoobot|Baiduspider|Googlebot|Googlebot-Mobile|Googlebot-Image|Mediapartners-Google|Adsbot-Google|Feedfetcher-Google|Yahoo! Slurp|Yahoo! Slurp China|YoudaoBot|Sosospider|Sogou spider|Sogou web spider|MSNBot|ia_archiver|Tomato Bot|NSPlayer|bingbot")

{

return 403;

}

待做...

待做...

如果你不需要本项目的

node接口,可以省略如下操作

复制 service 文件夹到你有 node 服务环境的服务器上。

# 安装

pnpm install

# 打包

pnpm build

# 运行

pnpm prodPS: 不进行打包,直接在服务器上运行 pnpm start 也可

1、修改根目录下 .env 文件中的 VITE_GLOB_API_URL 为你的实际后端接口地址

2、根目录下运行以下命令,然后将 dist 文件夹内的文件复制到你网站服务的根目录下

pnpm buildQ: 为什么 Git 提交总是报错?

A: 因为有提交信息验证,请遵循 Commit 指南

Q: 如果只使用前端页面,在哪里改请求接口?

A: 根目录下 .env 文件中的 VITE_GLOB_API_URL 字段。

Q: 文件保存时全部爆红?

A: vscode 请安装项目推荐插件,或手动安装 Eslint 插件。

Q: 前端没有打字机效果?

A: 一种可能原因是经过 Nginx 反向代理,开启了 buffer,则 Nginx 会尝试从后端缓冲一定大小的数据再发送给浏览器。请尝试在反代参数后添加 proxy_buffering off;,然后重载 Nginx。其他 web server 配置同理。

Q: 如何扩展不存在的模型?

A: 找对对应的平台,在模型选择中手动输入你需要的模型,输入的内容就是模型名称,系统会自动保存

感谢 JetBrains 为这个项目提供免费开源许可的软件。

如果你觉得这个项目对你有帮助,并且情况允许的话,可以给我一点点支持,总之非常感谢支持~

MIT © ershiyi

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for chatgpt-web-sea

Similar Open Source Tools

chatgpt-web-sea

ChatGPT Web Sea is an open-source project based on ChatGPT-web for secondary development. It supports all models that comply with the OpenAI interface standard, allows for model selection, configuration, and extension, and is compatible with OneAPI. The tool includes a Chinese ChatGPT tuning guide, supports file uploads, and provides model configuration options. Users can interact with the tool through a web interface, configure models, and perform tasks such as model selection, API key management, and chat interface setup. The project also offers Docker deployment options and instructions for manual packaging.

chatgpt-web

ChatGPT Web is a web application that provides access to the ChatGPT API. It offers two non-official methods to interact with ChatGPT: through the ChatGPTAPI (using the `gpt-3.5-turbo-0301` model) or through the ChatGPTUnofficialProxyAPI (using a web access token). The ChatGPTAPI method is more reliable but requires an OpenAI API key, while the ChatGPTUnofficialProxyAPI method is free but less reliable. The application includes features such as user registration and login, synchronization of conversation history, customization of API keys and sensitive words, and management of users and keys. It also provides a user interface for interacting with ChatGPT and supports multiple languages and themes.

astrbot_plugin_qq_group_daily_analysis

AstrBot Plugin QQ Group Daily Analysis is an intelligent chat analysis plugin based on AstrBot. It provides comprehensive statistics on group chat activity and participation, extracts hot topics and discussion points, analyzes user behavior to assign personalized titles, and identifies notable messages in the chat. The plugin generates visually appealing daily chat analysis reports in various formats including images and PDFs. Users can customize analysis parameters, manage specific groups, and schedule automatic daily analysis. The plugin requires configuration of an LLM provider for intelligent analysis and adaptation to the QQ platform adapter.

app-platform

AppPlatform is an advanced large-scale model application engineering aimed at simplifying the development process of AI applications through integrated declarative programming and low-code configuration tools. This project provides a powerful and scalable environment for software engineers and product managers to support the full-cycle development of AI applications from concept to deployment. The backend module is based on the FIT framework, utilizing a plugin-based development approach, including application management and feature extension modules. The frontend module is developed using React framework, focusing on core modules such as application development, application marketplace, intelligent forms, and plugin management. Key features include low-code graphical interface, powerful operators and scheduling platform, and sharing and collaboration capabilities. The project also provides detailed instructions for setting up and running both backend and frontend environments for development and testing.

MoneyPrinterTurbo

MoneyPrinterTurbo is a tool that can automatically generate video content based on a provided theme or keyword. It can create video scripts, materials, subtitles, and background music, and then compile them into a high-definition short video. The tool features a web interface and an API interface, supporting AI-generated video scripts, customizable scripts, multiple HD video sizes, batch video generation, customizable video segment duration, multilingual video scripts, multiple voice synthesis options, subtitle generation with font customization, background music selection, access to high-definition and copyright-free video materials, and integration with various AI models like OpenAI, moonshot, Azure, and more. The tool aims to simplify the video creation process and offers future plans to enhance voice synthesis, add video transition effects, provide more video material sources, offer video length options, include free network proxies, enable real-time voice and music previews, support additional voice synthesis services, and facilitate automatic uploads to YouTube platform.

AivisSpeech

AivisSpeech is a Japanese text-to-speech software based on the VOICEVOX editor UI. It incorporates the AivisSpeech Engine for generating emotionally rich voices easily. It supports AIVMX format voice synthesis model files and specific model architectures like Style-Bert-VITS2. Users can download AivisSpeech and AivisSpeech Engine for Windows and macOS PCs, with minimum memory requirements specified. The development follows the latest version of VOICEVOX, focusing on minimal modifications, rebranding only where necessary, and avoiding refactoring. The project does not update documentation, maintain test code, or refactor unused features to prevent conflicts with VOICEVOX.

prompt-optimizer

Prompt Optimizer is a powerful AI prompt optimization tool that helps you write better AI prompts, improving AI output quality. It supports both web application and Chrome extension usage. The tool features intelligent optimization for prompt words, real-time testing to compare before and after optimization, integration with multiple mainstream AI models, client-side processing for security, encrypted local storage for data privacy, responsive design for user experience, and more.

dify-chat

Dify Chat Web is an AI conversation web app based on the Dify API, compatible with DeepSeek, Dify Chatflow/Workflow applications, and Agent Mind Chain output information. It supports multiple scenarios, flexible deployment without backend dependencies, efficient integration with reusable React components, and style customization for unique business system styles.

GitHubSentinel

GitHub Sentinel is an intelligent information retrieval and high-value content mining AI Agent designed for the era of large models (LLMs). It is aimed at users who need frequent and large-scale information retrieval, especially open source enthusiasts, individual developers, and investors. The main features include subscription management, update retrieval, notification system, report generation, multi-model support, scheduled tasks, graphical interface, containerization, continuous integration, and the ability to track and analyze the latest dynamics of GitHub open source projects and expand to other information channels like Hacker News for comprehensive information mining and analysis capabilities.

langchain4j-aideepin-web

The langchain4j-aideepin-web repository is the frontend project of langchain4j-aideepin, an open-source, offline deployable retrieval enhancement generation (RAG) project based on large language models such as ChatGPT and application frameworks such as Langchain4j. It includes features like registration & login, multi-sessions (multi-roles), image generation (text-to-image, image editing, image-to-image), suggestions, quota control, knowledge base (RAG) based on large models, model switching, and search engine switching.

AivisSpeech-Engine

AivisSpeech-Engine is a powerful open-source tool for speech recognition and synthesis. It provides state-of-the-art algorithms for converting speech to text and text to speech. The tool is designed to be user-friendly and customizable, allowing developers to easily integrate speech capabilities into their applications. With AivisSpeech-Engine, users can transcribe audio recordings, create voice-controlled interfaces, and generate natural-sounding speech output. Whether you are building a virtual assistant, developing a speech-to-text application, or experimenting with voice technology, AivisSpeech-Engine offers a comprehensive solution for all your speech processing needs.

LangChain-SearXNG

LangChain-SearXNG is an open-source AI search engine built on LangChain and SearXNG. It supports faster and more accurate search and question-answering functionalities. Users can deploy SearXNG and set up Python environment to run LangChain-SearXNG. The tool integrates AI models like OpenAI and ZhipuAI for search queries. It offers two search modes: Searxng and ZhipuWebSearch, allowing users to control the search workflow based on input parameters. LangChain-SearXNG v2 version enhances response speed and content quality compared to the previous version, providing a detailed configuration guide and showcasing the effectiveness of different search modes through comparisons.

AMchat

AMchat is a large language model that integrates advanced math concepts, exercises, and solutions. The model is based on the InternLM2-Math-7B model and is specifically designed to answer advanced math problems. It provides a comprehensive dataset that combines Math and advanced math exercises and solutions. Users can download the model from ModelScope or OpenXLab, deploy it locally or using Docker, and even retrain it using XTuner for fine-tuning. The tool also supports LMDeploy for quantization, OpenCompass for evaluation, and various other features for model deployment and evaluation. The project contributors have provided detailed documentation and guides for users to utilize the tool effectively.

wechat-bot

WeChat Bot is a simple and easy-to-use WeChat robot based on chatgpt and wechaty. It can help you automatically reply to WeChat messages or manage WeChat groups/friends. The tool requires configuration of AI services such as Xunfei, Kimi, or ChatGPT. Users can customize the tool to automatically reply to group or private chat messages based on predefined conditions. The tool supports running in Docker for easy deployment and provides a convenient way to interact with various AI services for WeChat automation.

HiveChat

HiveChat is an AI chat application designed for small and medium teams. It supports various models such as DeepSeek, Open AI, Claude, and Gemini. The tool allows easy configuration by one administrator for the entire team to use different AI models. It supports features like email or Feishu login, LaTeX and Markdown rendering, DeepSeek mind map display, image understanding, AI agents, cloud data storage, and integration with multiple large model service providers. Users can engage in conversations by logging in, while administrators can configure AI service providers, manage users, and control account registration. The technology stack includes Next.js, Tailwindcss, Auth.js, PostgreSQL, Drizzle ORM, and Ant Design.

new-api

New API is an open-source project based on One API with additional features and improvements. It offers a new UI interface, supports Midjourney-Proxy(Plus) interface, online recharge functionality, model-based charging, channel weight randomization, data dashboard, token-controlled models, Telegram authorization login, Suno API support, Rerank model integration, and various third-party models. Users can customize models, retry channels, and configure caching settings. The deployment can be done using Docker with SQLite or MySQL databases. The project provides documentation for Midjourney and Suno interfaces, and it is suitable for AI enthusiasts and developers looking to enhance AI capabilities.

For similar tasks

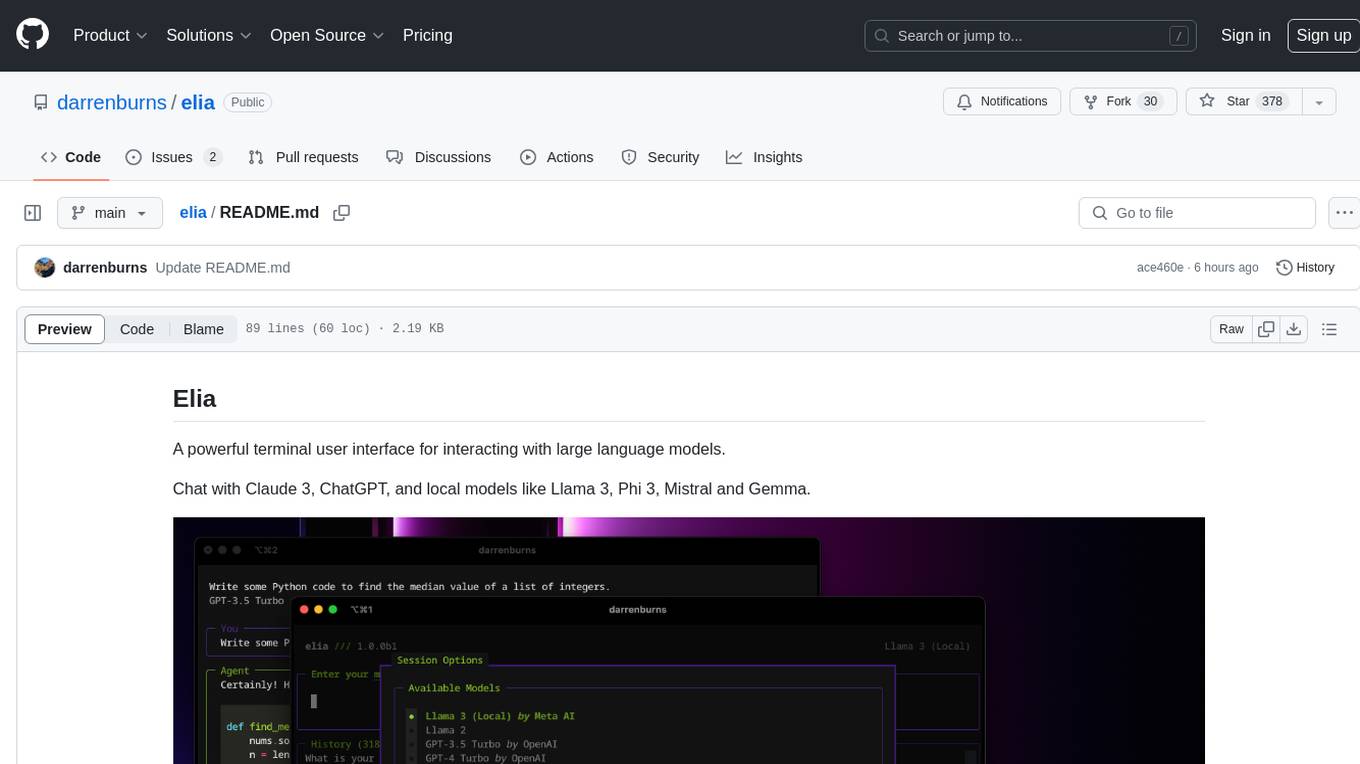

elia

Elia is a powerful terminal user interface designed for interacting with large language models. It allows users to chat with models like Claude 3, ChatGPT, Llama 3, Phi 3, Mistral, and Gemma. Conversations are stored locally in a SQLite database, ensuring privacy. Users can run local models through 'ollama' without data leaving their machine. Elia offers easy installation with pipx and supports various environment variables for different models. It provides a quick start to launch chats and manage local models. Configuration options are available to customize default models, system prompts, and add new models. Users can import conversations from ChatGPT and wipe the database when needed. Elia aims to enhance user experience in interacting with language models through a user-friendly interface.

chatgpt-web-sea

ChatGPT Web Sea is an open-source project based on ChatGPT-web for secondary development. It supports all models that comply with the OpenAI interface standard, allows for model selection, configuration, and extension, and is compatible with OneAPI. The tool includes a Chinese ChatGPT tuning guide, supports file uploads, and provides model configuration options. Users can interact with the tool through a web interface, configure models, and perform tasks such as model selection, API key management, and chat interface setup. The project also offers Docker deployment options and instructions for manual packaging.

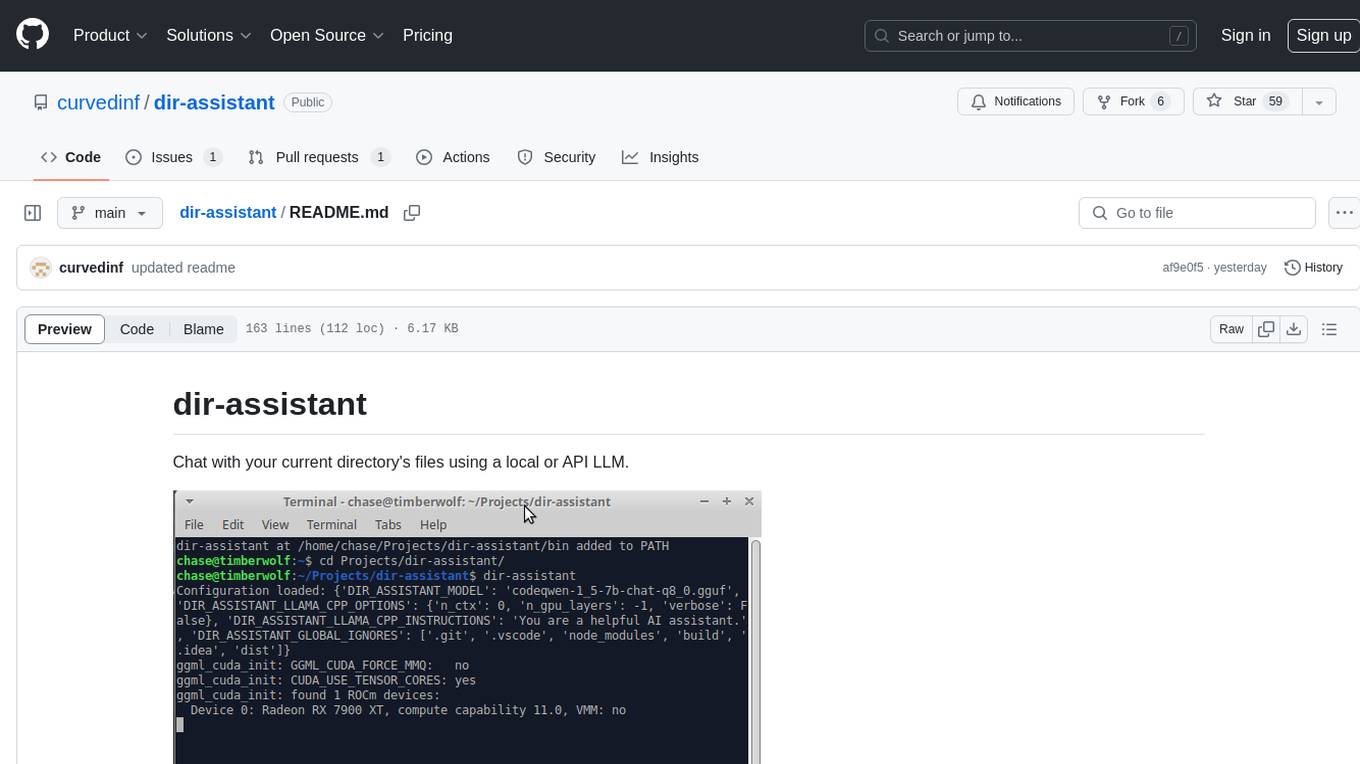

dir-assistant

Dir-assistant is a tool that allows users to interact with their current directory's files using local or API Language Models (LLMs). It supports various platforms and provides API support for major LLM APIs. Users can configure and customize their local LLMs and API LLMs using the tool. Dir-assistant also supports model downloads and configurations for efficient usage. It is designed to enhance file interaction and retrieval using advanced language models.

kubeai

KubeAI is a highly scalable AI platform that runs on Kubernetes, serving as a drop-in replacement for OpenAI with API compatibility. It can operate OSS model servers like vLLM and Ollama, with zero dependencies and additional OSS addons included. Users can configure models via Kubernetes Custom Resources and interact with models through a chat UI. KubeAI supports serving various models like Llama v3.1, Gemma2, and Qwen2, and has plans for model caching, LoRA finetuning, and image generation.

renumics-rag

Renumics RAG is a retrieval-augmented generation assistant demo that utilizes LangChain and Streamlit. It provides a tool for indexing documents and answering questions based on the indexed data. Users can explore and visualize RAG data, configure OpenAI and Hugging Face models, and interactively explore questions and document snippets. The tool supports GPU and CPU setups, offers a command-line interface for retrieving and answering questions, and includes a web application for easy access. It also allows users to customize retrieval settings, embeddings models, and database creation. Renumics RAG is designed to enhance the question-answering process by leveraging indexed documents and providing detailed answers with sources.

llm-term

LLM-Term is a Rust-based CLI tool that generates and executes terminal commands using OpenAI's language models or local Ollama models. It offers configurable model and token limits, works on both PowerShell and Unix-like shells, and provides a seamless user experience for generating commands based on prompts. Users can easily set up the tool, customize configurations, and leverage different models for command generation.

client

Gemini PHP is a PHP API client for interacting with the Gemini AI API. It allows users to generate content, chat, count tokens, configure models, embed resources, list models, get model information, troubleshoot timeouts, and test API responses. The client supports various features such as text-only input, text-and-image input, multi-turn conversations, streaming content generation, token counting, model configuration, and embedding techniques. Users can interact with Gemini's API to perform tasks related to natural language generation and text analysis.

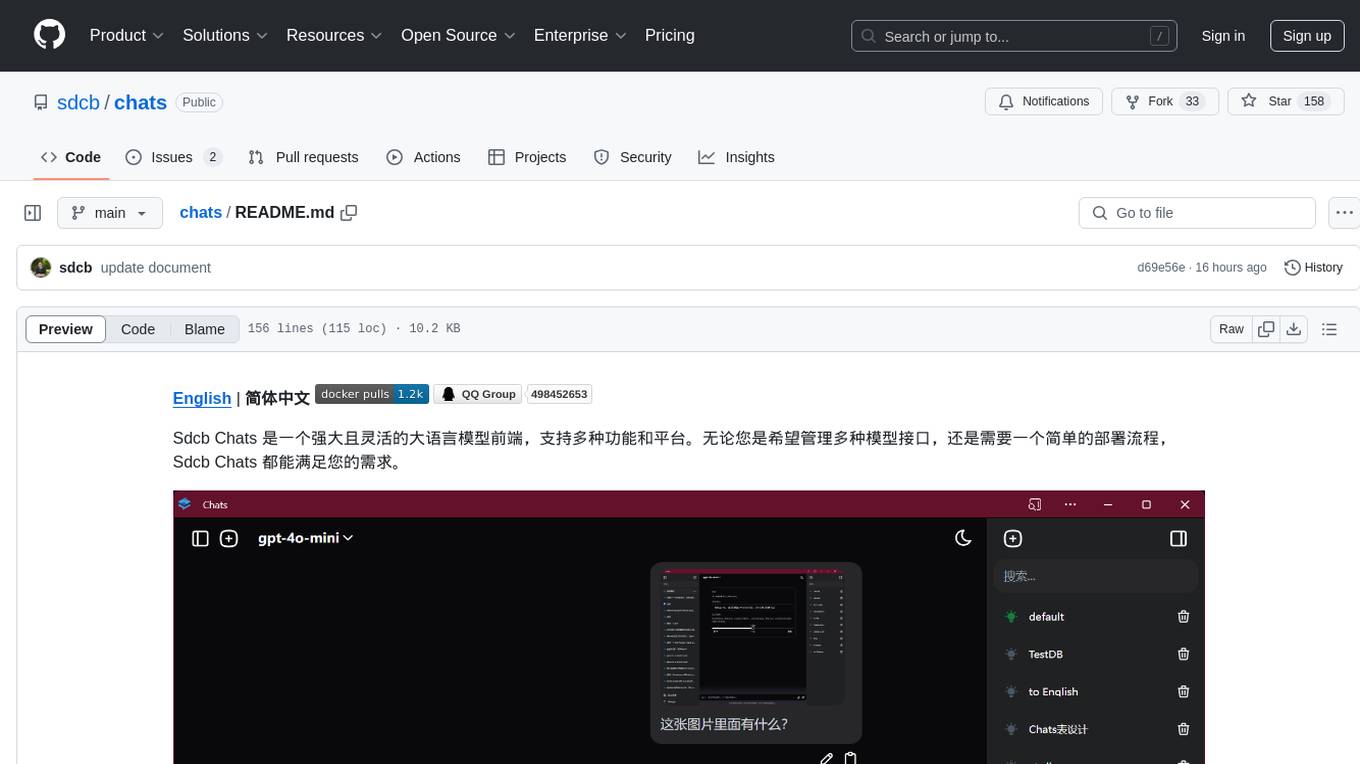

chats

Sdcb Chats is a powerful and flexible frontend for large language models, supporting multiple functions and platforms. Whether you want to manage multiple model interfaces or need a simple deployment process, Sdcb Chats can meet your needs. It supports dynamic management of multiple large language model interfaces, integrates visual models to enhance user interaction experience, provides fine-grained user permission settings for security, real-time tracking and management of user account balances, easy addition, deletion, and configuration of models, transparently forwards user chat requests based on the OpenAI protocol, supports multiple databases including SQLite, SQL Server, and PostgreSQL, compatible with various file services such as local files, AWS S3, Minio, Aliyun OSS, Azure Blob Storage, and supports multiple login methods including Keycloak SSO and phone SMS verification.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.