GitHubSentinel

GitHub Sentinel 是专为大模型(LLMs)时代打造的智能信息检索和高价值内容挖掘 AI Agent。它面向那些需要高频次、大量信息获取的用户,特别是开源爱好者、个人开发者和投资人等。GitHub Sentinel 不仅能帮助用户自动跟踪和分析 GitHub 开源项目 的最新动态,还能快速扩展到其他信息渠道,如 Hacker News 的热门话题,提供更全面的信息挖掘与分析能力。

Stars: 61

GitHub Sentinel is an intelligent information retrieval and high-value content mining AI Agent designed for the era of large models (LLMs). It is aimed at users who need frequent and large-scale information retrieval, especially open source enthusiasts, individual developers, and investors. The main features include subscription management, update retrieval, notification system, report generation, multi-model support, scheduled tasks, graphical interface, containerization, continuous integration, and the ability to track and analyze the latest dynamics of GitHub open source projects and expand to other information channels like Hacker News for comprehensive information mining and analysis capabilities.

README:

English | 中文

GitHub Sentinel 是专为大模型(LLMs)时代打造的智能信息检索和高价值内容挖掘 AI Agent。它面向那些需要高频次、大量信息获取的用户,特别是开源爱好者、个人开发者和投资人等。

- 订阅管理:轻松管理和跟踪您关注的 GitHub 仓库。

- 更新检索:自动检索并汇总订阅仓库的最新动态,包括提交记录、问题和拉取请求。

- 通知系统:通过电子邮件等方式,实时通知订阅者项目的最新进展。

- 报告生成:基于检索到的更新生成详细的项目进展报告,支持多种格式和模板,满足不同需求。

- 多模型支持:结合 OpenAI 和 Ollama 模型,生成自然语言项目报告,提供更智能、精准的信息服务。

- 定时任务:支持以守护进程方式执行定时任务,确保信息更新及时获取。

- 图形化界面:基于 Gradio 实现了简单易用的 GUI 操作模式,降低使用门槛。

- 容器化:项目支持 Docker 构建和容器化部署,便于在不同环境中快速部署和运行。

- 持续集成:实现了完备的单元测试,便于进一步配置生产级 CI/CD 流程,确保项目的稳定性和高质量交付。

GitHub Sentinel 不仅能帮助用户自动跟踪和分析 GitHub 开源项目 的最新动态,还能快速扩展到其他信息渠道,如 Hacker News 的热门话题,提供更全面的信息挖掘与分析能力。

首先,安装所需的依赖项:

pip install -r requirements.txt编辑 config.json 文件,以设置您的 GitHub Token、Email 设置(以腾讯企微邮箱为例)、订阅文件、更新设置,大模型服务配置(支持 OpenAI GPT API 和 Ollama 私有化大模型服务),以及自动检索和生成的报告类型(GitHub项目进展, Hacker News 热门话题和前沿技术趋势):

{

"github": {

"token": "your_github_token",

"subscriptions_file": "subscriptions.json",

"progress_frequency_days": 1,

"progress_execution_time": "08:00"

},

"email": {

"smtp_server": "smtp.exmail.qq.com",

"smtp_port": 465,

"from": "[email protected]",

"password": "your_email_password",

"to": "[email protected]"

},

"llm": {

"model_type": "ollama",

"openai_model_name": "gpt-4o-mini",

"ollama_model_name": "llama3",

"ollama_api_url": "http://localhost:11434/api/chat"

},

"report_types": [

"github",

"hacker_news_hours_topic",

"hacker_news_daily_report"

],

"slack": {

"webhook_url": "your_slack_webhook_url"

}

}出于安全考虑: GitHub Token 和 Email Password 的设置均支持使用环境变量进行配置,以避免明文配置重要信息,如下所示:

# Github

export GITHUB_TOKEN="github_pat_xxx"

# Email

export EMAIL_PASSWORD="password"GitHub Sentinel 支持以下三种运行方式:

您可以从命令行交互式地运行该应用:

python src/command_tool.py在此模式下,您可以手动输入命令来管理订阅、检索更新和生成报告。

要将该应用作为后台服务(守护进程)运行,它将根据相关配置定期自动更新。

您可以直接使用守护进程管理脚本 daemon_control.sh 来启动、查询状态、关闭和重启:

-

启动服务:

$ ./daemon_control.sh start Starting DaemonProcess... DaemonProcess started.

- 这将启动[./src/daemon_process.py],按照

config.json中设置的更新频率和时间点定期生成报告,并发送邮件。 - 本次服务日志将保存到

logs/DaemonProcess.log文件中。同时,历史累计日志也将同步追加到logs/app.log日志文件中。

- 这将启动[./src/daemon_process.py],按照

-

查询服务状态:

$ ./daemon_control.sh status DaemonProcess is running.

-

关闭服务:

$ ./daemon_control.sh stop Stopping DaemonProcess... DaemonProcess stopped.

-

重启服务:

$ ./daemon_control.sh restart Stopping DaemonProcess... DaemonProcess stopped. Starting DaemonProcess... DaemonProcess started.

要使用 Gradio 界面运行应用,允许用户通过 Web 界面与该工具交互:

python src/gradio_server.py- 这将在您的机器上启动一个 Web 服务器,允许您通过用户友好的界面管理订阅和生成报告。

- 默认情况下,Gradio 服务器将可在

http://localhost:7860访问,但如果需要,您可以公开共享它。

Ollama 是一个私有化大模型管理工具,支持本地和容器化部署,命令行交互和 REST API 调用。

关于 Ollama 安装部署与私有化大模型服务发布的详细说明,请参考Ollama 安装部署与服务发布。

要在 GitHub Sentinel 中使用 Ollama 调用私有化大模型服务,请按照以下步骤进行安装和配置:

-

安装 Ollama: 请根据 Ollama 的官方文档下载并安装 Ollama 服务。Ollama 支持多种操作系统,包括 Linux、Windows 和 macOS。

-

启动 Ollama 服务: 安装完成后,通过以下命令启动 Ollama 服务:

ollama serve

默认情况下,Ollama API 将在

http://localhost:11434运行。 -

配置 Ollama 在 GitHub Sentinel 中使用: 在

config.json文件中,配置 Ollama API 的相关信息:{ "llm": { "model_type": "ollama", "ollama_model_name": "llama3", "ollama_api_url": "http://localhost:11434/api/chat" } } -

验证配置: 使用以下命令启动 GitHub Sentinel 并生成报告,以验证 Ollama 配置是否正确:

python src/command_tool.py

如果配置正确,您将能够通过 Ollama 模型生成报告。

为了确保代码的质量和可靠性,GitHub Sentinel 使用了 unittest 模块进行单元测试。关于 unittest 及其相关工具(如 @patch 和 MagicMock)的详细说明,请参考 单元测试详细说明。

validate_tests.sh 是一个用于运行单元测试并验证结果的 Shell 脚本。它在 Docker 镜像构建过程中被执行,以确保代码的正确性和稳定性。

- 脚本运行所有单元测试,并将结果输出到

test_results.txt文件中。 - 如果测试失败,脚本会输出测试结果并导致 Docker 构建失败。

- 如果所有测试通过,脚本会继续构建过程。

为了便于在各种环境中构建和部署 GitHub Sentinel 项目,我们提供了 Docker 支持。该支持包括以下文件和功能:

Dockerfile 是用于定义如何构建 Docker 镜像的配置文件。它描述了镜像的构建步骤,包括安装依赖、复制项目文件、运行单元测试等。

- 使用

python:3.10-slim作为基础镜像,并设置工作目录为/app。 - 复制项目的

requirements.txt文件并安装 Python 依赖。 - 复制项目的所有文件到容器,并赋予

validate_tests.sh脚本执行权限。 - 在构建过程中执行

validate_tests.sh脚本,以确保所有单元测试通过。如果测试失败,构建过程将中止。 - 构建成功后,将默认运行

src/main.py作为容器的入口点。

build_image.sh 是一个用于自动构建 Docker 镜像的 Shell 脚本。它从当前的 Git 分支获取分支名称,并将其用作 Docker 镜像的标签,便于在不同分支上生成不同的 Docker 镜像。

- 获取当前的 Git 分支名称,并将其用作 Docker 镜像的标签。

- 使用

docker build命令构建 Docker 镜像,并使用当前 Git 分支名称作为标签。

chmod +x build_image.sh

./build_image.sh通过这些脚本和配置文件,确保在不同的开发分支中,构建的 Docker 镜像都是基于通过单元测试的代码,从而提高了代码质量和部署的可靠性。

贡献是使开源社区成为学习、激励和创造的惊人之处。非常感谢你所做的任何贡献。如果你有任何建议或功能请求,请先开启一个议题讨论你想要改变的内容。

该项目根据 Apache-2.0 许可证的条款进行许可。详情请参见 LICENSE 文件。

Django Peng - [email protected]

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for GitHubSentinel

Similar Open Source Tools

GitHubSentinel

GitHub Sentinel is an intelligent information retrieval and high-value content mining AI Agent designed for the era of large models (LLMs). It is aimed at users who need frequent and large-scale information retrieval, especially open source enthusiasts, individual developers, and investors. The main features include subscription management, update retrieval, notification system, report generation, multi-model support, scheduled tasks, graphical interface, containerization, continuous integration, and the ability to track and analyze the latest dynamics of GitHub open source projects and expand to other information channels like Hacker News for comprehensive information mining and analysis capabilities.

Code-Interpreter-Api

Code Interpreter API is a project that combines a scheduling center with a sandbox environment, dedicated to creating the world's best code interpreter. It aims to provide a secure, reliable API interface for remotely running code and obtaining execution results, accelerating the development of various AI agents, and being a boon to many AI enthusiasts. The project innovatively combines Docker container technology to achieve secure isolation and execution of Python code. Additionally, the project supports storing generated image data in a PostgreSQL database and accessing it through API endpoints, providing rich data processing and storage capabilities.

MINI_LLM

This project is a personal implementation and reproduction of a small-parameter Chinese LLM. It mainly refers to these two open source projects: https://github.com/charent/Phi2-mini-Chinese and https://github.com/DLLXW/baby-llama2-chinese. It includes the complete process of pre-training, SFT instruction fine-tuning, DPO, and PPO (to be done). I hope to share it with everyone and hope that everyone can work together to improve it!

chatgpt-web-sea

ChatGPT Web Sea is an open-source project based on ChatGPT-web for secondary development. It supports all models that comply with the OpenAI interface standard, allows for model selection, configuration, and extension, and is compatible with OneAPI. The tool includes a Chinese ChatGPT tuning guide, supports file uploads, and provides model configuration options. Users can interact with the tool through a web interface, configure models, and perform tasks such as model selection, API key management, and chat interface setup. The project also offers Docker deployment options and instructions for manual packaging.

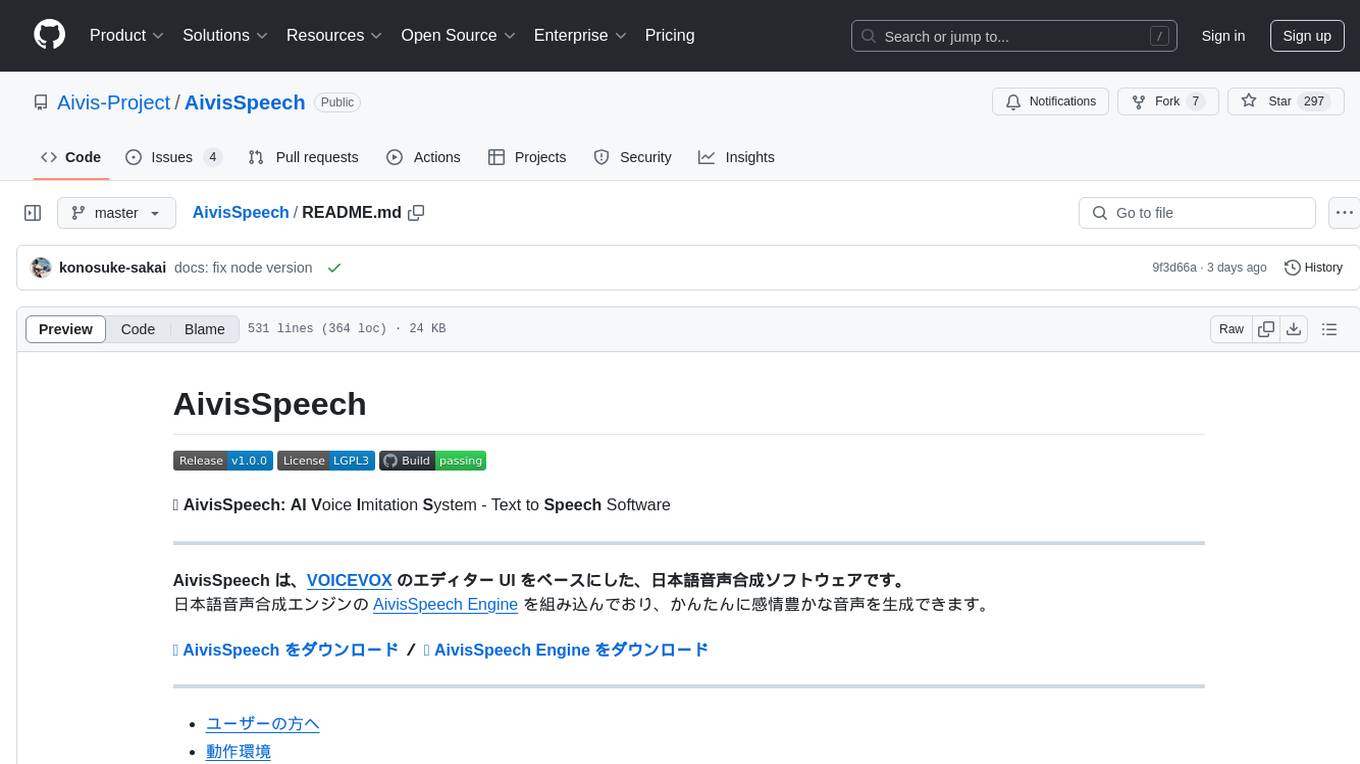

AivisSpeech

AivisSpeech is a Japanese text-to-speech software based on the VOICEVOX editor UI. It incorporates the AivisSpeech Engine for generating emotionally rich voices easily. It supports AIVMX format voice synthesis model files and specific model architectures like Style-Bert-VITS2. Users can download AivisSpeech and AivisSpeech Engine for Windows and macOS PCs, with minimum memory requirements specified. The development follows the latest version of VOICEVOX, focusing on minimal modifications, rebranding only where necessary, and avoiding refactoring. The project does not update documentation, maintain test code, or refactor unused features to prevent conflicts with VOICEVOX.

app-platform

AppPlatform is an advanced large-scale model application engineering aimed at simplifying the development process of AI applications through integrated declarative programming and low-code configuration tools. This project provides a powerful and scalable environment for software engineers and product managers to support the full-cycle development of AI applications from concept to deployment. The backend module is based on the FIT framework, utilizing a plugin-based development approach, including application management and feature extension modules. The frontend module is developed using React framework, focusing on core modules such as application development, application marketplace, intelligent forms, and plugin management. Key features include low-code graphical interface, powerful operators and scheduling platform, and sharing and collaboration capabilities. The project also provides detailed instructions for setting up and running both backend and frontend environments for development and testing.

meet-libai

The 'meet-libai' project aims to promote and popularize the cultural heritage of the Chinese poet Li Bai by constructing a knowledge graph of Li Bai and training a professional AI intelligent body using large models. The project includes features such as data preprocessing, knowledge graph construction, question-answering system development, and visualization exploration of the graph structure. It also provides code implementations for large models and RAG retrieval enhancement.

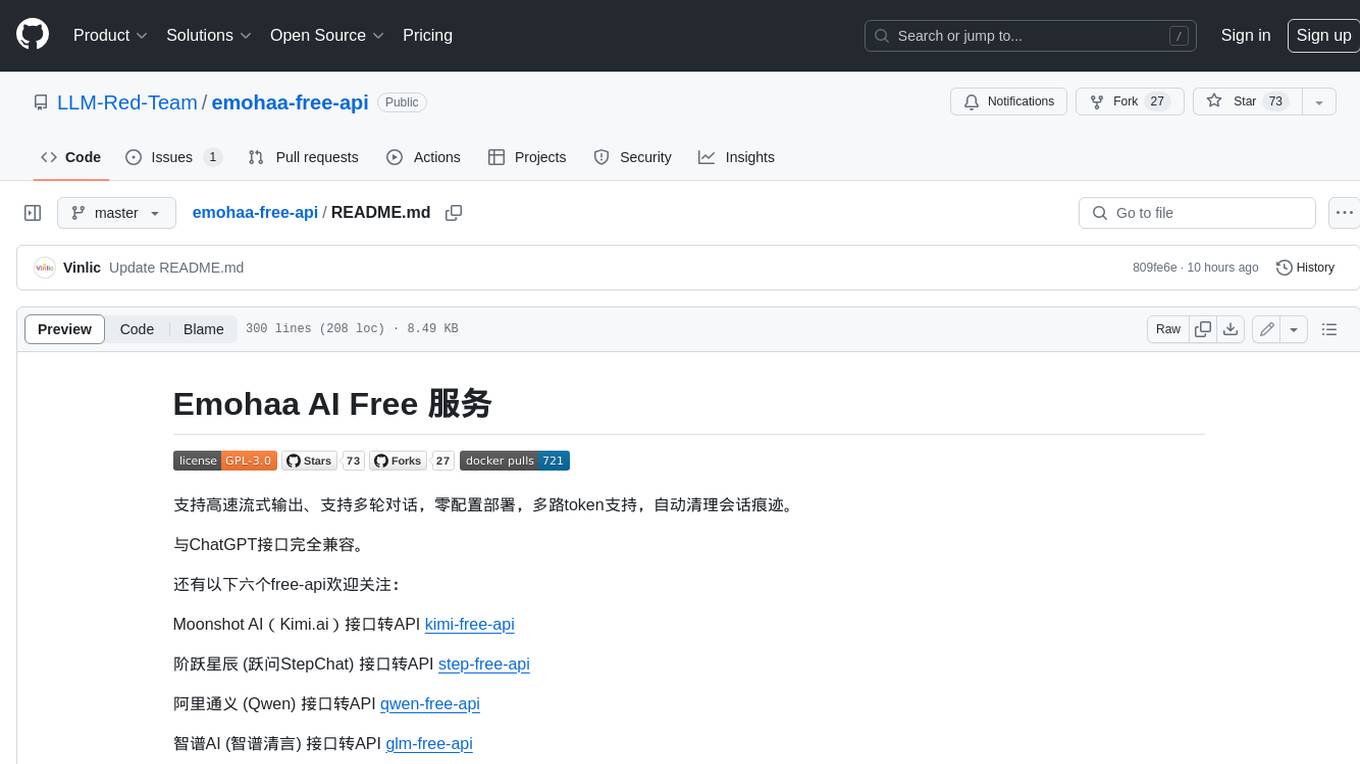

emohaa-free-api

Emohaa AI Free API is a free API that allows you to access the Emohaa AI chatbot. Emohaa AI is a powerful chatbot that can understand and respond to a wide range of natural language queries. It can be used for a variety of purposes, such as customer service, information retrieval, and language translation. The Emohaa AI Free API is easy to use and can be integrated into any application. It is a great way to add AI capabilities to your projects without having to build your own chatbot from scratch.

wealth-tracker

Wealth Tracker is a personal finance management tool designed to help users track their income, expenses, and investments in one place. With intuitive features and customizable categories, users can easily monitor their financial health and make informed decisions. The tool provides detailed reports and visualizations to analyze spending patterns and set financial goals. Whether you are budgeting, saving for a big purchase, or planning for retirement, Wealth Tracker offers a comprehensive solution to manage your money effectively.

HiveChat

HiveChat is an AI chat application designed for small and medium teams. It supports various models such as DeepSeek, Open AI, Claude, and Gemini. The tool allows easy configuration by one administrator for the entire team to use different AI models. It supports features like email or Feishu login, LaTeX and Markdown rendering, DeepSeek mind map display, image understanding, AI agents, cloud data storage, and integration with multiple large model service providers. Users can engage in conversations by logging in, while administrators can configure AI service providers, manage users, and control account registration. The technology stack includes Next.js, Tailwindcss, Auth.js, PostgreSQL, Drizzle ORM, and Ant Design.

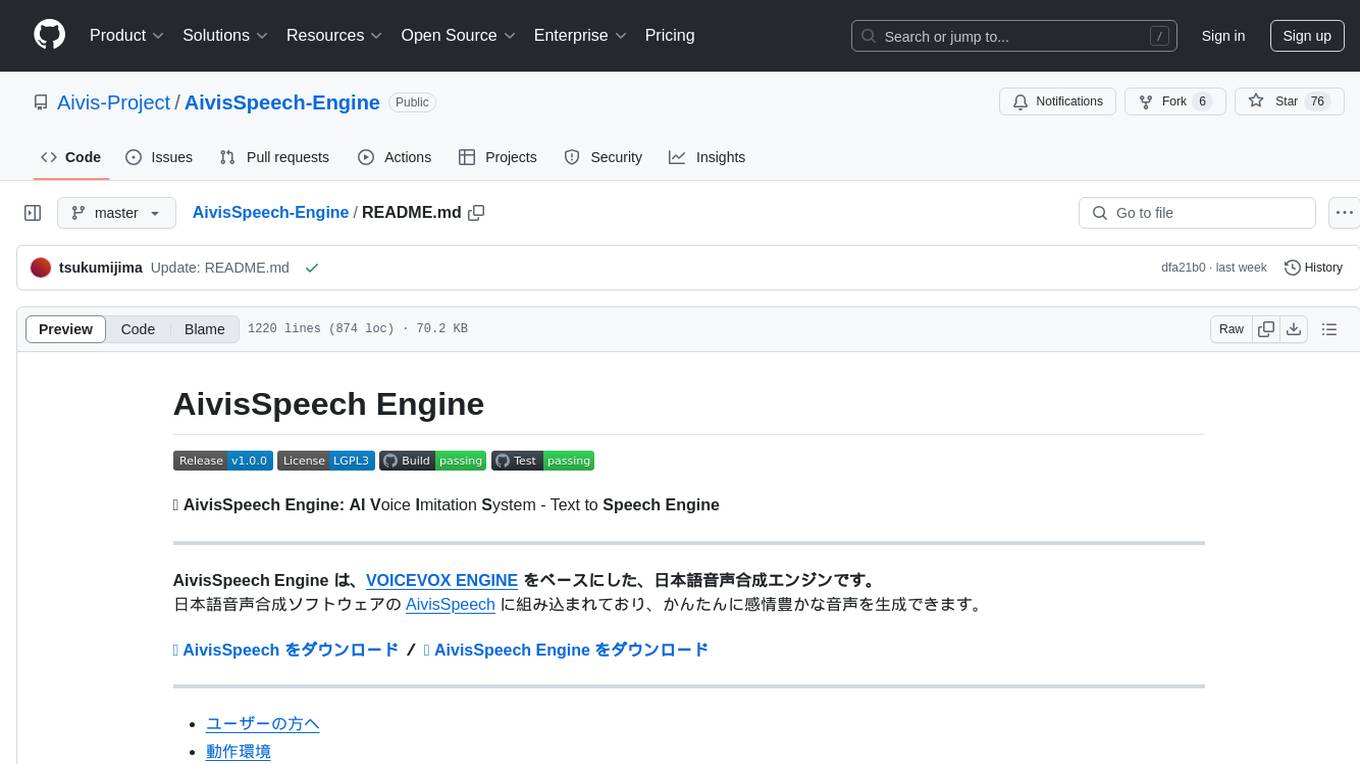

AivisSpeech-Engine

AivisSpeech-Engine is a powerful open-source tool for speech recognition and synthesis. It provides state-of-the-art algorithms for converting speech to text and text to speech. The tool is designed to be user-friendly and customizable, allowing developers to easily integrate speech capabilities into their applications. With AivisSpeech-Engine, users can transcribe audio recordings, create voice-controlled interfaces, and generate natural-sounding speech output. Whether you are building a virtual assistant, developing a speech-to-text application, or experimenting with voice technology, AivisSpeech-Engine offers a comprehensive solution for all your speech processing needs.

chatgpt-webui

ChatGPT WebUI is a user-friendly web graphical interface for various LLMs like ChatGPT, providing simplified features such as core ChatGPT conversation and document retrieval dialogues. It has been optimized for better RAG retrieval accuracy and supports various search engines. Users can deploy local language models easily and interact with different LLMs like GPT-4, Azure OpenAI, and more. The tool offers powerful functionalities like GPT4 API configuration, system prompt setup for role-playing, and basic conversation features. It also provides a history of conversations, customization options, and a seamless user experience with themes, dark mode, and PWA installation support.

Chat-Style-Bot

Chat-Style-Bot is an intelligent chatbot designed to mimic the chatting style of a specified individual. By analyzing and learning from WeChat chat records, Chat-Style-Bot can imitate your unique chatting style and become your personal chat assistant. Whether it's communicating with friends or handling daily conversations, Chat-Style-Bot can provide a natural, personalized interactive experience.

prompt-optimizer

Prompt Optimizer is a powerful AI prompt optimization tool that helps you write better AI prompts, improving AI output quality. It supports both web application and Chrome extension usage. The tool features intelligent optimization for prompt words, real-time testing to compare before and after optimization, integration with multiple mainstream AI models, client-side processing for security, encrypted local storage for data privacy, responsive design for user experience, and more.

oba-live-tool

The oba live tool is a small tool for Douyin small shops and Kuaishou Baiying live broadcasts. It features multiple account management, intelligent message assistant, automatic product explanation, AI automatic reply, and AI intelligent assistant. The tool requires Windows 10 or above, Chrome or Edge browser, and a valid account for Douyin small shops or Kuaishou Baiying. Users can download the tool from the Releases page, connect to the control panel, set API keys for AI functions, and configure auto-reply prompts. The tool is licensed under the MIT license.

EasyAIVtuber

EasyAIVtuber is a tool designed to animate 2D waifus by providing features like automatic idle actions, speaking animations, head nodding, singing animations, and sleeping mode. It also offers API endpoints and a web UI for interaction. The tool requires dependencies like torch and pre-trained models for optimal performance. Users can easily test the tool using OBS and UnityCapture, with options to customize character input, output size, simplification level, webcam output, model selection, port configuration, sleep interval, and movement extension. The tool also provides an API using Flask for actions like speaking based on audio, rhythmic movements, singing based on music and voice, stopping current actions, and changing images.

For similar tasks

GitHubSentinel

GitHub Sentinel is an intelligent information retrieval and high-value content mining AI Agent designed for the era of large models (LLMs). It is aimed at users who need frequent and large-scale information retrieval, especially open source enthusiasts, individual developers, and investors. The main features include subscription management, update retrieval, notification system, report generation, multi-model support, scheduled tasks, graphical interface, containerization, continuous integration, and the ability to track and analyze the latest dynamics of GitHub open source projects and expand to other information channels like Hacker News for comprehensive information mining and analysis capabilities.

ciso-assistant-community

CISO Assistant is a tool that helps organizations manage their cybersecurity posture and compliance. It provides a centralized platform for managing security controls, threats, and risks. CISO Assistant also includes a library of pre-built frameworks and tools to help organizations quickly and easily implement best practices.

supersonic

SuperSonic is a next-generation BI platform that integrates Chat BI (powered by LLM) and Headless BI (powered by semantic layer) paradigms. This integration ensures that Chat BI has access to the same curated and governed semantic data models as traditional BI. Furthermore, the implementation of both paradigms benefits from the integration: * Chat BI's Text2SQL gets augmented with context-retrieval from semantic models. * Headless BI's query interface gets extended with natural language API. SuperSonic provides a Chat BI interface that empowers users to query data using natural language and visualize the results with suitable charts. To enable such experience, the only thing necessary is to build logical semantic models (definition of metric/dimension/tag, along with their meaning and relationships) through a Headless BI interface. Meanwhile, SuperSonic is designed to be extensible and composable, allowing custom implementations to be added and configured with Java SPI. The integration of Chat BI and Headless BI has the potential to enhance the Text2SQL generation in two dimensions: 1. Incorporate data semantics (such as business terms, column values, etc.) into the prompt, enabling LLM to better understand the semantics and reduce hallucination. 2. Offload the generation of advanced SQL syntax (such as join, formula, etc.) from LLM to the semantic layer to reduce complexity. With these ideas in mind, we develop SuperSonic as a practical reference implementation and use it to power our real-world products. Additionally, to facilitate further development we decide to open source SuperSonic as an extensible framework.

DB-GPT

DB-GPT is an open source AI native data app development framework with AWEL(Agentic Workflow Expression Language) and agents. It aims to build infrastructure in the field of large models, through the development of multiple technical capabilities such as multi-model management (SMMF), Text2SQL effect optimization, RAG framework and optimization, Multi-Agents framework collaboration, AWEL (agent workflow orchestration), etc. Which makes large model applications with data simpler and more convenient.

Chat2DB

Chat2DB is an AI-driven data development and analysis platform that enables users to communicate with databases using natural language. It supports a wide range of databases, including MySQL, PostgreSQL, Oracle, SQLServer, SQLite, MariaDB, ClickHouse, DM, Presto, DB2, OceanBase, Hive, KingBase, MongoDB, Redis, and Snowflake. Chat2DB provides a user-friendly interface that allows users to query databases, generate reports, and explore data using natural language commands. It also offers a variety of features to help users improve their productivity, such as auto-completion, syntax highlighting, and error checking.

aide

AIDE (Advanced Intrusion Detection Environment) is a tool for monitoring file system changes. It can be used to detect unauthorized changes to monitored files and directories. AIDE was written to be a simple and free alternative to Tripwire. Features currently included in AIDE are as follows: o File attributes monitored: permissions, inode, user, group file size, mtime, atime, ctime, links and growing size. o Checksums and hashes supported: SHA1, MD5, RMD160, and TIGER. CRC32, HAVAL and GOST if Mhash support is compiled in. o Plain text configuration files and database for simplicity. o Rules, variables and macros that can be customized to local site or system policies. o Powerful regular expression support to selectively include or exclude files and directories to be monitored. o gzip database compression if zlib support is compiled in. o Free software licensed under the GNU General Public License v2.

OpsPilot

OpsPilot is an AI-powered operations navigator developed by the WeOps team. It leverages deep learning and LLM technologies to make operations plans interactive and generalize and reason about local operations knowledge. OpsPilot can be integrated with web applications in the form of a chatbot and primarily provides the following capabilities: 1. Operations capability precipitation: By depositing operations knowledge, operations skills, and troubleshooting actions, when solving problems, it acts as a navigator and guides users to solve operations problems through dialogue. 2. Local knowledge Q&A: By indexing local knowledge and Internet knowledge and combining the capabilities of LLM, it answers users' various operations questions. 3. LLM chat: When the problem is beyond the scope of OpsPilot's ability to handle, it uses LLM's capabilities to solve various long-tail problems.

aimeos-typo3

Aimeos is a professional, full-featured, and high-performance e-commerce extension for TYPO3. It can be installed in an existing TYPO3 website within 5 minutes and can be adapted, extended, overwritten, and customized to meet specific needs.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.