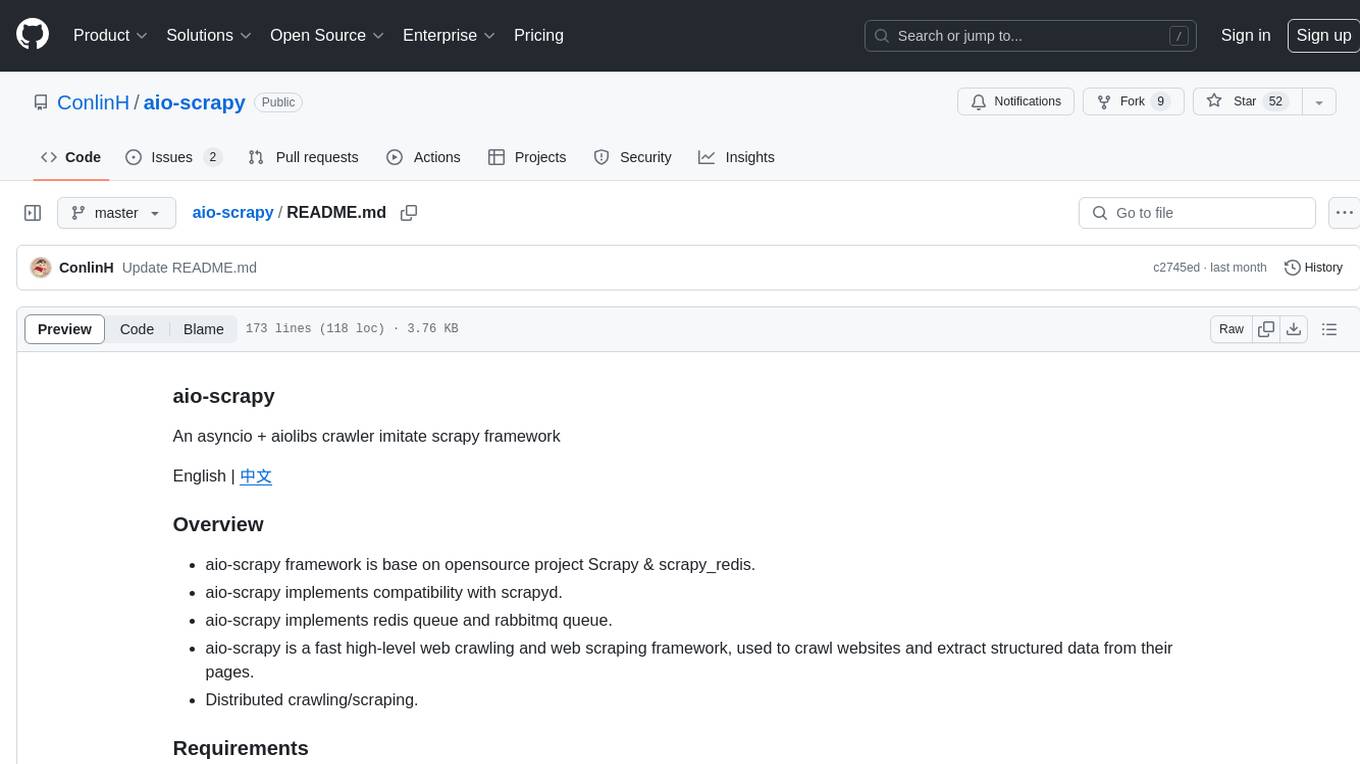

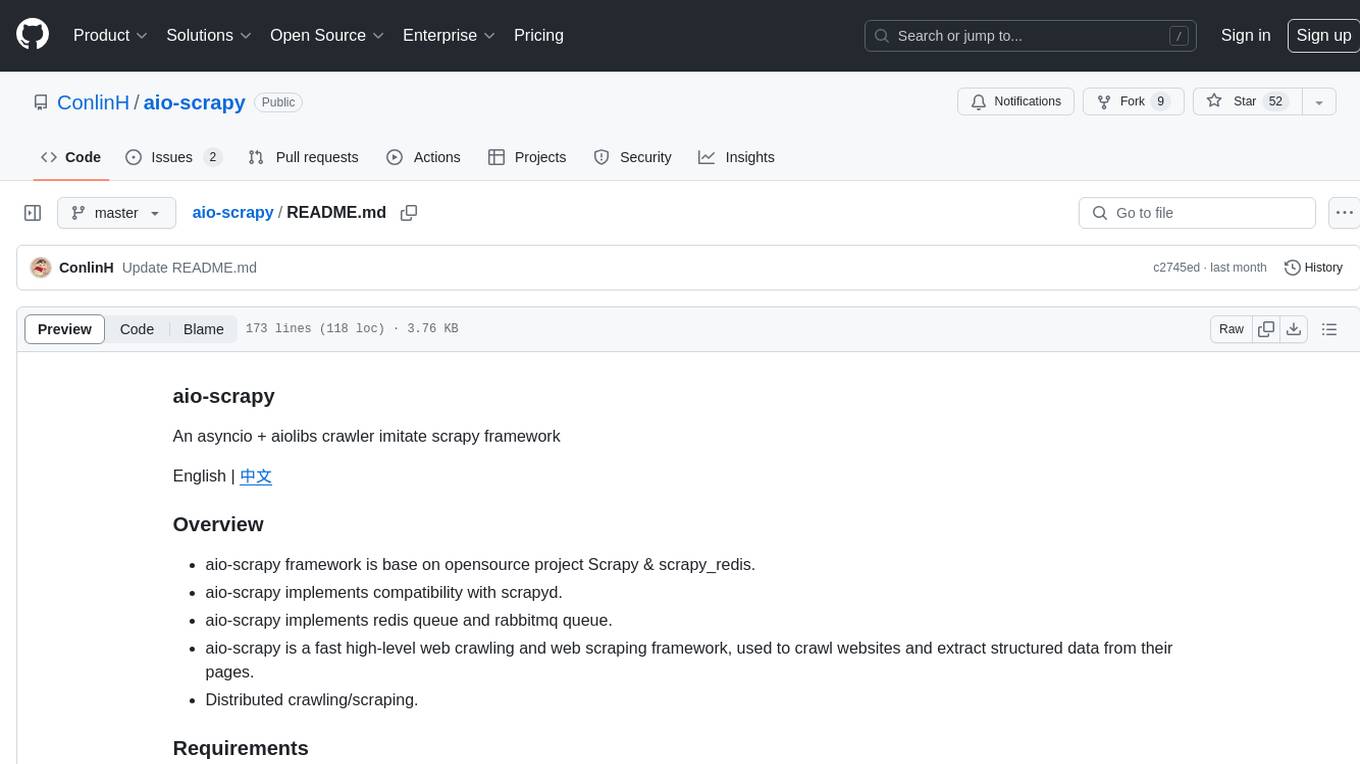

aio-scrapy

Implement scrapy with asyncio

Stars: 52

Aio-scrapy is an asyncio-based web crawling and web scraping framework inspired by Scrapy. It supports distributed crawling/scraping, implements compatibility with scrapyd, and provides options for using redis queue and rabbitmq queue. The framework is designed for fast extraction of structured data from websites. Aio-scrapy requires Python 3.9+ and is compatible with Linux, Windows, macOS, and BSD systems.

README:

An asyncio + aiolibs crawler imitate scrapy framework

English | 中文

- aio-scrapy framework is base on opensource project Scrapy & scrapy_redis.

- aio-scrapy implements compatibility with scrapyd.

- aio-scrapy implements redis queue and rabbitmq queue.

- aio-scrapy is a fast high-level web crawling and web scraping framework, used to crawl websites and extract structured data from their pages.

- Distributed crawling/scraping.

- Python 3.9+

- Works on Linux, Windows, macOS, BSD

The quick way:

# Install the latest aio-scrapy

pip install git+https://github.com/ConlinH/aio-scrapy

# default

pip install aio-scrapy

# Install all dependencies

pip install aio-scrapy[all]

# When you need to use mysql/httpx/rabbitmq/mongo

pip install aio-scrapy[aiomysql,httpx,aio-pika,mongo]aioscrapy startproject project_quotescd project_quotes

aioscrapy genspider quotes

quotes.py

from aioscrapy.spiders import Spider

class QuotesMemorySpider(Spider):

name = 'QuotesMemorySpider'

start_urls = ['https://quotes.toscrape.com']

async def parse(self, response):

for quote in response.css('div.quote'):

yield {

'author': quote.xpath('span/small/text()').get(),

'text': quote.css('span.text::text').get(),

}

next_page = response.css('li.next a::attr("href")').get()

if next_page is not None:

yield response.follow(next_page, self.parse)

if __name__ == '__main__':

QuotesMemorySpider.start()run the spider:

aioscrapy crawl quotesaioscrapy genspider single_quotes -t singlesingle_quotes.py:

from aioscrapy.spiders import Spider

class QuotesMemorySpider(Spider):

name = 'QuotesMemorySpider'

custom_settings = {

"USER_AGENT": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/86.0.4240.198 Safari/537.36",

'CLOSE_SPIDER_ON_IDLE': True,

# 'DOWNLOAD_DELAY': 3,

# 'RANDOMIZE_DOWNLOAD_DELAY': True,

# 'CONCURRENT_REQUESTS': 1,

# 'LOG_LEVEL': 'INFO'

}

start_urls = ['https://quotes.toscrape.com']

@staticmethod

async def process_request(request, spider):

""" request middleware """

pass

@staticmethod

async def process_response(request, response, spider):

""" response middleware """

return response

@staticmethod

async def process_exception(request, exception, spider):

""" exception middleware """

pass

async def parse(self, response):

for quote in response.css('div.quote'):

yield {

'author': quote.xpath('span/small/text()').get(),

'text': quote.css('span.text::text').get(),

}

next_page = response.css('li.next a::attr("href")').get()

if next_page is not None:

yield response.follow(next_page, self.parse)

async def process_item(self, item):

print(item)

if __name__ == '__main__':

QuotesMemorySpider.start()run the spider:

aioscrapy runspider quotes.pyaioscrapy -hplease submit your sugguestion to owner by issue

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for aio-scrapy

Similar Open Source Tools

aio-scrapy

Aio-scrapy is an asyncio-based web crawling and web scraping framework inspired by Scrapy. It supports distributed crawling/scraping, implements compatibility with scrapyd, and provides options for using redis queue and rabbitmq queue. The framework is designed for fast extraction of structured data from websites. Aio-scrapy requires Python 3.9+ and is compatible with Linux, Windows, macOS, and BSD systems.

llm-sandbox

LLM Sandbox is a lightweight and portable sandbox environment designed to securely execute large language model (LLM) generated code in a safe and isolated manner using Docker containers. It provides an easy-to-use interface for setting up, managing, and executing code in a controlled Docker environment, simplifying the process of running code generated by LLMs. The tool supports multiple programming languages, offers flexibility with predefined Docker images or custom Dockerfiles, and allows scalability with support for Kubernetes and remote Docker hosts.

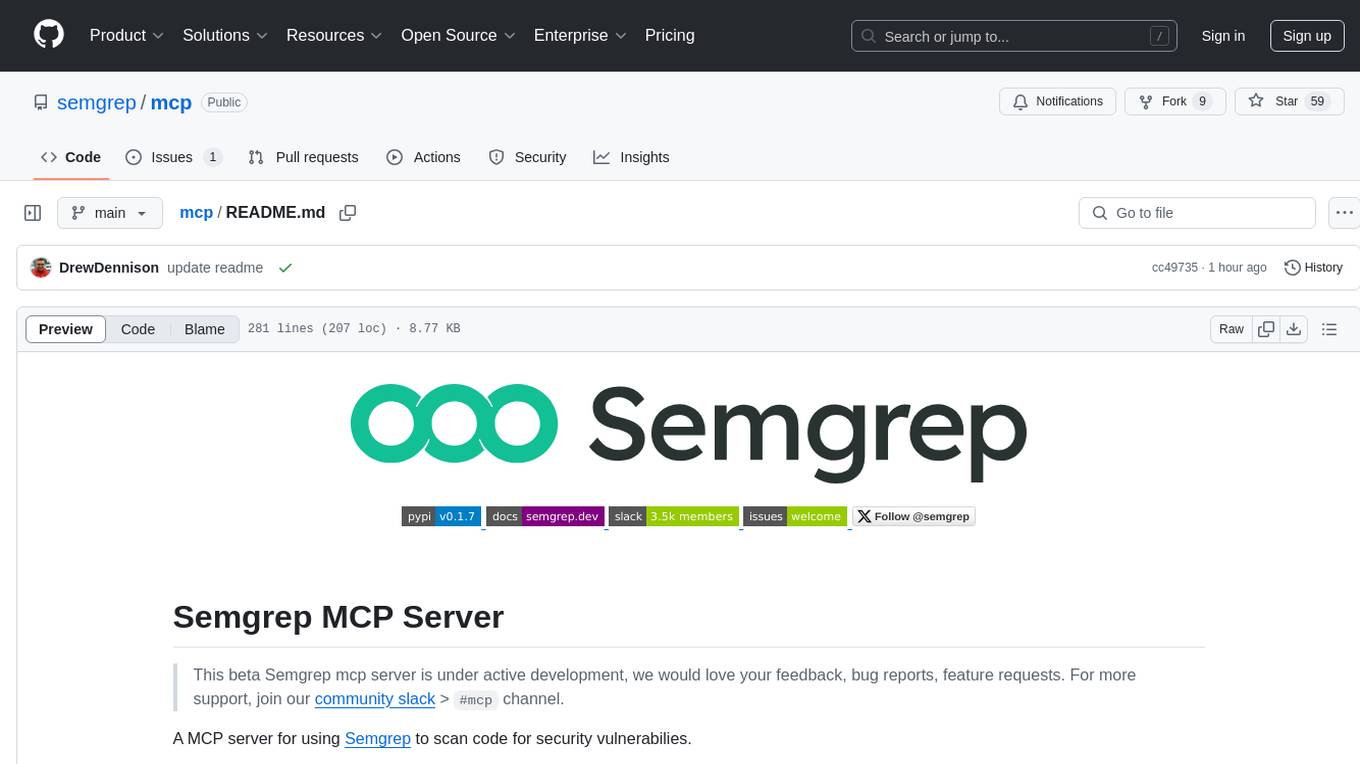

mcp

Semgrep MCP Server is a beta server under active development for using Semgrep to scan code for security vulnerabilities. It provides a Model Context Protocol (MCP) for various coding tools to get specialized help in tasks. Users can connect to Semgrep AppSec Platform, scan code for vulnerabilities, customize Semgrep rules, analyze and filter scan results, and compare results. The tool is published on PyPI as semgrep-mcp and can be installed using pip, pipx, uv, poetry, or other methods. It supports CLI and Docker environments for running the server. Integration with VS Code is also available for quick installation. The project welcomes contributions and is inspired by core technologies like Semgrep and MCP, as well as related community projects and tools.

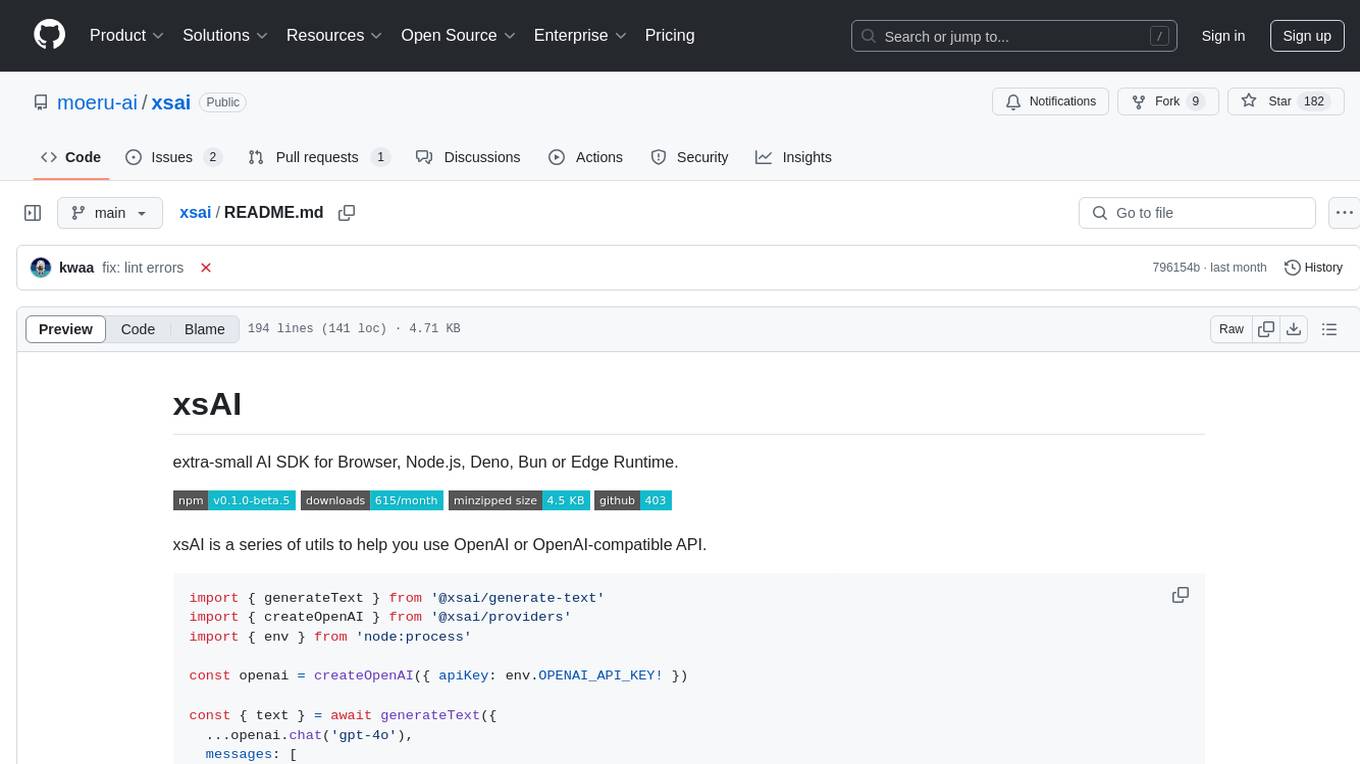

xsai

xsAI is an extra-small AI SDK designed for Browser, Node.js, Deno, Bun, or Edge Runtime. It provides a series of utils to help users utilize OpenAI or OpenAI-compatible APIs. The SDK is lightweight and efficient, using a variety of methods to minimize its size. It is runtime-agnostic, working seamlessly across different environments without depending on Node.js Built-in Modules. Users can easily install specific utils like generateText or streamText, and leverage tools like weather to perform tasks such as getting the weather in a location.

partialjson

PartialJson is a Python library that allows users to parse partial and incomplete JSON data with ease. With just 3 lines of Python code, users can parse JSON data that may be missing key elements or contain errors. The library provides a simple solution for handling JSON data that may not be well-formed or complete, making it a valuable tool for data processing and manipulation tasks.

probe

Probe is an AI-friendly, fully local, semantic code search tool designed to power the next generation of AI coding assistants. It combines the speed of ripgrep with the code-aware parsing of tree-sitter to deliver precise results with complete code blocks, making it perfect for large codebases and AI-driven development workflows. Probe supports various features like AI-friendly code extraction, fully local operation without external APIs, fast scanning of large codebases, accurate code structure parsing, re-rankers and NLP methods for better search results, multi-language support, interactive AI chat mode, and flexibility to run as a CLI tool, MCP server, or interactive AI chat.

UnrealGenAISupport

The Unreal Engine Generative AI Support Plugin is a tool designed to integrate various cutting-edge LLM/GenAI models into Unreal Engine for game development. It aims to simplify the process of using AI models for game development tasks, such as controlling scene objects, generating blueprints, running Python scripts, and more. The plugin currently supports models from organizations like OpenAI, Anthropic, XAI, Google Gemini, Meta AI, Deepseek, and Baidu. It provides features like API support, model control, generative AI capabilities, UI generation, project file management, and more. The plugin is still under development but offers a promising solution for integrating AI models into game development workflows.

flyte-sdk

Flyte 2 SDK is a pure Python tool for type-safe, distributed orchestration of agents, ML pipelines, and more. It allows users to write data pipelines, ML training jobs, and distributed compute in Python without any DSL constraints. With features like async-first parallelism and fine-grained observability, Flyte 2 offers a seamless workflow experience. Users can leverage core concepts like TaskEnvironments for container configuration, pure Python workflows for flexibility, and async parallelism for distributed execution. Advanced features include sub-task observability with tracing and remote task execution. The tool also provides native Jupyter integration for running and monitoring workflows directly from notebooks. Configuration and deployment are made easy with configuration files and commands for deploying and running workflows. Flyte 2 is licensed under the Apache 2.0 License.

ocode

OCode is a sophisticated terminal-native AI coding assistant that provides deep codebase intelligence and autonomous task execution. It seamlessly works with local Ollama models, bringing enterprise-grade AI assistance directly to your development workflow. OCode offers core capabilities such as terminal-native workflow, deep codebase intelligence, autonomous task execution, direct Ollama integration, and an extensible plugin layer. It can perform tasks like code generation & modification, project understanding, development automation, data processing, system operations, and interactive operations. The tool includes specialized tools for file operations, text processing, data processing, system operations, development tools, and integration. OCode enhances conversation parsing, offers smart tool selection, and provides performance improvements for coding tasks.

superagent

Superagent is an open-source AI assistant framework and API that allows developers to add powerful AI assistants to their applications. These assistants use large language models (LLMs), retrieval augmented generation (RAG), and generative AI to help users with a variety of tasks, including question answering, chatbot development, content generation, data aggregation, and workflow automation. Superagent is backed by Y Combinator and is part of YC W24.

klavis

Klavis AI is a production-ready solution for managing Multiple Communication Protocol (MCP) servers. It offers self-hosted solutions and a hosted service with enterprise OAuth support. With Klavis AI, users can easily deploy and manage over 50 MCP servers for various services like GitHub, Gmail, Google Sheets, YouTube, Slack, and more. The tool provides instant access to MCP servers, seamless authentication, and integration with AI frameworks, making it ideal for individuals and businesses looking to streamline their communication and data management workflows.

instructor

Instructor is a tool that provides structured outputs from Large Language Models (LLMs) in a reliable manner. It simplifies the process of extracting structured data by utilizing Pydantic for validation, type safety, and IDE support. With Instructor, users can define models and easily obtain structured data without the need for complex JSON parsing, error handling, or retries. The tool supports automatic retries, streaming support, and extraction of nested objects, making it production-ready for various AI applications. Trusted by a large community of developers and companies, Instructor is used by teams at OpenAI, Google, Microsoft, AWS, and YC startups.

mcp-ui

mcp-ui is a collection of SDKs that bring interactive web components to the Model Context Protocol (MCP). It allows servers to define reusable UI snippets, render them securely in the client, and react to their actions in the MCP host environment. The SDKs include @mcp-ui/server (TypeScript) for generating UI resources on the server, @mcp-ui/client (TypeScript) for rendering UI components on the client, and mcp_ui_server (Ruby) for generating UI resources in a Ruby environment. The project is an experimental community playground for MCP UI ideas, with rapid iteration and enhancements.

e2m

E2M is a Python library that can parse and convert various file types into Markdown format. It supports the conversion of multiple file formats, including doc, docx, epub, html, htm, url, pdf, ppt, pptx, mp3, and m4a. The ultimate goal of the E2M project is to provide high-quality data for Retrieval-Augmented Generation (RAG) and model training or fine-tuning. The core architecture consists of a Parser responsible for parsing various file types into text or image data, and a Converter responsible for converting text or image data into Markdown format.

mlx-vlm

MLX-VLM is a package designed for running Vision LLMs on Mac systems using MLX. It provides a convenient way to install and utilize the package for processing large language models related to vision tasks. The tool simplifies the process of running LLMs on Mac computers, offering a seamless experience for users interested in leveraging MLX for vision-related projects.

npcpy

npcpy is a core library of the NPC Toolkit that enhances natural language processing pipelines and agent tooling. It provides a flexible framework for building applications and conducting research with LLMs. The tool supports various functionalities such as getting responses for agents, setting up agent teams, orchestrating jinx workflows, obtaining LLM responses, generating images, videos, audio, and more. It also includes a Flask server for deploying NPC teams, supports LiteLLM integration, and simplifies the development of NLP-based applications. The tool is versatile, supporting multiple models and providers, and offers a graphical user interface through NPC Studio and a command-line interface via NPC Shell.

For similar tasks

crawl4ai

Crawl4AI is a powerful and free web crawling service that extracts valuable data from websites and provides LLM-friendly output formats. It supports crawling multiple URLs simultaneously, replaces media tags with ALT, and is completely free to use and open-source. Users can integrate Crawl4AI into Python projects as a library or run it as a standalone local server. The tool allows users to crawl and extract data from specified URLs using different providers and models, with options to include raw HTML content, force fresh crawls, and extract meaningful text blocks. Configuration settings can be adjusted in the `crawler/config.py` file to customize providers, API keys, chunk processing, and word thresholds. Contributions to Crawl4AI are welcome from the open-source community to enhance its value for AI enthusiasts and developers.

aio-scrapy

Aio-scrapy is an asyncio-based web crawling and web scraping framework inspired by Scrapy. It supports distributed crawling/scraping, implements compatibility with scrapyd, and provides options for using redis queue and rabbitmq queue. The framework is designed for fast extraction of structured data from websites. Aio-scrapy requires Python 3.9+ and is compatible with Linux, Windows, macOS, and BSD systems.

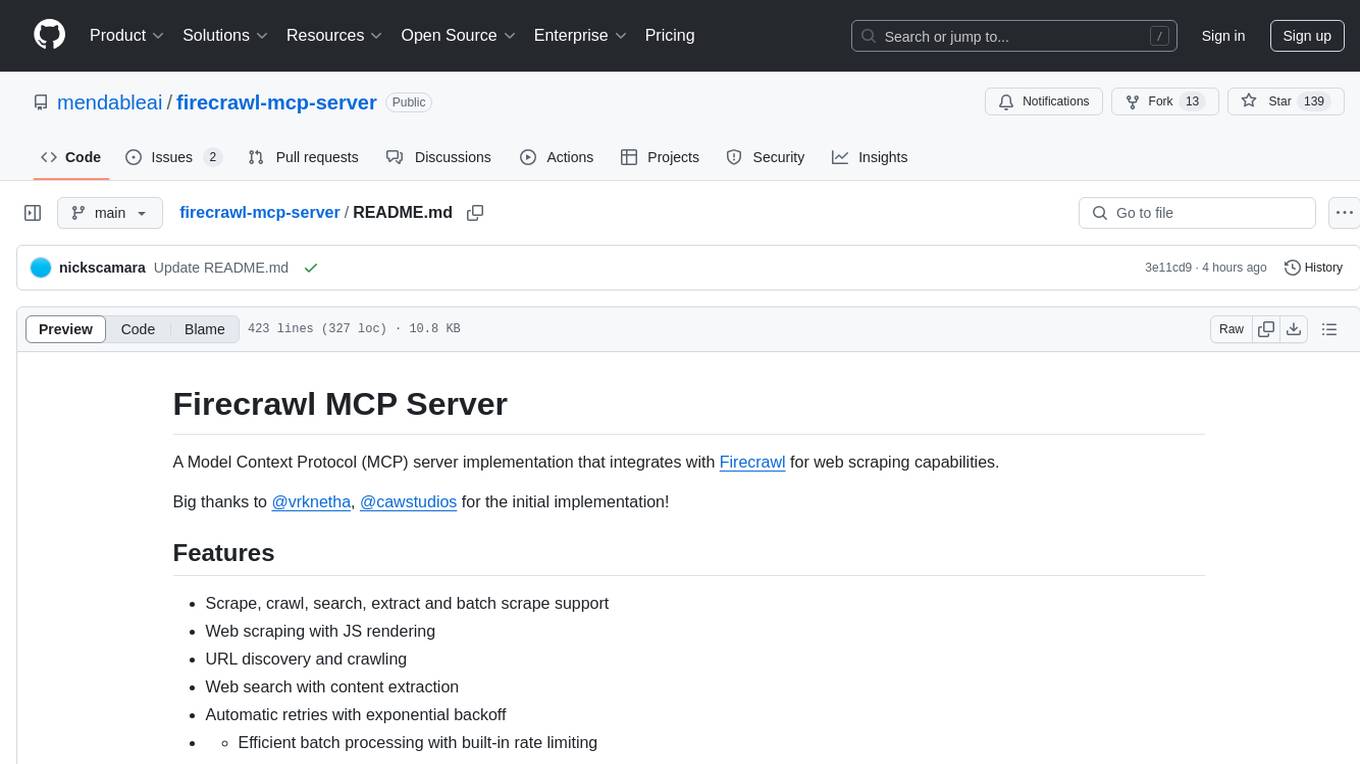

firecrawl-mcp-server

Firecrawl MCP Server is a Model Context Protocol (MCP) server implementation that integrates with Firecrawl for web scraping capabilities. It supports features like scrape, crawl, search, extract, and batch scrape. It provides web scraping with JS rendering, URL discovery, web search with content extraction, automatic retries with exponential backoff, credit usage monitoring, comprehensive logging system, support for cloud and self-hosted FireCrawl instances, mobile/desktop viewport support, and smart content filtering with tag inclusion/exclusion. The server includes configurable parameters for retry behavior and credit usage monitoring, rate limiting and batch processing capabilities, and tools for scraping, batch scraping, checking batch status, searching, crawling, and extracting structured information from web pages.

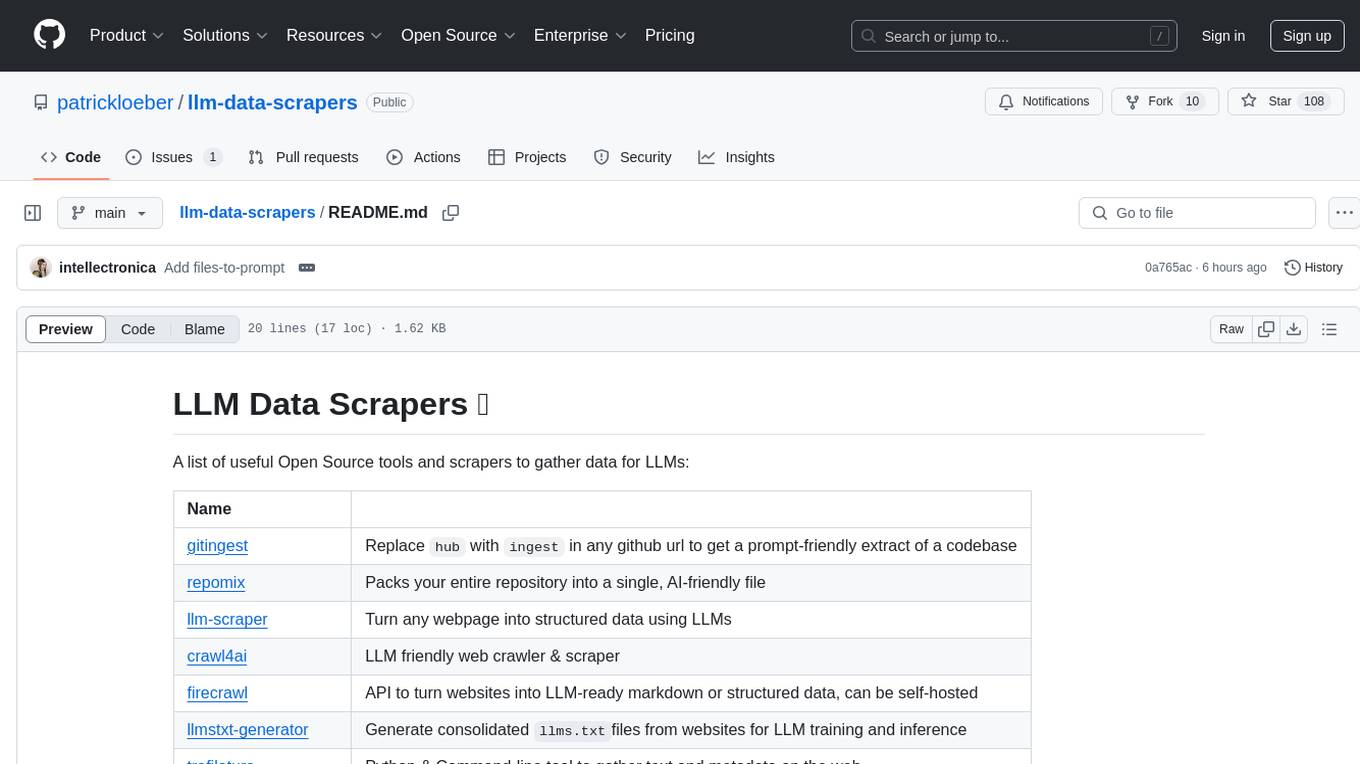

llm-data-scrapers

LLM Data Scrapers is a collection of open source tools and scrapers designed to gather data for Large Language Models (LLMs). The repository includes various tools such as gitingest for extracting codebases, repomix for packing repositories into AI-friendly files, llm-scraper for converting webpages into structured data, crawl4ai for web crawling, and firecrawl for turning websites into LLM-ready markdown or structured data. Additionally, the repository offers tools like llmstxt-generator for generating training data, trafilatura for gathering web text and metadata, RepoToTextForLLMs for fetching repo content, marker for converting PDFs, reader for converting URLs to LLM-friendly inputs, and files-to-prompt for concatenating files into prompts for LLMs.

skyvern

Skyvern automates browser-based workflows using LLMs and computer vision. It provides a simple API endpoint to fully automate manual workflows, replacing brittle or unreliable automation solutions. Traditional approaches to browser automations required writing custom scripts for websites, often relying on DOM parsing and XPath-based interactions which would break whenever the website layouts changed. Instead of only relying on code-defined XPath interactions, Skyvern adds computer vision and LLMs to the mix to parse items in the viewport in real-time, create a plan for interaction and interact with them. This approach gives us a few advantages: 1. Skyvern can operate on websites it’s never seen before, as it’s able to map visual elements to actions necessary to complete a workflow, without any customized code 2. Skyvern is resistant to website layout changes, as there are no pre-determined XPaths or other selectors our system is looking for while trying to navigate 3. Skyvern leverages LLMs to reason through interactions to ensure we can cover complex situations. Examples include: 1. If you wanted to get an auto insurance quote from Geico, the answer to a common question “Were you eligible to drive at 18?” could be inferred from the driver receiving their license at age 16 2. If you were doing competitor analysis, it’s understanding that an Arnold Palmer 22 oz can at 7/11 is almost definitely the same product as a 23 oz can at Gopuff (even though the sizes are slightly different, which could be a rounding error!) Want to see examples of Skyvern in action? Jump to #real-world-examples-of- skyvern

airbyte-connectors

This repository contains Airbyte connectors used in Faros and Faros Community Edition platforms as well as Airbyte Connector Development Kit (CDK) for JavaScript/TypeScript.

open-parse

Open Parse is a Python library for visually discerning document layouts and chunking them effectively. It is designed to fill the gap in open-source libraries for handling complex documents. Unlike text splitting, which converts a file to raw text and slices it up, Open Parse visually analyzes documents for superior LLM input. It also supports basic markdown for parsing headings, bold, and italics, and has high-precision table support, extracting tables into clean Markdown formats with accuracy that surpasses traditional tools. Open Parse is extensible, allowing users to easily implement their own post-processing steps. It is also intuitive, with great editor support and completion everywhere, making it easy to use and learn.

unstract

Unstract is a no-code platform that enables users to launch APIs and ETL pipelines to structure unstructured documents. With Unstract, users can go beyond co-pilots by enabling machine-to-machine automation. Unstract's Prompt Studio provides a simple, no-code approach to creating prompts for LLMs, vector databases, embedding models, and text extractors. Users can then configure Prompt Studio projects as API deployments or ETL pipelines to automate critical business processes that involve complex documents. Unstract supports a wide range of LLM providers, vector databases, embeddings, text extractors, ETL sources, and ETL destinations, providing users with the flexibility to choose the best tools for their needs.

For similar jobs

lollms-webui

LoLLMs WebUI (Lord of Large Language Multimodal Systems: One tool to rule them all) is a user-friendly interface to access and utilize various LLM (Large Language Models) and other AI models for a wide range of tasks. With over 500 AI expert conditionings across diverse domains and more than 2500 fine tuned models over multiple domains, LoLLMs WebUI provides an immediate resource for any problem, from car repair to coding assistance, legal matters, medical diagnosis, entertainment, and more. The easy-to-use UI with light and dark mode options, integration with GitHub repository, support for different personalities, and features like thumb up/down rating, copy, edit, and remove messages, local database storage, search, export, and delete multiple discussions, make LoLLMs WebUI a powerful and versatile tool.

Azure-Analytics-and-AI-Engagement

The Azure-Analytics-and-AI-Engagement repository provides packaged Industry Scenario DREAM Demos with ARM templates (Containing a demo web application, Power BI reports, Synapse resources, AML Notebooks etc.) that can be deployed in a customer’s subscription using the CAPE tool within a matter of few hours. Partners can also deploy DREAM Demos in their own subscriptions using DPoC.

minio

MinIO is a High Performance Object Storage released under GNU Affero General Public License v3.0. It is API compatible with Amazon S3 cloud storage service. Use MinIO to build high performance infrastructure for machine learning, analytics and application data workloads.

mage-ai

Mage is an open-source data pipeline tool for transforming and integrating data. It offers an easy developer experience, engineering best practices built-in, and data as a first-class citizen. Mage makes it easy to build, preview, and launch data pipelines, and provides observability and scaling capabilities. It supports data integrations, streaming pipelines, and dbt integration.

AiTreasureBox

AiTreasureBox is a versatile AI tool that provides a collection of pre-trained models and algorithms for various machine learning tasks. It simplifies the process of implementing AI solutions by offering ready-to-use components that can be easily integrated into projects. With AiTreasureBox, users can quickly prototype and deploy AI applications without the need for extensive knowledge in machine learning or deep learning. The tool covers a wide range of tasks such as image classification, text generation, sentiment analysis, object detection, and more. It is designed to be user-friendly and accessible to both beginners and experienced developers, making AI development more efficient and accessible to a wider audience.

tidb

TiDB is an open-source distributed SQL database that supports Hybrid Transactional and Analytical Processing (HTAP) workloads. It is MySQL compatible and features horizontal scalability, strong consistency, and high availability.

airbyte

Airbyte is an open-source data integration platform that makes it easy to move data from any source to any destination. With Airbyte, you can build and manage data pipelines without writing any code. Airbyte provides a library of pre-built connectors that make it easy to connect to popular data sources and destinations. You can also create your own connectors using Airbyte's no-code Connector Builder or low-code CDK. Airbyte is used by data engineers and analysts at companies of all sizes to build and manage their data pipelines.

labelbox-python

Labelbox is a data-centric AI platform for enterprises to develop, optimize, and use AI to solve problems and power new products and services. Enterprises use Labelbox to curate data, generate high-quality human feedback data for computer vision and LLMs, evaluate model performance, and automate tasks by combining AI and human-centric workflows. The academic & research community uses Labelbox for cutting-edge AI research.