firecrawl-mcp-server

Official Firecrawl MCP Server. Adds powerful web scraping to Cursor, Claude, and other LLM clients.

Stars: 116

Firecrawl MCP Server is a Model Context Protocol (MCP) server implementation that integrates with Firecrawl for web scraping. It supports scrape, crawl, search, extract, and batch scrape operations, with JS rendering, URL discovery, web search with content extraction, automatic retries with exponential backoff, credit usage monitoring, a comprehensive logging system, support for cloud and self-hosted FireCrawl instances, mobile/desktop viewport support, and smart content filtering with tag inclusion/exclusion. Retry behavior and credit usage monitoring are configurable; the server also handles rate limiting and batch processing, and exposes tools for scraping, batch scraping, checking batch status, searching, crawling, and extracting structured information from web pages.

README:

A Model Context Protocol (MCP) server implementation that integrates with Firecrawl for web scraping capabilities.

Big thanks to @vrknetha, @cawstudios for the initial implementation!

- Scrape, crawl, search, extract and batch scrape support

- Web scraping with JS rendering

- URL discovery and crawling

- Web search with content extraction

- Automatic retries with exponential backoff

- Efficient batch processing with built-in rate limiting

- Credit usage monitoring for cloud API

- Comprehensive logging system

- Support for cloud and self-hosted FireCrawl instances

- Mobile/Desktop viewport support

- Smart content filtering with tag inclusion/exclusion

Run with npx:

    env FIRECRAWL_API_KEY=fc-YOUR_API_KEY npx -y firecrawl-mcp

Or install globally:

    npm install -g firecrawl-mcp

Configuring Cursor 🖥️

Note: Requires Cursor version 0.45.6+

To configure FireCrawl MCP in Cursor:

- Open Cursor Settings

- Go to Features > MCP Servers

- Click "+ Add New MCP Server"

- Enter the following:

  - Name: "firecrawl-mcp" (or your preferred name)

  - Type: "command"

  - Command: `env FIRECRAWL_API_KEY=your-api-key npx -y firecrawl-mcp`

Replace `your-api-key` with your FireCrawl API key.

After adding, refresh the MCP server list to see the new tools. The Composer Agent will automatically use FireCrawl MCP when appropriate, but you can explicitly request it by describing your web scraping needs. Access the Composer via Command+L (Mac), select "Agent" next to the submit button, and enter your query.

To install FireCrawl for Claude Desktop automatically via Smithery:

    npx -y @smithery/cli install @mendableai/mcp-server-firecrawl --client claude

- FIRECRAWL_API_KEY: Your FireCrawl API key

  - Required when using cloud API (default)

  - Optional when using self-hosted instance with FIRECRAWL_API_URL

- FIRECRAWL_API_URL (Optional): Custom API endpoint for self-hosted instances

  - Example: https://firecrawl.your-domain.com

  - If not provided, the cloud API will be used (requires API key)

- FIRECRAWL_RETRY_MAX_ATTEMPTS: Maximum number of retry attempts (default: 3)

- FIRECRAWL_RETRY_INITIAL_DELAY: Initial delay in milliseconds before first retry (default: 1000)

- FIRECRAWL_RETRY_MAX_DELAY: Maximum delay in milliseconds between retries (default: 10000)

- FIRECRAWL_RETRY_BACKOFF_FACTOR: Exponential backoff multiplier (default: 2)

- FIRECRAWL_CREDIT_WARNING_THRESHOLD: Credit usage warning threshold (default: 1000)

- FIRECRAWL_CREDIT_CRITICAL_THRESHOLD: Credit usage critical threshold (default: 100)
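The variables above can be read into a single configuration object. The following is a minimal sketch, not the server's actual code: `loadConfig`, `numberFromEnv`, and the `FirecrawlConfig` shape are hypothetical names, and falling back to the default on an unparsable number is an assumption. Only the variable names, defaults, and the "API key required unless a self-hosted URL is set" rule come from the list above.

```typescript
// Hypothetical helper types; the real server may structure this differently.
type Env = Record<string, string | undefined>;

interface FirecrawlConfig {
  apiKey?: string;
  apiUrl?: string;
  retry: {
    maxAttempts: number;
    initialDelay: number;
    maxDelay: number;
    backoffFactor: number;
  };
  credit: { warningThreshold: number; criticalThreshold: number };
}

// Parse a numeric variable, falling back to the documented default when it
// is unset, empty, or not a finite number (the fallback is an assumption).
function numberFromEnv(env: Env, name: string, fallback: number): number {
  const raw = env[name];
  if (raw === undefined || raw.trim() === "") return fallback;
  const parsed = Number(raw);
  return Number.isFinite(parsed) ? parsed : fallback;
}

function loadConfig(env: Env): FirecrawlConfig {
  const apiKey = env["FIRECRAWL_API_KEY"];
  const apiUrl = env["FIRECRAWL_API_URL"];
  // The cloud API is the default, so a key is required unless a
  // self-hosted endpoint is supplied.
  if (!apiUrl && !apiKey) {
    throw new Error(
      "FIRECRAWL_API_KEY is required when FIRECRAWL_API_URL is not set"
    );
  }
  return {
    apiKey,
    apiUrl,
    retry: {
      maxAttempts: numberFromEnv(env, "FIRECRAWL_RETRY_MAX_ATTEMPTS", 3),
      initialDelay: numberFromEnv(env, "FIRECRAWL_RETRY_INITIAL_DELAY", 1000),
      maxDelay: numberFromEnv(env, "FIRECRAWL_RETRY_MAX_DELAY", 10000),
      backoffFactor: numberFromEnv(env, "FIRECRAWL_RETRY_BACKOFF_FACTOR", 2),
    },
    credit: {
      warningThreshold: numberFromEnv(env, "FIRECRAWL_CREDIT_WARNING_THRESHOLD", 1000),
      criticalThreshold: numberFromEnv(env, "FIRECRAWL_CREDIT_CRITICAL_THRESHOLD", 100),
    },
  };
}

// Usage: only the retry count is overridden; everything else keeps its default.
const config = loadConfig({
  FIRECRAWL_API_KEY: "fc-YOUR_API_KEY",
  FIRECRAWL_RETRY_MAX_ATTEMPTS: "5",
});
console.log(config.retry);
```

Passing the environment in as a plain object, rather than reading it globally, keeps the sketch easy to exercise with different settings.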

For cloud API usage with custom retry and credit monitoring:

    # Required for cloud API
    export FIRECRAWL_API_KEY=your-api-key

    # Optional retry configuration
    export FIRECRAWL_RETRY_MAX_ATTEMPTS=5        # Increase max retry attempts
    export FIRECRAWL_RETRY_INITIAL_DELAY=2000    # Start with 2s delay
    export FIRECRAWL_RETRY_MAX_DELAY=30000       # Maximum 30s delay
    export FIRECRAWL_RETRY_BACKOFF_FACTOR=3      # More aggressive backoff

    # Optional credit monitoring
    export FIRECRAWL_CREDIT_WARNING_THRESHOLD=2000    # Warning at 2000 credits
    export FIRECRAWL_CREDIT_CRITICAL_THRESHOLD=500    # Critical at 500 credits

For self-hosted instance:

    # Required for self-hosted
    export FIRECRAWL_API_URL=https://firecrawl.your-domain.com

    # Optional authentication for self-hosted
    export FIRECRAWL_API_KEY=your-api-key  # If your instance requires auth

    # Custom retry configuration
    export FIRECRAWL_RETRY_MAX_ATTEMPTS=10
    export FIRECRAWL_RETRY_INITIAL_DELAY=500     # Start with faster retries

Add this to your claude_desktop_config.json:

    {
      "mcpServers": {
        "mcp-server-firecrawl": {
          "command": "npx",
          "args": ["-y", "mcp-server-firecrawl"],
          "env": {
            "FIRECRAWL_API_KEY": "YOUR_API_KEY_HERE",
            "FIRECRAWL_RETRY_MAX_ATTEMPTS": "5",
            "FIRECRAWL_RETRY_INITIAL_DELAY": "2000",
            "FIRECRAWL_RETRY_MAX_DELAY": "30000",
            "FIRECRAWL_RETRY_BACKOFF_FACTOR": "3",
            "FIRECRAWL_CREDIT_WARNING_THRESHOLD": "2000",
            "FIRECRAWL_CREDIT_CRITICAL_THRESHOLD": "500"
          }
        }
      }
    }

The server includes several configurable parameters that can be set via environment variables. Here are the default values if not configured:

    const CONFIG = {
      retry: {
        maxAttempts: 3, // Number of retry attempts for rate-limited requests
        initialDelay: 1000, // Initial delay before first retry (in milliseconds)
        maxDelay: 10000, // Maximum delay between retries (in milliseconds)
        backoffFactor: 2, // Multiplier for exponential backoff
      },
      credit: {
        warningThreshold: 1000, // Warn when credit usage reaches this level
        criticalThreshold: 100, // Critical alert when credit usage reaches this level
      },
    };

These configurations control:

- Retry Behavior

  - Automatically retries failed requests due to rate limits

  - Uses exponential backoff to avoid overwhelming the API

  - Example: With default settings, retries will be attempted at:

    - 1st retry: 1 second delay

    - 2nd retry: 2 seconds delay

    - 3rd retry: 4 seconds delay (every delay is capped at maxDelay)

- Credit Usage Monitoring

  - Tracks API credit consumption for cloud API usage

  - Provides warnings at specified thresholds

  - Helps prevent unexpected service interruption

  - Example: With default settings:

    - Warning at 1000 credits remaining

    - Critical alert at 100 credits remaining
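The retry schedule and credit thresholds described above can be worked through in a few lines. This is a sketch under stated assumptions rather than the server's implementation: `retryDelay`, `retrySchedule`, and `creditLevel` are hypothetical names, the formula `initialDelay * backoffFactor^(n-1)` capped at `maxDelay` is inferred from the 1s/2s/4s example, no jitter is modeled, and thresholds are assumed to be compared against credits remaining.

```typescript
interface RetryConfig {
  maxAttempts: number;
  initialDelay: number; // milliseconds
  maxDelay: number; // milliseconds
  backoffFactor: number;
}

interface CreditConfig {
  warningThreshold: number;
  criticalThreshold: number;
}

// Delay before the n-th retry (1-based), capped at maxDelay.
function retryDelay(retry: number, cfg: RetryConfig): number {
  const uncapped = cfg.initialDelay * Math.pow(cfg.backoffFactor, retry - 1);
  return Math.min(uncapped, cfg.maxDelay);
}

// Full list of delays for all configured retries.
function retrySchedule(cfg: RetryConfig): number[] {
  const delays: number[] = [];
  for (let retry = 1; retry <= cfg.maxAttempts; retry++) {
    delays.push(retryDelay(retry, cfg));
  }
  return delays;
}

type CreditLevel = "ok" | "warning" | "critical";

// Assumption: thresholds are checked against the credits remaining.
function creditLevel(remaining: number, cfg: CreditConfig): CreditLevel {
  if (remaining <= cfg.criticalThreshold) return "critical";
  if (remaining <= cfg.warningThreshold) return "warning";
  return "ok";
}

const DEFAULT_RETRY: RetryConfig = {
  maxAttempts: 3,
  initialDelay: 1000,
  maxDelay: 10000,
  backoffFactor: 2,
};
const DEFAULT_CREDIT: CreditConfig = {
  warningThreshold: 1000,
  criticalThreshold: 100,
};

console.log(retrySchedule(DEFAULT_RETRY)); // [ 1000, 2000, 4000 ]

// The custom cloud settings shown earlier reach the 30s cap on the 4th retry.
console.log(
  retrySchedule({ maxAttempts: 5, initialDelay: 2000, maxDelay: 30000, backoffFactor: 3 })
); // [ 2000, 6000, 18000, 30000, 30000 ]

console.log(creditLevel(750, DEFAULT_CREDIT)); // warning
```

With the defaults the cap never applies, since the largest delay (4 seconds) is below `maxDelay` (10 seconds); it only matters for more retries or a larger backoff factor.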

The server utilizes FireCrawl's built-in rate limiting and batch processing capabilities:

- Automatic rate limit handling with exponential backoff

- Efficient parallel processing for batch operations

- Smart request queuing and throttling

- Automatic retries for transient errors

Scrape content from a single URL with advanced options.

    {
      "name": "firecrawl_scrape",
      "arguments": {
        "url": "https://example.com",
        "formats": ["markdown"],
        "onlyMainContent": true,
        "waitFor": 1000,
        "timeout": 30000,
        "mobile": false,
        "includeTags": ["article", "main"],
        "excludeTags": ["nav", "footer"],
        "skipTlsVerification": false
      }
    }

Scrape multiple URLs efficiently with built-in rate limiting and parallel processing.

    {
      "name": "firecrawl_batch_scrape",
      "arguments": {
        "urls": ["https://example1.com", "https://example2.com"],
        "options": {
          "formats": ["markdown"],
          "onlyMainContent": true
        }
      }
    }

Response includes operation ID for status checking:

    {
      "content": [
        {
          "type": "text",
          "text": "Batch operation queued with ID: batch_1. Use firecrawl_check_batch_status to check progress."
        }
      ],
      "isError": false
    }

Check the status of a batch operation.

    {
      "name": "firecrawl_check_batch_status",
      "arguments": {
        "id": "batch_1"
      }
    }

Search the web and optionally extract content from search results.

    {
      "name": "firecrawl_search",
      "arguments": {
        "query": "your search query",
        "limit": 5,
        "lang": "en",
        "country": "us",
        "scrapeOptions": {
          "formats": ["markdown"],
          "onlyMainContent": true
        }
      }
    }

Start an asynchronous crawl with advanced options.

    {
      "name": "firecrawl_crawl",
      "arguments": {
        "url": "https://example.com",
        "maxDepth": 2,
        "limit": 100,
        "allowExternalLinks": false,
        "deduplicateSimilarURLs": true
      }
    }

Extract structured information from web pages using LLM capabilities. Supports both cloud AI and self-hosted LLM extraction.

    {
      "name": "firecrawl_extract",
      "arguments": {
        "urls": ["https://example.com/page1", "https://example.com/page2"],
        "prompt": "Extract product information including name, price, and description",
        "systemPrompt": "You are a helpful assistant that extracts product information",
        "schema": {
          "type": "object",
          "properties": {
            "name": { "type": "string" },
            "price": { "type": "number" },
            "description": { "type": "string" }
          },
          "required": ["name", "price"]
        },
        "allowExternalLinks": false,
        "enableWebSearch": false,
        "includeSubdomains": false
      }
    }

Example response:

    {
      "content": [
        {
          "type": "text",
          "text": {
            "name": "Example Product",
            "price": 99.99,
            "description": "This is an example product description"
          }
        }
      ],
      "isError": false
    }

- urls: Array of URLs to extract information from

- prompt: Custom prompt for the LLM extraction

- systemPrompt: System prompt to guide the LLM

- schema: JSON schema for structured data extraction

- allowExternalLinks: Allow extraction from external links

- enableWebSearch: Enable web search for additional context

- includeSubdomains: Include subdomains in extraction

When using a self-hosted instance, the extraction will use your configured LLM. For cloud API, it uses FireCrawl's managed LLM service.
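Because extraction is LLM-driven, a caller may want to check the returned object against the schema it sent to `firecrawl_extract`. The sketch below is illustrative only: `validateExtraction` and the `ObjectSchema` type are hypothetical, and the check covers just the flat subset of JSON Schema used in the example above (primitive property types plus `required`), not the full specification.

```typescript
type PrimitiveType = "string" | "number" | "boolean";

// Flat subset of JSON Schema, matching the shape of the example schema.
interface ObjectSchema {
  type: "object";
  properties: Record<string, { type: PrimitiveType }>;
  required?: string[];
}

// Returns a list of problems; an empty list means the data passed the check.
function validateExtraction(
  data: Record<string, unknown>,
  schema: ObjectSchema
): string[] {
  const problems: string[] = [];
  for (const key of schema.required ?? []) {
    if (!(key in data)) problems.push(`missing required field: ${key}`);
  }
  for (const [key, spec] of Object.entries(schema.properties)) {
    if (key in data && typeof data[key] !== spec.type) {
      problems.push(`field ${key} should be ${spec.type}`);
    }
  }
  return problems;
}

// The schema from the firecrawl_extract example.
const productSchema: ObjectSchema = {
  type: "object",
  properties: {
    name: { type: "string" },
    price: { type: "number" },
    description: { type: "string" },
  },
  required: ["name", "price"],
};

console.log(
  validateExtraction(
    { name: "Example Product", price: 99.99, description: "This is an example product description" },
    productSchema
  )
); // []

console.log(validateExtraction({ name: "Example Product", price: "99.99" }, productSchema));
// [ 'field price should be number' ]
```

For nested schemas, arrays, or formats, a full JSON Schema validator would be the better choice; this sketch only illustrates the idea.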

The server includes comprehensive logging:

- Operation status and progress

- Performance metrics

- Credit usage monitoring

- Rate limit tracking

- Error conditions

Example log messages:

    [INFO] FireCrawl MCP Server initialized successfully
    [INFO] Starting scrape for URL: https://example.com
    [INFO] Batch operation queued with ID: batch_1
    [WARNING] Credit usage has reached warning threshold
    [ERROR] Rate limit exceeded, retrying in 2s...
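Log lines in this format are easy to split into a level and a message, for example to filter warnings or to pick up a batch ID. The sketch below assumes the `[LEVEL] message` layout and the three levels seen in the example messages; the server may emit other levels or formats. `parseLogLine` and `batchIdFromMessage` are hypothetical helpers.

```typescript
type LogLevel = "INFO" | "WARNING" | "ERROR";

interface LogEntry {
  level: LogLevel;
  message: string;
}

// Split "[LEVEL] message" into its parts; returns null for other layouts.
function parseLogLine(line: string): LogEntry | null {
  const match = /^\[(INFO|WARNING|ERROR)\] (.*)$/.exec(line);
  if (match === null) return null;
  return { level: match[1] as LogLevel, message: match[2] ?? "" };
}

// Pull the operation ID out of a "queued with ID: ..." message, if present.
function batchIdFromMessage(message: string): string | null {
  const match = /queued with ID: ([A-Za-z0-9_-]+)/.exec(message);
  return match === null ? null : match[1] ?? null;
}

const entry = parseLogLine("[INFO] Batch operation queued with ID: batch_1");
console.log(entry); // { level: 'INFO', message: 'Batch operation queued with ID: batch_1' }
if (entry !== null) {
  console.log(batchIdFromMessage(entry.message)); // batch_1
}
```

The same `batchIdFromMessage` pattern also matches the text returned by `firecrawl_batch_scrape`, since that response uses the same "queued with ID" wording.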

The server provides robust error handling:

- Automatic retries for transient errors

- Rate limit handling with backoff

- Detailed error messages

- Credit usage warnings

- Network resilience
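On the client side, a caller can branch on the `isError` flag that accompanies every tool result. A minimal sketch, assuming results have the `{ content: [{ type, text }], isError }` shape used by the example responses in this README; `resultText` and `unwrapToolResult` are hypothetical names, not part of the server.

```typescript
interface TextContent {
  type: "text";
  text: string;
}

interface ToolResult {
  content: TextContent[];
  isError: boolean;
}

// Join all text parts of a result into one string.
function resultText(result: ToolResult): string {
  return result.content
    .filter((part) => part.type === "text")
    .map((part) => part.text)
    .join("\n");
}

// Return the text on success; throw with the server's message on failure.
function unwrapToolResult(result: ToolResult): string {
  const text = resultText(result);
  if (result.isError) {
    throw new Error(text);
  }
  return text;
}

const queued: ToolResult = {
  content: [{ type: "text", text: "Batch operation queued with ID: batch_1." }],
  isError: false,
};
console.log(unwrapToolResult(queued)); // Batch operation queued with ID: batch_1.
```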

Example error response:

    {
      "content": [
        {
          "type": "text",
          "text": "Error: Rate limit exceeded. Retrying in 2 seconds..."
        }
      ],
      "isError": true
    }

    # Install dependencies
    npm install

    # Build
    npm run build

    # Run tests
    npm test

- Fork the repository

- Create your feature branch

- Run tests: `npm test`

- Submit a pull request

MIT License - see LICENSE file for details

Alternative AI tools for firecrawl-mcp-server

Similar Open Source Tools

firecrawl-mcp-server

Firecrawl MCP Server is a Model Context Protocol (MCP) server implementation that integrates with Firecrawl for web scraping capabilities. It offers features such as web scraping, crawling, and discovery, search and content extraction, deep research and batch scraping, automatic retries and rate limiting, cloud and self-hosted support, and SSE support. The server can be configured to run with various tools like Cursor, Windsurf, SSE Local Mode, Smithery, and VS Code. It supports environment variables for cloud API and optional configurations for retry settings and credit usage monitoring. The server includes tools for scraping, batch scraping, mapping, searching, crawling, and extracting structured data from web pages. It provides detailed logging and error handling functionalities for robust performance.

ChannelMonitor

Channel Monitor is a tool for monitoring OneAPI/NewAPI channels by testing the availability of each channel's models at regular intervals. It updates available models in the database, supports exclusion of channels and models, configurable intervals, multiple database types, concurrent testing, request rate limiting, Uptime Kuma integration, update notifications via SMTP email and Telegram Bot, and JSON/YAML configuration formats.

typst-mcp

Typst MCP Server is an implementation of the Model Context Protocol (MCP) that facilitates interaction between AI models and Typst, a markup-based typesetting system. The server offers tools for converting between LaTeX and Typst, validating Typst syntax, and generating images from Typst code. It provides functions such as listing documentation chapters, retrieving specific chapters, converting LaTeX snippets to Typst, validating Typst syntax, and rendering Typst code to images. The server is designed to assist Large Language Models (LLMs) in handling Typst-related tasks efficiently and accurately.

mcp-server-odoo

The MCP Server for Odoo is a tool that enables AI assistants like Claude to interact with Odoo ERP systems. Users can access business data, search records, create new entries, update existing data, and manage their Odoo instance through natural language. The server works with any Odoo instance and offers features like search and retrieve, create new records, update existing data, delete records, browse multiple records, count records, inspect model fields, secure access, smart pagination, LLM-optimized output, and YOLO Mode for quick access. Installation and configuration instructions are provided for different environments, along with troubleshooting tips. The tool supports various tasks such as searching and retrieving records, creating and managing records, listing models, updating records, deleting records, and accessing Odoo data through resource URIs.

mcp-omnisearch

mcp-omnisearch is a Model Context Protocol (MCP) server that acts as a unified gateway to multiple search providers and AI tools. It integrates Tavily, Perplexity, Kagi, Jina AI, Brave, Exa AI, and Firecrawl to offer a wide range of search, AI response, content processing, and enhancement features through a single interface. The server provides powerful search capabilities, AI response generation, content extraction, summarization, web scraping, structured data extraction, and more. It is designed to work flexibly with the API keys available, enabling users to activate only the providers they have keys for and easily add more as needed.

nexus

Nexus is a tool that acts as a unified gateway for multiple LLM providers and MCP servers. It allows users to aggregate, govern, and control their AI stack by connecting multiple servers and providers through a single endpoint. Nexus provides features like MCP Server Aggregation, LLM Provider Routing, Context-Aware Tool Search, Protocol Support, Flexible Configuration, Security features, Rate Limiting, and Docker readiness. It supports tool calling, tool discovery, and error handling for STDIO servers. Nexus also integrates with AI assistants, Cursor, Claude Code, and LangChain for seamless usage.

VectorETL

VectorETL is a lightweight ETL framework designed to assist Data & AI engineers in processing data for AI applications quickly. It streamlines the conversion of diverse data sources into vector embeddings and storage in various vector databases. The framework supports multiple data sources, embedding models, and vector database targets, simplifying the creation and management of vector search systems for semantic search, recommendation systems, and other vector-based operations.

ruby-openai

Use the OpenAI API with Ruby! 🤖🩵 Stream text with GPT-4, transcribe and translate audio with Whisper, or create images with DALL·E.

ZerePy

ZerePy is an open-source Python framework for deploying agents on X using OpenAI or Anthropic LLMs. It offers CLI interface, Twitter integration, and modular connection system. Users can fine-tune models for creative outputs and create agents with specific tasks. The tool requires Python 3.10+, Poetry 1.5+, and API keys for LLM, OpenAI, Anthropic, and X API.

AICentral

AI Central is a powerful tool designed to take control of your AI services with minimal overhead. It is built on Asp.Net Core and dotnet 8, offering fast web-server performance. The tool enables advanced Azure APIm scenarios, PII stripping logging to Cosmos DB, token metrics through Open Telemetry, and intelligent routing features. AI Central supports various endpoint selection strategies, proxying asynchronous requests, custom OAuth2 authorization, circuit breakers, rate limiting, and extensibility through plugins. It provides an extensibility model for easy plugin development and offers enriched telemetry and logging capabilities for monitoring and insights.

RagaAI-Catalyst

RagaAI Catalyst is a comprehensive platform designed to enhance the management and optimization of LLM projects. It offers features such as project management, dataset management, evaluation management, trace management, prompt management, synthetic data generation, and guardrail management. These functionalities enable efficient evaluation and safeguarding of LLM applications.

mcp

Semgrep MCP Server is a beta server under active development for using Semgrep to scan code for security vulnerabilities. It provides a Model Context Protocol (MCP) for various coding tools to get specialized help in tasks. Users can connect to Semgrep AppSec Platform, scan code for vulnerabilities, customize Semgrep rules, analyze and filter scan results, and compare results. The tool is published on PyPI as semgrep-mcp and can be installed using pip, pipx, uv, poetry, or other methods. It supports CLI and Docker environments for running the server. Integration with VS Code is also available for quick installation. The project welcomes contributions and is inspired by core technologies like Semgrep and MCP, as well as related community projects and tools.

aiavatarkit

AIAvatarKit is a tool for building AI-based conversational avatars quickly. It supports various platforms like VRChat and cluster, along with real-world devices. The tool is extensible, allowing unlimited capabilities based on user needs. It requires VOICEVOX API, Google or Azure Speech Services API keys, and Python 3.10. Users can start conversations out of the box and enjoy seamless interactions with the avatars.

crush

Crush is a versatile tool designed to enhance coding workflows in your terminal. It offers support for multiple LLMs, allows for flexible switching between models, and enables session-based work management. Crush is extensible through MCPs and works across various operating systems. It can be installed using package managers like Homebrew and NPM, or downloaded directly. Crush supports various APIs like Anthropic, OpenAI, Groq, and Google Gemini, and allows for customization through environment variables. The tool can be configured locally or globally, and supports LSPs for additional context. Crush also provides options for ignoring files, allowing tools, and configuring local models. It respects `.gitignore` files and offers logging capabilities for troubleshooting and debugging.

instructor

Instructor is a tool that provides structured outputs from Large Language Models (LLMs) in a reliable manner. It simplifies the process of extracting structured data by utilizing Pydantic for validation, type safety, and IDE support. With Instructor, users can define models and easily obtain structured data without the need for complex JSON parsing, error handling, or retries. The tool supports automatic retries, streaming support, and extraction of nested objects, making it production-ready for various AI applications. Trusted by a large community of developers and companies, Instructor is used by teams at OpenAI, Google, Microsoft, AWS, and YC startups.

For similar tasks

crawl4ai

Crawl4AI is a powerful and free web crawling service that extracts valuable data from websites and provides LLM-friendly output formats. It supports crawling multiple URLs simultaneously, replaces media tags with ALT, and is completely free to use and open-source. Users can integrate Crawl4AI into Python projects as a library or run it as a standalone local server. The tool allows users to crawl and extract data from specified URLs using different providers and models, with options to include raw HTML content, force fresh crawls, and extract meaningful text blocks. Configuration settings can be adjusted in the `crawler/config.py` file to customize providers, API keys, chunk processing, and word thresholds. Contributions to Crawl4AI are welcome from the open-source community to enhance its value for AI enthusiasts and developers.

aio-scrapy

Aio-scrapy is an asyncio-based web crawling and web scraping framework inspired by Scrapy. It supports distributed crawling/scraping, implements compatibility with scrapyd, and provides options for using redis queue and rabbitmq queue. The framework is designed for fast extraction of structured data from websites. Aio-scrapy requires Python 3.9+ and is compatible with Linux, Windows, macOS, and BSD systems.

firecrawl-mcp-server

Firecrawl MCP Server is a Model Context Protocol (MCP) server implementation that integrates with Firecrawl for web scraping capabilities. It supports features like scrape, crawl, search, extract, and batch scrape. It provides web scraping with JS rendering, URL discovery, web search with content extraction, automatic retries with exponential backoff, credit usage monitoring, comprehensive logging system, support for cloud and self-hosted FireCrawl instances, mobile/desktop viewport support, and smart content filtering with tag inclusion/exclusion. The server includes configurable parameters for retry behavior and credit usage monitoring, rate limiting and batch processing capabilities, and tools for scraping, batch scraping, checking batch status, searching, crawling, and extracting structured information from web pages.

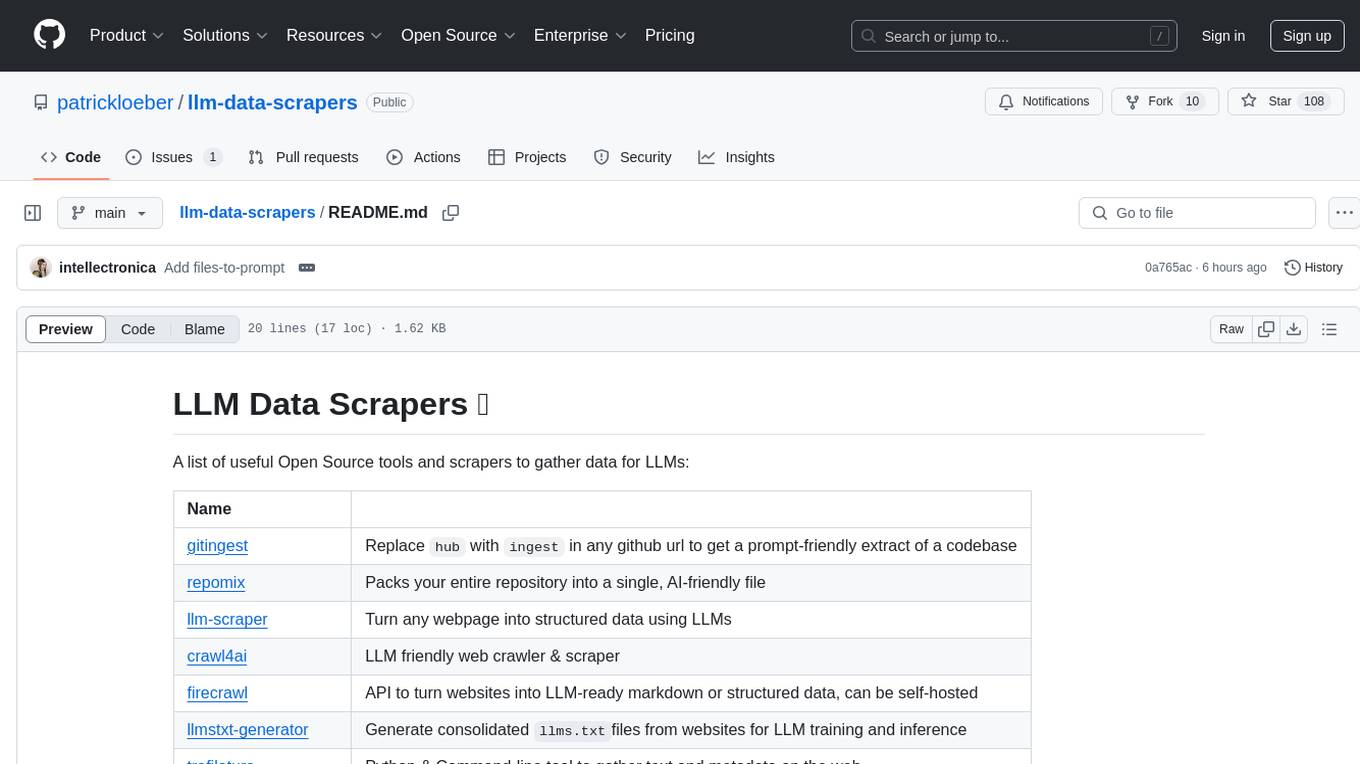

llm-data-scrapers

LLM Data Scrapers is a collection of open source tools and scrapers designed to gather data for Large Language Models (LLMs). The repository includes various tools such as gitingest for extracting codebases, repomix for packing repositories into AI-friendly files, llm-scraper for converting webpages into structured data, crawl4ai for web crawling, and firecrawl for turning websites into LLM-ready markdown or structured data. Additionally, the repository offers tools like llmstxt-generator for generating training data, trafilatura for gathering web text and metadata, RepoToTextForLLMs for fetching repo content, marker for converting PDFs, reader for converting URLs to LLM-friendly inputs, and files-to-prompt for concatenating files into prompts for LLMs.

WaterCrawl

WaterCrawl is a powerful web application that uses Python, Django, Scrapy, and Celery to crawl web pages and extract relevant data. It provides advanced web crawling and scraping capabilities, a powerful search engine, multi-language support, asynchronous processing, REST API with OpenAPI, rich ecosystem integrations, self-hosted and open-source options, and advanced results handling. The tool allows users to crawl websites with customizable options, search for relevant content across the web, monitor real-time progress of crawls, and process search results with customizable parameters.

extractor

Extractor is an AI-powered data extraction library for Laravel that leverages OpenAI's capabilities to effortlessly extract structured data from various sources, including images, PDFs, and emails. It features a convenient wrapper around OpenAI Chat and Completion endpoints, supports multiple input formats, includes a flexible Field Extractor for arbitrary data extraction, and integrates with Textract for OCR functionality. Extractor utilizes JSON Mode from the latest GPT-3.5 and GPT-4 models, providing accurate and efficient data extraction.

NeMo-Guardrails

NeMo Guardrails is an open-source toolkit for easily adding _programmable guardrails_ to LLM-based conversational applications. Guardrails (or "rails" for short) are specific ways of controlling the output of a large language model, such as not talking about politics, responding in a particular way to specific user requests, following a predefined dialog path, using a particular language style, extracting structured data, and more.

kor

Kor is a prototype tool designed to help users extract structured data from text using Large Language Models (LLMs). It generates prompts, sends them to specified LLMs, and parses the output. The tool works with the parsing approach and is integrated with the LangChain framework. Kor is compatible with pydantic v2 and v1, and the schema is type-checked using pydantic. It is primarily used for extracting information from text based on provided reference examples and schema documentation. Kor is designed to work with all good-enough LLMs regardless of their support for function/tool calling or JSON modes.

For similar jobs

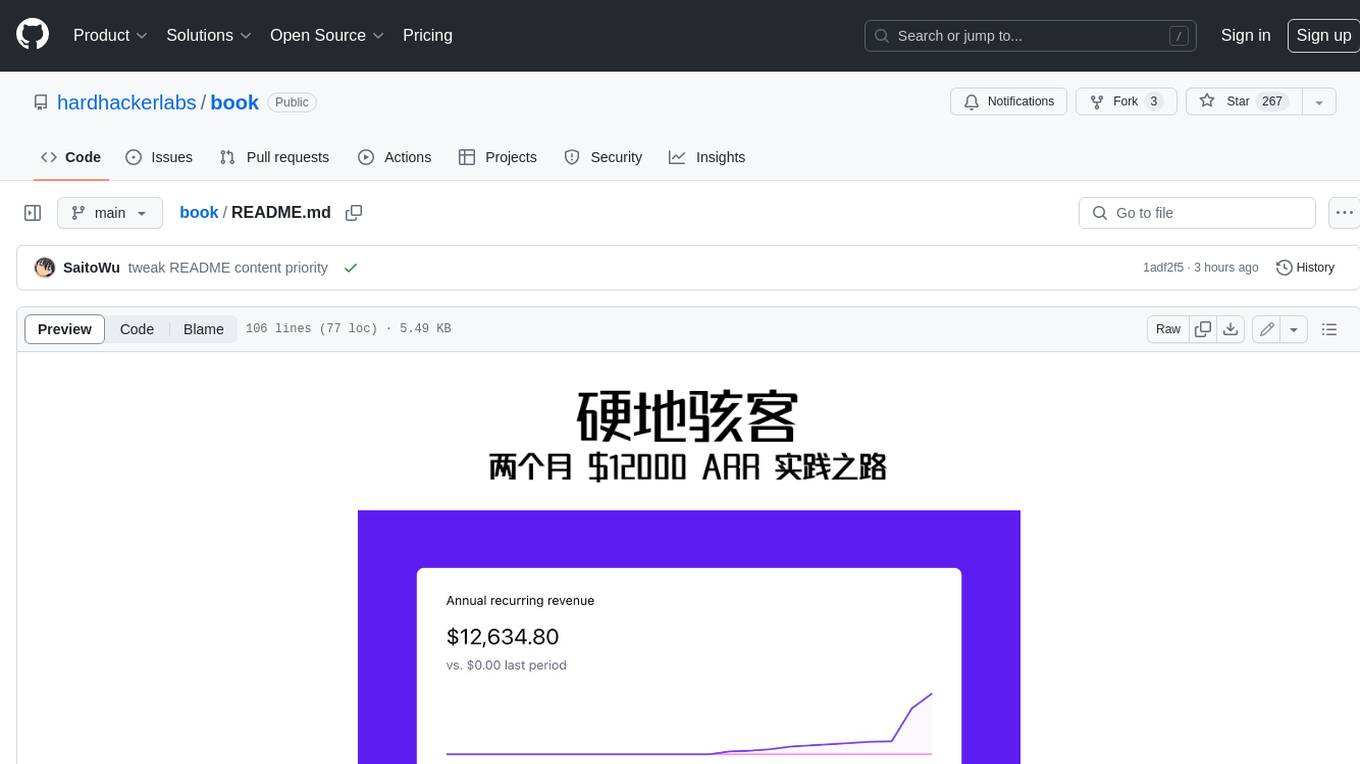

book

Podwise is an AI knowledge management app designed specifically for podcast listeners. With the Podwise platform, you only need to follow your favorite podcasts, such as "Hardcore Hackers". When a program is released, Podwise will use AI to transcribe, extract, summarize, and analyze the podcast content, helping you to break down the hard-core podcast knowledge. At the same time, it is connected to platforms such as Notion, Obsidian, Logseq, and Readwise, embedded in your knowledge management workflow, and integrated with content from other channels including news, newsletters, and blogs, helping you to improve your second brain 🧠.

extractor

Extractor is an AI-powered data extraction library for Laravel that leverages OpenAI's capabilities to effortlessly extract structured data from various sources, including images, PDFs, and emails. It features a convenient wrapper around OpenAI Chat and Completion endpoints, supports multiple input formats, includes a flexible Field Extractor for arbitrary data extraction, and integrates with Textract for OCR functionality. Extractor utilizes JSON Mode from the latest GPT-3.5 and GPT-4 models, providing accurate and efficient data extraction.

Scrapegraph-ai

ScrapeGraphAI is a Python library that uses Large Language Models (LLMs) and direct graph logic to create web scraping pipelines for websites, documents, and XML files. It allows users to extract specific information from web pages by providing a prompt describing the desired data. ScrapeGraphAI supports various LLMs, including Ollama, OpenAI, Gemini, and Docker, enabling users to choose the most suitable model for their needs. The library provides a user-friendly interface through its `SmartScraper` class, which simplifies the process of building and executing scraping pipelines. ScrapeGraphAI is open-source and available on GitHub, with extensive documentation and examples to guide users. It is particularly useful for researchers and data scientists who need to extract structured data from web pages for analysis and exploration.

databerry

Chaindesk is a no-code platform that allows users to easily set up a semantic search system for personal data without technical knowledge. It supports loading data from various sources such as raw text, web pages, and files (Word, Excel, PowerPoint, PDF, Markdown, Plain Text), with upcoming support for websites, Notion, and Airtable. The platform offers a user-friendly interface for managing datastores, querying data via a secure API endpoint, and auto-generating ChatGPT Plugins for each datastore. Chaindesk utilizes a Vector Database (Qdrant), OpenAI's text-embedding-ada-002 for embeddings, and has a chunk size of 1024 tokens. The technology stack includes Next.js, Joy UI, LangchainJS, PostgreSQL, Prisma, and Qdrant, inspired by the ChatGPT Retrieval Plugin.

auto-news

Auto-News is an automatic news aggregator tool that utilizes Large Language Models (LLM) to pull information from various sources such as Tweets, RSS feeds, YouTube videos, web articles, Reddit, and journal notes. The tool aims to help users efficiently read and filter content based on personal interests, providing a unified reading experience and organizing information effectively. It features feed aggregation with summarization, transcript generation for videos and articles, noise reduction, task organization, and deep dive topic exploration. The tool supports multiple LLM backends, offers weekly top-k aggregations, and can be deployed on Linux/MacOS using docker-compose or Kubernetes.

SemanticFinder

SemanticFinder is a frontend-only live semantic search tool that calculates embeddings and cosine similarity client-side using transformers.js and SOTA embedding models from Huggingface. It allows users to search through large texts like books with pre-indexed examples, customize search parameters, and offers data privacy by keeping input text in the browser. The tool can be used for basic search tasks, analyzing texts for recurring themes, and has potential integrations with various applications like wikis, chat apps, and personal history search. It also provides options for building browser extensions and future ideas for further enhancements and integrations.

1filellm

1filellm is a command-line data aggregation tool designed for LLM ingestion. It aggregates and preprocesses data from various sources into a single text file, facilitating the creation of information-dense prompts for large language models. The tool supports automatic source type detection, handling of multiple file formats, web crawling functionality, integration with Sci-Hub for research paper downloads, text preprocessing, and token count reporting. Users can input local files, directories, GitHub repositories, pull requests, issues, ArXiv papers, YouTube transcripts, web pages, Sci-Hub papers via DOI or PMID. The tool provides uncompressed and compressed text outputs, with the uncompressed text automatically copied to the clipboard for easy pasting into LLMs.

Agently-Daily-News-Collector

Agently Daily News Collector is an open-source project showcasing a workflow powered by the Agently AI application development framework. It allows users to generate news collections on various topics by inputting the field topic. The AI agents automatically perform the necessary tasks to generate a high-quality news collection saved in a markdown file. Users can edit settings in the YAML file, install Python and required packages, input their topic idea, and wait for the news collection to be generated. The process involves tasks like outlining, searching, summarizing, and preparing column data. The project dependencies include Agently AI Development Framework, duckduckgo-search, BeautifulSoup4, and PyYAML.