Agently-Daily-News-Collector

An open-source, LLM-based showcase of an automated daily news collection workflow, powered by the Agently AI application development framework.

Stars: 338

Agently Daily News Collector is an open-source project showcasing a workflow powered by the Agently AI application development framework. It allows users to generate news collections on various topics by inputting the field topic. The AI agents automatically perform the necessary tasks to generate a high-quality news collection saved in a markdown file. Users can edit settings in the YAML file, install Python and required packages, input their topic idea, and wait for the news collection to be generated. The process involves tasks like outlining, searching, summarizing, and preparing column data. The project dependencies include Agently AI Development Framework, duckduckgo-search, BeautifulSoup4, and PyYAML.

README:

Agently Daily News Collector is an open-source, LLM-based showcase project for automated news collection, powered by the Agently AI application development framework.

You can use this project to generate a news collection on almost any topic. All you need to do is enter the field or topic of your news collection. Then wait while the AI agents do their jobs automatically until a high-quality news collection is generated and saved to a Markdown file.

News collection file examples:

Markdown File: Latest Update on AI Models, 2024-05-02

PDF File: Latest Update on AI Models, 2024-05-02

ℹ️ Notice:

Visit https://github.com/Maplemx/Agently if you want to learn more about the Agently AI application development framework.

Run this command in shell:

git clone [email protected]:AgentEra/Agently-Daily-News-Collector.git

You can find the SETTINGS.yaml file in the project directory.

Enter your model's API key and change the other settings as you wish.

If you want to use a different model, read this document or this page on the official Agently website to see how to configure the settings.
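Before starting a run, it can help to check that the settings are actually filled in. The following is a minimal sketch, assuming the settings have already been loaded into a plain dictionary (for example with PyYAML's `yaml.safe_load`). The key name `MODEL_API_KEY` and the function `find_missing_settings` are hypothetical and are not taken from the project; check SETTINGS.yaml for the real key names.

```python
from typing import Any, List, Mapping, Sequence

# Hypothetical key names for illustration only; the real SETTINGS.yaml may differ.
REQUIRED_KEYS = ("MODEL_API_KEY",)


def find_missing_settings(
    settings: Mapping[str, Any],
    required: Sequence[str] = REQUIRED_KEYS,
) -> List[str]:
    """Return the required keys that are absent or left blank."""
    missing = []
    for key in required:
        value = settings.get(key)
        # Treat None and whitespace-only strings as "not filled in".
        if value is None or (isinstance(value, str) and not value.strip()):
            missing.append(key)
    return missing


if __name__ == "__main__":
    example_settings = {"MODEL_API_KEY": ""}
    print(find_missing_settings(example_settings))
```

Failing early on an empty key is cheaper than discovering it after the first model request.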

Because this is a Python project, you need to install Python first. You can find installation instructions on the official Python website.

The first time you run this project, use this command in the shell to download and install the dependency packages:

pip install -r path/to/project/requirements.txt

Wait until the dependency packages are installed, then use this command in the shell to start the generation process:

python path/to/project/app.py

You will see the prompt [Please input the topic of your daily news collection]:.

Enter the field of news you want to collect as your topic, and you're good to go.

During the process, logs like the following are printed to the shell to show which tasks have been completed:

2024-05-02 22:44:27,347 [INFO] [Outline Generated] {'report_title': "Today's news about AI Models Appliaction", 'column_list': [{'column_title': 'Latest News', 'column_requirement': 'The content is related to AI Models Appliaction, and the time is within 24 hours', 'search_keywords': 'AI Models Appliaction news latest'}, {'column_title': 'Hot News', 'column_requirement': 'The content is related to AI Models Appliaction, and the interaction is high', 'search_keywords': 'AI Models Appliaction news hot'}, {'column_title': 'Related News', 'column_requirement': 'The content is related to AI Models Appliaction, but not news', 'search_keywords': 'AI Models Appliaction report'}]}

2024-05-02 22:44:32,352 [INFO] [Start Generate Column] Latest News

2024-05-02 22:44:34,132 [INFO] [Search News Count] 8

2024-05-02 22:44:46,062 [INFO] [Picked News Count] 2

2024-05-02 22:44:46,062 [INFO] [Summarzing] With Support from AWS, Yseop Develops a Unique Generative AI Application for Regulatory Document Generation Across BioPharma

2024-05-02 22:44:52,579 [INFO] [Summarzing] Success

2024-05-02 22:44:57,580 [INFO] [Summarzing] Over 500 AI models are now optimised for Core Ultra processors, says Intel

2024-05-02 22:45:02,130 [INFO] [Summarzing] Success

2024-05-02 22:45:19,475 [INFO] [Column Data Prepared] {'title': 'Latest News', 'prologue': 'Stay up-to-date with the latest advancements in AI technology with these news updates: [Yseop Partners with AWS to Develop Generative AI for BioPharma](https://finance.yahoo.com/news/support-aws-yseop-develops-unique-130000171.html) and [Intel Optimizes Over 500 AI Models for Core Ultra Processors](https://www.business-standard.com/technology/tech-news/over-500-ai-models-are-now-optimised-for-core-ultra-processors-says-intel-124050200482_1.html).', 'news_list': [{'url': 'https://finance.yahoo.com/news/support-aws-yseop-develops-unique-130000171.html', 'title': 'With Support from AWS, Yseop Develops a Unique Generative AI Application for Regulatory Document Generation Across BioPharma', 'summary': "Yseop utilizes AWS to create a new Generative AI application for the Biopharma sector. This application leverages AWS for its scalability and security, and it allows Biopharma companies to bring pharmaceuticals and vaccines to the market more quickly. Yseop's platform integrates LLM models for generating scientific content while meeting the security standards of the pharmaceutical industry.", 'recommend_comment': 'AWS partnership helps Yseop develop an innovative Generative AI application for the BioPharma industry, enabling companies to expedite the delivery of pharmaceuticals and vaccines to market. The integration of LLM models and compliance with stringent pharmaceutical industry security standards make this a valuable solution for BioPharma companies.'}, {'url': 'https://www.business-standard.com/technology/tech-news/over-500-ai-models-are-now-optimised-for-core-ultra-processors-says-intel-124050200482_1.html', 'title': 'Over 500 AI models are now optimised for Core Ultra processors, says Intel', 'summary': 'Intel stated over 500 AI models are optimized for Core Ultra processors. 
These models are accessible from well-known sources like OpenVINO Model Zoo, Hugging Face, ONNX Model Zoo, and PyTorch.', 'recommend_comment': "Intel's optimization of over 500 AI models for Core Ultra processors provides access to a vast selection of pre-trained models from reputable sources. This optimization enhances the performance and efficiency of AI applications, making it easier for developers to deploy AI solutions on Intel-based hardware."}]}

The whole process will take some time, so just relax and take a break ☕️.
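The log output above suggests a stage order: generate an outline, then for each column search, pick, summarize, and prepare the column data. A minimal sketch of that control flow follows. Every stage is a hypothetical stand-in passed in as a callable, not the project's actual code, and the logging format is only chosen to resemble the lines shown above. The prologue and recommend_comment fields seen in the log are left out to keep the sketch short.

```python
import logging
from typing import Callable, Dict, List

# Format chosen to resemble the log lines shown above.
logging.basicConfig(level=logging.INFO, format="%(asctime)s [%(levelname)s] %(message)s")
log = logging.getLogger("news_collector_sketch")


def collect(
    topic: str,
    make_outline: Callable[[str], Dict],
    search: Callable[[str], List[Dict]],
    pick: Callable[[List[Dict], str], List[Dict]],
    summarize: Callable[[Dict], str],
) -> List[Dict]:
    """Run the stages in the order the log shows; each stage is caller-supplied."""
    outline = make_outline(topic)
    log.info("[Outline Generated] %s", outline)
    columns = []
    for column in outline["column_list"]:
        log.info("[Start Generate Column] %s", column["column_title"])
        found = search(column["search_keywords"])
        log.info("[Search News Count] %d", len(found))
        picked = pick(found, column["column_requirement"])
        log.info("[Picked News Count] %d", len(picked))
        news_list = []
        for item in picked:
            log.info("[Summarizing] %s", item["title"])
            # Keep the original fields and attach the summary.
            news_list.append({**item, "summary": summarize(item)})
        columns.append({"title": column["column_title"], "news_list": news_list})
    return columns
```

Passing the stages in as functions keeps the sketch runnable without a model or a search backend; in practice each callable would wrap an agent or search request.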

When the process is finally done, you will see a message like this, followed by the generated Markdown text printed on screen:

2024-05-02 21:57:20,521 [INFO] [Markdown Generated]

Then you can find a Markdown file named <collection name> <generated date>.md in your project directory.
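The file naming pattern and the column data shown in the log can be combined in a small rendering sketch. The functions `build_file_name` and `render_markdown` are hypothetical, the ISO date format is an assumption, and the Markdown layout is illustrative rather than the project's actual output.

```python
from datetime import date
from typing import Dict, List


def build_file_name(collection_name: str, generated: date) -> str:
    """Build '<collection name> <generated date>.md'; the ISO date format is an assumption."""
    return f"{collection_name} {generated.isoformat()}.md"


def render_markdown(report_title: str, columns: List[Dict]) -> str:
    """Render columns shaped like the [Column Data Prepared] log entry."""
    lines = [f"# {report_title}", ""]
    for column in columns:
        lines += [f"## {column['title']}", "", column.get("prologue", ""), ""]
        for news in column["news_list"]:
            # One bullet per news item: linked title, then the summary.
            lines += [f"- [{news['title']}]({news['url']})", f"  {news['summary']}", ""]
    return "\n".join(lines).rstrip() + "\n"


if __name__ == "__main__":
    print(build_file_name("AI Models", date(2024, 5, 2)))
```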

Enjoy it! 😄

- Agently AI Development Framework: https://github.com/Maplemx/Agently | https://pypi.org/project/Agently/

- duckduckgo-search: https://pypi.org/project/duckduckgo-search/

- BeautifulSoup4: https://pypi.org/project/beautifulsoup4/

- PyYAML: https://pypi.org/project/pyyaml/
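To confirm that the dependencies listed above are installed before running the app, a small check along these lines may help. This is a sketch, not part of the project: the function `missing_packages` is hypothetical, and the mapping pairs each PyPI package name with the module name it is normally imported under.

```python
from importlib.util import find_spec
from typing import Dict, List

# PyPI package name -> top-level module name used in import statements.
DEPENDENCIES: Dict[str, str] = {
    "Agently": "Agently",
    "duckduckgo-search": "duckduckgo_search",
    "beautifulsoup4": "bs4",
    "pyyaml": "yaml",
}


def missing_packages(dependencies: Dict[str, str] = DEPENDENCIES) -> List[str]:
    """Return the PyPI names whose import module cannot be found."""
    return [pkg for pkg, module in dependencies.items() if find_spec(module) is None]


if __name__ == "__main__":
    missing = missing_packages()
    if missing:
        print("Missing packages:", ", ".join(missing))
    else:
        print("All dependencies found.")
```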

Please ⭐️ this repo and Agently main repo if you like it! Thank you very much!

💡 Ideas / Bug Report: Report Issues Here

📧 Email Us: [email protected]

👾 Discord Group:

Click Here to Join or Scan the QR Code Down Below

💬 WeChat Group (Join the WeChat Group):

Click Here to Apply or Scan the QR Code Down Below

Alternative AI tools for Agently-Daily-News-Collector

Similar Open Source Tools

CodeFuse-ModelCache

Codefuse-ModelCache is a semantic cache for large language models (LLMs) that aims to optimize services by introducing a caching mechanism. It helps reduce the cost of inference deployment, improve model performance and efficiency, and provide scalable services for large models. The project caches pre-generated model results to reduce response time for similar requests and enhance user experience. It integrates various embedding frameworks and local storage options, offering functionalities like cache-writing, cache-querying, and cache-clearing through RESTful API. The tool supports multi-tenancy, system commands, and multi-turn dialogue, with features for data isolation, database management, and model loading schemes. Future developments include data isolation based on hyperparameters, enhanced system prompt partitioning storage, and more versatile embedding models and similarity evaluation algorithms.

DemoGPT

DemoGPT is an all-in-one agent library that provides tools, prompts, frameworks, and LLM models for streamlined agent development. It leverages GPT-3.5-turbo to generate LangChain code, creating interactive Streamlit applications. The tool is designed for creating intelligent, interactive, and inclusive solutions in LLM-based application development. It offers model flexibility, iterative development, and a commitment to user engagement. Future enhancements include integrating Gorilla for autonomous API usage and adding a publicly available database for refining the generation process.

archgw

Arch is an intelligent Layer 7 gateway designed to protect, observe, and personalize AI agents with APIs. It handles tasks related to prompts, including detecting jailbreak attempts, calling backend APIs, routing between LLMs, and managing observability. Built on Envoy Proxy, it offers features like function calling, prompt guardrails, traffic management, and observability. Users can build fast, observable, and personalized AI agents using Arch to improve speed, security, and personalization of GenAI apps.

letmedoit

LetMeDoIt AI is a virtual assistant designed to revolutionize the way you work. It goes beyond being a mere chatbot by offering a unique and powerful capability: the ability to execute commands and perform computing tasks on your behalf. With LetMeDoIt AI, you can access OpenAI ChatGPT-4, Google Gemini Pro, Microsoft AutoGen, and local LLMs, all in one place, to enhance your productivity.

aiconfig

AIConfig is a framework that makes it easy to build generative AI applications for production. It manages generative AI prompts, models and model parameters as JSON-serializable configs that can be version controlled, evaluated, monitored and opened in a local editor for rapid prototyping. It allows you to store and iterate on generative AI behavior separately from your application code, offering a streamlined AI development workflow.

oasis

OASIS is a scalable, open-source social media simulator that integrates large language models with rule-based agents to realistically mimic the behavior of up to one million users on platforms like Twitter and Reddit. It facilitates the study of complex social phenomena such as information spread, group polarization, and herd behavior, offering a versatile tool for exploring diverse social dynamics and user interactions in digital environments. With features like scalability, dynamic environments, diverse action spaces, and integrated recommendation systems, OASIS provides a comprehensive platform for simulating social media interactions at a large scale.

aiscript

AIScript is a unique programming language and web framework written in Rust, designed to help developers effortlessly build AI applications. It combines the strengths of Python, JavaScript, and Rust to create an intuitive, powerful, and easy-to-use tool. The language features first-class functions, built-in AI primitives, dynamic typing with static type checking, data validation, error handling inspired by Rust, a rich standard library, and automatic garbage collection. The web framework offers an elegant route DSL, automatic parameter validation, OpenAPI schema generation, database modules, authentication capabilities, and more. AIScript excels in AI-powered APIs, prototyping, microservices, data validation, and building internal tools.

cover-agent

CodiumAI Cover Agent is a tool designed to help increase code coverage by automatically generating qualified tests to enhance existing test suites. It utilizes Generative AI to streamline development workflows and is part of a suite of utilities aimed at automating the creation of unit tests for software projects. The system includes components like Test Runner, Coverage Parser, Prompt Builder, and AI Caller to simplify and expedite the testing process, ensuring high-quality software development. Cover Agent can be run via a terminal and is planned to be integrated into popular CI platforms. The tool outputs debug files locally, such as generated_prompt.md, run.log, and test_results.html, providing detailed information on generated tests and their status. It supports multiple LLMs and allows users to specify the model to use for test generation.

LLM-Brained-GUI-Agents-Survey

The 'LLM-Brained-GUI-Agents-Survey' repository contains code for a searchable paper page and assets used in the survey paper on Large Language Model-Brained GUI Agents. These agents operate within GUI environments, utilizing Large Language Models as their core inference and cognitive engine to generate, plan, and execute actions flexibly and adaptively. The repository also encourages contributions from the community for new papers, resources, or improvements related to LLM-Powered GUI Agents.

otto-m8

otto-m8 is a flowchart based automation platform designed to run deep learning workloads with minimal to no code. It provides a user-friendly interface to spin up a wide range of AI models, including traditional deep learning models and large language models. The tool deploys Docker containers of workflows as APIs for integration with existing workflows, building AI chatbots, or standalone applications. Otto-m8 operates on an Input, Process, Output paradigm, simplifying the process of running AI models into a flowchart-like UI.

FunClip

FunClip is an open-source, locally deployed automated video clipping tool that leverages Alibaba TONGYI speech lab's FunASR Paraformer series models for speech recognition on videos. Users can select text segments or speakers from recognition results to obtain corresponding video clips. It integrates industrial-grade models for accurate predictions and offers hotword customization and speaker recognition features. The tool is user-friendly with Gradio interaction, supporting multi-segment clipping and providing full video and target segment subtitles. FunClip is suitable for users looking to automate video clipping tasks with advanced AI capabilities.

crewAI

CrewAI is a cutting-edge framework designed to orchestrate role-playing autonomous AI agents. By fostering collaborative intelligence, CrewAI empowers agents to work together seamlessly, tackling complex tasks. It enables AI agents to assume roles, share goals, and operate in a cohesive unit, much like a well-oiled crew. Whether you're building a smart assistant platform, an automated customer service ensemble, or a multi-agent research team, CrewAI provides the backbone for sophisticated multi-agent interactions. With features like role-based agent design, autonomous inter-agent delegation, flexible task management, and support for various LLMs, CrewAI offers a dynamic and adaptable solution for both development and production workflows.

labelbox-python

Labelbox is a data-centric AI platform for enterprises to develop, optimize, and use AI to solve problems and power new products and services. Enterprises use Labelbox to curate data, generate high-quality human feedback data for computer vision and LLMs, evaluate model performance, and automate tasks by combining AI and human-centric workflows. The academic & research community uses Labelbox for cutting-edge AI research.

kaito

KAITO is an operator that automates the AI/ML model inference or tuning workload in a Kubernetes cluster. It manages large model files using container images, provides preset configurations to avoid adjusting workload parameters based on GPU hardware, supports popular open-sourced inference runtimes, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry. Using KAITO simplifies the workflow of onboarding large AI inference models in Kubernetes.

WildBench

WildBench is a tool designed for benchmarking Large Language Models (LLMs) with challenging tasks sourced from real users in the wild. It provides a platform for evaluating the performance of various models on a range of tasks. Users can easily add new models to the benchmark by following the provided guidelines. The tool supports models from Hugging Face and other APIs, allowing for comprehensive evaluation and comparison. WildBench facilitates running inference and evaluation scripts, enabling users to contribute to the benchmark and collaborate on improving model performance.

For similar tasks

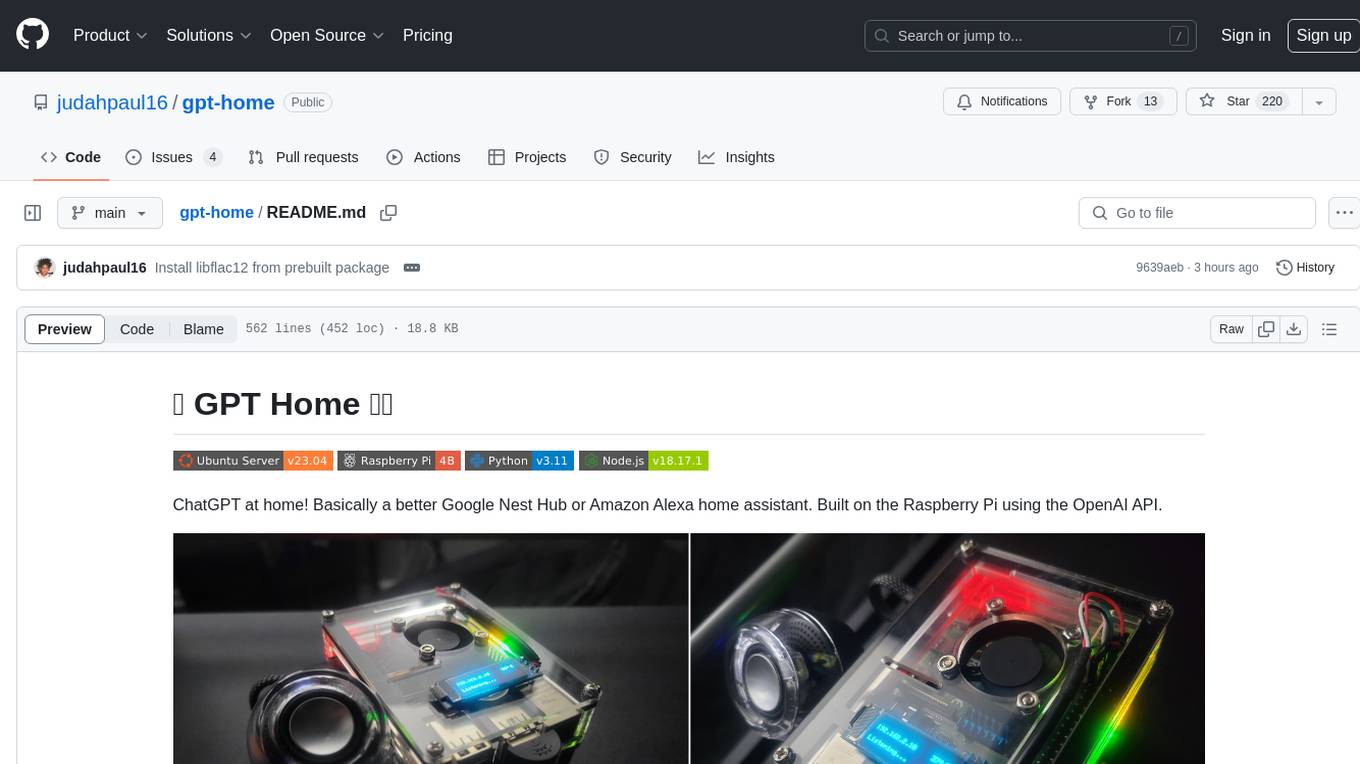

gpt-home

GPT Home is a project that allows users to build their own home assistant using Raspberry Pi and OpenAI API. It serves as a guide for setting up a smart home assistant similar to Google Nest Hub or Amazon Alexa. The project integrates various components like OpenAI, Spotify, Philips Hue, and OpenWeatherMap to provide a personalized home assistant experience. Users can follow the detailed instructions provided to build their own version of the home assistant on Raspberry Pi, with optional components for customization. The project also includes system configurations, dependencies installation, and setup scripts for easy deployment. Overall, GPT Home offers a DIY solution for creating a smart home assistant using Raspberry Pi and OpenAI technology.

comfy-cli

comfy-cli is a command line tool designed to simplify the installation and management of ComfyUI, an open-source machine learning framework. It allows users to easily set up ComfyUI, install packages, manage custom nodes, download checkpoints, and ensure cross-platform compatibility. The tool provides comprehensive documentation and examples to aid users in utilizing ComfyUI efficiently.

crewAI-tools

The crewAI Tools repository provides a guide for setting up tools for crewAI agents, enabling the creation of custom tools to enhance AI solutions. Tools play a crucial role in improving agent functionality. The guide explains how to equip agents with a range of tools and how to create new tools. Tools are designed to return strings for generating responses. There are two main methods for creating tools: subclassing BaseTool and using the tool decorator. Contributions to the toolset are encouraged, and the development setup includes steps for installing dependencies, activating the virtual environment, setting up pre-commit hooks, running tests, static type checking, packaging, and local installation. Enhance AI agent capabilities with advanced tooling.

aipan-netdisk-search

Aipan-Netdisk-Search is a free and open-source web project for searching netdisk resources. It utilizes third-party APIs with IP access restrictions, suggesting self-deployment. The project can be easily deployed on Vercel and provides instructions for manual deployment. Users can clone the project, install dependencies, run it in the browser, and access it at localhost:3001. The project also includes documentation for deploying on personal servers using NUXT.JS. Additionally, there are options for donations and communication via WeChat.

comfy-cli

Comfy-cli is a command line tool designed to facilitate the installation and management of ComfyUI, an open-source machine learning framework. Users can easily set up ComfyUI, install packages, and manage custom nodes directly from the terminal. The tool offers features such as easy installation, seamless package management, custom node management, checkpoint downloads, cross-platform compatibility, and comprehensive documentation. Comfy-cli simplifies the process of working with ComfyUI, making it convenient for users to handle various tasks related to the framework.

BentoDiffusion

BentoDiffusion is a BentoML example project that demonstrates how to serve and deploy diffusion models in the Stable Diffusion (SD) family. These models are specialized in generating and manipulating images based on text prompts. The project provides a guide on using SDXL Turbo as an example, along with instructions on prerequisites, installing dependencies, running the BentoML service, and deploying to BentoCloud. Users can interact with the deployed service using Swagger UI or other methods. Additionally, the project offers the option to choose from various diffusion models available in the repository for deployment.

md-agent

MD-Agent is an LLM-agent-based toolset for Molecular Dynamics. It uses LangChain and a collection of tools to set up and execute molecular dynamics simulations, particularly in OpenMM. The tool assists in environment setup, installation, and usage by providing detailed steps. It also requires API keys for certain functionalities, such as OpenAI and paper-qa for literature searches. Contributions to the project are welcome, with a detailed Contributor's Guide available for interested individuals.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM:

- Set LLM usage limits for users on different pricing tiers

- Track LLM usage on a per user and per organization basis

- Block or redact requests containing PIIs

- Improve LLM reliability with failovers, retries and caching

- Distribute API keys with rate limits and cost limits for internal development/production use cases

- Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.