ControlFlow

🦾 Take control of your AI agents

Stars: 938

ControlFlow is a Python framework designed for building agentic AI workflows. It provides a structured approach for defining tasks, assigning specialized AI agents, and orchestrating complex behaviors. By balancing AI autonomy with precise oversight, users can create sophisticated AI-powered applications with confidence. ControlFlow offers a task-centric architecture, structured results with type-safe outputs, specialized agents for efficient problem-solving, ecosystem integration with LangChain models, flexible control over workflows, multi-agent orchestration, and native observability and debugging capabilities.

README:

ControlFlow is a Python framework for building agentic AI workflows.

ControlFlow provides a structured, developer-focused framework for defining workflows and delegating work to LLMs, without sacrificing control or transparency:

- Create discrete, observable tasks for an AI to work on.

- Assign one or more specialized AI agents to each task.

- Combine tasks into a flow to orchestrate more complex behaviors.

The simplest ControlFlow workflow has one task, a default agent, and automatic thread management:

    import controlflow as cf

    result = cf.run("Write a short poem about artificial intelligence")

    print(result)

Result:

In circuits and code, a mind does bloom,

With algorithms weaving through the gloom.

A spark of thought in silicon's embrace,

Artificial intelligence finds its place.

ControlFlow addresses the challenges of building AI-powered applications that are both powerful and predictable:

- 🧩 Task-Centric Architecture: Break complex AI workflows into manageable, observable steps.

- 🔒 Structured Results: Bridge the gap between AI and traditional software with type-safe, validated outputs.

- 🤖 Specialized Agents: Deploy task-specific AI agents for efficient problem-solving.

- 🎛️ Flexible Control: Continuously tune the balance of control and autonomy in your workflows.

- 🕹️ Multi-Agent Orchestration: Coordinate multiple AI agents within a single workflow or task.

- 🔍 Native Observability: Monitor and debug your AI workflows with full Prefect 3.0 support.

- 🔗 Ecosystem Integration: Seamlessly work with your existing code, tools, and the broader AI ecosystem.

Install ControlFlow with pip:

    pip install controlflow

Next, configure your LLM provider. ControlFlow's default provider is OpenAI, which requires the OPENAI_API_KEY environment variable:

    export OPENAI_API_KEY=your-api-key

To use a different LLM provider, see the LLM configuration docs.
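Because a missing key typically only surfaces once a workflow tries to call the model, it can help to check for it up front. The following is a minimal standard-library sketch; `require_api_key` is a hypothetical helper introduced here for illustration and is not part of ControlFlow.

```python
import os


def require_api_key(env=None, name="OPENAI_API_KEY"):
    """Return the key stored under `name`, or raise a clear error.

    Hypothetical helper for illustration; not part of ControlFlow.
    """
    env = os.environ if env is None else env
    key = env.get(name, "").strip()
    if not key:
        raise RuntimeError(f"{name} is not set; export it before running a workflow")
    return key


# Usage with an explicit mapping, so the example does not depend on your shell.
print(require_api_key({"OPENAI_API_KEY": "your-api-key"}))
```

Calling `require_api_key()` with no arguments reads from the real environment instead of the sample mapping.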

Here's a more involved example that showcases user interaction, a multi-step workflow, and structured outputs:

    import controlflow as cf
    from pydantic import BaseModel

    class ResearchProposal(BaseModel):
        title: str
        abstract: str
        key_points: list[str]

    @cf.flow
    def research_proposal_flow():
        # Task 1: Get the research topic from the user
        user_input = cf.Task(
            "Work with the user to choose a research topic",
            interactive=True,
        )

        # Task 2: Generate a structured research proposal
        proposal = cf.run(
            "Generate a structured research proposal",
            result_type=ResearchProposal,
            depends_on=[user_input]
        )

        return proposal

    result = research_proposal_flow()

    print(result.model_dump_json(indent=2))

Conversation:

Agent: Hello! I'm here to help you choose a research topic. Do you have any particular area of interest or field you would like to explore? If you have any specific ideas or requirements, please share them as well.

User: Yes, I'm interested in LLM agentic workflows

Proposal:

    {
      "title": "AI Agentic Workflows: Enhancing Efficiency and Automation",
      "abstract": "This research proposal aims to explore the development and implementation of AI agentic workflows to enhance efficiency and automation in various domains. AI agents, equipped with advanced capabilities, can perform complex tasks, make decisions, and interact with other agents or humans to achieve specific goals. This research will investigate the underlying technologies, methodologies, and applications of AI agentic workflows, evaluate their effectiveness, and propose improvements to optimize their performance.",
      "key_points": [
        "Introduction: Definition and significance of AI agentic workflows, Historical context and evolution of AI in workflows",
        "Technological Foundations: AI technologies enabling agentic workflows (e.g., machine learning, natural language processing), Software and hardware requirements for implementing AI workflows",
        "Methodologies: Design principles for creating effective AI agents, Workflow orchestration and management techniques, Interaction protocols between AI agents and human operators",
        "Applications: Case studies of AI agentic workflows in various industries (e.g., healthcare, finance, manufacturing), Benefits and challenges observed in real-world implementations",
        "Evaluation and Metrics: Criteria for assessing the performance of AI agentic workflows, Metrics for measuring efficiency, accuracy, and user satisfaction",
        "Proposed Improvements: Innovations to enhance the capabilities of AI agents, Strategies for addressing limitations and overcoming challenges",
        "Conclusion: Summary of key findings, Future research directions and potential impact on industry and society"
      ]
    }
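The proposal is returned as a typed object rather than free text because the output is checked against `ResearchProposal`. As a rough illustration of what such a check involves, here is a standard-library sketch that uses a dataclass as a stand-in for the Pydantic model; `parse_proposal` is a hypothetical helper, and this is not how ControlFlow or Pydantic implement validation.

```python
import json
from dataclasses import dataclass


@dataclass
class ResearchProposal:
    # Stand-in for the Pydantic model in the example; same three fields.
    title: str
    abstract: str
    key_points: list[str]


def parse_proposal(raw: str) -> ResearchProposal:
    """Parse a JSON string and check it has the expected fields and types."""
    data = json.loads(raw)
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    expected = {"title", "abstract", "key_points"}
    if set(data) != expected:
        raise ValueError(f"expected keys {sorted(expected)}, got {sorted(data)}")
    if not isinstance(data["title"], str) or not isinstance(data["abstract"], str):
        raise ValueError("title and abstract must be strings")
    points = data["key_points"]
    if not isinstance(points, list) or not all(isinstance(p, str) for p in points):
        raise ValueError("key_points must be a list of strings")
    return ResearchProposal(**data)


# Shortened sample payload, made up for this sketch.
raw = '{"title": "AI Agentic Workflows", "abstract": "A short abstract.", "key_points": ["Introduction", "Methodologies"]}'
proposal = parse_proposal(raw)
print(proposal.title)
print(len(proposal.key_points))
```

Downstream code can then rely on `proposal.key_points` being a list of strings instead of re-parsing model output at every call site.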

In this example, ControlFlow is automatically managing a flow, or a shared context for a series of tasks. You can switch between standard Python functions and agentic tasks at any time, making it easy to incrementally build out complex workflows.
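In the example, `depends_on=[user_input]` expresses that the proposal task needs the topic task to finish first. The ordering idea can be sketched with Python's standard `graphlib` module. The task names below are made up for illustration, and nothing here reflects how ControlFlow schedules work internally.

```python
from graphlib import TopologicalSorter

# Hypothetical task names, each mapped to the set of tasks it depends on.
dependencies = {
    "choose_topic": set(),
    "generate_proposal": {"choose_topic"},
}

# static_order() yields each task only after all of its dependencies.
order = list(TopologicalSorter(dependencies).static_order())
print(order)
```

Declaring dependencies this way lets the order of execution follow from the data each step needs, rather than from the order in which the steps were written.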

Alternative AI tools for ControlFlow

Similar Open Source Tools

crewAI

CrewAI is a cutting-edge framework designed to orchestrate role-playing autonomous AI agents. By fostering collaborative intelligence, CrewAI empowers agents to work together seamlessly, tackling complex tasks. It enables AI agents to assume roles, share goals, and operate in a cohesive unit, much like a well-oiled crew. Whether you're building a smart assistant platform, an automated customer service ensemble, or a multi-agent research team, CrewAI provides the backbone for sophisticated multi-agent interactions. With features like role-based agent design, autonomous inter-agent delegation, flexible task management, and support for various LLMs, CrewAI offers a dynamic and adaptable solution for both development and production workflows.

langgraph

LangGraph is a low-level orchestration framework for building, managing, and deploying long-running, stateful agents. It provides durable execution, human-in-the-loop capabilities, comprehensive memory management, debugging tools, and production-ready deployment infrastructure. LangGraph can be used standalone or integrated with other LangChain products to streamline LLM application development.

blades

Blades is a multimodal AI Agent framework in Go, supporting custom models, tools, memory, middleware, and more. It is well-suited for multi-turn conversations, chain reasoning, and structured output. The framework provides core components like Agent, Prompt, Chain, ModelProvider, Tool, Memory, and Middleware, enabling developers to build intelligent applications with flexible configuration and high extensibility. Blades leverages the characteristics of Go to achieve high decoupling and efficiency, making it easy to integrate different language model services and external tools. The project is in its early stages, inviting Go developers and AI enthusiasts to contribute and explore the possibilities of building AI applications in Go.

synthora

Synthora is a lightweight and extensible framework for LLM-driven Agents and ALM research. It aims to simplify the process of building, testing, and evaluating agents by providing essential components. The framework allows for easy agent assembly with a single config, reducing the effort required for tuning and sharing agents. Although in early development stages with unstable APIs, Synthora welcomes feedback and contributions to enhance its stability and functionality.

LangChain-Udemy-Course

LangChain-Udemy-Course is a comprehensive course directory focusing on LangChain, a framework for generative AI applications. The course covers various aspects such as OpenAI API usage, prompt templates, Chains exploration, callback functions, memory techniques, RAG implementation, autonomous agents, hybrid search, LangSmith utilization, microservice architecture, and LangChain Expression Language. Learners gain theoretical knowledge and practical insights to understand and apply LangChain effectively in generative AI scenarios.

Upsonic

Upsonic offers a cutting-edge enterprise-ready framework for orchestrating LLM calls, agents, and computer use to complete tasks cost-effectively. It provides reliable systems, scalability, and a task-oriented structure for real-world cases. Key features include production-ready scalability, task-centric design, MCP server support, tool-calling server, computer use integration, and easy addition of custom tools. The framework supports client-server architecture and allows seamless deployment on AWS, GCP, or locally using Docker.

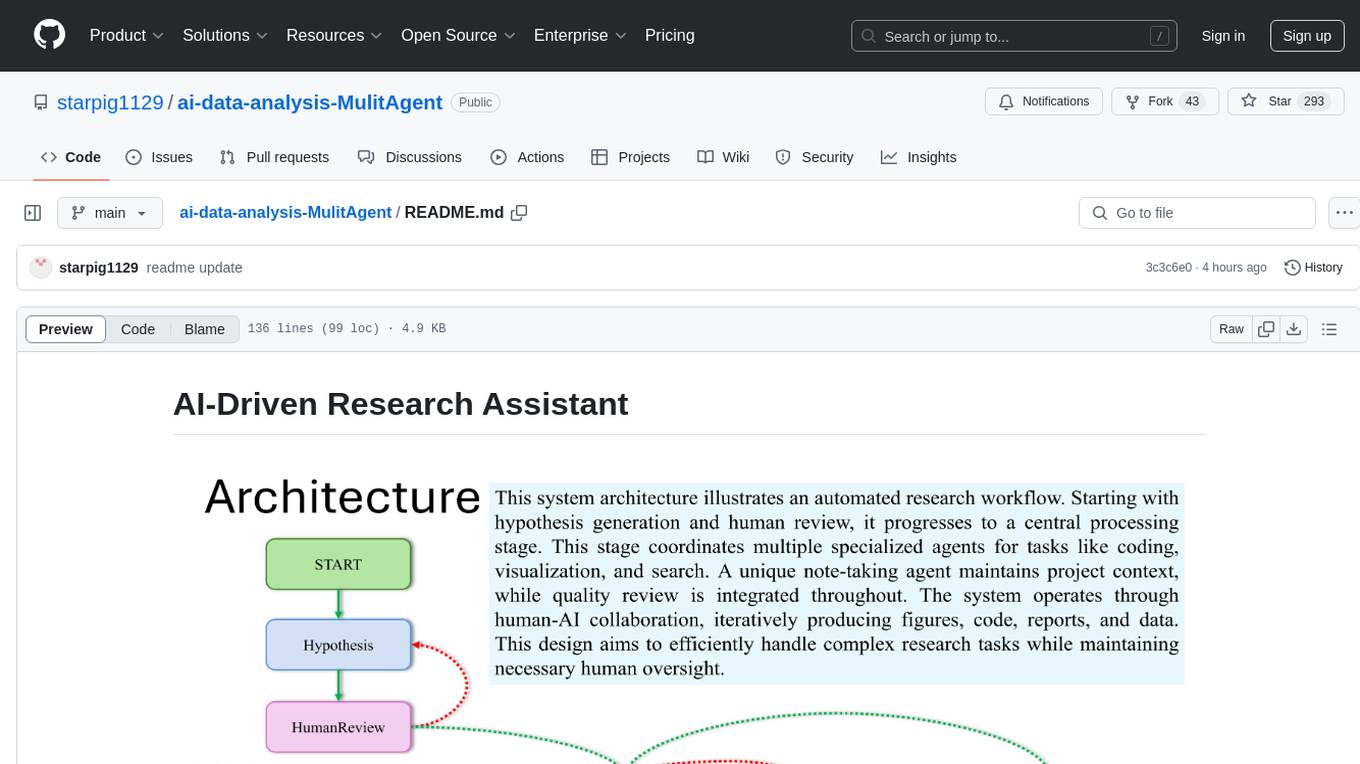

ai-data-analysis-MulitAgent

AI-Driven Research Assistant is an advanced AI-powered system utilizing specialized agents for data analysis, visualization, and report generation. It integrates LangChain, OpenAI's GPT models, and LangGraph for complex research processes. Key features include hypothesis generation, data processing, web search, code generation, and report writing. The system's unique Note Taker agent maintains project state, reducing overhead and improving context retention. System requirements include Python 3.10+ and Jupyter Notebook environment. Installation involves cloning the repository, setting up a Conda virtual environment, installing dependencies, and configuring environment variables. Usage instructions include setting data, running Jupyter Notebook, customizing research tasks, and viewing results. Main components include agents for hypothesis generation, process supervision, visualization, code writing, search, report writing, quality review, and note-taking. Workflow involves hypothesis generation, processing, quality review, and revision. Customization is possible by modifying agent creation and workflow definition. Current issues include OpenAI errors, NoteTaker efficiency, runtime optimization, and refiner improvement. Contributions via pull requests are welcome under the MIT License.

LazyLLM

LazyLLM is a low-code development tool for building complex AI applications with multiple agents. It assists developers in building AI applications at a low cost and continuously optimizing their performance. The tool provides a convenient workflow for application development and offers standard processes and tools for various stages of application development. Users can quickly prototype applications with LazyLLM, analyze bad cases with scenario task data, and iteratively optimize key components to enhance the overall application performance. LazyLLM aims to simplify the AI application development process and provide flexibility for both beginners and experts to create high-quality applications.

agentsociety

AgentSociety is an advanced framework designed for building agents in urban simulation environments. It integrates LLMs' planning, memory, and reasoning capabilities to generate realistic behaviors. The framework supports dataset-based, text-based, and rule-based environments with interactive visualization. It includes tools for interviews, surveys, interventions, and metric recording tailored for social experimentation.

codebase-context-spec

The Codebase Context Specification (CCS) project aims to standardize embedding contextual information within codebases to enhance understanding for both AI and human developers. It introduces a convention similar to `.env` and `.editorconfig` files but focused on documenting code for both AI and humans. By providing structured contextual metadata, collaborative documentation guidelines, and standardized context files, developers can improve code comprehension, collaboration, and development efficiency. The project includes a linter for validating context files and provides guidelines for using the specification with AI assistants. Tooling recommendations suggest creating memory systems, IDE plugins, AI model integrations, and agents for context creation and utilization. Future directions include integration with existing documentation systems, dynamic context generation, and support for explicit context overriding.

draive

draive is an open-source Python library designed to simplify and accelerate the development of LLM-based applications. It offers abstract building blocks for connecting functionalities with large language models, flexible integration with various AI solutions, and a user-friendly framework for building scalable data processing pipelines. The library follows a function-oriented design, allowing users to represent complex programs as simple functions. It also provides tools for measuring and debugging functionalities, ensuring type safety and efficient asynchronous operations for modern Python apps.

agency

Agency is a Go library designed for developers to explore Large Language Models (LLMs) and generative AI in a clean, effective, and Go-idiomatic way. It allows users to easily create custom operations, compose operations into processes, and interact with OpenAI API bindings for various tasks such as text completion, image generation, and speech-to-text conversion. The ultimate goal of Agency is to empower users to build autonomous AI systems, from chat interfaces to complex data analysis, with a focus on simplicity, flexibility, and efficiency.

poml

POML (Prompt Orchestration Markup Language) is a novel markup language designed to bring structure, maintainability, and versatility to advanced prompt engineering for Large Language Models (LLMs). It addresses common challenges in prompt development, such as lack of structure, complex data integration, format sensitivity, and inadequate tooling. POML provides a systematic way to organize prompt components, integrate diverse data types seamlessly, and manage presentation variations, empowering developers to create more sophisticated and reliable LLM applications.

Docs2KG

Docs2KG is a tool designed for constructing a unified knowledge graph from heterogeneous documents. It addresses the challenges of digitizing diverse unstructured documents and constructing a high-quality knowledge graph with less effort. The tool combines bottom-up and top-down approaches, utilizing a human-LLM collaborative interface to enhance the generated knowledge graph. It organizes the knowledge graph into MetaKG, LayoutKG, and SemanticKG, providing a comprehensive view of document content. Docs2KG aims to streamline the process of knowledge graph construction and offers metrics for evaluating the quality of automatic construction.

For similar tasks

Long-Novel-GPT

Long-Novel-GPT is a long novel generator based on large language models like GPT. It utilizes a hierarchical outline/chapter/text structure to maintain the coherence of long novels. It optimizes API calls cost through context management and continuously improves based on self or user feedback until reaching the set goal. The tool aims to continuously refine and build novel content based on user-provided initial ideas, ultimately generating long novels at the level of human writers.

LLMAI-writer

LLMAI-writer is a powerful AI tool for assisting in novel writing, utilizing state-of-the-art large language models to help writers brainstorm, plan, and create novels. Whether you are an experienced writer or a beginner, LLMAI-writer can help you efficiently complete the writing process.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use case. Here are some use cases for BricksLLM:

- Set LLM usage limits for users on different pricing tiers

- Track LLM usage on a per user and per organization basis

- Block or redact requests containing PII

- Improve LLM reliability with failovers, retries and caching

- Distribute API keys with rate limits and cost limits for internal development/production use cases

- Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.