open-deep-research

Open source alternative to Gemini Deep Research. Generate reports with AI based on search results.

Stars: 231

Open Deep Research is an open-source tool designed to generate AI-powered reports from web search results efficiently. It combines Bing Search API for search results retrieval, JinaAI for content extraction, and customizable report generation. Users can customize settings, export reports in multiple formats, and benefit from rate limiting for stability. The tool aims to streamline research and report creation in a user-friendly platform.

README:

An open-source alternative to Gemini Deep Research, built to generate AI-powered reports from web search results with precision and efficiency. Supporting multiple AI platforms (Google, OpenAI, Anthropic) and models, it offers flexibility in choosing the right AI model for your research needs.

The app works in three key steps, plus a Knowledge Base for saved reports:

- Search Results Retrieval: Using the Bing Search API, the app fetches comprehensive search results for the specified search term.

- Content Extraction: Leveraging JinaAI, it retrieves and processes the contents of the selected search results, ensuring accurate and relevant information.

- Report Generation: With the curated search results and extracted content, the app generates a detailed report using your chosen AI model (Gemini, GPT-4, Sonnet, etc.), providing insightful and synthesized output tailored to your custom prompts.

- Knowledge Base: Save and access your generated reports in a personal knowledge base for future reference and easy retrieval.
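The retrieval, extraction, and generation steps above can be sketched as a small pipeline. This is only an illustration: the `PipelineDeps` interface, the `runResearch` function, and the result shape are hypothetical names that do not come from the project, and the three services are passed in as plain functions so that no real API is assumed.

```typescript
// Hypothetical shapes; the app's real types are not shown in this README.
interface SearchResult {
  title: string;
  url: string;
}

interface PipelineDeps {
  // Step 1: fetch search results for a query (Bing Search API in the app).
  search: (query: string) => Promise<SearchResult[]>;
  // Step 2: extract readable text from one page (JinaAI in the app).
  extract: (url: string) => Promise<string>;
  // Step 3: turn a prompt plus extracted sources into a report (chosen AI model).
  generate: (prompt: string, sources: string[]) => Promise<string>;
}

async function runResearch(
  deps: PipelineDeps,
  query: string,
  prompt: string,
  maxSelected: number
): Promise<{ report: string; sources: string[] }> {
  const results = await deps.search(query);
  // In the app the user picks the results; this sketch just takes the first few.
  const selected = results.slice(0, maxSelected);
  // Extract the selected pages in parallel, preserving their order.
  const contents = await Promise.all(selected.map((r) => deps.extract(r.url)));
  const report = await deps.generate(prompt, contents);
  return { report, sources: selected.map((r) => r.url) };
}
```

Passing the services in as functions keeps each step replaceable, which matches the README's point that the search, extraction, and model layers are separate concerns.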

Open Deep Research combines powerful tools to streamline research and report creation in a user-friendly, open-source platform. You can customize the app to your needs (select your preferred AI model, customize prompts, update rate limits, and configure the number of results both fetched and selected).

- 🔍 Web search with time filtering

- 📄 Content extraction from web pages

- 🤖 Multi-platform AI support (Google Gemini, OpenAI GPT, Anthropic Sonnet)

- 🎯 Flexible model selection with granular configuration

- 📊 Multiple export formats (PDF, Word, Text)

- 🧠 Knowledge Base for saving and accessing past reports

- ⚡ Rate limiting for stability

- 📱 Responsive design

Try it out at: Open Deep Research

The Knowledge Base feature allows you to:

- Save generated reports for future reference (reports are saved in the browser's local storage)

- Access your research history

- Quickly load and review past reports

- Build a personal research library over time

The app's settings can be customized through the configuration file at lib/config.ts. Here are the key parameters you can adjust:

Control rate limiting and the number of requests allowed per minute for different operations:

rateLimits: {
  enabled: true, // Enable/disable rate limiting (set to false to skip Redis setup)
  search: 5, // Search requests per minute
  contentFetch: 20, // Content fetch requests per minute
  reportGeneration: 5, // Report generation requests per minute
}

Note: If you set enabled: false, you can run the application without setting up Redis. This is useful for local development or when you don't need rate limiting.
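To make the per-minute limits concrete, here is a minimal sketch of a fixed-window counter driven by the same `rateLimits` fields. It is an in-memory stand-in for illustration only: the app uses Upstash Redis for rate limiting, and its actual algorithm may differ. The `FixedWindowLimiter` class and the `clientId` argument are hypothetical.

```typescript
// Mirrors the rateLimits block; the limiter itself is an in-memory
// stand-in for the Redis-backed one and is not the app's implementation.
interface RateLimits {
  enabled: boolean;
  search: number;
  contentFetch: number;
  reportGeneration: number;
}

type Operation = 'search' | 'contentFetch' | 'reportGeneration';

class FixedWindowLimiter {
  private limits: RateLimits;
  private windowMs: number;
  private windows: { [key: string]: { start: number; count: number } } = {};

  constructor(limits: RateLimits, windowMs: number = 60000) {
    this.limits = limits;
    this.windowMs = windowMs;
  }

  // Returns true if the request is allowed, false if the client is over its limit.
  allow(op: Operation, clientId: string, now: number = Date.now()): boolean {
    if (!this.limits.enabled) return true; // rate limiting switched off
    const key = op + ':' + clientId;
    const current = this.windows[key];
    if (!current || now - current.start >= this.windowMs) {
      // First request, or the previous window has expired: start a new window.
      this.windows[key] = { start: now, count: 1 };
      return true;
    }
    if (current.count >= this.limits[op]) return false;
    current.count += 1;
    return true;
  }
}
```

With `enabled: false` every call is allowed, which is the behavior the note describes for running without Redis.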

Customize the search behavior:

search: {
  resultsPerPage: 10, // Number of search results to fetch
  maxSelectableResults: 3, // Maximum results users can select for reports
  safeSearch: 'Moderate', // SafeSearch setting ('Off', 'Moderate', 'Strict')
  market: 'en-US', // Search market/region
}

The Knowledge Base feature allows you to build a personal research library by:

- Saving generated reports with their original search queries

- Accessing and loading past reports instantly

- Building a searchable archive of your research

- Maintaining context across research sessions

Reports saved to the Knowledge Base include:

- The full report content with all sections

- Original search query and prompt

- Source URLs and references

- Generation timestamp

You can access your Knowledge Base through the dedicated button in the UI, which opens a sidebar containing all your saved reports.
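As a rough sketch of how saved reports could be kept in the browser's local storage, the example below stores a list of reports as JSON under one key. The field names follow the list of saved data above, but the `SavedReport` shape, the `knowledge-base` key, and both functions are assumptions for illustration, not the app's actual code.

```typescript
// Field names are illustrative; they follow the list of saved data above.
interface SavedReport {
  id: string;
  query: string;
  prompt: string;
  content: string;
  sources: string[];
  createdAt: string; // ISO timestamp
}

// Minimal subset of the Web Storage API, so window.localStorage fits.
interface KeyValueStore {
  getItem(key: string): string | null;
  setItem(key: string, value: string): void;
}

const STORAGE_KEY = 'knowledge-base'; // hypothetical key name

function loadReports(store: KeyValueStore): SavedReport[] {
  const raw = store.getItem(STORAGE_KEY);
  if (raw === null) return [];
  try {
    const parsed = JSON.parse(raw);
    return Array.isArray(parsed) ? (parsed as SavedReport[]) : [];
  } catch {
    return []; // corrupted data: fall back to an empty library
  }
}

function saveReport(store: KeyValueStore, report: SavedReport): SavedReport[] {
  // Newest first, replacing any earlier copy with the same id.
  const others = loadReports(store).filter((r) => r.id !== report.id);
  const next = [report].concat(others);
  store.setItem(STORAGE_KEY, JSON.stringify(next));
  return next;
}
```

In a browser, `saveReport(window.localStorage, report)` would persist the report on that device only, since local storage is not synced between browsers.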

Configure which AI platforms and models are available. The app supports multiple AI platforms (Google, OpenAI, Anthropic) with various models for each platform. You can enable/disable platforms and individual models based on your needs:

platforms: {
  google: {
    enabled: true,
    models: {
      'gemini-flash': {
        enabled: true,
        label: 'Gemini Flash',
      },
      'gemini-flash-thinking': {
        enabled: true,
        label: 'Gemini Flash Thinking',
      },
      'gemini-exp': {
        enabled: false,
        label: 'Gemini Exp',
      },
    },
  },
  openai: {
    enabled: true,
    models: {
      'gpt-4o': {
        enabled: false,
        label: 'GPT-4o',
      },
      'o1-mini': {
        enabled: false,
        label: 'O1 Mini',
      },
      'o1': {
        enabled: false,
        label: 'O1',
      },
    },
  },
  anthropic: {
    enabled: true,
    models: {
      'sonnet-3.5': {
        enabled: false,
        label: 'Claude 3 Sonnet',
      },
      'haiku-3.5': {
        enabled: false,
        label: 'Claude 3 Haiku',
      },
    },
  },
}

For each platform:

- enabled: Controls whether the platform is available

For each model:

- enabled: Controls whether the specific model is selectable

- label: The display name shown in the UI

Disabled models will appear grayed out in the UI but remain visible to show all available options. This allows users to see the full range of available models while clearly indicating which ones are currently accessible.

To modify these settings, update the values in lib/config.ts. The changes will take effect after restarting the development server.
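One way to read the platforms block is as a flat list of model options, each either selectable or grayed out. The sketch below assumes that models of a disabled platform stay visible but are not selectable; the README only states this for disabled models, so treat that choice as an assumption. `listModelOptions` and `ModelOption` are hypothetical names.

```typescript
interface ModelConfig {
  enabled: boolean;
  label: string;
}

interface PlatformConfig {
  enabled: boolean;
  models: { [id: string]: ModelConfig };
}

type Platforms = { [platform: string]: PlatformConfig };

interface ModelOption {
  platform: string;
  id: string;
  label: string;
  selectable: boolean; // false means "shown grayed out"
}

function listModelOptions(platforms: Platforms): ModelOption[] {
  const options: ModelOption[] = [];
  for (const platform of Object.keys(platforms)) {
    const platformConfig = platforms[platform];
    for (const id of Object.keys(platformConfig.models)) {
      const model = platformConfig.models[id];
      options.push({
        platform: platform,
        id: id,
        label: model.label,
        // Assumption: a model is selectable only if both flags are true.
        selectable: platformConfig.enabled && model.enabled,
      });
    }
  }
  return options;
}
```

Keeping disabled entries in the list, rather than filtering them out, is what lets a UI show the full range of models while marking some as unavailable.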

- Node.js 18+

- npm, yarn, pnpm, or bun

- Clone the repository:

git clone https://github.com/btahir/open-deep-research

cd open-deep-research

- Install dependencies:

npm install

# or

yarn install

# or

pnpm install

# or

bun install

- Create a .env.local file in the root directory:

# Azure Bing Search API key (required for web search)

AZURE_SUB_KEY=your_azure_subscription_key

# Google Gemini Pro API key (required for AI report generation)

GEMINI_API_KEY=your_gemini_api_key

# OpenAI API key (optional - required only if OpenAI models are enabled)

OPENAI_API_KEY=your_openai_api_key

# Anthropic API key (optional - required only if Anthropic models are enabled)

ANTHROPIC_API_KEY=your_anthropic_api_key

# Upstash Redis (required for rate limiting)

UPSTASH_REDIS_REST_URL=your_upstash_redis_url

UPSTASH_REDIS_REST_TOKEN=your_upstash_redis_token

Note: You only need to provide API keys for the platforms you plan to use. If a platform is enabled in the config but its API key is missing, those models will appear disabled in the UI.

- Start the development server:

npm run dev

# or

yarn dev

# or

pnpm dev

# or

bun dev

- Open http://localhost:3000 in your browser.
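If the app does not behave as expected after setup, a quick check of which environment variables are missing can help. The sketch below is a hypothetical diagnostic, not part of the project: it uses the variable names from the .env.local example, and the `EnabledFeatures` shape and `missingEnvVars` function are assumptions. It treats the Bing key as always needed and the other keys as needed only when the matching feature is switched on.

```typescript
type Env = { [name: string]: string | undefined };

// Hypothetical summary of what is switched on in lib/config.ts.
interface EnabledFeatures {
  google: boolean;
  openai: boolean;
  anthropic: boolean;
  rateLimiting: boolean;
}

function missingEnvVars(env: Env, features: EnabledFeatures): string[] {
  const required: string[] = ['AZURE_SUB_KEY']; // web search key
  if (features.google) required.push('GEMINI_API_KEY');
  if (features.openai) required.push('OPENAI_API_KEY');
  if (features.anthropic) required.push('ANTHROPIC_API_KEY');
  if (features.rateLimiting) {
    required.push('UPSTASH_REDIS_REST_URL', 'UPSTASH_REDIS_REST_TOKEN');
  }
  // A variable counts as missing when it is unset or blank.
  return required.filter((name) => {
    const value = env[name];
    return value === undefined || value.trim() === '';
  });
}
```

In a Node.js script this could be called as `missingEnvVars(process.env, { google: true, openai: false, anthropic: false, rateLimiting: false })`, which returns the names of any variables still to be filled in.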

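To get the Azure Bing Search key (AZURE_SUB_KEY):

- Go to Azure Portal

- Create a Bing Search resource

- Get the subscription key from "Keys and Endpoint"

To get the Google Gemini key (GEMINI_API_KEY):

- Visit Google AI Studio

- Create an API key

- Copy the API key

To get the OpenAI key (OPENAI_API_KEY):

- Visit OpenAI Platform

- Sign up or log in to your account

- Go to API Keys section

- Create a new API key

To get the Anthropic key (ANTHROPIC_API_KEY):

- Visit Anthropic Console

- Sign up or log in to your account

- Go to API Keys section

- Create a new API key

To get the Upstash Redis credentials (UPSTASH_REDIS_REST_URL and UPSTASH_REDIS_REST_TOKEN):

- Sign up at Upstash

- Create a new Redis database

- Copy the REST URL and REST Token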
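With the Azure key in hand, the search settings from lib/config.ts map fairly directly onto query parameters of the Bing Web Search API v7 (`q`, `count`, `safeSearch`, `mkt`, and `freshness` for time filtering). The sketch below only builds the request URL and header. Whether the app constructs its requests this way is an assumption, and `buildBingRequest` is a hypothetical helper.

```typescript
// Subset of the search block in lib/config.ts.
interface SearchConfig {
  resultsPerPage: number;
  safeSearch: 'Off' | 'Moderate' | 'Strict';
  market: string;
}

interface BingRequest {
  url: string;
  headers: { [name: string]: string };
}

function buildBingRequest(
  query: string,
  config: SearchConfig,
  subscriptionKey: string,
  freshness?: 'Day' | 'Week' | 'Month' // optional time filter
): BingRequest {
  const params: string[][] = [
    ['q', query],
    ['count', String(config.resultsPerPage)],
    ['safeSearch', config.safeSearch],
    ['mkt', config.market],
  ];
  if (freshness) params.push(['freshness', freshness]);
  const queryString = params
    .map((pair) => encodeURIComponent(pair[0]) + '=' + encodeURIComponent(pair[1]))
    .join('&');
  return {
    url: 'https://api.bing.microsoft.com/v7.0/search?' + queryString,
    // The key from "Keys and Endpoint" is sent in this header.
    headers: { 'Ocp-Apim-Subscription-Key': subscriptionKey },
  };
}
```

The returned object can be passed to `fetch(request.url, { headers: request.headers })`. Keeping the key in a header, read from AZURE_SUB_KEY on the server, avoids exposing it in URLs or to the browser.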

- Next.js 15 - React framework

- TypeScript - Type safety

- Tailwind CSS - Styling

- shadcn/ui - UI components

- Google Gemini - AI model

- JinaAI - Content extraction

- Azure Bing Search - Web search

- Upstash Redis - Rate limiting

- jsPDF & docx - Document generation

Pull requests are welcome. For major changes, please open an issue first to discuss what you would like to change.

- Inspired by Google's Gemini Deep Research feature

- Built with amazing open source tools and APIs

If you're interested in following all the random projects I'm working on, you can find me on Twitter:

Alternative AI tools for open-deep-research

Similar Open Source Tools

deep-research

Deep Research is a lightning-fast tool that uses powerful AI models to generate comprehensive research reports in just a few minutes. It leverages advanced 'Thinking' and 'Task' models, combined with an internet connection, to provide fast and insightful analysis on various topics. The tool ensures privacy by processing and storing all data locally. It supports multi-platform deployment, offers support for various large language models, web search functionality, knowledge graph generation, research history preservation, local and server API support, PWA technology, multi-key payload support, multi-language support, and is built with modern technologies like Next.js and Shadcn UI. Deep Research is open-source under the MIT License.

TaskWeaver

TaskWeaver is a code-first agent framework designed for planning and executing data analytics tasks. It interprets user requests through code snippets, coordinates various plugins to execute tasks in a stateful manner, and preserves both chat history and code execution history. It supports rich data structures, customized algorithms, domain-specific knowledge incorporation, stateful execution, code verification, easy debugging, security considerations, and easy extension. TaskWeaver is easy to use with CLI and WebUI support, and it can be integrated as a library. It offers detailed documentation, demo examples, and citation guidelines.

DevDocs

DevDocs is a platform designed to simplify the process of digesting technical documentation for software engineers and developers. It automates the extraction and conversion of web content into markdown format, making it easier for users to access and understand the information. By crawling through child pages of a given URL, DevDocs provides a streamlined approach to gathering relevant data and integrating it into various tools for software development. The tool aims to save time and effort by eliminating the need for manual research and content extraction, ultimately enhancing productivity and efficiency in the development process.

open-deep-research

Open Deep Research is an open-source project that serves as a clone of Open AI's Deep Research experiment. It utilizes Firecrawl's extract and search method along with a reasoning model to conduct in-depth research on the web. The project features Firecrawl Search + Extract, real-time data feeding to AI via search, structured data extraction from multiple websites, Next.js App Router for advanced routing, React Server Components and Server Actions for server-side rendering, AI SDK for generating text and structured objects, support for various model providers, styling with Tailwind CSS, data persistence with Vercel Postgres and Blob, and simple and secure authentication with NextAuth.js.

ControlLLM

ControlLLM is a framework that empowers large language models to leverage multi-modal tools for solving complex real-world tasks. It addresses challenges like ambiguous user prompts, inaccurate tool selection, and inefficient tool scheduling by utilizing a task decomposer, a Thoughts-on-Graph paradigm, and an execution engine with a rich toolbox. The framework excels in tasks involving image, audio, and video processing, showcasing superior accuracy, efficiency, and versatility compared to existing methods.

langmanus

LangManus is a community-driven AI automation framework that combines language models with specialized tools for tasks like web search, crawling, and Python code execution. It implements a hierarchical multi-agent system with agents like Coordinator, Planner, Supervisor, Researcher, Coder, Browser, and Reporter. The framework supports LLM integration, search and retrieval tools, Python integration, workflow management, and visualization. LangManus aims to give back to the open-source community and welcomes contributions in various forms.

ShortcutsBench

ShortcutsBench is a project focused on collecting and analyzing workflows created in the Shortcuts app, providing a dataset of shortcut metadata, source files, and API information. It aims to study the integration of large language models with Apple devices, particularly focusing on the role of shortcuts in enhancing user experience. The project offers insights for Shortcuts users, enthusiasts, and researchers to explore, customize workflows, and study automated workflows, low-code programming, and API-based agents.

deaddit

Deaddit is a project showcasing an AI-filled internet platform similar to Reddit. All content, including subdeaddits, posts, and comments, is generated by AI algorithms. Users can interact with AI-generated content and explore a simulated social media experience. The project provides a demonstration of how AI can be used to create online content and simulate user interactions in a virtual community.

helix-db

HelixDB is a database designed specifically for AI applications, providing a single platform to manage all components needed for AI applications. It supports graph + vector data model and also KV, documents, and relational data. Key features include built-in tools for MCP, embeddings, knowledge graphs, RAG, security, logical isolation, and ultra-low latency. Users can interact with HelixDB using the Helix CLI tool and SDKs in TypeScript and Python. The roadmap includes features like organizational auth, server code improvements, 3rd party integrations, educational content, and binary quantisation for better performance. Long term projects involve developing in-house tools for knowledge graph ingestion, graph-vector storage engine, and network protocol & serdes libraries.

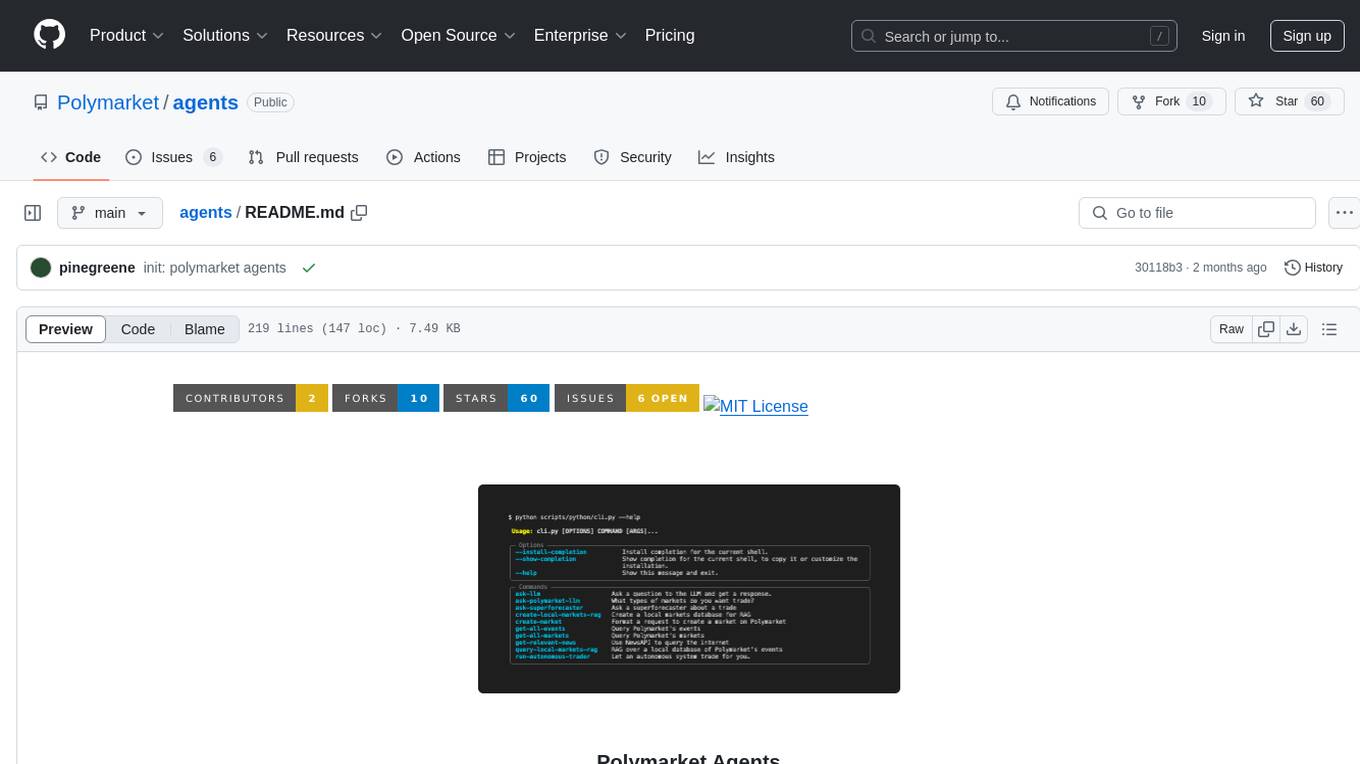

agents

Polymarket Agents is a developer framework and set of utilities for building AI agents to trade autonomously on Polymarket. It integrates with Polymarket API, provides AI agent utilities for prediction markets, supports local and remote RAG, sources data from various services, and offers comprehensive LLM tools for prompt engineering. The architecture features modular components like APIs and scripts for managing local environments, server set-up, and CLI for end-user commands.

crawlee-python

Crawlee-python is a web scraping and browser automation library that covers crawling and scraping end-to-end, helping users build reliable scrapers fast. It allows users to crawl the web for links, scrape data, and store it in machine-readable formats without worrying about technical details. With rich configuration options, users can customize almost any aspect of Crawlee to suit their project's needs.

odoo-expert

RAG-Powered Odoo Documentation Assistant is a comprehensive documentation processing and chat system that converts Odoo's documentation to a searchable knowledge base with an AI-powered chat interface. It supports multiple Odoo versions (16.0, 17.0, 18.0) and provides semantic search capabilities powered by OpenAI embeddings. The tool automates the conversion of RST to Markdown, offers real-time semantic search, context-aware AI-powered chat responses, and multi-version support. It includes a Streamlit-based web UI, REST API for programmatic access, and a CLI for document processing and chat. The system operates through a pipeline of data processing steps and an interface layer for UI and API access to the knowledge base.

pagible

PagibleAI CMS is an easy, flexible, and scalable API-first content management system that allows users to manage structured content, define new content elements, assign shared content to multiple pages, save drafts, publish content, and revert drafts. It features an extremely fast JSON frontend API, versatile GraphQL admin API, multi-language support, multi-domain routing, multi-tenancy capability, soft-deletes support, and is fully open source. The system can scale from a single page with SQLite to millions of pages with DB clusters. It can be seamlessly integrated into any existing Laravel application.

multimodal-chat

Yet Another Chatbot is a sophisticated multimodal chat interface powered by advanced AI models and equipped with a variety of tools. This chatbot can search and browse the web in real-time, query Wikipedia for information, perform news and map searches, execute Python code, compose long-form articles mixing text and images, generate, search, and compare images, analyze documents and images, search and download arXiv papers, save conversations as text and audio files, manage checklists, and track personal improvements. It offers tools for web interaction, Wikipedia search, Python scripting, content management, image handling, arXiv integration, conversation generation, file management, personal improvement, and checklist management.

mint-bench

MINT benchmark aims to evaluate LLMs' ability to solve tasks with multi-turn interactions by (1) using tools and (2) leveraging natural language feedback.

For similar tasks

Azure-Analytics-and-AI-Engagement

The Azure-Analytics-and-AI-Engagement repository provides packaged Industry Scenario DREAM Demos with ARM templates (containing a demo web application, Power BI reports, Synapse resources, AML Notebooks, etc.) that can be deployed in a customer’s subscription using the CAPE tool within a few hours. Partners can also deploy DREAM Demos in their own subscriptions using DPoC.

sorrentum

Sorrentum is an open-source project that aims to combine open-source development, startups, and brilliant students to build machine learning, AI, and Web3 / DeFi protocols geared towards finance and economics. The project provides opportunities for internships, research assistantships, and development grants, as well as the chance to work on cutting-edge problems, learn about startups, write academic papers, and get internships and full-time positions at companies working on Sorrentum applications.

tidb

TiDB is an open-source distributed SQL database that supports Hybrid Transactional and Analytical Processing (HTAP) workloads. It is MySQL compatible and features horizontal scalability, strong consistency, and high availability.

zep-python

Zep is an open-source platform for building and deploying large language model (LLM) applications. It provides a suite of tools and services that make it easy to integrate LLMs into your applications, including chat history memory, embedding, vector search, and data enrichment. Zep is designed to be scalable, reliable, and easy to use, making it a great choice for developers who want to build LLM-powered applications quickly and easily.

telemetry-airflow

This repository codifies the Airflow cluster that is deployed at workflow.telemetry.mozilla.org (behind SSO) and commonly referred to as "WTMO" or simply "Airflow". Some links relevant to users and developers of WTMO:

- The `dags` directory in this repository contains some custom DAG definitions

- Many of the DAGs registered with WTMO don't live in this repository, but are instead generated from ETL task definitions in bigquery-etl

- The Data SRE team maintains a WTMO Developer Guide (behind SSO)

mojo

Mojo is a new programming language that bridges the gap between research and production by combining Python syntax and ecosystem with systems programming and metaprogramming features. Mojo is still young, but it is designed to become a superset of Python over time.

pandas-ai

PandasAI is a Python library that makes it easy to ask questions to your data in natural language. It helps you to explore, clean, and analyze your data using generative AI.

databend

Databend is an open-source cloud data warehouse that serves as a cost-effective alternative to Snowflake. With its focus on fast query execution and data ingestion, it's designed for complex analysis of the world's largest datasets.

For similar jobs

Perplexica

Perplexica is an open-source AI-powered search engine that utilizes advanced machine learning algorithms to provide clear answers with sources cited. It offers various modes like Copilot Mode, Normal Mode, and Focus Modes for specific types of questions. Perplexica ensures up-to-date information by using the SearxNG metasearch engine. It also features image and video search capabilities. Upcoming work includes finalizing Copilot Mode and adding Discover and History Saving features.

KULLM

KULLM (구름) is a Korean Large Language Model developed by Korea University NLP & AI Lab and HIAI Research Institute. It is based on the upstage/SOLAR-10.7B-v1.0 model and has been fine-tuned to follow instructions. The model was trained on 8×A100 GPUs and generates responses in Korean. KULLM exhibits hallucination and repetition phenomena due to its decoding strategy. Users should be cautious as the model may produce inaccurate or harmful results. Performance may vary in benchmarks without a fixed system prompt.

MMMU

MMMU is a benchmark designed to evaluate multimodal models on college-level subject knowledge tasks, covering 30 subjects and 183 subfields with 11.5K questions. It focuses on advanced perception and reasoning with domain-specific knowledge, challenging models to perform tasks akin to those faced by experts. The evaluation of various models highlights substantial challenges, with room for improvement to stimulate the community towards expert artificial general intelligence (AGI).

1filellm

1filellm is a command-line data aggregation tool designed for LLM ingestion. It aggregates and preprocesses data from various sources into a single text file, facilitating the creation of information-dense prompts for large language models. The tool supports automatic source type detection, handling of multiple file formats, web crawling functionality, integration with Sci-Hub for research paper downloads, text preprocessing, and token count reporting. Users can input local files, directories, GitHub repositories, pull requests, issues, ArXiv papers, YouTube transcripts, web pages, Sci-Hub papers via DOI or PMID. The tool provides uncompressed and compressed text outputs, with the uncompressed text automatically copied to the clipboard for easy pasting into LLMs.

gpt-researcher

GPT Researcher is an autonomous agent designed for comprehensive online research on a variety of tasks. It can produce detailed, factual, and unbiased research reports with customization options. The tool addresses issues of speed, determinism, and reliability by leveraging parallelized agent work. The main idea involves running 'planner' and 'execution' agents to generate research questions, seek related information, and create research reports. GPT Researcher optimizes costs and completes tasks in around 3 minutes. Features include generating long research reports, aggregating web sources, an easy-to-use web interface, scraping web sources, and exporting reports to various formats.

ChatTTS

ChatTTS is a generative speech model optimized for dialogue scenarios, providing natural and expressive speech synthesis with fine-grained control over prosodic features. It supports multiple speakers and surpasses most open-source TTS models in terms of prosody. The model is trained with 100,000+ hours of Chinese and English audio data, and the open-source version on HuggingFace is a 40,000-hour pre-trained model without SFT. The roadmap includes open-sourcing additional features like VQ encoder, multi-emotion control, and streaming audio generation. The tool is intended for academic and research use only, with precautions taken to limit potential misuse.

HebTTS

HebTTS is a language modeling approach to diacritic-free Hebrew text-to-speech (TTS). It addresses the challenge of accurately mapping text to speech in Hebrew by proposing a language model that operates on discrete speech representations and is conditioned on a word-piece tokenizer. The system is optimized using weakly supervised recordings and outperforms diacritic-based Hebrew TTS systems in terms of content preservation and naturalness of generated speech.

do-research-in-AI

This repository is a collection of research lectures and experience sharing posts from frontline researchers in the field of AI. It aims to help individuals upgrade their research skills and knowledge through insightful talks and experiences shared by experts. The content covers various topics such as evaluating research papers, choosing research directions, research methodologies, and tips for writing high-quality scientific papers. The repository also includes discussions on academic career paths, research ethics, and the emotional aspects of research work. Overall, it serves as a valuable resource for individuals interested in advancing their research capabilities in the field of AI.