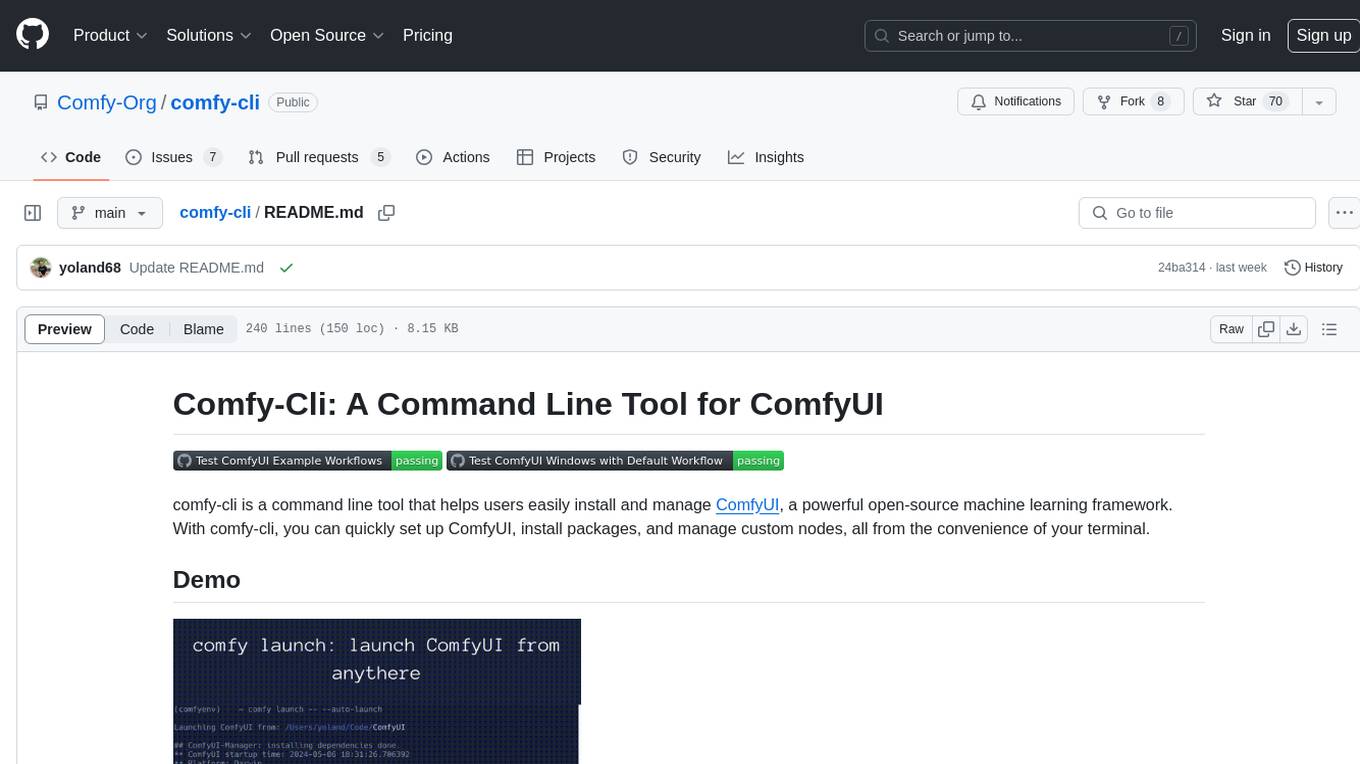

comfy-cli

Command Line Interface for Managing ComfyUI

Stars: 64

comfy-cli is a command line tool designed to simplify the installation and management of ComfyUI, an open-source machine learning framework. It allows users to easily set up ComfyUI, install packages, manage custom nodes, download checkpoints, and ensure cross-platform compatibility. The tool provides comprehensive documentation and examples to aid users in utilizing ComfyUI efficiently.

README:

comfy-cli is a command line tool that helps users easily install and manage ComfyUI, a powerful open-source machine learning framework. With comfy-cli, you can quickly set up ComfyUI, install packages, and manage custom nodes, all from the convenience of your terminal.

- 🚀 Easy installation of ComfyUI with a single command

- 📦 Seamless package management for ComfyUI extensions and dependencies

- 🔧 Custom node management for extending ComfyUI's functionality

- 🗄️ Download checkpoints and save model hash

- 💻 Cross-platform compatibility (Windows, macOS, Linux)

- 📖 Comprehensive documentation and examples

-

(Recommended, but not necessary) Enable virtual environment (venv/conda)

-

To install comfy-cli, make sure you have Python 3.9 or higher installed on your system. Then, run the following command:

pip install comfy-cli

To install autocompletion hints in your shell run:

comfy --install-completion

This enables you to type comfy [TAP] to autocomplete commands and options

To install ComfyUI using comfy, simply run:

comfy install

This command will download and set up the latest version of ComfyUI and ComfyUI-Manager on your system. If you run in a ComfyUI repo that has already been setup. The command will simply update the comfy.yaml file to reflect the local setup

-

comfy install --skip-manager: Install ComfyUI without ComfyUI-Manager. -

comfy --workspace=<path> install: Install ComfyUI into<path>/ComfyUI. - For

comfy install, if no path specification like--workspace, --recent, or --hereis provided, it will be implicitly installed in<HOME>/comfy.

-

You can specify the path of ComfyUI where the command will be applied through path indicators as follows:

-

comfy --workspace=<path>: Run from the ComfyUI installed in the specified workspace. -

comfy --recent: Run from the recently executed or installed ComfyUI. -

comfy --here: Run from the ComfyUI located in the current directory.

-

-

--workspace, --recent, and --here options cannot be used simultaneously.

-

If there is no path indicator, the following priority applies:

- Run from the default ComfyUI at the path specified by

comfy set-default <path>. - Run from the recently executed or installed ComfyUI.

- Run from the ComfyUI located in the current directory.

- Run from the default ComfyUI at the path specified by

-

Example 1: To run the recently executed ComfyUI:

comfy --recent launch

-

Example 2: To install a package on the ComfyUI in the current directory:

comfy --here node install ComfyUI-Impact-Pack

-

Example 3: To update the automatically selected path of ComfyUI and custom nodes based on priority:

comfy node update all

-

You can use the

comfy whichcommand to check the path of the target workspace.- e.g

comfy --recent which,comfy --here which,comfy which, ...

- e.g

The default sets the option that will be executed by default when no specific workspace's ComfyUI has been set for the command.

comfy set-default <workspace path> ?[--launch-extras="<extra args>"]

-

--launch-extrasoption specifies extra args that are applied only during launch by default. However, if extras are specified at the time of launch, this setting is ignored.

Comfy provides commands that allow you to easily run the installed ComfyUI.

comfy launch

-

To run with default ComfyUI options:

comfy launch -- <extra args...>comfy launch -- --cpu --listen 0.0.0.0- When you manually configure the extra options, the extras set by set-default will be overridden.

-

To run background

comfy launch --backgroundcomfy --workspace=~/comfy launch --background -- --listen 10.0.0.10 --port 8000- Instances launched with

--backgroundare displayed in the "Background ComfyUI" section ofcomfy env, providing management functionalities for a single background instance only. - Since "Comfy Server Running" in

comfy envonly shows the default port 8188, it doesn't display ComfyUI running on a different port. - Background-running ComfyUI can be stopped with

comfy stop.

- Instances launched with

comfy provides a convenient way to manage custom nodes for extending ComfyUI's functionality. Here are some examples:

- Show custom nodes' information:

comfy node [show|simple-show] [installed|enabled|not-installed|disabled|all|snapshot|snapshot-list]

?[--channel <channel name>]

?[--mode [remote|local|cache]]

-

comfy node show all --channel recentcomfy node simple-show installedcomfy node update allcomfy node install ComfyUI-Impact-Pack -

Managing snapshot:

comfy node save-snapshotcomfy node restore-snapshot <snapshot name> -

Install dependencies:

comfy node install-deps --deps=<deps .json file>comfy node install-deps --workflow=<workflow .json/.png file> -

Generate deps:

comfy node deps-in-workflow --workflow=<workflow .json/.png file>

-

Model downloading

comfy model download --url <URL> ?[--relative-path <PATH>] ?[--set-civitai-api-token <TOKEN>]- URL: CivitAI, huggingface file url, ...

-

Model remove

comfy model remove ?[--relative-path <PATH>] --model-names <model names> -

Model list

comfy model list ?[--relative-path <PATH>]

-

disable GUI of ComfyUI-Manager (disable Manager menu and Server)

comfy manager disable-gui -

enable GUI of ComfyUI-Manager

comfy manager enable-gui -

Clear reserved startup action:

comfy manager clear

basic:

models:

- model: [name of the model]

url: [url of the source, e.g. https://huggingface.co/...]

paths: [list of paths to the model]

- path: [path to the model]

- path: [path to the model]

hashes: [hashes for the model]

- hash: [hash]

type: [AutoV1, AutoV2, SHA256, CRC32, and Blake3]

type: [type of the model, e.g. diffuser, lora, etc.]

- model:

...

# compatible with ComfyUI-Manager's .yaml snapshot

custom_nodes:

comfyui: [commit hash]

file_custom_nodes:

- disabled: [bool]

filename: [.py filename]

...

git_custom_nodes:

[git-url]:

disabled: [bool]

hash: [commit hash]

...

We track analytics using Mixpanel to help us understand usage patterns and know where to prioritize our efforts. When you first download the cli, it will ask you to give consent. If at any point you wish to opt out:

comfy tracking disable

Check out the usage here: Mixpanel Board

We welcome contributions to comfy-cli! If you have any ideas, suggestions, or bug reports, please open an issue on our GitHub repository. If you'd like to contribute code, please fork the repository and submit a pull request.

Check out the Dev Guide for more details.

comfy is released under the GNU General Public License v3.0.

If you encounter any issues or have questions about comfy-cli, please open an issue on our GitHub repository or contact us on Discord. We'll be happy to assist you!

Happy diffusing with ComfyUI and comfy-cli! 🎉

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for comfy-cli

Similar Open Source Tools

comfy-cli

comfy-cli is a command line tool designed to simplify the installation and management of ComfyUI, an open-source machine learning framework. It allows users to easily set up ComfyUI, install packages, manage custom nodes, download checkpoints, and ensure cross-platform compatibility. The tool provides comprehensive documentation and examples to aid users in utilizing ComfyUI efficiently.

comfy-cli

Comfy-cli is a command line tool designed to facilitate the installation and management of ComfyUI, an open-source machine learning framework. Users can easily set up ComfyUI, install packages, and manage custom nodes directly from the terminal. The tool offers features such as easy installation, seamless package management, custom node management, checkpoint downloads, cross-platform compatibility, and comprehensive documentation. Comfy-cli simplifies the process of working with ComfyUI, making it convenient for users to handle various tasks related to the framework.

termax

Termax is an LLM agent in your terminal that converts natural language to commands. It is featured by: - Personalized Experience: Optimize the command generation with RAG. - Various LLMs Support: OpenAI GPT, Anthropic Claude, Google Gemini, Mistral AI, and more. - Shell Extensions: Plugin with popular shells like `zsh`, `bash` and `fish`. - Cross Platform: Able to run on Windows, macOS, and Linux.

lexido

Lexido is an innovative assistant for the Linux command line, designed to boost your productivity and efficiency. Powered by Gemini Pro 1.0 and utilizing the free API, Lexido offers smart suggestions for commands based on your prompts and importantly your current environment. Whether you're installing software, managing files, or configuring system settings, Lexido streamlines the process, making it faster and more intuitive.

vector-inference

This repository provides an easy-to-use solution for running inference servers on Slurm-managed computing clusters using vLLM. All scripts in this repository run natively on the Vector Institute cluster environment. Users can deploy models as Slurm jobs, check server status and performance metrics, and shut down models. The repository also supports launching custom models with specific configurations. Additionally, users can send inference requests and set up an SSH tunnel to run inference from a local device.

nosia

Nosia is a platform that allows users to run an AI model on their own data. It is designed to be easy to install and use. Users can follow the provided guides for quickstart, API usage, upgrading, starting, stopping, and troubleshooting. The platform supports custom installations with options for remote Ollama instances, custom completion models, and custom embeddings models. Advanced installation instructions are also available for macOS with a Debian or Ubuntu VM setup. Users can access the platform at 'https://nosia.localhost' and troubleshoot any issues by checking logs and job statuses.

magic-cli

Magic CLI is a command line utility that leverages Large Language Models (LLMs) to enhance command line efficiency. It is inspired by projects like Amazon Q and GitHub Copilot for CLI. The tool allows users to suggest commands, search across command history, and generate commands for specific tasks using local or remote LLM providers. Magic CLI also provides configuration options for LLM selection and response generation. The project is still in early development, so users should expect breaking changes and bugs.

ComfyUI

ComfyUI is a powerful and modular visual AI engine and application that allows users to design and execute advanced stable diffusion pipelines using a graph/nodes/flowchart based interface. It provides a user-friendly environment for creating complex Stable Diffusion workflows without the need for coding. ComfyUI supports various models for image editing, video processing, audio manipulation, 3D modeling, and more. It offers features like smart memory management, support for different GPU types, loading and saving workflows as JSON files, and offline functionality. Users can also use API nodes to access paid models from external providers through the online Comfy API.

gpt-cli

gpt-cli is a command-line interface tool for interacting with various chat language models like ChatGPT, Claude, and others. It supports model customization, usage tracking, keyboard shortcuts, multi-line input, markdown support, predefined messages, and multiple assistants. Users can easily switch between different assistants, define custom assistants, and configure model parameters and API keys in a YAML file for easy customization and management.

desktop

ComfyUI Desktop is a packaged desktop application that allows users to easily use ComfyUI with bundled features like ComfyUI source code, ComfyUI-Manager, and uv. It automatically installs necessary Python dependencies and updates with stable releases. The app comes with Electron, Chromium binaries, and node modules. Users can store ComfyUI files in a specified location and manage model paths. The tool requires Python 3.12+ and Visual Studio with Desktop C++ workload for Windows. It uses nvm to manage node versions and yarn as the package manager. Users can install ComfyUI and dependencies using comfy-cli, download uv, and build/launch the code. Troubleshooting steps include rebuilding modules and installing missing libraries. The tool supports debugging in VSCode and provides utility scripts for cleanup. Crash reports can be sent to help debug issues, but no personal data is included.

kwaak

Kwaak is a tool that allows users to run a team of autonomous AI agents locally from their own machine. It enables users to write code, improve test coverage, update documentation, and enhance code quality while focusing on building innovative projects. Kwaak is designed to run multiple agents in parallel, interact with codebases, answer questions about code, find examples, write and execute code, create pull requests, and more. It is free and open-source, allowing users to bring their own API keys or models via Ollama. Kwaak is part of the bosun.ai project, aiming to be a platform for autonomous code improvement.

browser

Lightpanda Browser is an open-source headless browser designed for fast web automation, AI agents, LLM training, scraping, and testing. It features ultra-low memory footprint, exceptionally fast execution, and compatibility with Playwright and Puppeteer through CDP. Built for performance, Lightpanda offers Javascript execution, support for Web APIs, and is optimized for minimal memory usage. It is a modern solution for web scraping and automation tasks, providing a lightweight alternative to traditional browsers like Chrome.

ComfyUI

ComfyUI is a powerful and modular visual AI engine and application that allows users to design and execute advanced stable diffusion pipelines using a graph/nodes/flowchart based interface. It provides a user-friendly environment for creating complex Stable Diffusion workflows without the need for coding. ComfyUI supports various models for image, video, audio, and 3D processing, along with features like smart memory management, model loading, embeddings/textual inversion, and offline usage. Users can experiment with different models, create complex workflows, and optimize their processes efficiently.

bedrock-claude-chat

This repository is a sample chatbot using the Anthropic company's LLM Claude, one of the foundational models provided by Amazon Bedrock for generative AI. It allows users to have basic conversations with the chatbot, personalize it with their own instructions and external knowledge, and analyze usage for each user/bot on the administrator dashboard. The chatbot supports various languages, including English, Japanese, Korean, Chinese, French, German, and Spanish. Deployment is straightforward and can be done via the command line or by using AWS CDK. The architecture is built on AWS managed services, eliminating the need for infrastructure management and ensuring scalability, reliability, and security.

vim-ollama

The 'vim-ollama' plugin for Vim adds Copilot-like code completion support using Ollama as a backend, enabling intelligent AI-based code completion and integrated chat support for code reviews. It does not rely on cloud services, preserving user privacy. The plugin communicates with Ollama via Python scripts for code completion and interactive chat, supporting Vim only. Users can configure LLM models for code completion tasks and interactive conversations, with detailed installation and usage instructions provided in the README.

OpenAI-sublime-text

The OpenAI Completion plugin for Sublime Text provides first-class code assistant support within the editor. It utilizes LLM models to manipulate code, engage in chat mode, and perform various tasks. The plugin supports OpenAI, llama.cpp, and ollama models, allowing users to customize their AI assistant experience. It offers separated chat histories and assistant settings for different projects, enabling context-specific interactions. Additionally, the plugin supports Markdown syntax with code language syntax highlighting, server-side streaming for faster response times, and proxy support for secure connections. Users can configure the plugin's settings to set their OpenAI API key, adjust assistant modes, and manage chat history. Overall, the OpenAI Completion plugin enhances the Sublime Text editor with powerful AI capabilities, streamlining coding workflows and fostering collaboration with AI assistants.

For similar tasks

comfy-cli

comfy-cli is a command line tool designed to simplify the installation and management of ComfyUI, an open-source machine learning framework. It allows users to easily set up ComfyUI, install packages, manage custom nodes, download checkpoints, and ensure cross-platform compatibility. The tool provides comprehensive documentation and examples to aid users in utilizing ComfyUI efficiently.

comfy-cli

Comfy-cli is a command line tool designed to facilitate the installation and management of ComfyUI, an open-source machine learning framework. Users can easily set up ComfyUI, install packages, and manage custom nodes directly from the terminal. The tool offers features such as easy installation, seamless package management, custom node management, checkpoint downloads, cross-platform compatibility, and comprehensive documentation. Comfy-cli simplifies the process of working with ComfyUI, making it convenient for users to handle various tasks related to the framework.

desktop

ComfyUI Desktop is a packaged desktop application that allows users to easily use ComfyUI with bundled features like ComfyUI source code, ComfyUI-Manager, and uv. It automatically installs necessary Python dependencies and updates with stable releases. The app comes with Electron, Chromium binaries, and node modules. Users can store ComfyUI files in a specified location and manage model paths. The tool requires Python 3.12+ and Visual Studio with Desktop C++ workload for Windows. It uses nvm to manage node versions and yarn as the package manager. Users can install ComfyUI and dependencies using comfy-cli, download uv, and build/launch the code. Troubleshooting steps include rebuilding modules and installing missing libraries. The tool supports debugging in VSCode and provides utility scripts for cleanup. Crash reports can be sent to help debug issues, but no personal data is included.

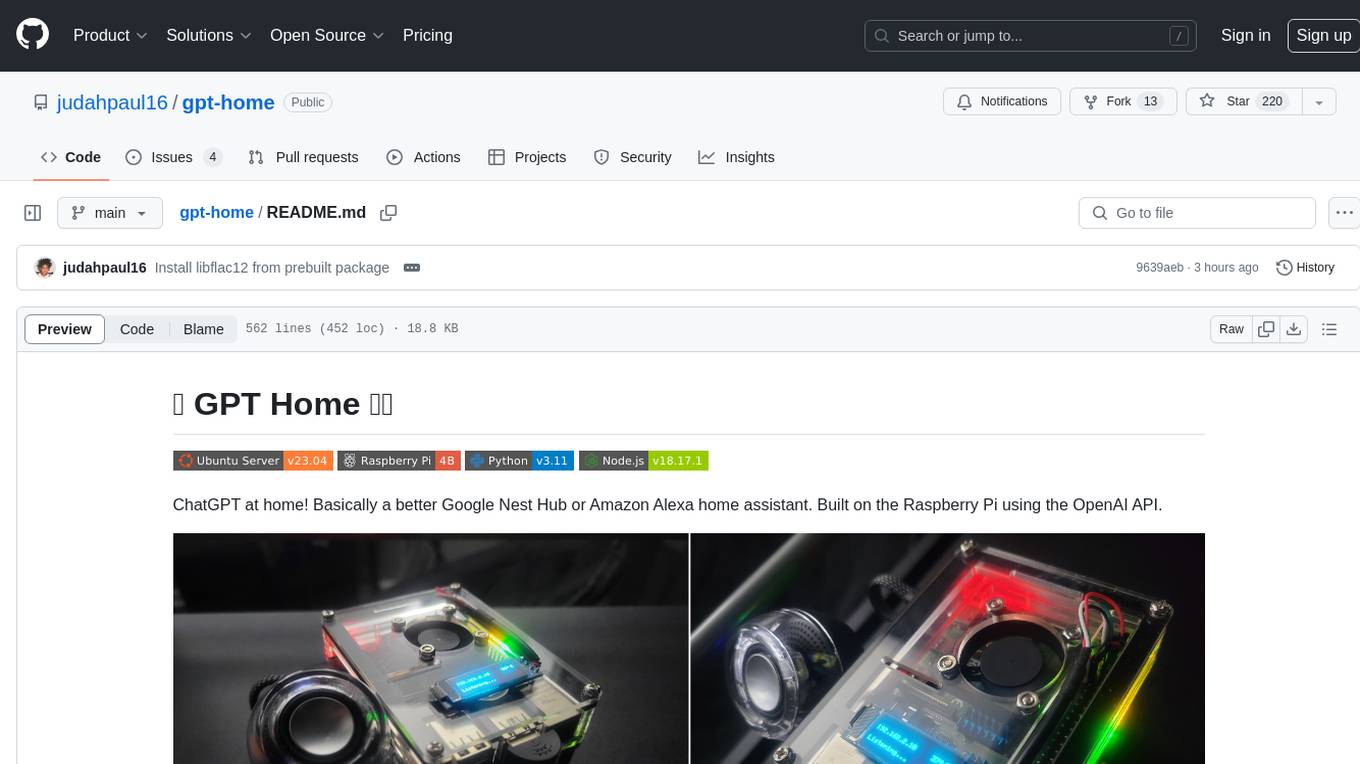

gpt-home

GPT Home is a project that allows users to build their own home assistant using Raspberry Pi and OpenAI API. It serves as a guide for setting up a smart home assistant similar to Google Nest Hub or Amazon Alexa. The project integrates various components like OpenAI, Spotify, Philips Hue, and OpenWeatherMap to provide a personalized home assistant experience. Users can follow the detailed instructions provided to build their own version of the home assistant on Raspberry Pi, with optional components for customization. The project also includes system configurations, dependencies installation, and setup scripts for easy deployment. Overall, GPT Home offers a DIY solution for creating a smart home assistant using Raspberry Pi and OpenAI technology.

crewAI-tools

The crewAI Tools repository provides a guide for setting up tools for crewAI agents, enabling the creation of custom tools to enhance AI solutions. Tools play a crucial role in improving agent functionality. The guide explains how to equip agents with a range of tools and how to create new tools. Tools are designed to return strings for generating responses. There are two main methods for creating tools: subclassing BaseTool and using the tool decorator. Contributions to the toolset are encouraged, and the development setup includes steps for installing dependencies, activating the virtual environment, setting up pre-commit hooks, running tests, static type checking, packaging, and local installation. Enhance AI agent capabilities with advanced tooling.

aipan-netdisk-search

Aipan-Netdisk-Search is a free and open-source web project for searching netdisk resources. It utilizes third-party APIs with IP access restrictions, suggesting self-deployment. The project can be easily deployed on Vercel and provides instructions for manual deployment. Users can clone the project, install dependencies, run it in the browser, and access it at localhost:3001. The project also includes documentation for deploying on personal servers using NUXT.JS. Additionally, there are options for donations and communication via WeChat.

Agently-Daily-News-Collector

Agently Daily News Collector is an open-source project showcasing a workflow powered by the Agent ly AI application development framework. It allows users to generate news collections on various topics by inputting the field topic. The AI agents automatically perform the necessary tasks to generate a high-quality news collection saved in a markdown file. Users can edit settings in the YAML file, install Python and required packages, input their topic idea, and wait for the news collection to be generated. The process involves tasks like outlining, searching, summarizing, and preparing column data. The project dependencies include Agently AI Development Framework, duckduckgo-search, BeautifulSoup4, and PyYAM.

BentoDiffusion

BentoDiffusion is a BentoML example project that demonstrates how to serve and deploy diffusion models in the Stable Diffusion (SD) family. These models are specialized in generating and manipulating images based on text prompts. The project provides a guide on using SDXL Turbo as an example, along with instructions on prerequisites, installing dependencies, running the BentoML service, and deploying to BentoCloud. Users can interact with the deployed service using Swagger UI or other methods. Additionally, the project offers the option to choose from various diffusion models available in the repository for deployment.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features: * Self-contained, with no need for a DBMS or cloud service. * OpenAPI interface, easy to integrate with existing infrastructure (e.g Cloud IDE). * Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.