fish-ai

Supercharge your command line with LLMs and get shell scripting assistance in Fish. 💪

Stars: 478

fish-ai is a tool that adds AI functionality to Fish shell. It can be integrated with various AI providers like OpenAI, Azure OpenAI, Google, Hugging Face, Mistral, or a self-hosted LLM. Users can transform comments into commands, autocomplete commands, and suggest fixes. The tool allows customization through configuration files and supports switching between contexts. Data privacy is maintained by redacting sensitive information before submission to the AI models. Development features include debug logging, testing, and creating releases.

README:

fish-ai adds AI functionality to Fish.

It's awesome! I built it to make my life easier, and I hope it will make yours easier too. Here is the complete sales pitch:

- It can turn a comment into a shell command and vice versa, which means less time spent reading manpages, googling and copy-pasting from Stack Overflow. Great when working with git, kubectl, curl and other tools with loads of parameters and switches.

- Did you make a typo? It can also fix a broken command (similarly to thefuck).

- Not sure what to type next or just lazy? Let the LLM autocomplete your commands with a built-in fuzzy finder.

- Everything is done using two (configurable) keyboard shortcuts, no mouse needed!

- It can be hooked up to the LLM of your choice (even a self-hosted one!).

- The whole thing is open source, hopefully somewhat easy to read and around 2000 lines of code, which means that you can audit the code yourself in an afternoon.

- Install and update with ease using fisher.

- Tested on both macOS and the most common Linux distributions.

- Does not interfere with fzf.fish, tide or any of the other plugins you're already using!

- Does not wrap your shell, install telemetry or force you to switch to a proprietary terminal emulator.

This plugin was originally based on Tom Dörr's fish.codex repository.

Without Tom, this repository would not exist!

If you like it, please add a ⭐.

Bug fixes are welcome! I consider this project largely feature complete. Before opening a PR for a feature request, consider opening an issue where you explain what you want to add and why, and we can talk about it first.

Make sure git and either uv, or a supported version of Python along with pip and venv, are installed. Then grab the plugin using fisher:

fisher install realiserad/fish-ai

Create a configuration file $XDG_CONFIG_HOME/fish-ai.ini (use ~/.config/fish-ai.ini if $XDG_CONFIG_HOME is not set) where you specify which LLM fish-ai should talk to. If you're not sure, use GitHub Models.
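
The lookup rule for the configuration file described above can be sketched in Python as follows. This is only an illustration, not the plugin's own code: the function name config_path is hypothetical, and treating an empty XDG_CONFIG_HOME the same as an unset one is an assumption.

```python
import os
from pathlib import Path


def config_path(env=None):
    """Return the expected location of fish-ai.ini (hypothetical helper)."""
    env = os.environ if env is None else env
    xdg = env.get("XDG_CONFIG_HOME")
    # Assumption: an empty XDG_CONFIG_HOME is treated like an unset one.
    base = Path(xdg) if xdg else Path.home() / ".config"
    return base / "fish-ai.ini"


print(config_path())
```

Passing a dictionary instead of the real environment makes the fallback easy to try out without changing any shell variables.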

To use Anthropic:

[anthropic]

provider = anthropic

api_key = <your API key>

To use Azure OpenAI:

[fish-ai]

configuration = azure

[azure]

provider = azure

server = https://<your instance>.openai.azure.com

model = <your deployment name>

api_key = <your API key>

To use Cohere:

[cohere]

provider = cohere

api_key = <your API key>

To use DeepSeek:

[deepseek]

provider = deepseek

api_key = <your API key>

model = deepseek-chat

To use GitHub Models:

[fish-ai]

configuration = github

[github]

provider = self-hosted

server = https://models.github.ai/inference

api_key = <paste GitHub PAT here>

model = gpt-4o-mini

You can create a personal access token (PAT) in your GitHub account settings. The PAT does not require any permissions.

To use Gemini from Google:

[google]

provider = google

api_key = <your API key>

model = gemini-3-pro-preview

To use Groq:

[groq]

provider = groq

api_key = <your API key>

To use Mistral:

[fish-ai]

configuration = mistral

[mistral]

provider = mistral

api_key = <your API key>

To use OpenAI:

[fish-ai]

configuration = openai

[openai]

provider = openai

model = gpt-4o

api_key = <your API key>

organization = <your organization>

To use OpenRouter:

[fish-ai]

configuration = openrouter

[openrouter]

provider = self-hosted

server = https://openrouter.ai/api/v1

model = google/gemini-3-flash-preview

api_key = <your API key>

extra_body = {"reasoning": {"effort": "minimal", "exclude": true}}

To use a self-hosted LLM (behind an OpenAI-compatible API):

[fish-ai]

configuration = self-hosted

[self-hosted]

provider = self-hosted

server = https://<your server>:<port>/v1

model = <your model>

api_key = <your API key>

If you are self-hosting, my recommendation is to use Ollama with Llama 3.3 70B. An out of the box configuration running on localhost could then look something like this:

[fish-ai]

configuration = local-llama

[local-llama]

provider = self-hosted

model = llama3.3

server = http://localhost:11434/v1

Available models are listed in the Ollama model library.
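
Most of the examples above pair a [fish-ai] section, whose configuration key names the active section, with a provider section of that name. The sketch below shows one way such a layout could be read with Python's standard configparser module. It is not the plugin's actual loader, and the helper name active_settings is hypothetical.

```python
import configparser

# The local Ollama example from this README.
SAMPLE = """
[fish-ai]
configuration = local-llama

[local-llama]
provider = self-hosted
model = llama3.3
server = http://localhost:11434/v1
"""


def active_settings(ini_text):
    """Return the settings of the section named by [fish-ai] configuration."""
    parser = configparser.ConfigParser()
    parser.read_string(ini_text)
    name = parser.get("fish-ai", "configuration")
    return dict(parser[name])


settings = active_settings(SAMPLE)
print(settings["provider"], settings["model"], settings["server"])
```

Keeping several provider sections in one file and changing only the configuration key is what makes switching between providers cheap.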

Instead of putting the API key in the configuration file, you can let

fish-ai load it from your keyring. To save a new API key or transfer

an existing API key to your keyring, run fish_ai_put_api_key.

Type a comment (anything starting with #), and press Ctrl + P to turn it into a shell command! Note that if your comment is very brief or vague, the LLM may decide to improve the comment instead of providing a shell command. You then need to press Ctrl + P again.

You can also run it in reverse. Type a command and press Ctrl + P to turn it into a comment explaining what the command does.

Begin typing your command or comment and press Ctrl + Space to display a list

of completions in fzf (it is bundled

with the plugin, no need to install it separately).

To refine the results, type some instructions and press Ctrl + P

inside fzf.

If a command fails, you can immediately press Ctrl + Space at the command prompt

to let fish-ai suggest a fix!

You can tweak the behaviour of fish-ai by putting additional options in your

fish-ai.ini configuration file.

By default, fish-ai binds to Ctrl + P and Ctrl + Space. You

may want to change this if there is interference with any existing key

bindings on your system.

To change the key bindings, set keymap_1 (defaults to Ctrl + P)

and keymap_2 (defaults to Ctrl + Space) to the key binding escape

sequence of the key binding you want to use.

To get the correct key binding escape sequence, use

fish_key_reader.

For example, if you have the following output from fish_key_reader:

$ fish_key_reader

Press a key:

bind ctrl-p 'do something'

$ fish_key_reader

Press a key:

bind ctrl-space 'do something'

Then put the following in your configuration file:

[fish-ai]

keymap_1 = 'ctrl-p'

keymap_2 = 'ctrl-space'

Restart the shell for the changes to take effect.

To explain shell commands in a different language, set the language option

to the name of the language. For example:

[fish-ai]

language = Swedish

This will only work well if the LLM you are using has been trained on a dataset with the chosen language.

To change the number of completions suggested by the LLM when pressing

Ctrl + Space, set the completions option. The default value is 5.

Here is an example of how you can increase the number of completions to 10:

[fish-ai]

completions = 10

To change the number of refined completions suggested by the LLM when pressing Ctrl + P in fzf, set the refined_completions option. The default value is 3.

[fish-ai]

refined_completions = 5

You can personalise completions suggested by the LLM by sending an excerpt of your commandline history.

To enable it, specify the maximum number of commands from the history

to send to the LLM using the history_size option. The default value

is 0 (do not send any commandline history).

[fish-ai]

history_size = 5

If you enable this option, consider the use of sponge to automatically remove broken commands from your commandline history.
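
The numeric options covered so far each have a documented default (5 completions, 3 refined completions, a history size of 0). As a rough sketch of how such options could be read with fallbacks, again using Python's configparser rather than the plugin's real code, with read_options being a hypothetical name:

```python
import configparser

# Defaults as documented in this README.
DEFAULTS = {"completions": 5, "refined_completions": 3, "history_size": 0}


def read_options(ini_text):
    """Read the numeric [fish-ai] options, falling back to the defaults."""
    parser = configparser.ConfigParser()
    parser.read_string(ini_text)
    return {
        key: parser.getint("fish-ai", key, fallback=default)
        for key, default in DEFAULTS.items()
    }


print(read_options("[fish-ai]\ncompletions = 10\n"))
```

Only the options present in the file override the defaults, so a configuration file can stay short.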

To send the output of a pipe to the LLM when completing a command, use the

preview_pipe option.

[fish-ai]

preview_pipe = True

This will send the output of the longest consecutive pipe after the last unterminated parenthesis before the cursor. For example, if you autocomplete az vm list | jq, the output from az vm list will be sent to the LLM.

This behaviour is disabled by default, as it may slow down the completion process and lead to commands being executed twice.
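
To make the rule for preview_pipe more concrete, here is a simplified Python sketch of picking the pipe to preview from the commandline buffer. It is an illustration under stated assumptions, not the plugin's implementation: the function name pipe_to_preview is hypothetical, and the sketch ignores quoting and the || operator.

```python
def pipe_to_preview(buffer, cursor=None):
    """Return the pipeline whose output would be previewed, or None."""
    text = buffer if cursor is None else buffer[:cursor]
    # Track parentheses that are opened but not yet closed.
    open_positions = []
    for index, char in enumerate(text):
        if char == "(":
            open_positions.append(index)
        elif char == ")" and open_positions:
            open_positions.pop()
    # Only look at what follows the last unterminated parenthesis.
    if open_positions:
        text = text[open_positions[-1] + 1:]
    # Everything before the final "|" is the pipe; the rest is still being typed.
    head, separator, _ = text.rpartition("|")
    return head.strip() if separator else None


print(pipe_to_preview("az vm list | jq"))
```

Because the selected pipe has to be executed to obtain its output, a command with side effects would run once for the preview and again when the completed command is run, which is the double execution mentioned above.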

You can change the progress indicator (the default is ⏳) shown when the plugin is waiting for a response from the LLM.

To change the default, set the progress_indicator option to zero or

more characters.

[fish-ai]

progress_indicator = wait...

You can send custom HTTP headers using the headers option. Specify one or more headers using comma-separated Key: Value pairs. For example:

[fish-ai]

headers = Header-1: value1, Header-2: value2

You can switch between different sections in the configuration using the fish_ai_switch_context command.
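
The comma-separated Key: Value format of the headers option shown earlier can be split into individual headers along these lines. This is a minimal sketch with a hypothetical parse_headers function. It assumes that header values contain no commas, which the plugin itself may or may not handle.

```python
def parse_headers(value):
    """Parse 'Key: Value, Key: Value' into a dictionary."""
    headers = {}
    for pair in value.split(","):
        if not pair.strip():
            continue  # Skip empty entries such as a trailing comma.
        # Split on the first colon only, so values may contain colons.
        key, _, val = pair.partition(":")
        headers[key.strip()] = val.strip()
    return headers


print(parse_headers("Header-1: value1, Header-2: value2"))
```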

When using the plugin, fish-ai submits the name of your OS and the

commandline buffer to the LLM.

When you codify or complete a command, it also sends the contents of any

files you mention (as long as the file is readable), and when you explain

or complete a command, the output from <command> --help is provided to

the LLM for reference.

fish-ai can also send an excerpt of your commandline history

when completing a command. This is disabled by default.

Finally, to fix the previous command, the previous commandline buffer, along with any terminal output and the corresponding exit code, are sent to the LLM.

If you are concerned with data privacy, you should use a self-hosted LLM. When hosted locally, no data ever leaves your machine.

The plugin attempts to redact sensitive information from the prompt

before submitting it to the LLM. Sensitive information is replaced by

the <REDACTED> placeholder.

The following information is redacted:

- Passwords and API keys supplied as commandline arguments

- PEM-encoded private keys stored in files

- Bearer tokens, provided to e.g. cURL

If you trust the LLM provider (e.g. because you are hosting locally)

you can disable redaction using the redact = False option.
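
As an illustration of the kind of redaction listed above, the following Python sketch replaces bearer tokens, password-like option values and PEM-encoded private keys with the placeholder. The regular expressions and option names are hypothetical examples chosen for this sketch. The plugin's real rules may differ and are likely more thorough.

```python
import re

PLACEHOLDER = "<REDACTED>"

# Hypothetical patterns for illustration; not the plugin's actual rules.
PATTERNS = [
    # Bearer tokens, e.g. in a cURL Authorization header.
    (re.compile(r"(Bearer\s+)[A-Za-z0-9._~+/=-]+"), r"\1" + PLACEHOLDER),
    # Values passed to options such as --password or --api-key.
    (re.compile(r"(--(?:password|api-key)[= ])\S+"), r"\1" + PLACEHOLDER),
    # PEM-encoded private keys, including the BEGIN and END markers.
    (
        re.compile(
            r"-----BEGIN [A-Z ]*PRIVATE KEY-----"
            r".*?"
            r"-----END [A-Z ]*PRIVATE KEY-----",
            re.DOTALL,
        ),
        PLACEHOLDER,
    ),
]


def redact(prompt):
    """Replace sensitive substrings in the prompt with the placeholder."""
    for pattern, replacement in PATTERNS:
        prompt = pattern.sub(replacement, prompt)
    return prompt


print(redact('curl -H "Authorization: Bearer abc.def-123" https://example.com'))
```

Pattern-based redaction is best effort by nature, which is why a self-hosted LLM remains the safer choice when privacy matters.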

If you want to contribute, I recommend reading ARCHITECTURE.md first.

This repository ships with a devcontainer.json which can be used with

GitHub Codespaces or Visual Studio Code with

the Dev Containers extension.

To install fish-ai from a local copy, use fisher:

fisher install .

Enable debug logging by putting debug = True in your fish-ai.ini.

Logging is done to syslog by default (if available). You can also enable

logging to file using log = <path to file>, for example:

[fish-ai]

debug = True

log = /tmp/fish-ai.log

The installation tests currently run on macOS, Fedora, Ubuntu and Arch Linux.

The Python modules containing most of the business logic can be tested using pytest.

A release is created by GitHub Actions when a new tag is pushed.

set tag (grep '^version =' pyproject.toml | \
    cut -d '=' -f2- | \
    string replace -ra '[ "]' '')
git tag -a "v$tag" -m "🚀 v$tag"
git push origin "v$tag"

Alternative AI tools for fish-ai

Similar Open Source Tools

mods

AI for the command line, built for pipelines. LLM-based AI is really good at interpreting the output of commands and returning the results in CLI-friendly text formats like Markdown. Mods is a simple tool that makes it super easy to use AI on the command line and in your pipelines. Mods works with OpenAI, Groq, Azure OpenAI, and LocalAI. To get started, install Mods and check out some of the examples below. Since Mods has built-in Markdown formatting, you may also want to grab Glow to give the output some pizzazz.

termax

Termax is an LLM agent in your terminal that converts natural language to commands. Its features include:

- Personalized Experience: Optimize the command generation with RAG.

- Various LLMs Support: OpenAI GPT, Anthropic Claude, Google Gemini, Mistral AI, and more.

- Shell Extensions: Plugin with popular shells like `zsh`, `bash` and `fish`.

- Cross Platform: Able to run on Windows, macOS, and Linux.

fabric

Fabric is an open-source framework for augmenting humans using AI. It provides a structured approach to breaking down problems into individual components and applying AI to them one at a time. Fabric includes a collection of pre-defined Patterns (prompts) that can be used for a variety of tasks, such as extracting the most interesting parts of YouTube videos and podcasts, writing essays, summarizing academic papers, creating AI art prompts, and more. Users can also create their own custom Patterns. Fabric is designed to be easy to use, with a command-line interface and a variety of helper apps. It is also extensible, allowing users to integrate it with their own AI applications and infrastructure.

opencommit

OpenCommit is a tool that auto-generates meaningful commits using AI, allowing users to quickly create commit messages for their staged changes. It provides a CLI interface for easy usage and supports customization of commit descriptions, emojis, and AI models. Users can configure local and global settings, switch between different AI providers, and set up Git hooks for integration with IDE Source Control. Additionally, OpenCommit can be used as a GitHub Action to automatically improve commit messages on push events, ensuring all commits are meaningful and not generic. Payments for OpenAI API requests are handled by the user, with the tool storing API keys locally.

slack-bot

The Slack Bot is a tool designed to enhance the workflow of development teams by integrating with Jenkins, GitHub, GitLab, and Jira. It allows for custom commands, macros, crons, and project-specific commands to be implemented easily. Users can interact with the bot through Slack messages, execute commands, and monitor job progress. The bot supports features like starting and monitoring Jenkins jobs, tracking pull requests, querying Jira information, creating buttons for interactions, generating images with DALL-E, playing quiz games, checking weather, defining custom commands, and more. Configuration is managed via YAML files, allowing users to set up credentials for external services, define custom commands, schedule cron jobs, and configure VCS systems like Bitbucket for automated branch lookup in Jenkins triggers.

hash

HASH is a self-building, open-source database which grows, structures and checks itself. With it, we're creating a platform for decision-making, which helps you integrate, understand and use data in a variety of different ways.

gpt-cli

gpt-cli is a command-line interface tool for interacting with various chat language models like ChatGPT, Claude, and others. It supports model customization, usage tracking, keyboard shortcuts, multi-line input, markdown support, predefined messages, and multiple assistants. Users can easily switch between different assistants, define custom assistants, and configure model parameters and API keys in a YAML file for easy customization and management.

illume

Illume is a scriptable command line program designed for interfacing with an OpenAI-compatible LLM API. It acts as a unix filter, sending standard input to the LLM and streaming its response to standard output. Users can interact with the LLM through text editors like Vim or Emacs, enabling seamless communication with the AI model for various tasks.

magic-cli

Magic CLI is a command line utility that leverages Large Language Models (LLMs) to enhance command line efficiency. It is inspired by projects like Amazon Q and GitHub Copilot for CLI. The tool allows users to suggest commands, search across command history, and generate commands for specific tasks using local or remote LLM providers. Magic CLI also provides configuration options for LLM selection and response generation. The project is still in early development, so users should expect breaking changes and bugs.

kwaak

Kwaak is a tool that allows users to run a team of autonomous AI agents locally from their own machine. It enables users to write code, improve test coverage, update documentation, and enhance code quality while focusing on building innovative projects. Kwaak is designed to run multiple agents in parallel, interact with codebases, answer questions about code, find examples, write and execute code, create pull requests, and more. It is free and open-source, allowing users to bring their own API keys or models via Ollama. Kwaak is part of the bosun.ai project, aiming to be a platform for autonomous code improvement.

vectorflow

VectorFlow is an open source, high throughput, fault tolerant vector embedding pipeline. It provides a simple API endpoint for ingesting large volumes of raw data, processing, and storing or returning the vectors quickly and reliably. The tool supports text-based files like TXT, PDF, HTML, and DOCX, and can be run locally with Kubernetes in production. VectorFlow offers functionalities like embedding documents, running chunking schemas, custom chunking, and integrating with vector databases like Pinecone, Qdrant, and Weaviate. It enforces a standardized schema for uploading data to a vector store and supports features like raw embeddings webhook, chunk validation webhook, S3 endpoint, and telemetry. The tool can be used with the Python client and provides detailed instructions for running and testing the functionalities.

APIMyLlama

APIMyLlama is a server application that provides an interface to interact with the Ollama API, a powerful AI tool to run LLMs. It allows users to easily distribute API keys to create amazing things. The tool offers commands to generate, list, remove, add, change, activate, deactivate, and manage API keys, as well as functionalities to work with webhooks, set rate limits, and get detailed information about API keys. Users can install APIMyLlama packages with NPM, PIP, Jitpack Repo+Gradle or Maven, or from the Crates Repository. The tool supports Node.JS, Python, Java, and Rust for generating responses from the API. Additionally, it provides built-in health checking commands for monitoring API health status.

simpleAI

SimpleAI is a self-hosted alternative to the not-so-open AI API, focused on replicating main endpoints for LLM such as text completion, chat, edits, and embeddings. It allows quick experimentation with different models, creating benchmarks, and handling specific use cases without relying on external services. Users can integrate and declare models through gRPC, query endpoints using Swagger UI or API, and resolve common issues like CORS with FastAPI middleware. The project is open for contributions and welcomes PRs, issues, documentation, and more.

chatgpt-cli

ChatGPT CLI provides a powerful command-line interface for seamless interaction with ChatGPT models via OpenAI and Azure. It features streaming capabilities, extensive configuration options, and supports various modes like streaming, query, and interactive mode. Users can manage thread-based context, sliding window history, and provide custom context from any source. The CLI also offers model and thread listing, advanced configuration options, and supports GPT-4, GPT-3.5-turbo, and Perplexity's models. Installation is available via Homebrew or direct download, and users can configure settings through default values, a config.yaml file, or environment variables.

For similar tasks

incidentfox

IncidentFox is an open-source AI SRE tool designed to assist in incident response by automatically investigating incidents, finding root causes, and suggesting fixes. It integrates with observability stack, infrastructure, and collaboration tools, forming hypotheses, collecting data, and reasoning through to find root causes. The tool is built for production on-call scenarios, handling log sampling, alert correlation, anomaly detection, and dependency mapping. IncidentFox is highly customizable, Slack-first, and works on various platforms like web UI, GitHub, PagerDuty, and API. It aims to reduce incident resolution time, alert noise, and improve knowledge retention for engineering teams.

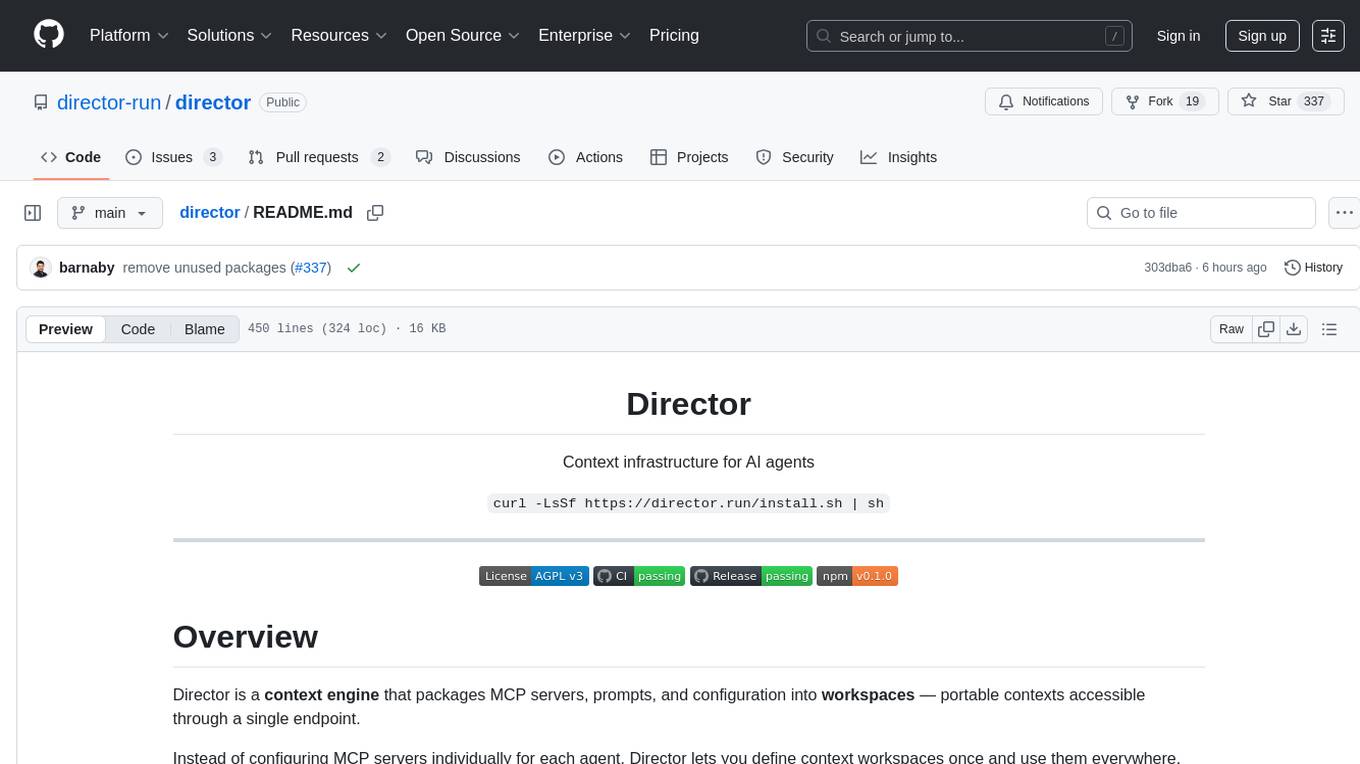

director

Director is a context infrastructure tool for AI agents that simplifies managing MCP servers, prompts, and configurations by packaging them into portable workspaces accessible through a single endpoint. It allows users to define context workspaces once and share them across different AI clients, enabling seamless collaboration, instant context switching, and secure isolation of untrusted servers without cloud dependencies or API keys. Director offers features like workspaces, universal portability, local-first architecture, sandboxing, smart filtering, unified OAuth, observability, multiple interfaces, and compatibility with all MCP clients and servers.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM:

- Set LLM usage limits for users on different pricing tiers

- Track LLM usage on a per user and per organization basis

- Block or redact requests containing PIIs

- Improve LLM reliability with failovers, retries and caching

- Distribute API keys with rate limits and cost limits for internal development/production use cases

- Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.