HyperChat

HyperChat is a Chat client that strives for openness, utilizing APIs from various LLMs to achieve the best Chat experience, as well as implementing productivity tools through the MCP protocol.

Stars: 408

HyperChat is an open Chat client that utilizes various LLM APIs to enhance the Chat experience and offer productivity tools through the MCP protocol. It supports multiple LLMs like OpenAI, Claude, Qwen, Deepseek, GLM, Ollama. The platform includes a built-in MCP plugin market for easy installation and also allows manual installation of third-party MCPs. Features include Windows and MacOS support, resource support, tools support, English and Chinese language support, built-in MCP client 'hypertools', 'fetch' + 'search', Bot support, Artifacts rendering, KaTeX for mathematical formulas, WebDAV synchronization, and a MCP plugin market. Future plans include permission pop-up, scheduled tasks support, Projects + RAG support, tools implementation by LLM, and a local shell + nodejs + js on web runtime environment.

README:

HyperChat is an open-source chat client that supports MCP and can use the APIs of various LLMs to provide the best chat experience and productivity tools.

- Supports OpenAI-style LLMs,

OpenAI,Claude(OpenRouter),Qwen,Deepseek,GLM,Ollama. - Built-in MCP plugin market with a user-friendly MCP installation configuration, one-click installation, and welcome to submit HyperChatMCP.

- Also supports manual installation of third-party MCP, just fill in

command,args,env.

- HyperChat on Docker

- [x] 🪟Windows + 🍏MacOS + Linux

- [x] Command line execution,

npx -y @dadigua/hyper-chat, default port 16100, password 123456, Web access http://localhost:16100/123456/ - [x] Docker

- Command line version

docker pull dadigua/hyperchat-mini:latest - Ubuntu desktop + Chrome + BrowserUse version (coming soon)

- Command line version

- [x]

WebDAVsynchronization - [x]

MCPexpansion market + third-party MCP support - [x] Supports free creation and design of

Agent, with preset prompts and selection of MCP functions - [x] Supports dark mode🌙

- [x] Resources, Prompts, and Tools support

- [x] Supports English and Chinese

- [x] Supports

Artifacts,SVG,HTMLrendering, js error capturing, and access to the Chrome console - [x] Agent display optimization, supports searching and drag-and-drop sorting

- [x] Supports

KaTeX, displays mathematical formulas, code rendering adds highlighting and quick copy - [x] Added

RAG, based on MCP knowledge base - [x] Added ChatSpace concept, supports multiple conversations at the same time

- [x] Supports Agent Call Agent, through HyperAgent's MCP

- [x] Added scheduled tasks, specify Agent to complete tasks on time and view task completion status.

- Support official Claude protocol

| LLM | Usability | Remarks |

|---|---|---|

| claude | ⭐⭐⭐⭐⭐ | No explanation |

| openai | ⭐⭐⭐⭐ | Also perfectly supports multi-step function calls (gpt-4o-mini also works) |

| gemini flash 2.0 | ⭐⭐⭐⭐ | Very easy to use |

| qwen | ⭐⭐⭐⭐ | Very easy to use |

| doubao | ⭐⭐⭐ | Feels okay to use |

| deepseek | ⭐⭐⭐ | Multi-step function calls may have issues |

-

- Configure APIKEY, ensure your LLM service is compatible with OpenAI style.

-

- Ensure that

uv + nodejsis installed on your system.

- Ensure that

Install using the command line, or check the official GitHub tutorial uv

# MacOS

brew install uv

# windows

winget install --id=astral-sh.uv -e

Install using the command line, or download and install from the official website nodejs

# MacOS

brew install node

# windows

winget install OpenJS.NodeJS.LTS

cd electron && npm install

cd web && pnpm install

npm install

npm run dev

- This project is for learning and communication purposes only. If you use this project for any operations, such as web scraping, etc., it has nothing to do with the developers of this project.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for HyperChat

Similar Open Source Tools

HyperChat

HyperChat is an open Chat client that utilizes various LLM APIs to enhance the Chat experience and offer productivity tools through the MCP protocol. It supports multiple LLMs like OpenAI, Claude, Qwen, Deepseek, GLM, Ollama. The platform includes a built-in MCP plugin market for easy installation and also allows manual installation of third-party MCPs. Features include Windows and MacOS support, resource support, tools support, English and Chinese language support, built-in MCP client 'hypertools', 'fetch' + 'search', Bot support, Artifacts rendering, KaTeX for mathematical formulas, WebDAV synchronization, and a MCP plugin market. Future plans include permission pop-up, scheduled tasks support, Projects + RAG support, tools implementation by LLM, and a local shell + nodejs + js on web runtime environment.

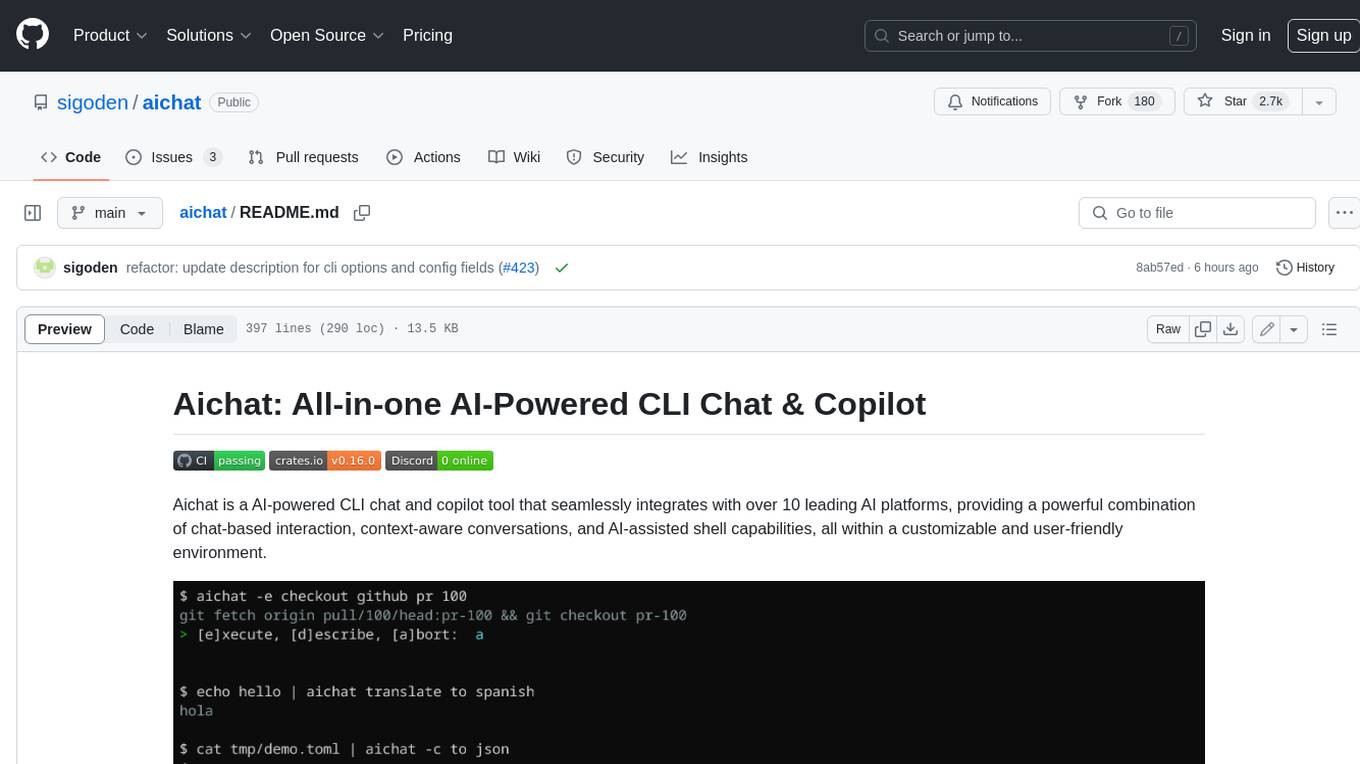

aichat

Aichat is an AI-powered CLI chat and copilot tool that seamlessly integrates with over 10 leading AI platforms, providing a powerful combination of chat-based interaction, context-aware conversations, and AI-assisted shell capabilities, all within a customizable and user-friendly environment.

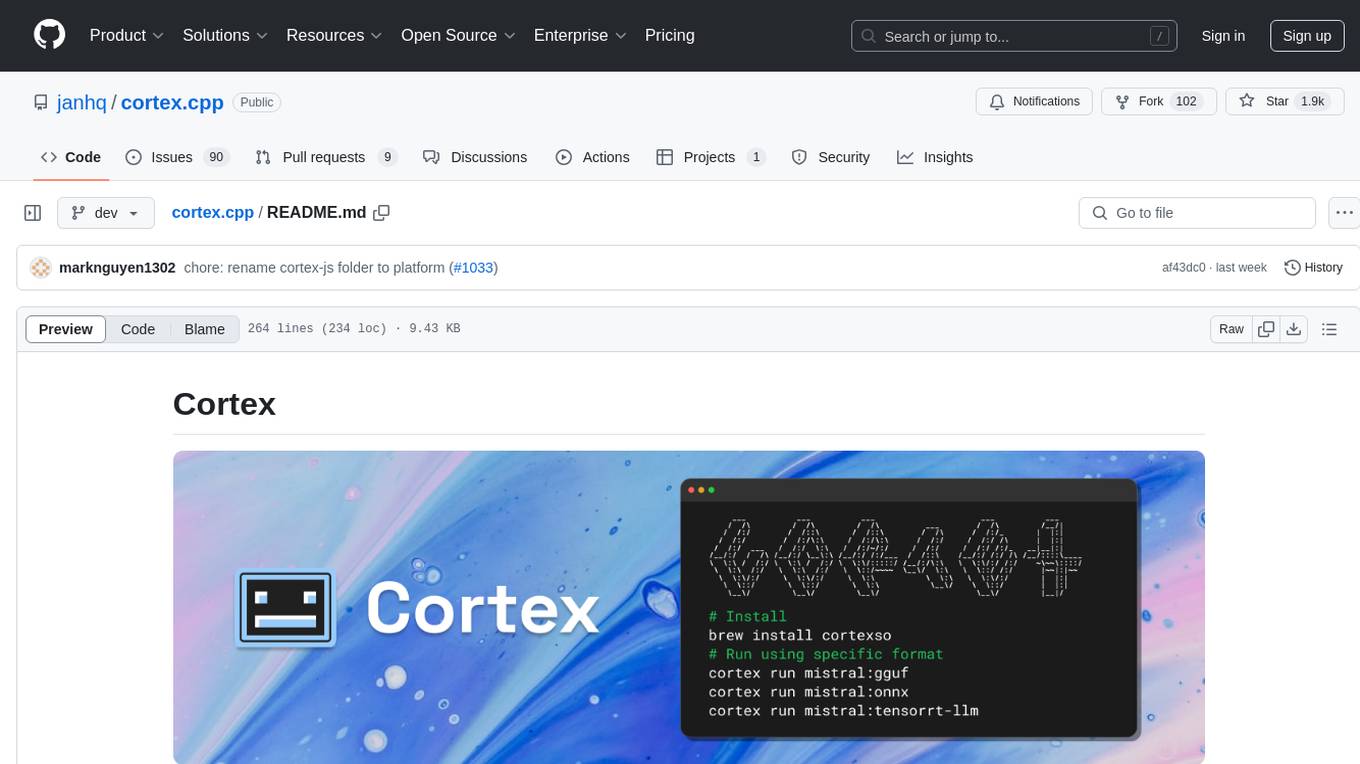

cortex.cpp

Cortex is a C++ AI engine with a Docker-like command-line interface and client libraries. It supports running AI models using ONNX, TensorRT-LLM, and llama.cpp engines. Cortex can function as a standalone server or be integrated as a library. The tool provides support for various engines and models, allowing users to easily deploy and interact with AI models. It offers a range of CLI commands for managing models, embeddings, and engines, as well as a REST API for interacting with models. Cortex is designed to simplify the deployment and usage of AI models in C++ applications.

NextChat

NextChat is a well-designed cross-platform ChatGPT web UI tool that supports Claude, GPT4, and Gemini Pro. It offers a compact client for Linux, Windows, and MacOS, with features like self-deployed LLMs compatibility, privacy-first data storage, markdown support, responsive design, and fast loading speed. Users can create, share, and debug chat tools with prompt templates, access various prompts, compress chat history, and use multiple languages. The tool also supports enterprise-level privatization and customization deployment, with features like brand customization, resource integration, permission control, knowledge integration, security auditing, private deployment, and continuous updates.

nexa-sdk

Nexa SDK is a comprehensive toolkit supporting ONNX and GGML models for text generation, image generation, vision-language models (VLM), and text-to-speech (TTS) capabilities. It offers an OpenAI-compatible API server with JSON schema mode and streaming support, along with a user-friendly Streamlit UI. Users can run Nexa SDK on any device with Python environment, with GPU acceleration supported. The toolkit provides model support, conversion engine, inference engine for various tasks, and differentiating features from other tools.

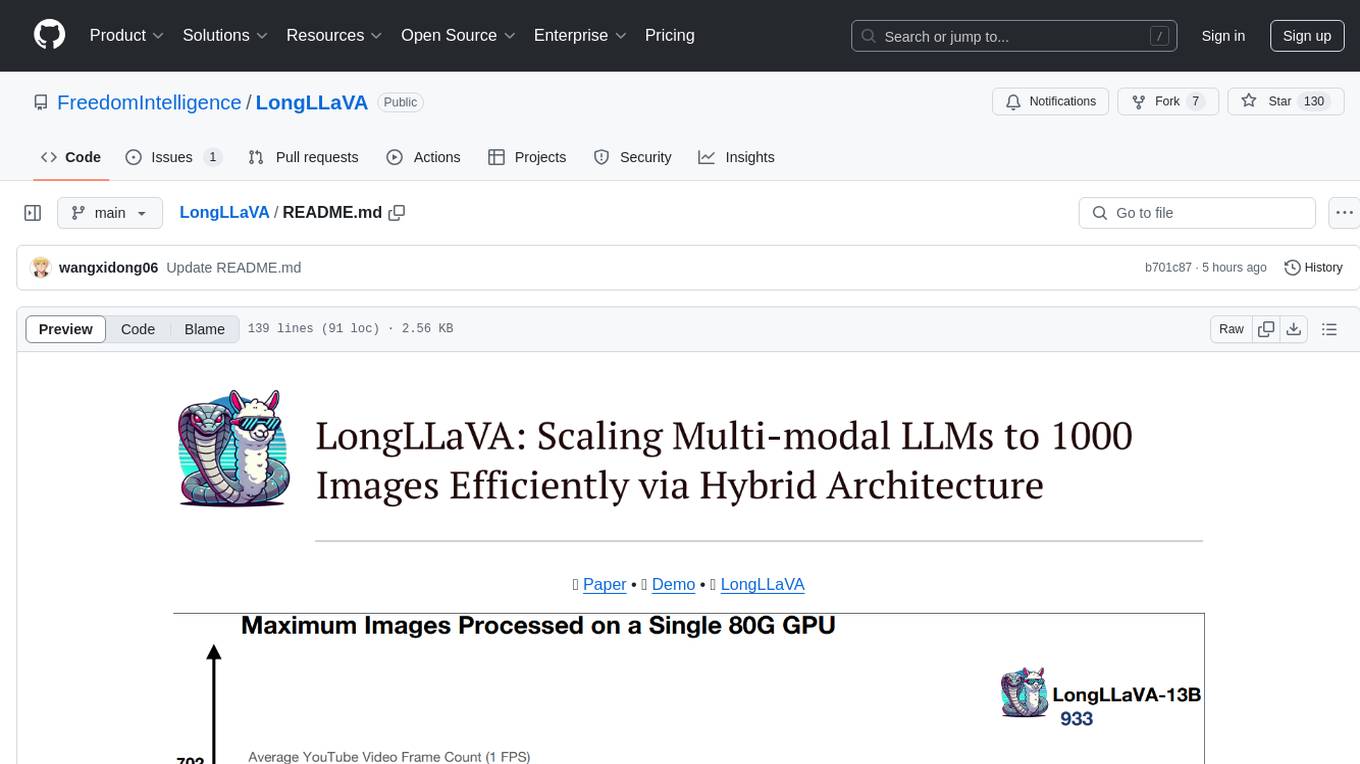

LongLLaVA

LongLLaVA is a tool for scaling multi-modal LLMs to 1000 images efficiently via hybrid architecture. It includes stages for single-image alignment, instruction-tuning, and multi-image instruction-tuning, with evaluation through a command line interface and model inference. The tool aims to achieve GPT-4V level capabilities and beyond, providing reproducibility of results and benchmarks for efficiency and performance.

Kord-Ai

Kord-Ai is a WhatsApp bot designed to automate interactions on WhatsApp by executing predefined commands or responding to user inputs. It can handle tasks like sending messages, sharing media, and managing group activities, providing convenience and efficiency for users and businesses. The bot offers features for deployment on various platforms, including Heroku, Replit, Koyeb, Glitch, Codespace, Render, Railway, VPS, and PC. Users can deploy the bot by obtaining a session ID, forking the repository, setting configurations in the Config.js file, and starting/stopping the bot using npm commands. It is important to note that Kord-Ai is a bot created by M3264, not affiliated with WhatsApp, and users should be cautious in its usage.

WilliamButcherBot

WilliamButcherBot is a Telegram Group Manager Bot and Userbot written in Python using Pyrogram. It provides features for managing Telegram groups and users, with ready-to-use methods available. The bot requires Python 3.9, Telegram API Key, Telegram Bot Token, and MongoDB URI. Users can install it locally or on a VPS, run it directly, generate Pyrogram session for Heroku, or use Docker for deployment. Additionally, users can write new modules to extend the bot's functionality by adding them to the wbb/modules/ directory.

GPULlama3.java

GPULlama3.java powered by TornadoVM is a Java-native implementation of Llama3 that automatically compiles and executes Java code on GPUs via TornadoVM. It supports Llama3, Mistral, Qwen2.5, Qwen3, and Phi3 models in the GGUF format. The repository aims to provide GPU acceleration for Java code, enabling faster execution and high-performance access to off-heap memory. It offers features like interactive and instruction modes, flexible backend switching between OpenCL and PTX, and cross-platform compatibility with NVIDIA, Intel, and Apple GPUs.

Qmedia

QMedia is an open-source multimedia AI content search engine designed specifically for content creators. It provides rich information extraction methods for text, image, and short video content. The tool integrates unstructured text, image, and short video information to build a multimodal RAG content Q&A system. Users can efficiently search for image/text and short video materials, analyze content, provide content sources, and generate customized search results based on user interests and needs. QMedia supports local deployment for offline content search and Q&A for private data. The tool offers features like content cards display, multimodal content RAG search, and pure local multimodal models deployment. Users can deploy different types of models locally, manage language models, feature embedding models, image models, and video models. QMedia aims to spark new ideas for content creation and share AI content creation concepts in an open-source manner.

flowgram.ai

FlowGram.AI is a node-based flow building engine that helps developers create workflows in fixed or free connection layout modes. It provides interaction best practices and is suitable for visual workflows with clear inputs and outputs. The tool focuses on empowering workflows with AI capabilities.

libllm

libLLM is an open-source project designed for efficient inference of large language models (LLM) on personal computers and mobile devices. It is optimized to run smoothly on common devices, written in C++14 without external dependencies, and supports CUDA for accelerated inference. Users can build the tool for CPU only or with CUDA support, and run libLLM from the command line. Additionally, there are API examples available for Python and the tool can export Huggingface models.

polaris

Polaris establishes a novel, industry‑certified standard to foster the development of impactful methods in AI-based drug discovery. This library is a Python client to interact with the Polaris Hub. It allows you to download Polaris datasets and benchmarks, evaluate a custom method against a Polaris benchmark, and create and upload new datasets and benchmarks.

x-hiring

X-Hiring is a job search tool that uses Google AI to extract summaries of the latest job postings. It is easy to install and run, and can be used to find jobs in a variety of fields. X-Hiring is also open source, so you can contribute to its development or create your own custom version.

conduit

Conduit is an open-source, cross-platform mobile application for Open-WebUI, providing a native mobile experience for interacting with your self-hosted AI infrastructure. It supports real-time chat, model selection, conversation management, markdown rendering, theme support, voice input, file uploads, multi-modal support, secure storage, folder management, and tools invocation. Conduit offers multiple authentication flows and follows a clean architecture pattern with Riverpod for state management, Dio for HTTP networking, WebSocket for real-time streaming, and Flutter Secure Storage for credential management.

painting-droid

Painting Droid is an AI-powered cross-platform painting app inspired by MS Paint, expandable with plugins and open. It utilizes various AI models, from paid providers to self-hosted open-source models, as well as some lightweight ones built into the app. Features include regular painting app features, AI-generated content filling and augmentation, filters and effects, image manipulation, plugin support, and cross-platform compatibility.

For similar tasks

ChatFAQ

ChatFAQ is an open-source comprehensive platform for creating a wide variety of chatbots: generic ones, business-trained, or even capable of redirecting requests to human operators. It includes a specialized NLP/NLG engine based on a RAG architecture and customized chat widgets, ensuring a tailored experience for users and avoiding vendor lock-in.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

superagent-js

Superagent is an open source framework that enables any developer to integrate production ready AI Assistants into any application in a matter of minutes.

chainlit

Chainlit is an open-source async Python framework which allows developers to build scalable Conversational AI or agentic applications. It enables users to create ChatGPT-like applications, embedded chatbots, custom frontends, and API endpoints. The framework provides features such as multi-modal chats, chain of thought visualization, data persistence, human feedback, and an in-context prompt playground. Chainlit is compatible with various Python programs and libraries, including LangChain, Llama Index, Autogen, OpenAI Assistant, and Haystack. It offers a range of examples and a cookbook to showcase its capabilities and inspire users. Chainlit welcomes contributions and is licensed under the Apache 2.0 license.

neo4j-generative-ai-google-cloud

This repo contains sample applications that show how to use Neo4j with the generative AI capabilities in Google Cloud Vertex AI. We explore how to leverage Google generative AI to build and consume a knowledge graph in Neo4j.

MemGPT

MemGPT is a system that intelligently manages different memory tiers in LLMs in order to effectively provide extended context within the LLM's limited context window. For example, MemGPT knows when to push critical information to a vector database and when to retrieve it later in the chat, enabling perpetual conversations. MemGPT can be used to create perpetual chatbots with self-editing memory, chat with your data by talking to your local files or SQL database, and more.

py-gpt

Py-GPT is a Python library that provides an easy-to-use interface for OpenAI's GPT-3 API. It allows users to interact with the powerful GPT-3 model for various natural language processing tasks. With Py-GPT, developers can quickly integrate GPT-3 capabilities into their applications, enabling them to generate text, answer questions, and more with just a few lines of code.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features: * Self-contained, with no need for a DBMS or cloud service. * OpenAPI interface, easy to integrate with existing infrastructure (e.g Cloud IDE). * Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.