NextChat

✨ Local and Fast AI Assistant. Support: Web | iOS | MacOS | Android | Linux | Windows

Stars: 78723

NextChat is a well-designed cross-platform ChatGPT web UI tool that supports Claude, GPT4, and Gemini Pro. It offers a compact client for Linux, Windows, and MacOS, with features like self-deployed LLMs compatibility, privacy-first data storage, markdown support, responsive design, and fast loading speed. Users can create, share, and debug chat tools with prompt templates, access various prompts, compress chat history, and use multiple languages. The tool also supports enterprise-level privatization and customization deployment, with features like brand customization, resource integration, permission control, knowledge integration, security auditing, private deployment, and continuous updates.

README:

English / 简体中文

One-Click to get a well-designed cross-platform ChatGPT web UI, with Claude, GPT4 & Gemini Pro support.

NextChatAI / Web App Demo / Desktop App / Discord / Enterprise Edition / Twitter

Meeting Your Company's Privatization and Customization Deployment Requirements:

- Brand Customization: Tailored VI/UI to seamlessly align with your corporate brand image.

- Resource Integration: Unified configuration and management of dozens of AI resources by company administrators, ready for use by team members.

- Permission Control: Clearly defined member permissions, resource permissions, and knowledge base permissions, all controlled via a corporate-grade Admin Panel.

- Knowledge Integration: Combining your internal knowledge base with AI capabilities, making it more relevant to your company's specific business needs compared to general AI.

- Security Auditing: Automatically intercept sensitive inquiries and trace all historical conversation records, ensuring AI adherence to corporate information security standards.

- Private Deployment: Enterprise-level private deployment supporting various mainstream private cloud solutions, ensuring data security and privacy protection.

- Continuous Updates: Ongoing updates and upgrades in cutting-edge capabilities like multimodal AI, ensuring consistent innovation and advancement.

For enterprise inquiries, please contact: [email protected]

- Deploy for free with one-click on Vercel in under 1 minute

- Compact client (~5MB) on Linux/Windows/MacOS, download it now

- Fully compatible with self-deployed LLMs, recommended for use with RWKV-Runner or LocalAI

- Privacy first, all data is stored locally in the browser

- Markdown support: LaTex, mermaid, code highlight, etc.

- Responsive design, dark mode and PWA

- Fast first screen loading speed (~100kb), support streaming response

- New in v2: create, share and debug your chat tools with prompt templates (mask)

- Awesome prompts powered by awesome-chatgpt-prompts-zh and awesome-chatgpt-prompts

- Automatically compresses chat history to support long conversations while also saving your tokens

- I18n: English, 简体中文, 繁体中文, 日本語, Français, Español, Italiano, Türkçe, Deutsch, Tiếng Việt, Русский, Čeština, 한국어, Indonesia

- [x] System Prompt: pin a user defined prompt as system prompt #138

- [x] User Prompt: user can edit and save custom prompts to prompt list

- [x] Prompt Template: create a new chat with pre-defined in-context prompts #993

- [x] Share as image, share to ShareGPT #1741

- [x] Desktop App with tauri

- [x] Self-host Model: Fully compatible with RWKV-Runner, as well as server deployment of LocalAI: llama/gpt4all/rwkv/vicuna/koala/gpt4all-j/cerebras/falcon/dolly etc.

- [x] Artifacts: Easily preview, copy and share generated content/webpages through a separate window #5092

- [x] Plugins: support network search, calculator, any other apis etc. #165 #5353

- [x] Supports Realtime Chat #5672

- [ ] local knowledge base

- 🚀 v2.15.8 Now supports Realtime Chat #5672

- 🚀 v2.15.4 The Application supports using Tauri fetch LLM API, MORE SECURITY! #5379

- 🚀 v2.15.0 Now supports Plugins! Read this: NextChat-Awesome-Plugins

- 🚀 v2.14.0 Now supports Artifacts & SD

- 🚀 v2.10.1 support Google Gemini Pro model.

- 🚀 v2.9.11 you can use azure endpoint now.

- 🚀 v2.8 now we have a client that runs across all platforms!

- 🚀 v2.7 let's share conversations as image, or share to ShareGPT!

- 🚀 v2.0 is released, now you can create prompt templates, turn your ideas into reality! Read this: ChatGPT Prompt Engineering Tips: Zero, One and Few Shot Prompting.

- Get OpenAI API Key;

- Click

, remember that

CODEis your page password; - Enjoy :)

If you have deployed your own project with just one click following the steps above, you may encounter the issue of "Updates Available" constantly showing up. This is because Vercel will create a new project for you by default instead of forking this project, resulting in the inability to detect updates correctly.

We recommend that you follow the steps below to re-deploy:

- Delete the original repository;

- Use the fork button in the upper right corner of the page to fork this project;

- Choose and deploy in Vercel again, please see the detailed tutorial.

If you encounter a failure of Upstream Sync execution, please manually update code.

After forking the project, due to the limitations imposed by GitHub, you need to manually enable Workflows and Upstream Sync Action on the Actions page of the forked project. Once enabled, automatic updates will be scheduled every hour:

If you want to update instantly, you can check out the GitHub documentation to learn how to synchronize a forked project with upstream code.

You can star or watch this project or follow author to get release notifications in time.

This project provides limited access control. Please add an environment variable named CODE on the vercel environment variables page. The value should be passwords separated by comma like this:

code1,code2,code3

After adding or modifying this environment variable, please redeploy the project for the changes to take effect.

Access password, separated by comma.

Your openai api key, join multiple api keys with comma.

Default:

https://api.openai.com

Examples:

http://your-openai-proxy.com

Override openai api request base url.

Specify OpenAI organization ID.

Example: https://{azure-resource-url}/openai

Azure deploy url.

Azure Api Key.

Azure Api Version, find it at Azure Documentation.

Google Gemini Pro Api Key.

Google Gemini Pro Api Url.

anthropic claude Api Key.

anthropic claude Api version.

anthropic claude Api Url.

Baidu Api Key.

Baidu Secret Key.

Baidu Api Url.

ByteDance Api Key.

ByteDance Api Url.

Alibaba Cloud Api Key.

Alibaba Cloud Api Url.

iflytek Api Url.

iflytek Api Key.

iflytek Api Secret.

ChatGLM Api Key.

ChatGLM Api Url.

DeepSeek Api Key.

DeepSeek Api Url.

Default: Empty

If you do not want users to input their own API key, set this value to 1.

Default: Empty

If you do not want users to use GPT-4, set this value to 1.

Default: Empty

If you do want users to query balance, set this value to 1.

Default: Empty

If you want to disable parse settings from url, set this to 1.

Default: Empty Example:

+llama,+claude-2,-gpt-3.5-turbo,gpt-4-1106-preview=gpt-4-turbomeans addllama, claude-2to model list, and removegpt-3.5-turbofrom list, and displaygpt-4-1106-previewasgpt-4-turbo.

To control custom models, use + to add a custom model, use - to hide a model, use name=displayName to customize model name, separated by comma.

User -all to disable all default models, +all to enable all default models.

For Azure: use modelName@Azure=deploymentName to customize model name and deployment name.

Example:

+gpt-3.5-turbo@Azure=gpt35will show optiongpt35(Azure)in model list. If you only can use Azure model,-all,+gpt-3.5-turbo@Azure=gpt35willgpt35(Azure)the only option in model list.

For ByteDance: use modelName@bytedance=deploymentName to customize model name and deployment name.

Example:

+Doubao-lite-4k@bytedance=ep-xxxxx-xxxwill show optionDoubao-lite-4k(ByteDance)in model list.

Change default model

Default: Empty Example:

gpt-4-vision,claude-3-opus,my-custom-modelmeans add vision capabilities to these models in addition to the default pattern matches (which detect models containing keywords like "vision", "claude-3", "gemini-1.5", etc).

Add additional models to have vision capabilities, beyond the default pattern matching. Multiple models should be separated by commas.

You can use this option if you want to increase the number of webdav service addresses you are allowed to access, as required by the format:

- Each address must be a complete endpoint

https://xxxx/yyy

- Multiple addresses are connected by ', '

Customize the default template used to initialize the User Input Preprocessing configuration item in Settings.

Stability API key.

Customize Stability API url.

NodeJS >= 18, Docker >= 20

Before starting development, you must create a new .env.local file at project root, and place your api key into it:

OPENAI_API_KEY=<your api key here>

# if you are not able to access openai service, use this BASE_URL

BASE_URL=https://chatgpt1.nextweb.fun/api/proxy

# 1. install nodejs and yarn first

# 2. config local env vars in `.env.local`

# 3. run

yarn install

yarn devdocker pull yidadaa/chatgpt-next-web

docker run -d -p 3000:3000 \

-e OPENAI_API_KEY=sk-xxxx \

-e CODE=your-password \

yidadaa/chatgpt-next-webYou can start service behind a proxy:

docker run -d -p 3000:3000 \

-e OPENAI_API_KEY=sk-xxxx \

-e CODE=your-password \

-e PROXY_URL=http://localhost:7890 \

yidadaa/chatgpt-next-webIf your proxy needs password, use:

-e PROXY_URL="http://127.0.0.1:7890 user pass"bash <(curl -s https://raw.githubusercontent.com/Yidadaa/ChatGPT-Next-Web/main/scripts/setup.sh)| 简体中文 | English | Italiano | 日本語 | 한국어

Please go to the [docs][./docs] directory for more documentation instructions.

- Deploy with cloudflare (Deprecated)

- Frequent Ask Questions

- How to add a new translation

- How to use Vercel (No English)

- User Manual (Only Chinese, WIP)

If you want to add a new translation, read this document.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for NextChat

Similar Open Source Tools

NextChat

NextChat is a well-designed cross-platform ChatGPT web UI tool that supports Claude, GPT4, and Gemini Pro. It offers a compact client for Linux, Windows, and MacOS, with features like self-deployed LLMs compatibility, privacy-first data storage, markdown support, responsive design, and fast loading speed. Users can create, share, and debug chat tools with prompt templates, access various prompts, compress chat history, and use multiple languages. The tool also supports enterprise-level privatization and customization deployment, with features like brand customization, resource integration, permission control, knowledge integration, security auditing, private deployment, and continuous updates.

ChatGPT-Next-Web

ChatGPT Next Web is a well-designed cross-platform ChatGPT web UI tool that supports Claude, GPT4, and Gemini Pro models. It allows users to deploy their private ChatGPT applications with ease. The tool offers features like one-click deployment, compact client for Linux/Windows/MacOS, compatibility with self-deployed LLMs, privacy-first approach with local data storage, markdown support, responsive design, fast loading speed, prompt templates, awesome prompts, chat history compression, multilingual support, and more.

TalkWithGemini

Talk With Gemini is a web application that allows users to deploy their private Gemini application for free with one click. It supports Gemini Pro and Gemini Pro Vision models. The application features talk mode for direct communication with Gemini, visual recognition for understanding picture content, full Markdown support, automatic compression of chat records, privacy and security with local data storage, well-designed UI with responsive design, fast loading speed, and multi-language support. The tool is designed to be user-friendly and versatile for various deployment options and language preferences.

gemini-next-chat

Gemini Next Chat is an open-source, extensible high-performance Gemini chatbot framework that supports one-click free deployment of private Gemini web applications. It provides a simple interface with image recognition and voice conversation, supports multi-modal models, talk mode, visual recognition, assistant market, support plugins, conversation list, full Markdown support, privacy and security, PWA support, well-designed UI, fast loading speed, static deployment, and multi-language support.

obsei

Obsei is an open-source, low-code, AI powered automation tool that consists of an Observer to collect unstructured data from various sources, an Analyzer to analyze the collected data with various AI tasks, and an Informer to send analyzed data to various destinations. The tool is suitable for scheduled jobs or serverless applications as all Observers can store their state in databases. Obsei is still in alpha stage, so caution is advised when using it in production. The tool can be used for social listening, alerting/notification, automatic customer issue creation, extraction of deeper insights from feedbacks, market research, dataset creation for various AI tasks, and more based on creativity.

catai

CatAI is a tool that allows users to run GGUF models on their computer with a chat UI. It serves as a local AI assistant inspired by Node-Llama-Cpp and Llama.cpp. The tool provides features such as auto-detecting programming language, showing original messages by clicking on user icons, real-time text streaming, and fast model downloads. Users can interact with the tool through a CLI that supports commands for installing, listing, setting, serving, updating, and removing models. CatAI is cross-platform and supports Windows, Linux, and Mac. It utilizes node-llama-cpp and offers a simple API for asking model questions. Additionally, developers can integrate the tool with node-llama-cpp@beta for model management and chatting. The configuration can be edited via the web UI, and contributions to the project are welcome. The tool is licensed under Llama.cpp's license.

plexe

Plexe is a tool that allows users to create machine learning models by describing them in plain language. Users can explain their requirements, provide a dataset, and the AI-powered system will build a fully functional model through an automated agentic approach. It supports multiple AI agents and model building frameworks like XGBoost, CatBoost, and Keras. Plexe also provides Docker images with pre-configured environments, YAML configuration for customization, and support for multiple LiteLLM providers. Users can visualize experiment results using the built-in Streamlit dashboard and extend Plexe's functionality through custom integrations.

ragflow

RAGFlow is an open-source Retrieval-Augmented Generation (RAG) engine that combines deep document understanding with Large Language Models (LLMs) to provide accurate question-answering capabilities. It offers a streamlined RAG workflow for businesses of all sizes, enabling them to extract knowledge from unstructured data in various formats, including Word documents, slides, Excel files, images, and more. RAGFlow's key features include deep document understanding, template-based chunking, grounded citations with reduced hallucinations, compatibility with heterogeneous data sources, and an automated and effortless RAG workflow. It supports multiple recall paired with fused re-ranking, configurable LLMs and embedding models, and intuitive APIs for seamless integration with business applications.

browser-use

Browser Use is a tool designed to make websites accessible for AI agents. It provides an easy way to connect AI agents with the browser, enabling users to perform tasks such as extracting vision and HTML elements, managing multiple tabs, and executing custom actions. The tool supports various language models and allows users to parallelize multiple agents for efficient processing. With features like self-correction and the ability to register custom actions, Browser Use offers a versatile solution for interacting with web content using AI technology.

genius-ai

Genius is a modern Next.js 14 SaaS AI platform that provides a comprehensive folder structure for app development. It offers features like authentication, dashboard management, landing pages, API integration, and more. The platform is built using React JS, Next JS, TypeScript, Tailwind CSS, and integrates with services like Netlify, Prisma, MySQL, and Stripe. Genius enables users to create AI-powered applications with functionalities such as conversation generation, image processing, code generation, and more. It also includes features like Clerk authentication, OpenAI integration, Replicate API usage, Aiven database connectivity, and Stripe API/webhook setup. The platform is fully configurable and provides a seamless development experience for building AI-driven applications.

fragments

Fragments is an open-source tool that leverages Anthropic's Claude Artifacts, Vercel v0, and GPT Engineer. It is powered by E2B Sandbox SDK and Code Interpreter SDK, allowing secure execution of AI-generated code. The tool is based on Next.js 14, shadcn/ui, TailwindCSS, and Vercel AI SDK. Users can stream in the UI, install packages from npm and pip, and add custom stacks and LLM providers. Fragments enables users to build web apps with Python interpreter, Next.js, Vue.js, Streamlit, and Gradio, utilizing providers like OpenAI, Anthropic, Google AI, and more.

Flowise

Flowise is a tool that allows users to build customized LLM flows with a drag-and-drop UI. It is open-source and self-hostable, and it supports various deployments, including AWS, Azure, Digital Ocean, GCP, Railway, Render, HuggingFace Spaces, Elestio, Sealos, and RepoCloud. Flowise has three different modules in a single mono repository: server, ui, and components. The server module is a Node backend that serves API logics, the ui module is a React frontend, and the components module contains third-party node integrations. Flowise supports different environment variables to configure your instance, and you can specify these variables in the .env file inside the packages/server folder.

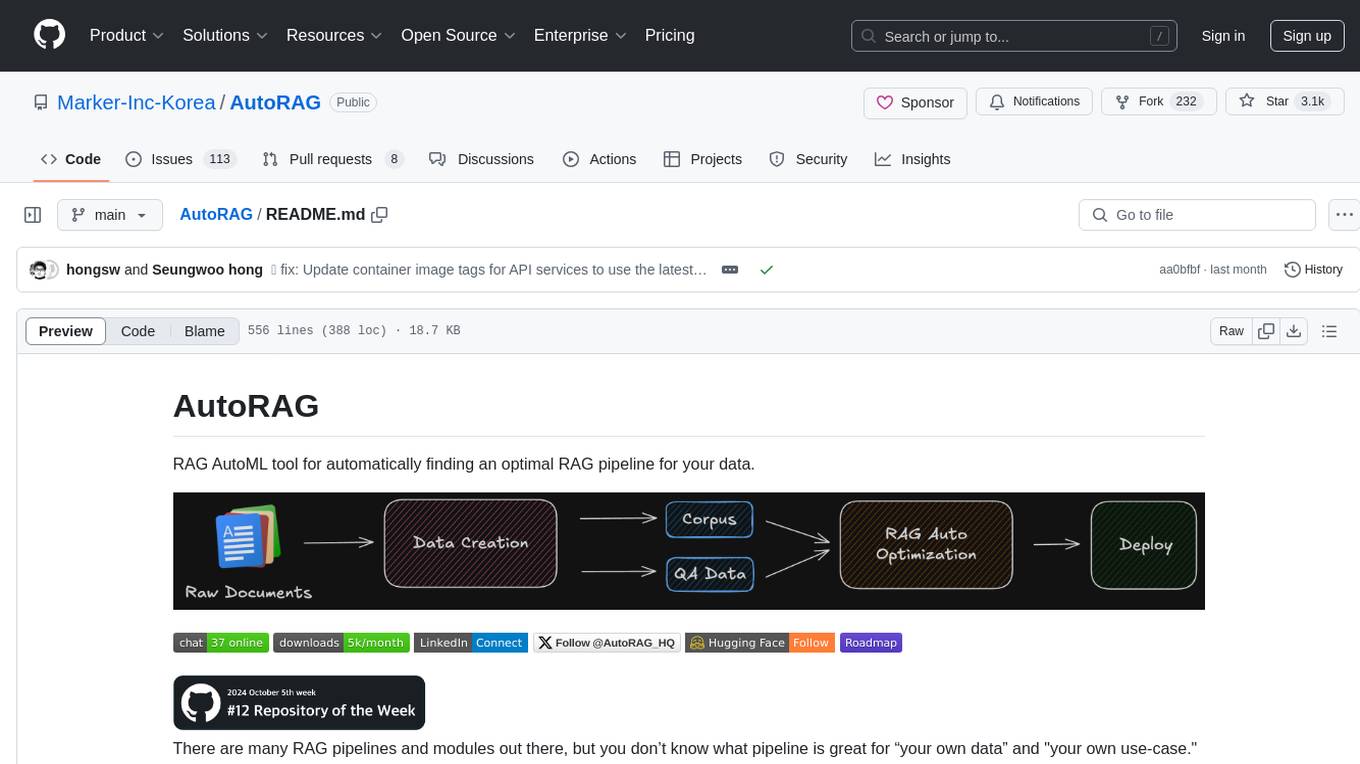

AutoRAG

AutoRAG is an AutoML tool designed to automatically find the optimal RAG pipeline for your data. It simplifies the process of evaluating various RAG modules to identify the best pipeline for your specific use-case. The tool supports easy evaluation of different module combinations, making it efficient to find the most suitable RAG pipeline for your needs. AutoRAG also offers a cloud beta version to assist users in running and optimizing the tool, along with building RAG evaluation datasets for a starting price of $9.99 per optimization.

gpt-translate

Markdown Translation BOT is a GitHub action that translates markdown files into multiple languages using various AI models. It supports markdown, markdown-jsx, and json files only. The action can be executed by individuals with write permissions to the repository, preventing API abuse by non-trusted parties. Users can set up the action by providing their API key and configuring the workflow settings. The tool allows users to create comments with specific commands to trigger translations and automatically generate pull requests or add translated files to existing pull requests. It supports multiple file translations and can interpret any language supported by GPT-4 or GPT-3.5.

Kord-Ai

Kord-Ai is a WhatsApp bot designed to automate interactions on WhatsApp by executing predefined commands or responding to user inputs. It can handle tasks like sending messages, sharing media, and managing group activities, providing convenience and efficiency for users and businesses. The bot offers features for deployment on various platforms, including Heroku, Replit, Koyeb, Glitch, Codespace, Render, Railway, VPS, and PC. Users can deploy the bot by obtaining a session ID, forking the repository, setting configurations in the Config.js file, and starting/stopping the bot using npm commands. It is important to note that Kord-Ai is a bot created by M3264, not affiliated with WhatsApp, and users should be cautious in its usage.

DB-GPT

DB-GPT is a personal database administrator that can solve database problems by reading documents, using various tools, and writing analysis reports. It is currently undergoing an upgrade. **Features:** * **Online Demo:** * Import documents into the knowledge base * Utilize the knowledge base for well-founded Q&A and diagnosis analysis of abnormal alarms * Send feedbacks to refine the intermediate diagnosis results * Edit the diagnosis result * Browse all historical diagnosis results, used metrics, and detailed diagnosis processes * **Language Support:** * English (default) * Chinese (add "language: zh" in config.yaml) * **New Frontend:** * Knowledgebase + Chat Q&A + Diagnosis + Report Replay * **Extreme Speed Version for localized llms:** * 4-bit quantized LLM (reducing inference time by 1/3) * vllm for fast inference (qwen) * Tiny LLM * **Multi-path extraction of document knowledge:** * Vector database (ChromaDB) * RESTful Search Engine (Elasticsearch) * **Expert prompt generation using document knowledge** * **Upgrade the LLM-based diagnosis mechanism:** * Task Dispatching -> Concurrent Diagnosis -> Cross Review -> Report Generation * Synchronous Concurrency Mechanism during LLM inference * **Support monitoring and optimization tools in multiple levels:** * Monitoring metrics (Prometheus) * Flame graph in code level * Diagnosis knowledge retrieval (dbmind) * Logical query transformations (Calcite) * Index optimization algorithms (for PostgreSQL) * Physical operator hints (for PostgreSQL) * Backup and Point-in-time Recovery (Pigsty) * **Continuously updated papers and experimental reports** This project is constantly evolving with new features. Don't forget to star ⭐ and watch 👀 to stay up to date.

For similar tasks

NextChat

NextChat is a well-designed cross-platform ChatGPT web UI tool that supports Claude, GPT4, and Gemini Pro. It offers a compact client for Linux, Windows, and MacOS, with features like self-deployed LLMs compatibility, privacy-first data storage, markdown support, responsive design, and fast loading speed. Users can create, share, and debug chat tools with prompt templates, access various prompts, compress chat history, and use multiple languages. The tool also supports enterprise-level privatization and customization deployment, with features like brand customization, resource integration, permission control, knowledge integration, security auditing, private deployment, and continuous updates.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.