ChatGPT-Next-Web

A cross-platform ChatGPT/Gemini UI (Web / PWA / Linux / Win / MacOS). Own your cross-platform ChatGPT/Gemini/Claude LLM app with one click.

Stars: 78500

ChatGPT Next Web is a well-designed cross-platform ChatGPT web UI tool that supports Claude, GPT4, and Gemini Pro models. It allows users to deploy their private ChatGPT applications with ease. The tool offers features like one-click deployment, compact client for Linux/Windows/MacOS, compatibility with self-deployed LLMs, privacy-first approach with local data storage, markdown support, responsive design, fast loading speed, prompt templates, awesome prompts, chat history compression, multilingual support, and more.

README:

One-Click to get a well-designed cross-platform ChatGPT web UI, with Claude, GPT4 & Gemini Pro support.

Deploy your private cross-platform ChatGPT application for free with one click, with support for Claude, GPT4 & Gemini Pro models.

NextChatAI / Web App Demo / Desktop App / Discord / Enterprise Edition / Twitter

NextChatAI / Self-Hosted Web App / Desktop Client / Enterprise Edition / Feedback

Meeting Your Company's Privatization and Customization Deployment Requirements:

- Brand Customization: Tailored VI/UI to seamlessly align with your corporate brand image.

- Resource Integration: Unified configuration and management of dozens of AI resources by company administrators, ready for use by team members.

- Permission Control: Clearly defined member permissions, resource permissions, and knowledge base permissions, all controlled via a corporate-grade Admin Panel.

- Knowledge Integration: Combining your internal knowledge base with AI capabilities, making it more relevant to your company's specific business needs compared to general AI.

- Security Auditing: Automatically intercept sensitive inquiries and trace all historical conversation records, ensuring AI adherence to corporate information security standards.

- Private Deployment: Enterprise-level private deployment supporting various mainstream private cloud solutions, ensuring data security and privacy protection.

- Continuous Updates: Ongoing updates and upgrades in cutting-edge capabilities like multimodal AI, ensuring consistent innovation and advancement.

For enterprise inquiries, please contact: [email protected]

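Meeting enterprise users' private deployment and customization needs:

- Brand Customization: VI/UI tailored to the enterprise, seamlessly matching its brand image

- Resource Integration: Enterprise administrators centrally configure and manage dozens of AI resources, ready for team members to use out of the box

- Permission Management: Clearly tiered member, resource, and knowledge base permissions, all controlled through an enterprise-grade Admin Panel

- Knowledge Integration: Combines the enterprise's internal knowledge base with AI capabilities, fitting its business needs more closely than general-purpose AI

- Security Auditing: Automatically intercepts sensitive questions and supports tracing all historical conversation records, so that AI also follows enterprise information security standards

- Private Deployment: Enterprise-grade private deployment supporting mainstream private cloud platforms, ensuring data security and privacy protection

- Continuous Updates: Ongoing updates and upgrades for cutting-edge capabilities such as multimodal AI and agents, keeping the product current

Enterprise edition inquiries: [email protected]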

- Deploy for free with one-click on Vercel in under 1 minute

- Compact client (~5MB) on Linux/Windows/MacOS, download it now

- Fully compatible with self-deployed LLMs, recommended for use with RWKV-Runner or LocalAI

- Privacy first, all data is stored locally in the browser

- Markdown support: LaTeX, mermaid, code highlight, etc.

- Responsive design, dark mode and PWA

- Fast first screen loading speed (~100kb), with streaming response support

- New in v2: create, share and debug your chat tools with prompt templates (mask)

- Awesome prompts powered by awesome-chatgpt-prompts-zh and awesome-chatgpt-prompts

- Automatically compresses chat history to support long conversations while also saving your tokens

- I18n: English, 简体中文, 繁体中文, 日本語, Français, Español, Italiano, Türkçe, Deutsch, Tiếng Việt, Русский, Čeština, 한국어, Indonesia

- [x] System Prompt: pin a user defined prompt as system prompt #138

- [x] User Prompt: user can edit and save custom prompts to prompt list

- [x] Prompt Template: create a new chat with pre-defined in-context prompts #993

- [x] Share as image, share to ShareGPT #1741

- [x] Desktop App with tauri

- [x] Self-host Model: Fully compatible with RWKV-Runner, as well as server deployment of LocalAI: llama/gpt4all/rwkv/vicuna/koala/gpt4all-j/cerebras/falcon/dolly etc.

- [x] Artifacts: Easily preview, copy and share generated content/webpages through a separate window #5092

- [x] Plugins: support network search, calculator, any other apis etc. #165 #5353

- [x] Supports Realtime Chat #5672

- [ ] local knowledge base

- 🚀 v2.15.8 Now supports Realtime Chat #5672

- 🚀 v2.15.4 The application supports using Tauri fetch to call LLM APIs, for more security! #5379

- 🚀 v2.15.0 Now supports Plugins! Read this: NextChat-Awesome-Plugins

- 🚀 v2.14.0 Now supports Artifacts & SD

- 🚀 v2.10.1 support Google Gemini Pro model.

- 🚀 v2.9.11 you can use azure endpoint now.

- 🚀 v2.8 now we have a client that runs across all platforms!

- 🚀 v2.7 let's share conversations as image, or share to ShareGPT!

- 🚀 v2.0 is released, now you can create prompt templates, turn your ideas into reality! Read this: ChatGPT Prompt Engineering Tips: Zero, One and Few Shot Prompting.

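- Deploy for free with one click on Vercel in under 1 minute

- Compact cross-platform client (~5MB) for Linux/Windows/MacOS, download link

- Full Markdown support: LaTeX formulas, Mermaid flowcharts, code highlighting, and more

- Carefully designed UI with responsive design, dark mode, and PWA support

- Very fast first screen loading speed (~100kb), with streaming response support

- Privacy and security: all data is stored locally in the user's browser

- Preset roles (masks) for conveniently creating, sharing, and debugging your personalized conversations

- A large built-in prompt list, sourced from Chinese and English collections

- Automatically compresses chat history context, supporting very long conversations while saving tokens

- Multilingual support: English, 简体中文, 繁体中文, 日本語, Español, Italiano, Türkçe, Deutsch, Tiếng Việt, Русский, Čeština, 한국어, Indonesia

- Have your own domain? Even better: once it is bound, you can access the app quickly from anywhere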

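- [x] Set a system prompt for each conversation #138

- [x] Allow users to edit the built-in prompt list

- [x] Preset roles: use preset roles to quickly customize new conversations #993

- [x] Share as image, share to a ShareGPT link #1741

- [x] Package the desktop app with tauri

- [x] Support self-deployed large language models: works out of the box with RWKV-Runner, server deployment of LocalAI (llama / gpt4all / rwkv / vicuna / koala / gpt4all-j / cerebras / falcon / dolly, etc.), or use api-for-open-llm

- [x] Artifacts: easily preview, copy, and share generated content/interactive webpages through a separate window #5092

- [x] Plugin mechanism: supports web search, calculator, and calling other platforms' APIs #165 #5353

- [x] Supports Realtime Chat #5672

- [ ] Local knowledge base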

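- 🚀 v2.15.8 now supports Realtime Chat #5672

- 🚀 v2.15.4 the client supports calling LLM APIs directly and locally through Tauri, which is more secure! #5379

- 🚀 v2.15.0 now supports plugins! Learn more: NextChat-Awesome-Plugins

- 🚀 v2.14.0 now supports Artifacts & SD.

- 🚀 v2.10.1 now supports the Gemini Pro model.

- 🚀 v2.9.11 you can now use a custom Azure service.

- 🚀 v2.8 released a compact client that runs across Linux/Windows/MacOS.

- 🚀 v2.7 you can now share conversations as images, or share them to a ShareGPT online link.

- 🚀 v2.0 is released; you can now use the mask feature to quickly create preset conversations! Learn more: ChatGPT Prompt Engineering Tips: Zero, One and Few Shot Prompting.

- 💡 Want to use this project more conveniently, anytime and anywhere? Try this desktop plugin: https://github.com/mushan0x0/AI0x0.com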

- Get OpenAI API Key;

- Click the deploy button, remember that CODE is your page password;

- Enjoy :)

If you have deployed your own project with just one click following the steps above, you may encounter the issue of "Updates Available" constantly showing up. This is because Vercel will create a new project for you by default instead of forking this project, resulting in the inability to detect updates correctly.

We recommend that you follow the steps below to re-deploy:

- Delete the original repository;

- Use the fork button in the upper right corner of the page to fork this project;

- Choose and deploy in Vercel again, please see the detailed tutorial.

If you encounter a failure of Upstream Sync execution, please manually update code.

After forking the project, due to the limitations imposed by GitHub, you need to manually enable Workflows and Upstream Sync Action on the Actions page of the forked project. Once enabled, automatic updates will be scheduled every hour:

If you want to update instantly, you can check out the GitHub documentation to learn how to synchronize a forked project with upstream code.

You can star or watch this project, or follow the author, to get release notifications in time.

This project provides limited access control. Please add an environment variable named CODE on the Vercel environment variables page. The value should be one or more passwords separated by commas, like this:

code1,code2,code3

After adding or modifying this environment variable, please redeploy the project for the changes to take effect.
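As a rough illustration of the comma-separated CODE format described above, the value could be split and checked as follows. This is a minimal sketch, not the project's actual access-control code; the function names `parse_access_codes` and `is_allowed` are hypothetical.

```python
import hmac


def parse_access_codes(raw: str) -> set[str]:
    """Split a comma-separated CODE value into a set of non-empty passwords."""
    return {part.strip() for part in raw.split(",") if part.strip()}


def is_allowed(candidate: str, codes: set[str]) -> bool:
    """Return True if the candidate matches any configured password."""
    # compare_digest keeps the comparison time independent of where strings differ.
    return any(
        hmac.compare_digest(candidate.encode(), code.encode()) for code in codes
    )


# Example using the value format shown above.
codes = parse_access_codes("code1,code2,code3")
```

With this sketch, `is_allowed("code2", codes)` accepts a configured password, and any value not in the list is rejected.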

Access passwords, separated by commas.

Your OpenAI API key; join multiple API keys with commas.
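The text above only says that multiple API keys are joined with commas; how the project chooses among them is not stated here. The sketch below assumes a simple round-robin rotation purely for illustration, and the names `split_keys` and `key_rotation` are hypothetical.

```python
import itertools
from typing import Iterator


def split_keys(raw: str) -> list[str]:
    """Split a comma-joined key string into individual, trimmed keys."""
    return [key.strip() for key in raw.split(",") if key.strip()]


def key_rotation(raw: str) -> Iterator[str]:
    """Yield keys in round-robin order (an assumed selection strategy)."""
    keys = split_keys(raw)
    if not keys:
        raise ValueError("no API keys configured")
    return itertools.cycle(keys)


# Placeholder keys, not real credentials.
rotation = key_rotation("sk-aaaa, sk-bbbb")
first_key = next(rotation)
```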

Default:

https://api.openai.com

Examples:

http://your-openai-proxy.com

Override the OpenAI API request base URL.

Specify the OpenAI organization ID.

Example: https://{azure-resource-url}/openai

Azure deployment URL.

Azure API key.

Azure API version; find it in the Azure documentation.

Google Gemini Pro API key.

Google Gemini Pro API URL.

Anthropic Claude API key.

Anthropic Claude API version.

Anthropic Claude API URL.

Baidu API key.

Baidu secret key.

Baidu API URL.

ByteDance API key.

ByteDance API URL.

Alibaba Cloud API key.

Alibaba Cloud API URL.

iFlytek API URL.

iFlytek API key.

iFlytek API secret.

ChatGLM API key.

ChatGLM API URL.

DeepSeek API key.

DeepSeek API URL.

Default: Empty

If you do not want users to input their own API key, set this value to 1.

Default: Empty

If you do not want users to use GPT-4, set this value to 1.

Default: Empty

If you do want users to query balance, set this value to 1.

Default: Empty

If you want to disable parse settings from url, set this to 1.

Default: Empty Example:

`+llama,+claude-2,-gpt-3.5-turbo,gpt-4-1106-preview=gpt-4-turbo` means add `llama` and `claude-2` to the model list, remove `gpt-3.5-turbo` from the list, and display `gpt-4-1106-preview` as `gpt-4-turbo`.

To control custom models, use + to add a custom model, use - to hide a model, use name=displayName to customize model name, separated by comma.

Use -all to disable all default models, +all to enable all default models.
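The rules above (+ to add, - to hide, name=displayName to rename, -all and +all for the defaults) can be sketched as a small parser. This is an illustrative reading of the syntax, not the project's implementation; `apply_custom_models` and the sample default list are assumptions.

```python
def apply_custom_models(defaults: list[str], spec: str) -> dict[str, str]:
    """Return a mapping of visible model name -> display name."""
    models = {name: name for name in defaults}
    for raw in spec.split(","):
        entry = raw.strip()
        if not entry:
            continue
        if entry == "-all":
            models.clear()  # hide every default model
            continue
        if entry == "+all":
            for name in defaults:  # re-enable every default model
                models.setdefault(name, name)
            continue
        hide = entry.startswith("-")
        body = entry[1:] if entry[0] in "+-" else entry
        name, _, display = body.partition("=")
        if hide:
            models.pop(name, None)
        else:
            models[name] = display or name
    return models


# Hypothetical default list, used only for this example.
result = apply_custom_models(
    ["gpt-3.5-turbo", "gpt-4-1106-preview"],
    "+llama,+claude-2,-gpt-3.5-turbo,gpt-4-1106-preview=gpt-4-turbo",
)
```

Here `result` contains `llama`, `claude-2`, and `gpt-4-1106-preview` (displayed as `gpt-4-turbo`), while `gpt-3.5-turbo` is hidden.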

For Azure: use modelName@Azure=deploymentName to customize model name and deployment name.

Example:

`+gpt-3.5-turbo@Azure=gpt35` will show the option `gpt35(Azure)` in the model list. If you can only use Azure models, `-all,+gpt-3.5-turbo@Azure=gpt35` will make `gpt35(Azure)` the only option in the model list.

For ByteDance: use modelName@bytedance=deploymentName to customize model name and deployment name.

Example:

`+Doubao-lite-4k@bytedance=ep-xxxxx-xxx` will show the option `Doubao-lite-4k(ByteDance)` in the model list.
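The provider-qualified form `modelName@provider=deploymentName` used for Azure and ByteDance can be split into its three parts as sketched below. The `ModelEntry` type and `parse_provider_entry` function are hypothetical names for illustration; how the project derives the label shown in the model list is not covered here.

```python
from typing import NamedTuple, Optional


class ModelEntry(NamedTuple):
    model: str
    provider: Optional[str]
    deployment: Optional[str]


def parse_provider_entry(entry: str) -> ModelEntry:
    """Split '+model@provider=deployment' into its components."""
    body = entry.strip()
    if body[:1] in ("+", "-"):
        body = body[1:]  # drop the add/hide marker
    left, _, deployment = body.partition("=")
    model, _, provider = left.partition("@")
    return ModelEntry(model, provider or None, deployment or None)


azure = parse_provider_entry("+gpt-3.5-turbo@Azure=gpt35")
doubao = parse_provider_entry("+Doubao-lite-4k@bytedance=ep-xxxxx-xxx")
```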

Change default model

Default: Empty Example:

`gpt-4-vision,claude-3-opus,my-custom-model` means add vision capabilities to these models in addition to the default pattern matches (which detect models containing keywords like "vision", "claude-3", "gemini-1.5", etc.).

Add additional models to have vision capabilities, beyond the default pattern matching. Multiple models should be separated by commas.
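The combination of default keyword matching and an explicit extra list can be pictured as follows. This is a sketch under assumptions: the keyword tuple contains only the three keywords named above (the real default list is described as longer), and `is_vision_model` is a hypothetical helper.

```python
# Only the keywords mentioned in the text; the project's full list is not shown here.
DEFAULT_VISION_KEYWORDS = ("vision", "claude-3", "gemini-1.5")


def is_vision_model(model: str, extra_models: str = "") -> bool:
    """Return True if the model is listed explicitly or matches a default keyword."""
    extras = {m.strip() for m in extra_models.split(",") if m.strip()}
    if model in extras:
        return True
    return any(keyword in model for keyword in DEFAULT_VISION_KEYWORDS)


extra = "gpt-4-vision,claude-3-opus,my-custom-model"
custom_has_vision = is_vision_model("my-custom-model", extra)
```

In this sketch `my-custom-model` only gains vision support because it appears in the extra list, while a name such as `gemini-1.5-pro` matches a default keyword without any configuration.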

Use this option to increase the number of WebDAV service addresses you are allowed to access. The required format is:

- Each address must be a complete endpoint

https://xxxx/yyy

- Multiple addresses are connected by ', '
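The format rules above (complete endpoints, several of them joined with commas) could be validated along these lines. This is a minimal sketch; `parse_webdav_endpoints` is a hypothetical helper, and accepting both http and https schemes is an assumption.

```python
from urllib.parse import urlsplit


def parse_webdav_endpoints(raw: str) -> list[str]:
    """Split a comma-joined list and require each item to be a full URL."""
    endpoints = []
    for part in raw.split(","):
        url = part.strip()
        if not url:
            continue
        pieces = urlsplit(url)
        # A complete endpoint needs both a scheme and a host.
        if pieces.scheme not in ("http", "https") or not pieces.netloc:
            raise ValueError(f"not a complete endpoint: {url!r}")
        endpoints.append(url)
    return endpoints


# Placeholder hosts for illustration only.
allowed = parse_webdav_endpoints("https://dav.example.com/files, https://nas.example.org/webdav")
```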

Customize the default template used to initialize the User Input Preprocessing configuration item in Settings.

Stability API key.

Customize Stability API url.

NodeJS >= 18, Docker >= 20

Before starting development, you must create a new .env.local file at project root, and place your api key into it:

OPENAI_API_KEY=<your api key here>

# if you are not able to access openai service, use this BASE_URL

BASE_URL=https://chatgpt1.nextweb.fun/api/proxy
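Next.js loads `.env.local` on its own, so no parsing code is needed in practice. Purely to illustrate the file format shown above (KEY=VALUE lines, with # starting a comment line), here is a minimal sketch; `parse_env` is a hypothetical helper.

```python
def parse_env(text: str) -> dict[str, str]:
    """Parse simple KEY=VALUE lines, skipping blanks and # comments."""
    env = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        key, sep, value = line.partition("=")
        if not sep:
            continue  # ignore lines without an '=' sign
        env[key.strip()] = value.strip()
    return env


sample = """\
OPENAI_API_KEY=<your api key here>
# if you are not able to access openai service, use this BASE_URL
BASE_URL=https://chatgpt1.nextweb.fun/api/proxy
"""
settings = parse_env(sample)
```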

# 1. install nodejs and yarn first

# 2. config local env vars in `.env.local`

# 3. run

yarn install

yarn dev

docker pull yidadaa/chatgpt-next-web

docker run -d -p 3000:3000 \

-e OPENAI_API_KEY=sk-xxxx \

-e CODE=your-password \

yidadaa/chatgpt-next-web

You can start the service behind a proxy:

docker run -d -p 3000:3000 \

-e OPENAI_API_KEY=sk-xxxx \

-e CODE=your-password \

-e PROXY_URL=http://localhost:7890 \

yidadaa/chatgpt-next-web

If your proxy needs a password, use:

-e PROXY_URL="http://127.0.0.1:7890 user pass"

bash <(curl -s https://raw.githubusercontent.com/Yidadaa/ChatGPT-Next-Web/main/scripts/setup.sh)

Please go to the [docs](./docs) directory for more documentation instructions.

- Deploy with cloudflare (Deprecated)

- Frequently Asked Questions

- How to add a new translation

- How to use Vercel (No English)

- User Manual (Only Chinese, WIP)

If you want to add a new translation, read this document.

Only users who donated >= 100 RMB are listed.

@mushan0x0 @ClarenceDan @zhangjia @hoochanlon @relativequantum @desenmeng @webees @chazzhou @hauy @Corwin006 @yankunsong @ypwhs @fxxxchao @hotic @WingCH @jtung4 @micozhu @jhansion @Sha1rholder @AnsonHyq @synwith @piksonGit @ouyangzhiping @wenjiavv @LeXwDeX @Licoy @shangmin2009

Alternative AI tools for ChatGPT-Next-Web

Similar Open Source Tools

NextChat

NextChat is a well-designed cross-platform ChatGPT web UI tool that supports Claude, GPT4, and Gemini Pro. It offers a compact client for Linux, Windows, and MacOS, with features like self-deployed LLMs compatibility, privacy-first data storage, markdown support, responsive design, and fast loading speed. Users can create, share, and debug chat tools with prompt templates, access various prompts, compress chat history, and use multiple languages. The tool also supports enterprise-level privatization and customization deployment, with features like brand customization, resource integration, permission control, knowledge integration, security auditing, private deployment, and continuous updates.

TalkWithGemini

Talk With Gemini is a web application that allows users to deploy their private Gemini application for free with one click. It supports Gemini Pro and Gemini Pro Vision models. The application features talk mode for direct communication with Gemini, visual recognition for understanding picture content, full Markdown support, automatic compression of chat records, privacy and security with local data storage, well-designed UI with responsive design, fast loading speed, and multi-language support. The tool is designed to be user-friendly and versatile for various deployment options and language preferences.

Free-GPT4-WEB-API

FreeGPT4-WEB-API is a Python server that allows you to have a self-hosted GPT-4 Unlimited and Free WEB API, via the latest Bing's AI. It uses Flask and GPT4Free libraries. GPT4Free provides an interface to the Bing's GPT-4. The server can be configured by editing the `FreeGPT4_Server.py` file. You can change the server's port, host, and other settings. The only cookie needed for the Bing model is `_U`.

obsei

Obsei is an open-source, low-code, AI powered automation tool that consists of an Observer to collect unstructured data from various sources, an Analyzer to analyze the collected data with various AI tasks, and an Informer to send analyzed data to various destinations. The tool is suitable for scheduled jobs or serverless applications as all Observers can store their state in databases. Obsei is still in alpha stage, so caution is advised when using it in production. The tool can be used for social listening, alerting/notification, automatic customer issue creation, extraction of deeper insights from feedbacks, market research, dataset creation for various AI tasks, and more based on creativity.

genius-ai

Genius is a modern Next.js 14 SaaS AI platform that provides a comprehensive folder structure for app development. It offers features like authentication, dashboard management, landing pages, API integration, and more. The platform is built using React JS, Next JS, TypeScript, Tailwind CSS, and integrates with services like Netlify, Prisma, MySQL, and Stripe. Genius enables users to create AI-powered applications with functionalities such as conversation generation, image processing, code generation, and more. It also includes features like Clerk authentication, OpenAI integration, Replicate API usage, Aiven database connectivity, and Stripe API/webhook setup. The platform is fully configurable and provides a seamless development experience for building AI-driven applications.

llama-api-server

This project aims to create a RESTful API server compatible with the OpenAI API using open-source backends like llama/llama2. With this project, various GPT tools/frameworks can be compatible with your own model. Key features include:

- **Compatibility with OpenAI API**: The API server follows the OpenAI API structure, allowing seamless integration with existing tools and frameworks.

- **Support for Multiple Backends**: The server supports both llama.cpp and pyllama backends, providing flexibility in model selection.

- **Customization Options**: Users can configure model parameters such as temperature, top_p, and top_k to fine-tune the model's behavior.

- **Batch Processing**: The API supports batch processing for embeddings, enabling efficient handling of multiple inputs.

- **Token Authentication**: The server utilizes token authentication to secure access to the API.

This tool is particularly useful for developers and researchers who want to integrate large language models into their applications or explore custom models without relying on proprietary APIs.

openchamber

OpenChamber is a web and desktop interface for the OpenCode AI coding agent, designed to work alongside the OpenCode TUI. The project was built entirely with AI coding agents under supervision, serving as a proof of concept that AI agents can create usable software. It offers features like integrated terminal, Git operations with AI commit message generation, smart tool visualization, permission management, multi-agent runs, task tracker UI, model selection UX, UI scaling controls, session auto-cleanup, and memory optimizations. OpenChamber provides cross-device continuity, remote access, and a visual alternative for developers preferring GUI workflows.

retro-aim-server

Retro AIM Server is an instant messaging server that revives AOL Instant Messenger clients from the 2000s. It supports Windows AIM client versions 5.0-5.9, away messages, buddy icons, buddy list, chat rooms, instant messaging, user profiles, blocking/visibility toggle/idle notification, and warning. The Management API provides functionality for administering the server, including listing users, creating users, changing passwords, and listing active sessions.

duolingo-clone

Lingo is an interactive platform for language learning that provides a modern UI/UX experience. It offers features like courses, quests, and a shop for users to engage with. The tech stack includes React JS, Next JS, Typescript, Tailwind CSS, Vercel, and Postgresql. Users can contribute to the project by submitting changes via pull requests. The platform utilizes resources from CodeWithAntonio, Kenney Assets, Freesound, Elevenlabs AI, and Flagpack. Key dependencies include @clerk/nextjs, @neondatabase/serverless, @radix-ui/react-avatar, and more. Users can follow the project creator on GitHub and Twitter, as well as subscribe to their YouTube channel for updates. To learn more about Next.js, users can refer to the Next.js documentation and interactive tutorial.

Lumina-Note

Lumina Note is a local-first AI note-taking app designed to help users write, connect, and evolve knowledge with AI capabilities while ensuring data ownership. It offers a knowledge-centered workflow with features like Markdown editor, WikiLinks, and graph view. The app includes AI workspace modes such as Chat, Agent, Deep Research, and Codex, along with support for multiple model providers. Users can benefit from bidirectional links, LaTeX support, graph visualization, PDF reader with annotations, real-time voice input, and plugin ecosystem for extended functionalities. Lumina Note is built on Tauri v2 framework with a tech stack including React 18, TypeScript, Tailwind CSS, and SQLite for vector storage.

R2R

R2R (RAG to Riches) is a fast and efficient framework for serving high-quality Retrieval-Augmented Generation (RAG) to end users. The framework is designed with customizable pipelines and a feature-rich FastAPI implementation, enabling developers to quickly deploy and scale RAG-based applications. R2R was conceived to bridge the gap between local LLM experimentation and scalable production solutions. **R2R is to LangChain/LlamaIndex what NextJS is to React**. A JavaScript client for R2R deployments can be found here.

Key Features:

- **🚀 Deploy**: Instantly launch production-ready RAG pipelines with streaming capabilities.

- **🧩 Customize**: Tailor your pipeline with intuitive configuration files.

- **🔌 Extend**: Enhance your pipeline with custom code integrations.

- **⚖️ Autoscale**: Scale your pipeline effortlessly in the cloud using SciPhi.

- **🤖 OSS**: Benefit from a framework developed by the open-source community, designed to simplify RAG deployment.

chatgpt-adapter

ChatGPT-Adapter is an interface service that integrates various free services together. It provides a unified interface specification and integrates services like Bing, Claude-2, Gemini. Users can start the service by running the linux-server script and set proxies if needed. The tool offers model lists for different adapters, completion dialogues, authorization methods for different services like Claude, Bing, Gemini, Coze, and Lmsys. Additionally, it provides a free drawing interface with options like coze.dall-e-3, sd.dall-e-3, xl.dall-e-3, pg.dall-e-3 based on user-provided Authorization keys. The tool also supports special flags for enhanced functionality.

Kord-Ai

Kord-Ai is a WhatsApp bot designed to automate interactions on WhatsApp by executing predefined commands or responding to user inputs. It can handle tasks like sending messages, sharing media, and managing group activities, providing convenience and efficiency for users and businesses. The bot offers features for deployment on various platforms, including Heroku, Replit, Koyeb, Glitch, Codespace, Render, Railway, VPS, and PC. Users can deploy the bot by obtaining a session ID, forking the repository, setting configurations in the Config.js file, and starting/stopping the bot using npm commands. It is important to note that Kord-Ai is a bot created by M3264, not affiliated with WhatsApp, and users should be cautious in its usage.

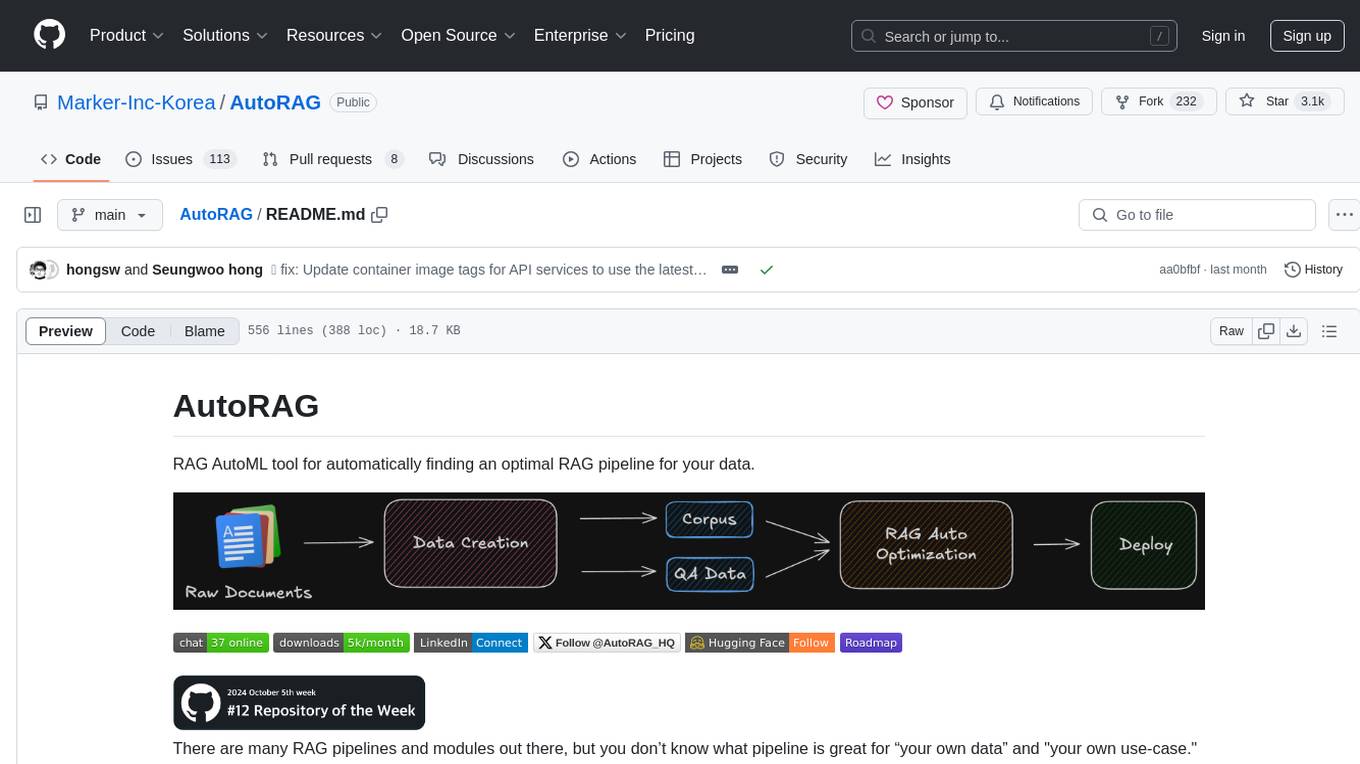

AutoRAG

AutoRAG is an AutoML tool designed to automatically find the optimal RAG pipeline for your data. It simplifies the process of evaluating various RAG modules to identify the best pipeline for your specific use-case. The tool supports easy evaluation of different module combinations, making it efficient to find the most suitable RAG pipeline for your needs. AutoRAG also offers a cloud beta version to assist users in running and optimizing the tool, along with building RAG evaluation datasets for a starting price of $9.99 per optimization.

local-cocoa

Local Cocoa is a privacy-focused tool that runs entirely on your device, turning files into memory to spark insights and power actions. It offers features like fully local privacy, multimodal memory, vector-powered retrieval, intelligent indexing, vision understanding, hardware acceleration, focused user experience, integrated notes, and auto-sync. The tool combines file ingestion, intelligent chunking, and local retrieval to build a private on-device knowledge system. The ultimate goal includes more connectors like Google Drive integration, voice mode for local speech-to-text interaction, and a plugin ecosystem for community tools and agents. Local Cocoa is built using Electron, React, TypeScript, FastAPI, llama.cpp, and Qdrant.

For similar tasks

ChatFAQ

ChatFAQ is an open-source comprehensive platform for creating a wide variety of chatbots: generic ones, business-trained, or even capable of redirecting requests to human operators. It includes a specialized NLP/NLG engine based on a RAG architecture and customized chat widgets, ensuring a tailored experience for users and avoiding vendor lock-in.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

superagent-js

Superagent is an open source framework that enables any developer to integrate production ready AI Assistants into any application in a matter of minutes.

chainlit

Chainlit is an open-source async Python framework which allows developers to build scalable Conversational AI or agentic applications. It enables users to create ChatGPT-like applications, embedded chatbots, custom frontends, and API endpoints. The framework provides features such as multi-modal chats, chain of thought visualization, data persistence, human feedback, and an in-context prompt playground. Chainlit is compatible with various Python programs and libraries, including LangChain, Llama Index, Autogen, OpenAI Assistant, and Haystack. It offers a range of examples and a cookbook to showcase its capabilities and inspire users. Chainlit welcomes contributions and is licensed under the Apache 2.0 license.

neo4j-generative-ai-google-cloud

This repo contains sample applications that show how to use Neo4j with the generative AI capabilities in Google Cloud Vertex AI. We explore how to leverage Google generative AI to build and consume a knowledge graph in Neo4j.

MemGPT

MemGPT is a system that intelligently manages different memory tiers in LLMs in order to effectively provide extended context within the LLM's limited context window. For example, MemGPT knows when to push critical information to a vector database and when to retrieve it later in the chat, enabling perpetual conversations. MemGPT can be used to create perpetual chatbots with self-editing memory, chat with your data by talking to your local files or SQL database, and more.

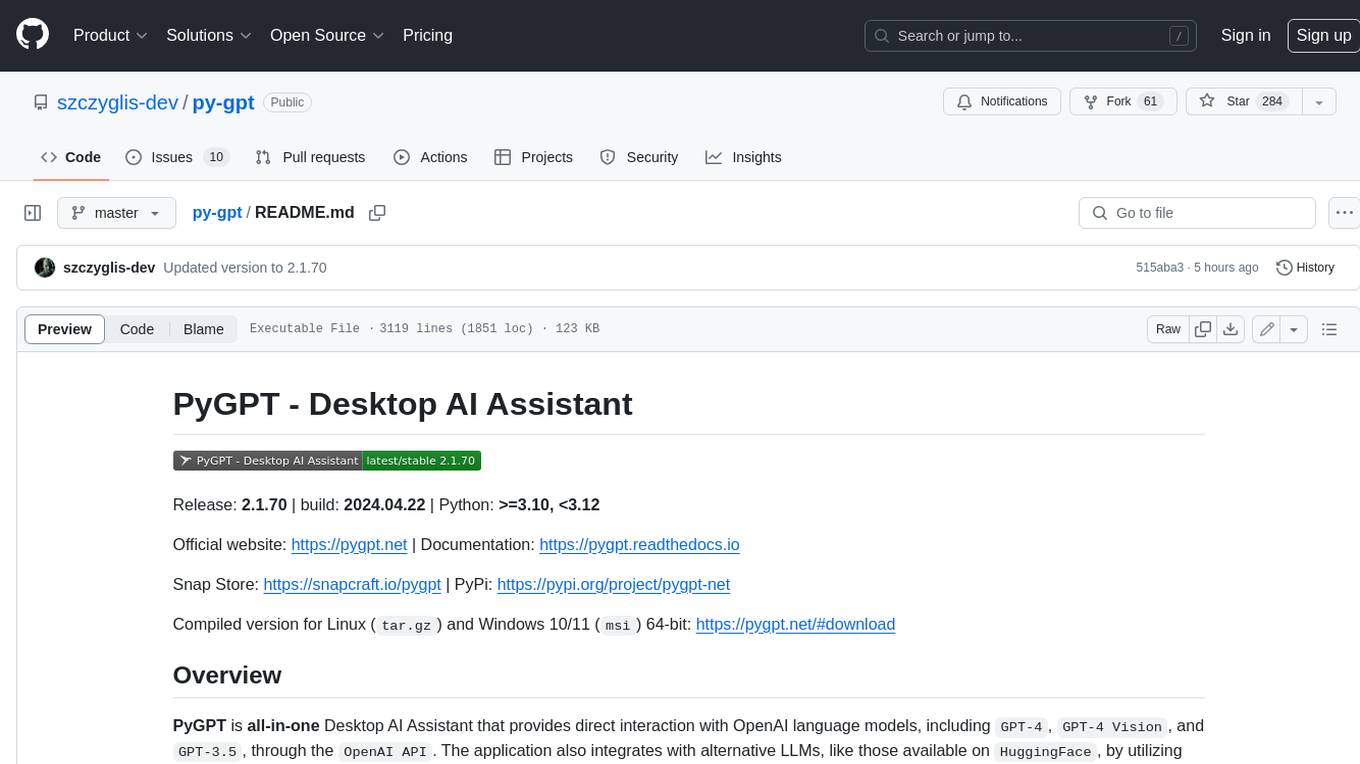

py-gpt

Py-GPT is a Python library that provides an easy-to-use interface for OpenAI's GPT-3 API. It allows users to interact with the powerful GPT-3 model for various natural language processing tasks. With Py-GPT, developers can quickly integrate GPT-3 capabilities into their applications, enabling them to generate text, answer questions, and more with just a few lines of code.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM:

- Set LLM usage limits for users on different pricing tiers

- Track LLM usage on a per user and per organization basis

- Block or redact requests containing PIIs

- Improve LLM reliability with failovers, retries and caching

- Distribute API keys with rate limits and cost limits for internal development/production use cases

- Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.