auto-subs

Generate Subtitles & Diarize Speakers in DaVinci Resolve using AI.

Stars: 799

Auto-subs is a tool designed to automatically transcribe editing timelines using OpenAI Whisper and Stable-TS for extreme accuracy. It generates subtitles in a custom style, is completely free, and runs locally within DaVinci Resolve. It works on Mac, Linux, and Windows, supporting both the Free and Studio versions of Resolve. Users can jump to positions on the timeline using the Subtitle Navigator and translate from any language to English. The tool provides a user-friendly interface for creating and customizing subtitles for video content.

README:

Experience faster transcription, a sleek new interface, and powerful new features:

- ⚡ Blazing Fast Speeds: Transcribe audio over 2x faster (optimised for Mac and Windows).

- 🗣️ Speaker Diarization: Auto-detect speakers and color-code subtitles effortlessly.

- 🌏 English Translation: Speak in your native language and get subtitles in English (if enabled).

- 🎨 Modern UI: A stylish, streamlined interface for seamless workflows.

- 📦 One-Click Installer: Apple-approved for quick, hassle-free setup.
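As a rough illustration of the color-coding idea, here is a minimal Python sketch that gives each detected speaker a stable color in order of first appearance. It assumes each transcribed segment carries a `speaker` label; that field name, the `assign_speaker_colors` helper, and the palette are hypothetical and not taken from AutoSubs itself.

```python
from itertools import cycle

# Hypothetical palette; AutoSubs' actual colors are not documented here.
PALETTE = ["#FFFFFF", "#FFD700", "#00BFFF", "#FF6347", "#7CFC00"]


def assign_speaker_colors(segments, palette=PALETTE):
    """Map each distinct speaker label to a color, in order of first appearance.

    Colors wrap around once there are more speakers than palette entries.
    """
    colors = {}
    next_color = cycle(palette)
    for segment in segments:
        speaker = segment["speaker"]  # assumed field name
        if speaker not in colors:
            colors[speaker] = next(next_color)
    return colors


segments = [
    {"speaker": "SPEAKER_00", "text": "Hi there."},
    {"speaker": "SPEAKER_01", "text": "Hello!"},
    {"speaker": "SPEAKER_00", "text": "How are you?"},
]
print(assign_speaker_colors(segments))
# {'SPEAKER_00': '#FFFFFF', 'SPEAKER_01': '#FFD700'}
```

Assigning colors by first appearance keeps a speaker's color fixed for the whole timeline, even when speakers alternate.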

Download: Windows | macOS (ARM)

AutoSubs V2 has already surpassed 12,000 downloads in less than 2 months.

Helpful Tutorial: https://www.youtube.com/watch?v=U36KbpoAPxM

- Download the installer above and open it.

- Follow the instructions on screen.

- Open DaVinci Resolve, click "Workspace" in the top menu bar, then "Scripts", and select AutoSubs V2.

[!Note] For Windows users: SmartScreen or Windows Defender may show a warning when you open the installer. This is because my code-signing certificate hasn't built up enough reputation yet. The warning should disappear as more people download it.

[Screenshots: Generate Subtitles & Label Speakers; Advanced Settings]

Automatic transcription for your editing timeline using OpenAI Whisper (running locally).

- 🎨 Generate subtitles in your own custom style

- 🌐 Translate from any language to English.

- 🕹️ Jump to positions on the timeline using the Subtitle Navigator.

- 🎁 Completely free and runs locally within DaVinci Resolve.

- 💻 Works on Mac, Linux, and Windows.

- 🎥 Supported on both Free and Studio versions of Resolve.
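The Subtitle Navigator lets you find a subtitle and jump to its position on the timeline. The lookup half of that can be sketched in a few lines of Python, assuming subtitles are held as dicts with hypothetical `start` (seconds) and `text` keys. The `search_subtitles` name is illustrative, and moving Resolve's playhead is left out.

```python
def search_subtitles(subtitles, query):
    """Return (start_seconds, text) for each subtitle whose text contains query.

    Matching is case-insensitive; results keep timeline order.
    """
    needle = query.casefold()
    return [
        (sub["start"], sub["text"])
        for sub in subtitles
        if needle in sub["text"].casefold()
    ]


subtitles = [
    {"start": 0.0, "text": "Welcome to the channel"},
    {"start": 2.4, "text": "Today we edit in Resolve"},
    {"start": 5.1, "text": "Let's open the Edit page"},
]
print(search_subtitles(subtitles, "edit"))
# [(2.4, 'Today we edit in Resolve'), (5.1, "Let's open the Edit page")]
```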

[!Caution] AutoSubs V1 is broken on Resolve 19.1 Free: Blackmagic removed the built-in UI Manager from the free version of Resolve. To use V1 on the free version, you need Resolve 19.0.3 or earlier, so you may need to downgrade. (Resolve Studio is unaffected.)

[!TIP] Video Tutorials: English Tutorial or Spanish Tutorial


[Screenshots: Transcription Settings + Subtitle Navigator; Subtitle Example]

Supported languages: Afrikaans, Arabic, Armenian, Azerbaijani, Belarusian, Bosnian, Bulgarian, Catalan, Chinese, Croatian, Czech, Danish, Dutch, English, Estonian, Finnish, French, Galician, German, Greek, Hebrew, Hindi, Hungarian, Icelandic, Indonesian, Italian, Japanese, Kannada, Kazakh, Korean, Latvian, Lithuanian, Macedonian, Malay, Marathi, Maori, Nepali, Norwegian, Persian, Polish, Portuguese, Romanian, Russian, Serbian, Slovak, Slovenian, Spanish, Swahili, Swedish, Tagalog, Tamil, Thai, Turkish, Ukrainian, Urdu, Vietnamese, and Welsh.

Click on Workspace in Resolve's top menu bar, then within Scripts select auto-subs from the list.

Workspace -> Scripts -> auto-subs

Add a Text+ to the timeline, customise it to your liking, then drag it into the Media Pool. This will be used as the template for your subtitles.

Mark the beginning ("In") and end ("Out") of the area to subtitle using the I and O keys on your keyboard.

Click "Generate Subtitles" to transcribe the selected timeline area.
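Because the transcript only covers the marked In/Out range, its timestamps have to be shifted back onto the timeline. A minimal sketch, assuming segment times in seconds measured from the In point and an integer, non-drop-frame frame rate. `segment_to_timeline_frames` and `frames_to_timecode` are hypothetical helpers, not auto-subs functions.

```python
def segment_to_timeline_frames(seg_start, seg_end, mark_in_frame, fps):
    """Convert segment times (seconds from the In point) to absolute timeline frames."""
    start = mark_in_frame + round(seg_start * fps)
    end = mark_in_frame + round(seg_end * fps)
    return start, end


def frames_to_timecode(frame, fps):
    """Format an absolute frame number as non-drop-frame HH:MM:SS:FF (integer fps only)."""
    ff = frame % fps
    total_seconds = frame // fps
    hh, rem = divmod(total_seconds, 3600)
    mm, ss = divmod(rem, 60)
    return f"{hh:02d}:{mm:02d}:{ss:02d}:{ff:02d}"


# A segment from 1.5 s to 3.0 s, In point at 01:00:00:00 on a 24 fps timeline
start, end = segment_to_timeline_frames(1.5, 3.0, mark_in_frame=86400, fps=24)
print(start, end)                     # 86436 86472
print(frames_to_timecode(start, 24))  # 01:00:01:12
```

Frame 86400 is where a 24 fps timeline with Resolve's default 01:00:00:00 start timecode begins. Drop-frame rates such as 29.97 would need a different timecode formula.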

[!NOTE] Temporarily removed until I have time to update it to work correctly

Requirements:

- Python 3.8 - 3.12

- OpenAI Whisper

- FFMPEG (used by Whisper for audio processing)

- Stable-TS (improves subtitles)

- auto-subs.py (download it and copy it to the Fusion Scripts folder)
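To confirm that the requirements above are visible to the interpreter you plan to use, a short check script can help. This is a sketch, not part of auto-subs. It assumes the packages import as `whisper` (OpenAI Whisper) and `stable_whisper` (Stable-TS) and that ffmpeg should be on PATH. `check_dependencies` is a hypothetical name.

```python
import importlib.util
import shutil
import sys


def check_dependencies():
    """Report which auto-subs prerequisites this Python interpreter can see."""
    return {
        "python_3.8_to_3.12": (3, 8) <= sys.version_info[:2] <= (3, 12),
        "whisper": importlib.util.find_spec("whisper") is not None,
        "stable_whisper": importlib.util.find_spec("stable_whisper") is not None,
        "ffmpeg": shutil.which("ffmpeg") is not None,
    }


if __name__ == "__main__":
    for name, ok in check_dependencies().items():
        print(f"{'OK     ' if ok else 'MISSING'} {name}")
```

`find_spec` only checks that a module can be located, so it avoids the slow import of Whisper itself.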

Windows Setup

Download Python 3.12 (or any version between 3.8 and 3.12) and run the installer. Make sure to tick "Add python.exe to PATH" during installation.

Install OpenAI Whisper by running the following command (taken from the Whisper setup guide):

pip install -U openai-whisper
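Whisper's `transcribe()` returns a dictionary whose `segments` list holds `start` and `end` times in seconds plus `text`. The following sketch turns those segments into SRT text. The helper names are hypothetical, and auto-subs may format its output differently.

```python
def format_srt_timestamp(seconds):
    """Format seconds as an SRT timestamp, HH:MM:SS,mmm."""
    total_ms = round(seconds * 1000)
    hours, rem = divmod(total_ms, 3_600_000)
    minutes, rem = divmod(rem, 60_000)
    secs, ms = divmod(rem, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d},{ms:03d}"


def segments_to_srt(segments):
    """Build SRT text from Whisper-style segments with 'start', 'end' and 'text' keys."""
    cues = []
    for index, seg in enumerate(segments, start=1):
        timing = f"{format_srt_timestamp(seg['start'])} --> {format_srt_timestamp(seg['end'])}"
        cues.append(f"{index}\n{timing}\n{seg['text'].strip()}\n")
    return "\n".join(cues)


# Usage with OpenAI Whisper (downloads a model on first run):
# import whisper
# model = whisper.load_model("base")
# result = model.transcribe("audio.wav")
# print(segments_to_srt(result["segments"]))
```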

Install FFMPEG (for audio processing). I recommend using a package manager as it makes the install process less confusing.

# on Windows using Chocolatey (https://chocolatey.org/install)

choco install ffmpeg

# on Windows using Scoop (https://scoop.sh/)

scoop install ffmpeg
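Whisper calls ffmpeg to decode the input and resample it to 16 kHz mono. If you want to pre-extract audio yourself, a sketch like this builds a comparable ffmpeg command. The function names and the WAV output format are assumptions; this is not how auto-subs exports audio from Resolve.

```python
import shutil
import subprocess

WHISPER_SAMPLE_RATE = 16000  # Whisper works on 16 kHz mono audio


def ffmpeg_extract_cmd(src, dst, sample_rate=WHISPER_SAMPLE_RATE):
    """Build an ffmpeg command that writes mono 16-bit PCM WAV at sample_rate."""
    return [
        "ffmpeg", "-y",           # overwrite dst if it already exists
        "-i", src,
        "-ac", "1",               # downmix to mono
        "-ar", str(sample_rate),  # resample
        "-c:a", "pcm_s16le",      # 16-bit PCM
        dst,
    ]


def extract_audio(src, dst):
    """Run the command; raises if ffmpeg is missing or exits with an error."""
    if shutil.which("ffmpeg") is None:
        raise RuntimeError("ffmpeg was not found on PATH; install it first")
    subprocess.run(ffmpeg_extract_cmd(src, dst), check=True)
```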

Install Stable-TS by running this command in the terminal:

pip install -U stable-ts
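Once Stable-TS is installed, a transcription that writes an SRT file can be sketched as follows. This is an illustrative example, not the auto-subs code path. The model name `base`, the file names, and the `transcribe_to_srt` wrapper are assumptions. The import is kept inside the function so the module still loads where Stable-TS is absent.

```python
def transcribe_to_srt(audio_path, srt_path, model_name="base"):
    """Transcribe audio_path with Stable-TS and write a segment-level SRT to srt_path."""
    # Imported lazily so this module can be loaded where Stable-TS is not installed.
    import stable_whisper  # provided by `pip install -U stable-ts`

    model = stable_whisper.load_model(model_name)  # downloads the model on first use
    result = model.transcribe(audio_path)
    # word_level=False writes one cue per segment instead of word-by-word highlighting
    result.to_srt_vtt(srt_path, word_level=False)
    return srt_path


# Example (requires Stable-TS, ffmpeg, and an audio file):
# transcribe_to_srt("audio.wav", "audio.srt")
```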

Download auto-subs.py and place it in one of the following directories:

If you don't see the Scripts or Utility folder, create it manually and place the file there.

- All users:

  %PROGRAMDATA%\Blackmagic Design\DaVinci Resolve\Fusion\Scripts\Utility

- Specific user:

  %APPDATA%\Blackmagic Design\DaVinci Resolve\Support\Fusion\Scripts\Utility
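Copying the script, and creating Scripts or Utility if it is missing, can also be scripted. Here is a minimal sketch with a hypothetical `install_script` helper. Pass whichever folder from the list above applies to you, and expand `%APPDATA%`-style variables first with `os.path.expandvars`.

```python
import os
import shutil
import tempfile


def install_script(script_path, utility_dir):
    """Copy script_path into utility_dir, creating any missing folders first.

    Returns the path of the copied file.
    """
    os.makedirs(utility_dir, exist_ok=True)
    return shutil.copy2(script_path, utility_dir)


if __name__ == "__main__":
    # Dry run inside a temporary folder; substitute a real Utility path in practice.
    with tempfile.TemporaryDirectory() as tmp:
        src = os.path.join(tmp, "auto-subs.py")
        open(src, "w").close()
        dest = install_script(src, os.path.join(tmp, "Fusion", "Scripts", "Utility"))
        print(os.path.isfile(dest))  # True
```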

MacOS Setup

Install the Homebrew package manager:

/bin/bash -c "$(curl -fsSL https://raw.githubusercontent.com/Homebrew/install/HEAD/install.sh)"

Install Python:

brew install python

⚠️ Possible error: <urlopen error [SSL: CERTIFICATE_VERIFY_FAILED] certificate verify failed: self-signed certificate in certificate chain (_ssl.c:1006)>

✔️ Solution: Run this command in the terminal (replace the Python directory with wherever Python is installed on your computer):

/Applications/Python\ 3.11/Install\ Certificates.command

Install FFMPEG (used by Whisper for audio processing):

brew install ffmpeg

Install OpenAI Whisper:

pip install -U openai-whisper

# if the previous command does not work
pip3 install -U openai-whisper

Install Stable-TS:

pip install -U stable-ts

# if the previous command does not work
pip3 install -U stable-ts

Download auto-subs.py and place it in one of the following directories:

- All users:

  /Library/Application Support/Blackmagic Design/DaVinci Resolve/Fusion/Scripts/Utility

- Specific user:

  /Users/<UserName>/Library/Application Support/Blackmagic Design/DaVinci Resolve/Fusion/Scripts/Utility

Linux Setup

Install Python:

# on Ubuntu or Debian
sudo apt-get install python3.11

# on Arch Linux
sudo pacman -S python3.11

Install FFMPEG (used by Whisper for audio processing):

# on Ubuntu or Debian
sudo apt update && sudo apt install ffmpeg

# on Arch Linux
sudo pacman -S ffmpeg

Install OpenAI Whisper:

pip install -U openai-whisper

Install Stable-TS:

pip install -U stable-ts

Download auto-subs.py and place it in one of the following directories:

- All users:

  /opt/resolve/Fusion/Scripts/Utility (or /home/resolve/Fusion/Scripts/Utility, depending on installation)

- Specific user:

  $HOME/.local/share/DaVinciResolve/Fusion/Scripts/Utility

Download the auto-subs.py file and add it to one of the following directories:

Windows:

- All users:

  %PROGRAMDATA%\Blackmagic Design\DaVinci Resolve\Fusion\Scripts\Utility

- Specific user:

  %APPDATA%\Blackmagic Design\DaVinci Resolve\Support\Fusion\Scripts\Utility

Mac OS:

- All users:

  /Library/Application Support/Blackmagic Design/DaVinci Resolve/Fusion/Scripts/Utility

- Specific user:

  /Users/<UserName>/Library/Application Support/Blackmagic Design/DaVinci Resolve/Fusion/Scripts/Utility

Linux:

- All users:

  /opt/resolve/Fusion/Scripts/Utility (or /home/resolve/Fusion/Scripts/Utility, depending on installation)

- Specific user:

  $HOME/.local/share/DaVinciResolve/Fusion/Scripts/Utility
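The same locations can be computed per OS. This sketch mirrors the paths listed above. `fusion_utility_dirs` is a hypothetical helper that reads `PROGRAMDATA`, `APPDATA`, and `HOME` from the environment. On Linux it returns only the /opt/resolve variant of the all-users folder.

```python
import ntpath
import os
import posixpath
import sys

UTILITY_TAIL = ("Fusion", "Scripts", "Utility")


def fusion_utility_dirs(platform=None, env=None):
    """Return (all_users_dir, specific_user_dir) for Resolve's Fusion Scripts/Utility folder."""
    platform = platform or sys.platform
    env = os.environ if env is None else env
    if platform.startswith("win"):
        return (
            ntpath.join(env["PROGRAMDATA"], "Blackmagic Design", "DaVinci Resolve", *UTILITY_TAIL),
            ntpath.join(env["APPDATA"], "Blackmagic Design", "DaVinci Resolve", "Support", *UTILITY_TAIL),
        )
    if platform == "darwin":
        rel = posixpath.join("Library", "Application Support", "Blackmagic Design",
                             "DaVinci Resolve", *UTILITY_TAIL)
        return ("/" + rel, posixpath.join(env["HOME"], rel))
    # Linux: some installs use /home/resolve/Fusion/Scripts/Utility for all users instead.
    return (
        posixpath.join("/opt/resolve", *UTILITY_TAIL),
        posixpath.join(env["HOME"], ".local", "share", "DaVinciResolve", *UTILITY_TAIL),
    )


# print(fusion_utility_dirs())  # paths for the current OS
```

`ntpath` and `posixpath` are used explicitly so that Windows-style paths can be built and checked on any OS.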

[!NOTE] Audio transcription has been removed in this version. This means less setup, but a subtitle (SRT) file is required as input. Use this version if you already have a way of transcribing video (such as DaVinci Resolve Studio's built-in subtitles feature or CapCut subtitles) and just want subtitles with a custom theme.

Install any version of Python (tick "Add python.exe to PATH" during installation)

Download auto-subs-light.py and place it in the Utility folder of the Fusion Scripts folder.

...\Blackmagic Design\DaVinci Resolve\Fusion\Scripts\Utility
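Since this version takes an SRT file as input, the core parsing step can be sketched as follows. It assumes a standard SRT layout (cue number, a `start --> end` timing line, then text lines, with blank lines between cues) and uses a hypothetical `parse_srt` helper. auto-subs-light.py may parse its input differently.

```python
import re

# Matches HH:MM:SS,mmm (a '.' separator is accepted too)
TIMESTAMP = re.compile(r"(\d{1,2}):(\d{2}):(\d{2})[,.](\d{3})")


def _to_seconds(hours, minutes, seconds, millis):
    return int(hours) * 3600 + int(minutes) * 60 + int(seconds) + int(millis) / 1000


def parse_srt(srt_text):
    """Parse SRT text into a list of {'start', 'end', 'text'} dicts (times in seconds)."""
    srt_text = srt_text.lstrip("\ufeff").replace("\r\n", "\n")  # drop BOM, normalize newlines
    cues = []
    for block in re.split(r"\n\s*\n", srt_text.strip()):
        lines = block.splitlines()
        # The timing line normally follows the cue number; search for it to be lenient.
        timing_idx = next((i for i, line in enumerate(lines) if "-->" in line), None)
        if timing_idx is None:
            continue
        stamps = TIMESTAMP.findall(lines[timing_idx])
        if len(stamps) < 2:
            continue
        cues.append({
            "start": _to_seconds(*stamps[0]),
            "end": _to_seconds(*stamps[1]),
            "text": "\n".join(lines[timing_idx + 1:]).strip(),
        })
    return cues
```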

- Check out the YouTube Video Tutorial 📺

- Thanks to everyone who has supported this project ❤️

- If you have any issues, get in touch on my Discord server for support 📲

Verify that Resolve detects your Python installation by opening the Console from the top menu/toolbar in Resolve and clicking py3 at the top of the console.

Ensure that Path in your system environment variables contains the following:

C:\Users\<your-user-name>\AppData\Local\Programs\Python\Python312

C:\Users\<your-user-name>\AppData\Local\Programs\Python\Python312\Scripts\

If you're not sure where Python is installed, use Everything to quickly search your computer for it (Windows only).
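You can also check PATH from Python. This sketch compares PATH entries case-insensitively, as Windows does, and ignores trailing slashes. `missing_path_entries` is a hypothetical helper. The `sep` argument lets you check a Windows-style PATH string on any OS.

```python
import os


def _normalize(entry):
    # Windows paths are case-insensitive; a trailing separator doesn't change the folder.
    return entry.strip().rstrip("\\/").casefold()


def missing_path_entries(required, path_value=None, sep=os.pathsep):
    """Return the entries of required that do not appear in path_value (default: PATH)."""
    if path_value is None:
        path_value = os.environ.get("PATH", "")
    present = {_normalize(p) for p in path_value.split(sep) if p.strip()}
    return [r for r in required if _normalize(r) not in present]


# On Windows, replace <your-user-name> with your user name, then run:
# print(missing_path_entries([r"C:\Users\<your-user-name>\AppData\Local\Programs\Python\Python312"]))
```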

<urlopen error [SSL: CERTIFICATE_VERIFY_FAILED] certificate verify failed: self-signed certificate in certificate chain (_ssl.c:1006)>

Solution: Run this command in the terminal (replace the Python directory with wherever Python is installed on your computer).

/Applications/Python\ 3.11/Install\ Certificates.command
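To see where Python's `ssl` module looks for CA certificates, you can inspect `ssl.get_default_verify_paths()`. On python.org builds for macOS, the resolved `cafile` is typically None until Install Certificates.command has been run. This diagnostic sketch rests on that assumption about the cause and is not an official check. `describe_ca_paths` is a hypothetical name.

```python
import ssl


def describe_ca_paths():
    """Report where this interpreter's ssl module looks for CA certificates."""
    paths = ssl.get_default_verify_paths()
    return {
        # CA file path compiled into OpenSSL (inside the Python framework on python.org macOS builds)
        "openssl_cafile": paths.openssl_cafile,
        # cafile is None when that file does not exist, a common cause of CERTIFICATE_VERIFY_FAILED
        "cafile_found": paths.cafile is not None,
    }


print(describe_ca_paths())
```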

To check which Python version Resolve is using, run import sys followed by print(sys.version) in the Resolve console.
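The version check can be extended to compare against the 3.8 - 3.12 range listed in the manual-install requirements. This is a sketch: `python_supported` is a hypothetical helper that you could paste into the Resolve console (py3 tab).

```python
import sys

MIN_VERSION, MAX_VERSION = (3, 8), (3, 12)  # range from the manual-install requirements


def python_supported(version_info=None):
    """True if the major.minor version lies within MIN_VERSION..MAX_VERSION inclusive."""
    major_minor = tuple((version_info or sys.version_info)[:2])
    return MIN_VERSION <= major_minor <= MAX_VERSION


print(sys.version)
print("supported" if python_supported() else "outside the 3.8 - 3.12 range")
```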

This video may help you (Only the first 6 minutes are necessary).


Alternative AI tools for auto-subs

Similar Open Source Tools


pianotrans

ByteDance's Piano Transcription is a PyTorch implementation for transcribing piano recordings into MIDI files with pedals. This repository provides a simple GUI and packaging for Windows and Nix on Linux/macOS. It supports using GPU for inference and includes CLI usage. Users can upgrade the tool and report issues to the upstream project. The tool focuses on providing MIDI files, and any other improvements to transcription results should be directed to the original project.

fiftyone

FiftyOne is an open-source tool designed for building high-quality datasets and computer vision models. It supercharges machine learning workflows by enabling users to visualize datasets, interpret models faster, and improve efficiency. With FiftyOne, users can explore scenarios, identify failure modes, visualize complex labels, evaluate models, find annotation mistakes, and much more. The tool aims to streamline the process of improving machine learning models by providing a comprehensive set of features for data analysis and model interpretation.

llama-assistant

Llama Assistant is an AI-powered assistant that helps with daily tasks, such as voice recognition, natural language processing, summarizing text, rephrasing sentences, answering questions, and more. It runs offline on your local machine, ensuring privacy by not sending data to external servers. The project is a work in progress with regular feature additions.

Windows-Use

Windows-Use is a powerful automation agent that interacts directly with the Windows OS at the GUI layer. It bridges the gap between AI agents and Windows to perform tasks such as opening apps, clicking buttons, typing, executing shell commands, and capturing UI state without relying on traditional computer vision models. It enables any large language model (LLM) to perform computer automation instead of relying on specific models for it.

NextChat

NextChat is a well-designed, cross-platform ChatGPT web UI that supports Claude, GPT-4, and Gemini Pro. It offers a compact client for Linux, Windows, and MacOS, with features like compatibility with self-deployed LLMs, privacy-first data storage, markdown support, responsive design, and fast loading speed. Users can create, share, and debug chat tools with prompt templates, access various prompts, compress chat history, and use multiple languages. The tool also supports enterprise-level private and customized deployment, with features like brand customization, resource integration, permission control, knowledge integration, security auditing, private deployment, and continuous updates.

CrewAI-Studio

CrewAI Studio is an application with a user-friendly interface for interacting with CrewAI, offering support for multiple platforms and various backend providers. It allows users to run crews in the background, export single-page apps, and use custom tools for APIs and file writing. The roadmap includes features like better import/export, human input, chat functionality, automatic crew creation, and multiuser environment support.

llama-assistant

Llama Assistant is a local AI assistant that respects your privacy. It is an AI-powered assistant that can recognize your voice, process natural language, and perform various actions based on your commands. It can help with tasks like summarizing text, rephrasing sentences, answering questions, writing emails, and more. The assistant runs offline on your local machine, ensuring privacy by not sending data to external servers. It supports voice recognition, natural language processing, and customizable UI with adjustable transparency. The project is a work in progress with new features being added regularly.

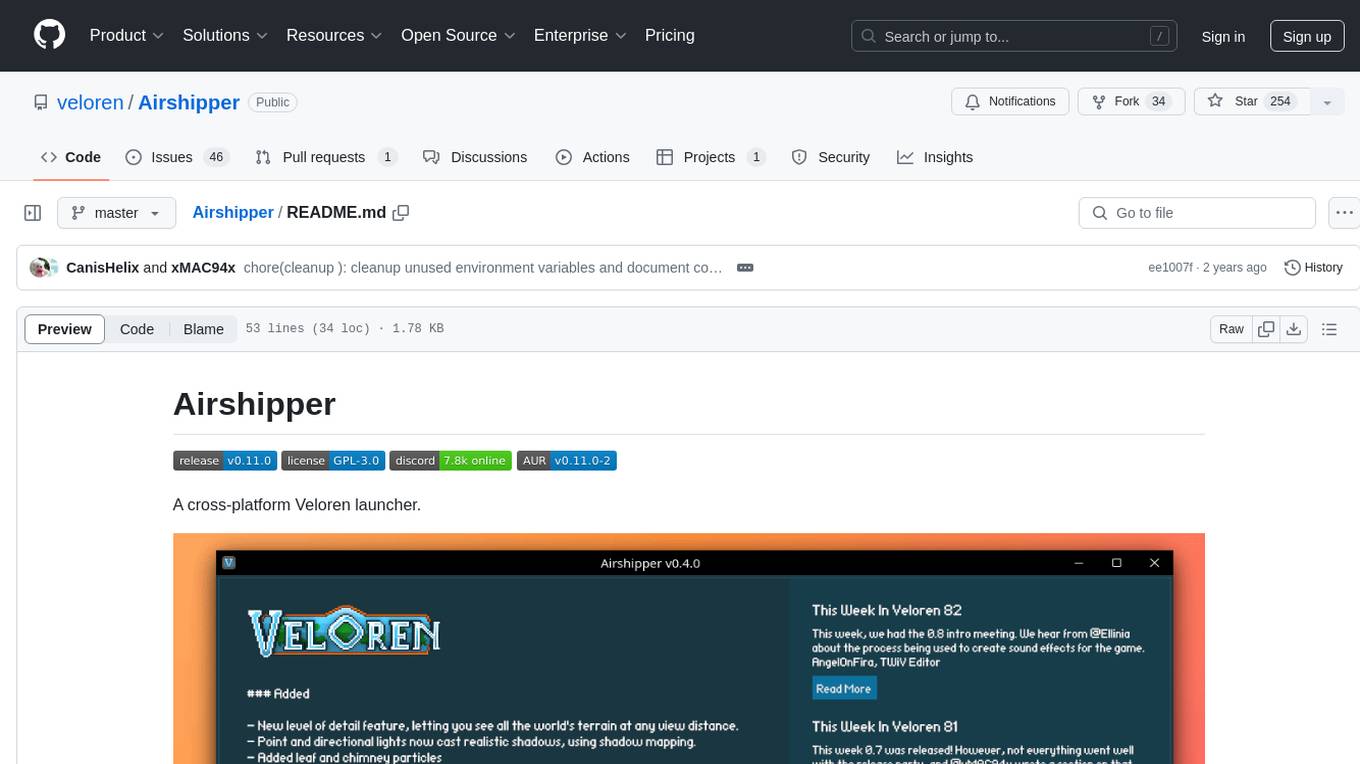

Airshipper

Airshipper is a cross-platform Veloren launcher that allows users to update/download and start nightly builds of the game. It features a fancy UI with self-updating capabilities on Windows. Users can compile it from source and also have the option to install Airshipper-Server for advanced configurations. Note that Airshipper is still in development and may not be stable for all users.

quickvid

QuickVid is an open-source video summarization tool that uses AI to generate summaries of YouTube videos. It is built with Whisper, GPT, LangChain, and Supabase. QuickVid can be used to save time and get the essence of any YouTube video with intelligent summarization.

RWKV-Runner

RWKV Runner is a project designed to simplify the usage of large language models by automating various processes. It provides a lightweight executable program and is compatible with the OpenAI API. Users can deploy the backend on a server and use the program as a client. The project offers features like model management, VRAM configurations, user-friendly chat interface, WebUI option, parameter configuration, model conversion tool, download management, LoRA Finetune, and multilingual localization. It can be used for various tasks such as chat, completion, composition, and model inspection.

TalkWithGemini

Talk With Gemini is a web application that allows users to deploy their private Gemini application for free with one click. It supports Gemini Pro and Gemini Pro Vision models. The application features talk mode for direct communication with Gemini, visual recognition for understanding picture content, full Markdown support, automatic compression of chat records, privacy and security with local data storage, well-designed UI with responsive design, fast loading speed, and multi-language support. The tool is designed to be user-friendly and versatile for various deployment options and language preferences.

Flowise

Flowise is a tool that allows users to build customized LLM flows with a drag-and-drop UI. It is open-source and self-hostable, and it supports various deployments, including AWS, Azure, Digital Ocean, GCP, Railway, Render, HuggingFace Spaces, Elestio, Sealos, and RepoCloud. Flowise has three modules in a single monorepo: server, ui, and components. The server module is a Node backend that serves the API logic, the ui module is a React frontend, and the components module contains third-party node integrations. Flowise supports different environment variables to configure your instance, and you can specify these variables in the .env file inside the packages/server folder.

snipkit

SnipKit is a CLI tool designed to manage snippets efficiently, allowing users to execute saved scripts or generate new ones with the help of AI directly from the terminal. It supports loading snippets from various sources, parameter substitution, different parameter types, themes, and customization options. The tool includes an interactive chat-style interface called SnipKit Assistant for generating parameterized scripts. Users can also work with different AI providers like OpenAI, Anthropic, Google Gemini, and more. SnipKit aims to streamline script execution and script generation workflows for developers and users who frequently work with code snippets.

Free-GPT4-WEB-API

FreeGPT4-WEB-API is a Python server that lets you self-host a free, unlimited GPT-4 web API backed by Bing's latest AI. It uses the Flask and GPT4Free libraries; GPT4Free provides an interface to Bing's GPT-4. The server can be configured by editing the `FreeGPT4_Server.py` file, where you can change the port, host, and other settings. The only cookie needed for the Bing model is `_U`.

obs-localvocal

LocalVocal is a Speech AI assistant OBS Plugin that enables users to transcribe speech into text and translate it into any language locally on their machine. The plugin runs OpenAI's Whisper for real-time speech processing and prediction. It supports features like transcribing audio in real-time, displaying captions on screen, sending captions to files, syncing captions with recordings, and translating captions to major languages. Users can bring their own Whisper model, filter or replace captions, and experience partial transcriptions for streaming. The plugin is privacy-focused, requiring no GPU, cloud costs, network, or downtime.

For similar tasks

gpt-subtrans

GPT-Subtrans is an open-source subtitle translator that utilizes large language models (LLMs) as translation services. It supports translation between any language pairs that the language model supports. Note that GPT-Subtrans requires an active internet connection, as subtitles are sent to the provider's servers for translation, and their privacy policy applies.


VideoLingo

VideoLingo is an all-in-one video translation, localization, and dubbing tool designed to generate Netflix-level subtitles. It aims to eliminate stiff machine translation and multi-line subtitles, and it can even add high-quality dubbing, so that knowledge from around the world can be shared across language barriers. Through an intuitive Streamlit web interface, the entire process, from video link to embedded bilingual subtitles and even dubbing, can be completed in just two clicks. Key features include: using yt-dlp to download videos from YouTube links; using WhisperX for word-level subtitle timing; using NLP and GPT to segment subtitles by sentence meaning; building a terminology knowledge base with GPT for context-aware translation; a three-step process of direct translation, reflection, and free translation to eliminate awkward machine translation; checking single-line subtitle length and translation quality against Netflix standards; using GPT-SoVITS for high-quality aligned dubbing; and an integrated package for one-click startup and one-click output in Streamlit.

voice-pro

Voice-Pro is an integrated solution for subtitles, translation, and TTS. It offers features like multilingual subtitles, live translation, vocal remover, and supports OpenAI Whisper and Open-Source Translator. The tool provides a Studio tab for various functions, Whisper Caption tab for subtitle creation, Translate tab for translation, TTS tab for text-to-speech, Live Translation tab for real-time voice recognition, and Batch tab for processing multiple files. Users can download YouTube videos, improve voice recognition accuracy, create automatic subtitles, and produce multilingual videos with ease. The tool is easy to install with one-click and offers a Web-UI for user convenience.

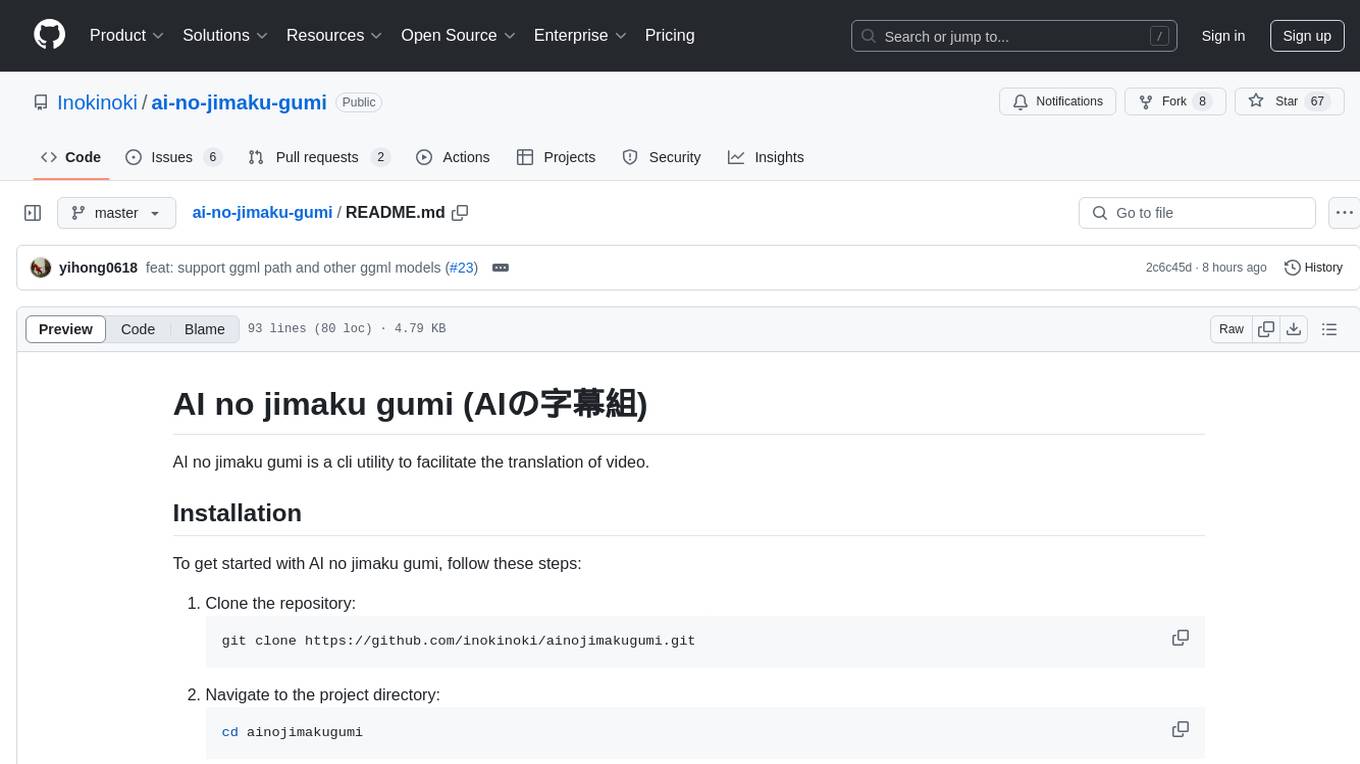

ai-no-jimaku-gumi

AI no jimaku gumi is a command-line utility designed to assist in video translation. It supports translating subtitles using AI models and provides options for different translation and subtitle sources. Users can set up the tool by following the installation steps and use it to translate videos into different languages with customizable settings. The tool currently supports DeepL and LLM translation backends and SRT subtitle export. It aims to simplify adding subtitles to videos by leveraging AI.

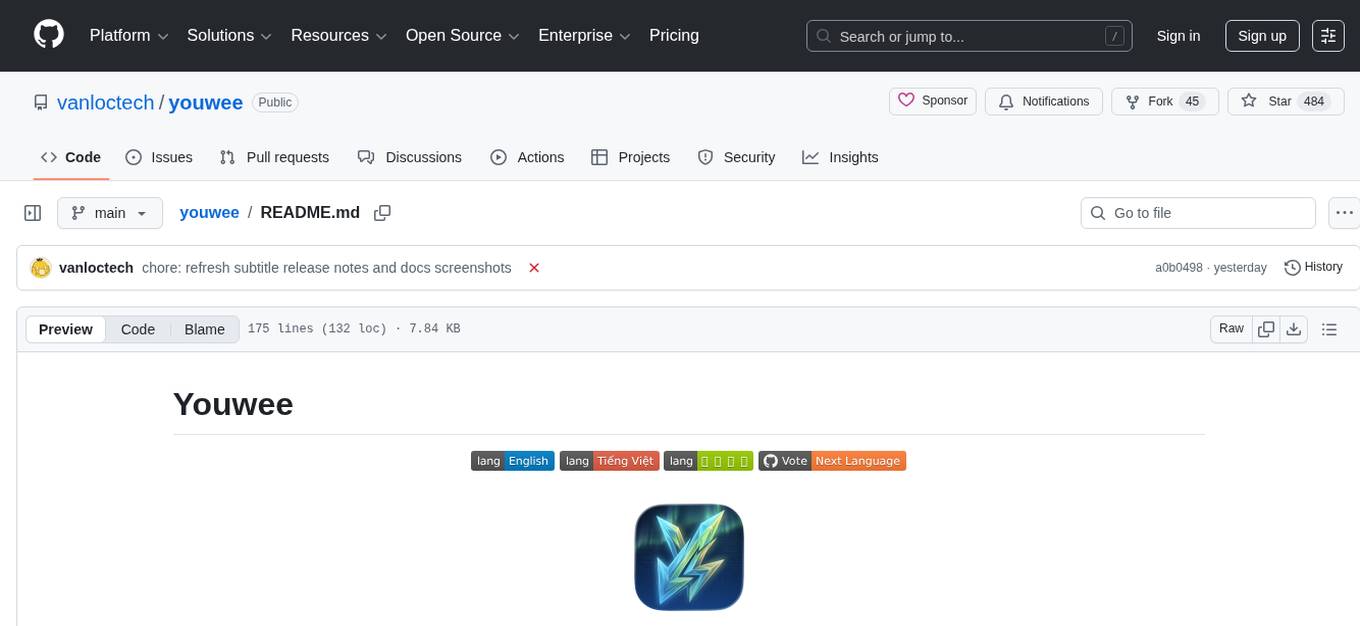

youwee

Youwee is a modern YouTube video downloader tool built with Tauri and React. It offers features like downloading videos from various platforms, following channels, fetching metadata, live stream support, AI video summary and processing, time range download, batch and playlist downloads, audio extraction, subtitle support, subtitle workshop, post-processing, SponsorBlock, speed limit control, download library, multiple themes, and is fast and lightweight.

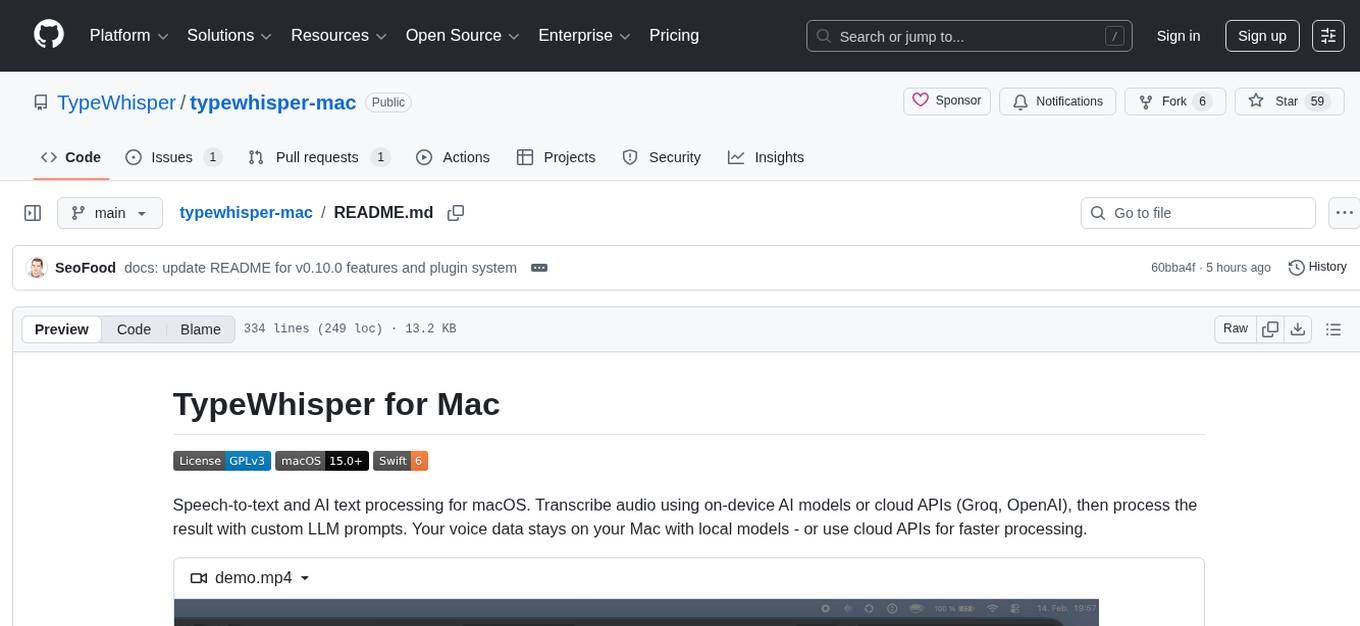

typewhisper-mac

TypeWhisper for Mac is a speech-to-text and AI text processing tool designed for macOS. It allows users to transcribe audio using on-device AI models or cloud APIs like Groq and OpenAI, and process the results with custom LLM prompts. The tool offers features such as multiple transcription engines, on-device or cloud processing, streaming preview, file transcription, subtitle export, system-wide dictation with hotkeys, AI processing with custom prompts and translation, personalization through profiles, dictionary, snippets, and history, integration and extensibility via plugins, HTTP API, and CLI tool. The tool is designed for macOS 15.0 and later, supports Apple Silicon, and offers a multilingual UI with English and German languages.

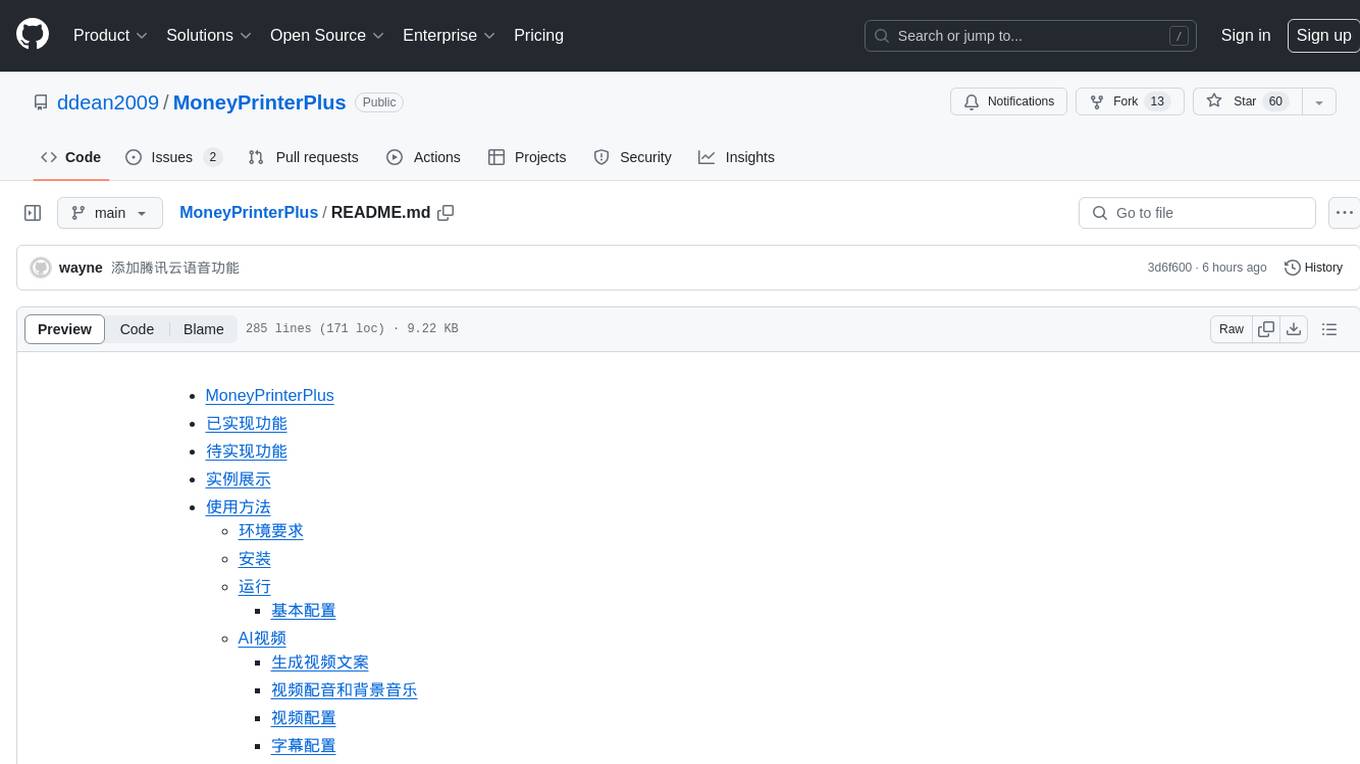

MoneyPrinterPlus

MoneyPrinterPlus is a project designed to help users make money from short videos. It uses large AI models to batch-generate short videos, edit them, and automatically publish them to popular platforms like Douyin, Kuaishou, Xiaohongshu, and Video Number (WeChat Channels). Its functionality includes integration with major large-model AI tools, support for various voice types, video transition effects, customizable subtitles, and more. It aims to simplify creating and sharing videos to monetize traffic.

For similar jobs

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

daily-poetry-image

Daily Chinese ancient poetry and AI-generated images powered by Bing DALL-E-3. GitHub Action triggers the process automatically. Poetry is provided by Today's Poem API. The website is built with Astro.

exif-photo-blog

EXIF Photo Blog is a full-stack photo blog application built with Next.js, Vercel, and Postgres. It features built-in authentication, photo upload with EXIF extraction, photo organization by tag, infinite scroll, light/dark mode, automatic OG image generation, a CMD-K menu with photo search, experimental support for AI-generated descriptions, and support for Fujifilm simulations. The application is easy to deploy to Vercel with just a few clicks and can be customized with a variety of environment variables.

SillyTavern

SillyTavern is a user interface you can install on your computer (and Android phones) that allows you to interact with text generation AIs and chat/roleplay with characters you or the community create. SillyTavern is a fork of TavernAI 1.2.8 which is under more active development and has added many major features. At this point, they can be thought of as completely independent programs.

Twitter-Insight-LLM

This project lets you fetch liked tweets from Twitter (using Selenium), save them to JSON and Excel files, and perform initial data analysis and image captioning. It is one of the initial steps of a larger personal project involving Large Language Models (LLMs).

AISuperDomain

Aila Desktop Application is a powerful tool that integrates multiple leading AI models into a single desktop application. It allows users to interact with various AI models simultaneously, providing diverse responses and insights to their inquiries. With its user-friendly interface and customizable features, Aila empowers users to engage with AI seamlessly and efficiently. Whether you're a researcher, student, or professional, Aila can enhance your AI interactions and streamline your workflow.

ChatGPT-On-CS

This project is an intelligent customer-service dialogue tool based on large language models. It supports platforms such as WeChat, Qianniu, Bilibili, Douyin Enterprise, Douyin, Doudian, Weibo chat, Xiaohongshu professional accounts, Xiaohongshu, and Zhihu. You can choose GPT-3.5, GPT-4.0, or Lazy Treasure Box as the model (more platforms will be supported in the future). It can process text, voice, and pictures, access external resources such as the operating system and the Internet through plug-ins, and support enterprise AI applications customized with their own knowledge base.

obs-localvocal

LocalVocal is a live-streaming AI assistant plugin for OBS that allows you to transcribe audio speech into text and perform various language processing functions on the text using AI / LLMs (Large Language Models). It's privacy-first, with all data staying on your machine, and requires no GPU, cloud costs, network, or downtime.